1. Introduction

Natural gas is widely used worldwide because of its large storage capacity, high utilisation rate, high economic efficiency, and clean, low-pollution characteristics [1]. In the natural gas industry, the choice of transport method plays a crucial role in ensuring energy security. Pipeline transmission is generally the preferred natural gas transport method [2]: it has significant advantages in technology, economy, and safety, and it can meet the needs of large-scale, long-distance transport. Centrifugal compressors provide the pressurisation in long-distance gas pipelines and therefore play a pivotal role throughout the gas transmission system. Their performance is directly related to the safety of the gas transmission system and also has a far-reaching impact on its economic benefits.

However, the utilisation efficiency of compressor units on mainline natural gas pipelines is low. Because the safety boundaries and operating points of a compressor are difficult to analyse, the number of units in operation is chosen from manual experience and is usually configured for maximum transmission conditions. If the number of units to switch on cannot be determined quickly, the units must be repeatedly commissioned, and the extra electricity used to run more compressors than necessary is wasted. To configure the compressor units reasonably, it is necessary to predict the performance parameters of the compressor accurately [3].

Compressor performance parameters are mainly predicted by mechanism modelling or data-driven approaches. Early compressor performance analysis was mainly based on mechanism modelling, in which a flow loss model is established by manual calculation to determine the performance of the compressor [4]. However, the accuracy of this model is low and it requires a large number of manual calculations.

With advances in computer technology, Computational Fluid Dynamics (CFD) has opened up new possibilities for analysing compressor performance parameters. The technique is capable of modelling the complex flow field inside a centrifugal compressor. Wang applied three-dimensional CFD analysis to investigate the flow inside a centrifugal compressor [5] and examined the effect of different gas models on the CFD predictions.

Although CFD techniques can analyse compressor performance to some extent, it is difficult for them to model unsteady and turbulent flow mechanisms accurately, so the simulation accuracy is insufficient and convergence is poor. Machine learning modelling takes a data-driven approach that does not require a complex physical model and can improve the efficiency and accuracy of prediction. An increasing number of scholars have analysed compressor performance by means of machine learning. Pau used various regression models and artificial neural networks (ANNs) to predict the performance of centrifugal compressors. In particular, the accuracy and efficiency of Gaussian Process Regression (GPR) and ANNs in modelling the pressure ratio of a centrifugal compressor for a given mass flow rate and speed were investigated. The results show that both GPR and ANNs can predict compressor performance well [6]. Chu proposed a hybrid modelling approach that combines loss models with radial basis function (RBF) neural networks; compared with the traditional mechanism model, the hybrid model improves prediction accuracy [7].

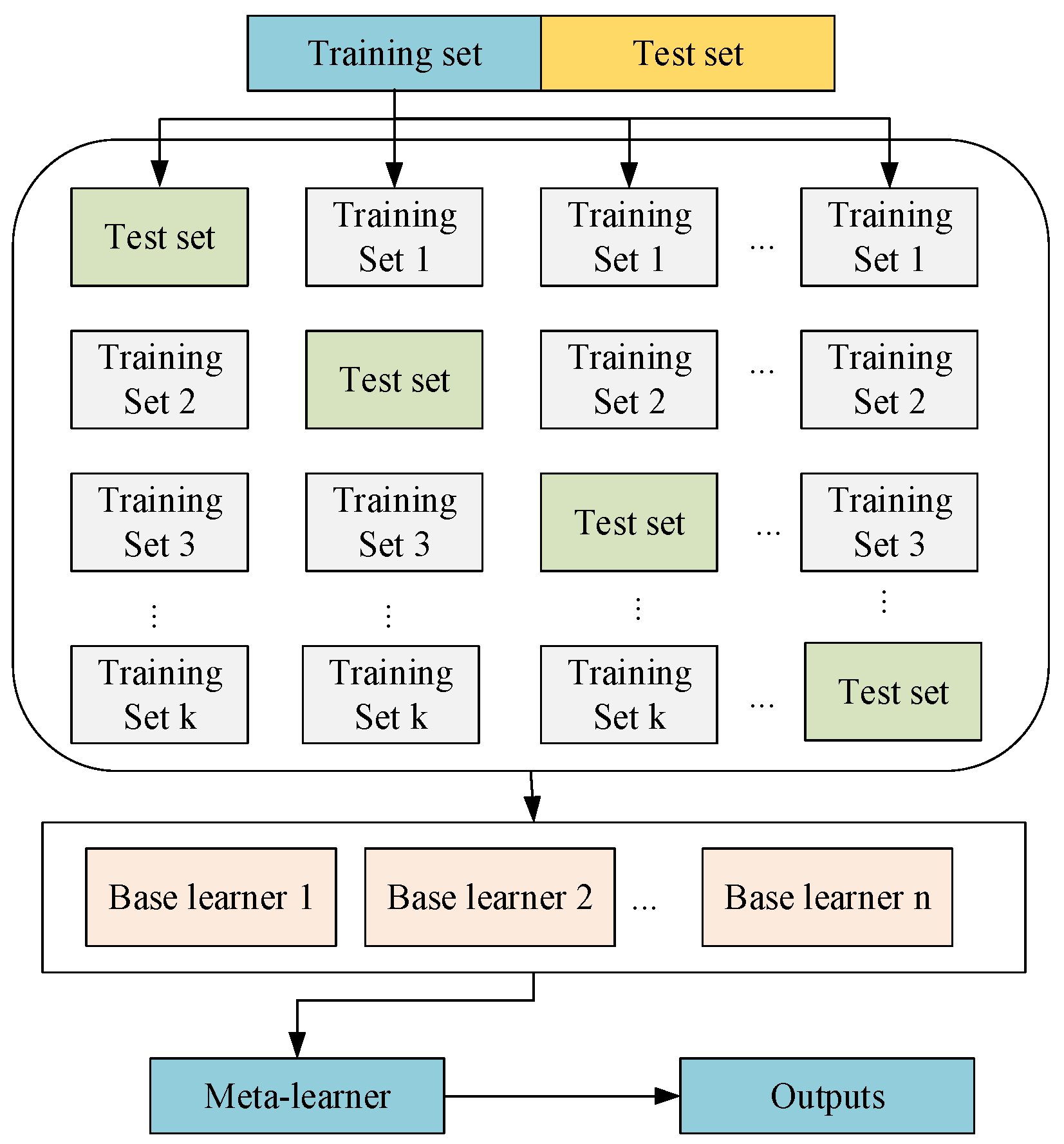

The above methods each use a single model, which does not take advantage of the strengths of different models and makes it difficult to improve accuracy. Stacking ensemble learning addresses this problem: multiple models are integrated to exploit the advantages of different algorithms and compensate for the deficiencies of a single model. Shi combined strong learners such as support vector machines and long short-term memory (LSTM) networks, whose differences and diversity complement each other, to improve prediction accuracy [8]. However, existing studies focus only on improving the accuracy of the predictive models and ignore the useful information contained in the prediction error, which makes further accuracy gains difficult. In addition, the first layer of the traditional Stacking model processes the test-set predictions by direct averaging [9]. As a result, some of the strengths of the base models are masked and their shortcomings are magnified.

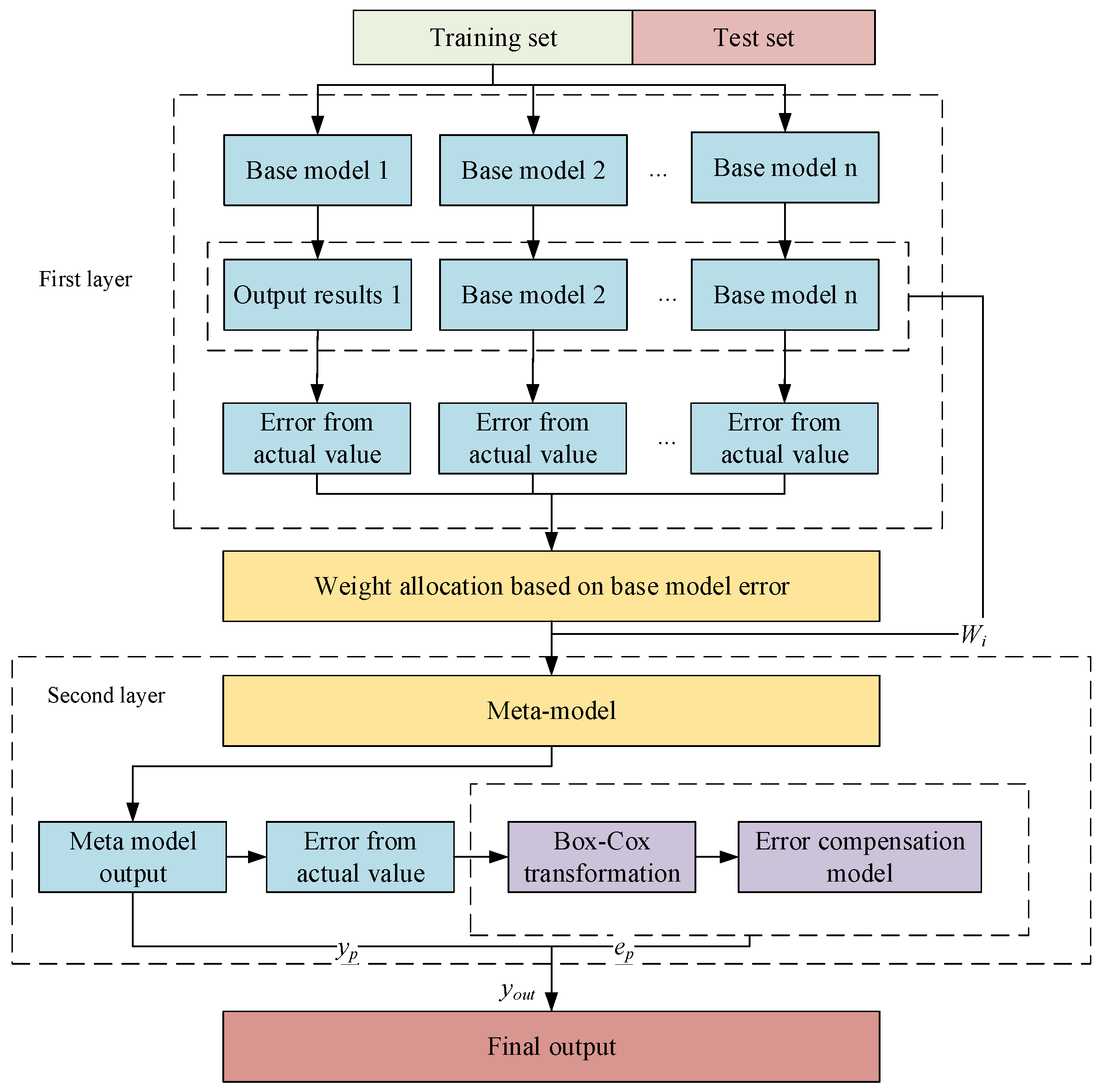

To solve the above problems, this work proposes an improved error-based ensemble learning model for compressor performance parameter prediction. Weights are calculated from the error between the predicted and actual values of the validation set on each fold, and the base model’s per-fold predictions on the test set are then weighted accordingly instead of being averaged directly.
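The weighting idea can be illustrated with a minimal sketch. The exact weighting formula is not given in this section, so the inverse-RMSE rule below, the function names, and all numbers are assumptions chosen only for illustration.

```python
import math

def rmse(actual, predicted):
    """Root-mean-square error between two equal-length sequences."""
    n = len(actual)
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / n)

def fold_weights(fold_errors, eps=1e-12):
    """Assumed rule: weight each fold by the inverse of its validation error."""
    inverse = [1.0 / (e + eps) for e in fold_errors]
    total = sum(inverse)
    return [v / total for v in inverse]

def weighted_test_prediction(fold_test_preds, weights):
    """Combine per-fold test predictions sample by sample using the fold weights."""
    n_samples = len(fold_test_preds[0])
    return [
        sum(w * preds[i] for w, preds in zip(weights, fold_test_preds))
        for i in range(n_samples)
    ]

# Hypothetical validation targets and predictions for three folds.
val_actual = [[10.0, 12.0], [11.0, 13.0], [9.0, 14.0]]
val_pred = [[10.5, 11.5], [11.1, 12.9], [8.0, 15.0]]
fold_errors = [rmse(a, p) for a, p in zip(val_actual, val_pred)]
weights = fold_weights(fold_errors)

# Each fold's model also predicts the same two test samples.
fold_test_preds = [[20.0, 30.0], [21.0, 31.0], [25.0, 35.0]]
combined = weighted_test_prediction(fold_test_preds, weights)

# Direct averaging, as in the traditional first layer, for comparison.
simple_average = [sum(col) / len(col) for col in zip(*fold_test_preds)]
```

In this toy case the third fold has the largest validation error, so its outlying test predictions contribute least to `combined`, whereas direct averaging treats all folds equally.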

3. Results and Discussion

3.1. Experimental Data

The compressor data used in the experiment were obtained from the Shengu Group. To eliminate the effect of scale differences between variables, the data were normalised [21].
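The text does not state which normalisation was applied. As one common possibility, the sketch below assumes min-max scaling of each variable to the range [0, 1]; the sample values and function names are hypothetical.

```python
def min_max_normalise(column):
    """Scale a list of numbers to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(column), max(column)
    span = hi - lo
    if span == 0:
        return [0.0 for _ in column]
    return [(v - lo) / span for v in column]

def normalise_table(rows):
    """Normalise each column of a row-major table independently."""
    columns = list(zip(*rows))
    scaled_columns = [min_max_normalise(list(c)) for c in columns]
    return [list(r) for r in zip(*scaled_columns)]

# Hypothetical samples: (speed in rpm, inlet pressure in MPa).
samples = [
    [4000.0, 5.0],
    [5000.0, 6.0],
    [6000.0, 7.0],
]
scaled = normalise_table(samples)
```

After scaling, speed and pressure share the same range, so neither dominates the other purely because of its units.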

Prediction models for different target parameters have different input variables, and the choice of input variables has an important effect on the output variable. For example, the temperature, pressure, and speed of the compressor can be used as input variables. To ensure prediction accuracy, it is necessary to construct the three models mentioned above to predict the compressor’s power, surge, and blockage parameters.

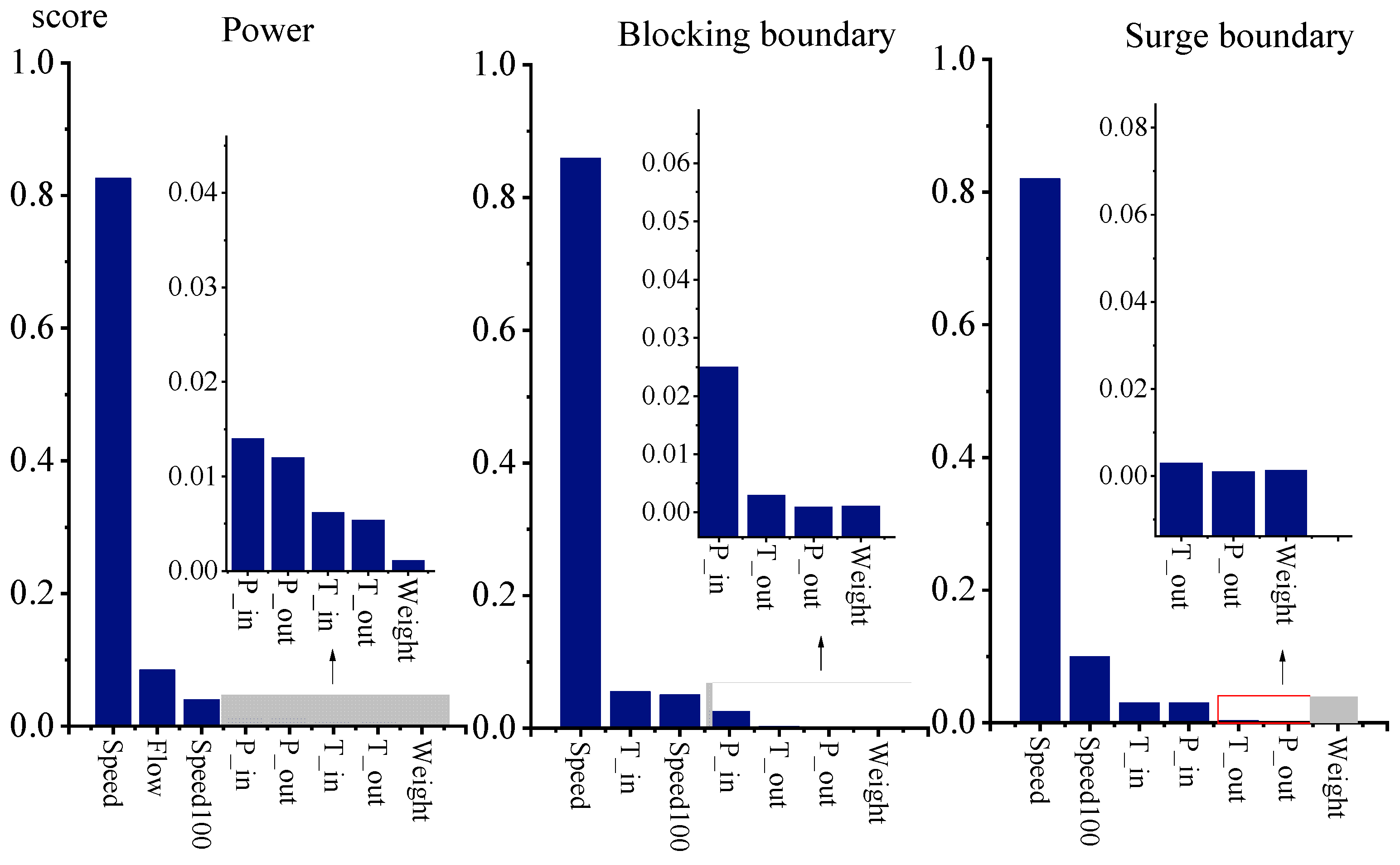

The Random Forest algorithm was used to evaluate the importance of the variables and to screen the model’s input variables [22]. The importance of the variables corresponding to the power, blockage boundary, and surge boundary is shown in Figure 4. According to the importance results, variables of low importance were excluded to reduce the training time and complexity of the model. Finally, the input and output variables corresponding to the different prediction objectives were determined, as shown in Table 1.

Based on the data presented in Table 1, speed was selected as the input to the strong-correlation input layer of the encoder–decoder model, and the other variables were used as inputs to the weak-correlation input layer. Because the only substance flowing through the compressor is natural gas, the molecular weight is of low importance and the molecular weight data are discarded here; if the substance passing through the compressor were not homogeneous, the molecular weight could not be discarded. All of the input variables in Table 1 were used for the RBF base model. In total, 90% of the dataset was used as training data and 10% as test data [23].
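A 90%/10% partition of this kind can be sketched as follows. Whether the original split was random or ordered is not stated, so the seeded shuffle, the function name, and the placeholder data are assumptions.

```python
import random

def train_test_split(samples, test_fraction=0.1, seed=0):
    """Shuffle indices reproducibly and hold out `test_fraction` of the samples."""
    indices = list(range(len(samples)))
    random.Random(seed).shuffle(indices)
    n_test = max(1, round(len(samples) * test_fraction))
    test_idx = set(indices[:n_test])
    train = [s for i, s in enumerate(samples) if i not in test_idx]
    test = [s for i, s in enumerate(samples) if i in test_idx]
    return train, test

# Placeholder dataset of 50 sample identifiers.
data = list(range(50))
train, test = train_test_split(data)
```

Fixing the seed keeps the held-out test samples identical across runs, which makes comparisons between models fair.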

3.2. Base Learner Experimental Results

The coefficient of determination ($R^2$) and the root-mean-square error (RMSE) were used as evaluation indicators for the experimental results [24]. The closer the value of $R^2$ is to 1, the better the model predicts; the smaller the RMSE, the better the prediction. The mathematical expressions of these criteria are shown in Equations (8) and (9):

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i-\hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i-\bar{y}\right)^2} \tag{8}$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i-\hat{y}_i\right)^2} \tag{9}$$

In the equations, $y_i$ is the actual value of the output variable, $\hat{y}_i$ is the predicted value of the output variable, $\bar{y}$ is the average value of the output variable, and $n$ is the number of samples.
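For concreteness, the two indicators can be computed as in the following sketch, which uses the standard definitions of the coefficient of determination and RMSE. The function names and sample values are illustrative and do not come from the experiments.

```python
import math

def rmse(actual, predicted):
    """Root-mean-square error: square root of the mean squared residual."""
    n = len(actual)
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / n)

def r_squared(actual, predicted):
    """Coefficient of determination: 1 - residual sum of squares / total sum of squares."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot

# Illustrative values: three exact predictions and one that is off by 1.
actual = [1.0, 2.0, 3.0, 4.0]
predicted = [1.0, 2.0, 3.0, 5.0]

score_r2 = r_squared(actual, predicted)
score_rmse = rmse(actual, predicted)
```

Here the residual sum of squares is 1 and the total sum of squares is 5, so `score_r2` is 0.8 and `score_rmse` is 0.5.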

The base models need to have a certain predictive accuracy. They cannot produce large errors, or else the meta-model will find them difficult to correct. The processed dataset was used to conduct the experiments, with different data fed to different encoders according to their importance. The experimental results obtained are shown in Table 2, and the prediction outcomes of the radial basis function neural network model are displayed in Table 3. These results show that the base models predict the compressor performance parameters well, so the selection criteria for the base models are satisfied.

The above results show that each base learner predicts fairly accurately. It should also be ensured that the learners differ from one another so that the ensemble can learn information about the data from multiple perspectives. The Pearson correlation coefficient was selected as the evaluation index for the difference analysis of the base learners [25]. The larger the Pearson coefficient, the greater the correlation and the smaller the difference between algorithms [26]. The Pearson correlation coefficient of two vectors x and y is calculated as in Equation (10):

$$r = \frac{\sum_{i=1}^{n}\left(x_i-\bar{x}\right)\left(y_i-\bar{y}\right)}{\sqrt{\sum_{i=1}^{n}\left(x_i-\bar{x}\right)^2}\,\sqrt{\sum_{i=1}^{n}\left(y_i-\bar{y}\right)^2}} \tag{10}$$

Here, $\bar{x}$ and $\bar{y}$ are the average values of vectors x and y, respectively.
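A minimal sketch of the Pearson coefficient for two vectors is given below. Which base-learner vectors were compared in the study is not stated here, so the inputs are hypothetical output vectors used only to show the calculation.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical output vectors of three learners on the same four samples.
learner_a = [1.0, 2.0, 3.0, 4.0]
learner_b = [2.0, 4.0, 6.0, 8.0]  # perfectly correlated with learner_a
learner_c = [2.0, 1.0, 4.0, 3.0]  # partly correlated with learner_a

r_ab = pearson(learner_a, learner_b)
r_ac = pearson(learner_a, learner_c)
```

In this example `r_ab` is 1.0 and `r_ac` is 0.6, so `learner_c` would add more diversity to an ensemble containing `learner_a` than `learner_b` would.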

The diversity of the base learners also has a crucial impact on the prediction performance of the subsequent fusion model. Choosing diverse learners can reduce the prediction error of the output layer to a certain extent. The correlation results for the different performance parameters of the compressor are shown in Table 4. The results show that the correlation between the base models is low. This low correlation differentiates the base models and helps the ensemble learning algorithm to exploit the advantages of the different algorithms.

3.3. Stacking Model Analysis

3.3.1. Analysis of Traditional Stacking Ensemble Learning Model

This work uses the models described above as the first-layer models of the Stacking model. To prevent overfitting, a linear regression model is used as the second-layer model. The calculation results are shown in Figure 5, where model A is the encoder–decoder model and model B is the radial basis function neural network model. The prediction capability of each model is evaluated using $R^2$ and RMSE. A comparison between the integrated and standalone models is presented in Table 5.
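The two-layer structure can be sketched as follows. This is not the study's implementation: the two base learners are toy stand-ins for the encoder–decoder and RBF network models, the meta-learner is assumed to be a linear regression without an intercept, and the data are hypothetical.

```python
def fit_slope(xs, ys):
    """Stand-in base learner A: least-squares line through the origin."""
    slope = sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)
    return lambda x: slope * x

def fit_nearest(xs, ys):
    """Stand-in base learner B: 1-nearest-neighbour lookup."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]

def out_of_fold(fit, xs, ys, k):
    """Predict every training sample with a model that never saw it."""
    oof = [0.0] * len(xs)
    for fold in range(k):
        held = [i for i in range(len(xs)) if i % k == fold]
        kept = [i for i in range(len(xs)) if i % k != fold]
        model = fit([xs[i] for i in kept], [ys[i] for i in kept])
        for i in held:
            oof[i] = model(xs[i])
    return oof

def fit_meta(pa, pb, ys):
    """Second layer: least-squares weights for y ~ wa*pa + wb*pb (no intercept)."""
    saa = sum(a * a for a in pa)
    sbb = sum(b * b for b in pb)
    sab = sum(a * b for a, b in zip(pa, pb))
    say = sum(a * y for a, y in zip(pa, ys))
    sby = sum(b * y for b, y in zip(pb, ys))
    det = saa * sbb - sab * sab
    return (say * sbb - sby * sab) / det, (saa * sby - sab * say) / det

def sse(actual, predicted):
    """Sum of squared errors."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted))

# Hypothetical one-dimensional training data (roughly y = 2x plus noise).
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]
ys = [2.3, 3.8, 6.4, 7.9, 10.2, 11.7, 14.5, 15.8, 18.1, 20.4]

# First layer: fivefold out-of-fold predictions of each base learner.
oof_a = out_of_fold(fit_slope, xs, ys, k=5)
oof_b = out_of_fold(fit_nearest, xs, ys, k=5)

# Second layer: linear combination fitted on the out-of-fold predictions.
wa, wb = fit_meta(oof_a, oof_b, ys)
meta_oof = [wa * a + wb * b for a, b in zip(oof_a, oof_b)]

# Refit the base learners on all training data to predict new inputs.
model_a, model_b = fit_slope(xs, ys), fit_nearest(xs, ys)

def stacked(x):
    """Stacked prediction for a new input x."""
    return wa * model_a(x) + wb * model_b(x)
```

Fitting the meta-learner on out-of-fold predictions rather than in-sample predictions keeps the second layer from rewarding a base learner that has simply memorised its training data.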

Figure 5 shows the predicted results for some of the power parameters. The operating-condition index on the horizontal axis represents the sample points of the test set. From the table and the figure, it can be seen that the evaluation metrics of the basic Stacking ensemble model are all better than those of the single models. In terms of power prediction, its root-mean-square error is 24.8% and 46.5% lower than that of the two single models, which verifies the superiority of the ensemble learning algorithm. In the plotted results, the predicted values of the Stacking model are always close to the true values. Even where a single model has a larger error for a certain sample, this error is corrected by the Stacking model, so the robustness of the model is improved to some extent.

The prediction results for the blockage boundary are shown in Figure 6. The prediction accuracy of the Stacking model is again higher than that of the single models: its root-mean-square error is reduced by 30.9% and 7% compared to the two single models.

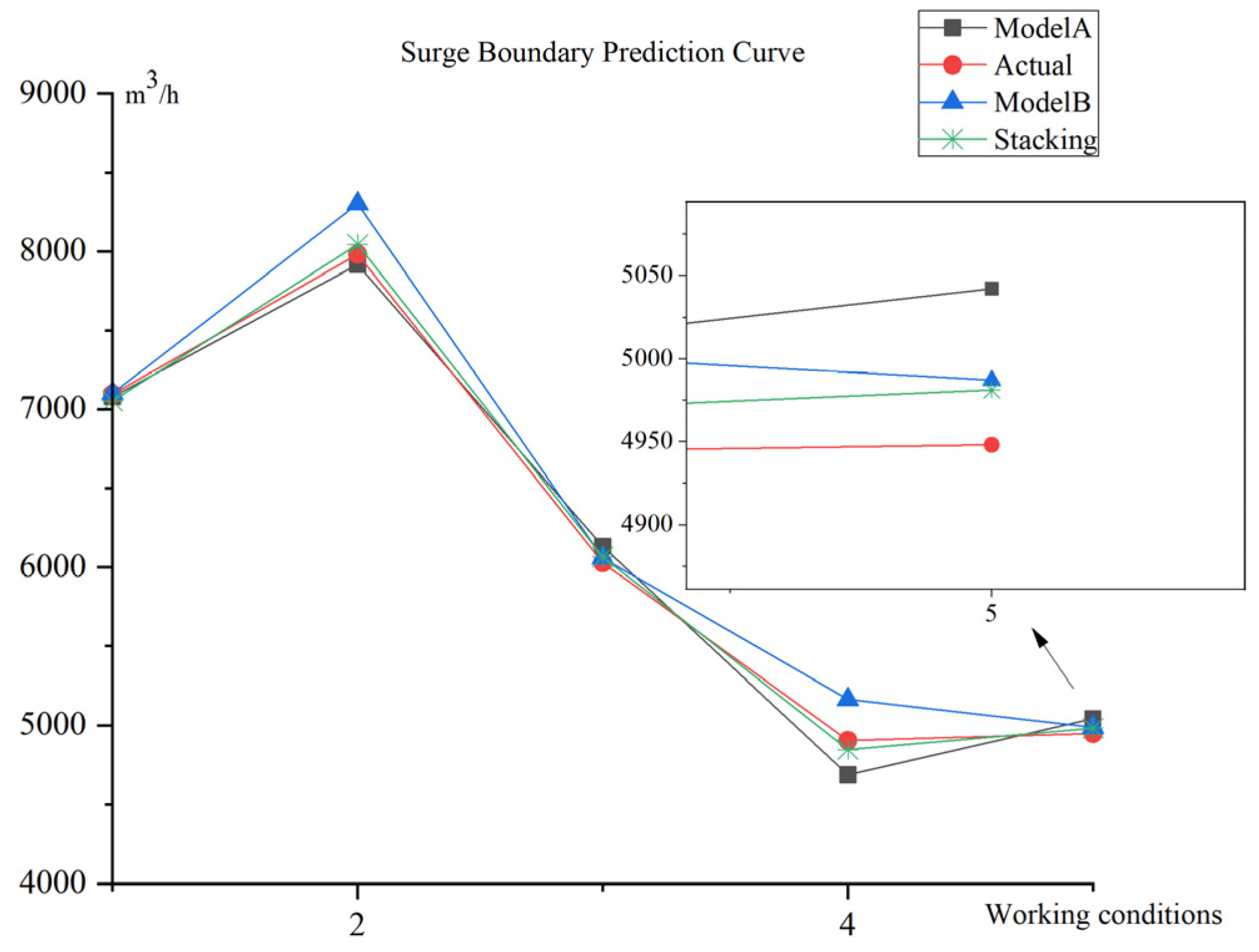

The prediction results for the surge boundary are shown in Figure 7. They also show the superiority of the Stacking model, whose root-mean-square error is reduced by 13.4% and 12.5% compared to the two single models.
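The percentage reductions quoted in this subsection are presumably relative reductions with respect to each single model's RMSE. Under that assumption, the arithmetic is as in the sketch below; the RMSE values are hypothetical and are not taken from Table 5.

```python
def relative_reduction(baseline_rmse, new_rmse):
    """Percentage by which new_rmse is lower than baseline_rmse."""
    return 100.0 * (baseline_rmse - new_rmse) / baseline_rmse

# Hypothetical RMSE values chosen only to illustrate the calculation.
single_model_rmse = 2.0
stacking_rmse = 1.5
reduction = relative_reduction(single_model_rmse, stacking_rmse)
```

With these values the reduction is 25%, meaning the Stacking model's RMSE is three quarters of the single model's.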

3.3.2. Analysis of Improved Stacking Integrated Learning Model

The improved Stacking ensemble learning algorithm proposed in this article was trained on the same training set, and the base learners use fivefold cross-validation. To demonstrate the superiority of the improved model and the effectiveness of each improvement point, this study built a separate prediction model for each improvement point. The models corresponding to each improvement point and the final model in this study are as follows:

Model 1: Classic Stacking model;

Model 2: Weight allocation for the traditional Stacking model;

Model 3: Error correction is performed on the prediction results of the traditional Stacking model;

Model 4: The model described in this article.

This work therefore designed an ablation experiment to analyse the impact of each improvement point on the prediction performance of the Stacking model, comparing the prediction results of each model with the actual values of the compressor performance parameters. The evaluation index values of the ablation models are shown in Table 6. As shown in the table, each improvement point reduces the error relative to the base model and improves the accuracy of the prediction. For the power parameters of the compressor, the traditional Stacking model already predicts well, but the improved ensemble model can still improve the prediction accuracy to a certain extent. For the boundary parameters of the compressor, the dataset is relatively small and the predictions are slightly less accurate than for the power parameters, but the prediction accuracy of the boundary parameters is further improved by error-based weight assignment and compensation. The root-mean-square error of model 4 in power prediction is reduced by 32.0%, 11.9%, and 19.6% compared to the other three models. For the surge boundary prediction, the errors are reduced by 25.9%, 13.1%, and 9.9%, and for the blockage boundary prediction, the reductions are 30.5%, 15.2%, and 4.3%, respectively. In summary, the experiments verify the effectiveness of the enhanced Stacking model described in this article.

4. Conclusions

In this work, the power parameters and the safe operating boundaries of a large centrifugal compressor were analysed and predicted by means of an error-based improved Stacking model. The RBF neural network model and the encoder–decoder model with hierarchical inputs were integrated through the meta-model, and weights were assigned to the base-model predictions based on the validation-set error of the K-fold cross-validation. Finally, the output error of the meta-model was Box–Cox transformed for error compensation. The performance of the new model was compared experimentally with that of the other models. The results show that, compared with a single model and the traditional Stacking model, the prediction accuracy of the integrated model is improved to varying degrees and it has a better anti-interference ability. The integrated model can better predict the compressor’s power and safety boundary parameters.