Deep Learning Based on Multi-Decomposition for Short-Term Load Forecasting

Abstract

1. Introduction

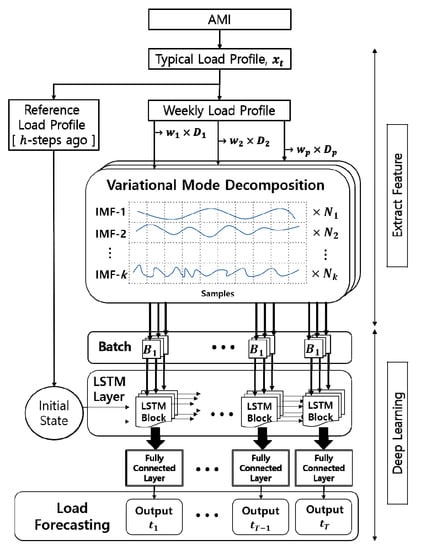

2. The Proposed Multi-Decomposition for Feature Extraction

2.1. Enhanced AMI for Small-Scale Load and Real Time

2.2. Empirical Mode Decomposition

- Over the entire data set, the number of extrema and the number of zero crossings must be equal or differ by at most one;

- At every point, the mean of the upper envelope (interpolated through the local maxima) and the lower envelope (interpolated through the local minima) must be zero.
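The two IMF conditions above can be checked numerically. The sketch below is a minimal illustration, not the authors' implementation: it assumes a uniformly sampled 1-D NumPy array, detects strict local extrema only, and uses linear interpolation (`np.interp`) for the envelopes where EMD normally uses cubic splines. The tolerance `tol` and all helper names are hypothetical.

```python
import numpy as np


def local_extrema(x):
    """Indices of strict interior local maxima and minima."""
    d = np.diff(x)
    maxima = np.where((d[:-1] > 0) & (d[1:] < 0))[0] + 1
    minima = np.where((d[:-1] < 0) & (d[1:] > 0))[0] + 1
    return maxima, minima


def zero_crossings(x):
    """Number of sign changes between consecutive nonzero samples."""
    s = np.sign(x)
    s = s[s != 0]  # drop exact zeros so touching zero is not double-counted
    return int(np.sum(s[:-1] != s[1:]))


def envelope_mean(x):
    """Pointwise mean of the upper and lower envelopes.

    Simplification (assumption): linear interpolation instead of cubic
    splines; np.interp holds the envelope constant beyond the outermost
    extrema.
    """
    n = np.arange(len(x))
    maxima, minima = local_extrema(x)
    upper = np.interp(n, maxima, x[maxima])
    lower = np.interp(n, minima, x[minima])
    return 0.5 * (upper + lower)


def is_imf(x, tol=0.05):
    """Check both IMF conditions; tol is relative to the peak amplitude."""
    maxima, minima = local_extrema(x)
    if len(maxima) < 2 or len(minima) < 2:
        return False  # too few extrema to build envelopes
    n_extrema = len(maxima) + len(minima)
    # Condition 1: extrema and zero crossings differ by at most one.
    cond1 = abs(n_extrema - zero_crossings(x)) <= 1
    # Condition 2: envelope mean is (approximately) zero everywhere.
    cond2 = np.max(np.abs(envelope_mean(x))) <= tol * np.max(np.abs(x))
    return bool(cond1 and cond2)
```

For example, a pure sinusoid passes both tests, whereas the same sinusoid with a DC offset fails: a small offset violates the zero-mean envelope condition, and a large offset also removes every zero crossing.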

2.3. Variational Mode Decomposition

VMD Algorithm

- For each mode k, compute the associated analytic signal by means of the Hilbert transform to obtain its one-sided (unilateral) frequency spectrum;

- For each mode k, shift the mode's frequency spectrum to baseband by mixing it with a complex exponential tuned to the mode's estimated center frequency;

- Estimate the bandwidth of each mode from the Gaussian smoothness of the demodulated signal, i.e., the squared L2 norm of its time derivative.
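The three steps above can be illustrated for a single, already-extracted mode. The NumPy sketch below is an illustration under stated assumptions, not the full VMD algorithm: it builds the analytic signal with an FFT (the same one-sided construction used by `scipy.signal.hilbert`), demodulates it with a given center frequency `omega` in rad/s, and evaluates the bandwidth as the squared L2 norm of a finite-difference time derivative. The iterative updates that VMD uses to find the modes and their center frequencies are not shown, and all function names are hypothetical.

```python
import numpy as np


def analytic_signal(x):
    """Step 1: analytic signal x + j*H{x}, built by zeroing negative frequencies."""
    n = len(x)
    X = np.fft.fft(x)
    h = np.zeros(n)
    h[0] = 1.0  # keep the DC bin
    if n % 2 == 0:
        h[n // 2] = 1.0  # keep the Nyquist bin once
        h[1:n // 2] = 2.0  # double the positive frequencies
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(X * h)


def demodulate(z, omega, t):
    """Step 2: mix with exp(-j*omega*t) to shift the spectrum to baseband."""
    return z * np.exp(-1j * omega * t)


def bandwidth(d, dt):
    """Step 3: squared L2 norm of the time derivative (Riemann sum)."""
    grad = np.gradient(d, dt)
    return float(np.sum(np.abs(grad) ** 2) * dt)


def mode_bandwidth(u, omega, t):
    """Bandwidth estimate of mode u around the center frequency omega."""
    z = analytic_signal(u)
    d = demodulate(z, omega, t)
    return bandwidth(d, t[1] - t[0])
```

A mode demodulated at its true center frequency yields a near-constant baseband signal and hence a near-zero bandwidth, while a mistuned frequency yields a large one. VMD minimizes this penalty summed over the K modes, subject to the modes adding up to the original signal.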

2.4. Decomposition for Feature Selection

2.5. Three-Step Regularization Process

3. Deep Learning

3.1. Long Short-Term Memory Neural Networks

3.2. Nonlinear Autoregressive Network with Exogenous Inputs

4. Experiments

4.1. Prediction of the Time Scale

4.2. Extract Feature Layer

4.3. Long Short-Term Memory Layer

4.4. Model Construction

4.4.1. Hyperparameter Tuning and Training Options

4.4.2. Training and Testing

4.4.3. Performance Measures

5. Load Profile Analysis by Multi-Decomposition Methods

5.1. Weekly Seasonality

5.2. Comparison of Decomposition Performance

6. Case Studies

6.1. Comparative Conventional Load Forecasting Models

6.2. Weekly Load Forecasting

6.3. Benchmark for Different Prediction Time Scales

7. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

Nomenclature

| Abbreviation/Symbol | Description |
|---|---|
| AMI | advanced metering infrastructure |
| ANN | artificial neural network |
| LSTM | long short-term memory |
| EMD | empirical mode decomposition |
| VMD | variational mode decomposition |
| LTLF | long-term load forecasting |
| MTLF | medium-term load forecasting |
| STLF | short-term load forecasting |
| USTLF | ultra-short-term load forecasting |
| DSM | demand-side management |
| ARIMA | autoregressive integrated moving average |
| GPR | Gaussian process regression |
| GRU | gated recurrent unit |
| SVR | support vector regression |
| RNN | recurrent neural network |
| NARX | nonlinear autoregressive network with exogenous inputs |
| CNN | convolutional neural network |
| IMFs | intrinsic mode functions |
| k | mode index |
| | intrinsic mode |
| | weekly load profile |
| ω | frequency of mode |
| K | total number of modes |
| | typical load profile |
| | load weekly seasonality feature |
| | IMF of the load weekly seasonality feature |
| | Dirac distribution |
| | exponent factor of the weekly decay |
| | IMF normalization factor |
| BN | batch normalization |
| | memory cell state of LSTM |
| | forget gate of LSTM |
| | input gate of LSTM |
| | input node of LSTM |
| | output gate of LSTM |
| | output value of LSTM |
| RMSE | root mean squared error |
| MAE | mean absolute error |
| MAPE | mean absolute percentage error |
| VMF | variational mode function |
| EMF | empirical mode function |


| Decomposition | IMF 1 | IMF 2 | IMF 3 | IMF 4 | IMF 5 | IMF 6 | IMF 7 | IMF 8 | IMF 9 | IMF 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| EMD | 0.58 | 0.42 | 0.40 | 0.28 | 0.46 | 0.35 | 0.02 | 0.43 | 0.82 | 0.96 |
| VMD | 0.98 | 0.83 | 0.80 | 0.63 | 0.53 | 0.26 | 0.15 | 0.01 | −0.02 | −0.02 |

| Prediction Horizon | Index | ARIMA | GPR | SVR | NARX | EMD NARX | VMD NARX | LSTM | EMD LSTM | VMD LSTM |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 step ahead (5 min) | MAE | 7.45 | 6.03 | 3.43 | 7.52 | 7.33 | 3.25 | 2.92 | 5.53 | 1.95 |
| | RMSE | 11.77 | 10.21 | 6.89 | 11.89 | 11.21 | 6.62 | 4.98 | 8.72 | 4.28 |
| | MAPE (%) | 3.46 | 2.67 | 1.96 | 3.61 | 3.39 | 1.84 | 1.12 | 2.21 | 0.71 |
| 12 steps ahead (1 h) | MAE | 17.28 | 16.11 | 14.76 | 17.71 | 17.02 | 15.12 | 9.01 | 11.69 | 4.81 |
| | RMSE | 22.12 | 20.94 | 20.12 | 24.12 | 22.49 | 19.31 | 12.87 | 15.08 | 7.53 |
| | MAPE (%) | 6.20 | 6.06 | 5.70 | 6.35 | 6.27 | 5.43 | 3.54 | 4.27 | 1.90 |
| 36 steps ahead (3 h) | MAE | 57.14 | 53.96 | 48.72 | 58.85 | 56.54 | 50.69 | 30.25 | 38.52 | 16.27 |
| | RMSE | 64.50 | 61.35 | 59.31 | 70.64 | 66.80 | 56.38 | 38.05 | 43.66 | 22.40 |
| | MAPE (%) | 20.62 | 19.91 | 18.17 | 21.79 | 20.97 | 20.03 | 11.63 | 14.26 | 6.01 |
| 288 steps ahead (24 h) | MAE | 51.22 | 48.55 | 43.25 | 52.68 | 51.50 | 45.12 | 28.19 | 32.65 | 15.60 |
| | RMSE | 59.38 | 58.81 | 56.88 | 58.12 | 57.24 | 56.85 | 35.98 | 37.38 | 21.80 |
| | MAPE (%) | 18.90 | 17.91 | 16.13 | 19.16 | 19.06 | 16.71 | 10.62 | 11.78 | 5.75 |
| 576 steps ahead (48 h) | MAE | 57.24 | 52.87 | 46.57 | 57.48 | 55.65 | 48.23 | 28.60 | 32.48 | 15.85 |
| | RMSE | 63.28 | 60.31 | 59.72 | 62.51 | 61.49 | 57.53 | 36.24 | 42.27 | 22.11 |
| | MAPE (%) | 22.08 | 19.72 | 17.76 | 21.49 | 20.99 | 17.18 | 10.92 | 12.18 | 5.89 |
| 864 steps ahead (72 h) | MAE | 60.45 | 58.55 | 51.92 | 59.75 | 59.01 | 53.44 | 29.12 | 34.37 | 16.09 |
| | RMSE | 68.24 | 62.42 | 58.35 | 67.38 | 66.26 | 58.72 | 36.85 | 43.52 | 22.18 |
| | MAPE (%) | 24.14 | 21.76 | 18.72 | 22.43 | 21.19 | 19.05 | 11.05 | 12.86 | 5.96 |
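The MAE, RMSE, and MAPE indices in the table can be computed as below, assuming the standard definitions (the exact formulas of Section 4.4.3 are not reproduced here). `relative_reduction` is a hypothetical helper, added only to compare two entries of the table; it is not part of the original method.

```python
import numpy as np


def mae(y, yhat):
    """Mean absolute error, in the units of the load."""
    return float(np.mean(np.abs(y - yhat)))


def rmse(y, yhat):
    """Root mean squared error, in the units of the load."""
    return float(np.sqrt(np.mean((y - yhat) ** 2)))


def mape(y, yhat):
    """Mean absolute percentage error in percent; assumes y contains no zeros."""
    return float(100.0 * np.mean(np.abs((y - yhat) / y)))


def relative_reduction(baseline, proposed):
    """Percentage by which `proposed` lowers an error index relative to `baseline`."""
    return 100.0 * (baseline - proposed) / baseline
```

For example, `relative_reduction(9.01, 4.81)` is about 46.6: at the 12-step (1 h) horizon, the MAE of VMD LSTM is roughly 47% lower than that of the plain LSTM.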

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kim, S.H.; Lee, G.; Kwon, G.-Y.; Kim, D.-I.; Shin, Y.-J. Deep Learning Based on Multi-Decomposition for Short-Term Load Forecasting. Energies 2018, 11, 3433. https://doi.org/10.3390/en11123433


Chicago/Turabian style: Kim, Seon Hyeog, Gyul Lee, Gu-Young Kwon, Do-In Kim, and Yong-June Shin. 2018. "Deep Learning Based on Multi-Decomposition for Short-Term Load Forecasting." Energies 11, no. 12: 3433. https://doi.org/10.3390/en11123433