The Effects of Certificate-of-Need Laws on the Quality of Hospital Medical Services

Abstract

1. Introduction

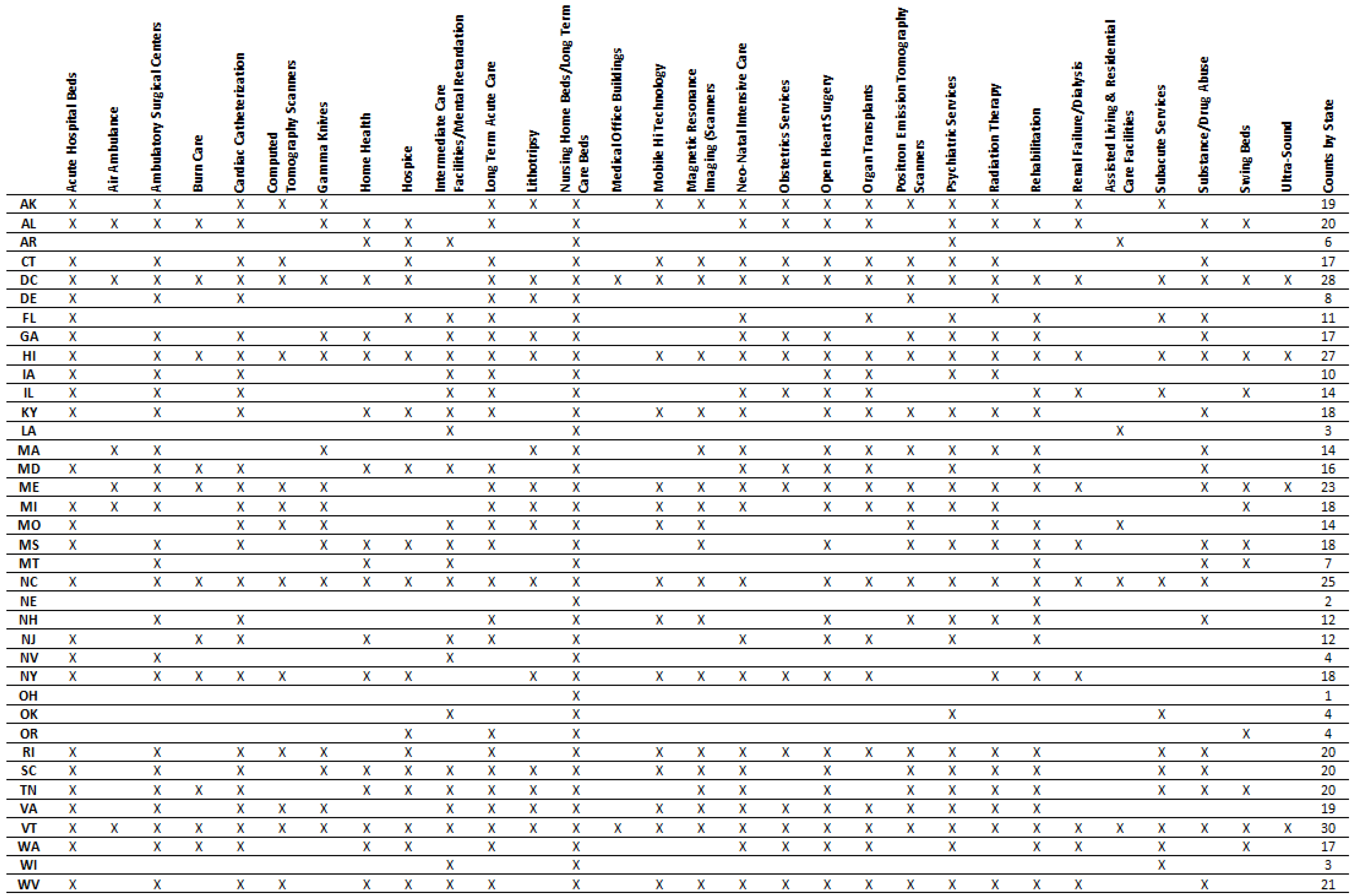

2. Regulatory Background

3. Data

4. Empirical Framework

5. Results

5.1. Descriptive Statistics

5.2. Hospital Quality Indicators

5.3. Pooled Panel Regression Results

6. Robustness Checks

6.1. Regression Results with Health Controls

6.2. Regression Results by Year (2011–2015)

6.3. Regression Results Excluding Low-Provider HRRs

6.4. Regression Results Excluding HRRs with the Most Uneven Number of Hospitals on Each Side of the Border

6.5. Regression Results Excluding States with Few CON Laws

6.6. Aggregate Hospital Quality Measures

7. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| 1 | The National Health Planning and Resources Development Act of 1974, the original impetus for CON laws, contains the following language in its statement of purpose: “The massive infusion of Federal funds into the existing health care system has contributed to inflationary increases in the cost of health care and failed to produce an adequate supply or distribution of health resources, and consequently has not made possible equal access for everyone to such resources”. Pub. L. No. 93-641 (1975). |

| 2 | |

| 3 | For a summary of Virginia’s application process, see Virginia Department of Health (2015, p. 18). |

| 4 | A hospital’s performance on the Deaths among Surgical Inpatients with Serious Treatable Complications measure is an accurate indicator of quality of care, assuming providers in CON and non-CON states turn away patients at the same rate. If this assumption does not hold, it may be that hospitals in CON states only appear to perform worse on this measure. For example, if CON regulations give incumbents the market power to be able to turn away all but the most seriously ill patients, the CON hospitals’ quality metrics would tend to be lower because they are treating a pool of less healthy patients, not because they provide lower-quality care. Alternatively, use of the Deaths among Surgical Inpatients with Serious Treatable Complications measure will result in underestimation of the effect of CON laws on hospital quality if patients with the most serious risk of dying choose high-quality hospitals and if those patients develop complications not because of poorer hospital care but because they are very ill. Therefore, the direction of the potential bias is theoretically ambiguous. |

| 5 | |

| 6 | For more detail about how these measures are calculated, see QualityNet (2016). |

| 7 | This research studies whether the existence of at least one CON law in a state influences the quality of hospital care. An alternative design might study the effect of those CON laws that directly affect hospital services. |

| 8 | One notable feature of the quality data is that, on average, the quality of medical services increases over time. All regression specifications for the pooled sample include year fixed effects. |

| 9 | We use year indicators in all columns. |

| 10 | For 2011, this criterion eliminates 24 HRRs and 417 providers from our subsample. For 2012, we exclude 23 HRRs and 414 hospitals. For 2013, we exclude 23 HRRs and 419 hospitals. For 2014, we exclude 21 HRRs and 401 hospitals. For 2015, we exclude 22 HRRs and 427 hospitals. |

| 11 | |

| 12 | Some of the AHRQ aggregate measures may be limited because they are constructed from only a few individual measures. |

References

- AHPA (American Health Planning Association). 2005. The Federal Trade Commission & Certificate of Need Regulation: An AHPA Critique. Available online: http://www.ahpanet.org/files/AHPAcritiqueFTC.pdf (accessed on 15 May 2021).

- AHRQ (Agency for Healthcare Research and Quality). 2010. AHRQ Quality Indicators: Composite Measures User Guide for the Patient Safety Indicators (PSI). Available online: http://www.qualityindicators.ahrq.gov/Downloads/Modules/PSI/V42/Composite_User_Technical_Specification_PSI.pdf (accessed on 15 May 2021).

- American FactFinder. 2016. United States Census Bureau. Available online: https://factfinder.census.gov/faces/nav/jsf/pages/index.xhtm (accessed on 15 May 2021).

- Bailey, James. 2018. The Effect of Certificate of Need Laws on All-Cause Mortality. Health Services Research 53: 49–62.

- Bailey, James. 2021. Certificate of Need and Inpatient Psychiatric Services. The Journal of Mental Health Policy and Economics 24: 117–24.

- Baker, Matthew C., and Thomas Stratmann. 2021. Barriers to Entry in the Healthcare Markets: Winners and Losers from Certificate-of-Need Laws. Socio-Economic Planning Sciences 77: 101007.

- Browne, James A., Jourdan M. Cancienne, Aaron J. Casp, Wendy M. Novicoff, and Brian C. Werner. 2018. Certificate-of-Need State Laws and Total Knee Arthroplasty. The Journal of Arthroplasty 33: 2020–24.

- Casp, Aaron J., Nicole E. Durig, Jourdan M. Cancienne, Brian C. Werner, and James A. Browne. 2019. Certificate-of-Need State Laws and Total Hip Arthroplasty. The Journal of Arthroplasty 34: 401–7.

- CDC (Centers for Disease Control and Prevention). 2016. Healthcare-Associated Venous Thromboembolism. Available online: http://www.cdc.gov/ncbddd/dvt/ha-vte.html (accessed on 15 May 2021).

- Chiu, Kevin. 2021. The Impact of Certificate of Need Laws on Heart Attack Mortality: Evidence from County Borders. Journal of Health Economics 79: 102518.

- Cimasi, Robert James. 2005. The U.S. Healthcare Certificate of Need Sourcebook. Frederick: Beard Books.

- CMS (Centers for Medicare & Medicaid Services). 2007. Frequently Asked Questions (FAQs): Implementation and Maintenance of CMS Mortality Measures for AMI & HF. Available online: https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/HospitalQualityInits/downloads/HospitalMortalityAboutAMI_HF.pdf (accessed on 15 May 2021).

- CMS (Centers for Medicare & Medicaid Services). 2008a. List of Diagnosis Related Groups (DRGs), FY2008. Available online: https://www.cms.gov/Research-Statistics-Data-and-Systems/Statistics-Trends-and-Reports/MedicareFeeforSvcPartsAB/downloads/DRGdesc08.pdf (accessed on 26 August 2016).

- CMS (Centers for Medicare & Medicaid Services). 2008b. Mode and Patient-Mix Adjustment of the CAHPS® Hospital Survey (HCAHPS). Available online: http://www.hcahpsonline.org/files/DescriptionHCAHPSModeandPMAwithBottomBoxModeApr_30_08.pdf (accessed on 15 May 2021).

- CMS (Centers for Medicare & Medicaid Services). 2012. Frequently Asked Questions (FAQs): CMS 30-Day Risk-Standardized Readmission Measures for Acute Myocardial Infarction (AMI), Heart Failure (HF), and Pneumonia. Available online: http://www.ihatoday.org/uploadDocs/1/cmsreadmissionfaqs.pdf (accessed on 15 May 2021).

- CMS (Centers for Medicare & Medicaid Services). 2016a. Hospital Compare. Available online: https://www.cms.gov/medicare/quality-initiatives-patient-assessment-instruments/hospitalqualityinits/hospitalcompare.html (accessed on 15 May 2021).

- CMS (Centers for Medicare & Medicaid Services). 2016b. Readmissions Reduction Program (HRRP). Available online: https://www.cms.gov/medicare/medicare-fee-for-service-payment/acuteinpatientpps/readmissions-reduction-program.html (accessed on 15 May 2021).

- CMS (Centers for Medicare & Medicaid Services). 2016c. The HCAHPS Survey—Frequently Asked Questions. Available online: https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/HospitalQualityInits/Downloads/HospitalHCAHPSFactSheet201007.pdf (accessed on 4 March 2016).

- CMS (Centers for Medicare & Medicaid Services). 2016d. Cost Reports by Fiscal Year. Available online: https://www.cms.gov/Research-Statistics-Data-and-Systems/Downloadable-Public-Use-Files/Cost-Reports/Cost-Reports-by-Fiscal-Year.html (accessed on 15 May 2021).

- County Health Rankings and Roadmaps. 2011–2015. Rankings Data. Available online: http://www.countyhealthrankings.org/rankings/data (accessed on 15 May 2021).

- County Health Rankings and Roadmaps. 2017. Rankings Data. Available online: https://www.countyhealthrankings.org/sites/default/files/media/document/key_measures_report/2017CHR_KeyFindingsReport_0.pdf (accessed on 15 May 2021).

- Cutler, David M., Robert S. Huckman, and Jonathan T. Kolstad. 2009. Input Constraints and the Efficiency of Entry: Lessons from Cardiac Surgery. NBER Working Paper No. 15214. Cambridge: National Bureau of Economic Research.

- Dartmouth Atlas Project. 2016. Dartmouth Atlas of Health Care (Database). Lebanon: The Dartmouth Institute for Health Policy and Clinical Practice. Available online: http://www.dartmouthatlas.org/ (accessed on 15 May 2021).

- Ettner, Susan L., Jacqueline S. Zinn, Haiyong Xu, Heather Ladd, Eugene Nuccio, Dara H. Sorkin, and Dana B. Mukamel. 2020. Certificate of Need and the Cost of Competition in Home Healthcare Markets. Home Health Care Services Quarterly 39: 51–64.

- Fayissa, Bichaka, Saleh Alsaif, Fady Mansour, Tesa E. Leonce, and Franklin G. Mixon. 2020. Certificate-Of-Need Regulation and Healthcare Service Quality: Evidence from the Nursing Home Industry. Healthcare 8: 423.

- Gaynor, Martin, and Robert J. Town. 2011. Competition in Health Care Markets. NBER Working Paper No. 17208. Cambridge: National Bureau of Economic Research.

- Goodman, David C., Elliott S. Fisher, and Chiang-Hua Chang. 2011. After Hospitalization: A Dartmouth Atlas Report on Post-Acute Care for Medicare Beneficiaries. Available online: http://www.dartmouthatlas.org/downloads/reports/Post_discharge_events_092811.pdf (accessed on 15 May 2021).

- Halm, Ethan A., Clara Lee, and Mark R. Chassin. 2002. Is Volume Related to Outcome in Health Care? A Systematic Review and Methodologic Critique of the Literature. Annals of Internal Medicine 137: 511–20.

- Herb, Joshua N., Rachael T. Wolff, Philip M. McDaniel, G. Mark Holmes, Trevor J. Royce, and Karyn B. Stitzenberg. 2021. Travel Time to Radiation Oncology Facilities in the United States and the Influence of Certificate of Need Policies. International Journal of Radiation Oncology, Biology, Physics 109: 344–51.

- Ho, Vivian, Meei-Hsiang Ku-Goto, and James G. Jollis. 2009. Certificate of Need (CON) for Cardiac Care: Controversy over the Contributions of CON. Health Services Research 44: 483–500.

- Horwitz, Leora, Chohreh Partovian, Zhenqiu Lin, Jeph Herrin, Jacqueline Grady, Mitchell Conover, Julia Montague, Chloe Dillaway, Kathleen Bartczak, Joseph Ross, et al. 2011. Hospital-Wide (All-Condition) 30-Day Risk-Standardized Readmission Measure; Baltimore: Centers for Medicare & Medicaid Services. Available online: https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/MMS/downloads/MMSHospital-WideAll-ConditionReadmissionRate.pdf (accessed on 15 May 2021).

- Hospital Compare Data Archive. n.d. Multiple Years. Data.Medicare.gov. Available online: https://data.medicare.gov/data/archives/hospital-compare (accessed on 15 May 2021).

- Li, Suhui, and Avi Dor. 2015. How Do Hospitals Respond to Market Entry? Evidence from a Deregulated Market for Cardiac Revascularization. Health Economics 24: 990–1008.

- Lorch, S. A., P. Maheshwari, and O. Even-Shoshan. 2011. The Impact of Certificate of Need Programs on Neonatal Intensive Care Units. Journal of Perinatology 32: 39–44.

- Medicare.gov. 2016. What Is Hospital Compare? Available online: https://www.medicare.gov/hospitalcompare/About/What-Is-HOS.html (accessed on 1 September 2016).

- Mitchell, Matthew, and Thomas Stratmann. 2022. The Economics of a Bed Shortage: Certificate-of-Need Regulation and Hospital Bed Utilization during the COVID-19 Pandemic. Journal of Risk and Financial Management 15: 10.

- NCSL (National Conference of State Legislatures). 2016. CON: Certificate of Need State Laws. Washington: NCSL. Available online: http://www.ncsl.org/research/health/con-certificate-of-need-state-laws.aspx (accessed on 15 May 2021).

- Ohsfeldt, Robert L., and Pengxiang Li. 2018. State Entry Regulation and Home Health Agency Quality Ratings. Journal of Regulatory Economics 53: 1–19.

- Page, Leigh. 2010. 5 Most Common Hospital Acquired Conditions Based on Total Costs. Becker’s Hospital Review. Available online: http://www.beckershospitalreview.com/hospital-management-administration/5-most-common-hospital-acquired-conditions-based-on-total-costs.html (accessed on 15 May 2021).

- Paul, Jomon Aliyas, Huan Ni, and Aniruddha Bagchi. 2014. Effect of Certificate of Need Law on Emergency Department Length of Stay. Journal of Emergency Medicine 47: 453–61.

- Piper, Thomas R. 2003. Certificate of Need: Protecting Consumer Interests. Paper presented at the PowerPoint Presentation before a Joint Federal Trade Commission—Department of Justice Hearing on Health Care and Competition Law and Policy, Washington, DC, June 10; Available online: http://www.ahpanet.org/files/PiperFTCDOJpresFINAL.pdf (accessed on 15 May 2021).

- Polsky, Daniel, Guy David, Jianing Yang, Bruce Kinosian, and Rachel M. Werner. 2014. The Effect of Entry Regulation in the Health Care Sector: The Case of Home Health. Journal of Public Economics 110: 1–14.

- QualityNet. 2016. Measure Methodology Reports: Readmission Measures. Available online: https://www.qualitynet.org/dcs/ContentServer?c=Page&pagename=QnetPublic%2FPage%2FQnetTier4&cid=1219069855841 (accessed on 15 May 2021).

- Rahman, Momotazur, Omar Galarraga, Jacqueline S. Zinn, David C. Grabowski, and Vincent Mor. 2016. The Impact of Certificate-of-Need Laws on Nursing Home and Home Health Care Expenditures. Medical Care Research and Review: MCRR 73: 85–105.

- Rosen, Amy K., Qi Chen, Michael Shwartz, Corey Pilver, Hillary J. Mull, Kamal F. M. Itani, and Ann Borzecki. 2016. Does Use of a Hospital-Wide Readmission Measure versus Condition-Specific Readmission Measures Make a Difference for Hospital Profiling and Payment Penalties? Medical Care 54: 155–61.

- Rosenthal, Gary E., and Mary V. Sarrazin. 2001. Impact of State Certificate of Need Programs on Outcomes of Care for Patients Undergoing Coronary Artery Bypass Surgery. Report to the Florida Hospital Association. Available online: https://www.vdh.virginia.gov/Administration/documents/COPN/Jamie%20Baskerville%20Martin/Rosenthal%20et%20al.pdf (accessed on 15 May 2021).

- Schultz, Olivia A., Lewis Shi, and Michael Lee. 2021. Assessing the Efficacy of Certificate of Need Laws Through Total Joint Arthroplasty. Journal for Healthcare Quality: Official Publication of the National Association for Healthcare Quality 43: e1–e7.

- South Carolina Health Planning Committee. 2015. South Carolina Health Plan; Columbia: South Carolina Department of Health and Environmental Control. Available online: http://www.scdhec.gov/Health/docs/FinalSHP.pdf (accessed on 15 May 2021).

- Steen, John. 2016. Regionalization for Quality: Certificate of Need and Licensure Standards. American Health Planning Association. Available online: http://www.ahpanet.org/files/Regionalization%20for%20Quality.pdf (accessed on 2 February 2016).

- Stratmann, Thomas, and David Wille. 2016. Certificate of Need Laws and Hospital Quality. Mercatus Working Paper. Arlington: Mercatus Center at George Mason University. Available online: https://www.mercatus.org/system/files/mercatus-stratmann-wille-con-hospital-quality-v1.pdf (accessed on 15 May 2021).

- Thomas, Richard K. 2015. AHPA Perspective: Certificate of Need Regulation. Falls Church: PowerPoint Presentation Prepared for Virginia Department of Health by the American Health Planning Association. Available online: http://slideplayer.com/slide/7468203/ (accessed on 15 May 2021).

- Vaughan-Sarrazin, Mary S., Edward L. Hannan, Carol J. Gormley, and Gary E. Rosenthal. 2002. Mortality in Medicare Beneficiaries following Coronary Artery Bypass Graft Surgery in States with and without Certificate of Need Regulation. JAMA 288: 1859–66.

- Virginia Department of Health. 2015. Certificate of Public Need Workgroup—Final Report. Report Document No. 467. Available online: https://www.vdh.virginia.gov/Administration/documents/COPN/Final%20Report.pdf (accessed on 15 May 2021).

- Werner, Rachel M., and Eric T. Bradlow. 2006. Relationship between Medicare’s Hospital Compare Performance Measures and Mortality Rates. JAMA 296: 2694–702.

- Wu, Bingxiao, Jeah Jung, Hyunjee Kim, and Daniel Polsky. 2019. Entry Regulation and the Effect of Public Reporting: Evidence from Home Health Compare. Health Economics 28: 492–516.

- Yuce, Tarik K., Jeanette W. Chung, Cynthia Barnard, and Karl Y. Bilimoria. 2020. Association of State Certificate of Need Regulation With Procedural Volume, Market Share, and Outcomes Among Medicare Beneficiaries. JAMA 324: 2058.

- Ziino, Chason, Abiram Bala, and Ivan Cheng. 2021. Utilization and Reimbursement Trends Based on Certificate of Need in Single-Level Cervical Discectomy. The Journal of the American Academy of Orthopaedic Surgeons 29: e518–e522.

- Zuckerman, Rachael. 2016. Reducing Avoidable Hospital Readmissions to Create a Better, Safer Health Care System; Washington: US Department of Health and Human Services. Available online: http://www.hhs.gov/blog/2016/02/24/reducing-avoidable-hospital-readmissions.html (accessed on 15 May 2021).

- Zuckerman, Rachael B., Steven H. Sheingold, E. John Orav, Joel Ruhter, and Arnold M. Epstein. 2016. Readmissions, Observation, and the Hospital Readmissions Reduction Program. New England Journal of Medicine 374: 1543–51.

| | Full Sample (n = 4538) | | | Restricted Sample (n = 921) | | |

|---|---|---|---|---|---|---|

| Measure Name (CMS Code) | Providers in CON States | Providers in Non-CON States | Overall Reporting Rate | Providers in CON States | Providers in Non-CON States | Overall Reporting Rate |

| Death among Surgical Inpatients with Serious Treatable Complications (PSI #4) | 1295 (44%) | 624 (39%) | 42% | 122 (30%) | 175 (34%) | 32% |

| Postoperative Pulmonary Embolism or Deep Vein Thrombosis (PSI #12) | 2013 (68%) | 1107 (70%) | 69% | 202 (50%) | 334 (64%) | 58% |

| Percentage of patients giving their hospital a 9 or 10 overall rating (HCAHPS) | 2498 (85%) | 1324 (83%) | 84% | 286 (71%) | 428 (83%) | 78% |

| Pneumonia Readmission Rate (READM-30-PN) | 2734 (93%) | 1348 (85%) | 90% | 364 (90%) | 425 (82%) | 86% |

| Pneumonia Mortality Rate (MORT-30-PN) | 2724 (92%) | 1339 (84%) | 90% | 361 (90%) | 423 (82%) | 85% |

| Heart Failure Readmission Rate (READM-30-HF) | 2637 (89%) | 1268 (80%) | 86% | 329 (82%) | 391 (75%) | 78% |

| Heart Failure Mortality Rate (MORT-30-HF) | 2602 (88%) | 1237 (78%) | 85% | 321 (80%) | 384 (74%) | 77% |

| Heart Attack Readmission Rate (READM-30-AMI) | 1602 (54%) | 725 (46%) | 51% | 145 (36%) | 216 (42%) | 39% |

| Heart Attack Mortality Rate (MORT-30-AMI) | 1866 (63%) | 838 (53%) | 60% | 172 (43%) | 253 (49%) | 46% |
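
The overall reporting rates in the table above appear to be pooled shares: the number of reporting providers in CON and non-CON states combined, divided by the sample size. The sketch below checks that reading against a few rows. The formula and the function name `overall_reporting_rate` are assumptions made for illustration; the table itself does not state how the column was computed.

```python
def overall_reporting_rate(con_count: int, non_con_count: int, sample_size: int) -> int:
    """Pooled reporting rate in whole percent.

    Assumed definition (not stated in the source table):
    (reporting providers in CON states + reporting providers in non-CON states)
    divided by the total number of providers in the sample.
    """
    return round(100 * (con_count + non_con_count) / sample_size)


# Counts copied from the table above: (CON count, non-CON count, sample size).
psi4_full = overall_reporting_rate(1295, 624, 4538)
psi4_restricted = overall_reporting_rate(122, 175, 921)
print(psi4_full, psi4_restricted)  # prints: 42 32
```

Under this assumption the computed values match the published 42% and 32% for PSI #4, which suggests the column is a simple pooled share rather than an average of the two group rates.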

| HRR Number | Non-CON States | CON States | HRR Number | Non-CON States | CON States |

|---|---|---|---|---|---|

| 22 | TX | AR, OK | 296 | PA | NY |

| 103 | CO, KS | NE | 324 | ND | MT |

| 104 | CO, WY | NE | 327 | IN | OH |

| 151 | ID | OR | 335 | PA | OH |

| 179 | IN | IL, KY | 340 | KS | OK |

| 180 | IN | OH | 343 | CA | OR |

| 196 | SD | IA, NE | 346 | PA | NJ |

| 205 | IN | KY | 351 | PA | NY, OH |

| 219 | TX | LA | 356 | PA | NJ |

| 250 | MN | MI, WI | 357 | PA | OH, WV |

| 251 | MN | WI | 359 | PA | NY |

| 253 | MN | IA | 370 | SD | NE |

| 256 | MN | WI | 371 | MN, SD | IA |

| 267 | KS | MO, OK | 383 | KS, NM, TX | OK |

| 268 | KS | MO | 391 | TX | OK |

| 274 | WY | MT | 423 | CO, ID, UT, WY | NV |

| 276 | ID | MT | 440 | ID | OR, WA |

| 277 | KS | NE | 445 | PA | MD, WV |

| 279 | AZ, CA | NV | 448 | MN | IA, WI |

| 280 | CA | NV | - | - | - |

| HRR Number | Providers in Non-CON States | Providers in CON States | HRR Number | Providers in Non-CON States | Providers in CON States |

|---|---|---|---|---|---|

| 22 | 3 | 5 | 296 | 1 | 5 |

| 103 | 29 | 10 | 324 | 6 | 1 |

| 104 | 4 | 1 | 327 | 2 | 21 |

| 151 | 7 | 2 | 335 | 2 | 7 |

| 179 | 10 | 11 | 340 | 2 | 38 |

| 180 | 17 | 5 | 343 | 2 | 7 |

| 196 | 1 | 13 | 346 | 15 | 1 |

| 205 | 9 | 21 | 351 | 14 | 3 |

| 219 | 2 | 17 | 356 | 38 | 6 |

| 250 | 11 | 4 | 357 | 34 | 10 |

| 251 | 61 | 11 | 359 | 5 | 1 |

| 253 | 10 | 2 | 370 | 11 | 2 |

| 256 | 7 | 7 | 371 | 46 | 7 |

| 267 | 3 | 8 | 383 | 17 | 2 |

| 268 | 17 | 30 | 391 | 68 | 2 |

| 274 | 7 | 26 | 423 | 34 | 1 |

| 276 | 1 | 14 | 440 | 9 | 23 |

| 277 | 1 | 32 | 445 | 1 | 9 |

| 279 | 4 | 17 | 448 | 1 | 10 |

| 280 | 6 | 11 | - | - | - |

| Panel A: All CON States versus All Non-CON States | Non-CON States | CON States | Difference | t Statistic | Observations |

|---|---|---|---|---|---|

| Percentage Over Age 65 | 15.6 | 15.8 | −0.3 | 0.42 | 51 |

| Percentage African American | 3.5 | 12.6 | −9.1 | 2.72 | 51 |

| Percentage Hispanic | 16.2 | 8 | 8.2 | −2.99 | 51 |

| Percentage Rural | 39.2 | 37.3 | 1.9 | −0.32 | 51 |

| Percentage Uninsured | 20.7 | 19.2 | 1.5 | −0.81 | 51 |

| Average Graduation Rate (HS) | 81.8 | 79.9 | 1.9 | −0.92 | 51 |

| Unemployment Rate | 6.2 | 7.4 | −1.2 | 2.36 | 51 |

| Household Income (USD) | 51,156 | 50,582 | 574 | −0.22 | 51 |

| Panel B: HRRs in Both CON and Non-CON States | HRR in Non-CON | HRR in CON | Difference | t Statistic | Observations |

| Percentage Over Age 65 | 16.7 | 17.2 | −0.5 | 0.78 | 78 |

| Percentage African American | 4.3 | 4.6 | −0.3 | 0.15 | 78 |

| Percentage Hispanic | 7.8 | 7.6 | 0.2 | −0.12 | 78 |

| Percentage Rural | 45.1 | 51.8 | −6.7 | 1.47 | 78 |

| Percentage Uninsured | 18.2 | 20.6 | −2.4 | 1.77 | 78 |

| Average Graduation Rate (HS) | 84.2 | 81 | 3.3 | −1.71 | 76 |

| Unemployment Rate | 6.5 | 6.8 | −0.4 | 0.83 | 78 |

| Household Income (USD) | 50,046 | 46,080 | 3965 | −2.12 | 78 |

| Measure Name (CMS Code) | Sample Mean | Non-CON States | CON States | Difference | Clustered t Statistic | Observations |

|---|---|---|---|---|---|---|

| Death among Surgical Inpatients with Serious Treatable Complications (deaths per 1000 surgical discharges with complications) (PSI #4) | 115.1 | 113.2 | 116.0 | −2.9 | 4.24 | 9537 |

| Postoperative Pulmonary Embolism or Deep Vein Thrombosis (per 1000 surgical discharges) (PSI #12) | 4.5 | 4.3 | 4.6 | −0.3 | 4.95 | 15,390 |

| Percentage of patients giving their hospital a 9 or 10 overall rating (percentage points) (HCAHPS) | 69.7 | 70.5 | 69.3 | 1.2 | −4.35 | 19,853 |

| Pneumonia Readmission Rate (percentage points) (READM-30-PN) | 17.8 | 17.5 | 17.9 | −0.5 | 13.17 | 20,645 |

| Pneumonia Mortality Rate (percentage points) (MORT-30-PN) | 11.9 | 11.8 | 12.0 | −0.3 | 5.22 | 20,559 |

| Heart Failure Readmission Rate (percentage points) (READM-30-HF) | 23.5 | 23.2 | 23.6 | −0.5 | 9.79 | 19,316 |

| Heart Failure Mortality Rate (percentage points) (MORT-30-HF) | 11.7 | 11.6 | 11.8 | −0.2 | 3.79 | 18,901 |

| Heart Attack Readmission Rate (percentage points) (READM-30-AMI) | 18.5 | 18.3 | 18.7 | −0.4 | 8.20 | 11,377 |

| Heart Attack Mortality Rate (percentage points) (MORT-30-AMI) | 15.1 | 15.0 | 15.1 | −0.1 | 1.44 | 12,792 |

| Measure Name (CMS Code) | Sample Mean | HRRs in Non-CON States | HRRs in CON States | Difference | Clustered t Statistic | Observations |

|---|---|---|---|---|---|---|

| Death among Surgical Inpatients with Serious Treatable Complications (deaths per 1000 surgical discharges with complications) (PSI #4) | 113.1 | 111.1 | 116.0 | −4.9 | 3.12 | 1539 |

| Postoperative Pulmonary Embolism or Deep Vein Thrombosis (per 1000 surgical discharges) (PSI #12) | 4.4 | 4.5 | 4.2 | 0.4 | −2.99 | 2779 |

| Percentage of patients giving their hospital a 9 or 10 overall rating (percentage points) (HCAHPS) | 71.3 | 71.9 | 70.5 | 1.4 | −2.37 | 4006 |

| Pneumonia Readmission Rate (percentage points) (READM-30-PN) | 17.6 | 17.5 | 17.7 | −0.2 | 2.83 | 4141 |

| Pneumonia Mortality Rate (percentage points) (MORT-30-PN) | 12.0 | 11.8 | 12.2 | −0.5 | 5.12 | 4112 |

| Heart Failure Readmission Rate (percentage points) (READM-30-HF) | 23.3 | 23.2 | 23.5 | −0.3 | 2.64 | 3659 |

| Heart Failure Mortality Rate (percentage points) (MORT-30-HF) | 11.8 | 11.6 | 12.1 | −0.4 | 4.87 | 3552 |

| Heart Attack Readmission Rate (percentage points) (READM-30-AMI) | 18.5 | 18.4 | 18.5 | −0.1 | 0.94 | 1806 |

| Heart Attack Mortality Rate (percentage points) (MORT-30-AMI) | 15.1 | 15.0 | 15.3 | −0.3 | 2.74 | 2033 |

| Measure Name (CMS Code) | (A) Full-Sample Bivariate Model | (B) Full-Sample Multivariate Model | (C) Restricted-Sample Bivariate Model | (D) Restricted-Sample Multivariate Model | (E) HRR Fixed-Effects Bivariate Model | (F) HRR Fixed-Effects Multivariate Model |

|---|---|---|---|---|---|---|

| Death among Surgical Inpatients with Serious Treatable Complications (deaths per 1000 surgical discharges with complications) (PSI #4) | 2.861 *** (1.093) 9537 | 1.919 * (1.138) 9375 | 4.896 * (2.735) 1504 | 3.312 (2.425) 1490 | 6.011 *** (1.701) 1504 | 6.161 *** (2.278) 1490 |

| Postoperative Pulmonary Embolism or Deep Vein Thrombosis (per 1000 surgical discharges) (PSI #12) | 0.283 *** (0.0993) 15,390 | 0.248 ** (0.124) 14,771 | −0.434 ** (0.178) 2673 | −0.245 * (0.146) 2575 | −0.0643 (0.110) 2673 | 0.117 (0.150) 2575 |

| Percentage of patients giving their hospital a 9 or 10 overall rating (percentage points) (HCAHPS) | −1.163 * (0.616) 19,853 | −1.546 *** (0.508) 18,779 | −1.416 * (0.741) 3878 | −0.387 (0.682) 3633 | −1.334 (0.996) 3878 | −0.964 (0.957) 3633 |

| Pneumonia Readmission Rate (percentage points) (READM-30-PN) | 0.451 *** (0.0695) 20,645 | 0.369 *** (0.0696) 19,431 | 0.185 (0.126) 4082 | 0.146 * (0.0838) 3823 | 0.135 (0.0904) 4082 | 0.0854 (0.0958) 3823 |

| Pneumonia Mortality Rate (percentage points) (MORT-30-PN) | 0.258 ** (0.107) 20,559 | 0.0947 (0.0785) 19,362 | 0.486 *** (0.116) 4053 | 0.414 *** (0.0890) 3799 | 0.423 *** (0.135) 4053 | 0.379 *** (0.122) 3799 |

| Heart Failure Readmission Rate (percentage points) (READM-30-HF) | 0.481 *** (0.104) 19,316 | 0.464 *** (0.119) 18,344 | 0.268 (0.166) 3588 | 0.231 (0.147) 3427 | 0.291 * (0.163) 3588 | 0.248 (0.184) 3427 |

| Heart Failure Mortality Rate (percentage points) (MORT-30-HF) | 0.166 (0.129) 18,901 | 0.0608 (0.0883) 17,971 | 0.434 *** (0.146) 3486 | 0.344 *** (0.0923) 3338 | 0.248 ** (0.100) 3486 | 0.198 ** (0.0822) 3338 |

| Heart Attack Readmission Rate (percentage points) (READM-30-AMI) | 0.351 *** (0.0817) 11,377 | 0.351 *** (0.0819) 11,048 | 0.0984 (0.155) 1784 | 0.183 (0.121) 1747 | 0.139 (0.106) 1784 | 0.179 (0.124) 1747 |

| Heart Attack Mortality Rate (percentage points) (MORT-30-AMI) | 0.0706 (0.0939) 12,792 | −0.0245 (0.0722) 12,358 | 0.328 * (0.173) 2006 | 0.216 (0.137) 1956 | 0.321 * (0.170) 2006 | 0.263 (0.173) 1956 |

| Controls | No | Yes | No | Yes | No | Yes |

| Year fixed effects | Yes | Yes | Yes | Yes | Yes | Yes |

| HRR fixed effects | No | No | No | No | Yes | Yes |
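
Each cell in the table above reports a coefficient, a standard error in parentheses, and the number of observations, with asterisks marking statistical significance. The sketch below shows one way to read a cell: divide the coefficient by its standard error and compare the absolute ratio with critical values. The thresholds used here (1.645, 1.960, 2.576, the two-sided large-sample normal values for the 10%, 5%, and 1% levels) are an assumption, since the table notes defining the asterisks are not part of this section, and the authors' clustered inference may rely on a different reference distribution. The function names are hypothetical.

```python
def t_ratio(coefficient: float, standard_error: float) -> float:
    """Ratio of an estimated coefficient to its standard error."""
    return coefficient / standard_error


def significance_stars(coefficient: float, standard_error: float) -> str:
    """Map a coefficient and standard error to asterisks.

    Assumption: two-sided normal critical values for the 10%, 5%, and 1%
    levels. The source table does not state its convention here.
    """
    ratio = abs(t_ratio(coefficient, standard_error))
    if ratio >= 2.576:
        return "***"
    if ratio >= 1.960:
        return "**"
    if ratio >= 1.645:
        return "*"
    return ""


# PSI #4 row, values copied from the table: columns (F), (B), and (D).
for coef, se in [(6.161, 2.278), (1.919, 1.138), (3.312, 2.425)]:
    print(f"{coef} / {se} = {t_ratio(coef, se):.2f} {significance_stars(coef, se)}")
```

For these three cells the assumed thresholds reproduce the asterisks printed in the table. That agreement is not guaranteed elsewhere: with few clusters, a t reference distribution gives larger critical values than the normal approximation.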

| Measure Name (CMS Code) | (A) HRR Fixed-Effects Model with Health Controls | (B) HRR Fixed-Effects Model Bottom Fifty Percent Obesity | (C) HRR Fixed-Effects Model Top Fifty Percent Obesity | (D) HRR Fixed-Effects Model Bottom Fifty Percent Smoking | (E) HRR Fixed-Effects Model Top Fifty Percent Smoking | (F) HRR Fixed-Effects Model Bottom Fifty Percent Fair or Poor Health | (G) HRR Fixed-Effects Model Top Fifty Percent Fair or Poor Health |

|---|---|---|---|---|---|---|---|

| Death among Surgical Inpatients with Serious Treatable Complications (deaths per 1000 surgical discharges with complications) (PSI #4) | 5.940 *** (2.128) 1481 | 5.975 * (3.265) 698 | 5.551 * (3.131) 792 | 5.975 * (3.195) 790 | 7.651 *** (2.794) 700 | 7.744 ** (3.221) 598 | 5.149 * (2.762) 892 |

| Postoperative Pulmonary Embolism or Deep Vein Thrombosis (per 1000 surgical discharges) (PSI #12) | 0.0884 (0.155) 2513 | 0.267 (0.193) 1369 | 0.199 (0.139) 1206 | 0.0390 (0.212) 1429 | 0.570 * (0.291) 1146 | 0.0187 (0.191) 1095 | 0.119 (0.253) 1480 |

| Percentage of patients giving their hospital a 9 or 10 overall rating (percentage points) (HCAHPS) | −0.603 (0.964) 3417 | −1.151 (1.139) 1985 | −0.358 (1.111) 1648 | −0.938 (0.997) 1992 | −0.444 (1.599) 1641 | −2.015 * (1.169) 1543 | −0.376 (1.151) 2090 |

| Pneumonia Readmission Rate (percentage points) (READM-30-PN) | 0.0561 (0.106) 3553 | 0.180 (0.133) 2196 | −0.0129 (0.123) 1627 | 0.100 (0.122) 2190 | −0.0673 (0.134) 1633 | 0.00811 (0.172) 1689 | 0.157 (0.0968) 2134 |

| Pneumonia Mortality Rate (percentage points) (MORT-30-PN) | 0.354 *** (0.131) 3526 | 0.319 ** (0.148) 2182 | 0.340 * (0.186) 1617 | 0.236 (0.157) 2183 | 0.595 *** (0.189) 1616 | 0.131 (0.170) 1683 | 0.642 *** (0.162) 2116 |

| Heart Failure Readmission Rate (percentage points) (READM-30-HF) | 0.231 (0.182) 3201 | 0.416 * (0.243) 1959 | 0.195 (0.202) 1468 | 0.386 (0.234) 1990 | −0.0602 (0.216) 1437 | 0.555 * (0.298) 1517 | 0.0936 (0.173) 1910 |

| Heart Failure Mortality Rate (percentage points) (MORT-30-HF) | 0.216 *** (0.0819) 3124 | 0.259 ** (0.130) 1909 | 0.00910 (0.176) 1429 | 0.113 (0.123) 1944 | 0.390 *** (0.130) 1394 | 0.0594 (0.132) 1475 | 0.352 *** (0.104) 1863 |

| Heart Attack Readmission Rate (percentage points) (READM-30-AMI) | 0.144 (0.117) 1714 | −0.0493 (0.218) 885 | 0.360 * (0.204) 862 | 0.173 (0.177) 986 | 0.0557 (0.257) 761 | −0.0156 (0.213) 742 | 0.196 (0.210) 1005 |

| Heart Attack Mortality Rate (percentage points) (MORT-30-AMI) | 0.248 (0.181) 1907 | 0.495 ** (0.219) 1038 | −0.171 (0.238) 918 | 0.385 * (0.199) 1119 | 0.0951 (0.307) 837 | 0.690 *** (0.204) 837 | −0.0412 (0.241) 1119 |

| Controls | Yes | Yes | Yes | Yes | Yes | Yes | Yes |

| Year fixed effects | Yes | Yes | Yes | Yes | Yes | Yes | Yes |

| HRR fixed effects | Yes | Yes | Yes | Yes | Yes | Yes | Yes |

| Measure Name (CMS Code) | 2011 | 2012 | 2013 | 2014 | 2015 |

|---|---|---|---|---|---|

| Death among Surgical Inpatients with Serious Treatable Complications (deaths per 1000 surgical discharges with complications) (PSI #4) | 10.50 *** (1.441) 292 | 8.152 *** (2.729) 313 | 6.282 (3.954) 305 | 5.235 * (2.589) 290 | 4.168 ** (1.871) 290 |

| Postoperative Pulmonary Embolism or Deep Vein Thrombosis (per 1000 surgical discharges) (PSI #12) | −0.132 (0.260) 503 | 0.0622 (0.154) 516 | 0.134 (0.132) 519 | 0.236 (0.153) 516 | 0.263 (0.190) 521 |

| Percentage of patients giving their hospital a 9 or 10 overall rating (percentage points) (HCAHPS) | −1.289 (1.485) 667 | −1.397 (0.979) 710 | −1.422 (1.042) 730 | −0.624 (0.968) 746 | −0.620 (1.128) 780 |

| Pneumonia Readmission Rate (percentage points) (READM-30-PN) | 0.181 (0.149) 745 | 0.107 (0.119) 800 | 0.0641 (0.0813) 807 | 0.0280 (0.103) 737 | 0.0526 (0.0787) 734 |

| Pneumonia Mortality Rate (percentage points) (MORT-30-PN) | 0.527 *** (0.151) 741 | 0.364 ** (0.137) 795 | 0.319 ** (0.156) 801 | 0.329 *** (0.108) 732 | 0.355 *** (0.125) 730 |

| Heart Failure Readmission Rate (percentage points) (READM-30-HF) | 0.293 (0.240) 687 | 0.214 (0.198) 709 | 0.277 (0.167) 705 | 0.212 * (0.124) 665 | 0.258 * (0.129) 661 |

| Heart Failure Mortality Rate (percentage points) (MORT-30-HF) | 0.319 ** (0.121) 674 | 0.275 *** (0.0822) 690 | 0.128 (0.124) 685 | 0.141 * (0.0792) 647 | 0.213 * (0.124) 642 |

| Heart Attack Readmission Rate (percentage points) (READM-30-AMI) | 0.491 ** (0.194) 352 | 0.484 ** (0.186) 350 | 0.202 (0.134) 351 | −0.0754 (0.0920) 345 | −0.179 (0.149) 349 |

| Heart Attack Mortality Rate (percentage points) (MORT-30-AMI) | 0.338 (0.217) 411 | 0.363 ** (0.140) 403 | 0.173 (0.173) 389 | 0.170 (0.147) 381 | 0.249 (0.188) 372 |

| Number of providers | 921 | 1060 | 1076 | 957 | 999 |

| Measure Name (CMS Code) | (A) Original Fixed-Effects Model | (B) Omitting Low-Provider HRRs | (C) Omitting Unbalanced HRRs | (D) Omitting Low-CON States |

|---|---|---|---|---|

| Death among Surgical Inpatients with Serious Treatable Complications (deaths per 1000 surgical discharges with complications) (PSI #4) | 6.161 *** (2.278) 1490 | 7.38 *** (1.69) 839 | 6.36 *** (2.35) 574 | 6.82 *** (1.87) 1124 |

| Postoperative Pulmonary Embolism or Deep Vein Thrombosis (per 1000 surgical discharges) (PSI #12) | 0.117 (0.150) 2575 | 0.205 (0.221) 1410 | 0.309 (0.278) 1020 | 0.217 (0.140) 1950 |

| Percentage of patients giving their hospital a 9 or 10 overall rating (percentage points) (HCAHPS) | −0.964 (0.957) 3633 | −1.51 (1.051) 2083 | −1.88 ** (0.917) 1411 | −2.55 *** (0.918) 2586 |

| Pneumonia Readmission Rate (percentage points) (READM-30-PN) | 0.0854 (0.0958) 3823 | 0.059 (0.087) 2229 | 0.063 (0.113) 1578 | 0.167 (0.148) 2726 |

| Pneumonia Mortality Rate (percentage points) (MORT-30-PN) | 0.379 *** (0.122) 3799 | 0.434 *** (0.121) 2216 | 0.410 *** (0.145) 1565 | 0.303 ** (0.149) 2715 |

| Heart Failure Readmission Rate (percentage points) (READM-30-HF) | 0.248 (0.184) 3427 | 0.0457 (0.171) 2021 | 0.0785 (0.193) 1455 | 0.501 ** (0.244) 2450 |

| Heart Failure Mortality Rate (percentage points) (MORT-30-HF) | 0.198 ** (0.082) 3338 | 0.271 *** (0.083) 1955 | 0.210 ** (0.087) 1411 | 0.228 ** (0.101) 2384 |

| Heart Attack Readmission Rate (percentage points) (READM-30-AMI) | 0.179 (0.124) 1747 | 0.132 (0.110) 996 | −0.0447 (0.141) 713 | 0.381 *** (0.138) 1331 |

| Heart Attack Mortality Rate (percentage points) (MORT-30-AMI) | 0.263 (0.173) 1956 | 0.139 (0.215) 1128 | 0.308 (0.239) 808 | 0.212 (0.175) 1476 |

| Panel A: All CON States versus All Non-CON States | Non-CON States | CON States | Difference | Clustered t Statistic | Observations |

|---|---|---|---|---|---|

| 30-Day Readmission Rate after Medical Discharge (percentage points) | 15.0 | 15.5 | −0.5 | 7.05 | 9341 |

| 14-Day Ambulatory Visit Rate after Medical Discharge (percentage points) | 63.8 | 64.2 | −0.4 | 1.32 | 11,811 |

| 30-Day Emergency Room Visit Rate after Medical Discharge (percentage points) | 19.3 | 20.1 | −0.9 | 9.26 | 10,163 |

| 30-Day Readmission Rate after Surgical Discharge (percentage points) | 11.2 | 12.0 | −0.8 | 6.41 | 5387 |

| 30-Day Emergency Room Visit Rate after Surgical Discharge (percentage points) | 15.0 | 15.8 | −0.8 | 6.69 | 6150 |

| Hospital-wide 30-Day Readmission Rate (percentage points) | 15.4 | 15.7 | −0.3 | 13.42 | 13,235 |

| Aggregate Patient Safety Indicator (ratio) | 0.75 | 0.75 | 0.0 | −0.51 | 9815 |

| Panel B: HRRs in Both CON and Non-CON States | HRRs in Non-CON States | HRRs in CON States | Difference | Clustered t Statistic | Observations |

|---|---|---|---|---|---|

| 30-Day Readmission Rate after Medical Discharge (percentage points) | 15.1 | 15.4 | −0.3 | 1.67 | 1600 |

| 14-Day Ambulatory Visit Rate after Medical Discharge (percentage points) | 62.2 | 63.8 | −1.7 | 2.02 | 2215 |

| 30-Day Emergency Room Visit Rate after Medical Discharge (percentage points) | 19.3 | 19.9 | −0.6 | 2.78 | 1774 |

| 30-Day Readmission Rate after Surgical Discharge (percentage points) | 11.2 | 11.5 | −0.2 | 0.74 | 877 |

| 30-Day Emergency Room Visit Rate after Surgical Discharge (percentage points) | 14.9 | 15.4 | −0.5 | 1.62 | 988 |

| Hospital-wide 30-Day Readmission Rate (percentage points) | 15.4 | 15.6 | −0.1 | 3.01 | 2735 |

| Aggregate Patient Safety Indicator (ratio) | 0.77 | 0.77 | 0.0 | −1.53 | 1690 |

| Measure Name | Coefficient on CON |

|---|---|

| 30-Day Readmission Rate after Medical Discharge, 2011–2013 (percentage points) | 0.180 (0.174) 1553 |

| 14-Day Ambulatory Visit Rate after Medical Discharge, 2011–2013 (percentage points) | 0.070 (1.39) 2143 |

| 30-Day Emergency Room Visit Rate after Medical Discharge, 2011–2013 (percentage points) | 0.402 (0.316) 1721 |

| 30-Day Readmission Rate after Surgical Discharge, 2011–2013 (percentage points) | 1.02 *** (0.305) 860 |

| 30-Day Emergency Room Visit Rate after Surgical Discharge, 2011–2013 (percentage points) | 1.06 *** (0.464) 967 |

| Hospital-wide 30-Day Readmission Rate, 2013–2015 (percentage points) | 0.071 (0.056) 2522 |

| Aggregate Patient Safety Indicator, 2013–2015 (ratio) | 0.009 (0.021) 1632 |
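Each regression cell in the tables above packs three numbers into one string, for example `1.02 *** (0.305) 860`. The following is a minimal sketch of how such a cell could be split into its parts and how the ratio of the estimate to the parenthesized value could be computed. It assumes that the value in parentheses is a standard error and that the trailing integer is the number of observations; the table notes that would confirm this are not reproduced here. The names `Cell`, `parse_cell`, and `t_ratio` are hypothetical helpers introduced only for illustration.

```python
import re
from typing import NamedTuple


class Cell(NamedTuple):
    coef: float   # point estimate (coefficient on CON)
    stars: str    # significance markers as printed: "", "*", "**", or "***"
    se: float     # value in parentheses (assumed to be a standard error)
    n: int        # trailing integer (assumed to be the number of observations)


# Accepts both the ASCII hyphen and the Unicode minus sign used in the tables.
CELL_RE = re.compile(
    r"^\s*(?P<coef>[−-]?\d*\.?\d+)\s*(?P<stars>\*{0,3})\s*"
    r"\((?P<se>\d*\.?\d+)\)\s*(?P<n>[\d,]+)\s*$"
)


def parse_cell(text: str) -> Cell:
    """Split a cell such as '0.216 *** (0.0819) 3124' into its components."""
    m = CELL_RE.match(text)
    if m is None:
        raise ValueError(f"unrecognized cell format: {text!r}")
    coef = float(m["coef"].replace("−", "-"))
    n = int(m["n"].replace(",", ""))
    return Cell(coef, m["stars"], float(m["se"]), n)


def t_ratio(cell: Cell) -> float:
    """Ratio of the estimate to the parenthesized value."""
    return cell.coef / cell.se


if __name__ == "__main__":
    cell = parse_cell("1.02 *** (0.305) 860")
    print(cell, round(t_ratio(cell), 2))
```

Parsing the cells this way makes it easier to compare estimates across specifications, for instance by sorting measures on the computed ratio rather than reading the star markers alone.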

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Stratmann, T. The Effects of Certificate-of-Need Laws on the Quality of Hospital Medical Services. J. Risk Financial Manag. 2022, 15, 272. https://doi.org/10.3390/jrfm15060272
