1. Introduction

The placement of dental implants to restore missing teeth is now recognized as a standard dental procedure [

1]. A priori dental implant planning is required to achieve good treatment results because it helps to find the correct position of the implant and eliminates surgical risks [

2,

3,

4]. Generally, an implant placement plan is created using a patient’s cone beam computed tomography (CBCT) image, which offers three-dimensional (3D) information to surgeons instead of traditional methods [

5]. Finding missing teeth regions missing tooth areas is a critical step that the oral implantologist should determine during implant placement planning [

6,

7].

With the widespread use of dental implants nowadays, automatic detection of missing tooth areas is necessary to develop an automatic implant plan. Unfortunately, due to the lack of automated technologies, clinicians had to plan to place dental implants manually, which is not efficient as it requires more time and effort [

6,

7]. Therefore, several studies utilized the power of A.I. to improve the effectiveness and efficiency of clinical treatment methods. In contrast to human intelligence, A.I. is known as machine intelligence [

8], where the machines can simulate human minds in the ability to learn and analyze and then solve problems [

9,

10]. As A.I. technologies have rapidly developed, they has been widely used in several fields, including healthcare. These technologies include the Internet of Things (IoT), machine learning, deep learning, natural language processing, and robotics. The A.I. technologies are successfully applied in the healthcare industry to improve clinical treatment outcomes, enhance the performance of the decision-making process, and enhance clinical diagnosis in various medical task domains [

10,

11].

One of the main types of machine learning based on artificial neural networks is deep learning (DL), which uses multiple layers of processing to extract knowledge and insight from the data. DL has been applied for several medical applications, such as computer-aided diagnosis and surgical planning, disease classification, tumor detection, and anatomic modeling [

12]. In dentistry, it has been applied for tasks such as third-molar extraction, caries detection, prediction of extraction difficulty for mandibular third molars, mandibular canal detection, and tooth numbering [

13,

14,

15,

16].

This paper further extends the research of applying artificial intelligence and deep learning in dentistry. It aims to enhance the 3D missing tooth area planning using pretrained deep learning models by automatically detecting and classifying the CBCT images and passing only those which need further planning.

2. Related Works

Nowadays, healthcare is one of the basic needs of all people, and smart healthcare has become essential to provide efficient services for several healthcare sectors that improve the quality of people’s lives. Different advanced technologies have been recently used in smart healthcare, which includes artificial intelligence (A.I.), machine learning (ML), deep learning (DL), Internet of Things (IoT), big data analytics, medical sensors, and others [

17]. For instance, the traditional diagnosis methods for conditions such as brain, heart, kidney, and liver diseases were manual and error-prone. Unlike exclusive human expertise, applying artificial intelligence methods provides auto diagnosis and reduces detection errors [

18]. In dentistry, artificial intelligence has been recently applied to provide clinicians with high-quality patient care and prediction tools that simplify complicated diagnosis protocols. For instance, deep learning methods can effectively detect caries using radiographs as the input layer for these DL methods. Based on A.I. training, multiple hidden layers of DL methods can be used to classify the radiographs into “caries” and “no-caries” [

19].

Another important application of A.I. in dentistry is the automated detection of teeth and small edentulous regions. In these applications, cone beam computed tomography (CBCT) images of different patients (such as fully and partially dentate patients) were used for this purpose. Gerhardt et al. [

20] used the A.I. tool (Virtual Patient Creator, Relu BV, Leuven, Belgium) to train and test the model with 175 CBCT images. This A.I. tool was used to label, segment, and detect the missing teeth. Based on the 3D U-Net architecture, a deep learning method was used in this tool for detecting and segmenting the teeth in two subsequent networks. The teeth were detected in the down-sampled image, and rough segmentation was provided for the first network. The regions of interest (R.O.I.) can be proposed using the rough segmentation that enabled the use of deep learning for multi-class classification after cropping and down-sampling the images to a fixed resolution. The automated tooth segmentations for all CBCT scans provided by this tool in the form of individual 3D models were judged by an expert. If adjustments were needed, the tooth segmentations were manually refined using this tool.

Similarly, Zhang et al. [

21] and Chen et al. [

22] conducted studies on automated detection/labeling of 2D teeth, where CNN was applied to detect teeth in periapical radiographs. The experimental results of these two studies showed that the obtained precision rates were 95% and 90%, respectively. These findings ensured the importance of A.I. in providing accurate and efficient detection for proper surgical and treatment planning and automated dental charting.

In [

23], Liu et al. used an A.I.-based system to design the implant plan automatically. They applied transfer learning using A.I. to guide implant placement in the posterior mandible. These systems are known as single-shot multibox detectors (S.S.D.) and voxel-to-voxel prediction network for pose estimation (V2V-PoseNet). The characteristic annotators were detected, and the implant position was determined using this A.I. system. In this study, the CNN model was trained using a dataset of 2500 different edentulous sites for CBCT images. The experimental results showed that the A.I. system could use deep learning to effectively detect the anatomy of the object region and generate the ideal implant plan.

Similarly, in [

2], Kurt Bayrakdar et al. used an AI-based system for implant planning. A dataset of 75 CBCT images was used in this study to train the deep convolutional neural network for providing an automatic detection of canals/sinuses/fossae linked with alveolar bones and missing tooth regions. The voxel-perfect segmentations were required for predictions of crucial implant planning. The segmentation of missing teeth depends on the segmentations of present teeth and jaws. Using the neighboring teeth location, tilt, and placement, the missing tooth mask was extracted that can be used as a guide to a slicing algorithm and helps predict a mesiodistal angle of implant placement. The outcomes of A.I. methods were compared with the manual assessment using the Wilcoxon signed-rank test and Bland–Altman analysis. The experimental results showed no significant difference between these two methods for the bone height measurements obtained. However, it was significant for bone thickness measurements in all regions of maxilla and mandible. The detection accuracy obtained in this study was 95.3% for missing tooth regions, 72.2% for canals, and 66.4% for sinuses/fossae.

Deep learning methods have recently been used for dental implant planning to detect missing tooth regions. A dataset of 455 panoramic radiographic images was used in [

6] to annotate, segment, and detect the missing tooth regions. The tooth masks were generated first during the image segmentation process based on the instance-by-instance method. Mask R-CNN and ResNet-101 were used for the segmentation model. For the detection of missing tooth region, the Faster R-CNN model was used. The backbone of this model was ResNet-101. The experimental results showed that the detection model obtained an average of 92.14% for tooth instance segmentation and 59.09% for missing tooth region detection. In addition, deep learning has also been applied to perform different tasks in dentistry, which include dental caries detection [

24] and prediction of extraction difficulty for mandibular third molars [

25].

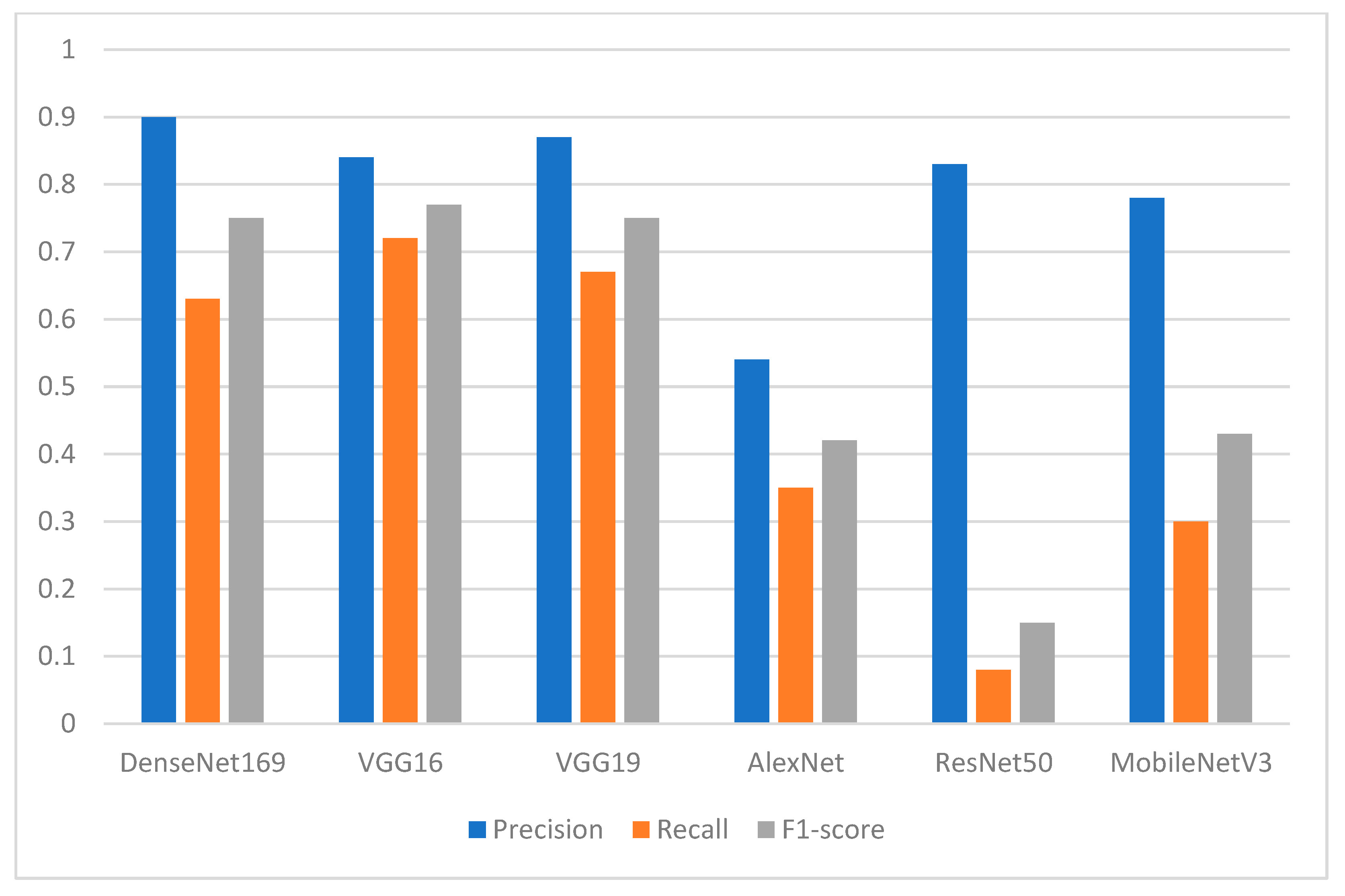

In this paper, several pretrained deep learning models were applied for enhancing 3D missing tooth area planning, including the AlexNet, VGG16, VGG19, ResNet50, DenseNet169, and Mo-bileNetV3 models.

3. Materials and Methods

3.1. Study Design

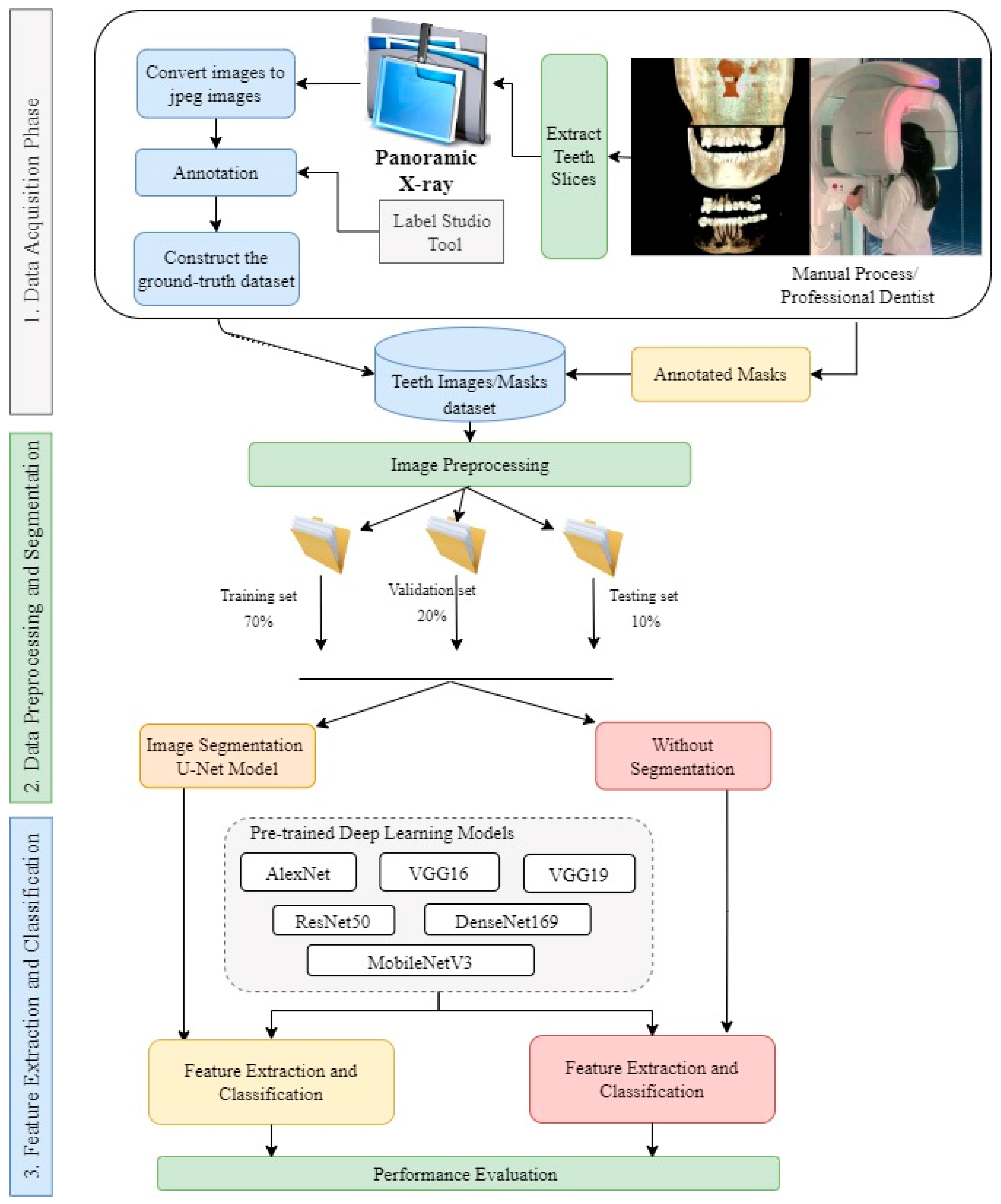

In this study, deep learning models were trained to detect and classify the missing teeth region from a dataset segmented from CBCT images of confidential patients who regularly visited the Taibah University dental hospital. The experimental part of this study was implemented jointly at the Faculty of Dentistry and the College of Computer Science and Engineering at Taibah University, K.S.A. Generally, the proposed approach is divided into three main stages: the data acquisition stage, the data preprocessing and segmentation stage, and the feature extraction and classification stage. To ensure that the proposed model was able to classify the images correctly, the collected images were of different patients of different ages. As a result, 500 CBCTimages were collected. Since the images were in 3D dimension, an expert with more than 10 years of professional experience in dental implant planning was to be responsible for slicing and extracting 2D X-ray images from the original CBCT images. The professional dentist was also asked to manually annotate the sliced/extracted images as well as manually segment the missing tooth regions and positions. As a result, the obtained images were used as ground-truth masks for training the proposed deep learning models. In the second stage, the dataset along with the masks, were fed to the segmentation stage, in which augmentation was also performed. In this study, we designed two experiment types based on the presented segmentation techniques. For this purpose, the U-Net model was used in the segmentation stage. In terms of classification models, several deep learning architectures were implemented and tested, including the AlexNet, VGG16, VGG19, ResNet50, DenseNet169, and MobileNetV3 models. These models were used to classify the resulting binary masks from the segmentation stage well as to classify the original images without segmentation. As the pretrained DL models are originally capable of classifying up to 1000 different classes, the last fully connected layer of these models was replaced so that it can be capable of classifying the binary classes of the used dataset. We also compared the output of the proposed models to the ground-truth dataset that was annotated by the domain expert.

Figure 1 shows the whole process of the followed methodology.

3.2. Ethics Statement

Generating a real dataset of images, which are more than confidential patients’ CBCT images representing different missing tooth positions of patients with different age ranges, the CBCT images were officially obtained from patients between 16 and 72 years of age. The research ethics committee, College of Dentistry, Taibah University, Madinah, Saudi Arabia, approved this study protocol (approval # 14032021, approved on 21 March 2021). Due to the non-interventional retrospective design and anonymous analyzed data, the institutional review committee waived the requirement for individual informed consent.

3.3. Data Acquisition

This study screened scans from patients who had visited Taibah University Dental Hospital (TUDH) and had undergone CBCT imaging between 2018 and 2022. CBCT images of patients between 16 and 72 years of age were included. Because image quality is an important factor affecting the performance of deep neural networks in computer vision tasks, poor-quality images were excluded from the collected dataset to avoid impacting the effectiveness of the applied deep learning models and their results. Therefore, images belonging to patients with a history of trauma, pathology, surgical intervention congenital syndrome, fracture, or any other foreign body (which produced artifacts in the image) were excluded from the study. Moreover, blurred images, such as those containing an ill-defined and unclear definition of bony borders, were excluded from the study. Of 890 screened scans, 500 images fulfilled the eligibility criteria. Anonymous code was given to each subject, all the data was saved in an excel sheet with a security password, and the computer was secured with a password. Notably, visualizing anatomical structures depends on technological parameters such as image resolution and CBCT reconstruction time. In this study, all CBCT scans performed in the TUDH adhered to a standardized scanning protocol with the same machine (KaVo 3D eXam; KaVo) at the same specified device settings (i.e.,120 kVp and 5 mA using a field of view of 16×13 cm, voxel size of 0.25 mm, and 26.9 s of acquisition time) as a change in any of these factors may affect the visualization of anatomical structures.

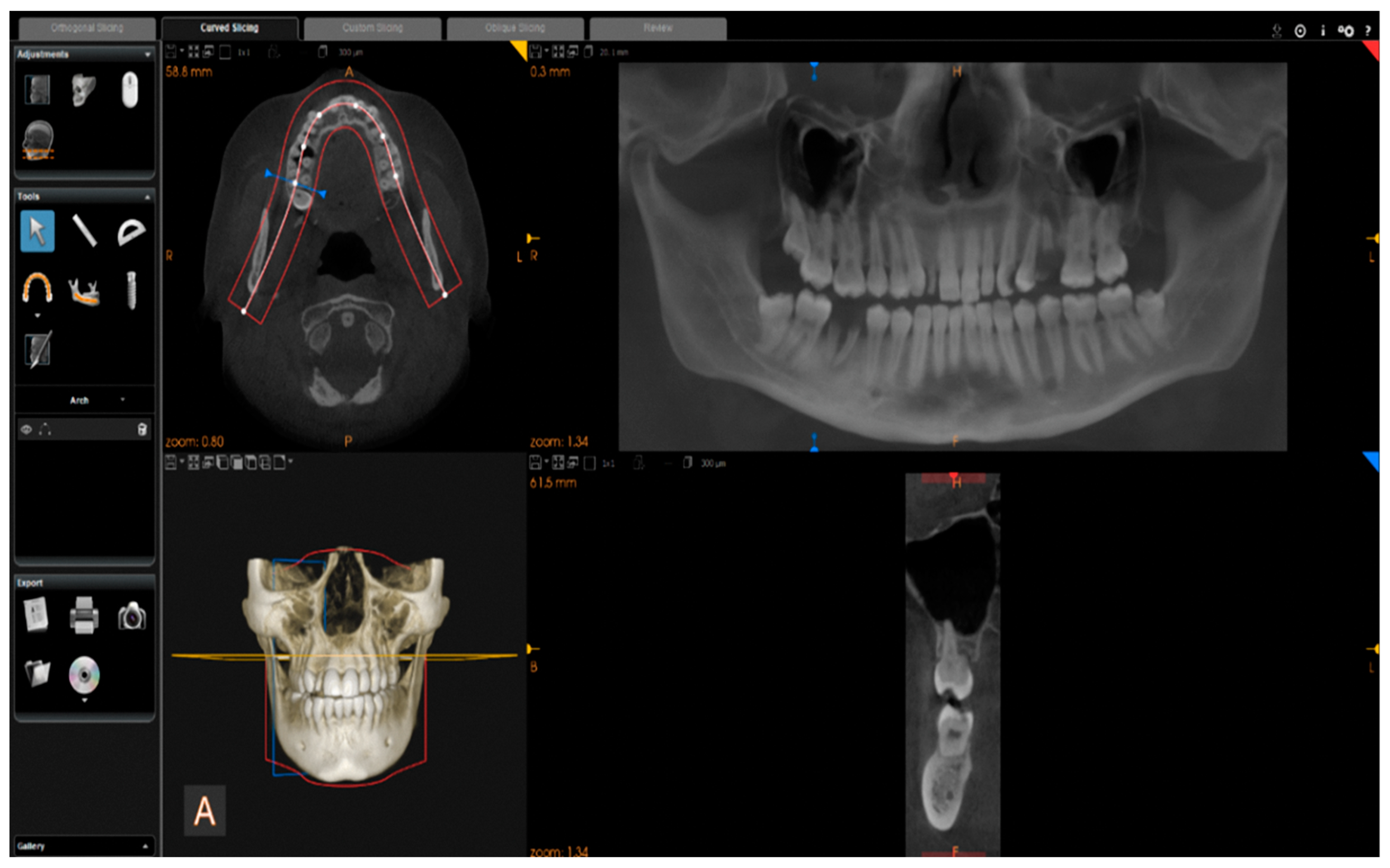

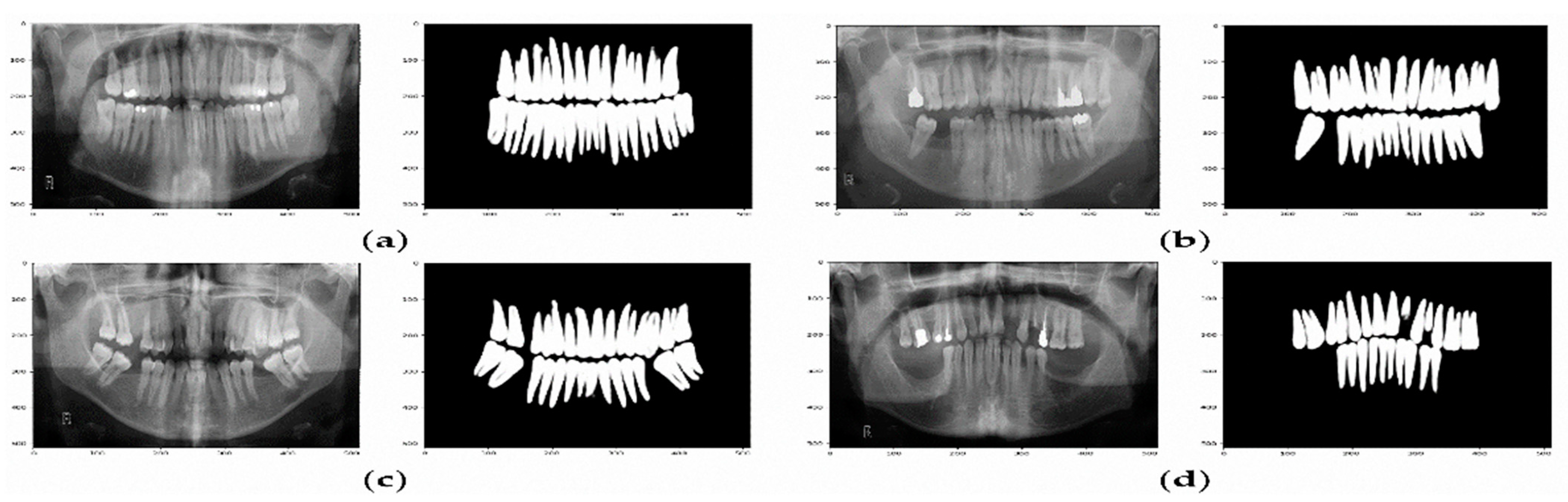

Images were reconstructed in C.S. 3D Imaging Software (Carestream Dent L.L.C., Atlanta, GA, USA). The head positions were standardized anteroposteriorly and sagittally according to a standard method. From CBCT volumes, the panoramic images were reconstructed by selecting a custom focal trough that passed through the lingual cusps of the maxillary teeth and extended posteriorly to the condyles. (

Figure 2).

This conversion process is summarized in Algorithm 1.

| Algorithm 1 Conversion of CBCTimages to X-ray Images |

| 1. Input: 3D cone beam CBCTdataset |

| 2. Output: X-ray images |

| 3. Begin |

| 4. = [ ] // empty dataset |

| 5. for all in dataset do:

|

| 6. if image_quality is poor Then: |

| 7. = |

| 8. else: |

| 9.

// save images as DICOM |

| 10. // reconstruct images using C.S. 3D Imaging Software |

| 11. // slice images |

| 12.) // Save images as PNG format |

| 13. end for |

| Return X-ray images |

3.4. Data Preprocessing and Segmentation

The X-ray images dataset obtained by applying algorithm 1 was passed to the next stage. In the data preprocessing phase, the images were standardized, and all panoramic dental X-ray images were resized to 512 × 512 pixels and normalized in the range of 0 to 1.

After preprocessing, the datasets were randomized and divided into 70% training, 20% validation, and 10% test data, as shown in

Figure 1. Since there are two separate experiments, the training set was also used to provide experience for segmentation models regarding teeth segments. For this purpose, only 120 images were used for segmentation, while the remaining data were used to evaluate the segmentation models.

3.5. Detection and Classification Using the Pretrained DL Models

A total of six pretrained CNN models were used in this study, which include AlexNet, VGG16, VGG19, ResNet50, DenseNet169, and MobileNetV3. Each of these models is described in detail in the following subsections.

3.5.1. AlexNet

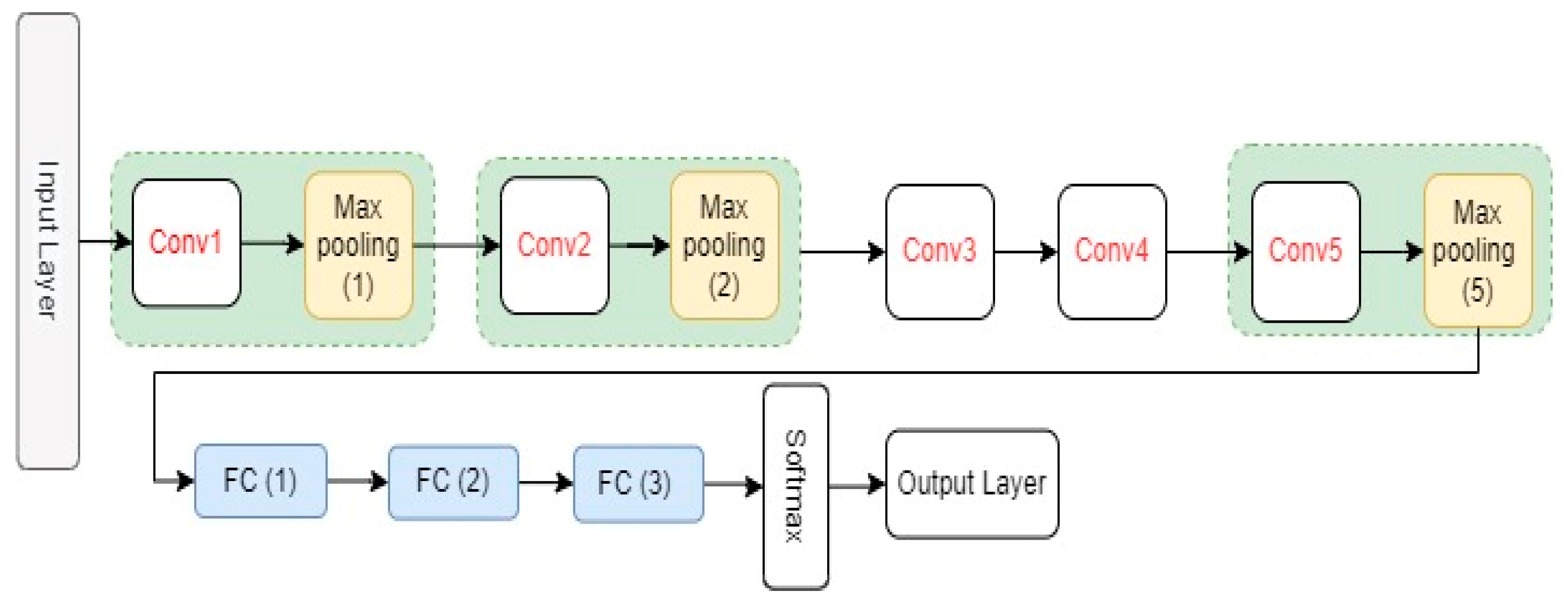

AlexNet is a CNN model proposed by Krizhevsky et al. [

26]. It has eight layers with learnable parameters, including five convolutional layers, three fully connected layers, and three max-pooling layers that are connected after the first, second, and fifth convolutional layers, respectively. As an activation function, AlexNet model uses the Relu activation function in all its layers except the output layer. The original AlexNet architecture and its configuration settings are shown in

Figure 3 and

Table 1, respectively.

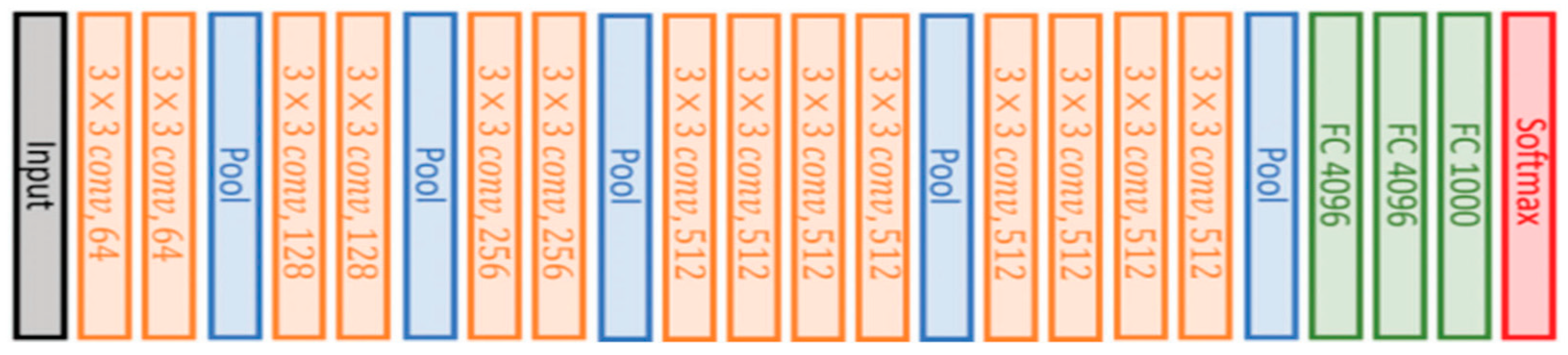

3.5.2. VGG16 and VGG19

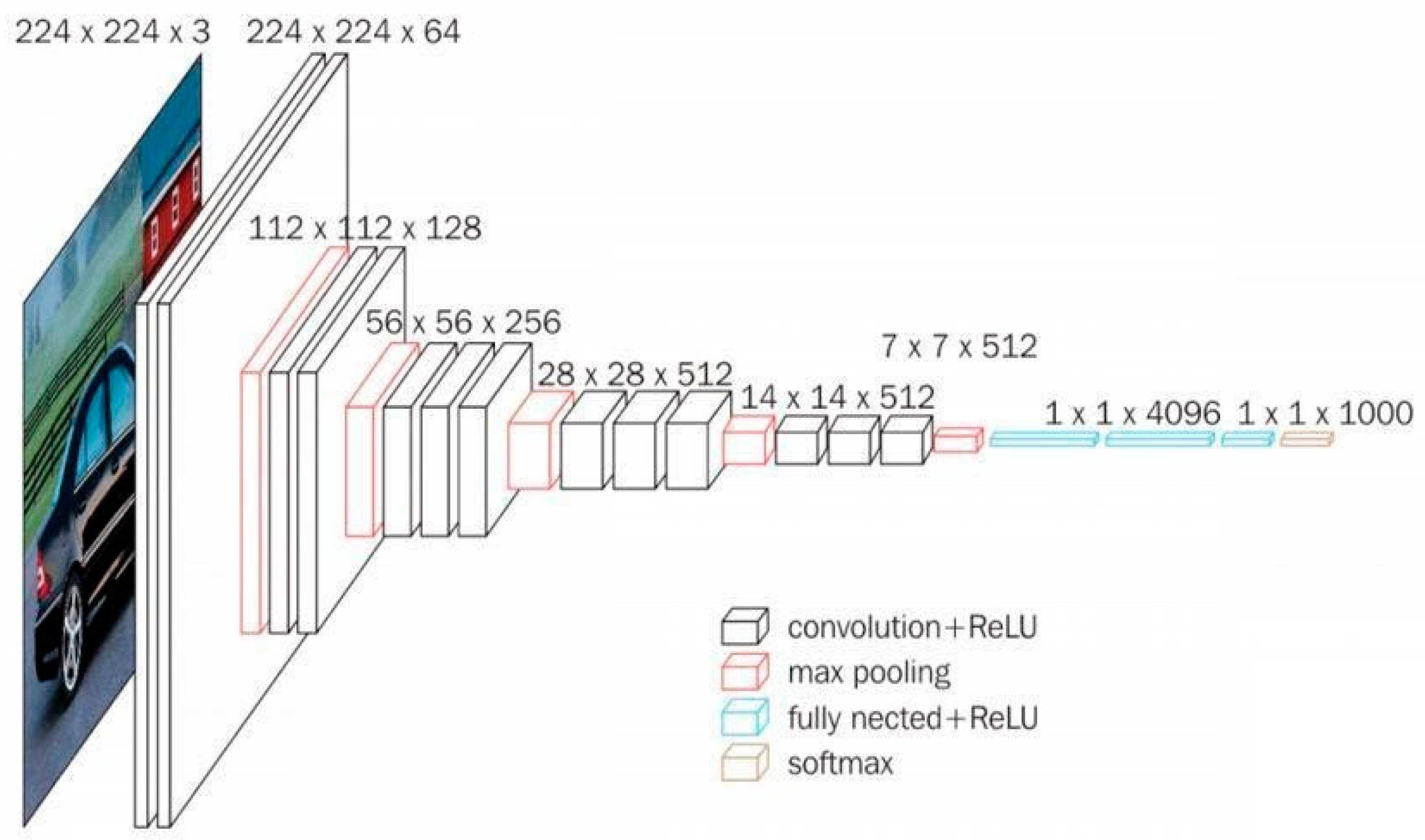

VGG16 is also a type of CNN model that consists of 21 layers. Out of these, there are only 16 weight layers with learnable parameters. Precisely, the model consists of 13 convolutional layers, 3 fully connected layers, and 5 max pooling layers [

27]. The VGG16 model uses small convolutional filters (3 × 3) with stride 1. In addition, the same padding and max pool layer of a 2 × 2 filter of stride 2 are used.

This network has about 138 million parameters, which is quite large. The original VGG16 architecture and its configuration settings are depicted in

Figure 4.

VGG19 is a variant of the VGG16 model in which three new convolutional layers were added up to the previous VGG16 model. There are 19 layers (16 convolution layers and 3 fully connected), 5 max-pooling layers, and 1 SoftMax layer.

Figure 5 shows the VGG19 architecture.

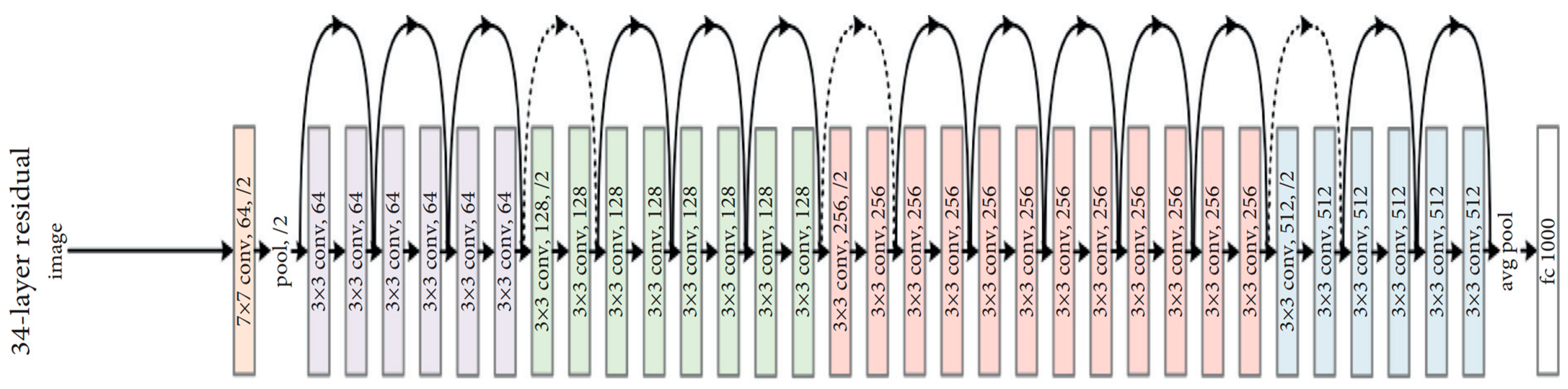

3.5.3. ResNet50

ResNet50 is a variant of the ResNet model, which has 48 convolution layers along with 1 MaxPool and 1 average pool layer. It has 3.8 × 10

9 floating point operations. Since the vanishing gradient problem is common in CNN-based models during backpropagation, the ResNet-based model uses a “skip connection” to overcome such a problem, as shown in

Figure 6.

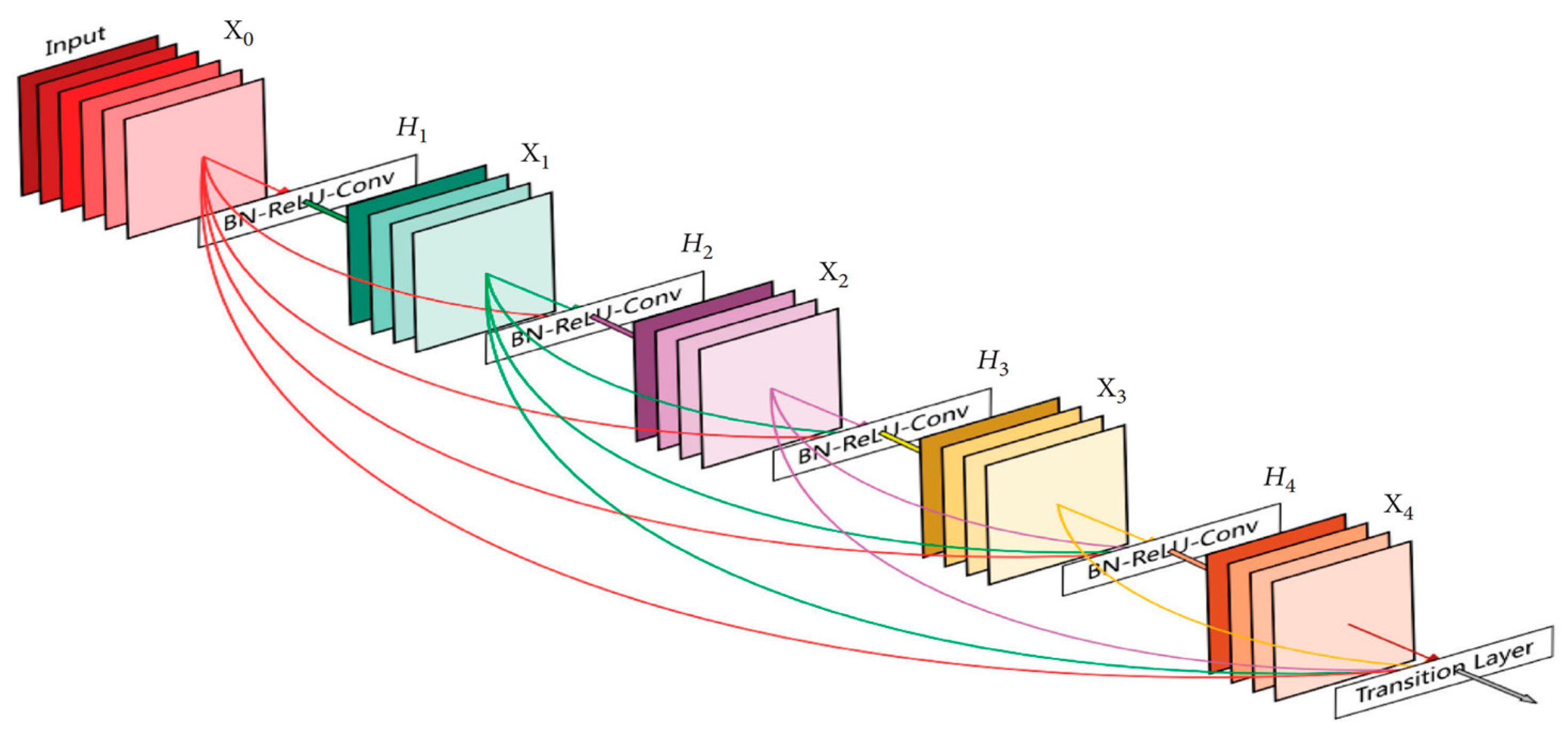

3.5.4. DenseNet169

A dense convolutional network (DenseNet) is an expansion of the Residual CNN (ResNet) architecture. Indeed, there are several DenseNets such as, DenseNet121, DenseNet169, and DenseNet201. In this paper, the DenseNet169 was used. As indicated in its name, the architecture of this model contains 169 layers. The reason for this choice is due to the ability of DenseNet169 to handle the vanishing gradient problem compared to the other versions [

28]. In addition, it has a minimal number of parameters. In contrast to ResNet and other convolution neural networks, DenseNet-based models provide an immediate connection between each layer and all descendant layers, as shown in

Figure 7.

3.5.5. MobileNetV3

The MobileNetV3 proposed by Howard et al. [

29] is an enhanced version of the original MobileNetV1 and MobileNetV2. The MobileNetV3 uses a new network architecture search algorithm called the NetAdapt algorithm for two reasons: (i) to search for the best kernel size and (ii) to optimize the MobileNet architecture by fulfilling the hardware platforms in terms of size, performance, and latency. The MobileNetV3 processes a new nonlinearity version of a sigmoid function called hard swish (h-swish), which is used, on the one hand, to reduce the overall model complexity and size and, on the other hand, to minimize the number of training parameters:

3.6. Experimental Setup

The proposed approach is implemented on a machine that meets the following specifications: Intel(R) Core (T.M.) i9-10980HK CPU @ 2.40 GHz 3.10 GHz processor and a 32 GB RAM running Windows 11 with an NVIDIA GeForce MX graphics card. The Jupyter Notebook provided with Anaconda distribution [

30] and Keras 2.10.0 library with TensorFlow backend was used for implementing all the proposed DL models using Python 3.9 [

31]. During the segmentation phase, a 10-fold cross-validation technique was applied, and the sigmoid output of the network was directly binarized. In addition, the weights were randomly initialized using a truncated normal distribution centered at zero. Then, the weights were optimized using the Adaptive Moment Estimation (ADAM) optimizer over 250 epochs with a batch size of four. We set the learning rate to 0.001. In terms of data augmentation, various combinations of horizontal flipping, vertical flipping, and rotations were applied.

Table 2 shows the hyperparameters used for model training at the segmentation and classification phase.

3.7. Evaluation Metrics

Several metrics were used to evaluate the proposed models’ performance depending on the model type [

27]. Among all metrics, accuracy, precision, recall, and F1-score were most used. These metrics are denoted by the following Equations (3)–(6) as follows:

In addition, we use the Matthews correlation coefficient (MCC) to measure how predictions match labels. The MCC is defined as follows:

where

is the class label,

is the prediction of the data,

is the total number of observations, and

is “True Positives”.

5. Conclusions and Future Research Directions

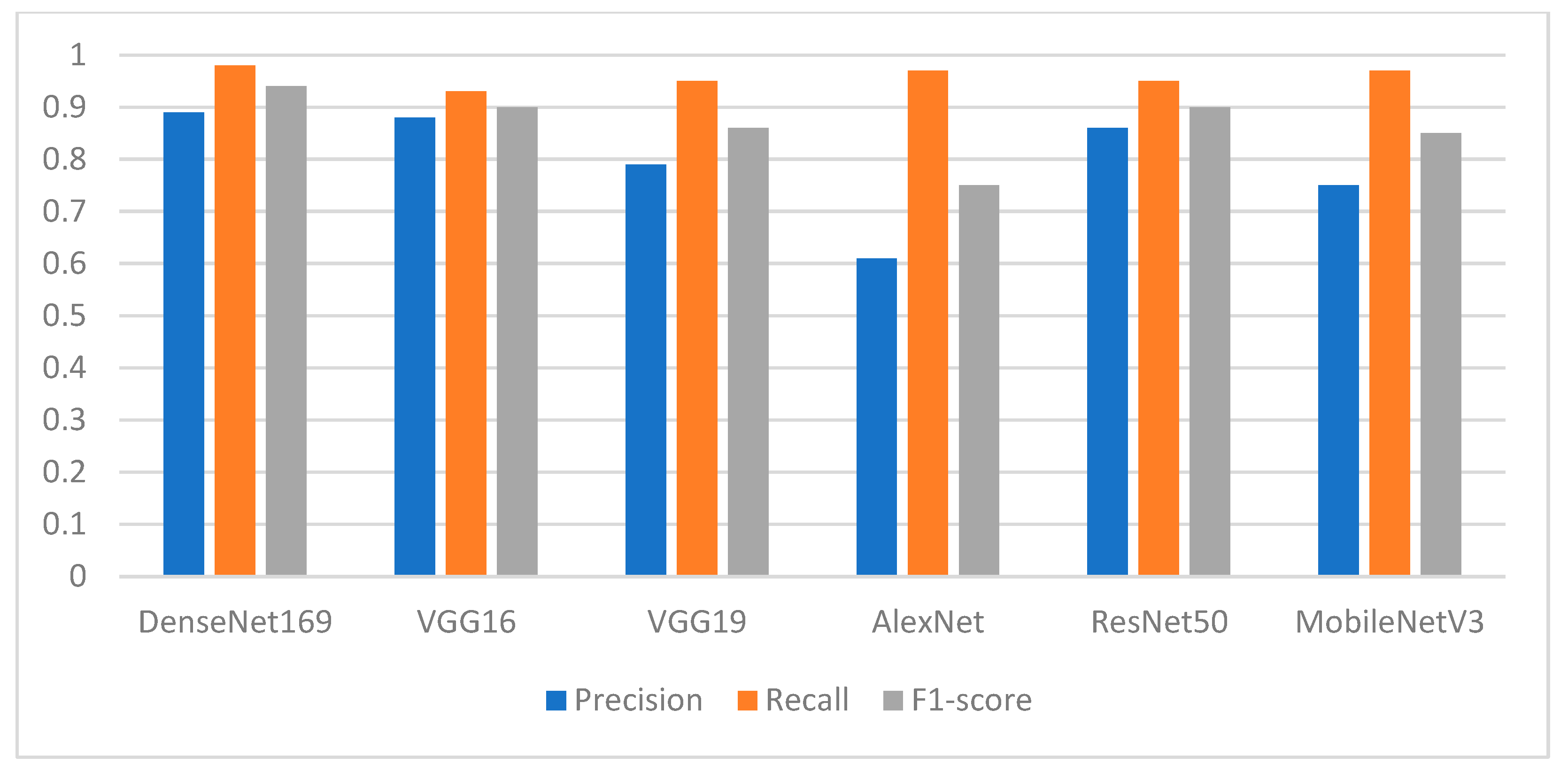

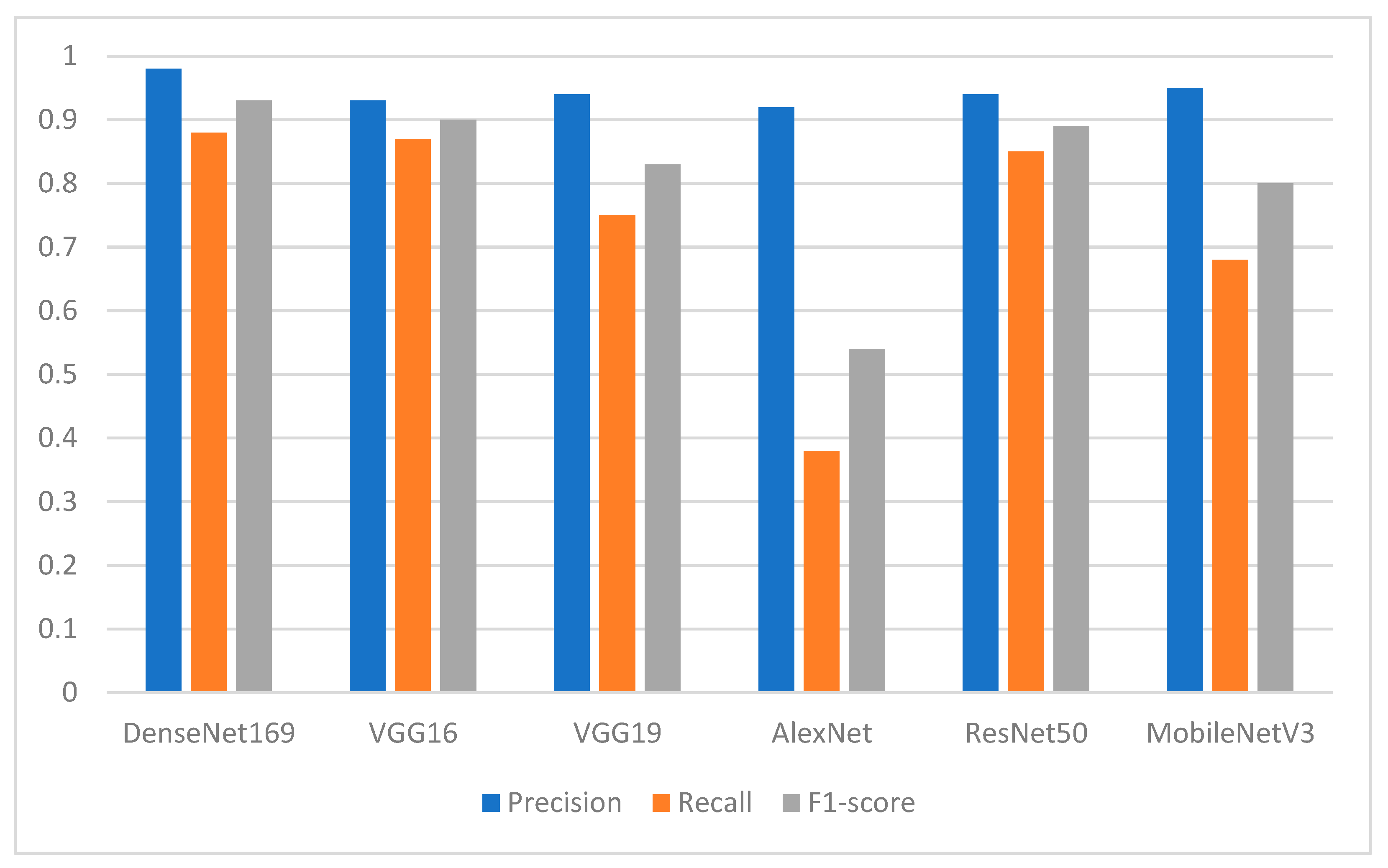

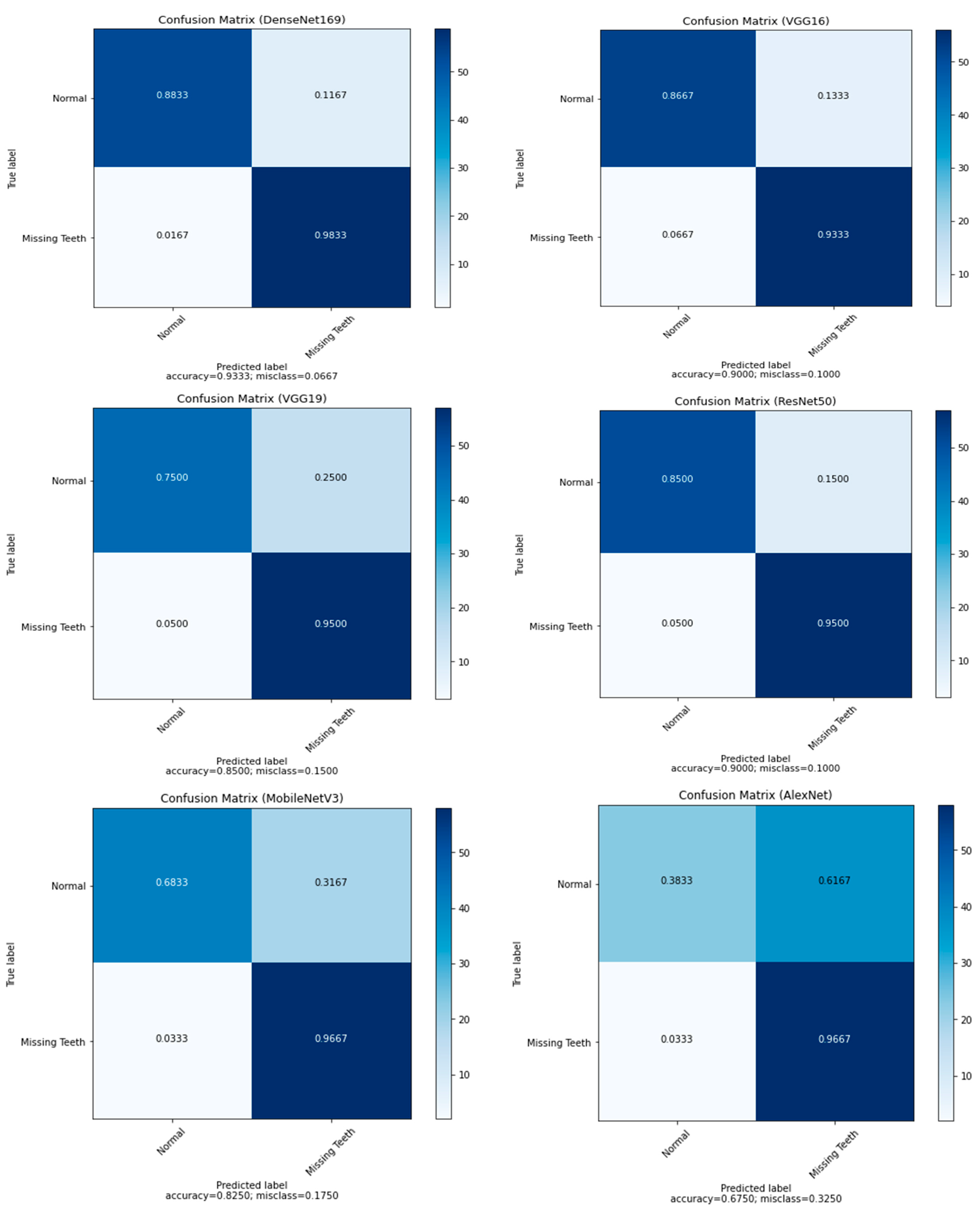

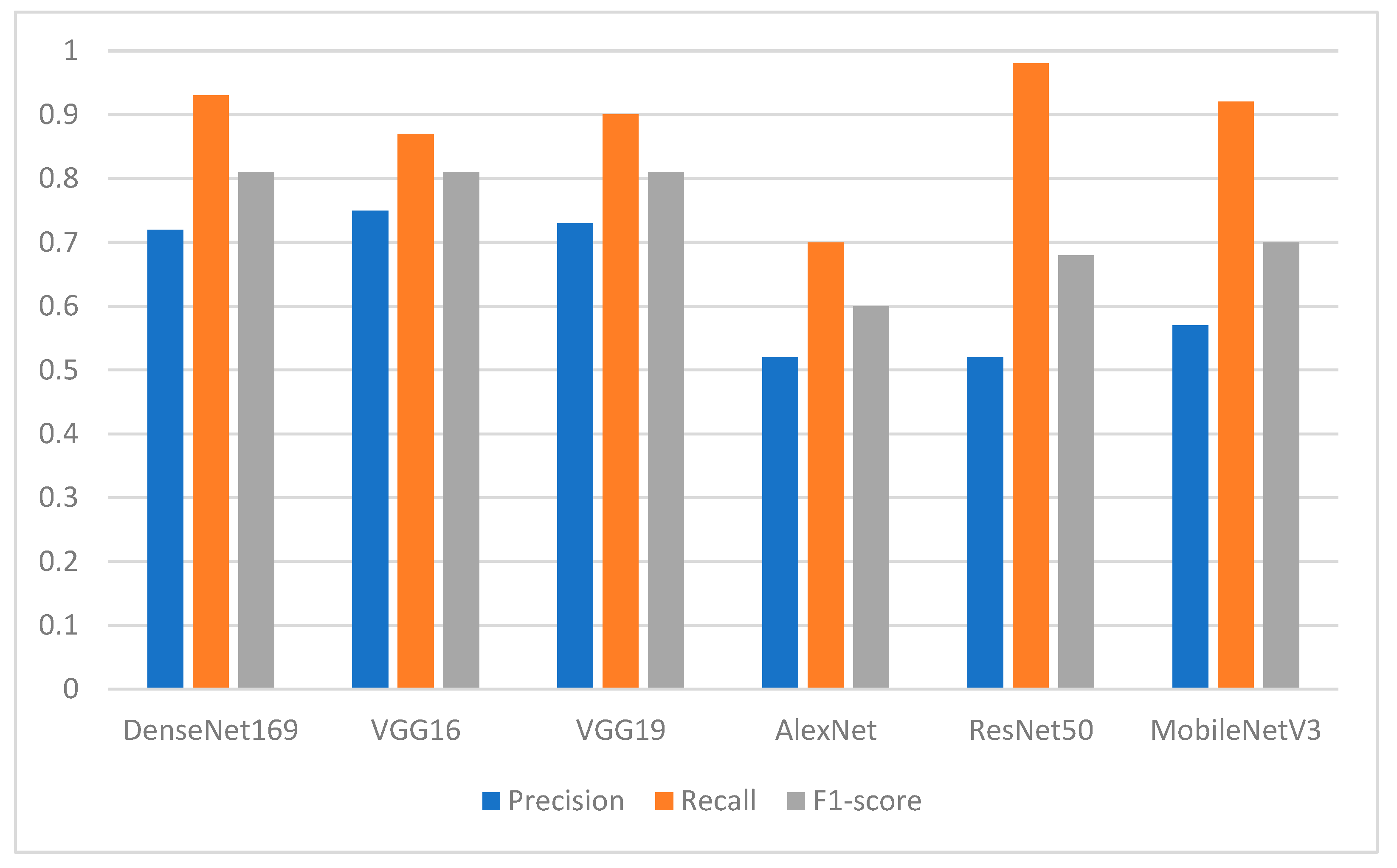

Several pretrained deep learning models were implemented in this study, including AlexNet, VGG16, VGG19, ResNet50, DenseNet169, and MobileNetV3, and their performance in detecting and classifying the missing tooth regions was also investigated in terms of different performance evaluation metrics. In addition, two types of feature extraction were investigated. The feature extraction was based on the U-Net model, and the other type used the bare image without segmentation. A binary classification-based dataset was obtained to facilitate the process of identification of the missing teeth bone area. For future planning and implanting of the predicted missing tooth areas, a domain expert verified the resulting dataset manually.

The experimental results showed that the segmentation process played an essential role in enhancing the performance of the pretrained DL models. Among the proposed DL models, the finding showed the superiority of DenseNet169, with an F1-score of 0.94 for the missing teeth class and 0.93 for the class of normal teeth class. The use of this model may represent a promising time-saving tool serving dental implantologists with a first step toward automated dental implant planning. In addition, this study also constructed a ground-truth dataset for tooth instance segmentation and missing tooth region detection in panoramic radiographic images which were officially obtained, sliced, and extracted and reviewed by an expert with more than 10 years of professional experience in dental implant planning. The domain expert manually annotated the X-ray images to obtain ground-truth masks using the well-known annotation tool called Label Studio (

https://labelstud.io/, accessed on 3 October 2022). An improved algorithm and more data will make implant placement more automated in the future.