Motion Capture Technology in Sports Scenarios: A Survey

Abstract

1. Introduction

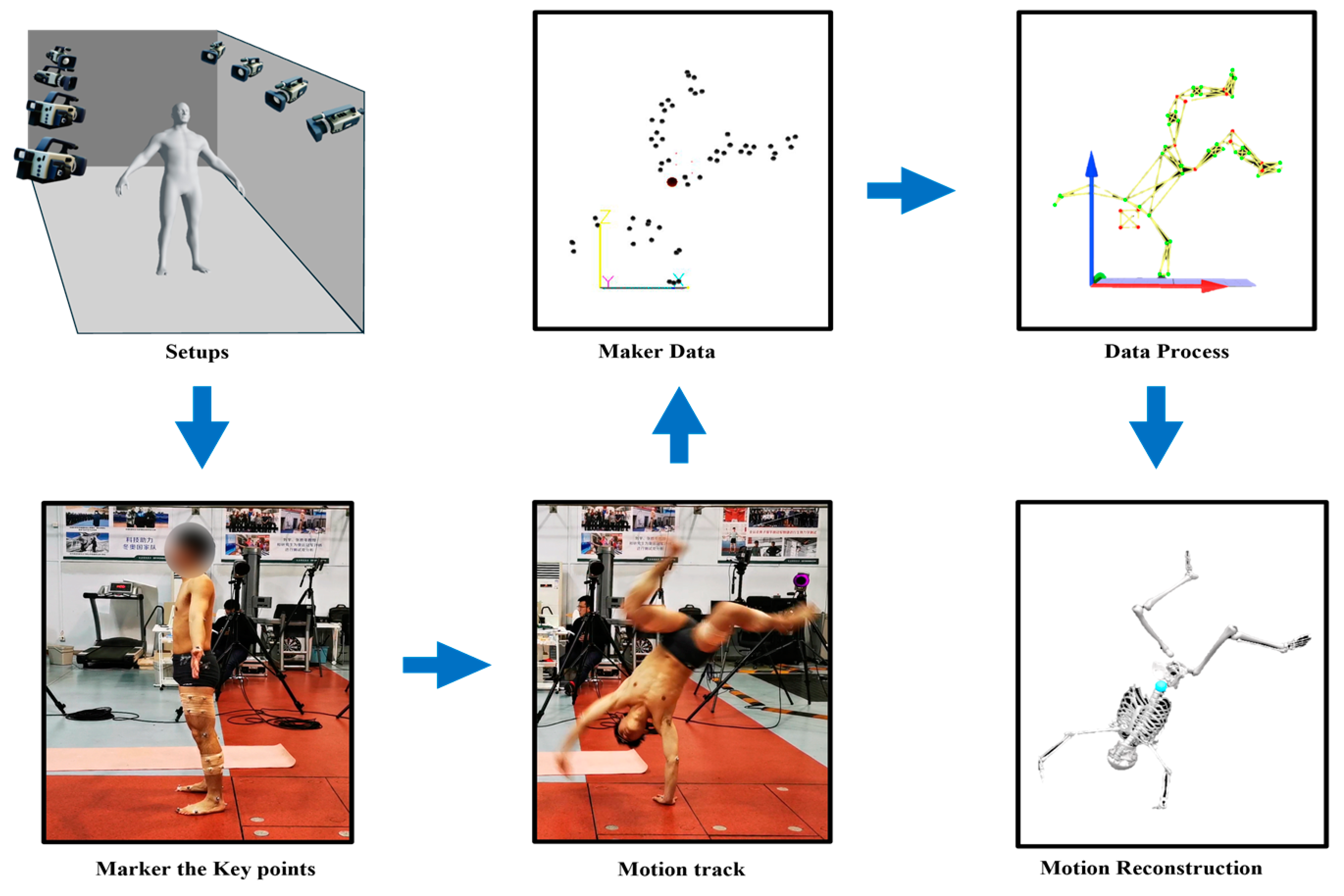

2. Classification of Motion Capture Technology

2.1. Cinematography Capture Systems

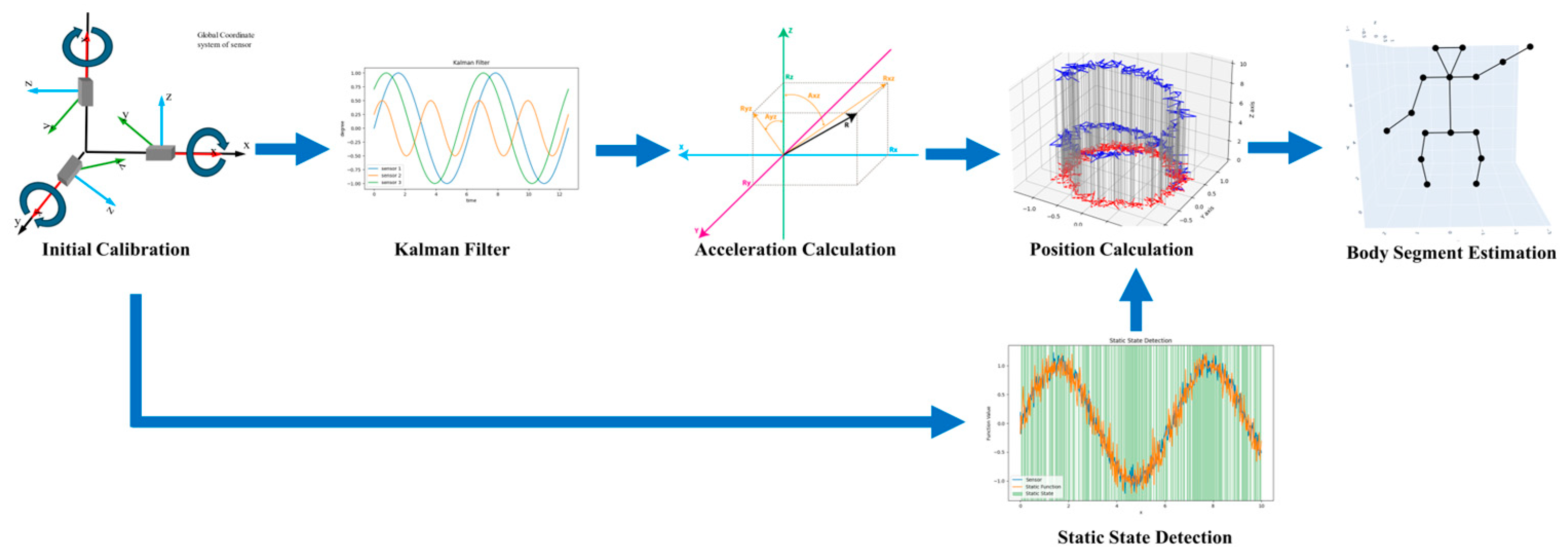

2.2. Electromagnetic Capture Systems

2.3. Computer Vision Capture Systems

2.4. Other Motion Capture Systems

3. Application of Motion Capture in the Field of Sports

3.1. Construction of Athlete Performance Datasets

3.2. Real-Time Assistance for Athlete Training and Competition

3.3. Multi-Camera Motion Capture Technology for Training

4. Discussion

- (1) Environmental factors: Indoor laboratory settings offer controlled lighting, temperature, and humidity, which reduces noise and interference. Outdoor sports scenarios, by contrast, present complex and variable conditions, including wind, sunlight, shadows, and different surfaces (e.g., plastic, grass, snow, ice, water), all of which can degrade the accuracy and stability of motion capture systems. Temperature, humidity, and visibility also change outdoors, and non-isolated environments introduce acoustic, lighting, and electromagnetic interference that further affects motion capture tasks. Overcoming these environmental limitations is crucial for accurate and reliable motion capture in sports.

- (2) System setup: Indoor laboratory settings make motion capture systems easier to install and calibrate because the environment is controlled. Outdoor sports scenarios present more complex conditions and require additional effort in system setup and adjustment to achieve high accuracy and precision. The precision of the measurement method is inversely proportional to the effective working range of the system. Because large-scale motion scenes are common in sports scenarios, capturing human kinematic information there requires additional software and hardware optimization. Careful system setup and optimization are therefore needed to ensure accurate motion capture in outdoor sports environments [84].

- (3) Motion characteristics: Movements studied in indoor laboratory settings are usually simple and isolated, such as gait or arm movements, and indoor motion capture systems can provide high-quality data for them. Outdoor sports scenarios often involve more complex movements, such as aerial rotations or limb flips, and the captured data can be distorted or inaccurate because of environmental factors. Motion capture in sports scenarios also often has to record fast movements. In specific research scenarios, such as ballistic analysis in shooting or instantaneous analysis of baseball swings, the sampling frequency may exceed 1000 Hz [16].

- (4) Data processing: Data processing is relatively straightforward in indoor laboratory settings because data from controlled environments are usually stable and accurate [85]. In outdoor sports scenarios, data processing must account for more factors, such as lighting and environmental noise, occlusion and penetration, motion model establishment, and data noise and filtering [86].
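The trade-off between precision and working range noted under "System setup" can be illustrated with a rough back-of-the-envelope sketch. It assumes an idealized camera whose horizontal field of view is spread evenly over a fixed number of pixels; the function name `mm_per_pixel` and the example numbers are hypothetical and are not taken from any of the surveyed systems.

```python
def mm_per_pixel(field_width_m: float, horizontal_pixels: int) -> float:
    """Approximate width of the scene covered by one pixel, in millimetres.

    Assumes an idealized camera whose field of view is spread evenly over
    the sensor (no lens distortion, no sub-pixel marker fitting).
    """
    if field_width_m <= 0 or horizontal_pixels <= 0:
        raise ValueError("field width and pixel count must be positive")
    return field_width_m * 1000.0 / horizontal_pixels


# Hypothetical numbers: the same 2048-pixel-wide sensor viewing a 4 m
# laboratory walkway versus a 40 m stretch of track.
lab = mm_per_pixel(4.0, 2048)
track = mm_per_pixel(40.0, 2048)
print(f"lab: {lab:.2f} mm/pixel, track: {track:.2f} mm/pixel")
```

Under these assumptions, widening the captured scene tenfold makes each pixel cover ten times more ground, which is one reason large outdoor volumes typically call for more cameras, moving camera rigs, or sensor fusion.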
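The need for high sampling frequencies mentioned under "Motion characteristics" follows from simple arithmetic: the faster a point moves, the farther it travels between two consecutive samples. The sketch below is illustrative only; it assumes constant speed between samples, and the function names and the 30 m/s example speed are hypothetical.

```python
def displacement_per_frame_m(speed_m_per_s: float, sampling_hz: float) -> float:
    """Distance a point moving at constant speed travels between two samples."""
    if sampling_hz <= 0:
        raise ValueError("sampling frequency must be positive")
    return speed_m_per_s / sampling_hz


def min_sampling_hz(speed_m_per_s: float, max_step_m: float) -> float:
    """Lowest sampling frequency that keeps per-frame travel within max_step_m."""
    if max_step_m <= 0:
        raise ValueError("maximum step must be positive")
    return speed_m_per_s / max_step_m


# Hypothetical example: a bat tip moving at 30 m/s.
for hz in (100, 250, 1000):
    step_cm = displacement_per_frame_m(30.0, hz) * 100
    print(f"{hz:>4} Hz -> {step_cm:.1f} cm between frames")
```

With these example numbers, the point moves about 30 cm between frames at 100 Hz but only about 3 cm at 1000 Hz, which is why fast, short-duration events push sampling frequencies upward.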
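Two of the data-processing issues listed above, occlusion and data noise, can be sketched on a one-dimensional marker trajectory. This is a minimal, dependency-free illustration rather than the pipeline of any cited work: gaps are filled by linear interpolation and noise is reduced with a centered moving average. Biomechanics pipelines commonly use a low-pass Butterworth filter for the smoothing step; the moving average is substituted here only to keep the example self-contained. The function names and the sample data are hypothetical.

```python
from typing import List, Optional


def fill_gaps(samples: List[Optional[float]]) -> List[float]:
    """Linearly interpolate missing (None) samples, e.g. from marker occlusion.

    Gaps at the start or end are filled with the nearest valid sample.
    """
    valid = [i for i, v in enumerate(samples) if v is not None]
    if not valid:
        raise ValueError("at least one valid sample is required")
    filled: List[float] = []
    for i, v in enumerate(samples):
        if v is not None:
            filled.append(float(v))
            continue
        before = [j for j in valid if j < i]
        after = [j for j in valid if j > i]
        if not before:
            filled.append(float(samples[after[0]]))
        elif not after:
            filled.append(float(samples[before[-1]]))
        else:
            lo, hi = before[-1], after[0]
            t = (i - lo) / (hi - lo)  # fractional position inside the gap
            filled.append(samples[lo] + t * (samples[hi] - samples[lo]))
    return filled


def moving_average(samples: List[float], window: int = 3) -> List[float]:
    """Centered moving average; the window shrinks near the ends."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    smoothed = []
    for i in range(len(samples)):
        chunk = samples[max(0, i - half): i + half + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


# Hypothetical 1-D marker trajectory (metres) with two occluded frames.
raw = [0.00, 0.10, None, None, 0.40, 0.52, 0.59]
clean = moving_average(fill_gaps(raw))
print([round(x, 3) for x in clean])
```

Linear interpolation is only reasonable for short gaps; longer occlusions in outdoor recordings generally need a motion model or data from additional sensors.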

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Chambers, R.; Gabbett, T.J.; Cole, M.H.; Beard, A. The Use of Wearable Microsensors to Quantify Sport-Specific Movements. Sports Med. 2015, 45, 1065–1081.

2. Camomilla, V.; Bergamini, E.; Fantozzi, S.; Vannozzi, G. Trends Supporting the In-Field Use of Wearable Inertial Sensors for Sport Performance Evaluation: A Systematic Review. Sensors 2018, 18, 873.

3. Menache, A. Understanding Motion Capture for Computer Animation; Elsevier: Amsterdam, The Netherlands, 2011.

4. Adam, H.C. Eadweard Muybridge: The Human and Animal Locomotion Photographs; Bibliotheca Universalis; Taschen: Hong Kong, China, 2014; Available online: https://books.google.co.jp/books?id=tjomnwEACAAJ (accessed on 27 November 2023).

5. Kolykhalova, K.; Camurri, A.; Volpe, G.; Sanguineti, M.; Puppo, E.; Niewiadomski, R. A multimodal dataset for the analysis of movement qualities in karate martial art. In Proceedings of the 2015 7th International Conference on Intelligent Technologies for Interactive Entertainment (INTETAIN), Turin, Italy, 10–12 June 2015; IEEE: New York, NY, USA, 2015; pp. 74–78.

6. Qian, B.; Chen, H.; Wang, X.; Guan, Z.; Li, T.; Jin, Y.; Wu, Y.; Wen, Y.; Che, H.; Kwon, G.; et al. DRAC 2022: A public benchmark for diabetic retinopathy analysis on ultra-wide optical coherence tomography angiography images. Patterns 2024, 5, 100929.

7. Colyer, S.L.; Evans, M.; Cosker, D.P.; Salo, A.I.T. A Review of the Evolution of Vision-Based Motion Analysis and the Integration of Advanced Computer Vision Methods Towards Developing a Markerless System. Sports Med. Open 2018, 4, 24.

8. Brognara, L.; Mazzotti, A.; Rossi, F.; Lamia, F.; Artioli, E.; Faldini, C.; Traina, F. Using Wearable Inertial Sensors to Monitor Effectiveness of Different Types of Customized Orthoses during CrossFit® Training. Sensors 2023, 23, 1636.

9. Walgaard, S.; Faber, G.S.; van Lummel, R.C.; van Dieën, J.H.; Kingma, I. The validity of assessing temporal events, sub-phases and trunk kinematics of the sit-to-walk movement in older adults using a single inertial sensor. J. Biomech. 2016, 49, 1933–1937.

10. Mooney, R.; Corley, G.; Godfrey, A.; Osborough, C.; Newell, J.; Quinlan, L.R.; ÓLaighin, G. Analysis of swimming performance: Perceptions and practices of US-based swimming coaches. J. Sports Sci. 2016, 34, 997–1005.

11. Inoue, K.; Nunome, H.; Sterzing, T.; Shinkai, H.; Ikegami, Y. Dynamics of the support leg in soccer instep kicking. J. Sports Sci. 2014, 32, 1023–1032.

12. Augustus, S.; Mundy, P.; Smith, N. Support leg action can contribute to maximal instep soccer kick performance: An intervention study. J. Sports Sci. 2017, 35, 89–98.

13. Philippaerts, R.M.; Vaeyens, R.; Janssens, M.; Van Renterghem, B.; Matthys, D.; Craen, R.; Bourgois, J.; Vrijens, J.; Beunen, G.; Malina, R.M. The relationship between peak height velocity and physical performance in youth soccer players. J. Sports Sci. 2006, 24, 221–230.

14. Gómez-Carmona, C.D.; Bastida-Castillo, A.; Ibáñez, S.J.; Pino-Ortega, J. Accelerometry as a method for external workload monitoring in invasion team sports. A systematic review. PLoS ONE 2020, 15, e0236643.

15. Ekegren, C.L.; Gabbe, B.J.; Finch, C.F. Sports Injury Surveillance Systems: A Review of Methods and Data Quality. Sports Med. 2016, 46, 49–65.

16. van der Kruk, E.; Reijne, M.M. Accuracy of human motion capture systems for sport applications; state-of-the-art review. Eur. J. Sport Sci. 2018, 18, 806–819.

17. Cust, E.E.; Sweeting, A.J.; Ball, K.; Robertson, S. Machine and deep learning for sport-specific movement recognition: A systematic review of model development and performance. J. Sports Sci. 2019, 37, 568–600.

18. Li, H.; Cui, C.; Jiang, S. Strategy for improving the football teaching quality by AI and metaverse-empowered in mobile internet environment. Wireless Netw. 2022.

19. Robertson, D.G.E.; Caldwell, G.E.; Hamill, J.; Kamen, G.; Whittlesey, S. Research Methods in Biomechanics; Human Kinetics: Champaign, IL, USA, 2013.

20. Whitting, J.; Steele, J.; McGhee, D.; Munro, B. Different measures of plantar-flexor flexibility and their effects on landing technique: Implications for injury. J. Sci. Med. Sport 2010, 13, e48.

21. Rizaldy, N.; Ferryanto, F.; Sugiharto, A.; Mahyuddin, A.I. Evaluation of action sport camera optical motion capture system for 3D gait analysis. IOP Conf. Ser. Mater. Sci. Eng. 2021, 1109, 012024.

22. Schmidt, M.; Nolte, K.; Kolodziej, M.; Ulbricht, A.; Jaitner, T. Accuracy of Three Global Positioning Systems for Determining Speed and Distance Parameters in Professional Soccer. In 13th World Congress of Performance Analysis of Sport and 13th International Symposium on Computer Science in Sport; Baca, A., Exel, J., Eds.; Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2023; pp. 174–177.

23. Benjaminse, A.; Bolt, R.; Gokeler, A.; Otten, B. A validity study comparing xsens with vicon. ISBS Proc. Arch. 2020, 38, 752.

24. Umek, A.; Kos, A. Validation of UWB positioning systems for player tracking in tennis. Pers. Ubiquit. Comput. 2022, 26, 1023–1033.

25. Houtmeyers, K.C.; Helsen, W.F.; Jaspers, A.; Nanne, S.; McLaren, S.; Vanrenterghem, J.; Brink, M.S. Monitoring Elite Youth Football Players’ Physiological State Using a Small-Sided Game: Associations With a Submaximal Running Test. Int. J. Sports Physiol. Perform. 2022, 17, 1439–1447.

26. Groos, D.; Ramampiaro, H.; Ihlen, E.A. EfficientPose: Scalable single-person pose estimation. Appl. Intell. 2021, 51, 2518–2533.

27. Aouaidjia, K.; Sheng, B.; Li, P.; Kim, J.; Feng, D.D. Efficient Body Motion Quantification and Similarity Evaluation Using 3-D Joints Skeleton Coordinates. IEEE Trans. Syst. Man Cybern. Syst. 2021, 51, 2774–2788.

28. Li, Y.-C.; Chang, C.-T.; Cheng, C.-C.; Huang, Y.-L. Baseball Swing Pose Estimation Using OpenPose. In Proceedings of the 2021 IEEE International Conference on Robotics, Automation and Artificial Intelligence (RAAI), Hong Kong, China, 21–23 April 2021; pp. 6–9.

29. Nguyen, T.D.; Kresovic, M. A survey of top-down approaches for human pose estimation. arXiv 2022, arXiv:2202.02656.

30. Liang, D.; Thomaz, E. Audio-Based Activities of Daily Living (ADL) Recognition with Large-Scale Acoustic Embeddings from Online Videos. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2019, 3, 17.

31. Gurbuz, S.Z.; Rahman, M.M.; Kurtoglu, E.; Martelli, D. Continuous Human Activity Recognition and Step-Time Variability Analysis with FMCW Radar. In Proceedings of the 2022 IEEE-EMBS International Conference on Biomedical and Health Informatics (BHI), Ioannina, Greece, 27–30 September 2022; pp. 1–4.

32. Sheng, B.; Xiao, F.; Sha, L.; Sun, L. Deep Spatial–Temporal Model Based Cross-Scene Action Recognition Using Commodity WiFi. IEEE Internet Things J. 2020, 7, 3592–3601.

33. Brodie, M.; Walmsley, A.; Page, W. Fusion motion capture: A prototype system using inertial measurement units and GPS for the biomechanical analysis of ski racing. Sports Technol. 2008, 1, 17–28.

34. Corazza, S.; Mündermann, L.; Gambaretto, E.; Ferrigno, G.; Andriacchi, T.P. Markerless Motion Capture through Visual Hull, Articulated ICP and Subject Specific Model Generation. Int. J. Comput. Vis. 2009, 87, 156.

35. Maletsky, L.P.; Sun, J.; Morton, N.A. Accuracy of an optical active-marker system to track the relative motion of rigid bodies. J. Biomech. 2007, 40, 682–685.

36. Thewlis, D.; Bishop, C.; Daniell, N.; Paul, G. Next-Generation Low-Cost Motion Capture Systems Can Provide Comparable Spatial Accuracy to High-End Systems. J. Appl. Biomech. 2013, 29, 112–117.

37. Stancic, I.; Grujic Supuk, T.; Panjkota, A. Design, development and evaluation of optical motion-tracking system based on active white light markers. IET Sci. Meas. Technol. 2013, 7, 206–214.

38. Spörri, J.; Schiefermüller, C.; Müller, E. Collecting Kinematic Data on a Ski Track with Optoelectronic Stereophotogrammetry: A Methodological Study Assessing the Feasibility of Bringing the Biomechanics Lab to the Field. PLoS ONE 2016, 11, e0161757.

39. Panjkota, A. Outline of a Qualitative Analysis for the Human Motion in Case of Ergometer Rowing. In Proceedings of the 9th WSEAS International Conference on Simulation, Modelling and Optimization, Budapest, Hungary, 3–5 September 2009.

40. Colloud, F.; Chèze, L.; André, N.; Bahuaud, P. An innovative solution for 3d kinematics measurement for large volumes. J. Biomech. 2008, 41 (Suppl. 1), S57.

41. Van der Kruk, E. Modelling and Measuring 3D Movements of a Speed Skater. 2013. Available online: https://repository.tudelft.nl/islandora/object/uuid%3A2a54e547-0a5a-468b-be80-a41a656cacc1 (accessed on 23 February 2023).

42. Depenthal, C. iGPS used as kinematic measuring system. In Proceedings of the FIG Congress, Sydney, Australia, 11–16 April 2010; pp. 11–16.

43. Stelzer, A.; Pourvoyeur, K.; Fischer, A. Concept and application of LPM—A novel 3-D local position measurement system. IEEE Trans. Microw. Theory Tech. 2004, 52, 2664–2669.

44. Pasku, V.; De Angelis, A.; De Angelis, G.; Arumugam, D.D.; Dionigi, M.; Carbone, P.; Moschitta, A.; Ricketts, D.S. Magnetic Field-Based Positioning Systems. IEEE Commun. Surv. Tutorials 2017, 19, 2003–2017.

45. Rana, M.; Mittal, V. Wearable Sensors for Real-Time Kinematics Analysis in Sports: A Review. IEEE Sensors J. 2021, 21, 1187–1207.

46. Perrat, B.; Smith, M.J.; Mason, B.S.; Rhodes, J.M.; Goosey-Tolfrey, V.L. Quality assessment of an Ultra-Wide Band positioning system for indoor wheelchair court sports. Proc. Inst. Mech. Eng. Part P J. Sports Eng. Technol. 2015, 229, 81–91.

47. Resch, A.; Pfeil, R.; Wegener, M.; Stelzer, A. Review of the LPM local positioning measurement system. In Proceedings of the 2012 International Conference on Localization and GNSS, Starnberg, Germany, 25–27 June 2012; pp. 1–5.

48. Stevens, T.G.A.; de Ruiter, C.J.; van Niel, C.; van de Rhee, R.; Beek, P.J.; Savelsbergh, G.J.P. Measuring Acceleration and Deceleration in Soccer-Specific Movements Using a Local Position Measurement (LPM) System. Int. J. Sports Physiol. Perform. 2014, 9, 446–456.

49. Gardner, C.; Navalta, J.W.; Carrier, B.; Aguilar, C.; Perdomo Rodriguez, J. Training Impulse and Its Impact on Load Management in Collegiate and Professional Soccer Players. Technologies 2023, 11, 79.

50. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

51. Pandey, M.; Fernandez, M.; Gentile, F.; Isayev, O.; Tropsha, A.; Stern, A.C.; Cherkasov, A. The transformational role of GPU computing and deep learning in drug discovery. Nat. Mach. Intell. 2022, 4, 211–221.

52. Papadrakakis, M.; Stavroulakis, G.; Karatarakis, A. A new era in scientific computing: Domain decomposition methods in hybrid CPU–GPU architectures. Comput. Methods Appl. Mech. Eng. 2011, 200, 1490–1508.

53. Abraham, M.J.; Murtola, T.; Schulz, R.; Páll, S.; Smith, J.C.; Hess, B.; Lindahl, E. GROMACS: High performance molecular simulations through multi-level parallelism from laptops to supercomputers. SoftwareX 2015, 1–2, 19–25.

54. Li, J.; Zhang, P.; Wang, T.; Zhu, L.; Liu, R.; Yang, X.; Wang, K.; Shen, D.; Sheng, B. DSMT-Net: Dual Self-Supervised Multi-Operator Transformation for Multi-Source Endoscopic Ultrasound Diagnosis. IEEE Trans. Med. Imaging 2024, 43, 64–75.

55. Dai, L.; Sheng, B.; Chen, T.; Wu, Q.; Liu, R.; Cai, C.; Wu, L.; Yang, D.; Hamzah, H.; Liu, Y.; et al. A deep learning system for predicting time to progression of diabetic retinopathy. Nat. Med. 2024, 30, 584–594.

56. Liu, R.; Ou, L.; Sheng, B.; Hao, P.; Li, P.; Yang, X.; Xue, G.; Zhu, L.; Luo, Y.; Zhang, P.; et al. Mixed-Weight Neural Bagging for Detecting m6A Modifications in SARS-CoV-2 RNA Sequencing. IEEE Trans. Biomed. Eng. 2022, 69, 2557–2568.

57. Thomas, G.; Gade, R.; Moeslund, T.B.; Carr, P.; Hilton, A. Computer vision for sports: Current applications and research topics. Comput. Vis. Image Underst. 2017, 159, 3–18.

58. Chen, Z.; Qiu, G.; Li, P.; Zhu, L.; Yang, X.; Sheng, B. MNGNAS: Distilling Adaptive Combination of Multiple Searched Networks for One-Shot Neural Architecture Search. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 13489–13508.

59. Wang, J.; Tan, S.; Zhen, X.; Xu, S.; Zheng, F.; He, Z.; Shao, L. Deep 3D human pose estimation: A review. Comput. Vis. Image Underst. 2021, 210, 103225.

60. Sheng, B.; Li, P.; Ali, R.; Chen, C.L.P. Improving Video Temporal Consistency via Broad Learning System. IEEE Trans. Cybern. 2022, 52, 6662–6675.

61. Chen, X.; Pang, A.; Yang, W.; Ma, Y.; Xu, L.; Yu, J. SportsCap: Monocular 3D Human Motion Capture and Fine-Grained Understanding in Challenging Sports Videos. Int. J. Comput. Vis. 2021, 129, 2846–2864.

62. Guo, H.; Zou, S.; Lai, C.; Zhang, H. PhyCoVIS: A visual analytic tool of physical coordination for cheer and dance training. Comput. Animat. Virtual Worlds 2021, 32, e1975.

63. Mehta, D.; Rhodin, H.; Casas, D.; Fua, P.; Sotnychenko, O.; Xu, W.; Theobalt, C. Monocular 3D Human Pose Estimation in the Wild Using Improved CNN Supervision. In Proceedings of the 2017 International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; IEEE: New York, NY, USA, 2017; pp. 506–516.

64. He, Y.; Wang, Y.; Fan, H.; Sun, J.; Chen, Q. FS6D: Few-Shot 6D Pose Estimation of Novel Objects. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 6814–6824. Available online: https://openaccess.thecvf.com/content/CVPR2022/html/He_FS6D_Few-Shot_6D_Pose_Estimation_of_Novel_Objects_CVPR_2022_paper.html (accessed on 13 March 2023).

65. Li, X.; He, Y.; Jing, X. A Survey of Deep Learning-Based Human Activity Recognition in Radar. Remote Sens. 2019, 11, 1068.

66. von Marcard, T.; Pons-Moll, G.; Rosenhahn, B. Human Pose Estimation from Video and IMUs. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 1533–1547.

67. Shen, H.-M.; Lian, C.; Wu, X.-W.; Bian, F.; Yu, P.; Yang, G. Full-pose estimation using inertial and magnetic sensor fusion in structurized magnetic field for hand motion tracking. Measurement 2021, 170, 108697.

68. Hasegawa, S.; Ishijima, S.; Kato, F.; Mitake, H.; Sato, M. Realtime sonification of the center of gravity for skiing. In Proceedings of the 3rd Augmented Human International Conference (AH ’12), Megève, France, 8–9 March 2012; ACM Press: New York, NY, USA, 2012; pp. 1–4.

69. Kos, A. Smart sport equipment: SmartSki prototype for biofeedback applications in skiing. Pers. Ubiquitous Comput. 2017, 22, 535–544.

70. Hwang, D.-H.; Aso, K.; Yuan, Y.; Kitani, K.; Koike, H. MonoEye: Multimodal Human Motion Capture System Using A Single Ultra-Wide Fisheye Camera. In Proceedings of the 33rd Annual ACM Symposium on User Interface Software and Technology, UIST ’20, Virtual, 20–23 October 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 98–111.

71. Pons-Moll, G.; Baak, A.; Helten, T.; Müller, M.; Seidel, H.-P.; Rosenhahn, B. Multisensor-fusion for 3D full-body human motion capture. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 663–670.

72. Winter, D.A. Biomechanics and Motor Control of Human Movement; John Wiley & Sons: Hoboken, NJ, USA, 2009.

73. Ángel López, J.; Segura-Giraldo, B.; Rodríguez-Sotelo, L.; García-Solano, K. Kinematic Soccer Kick Analysis Using a Motion Capture System. In Proceedings of the VII Latin American Congress on Biomedical Engineering CLAIB 2016, Bucaramanga, Colombia, 26–28 October 2016; pp. 682–685.

74. Yang, J.; Li, T.; Chen, Z.; Li, X. Research on the Method of Underwater Swimming Motion Capture. In Proceedings of the 2021 IEEE 7th International Conference on Virtual Reality (ICVR), Foshan, China, 20–22 May 2021; pp. 1–4.

75. Xu, S.; Lee, S. An Inertial Sensing-Based Approach to Swimming Pose Recognition and Data Analysis. J. Sens. 2022, 2022, e5151105.

76. Zhao, X.; Ross, G.; Dowling, B.; Graham, R.B. Three-Dimensional Motion Capture Data of a Movement Screen from 183 Athletes. Sci. Data 2023, 10, 235.

77. Noureen, S.; Shamim, N.; Roy, V.; Bayne, S. Real-Time Digital Simulators: A Comprehensive Study on System Overview, Application, and Importance. Int. J. Res. Eng. 2017, 4, 266–277.

78. Sheng, B.; Li, P.; Zhang, Y.; Mao, L.; Chen, C.L.P. GreenSea: Visual Soccer Analysis Using Broad Learning System. IEEE Trans. Cybern. 2021, 51, 1463–1477.

79. Rekant, J.; Rothenberger, S.; Chambers, A. Inertial measurement unit-based motion capture to replace camera-based systems for assessing gait in healthy young adults: Proceed with caution. Meas. Sens. 2022, 23, 100396.

80. Pons, E.; García-Calvo, T.; Resta, R.; Blanco, H.; del Campo, R.L.; García, J.D.; Pulido, J.J. A comparison of a GPS device and a multi-camera video technology during official soccer matches: Agreement between systems. PLoS ONE 2019, 14, e0220729.

81. Ostrek, M.; Rhodin, H.; Fua, P.; Müller, E.; Spörri, J. Are Existing Monocular Computer Vision-Based 3D Motion Capture Approaches Ready for Deployment? A Methodological Study on the Example of Alpine Skiing. Sensors 2019, 19, 4323.

82. Nibali, A.; Millward, J.; He, Z.; Morgan, S. ASPset: An outdoor sports pose video dataset with 3D keypoint annotations. Image Vis. Comput. 2021, 111, 104196.

83. Akada, H.; Wang, J.; Shimada, S.; Takahashi, M.; Theobalt, C.; Golyanik, V. UnrealEgo: A New Dataset for Robust Egocentric 3D Human Motion Capture. arXiv 2022, arXiv:2208.01633.

84. Begon, M.; Colloud, F.; Fohanno, V.; Bahuaud, P.; Monnet, T. Computation of the 3D kinematics in a global frame over a 40m-long pathway using a rolling motion analysis system. J. Biomech. 2009, 42, 2649–2653.

85. Wang, J.; Wang, S.; Wang, Y.; Hu, H.; Yu, J.; Zhao, X.; Liu, J.; Chen, X.; Li, Y. A data process of human knee joint kinematics obtained by motion-capture measurement. BMC Med. Inform. Decis. Mak. 2021, 21, 121.

86. Saini, N.; Price, E.; Tallamraju, R.; Enficiaud, R.; Ludwig, R.; Martinovic, I.; Ahmad, A.; Black, M.J. Markerless Outdoor Human Motion Capture Using Multiple Autonomous Micro Aerial Vehicles. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 823–832. Available online: https://openaccess.thecvf.com/content_ICCV_2019/html/Saini_Markerless_Outdoor_Human_Motion_Capture_Using_Multiple_Autonomous_Micro_Aerial_ICCV_2019_paper.html (accessed on 3 March 2023).

| Category | Subcategory | Application Example |
|---|---|---|
| Cinematography Capture | Active Marker | Landing Technique [20] |
| Cinematography Capture | Passive Marker | Gait Analysis [21] |
| Electromagnetic Capture Systems | GNSS | Soccer Player Kinematic Data Acquisition [22] |
| Electromagnetic Capture Systems | IMU | Motion Data Validation [23] |
| Electromagnetic Capture Systems | UWB | Tennis Player Positioning [24] |
| Electromagnetic Capture Systems | LPM | Youth Soccer Performance [25] |
| Computer Vision Capture | Single-Person, 2D | Gait Analysis [26] |
| Computer Vision Capture | Single-Person, 3D | Handball Action Analysis [27] |
| Computer Vision Capture | Multi-Person, Bottom-Up | Baseball Swing Assessment [28] |
| Computer Vision Capture | Multi-Person, Top-Down | Gait Analysis [29] |
| Other | Audio Modality | Activity Recognition [30] |
| Other | Radar Modality | Activity Recognition [31] |
| Other | Wi-Fi Modality | Cross-scene Action Recognition [32] |
| Other | Fusion Modality | Ski Racing Biomechanics [33] |

| Motion Capture Technology | Accuracy | Advantages | Constraints | Robustness | Repeatability | Reliability | Sports Scenarios | Sports Applications |
|---|---|---|---|---|---|---|---|---|
| Cinematography | High | High accuracy, suitable for complex movements | Limited capture volume, marker occlusion | Medium | High | High | Lab-based analysis, technique evaluation | Biomechanical analysis, technique optimization, injury prevention |
| Wearable Sensors | Medium | No marker occlusion, large capture volume, real-time tracking | Prone to electromagnetic interference, lower accuracy than optical systems | High | High | Medium to High | Indoor and outdoor training, competition monitoring | Real-time performance tracking, load monitoring, tactical analysis |
| Computer Vision | Medium to High | Markerless tracking, flexible setup | Requires line of sight, computationally intensive, sensitive to lighting conditions | Medium to High | High | Medium | Lab-based analysis, technique evaluation | Biomechanical analysis, technique optimization, movement pattern recognition |
| Others (e.g., Fusion Modality) | High | Integrating the advantages of multiple sensors | Sensor synchronization | High | High | High | Comprehensive performance analysis | Multifaceted performance assessment, injury risk prediction |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Suo, X.; Tang, W.; Li, Z. Motion Capture Technology in Sports Scenarios: A Survey. Sensors 2024, 24, 2947. https://doi.org/10.3390/s24092947
