Energy-Efficient Neuromorphic Architectures for Nuclear Radiation Detection Applications

Abstract

1. Introduction

2. Materials and Methods

3. Results

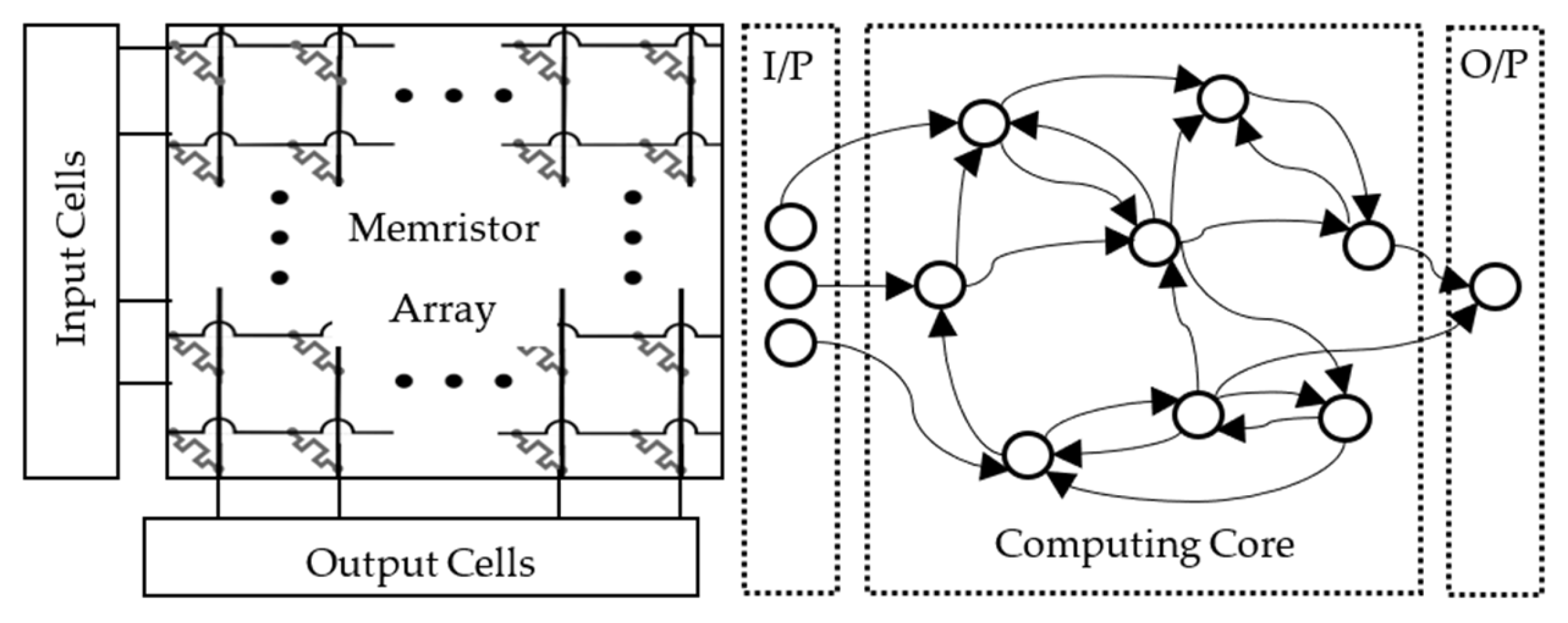

3.1. Analog Signal Architecture

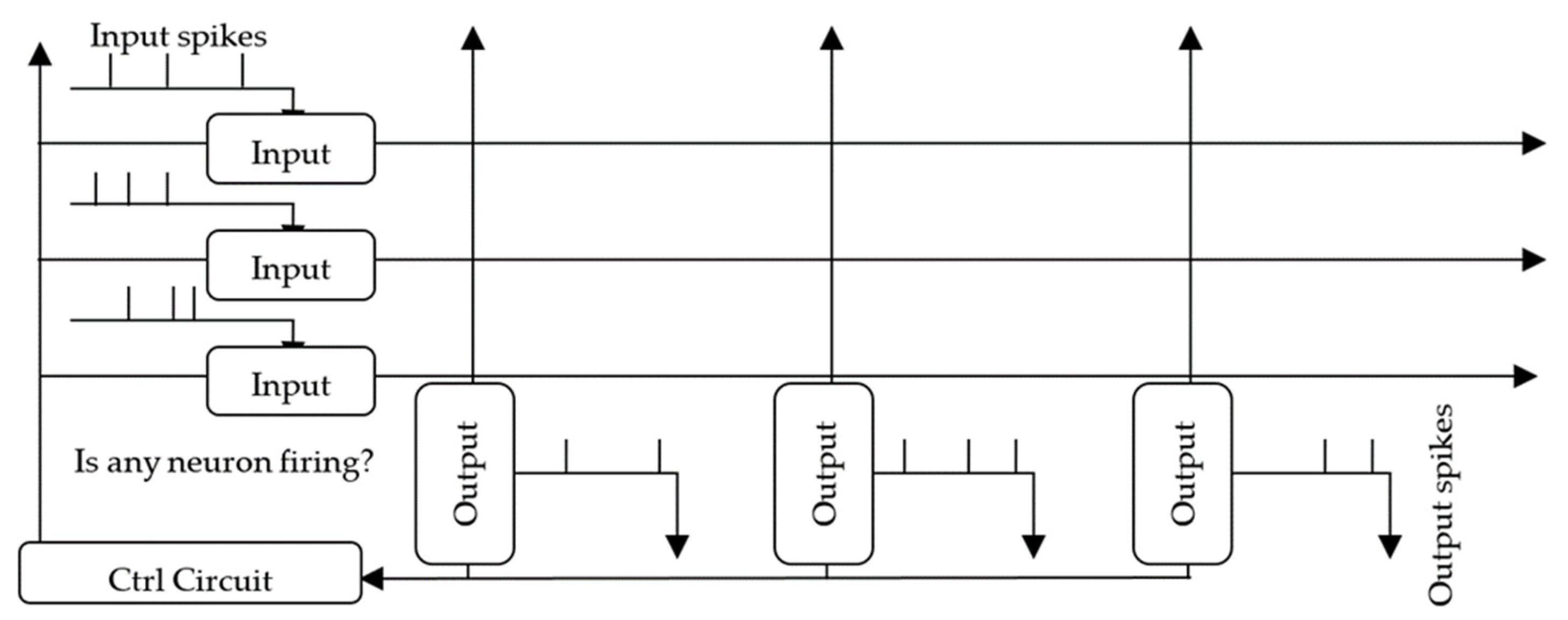

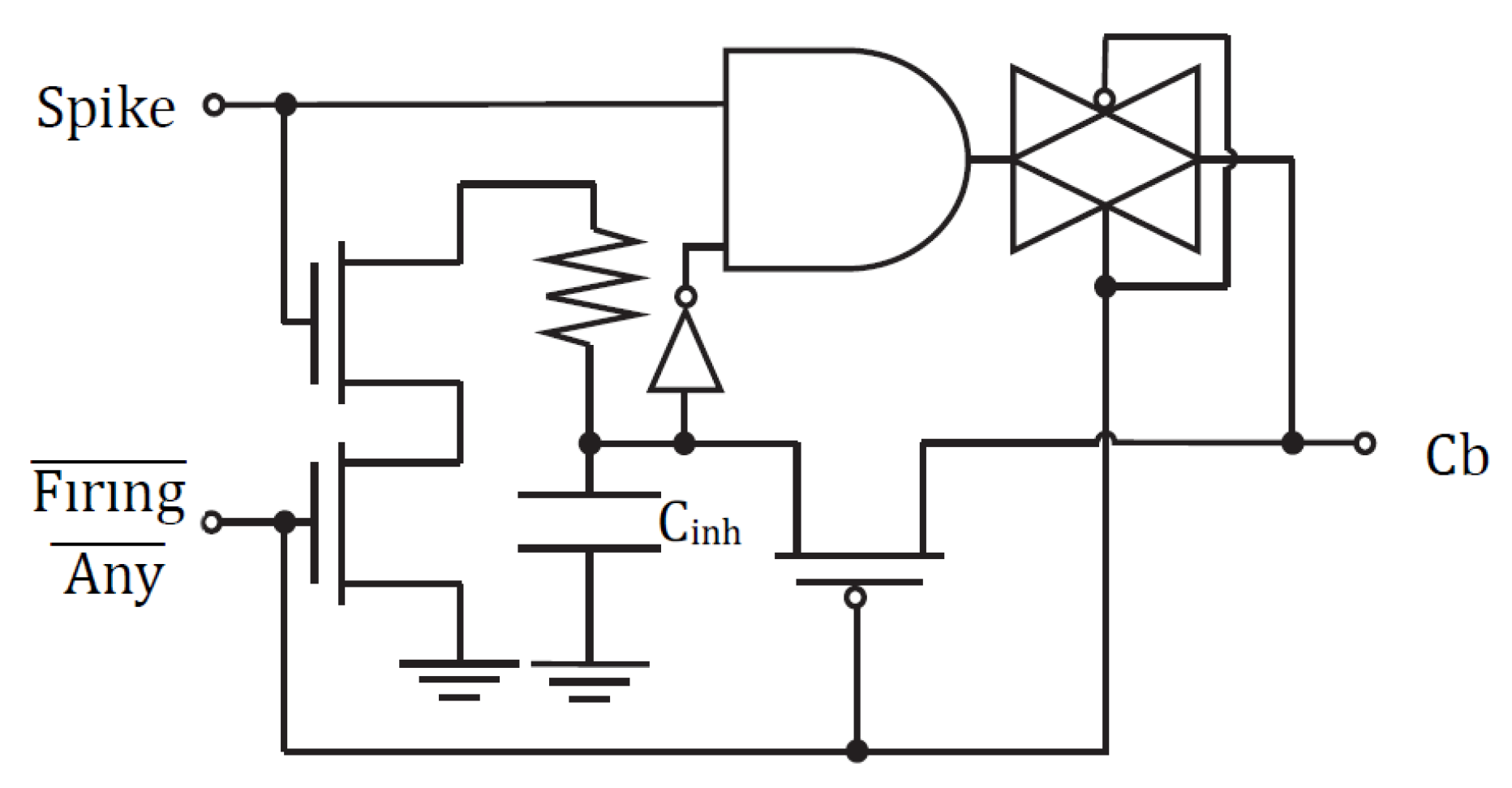

3.2. Mixed-Signal Spiking Architecture

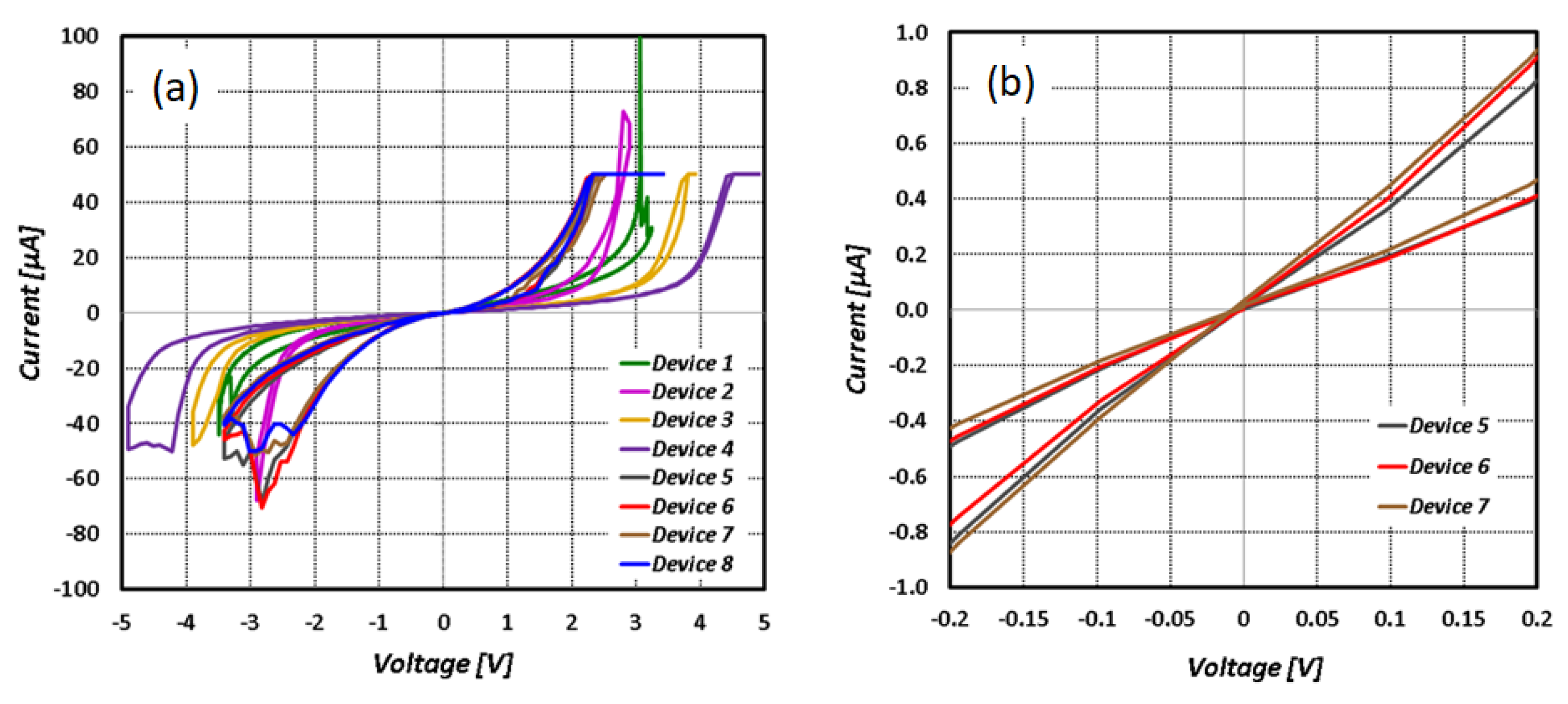

3.3. Al₂O₃/HfO₂ ReRAM Crossbar Array Fabrication

3.4. Characterization of Al₂O₃/HfO₂ ReRAM Devices

- Form Step: The voltage was increased in 0.25–0.5 V steps until a low-resistance path formed and the current reached a steady compliance level. A device was considered open if it failed to reach the compliance current even after the voltage had been raised to 10 V.

- Reset Step: A reverse-bias voltage was applied in 0.5 V decrements until the high-resistance state remained stable. The compliance current was disabled because the high-resistance state itself limits the current. However, a small risk of dielectric breakdown remained if the device failed to change state.

- Cycle Step: A compliance current was set, and the on/off voltages were adjusted to the device's switching values.
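
The Form Step above can be sketched as a simple voltage ramp. This is a minimal illustration under stated assumptions, not the authors' measurement script: `measure_current` stands in for whatever instrument call returns the device current, `toy_device` is a hypothetical device model, and the 100 µA compliance value is a placeholder. Only the step size (0.25–0.5 V) and the 10 V open-device limit come from the text. The sketch also checks a single reading per step, whereas the text refers to a steady-state compliance current.

```python
from typing import Callable, Optional

V_STEP = 0.5          # forming step in volts (the text gives 0.25-0.5 V)
V_MAX = 10.0          # text: device treated as open if compliance is not reached by 10 V
I_COMPLIANCE = 1e-4   # hypothetical compliance current in amperes (not from the text)


def form_device(measure_current: Callable[[float], float],
                v_step: float = V_STEP,
                v_max: float = V_MAX,
                i_compliance: float = I_COMPLIANCE) -> Optional[float]:
    """Ramp the voltage until the measured current reaches compliance.

    Returns the forming voltage, or None if the device is considered open.
    """
    n_steps = int(round(v_max / v_step))
    for k in range(1, n_steps + 1):
        v = k * v_step
        if measure_current(v) >= i_compliance:
            return v
    return None


def toy_device(v_form: float,
               i_compliance: float = I_COMPLIANCE) -> Callable[[float], float]:
    """Hypothetical device: tiny leakage below v_form, clamped at compliance above it."""
    def measure(v: float) -> float:
        return i_compliance if v >= v_form else 1e-9 * v
    return measure


good = form_device(toy_device(v_form=3.2))       # forms at the first step >= 3.2 V
open_dev = form_device(toy_device(v_form=12.0))  # never forms within 10 V
print(good, open_dev)
```

A Reset Step sketch would follow the same pattern with negative 0.5 V decrements and no compliance limit, stopping once the measured resistance stays in the high-resistance range.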

3.5. Programming the Radionuclide Detection Dictionary

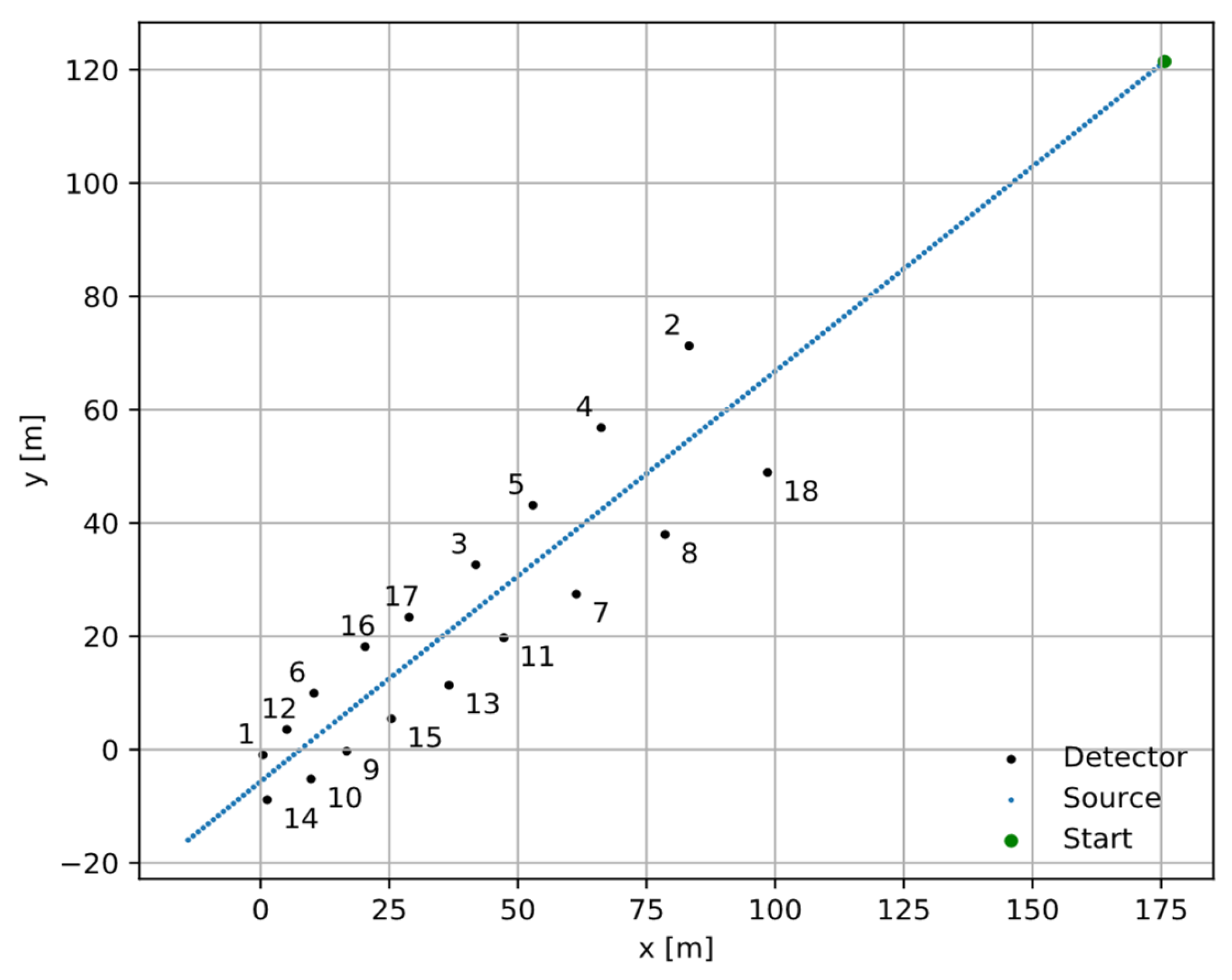

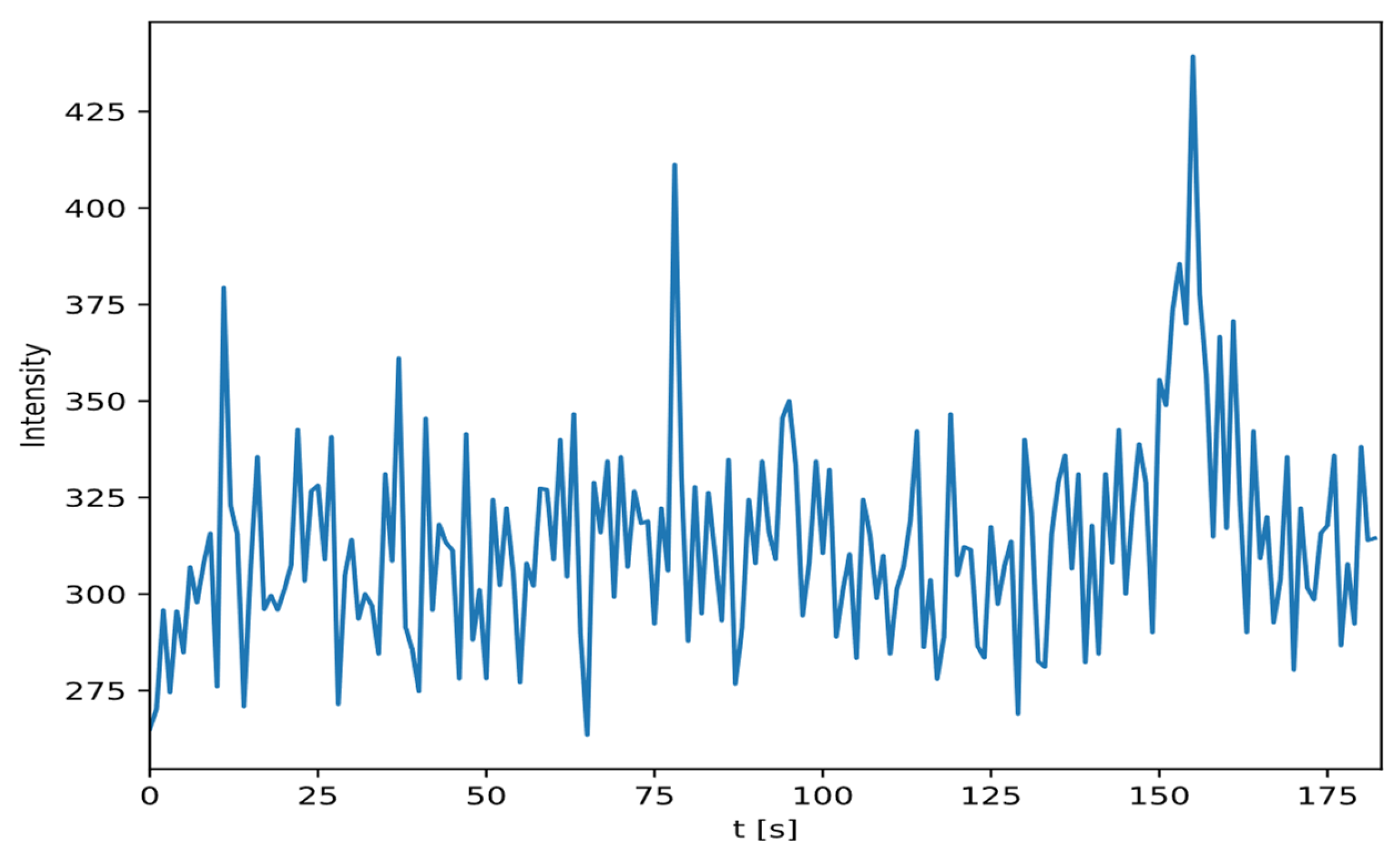

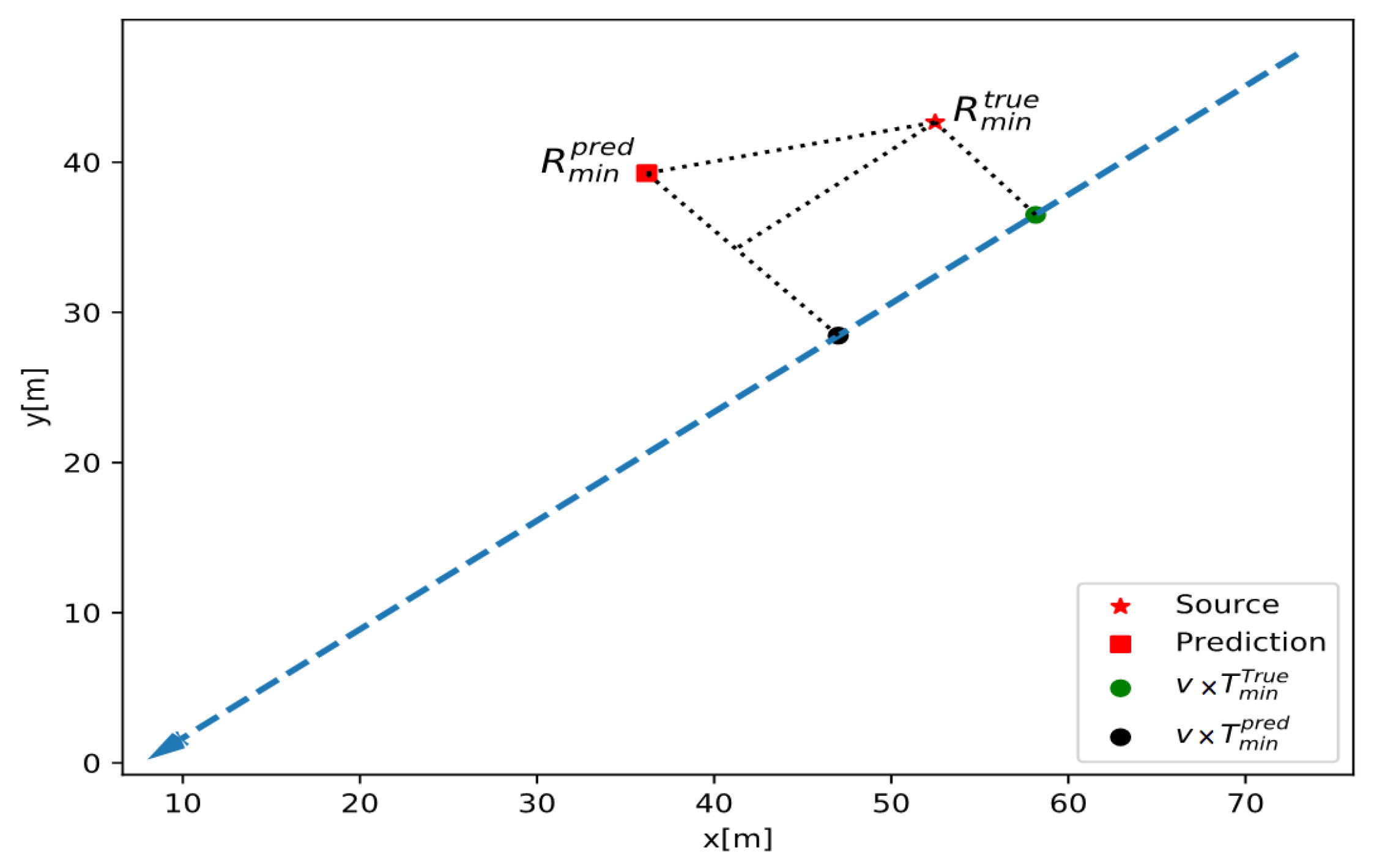

3.6. Localization Task Algorithm

4. Discussion

4.1. Radionuclide Classification

4.2. Radionuclide Localization

4.3. CMOS Neuron Energy Consumption per Spike

4.4. Overall System Energy Consumption

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Nuclide | Major γ-rays (keV) | Nuclide | Major γ-rays (keV) |
|---|---|---|---|
| Na-22 | 511, 1275 | Cr-51 | 320 |
| Mn-56 | 847, 1811, 2113 | Fe-59 | 1099, 1292 |
| Co-57 | 122, 136 | Co-60 | 1332, 1173 |
| Cu-64 | 511 | Ga-66 | 511, 1039, 2752 |
| Ga-67 | 93, 185, 300 | Ga-68 | 511, 1077 |
| Se-75 | 265, 136, 280 | Sr-85 | 514 |
| Ru-103 | 497, 610 | In-111 | 245, 171 |
| I-123 | 159 | I-131 | 364, 637, 284 |
| Cs-137 | 662 | Ba-133 | 356, 81, 303 |
| Ce-144 | 134 | Sm-153 | 103, 70 |
| Eu-152 | 122, 344, 1408 | Ho-166 | 81, 56 |
| Yb-169 | 51, 63, 57 | Ir-192 | 317, 468, 308 |
| Tl-201 | 71, 69, 80 | Bi-207 | 570, 1064, 75 |
| Am-241 | 60 | | |

| Closest Distance [m] | MCMC [m] | NN [m] |
|---|---|---|
| 3–5 (44 runs) | 2.41 | 3.02 |
| 5–7 (37 runs) | 3.66 | 4.48 |
| 7–9 (44 runs) | 5.35 | 5.75 |
| 9–11 (22 runs) | 7.80 | 7.58 |
| >11 (33 runs) | 11.05 | 9.73 |
| Average | 5.63 | 5.78 |
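
The Average row appears to be a mean weighted by the number of runs in each distance bin, not a plain mean of the five rows. That reading is inferred from the numbers and is not stated in the text. The short check below, using only values copied from the table, gives about 5.63 m for MCMC and about 5.77 m for NN. The small gap to the listed 5.78 m is plausibly due to rounding of the per-bin values.

```python
# (runs, MCMC error [m], NN error [m]) per closest-distance bin, copied from the table
bins = [
    (44, 2.41, 3.02),    # 3-5 m
    (37, 3.66, 4.48),    # 5-7 m
    (44, 5.35, 5.75),    # 7-9 m
    (22, 7.80, 7.58),    # 9-11 m
    (33, 11.05, 9.73),   # >11 m
]

total_runs = sum(runs for runs, _, _ in bins)

# Run-weighted means: each bin contributes in proportion to its number of runs
mcmc_avg = sum(runs * mcmc for runs, mcmc, _ in bins) / total_runs
nn_avg = sum(runs * nn for runs, _, nn in bins) / total_runs

print(f"runs={total_runs}  MCMC={mcmc_avg:.3f} m  NN={nn_avg:.3f} m")
```

By comparison, the unweighted means of the five rows are noticeably higher for both methods, which is why the weighted interpretation fits the table better.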

| Presynaptic Neuron Phase | Presynaptic Energy per Spike (pJ) | Postsynaptic Neuron Phase | Postsynaptic Energy per Spike (pJ) |
|---|---|---|---|
| Accumulation | 1.1 | Accumulation | 21.8 |
| Idle | negligible | Idle | 3.5 |
| Firing | 17.2 | Firing | 140.6 |
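
As a rough illustration of how the per-phase figures might combine, the sketch below adds the three phases for each neuron type. It assumes that one spike cycle passes through each phase exactly once and that the "negligible" idle energy can be treated as 0 pJ. Both are assumptions made here for illustration and are not stated in the table.

```python
# Energy per phase in pJ, copied from the table.
# Assumption: "negligible" presynaptic idle energy is approximated as 0.0 pJ.
presynaptic = {"accumulation": 1.1, "idle": 0.0, "firing": 17.2}
postsynaptic = {"accumulation": 21.8, "idle": 3.5, "firing": 140.6}


def energy_per_spike_cycle(phases: dict) -> float:
    """Sum the phase energies, assuming each phase occurs once per spike (an assumption)."""
    return sum(phases.values())


pre_total = energy_per_spike_cycle(presynaptic)
post_total = energy_per_spike_cycle(postsynaptic)

# Fraction of the postsynaptic total spent in the firing phase
post_firing_share = postsynaptic["firing"] / post_total

print(f"pre={pre_total:.1f} pJ  post={post_total:.1f} pJ  "
      f"post firing share={post_firing_share:.0%}")
```

Under these assumptions the firing phase dominates the budget for both neuron types, so any reduction in firing energy would have the largest effect on the per-spike total.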

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Canales-Verdial, J.I.; Wagner, J.R.; Schmucker, L.A.; Wetzel, M.; Proctor, P.; Carson, M.; Meng, J.; Withers, N.J.; Harris, C.T.; Nogan, J.J.; et al. Energy-Efficient Neuromorphic Architectures for Nuclear Radiation Detection Applications. Sensors 2024, 24, 2144. https://doi.org/10.3390/s24072144

APA Style: Canales-Verdial, J. I., Wagner, J. R., Schmucker, L. A., Wetzel, M., Proctor, P., Carson, M., Meng, J., Withers, N. J., Harris, C. T., Nogan, J. J., Webb, D. B., Hecht, A. A., Teuscher, C., Osiński, M., & Zarkesh-Ha, P. (2024). Energy-Efficient Neuromorphic Architectures for Nuclear Radiation Detection Applications. Sensors, 24(7), 2144. https://doi.org/10.3390/s24072144