Convolutional Neural Networks for Raw Signal Classification in CNC Turning Process Monitoring

Abstract

1. Introduction

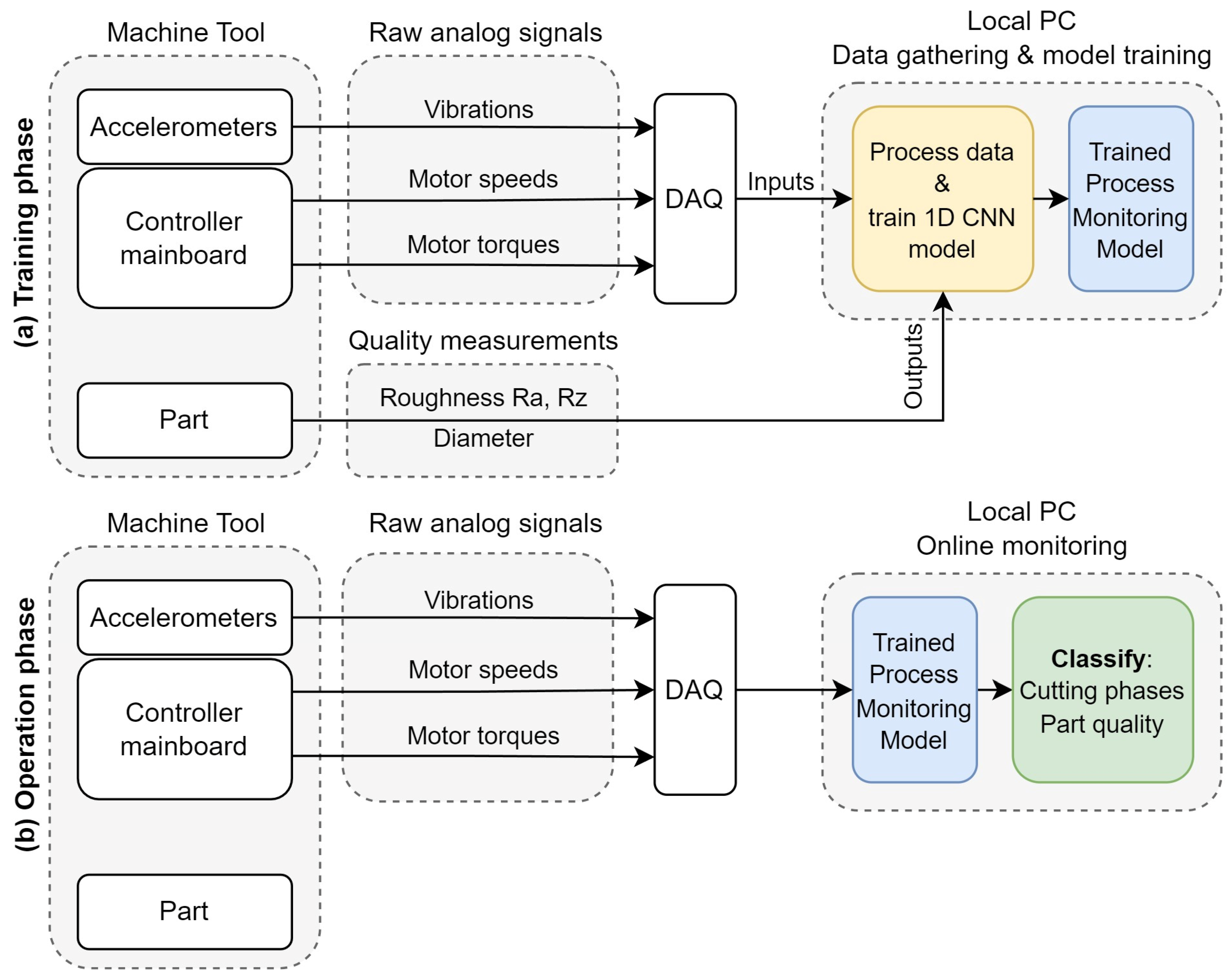

2. Experimental Setup

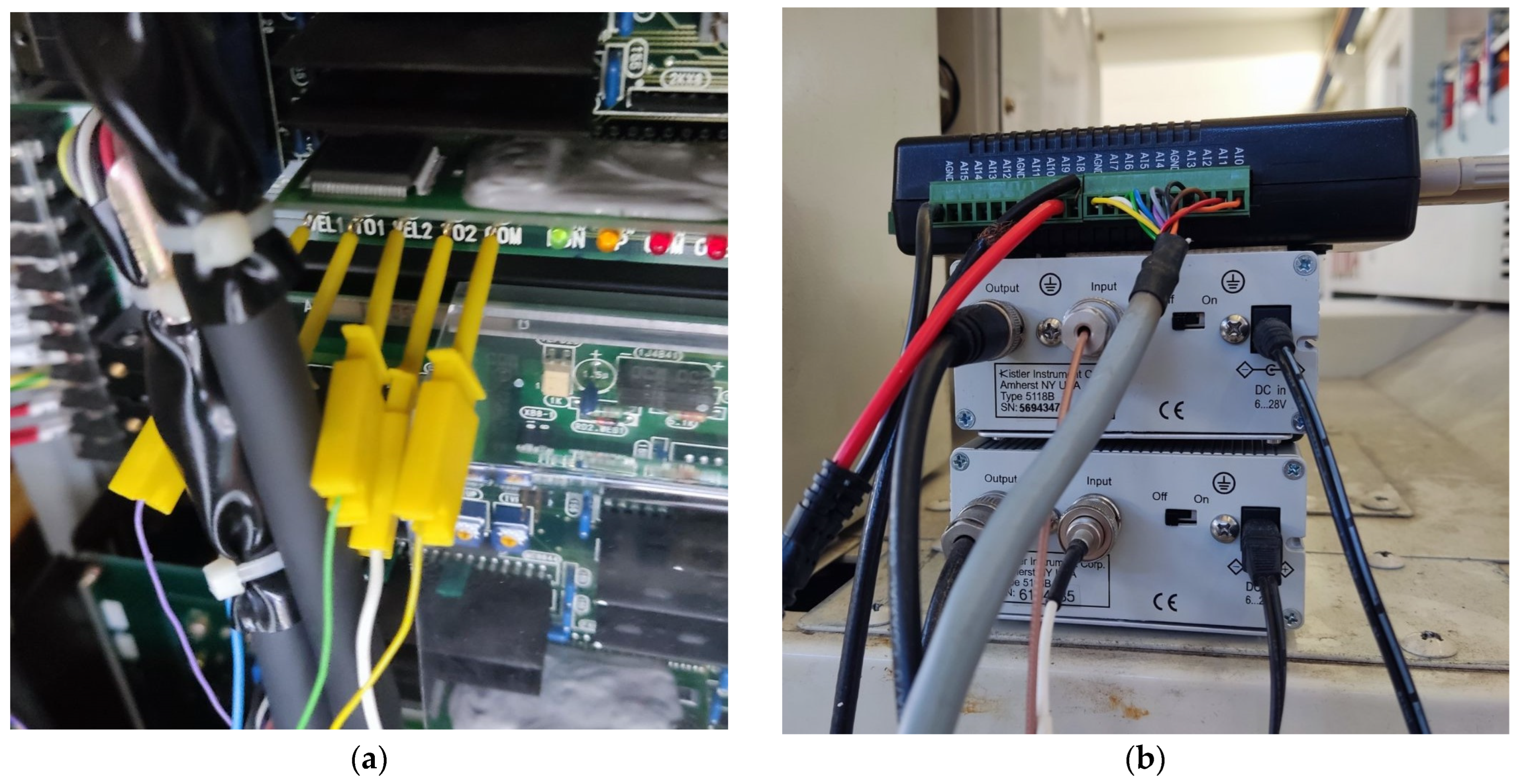

2.1. Machine Tool and Instrumentation

2.2. Experimental Procedure

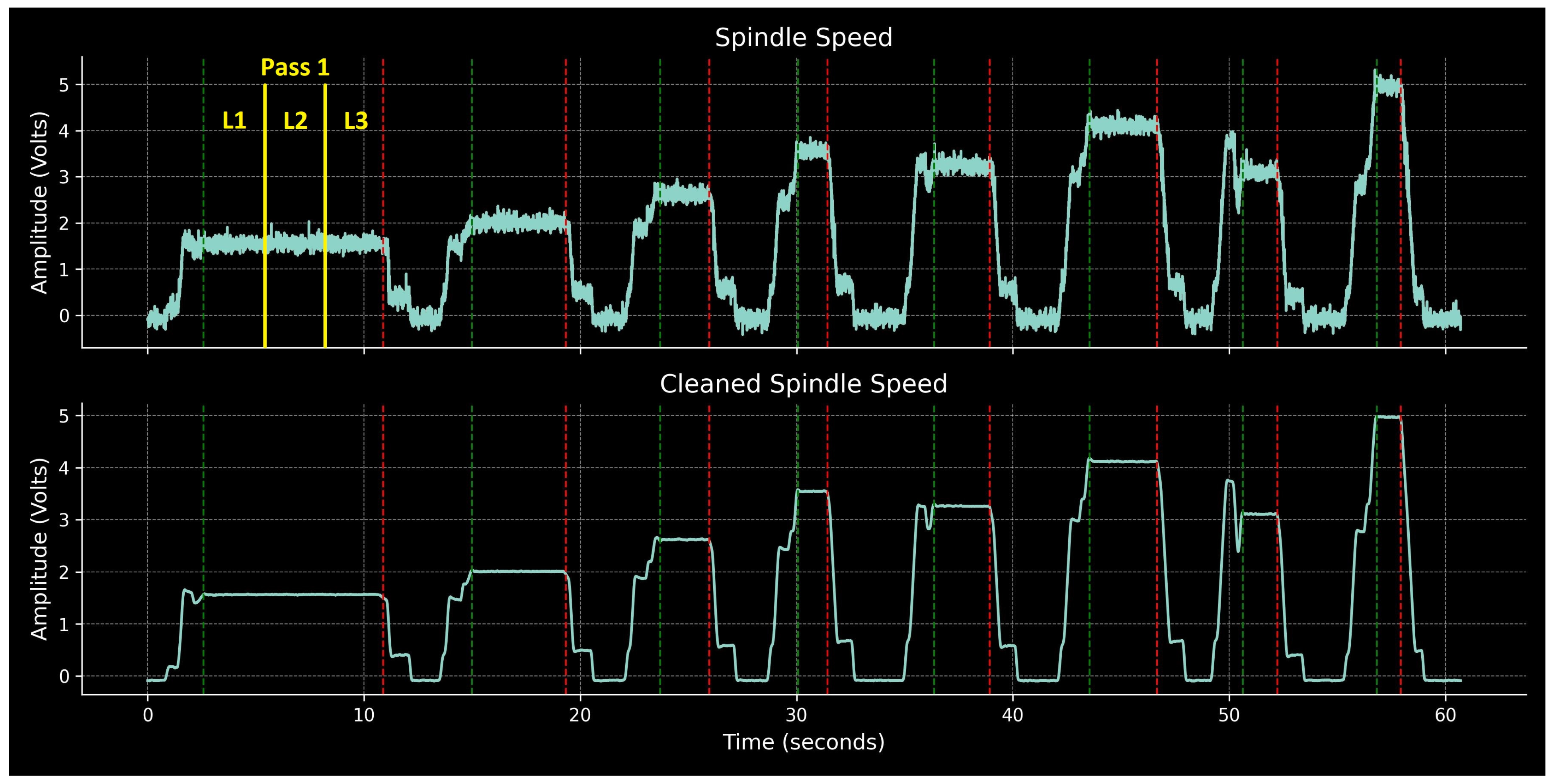

2.3. Mapping Signals to Quality Measurements

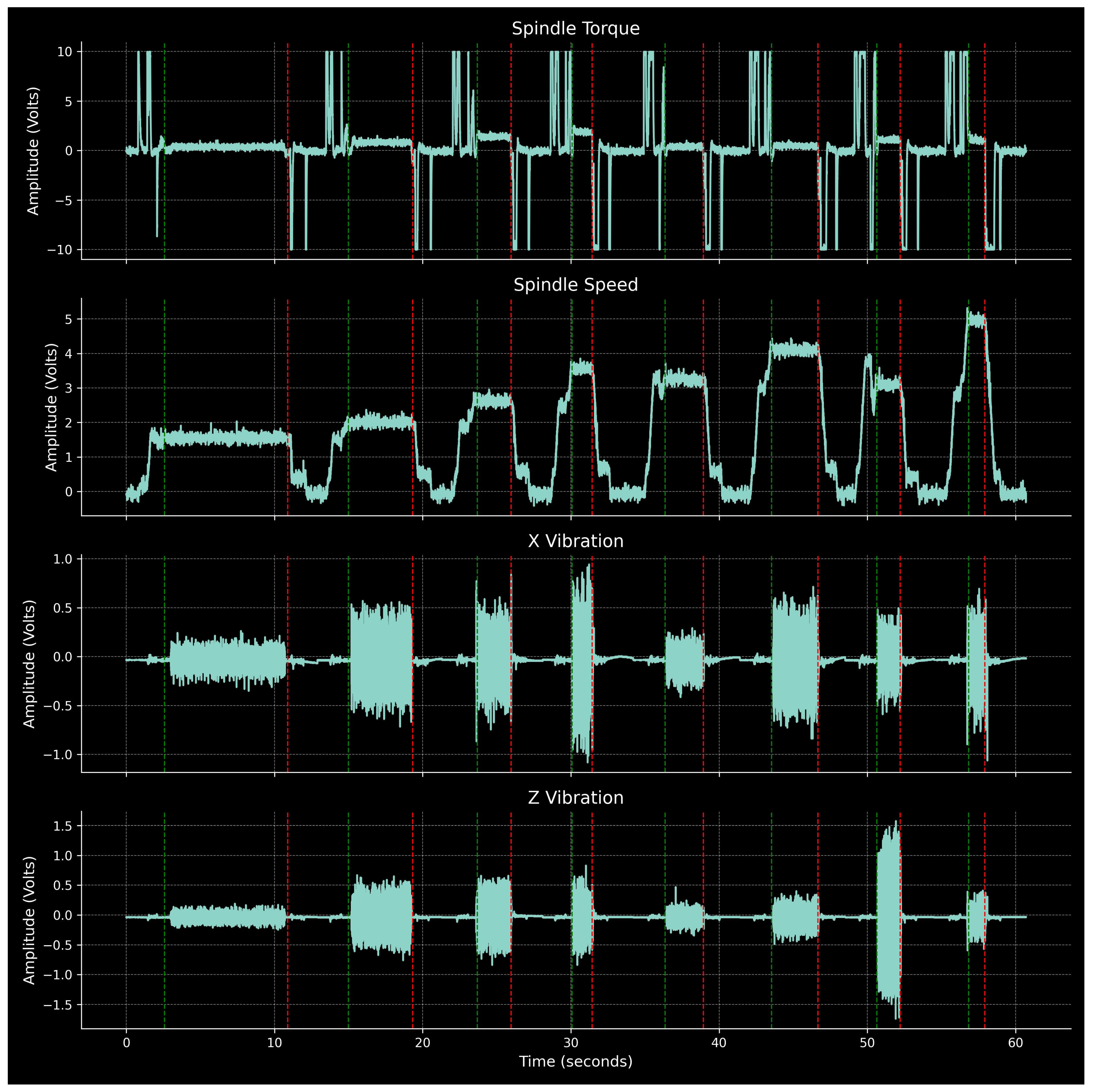

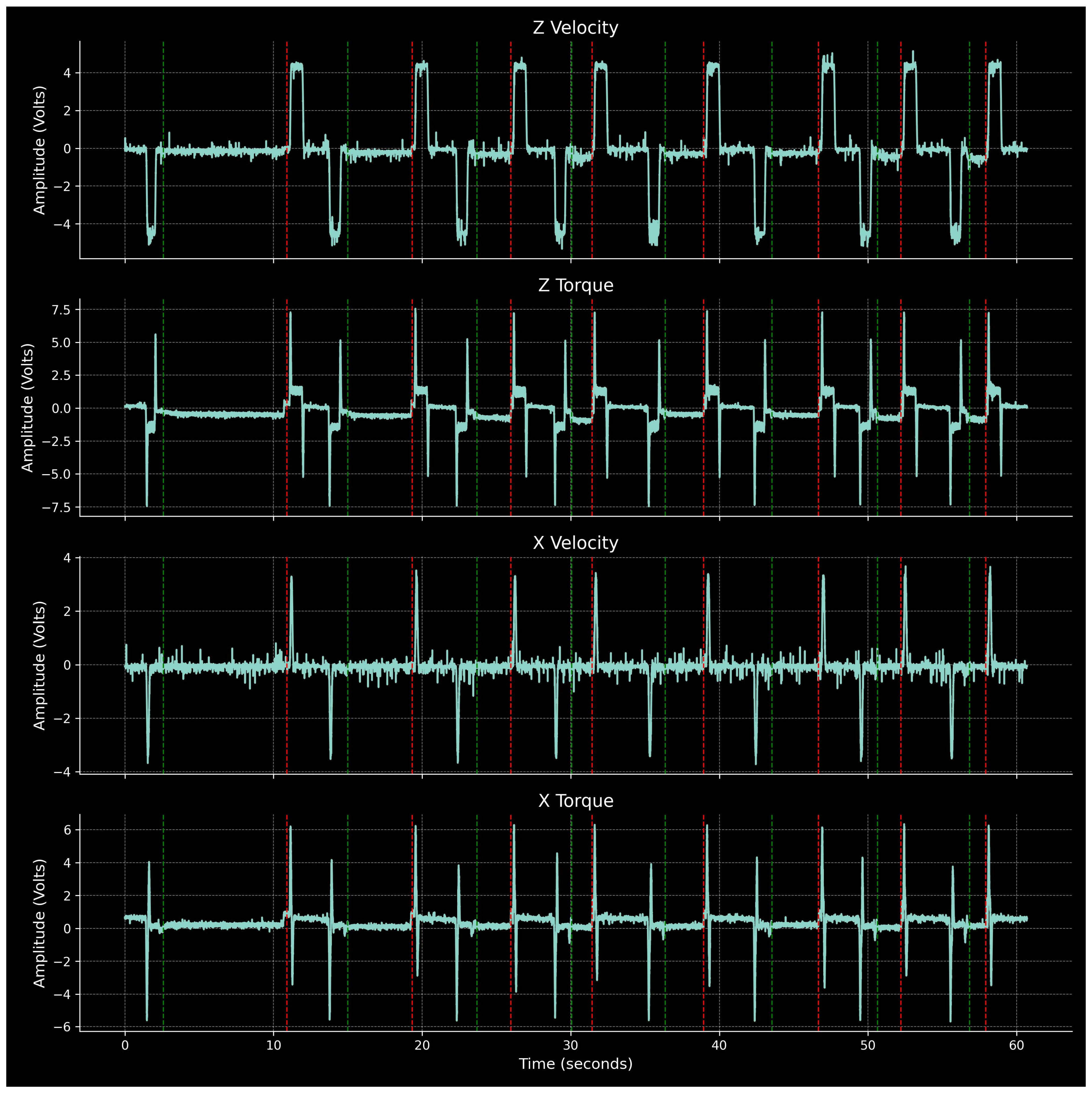

2.4. Process Signals

2.5. Quality Measurements

3. Neural Network Design

3.1. Data Preparation for Neural Network Training

3.2. Neural Network Architecture

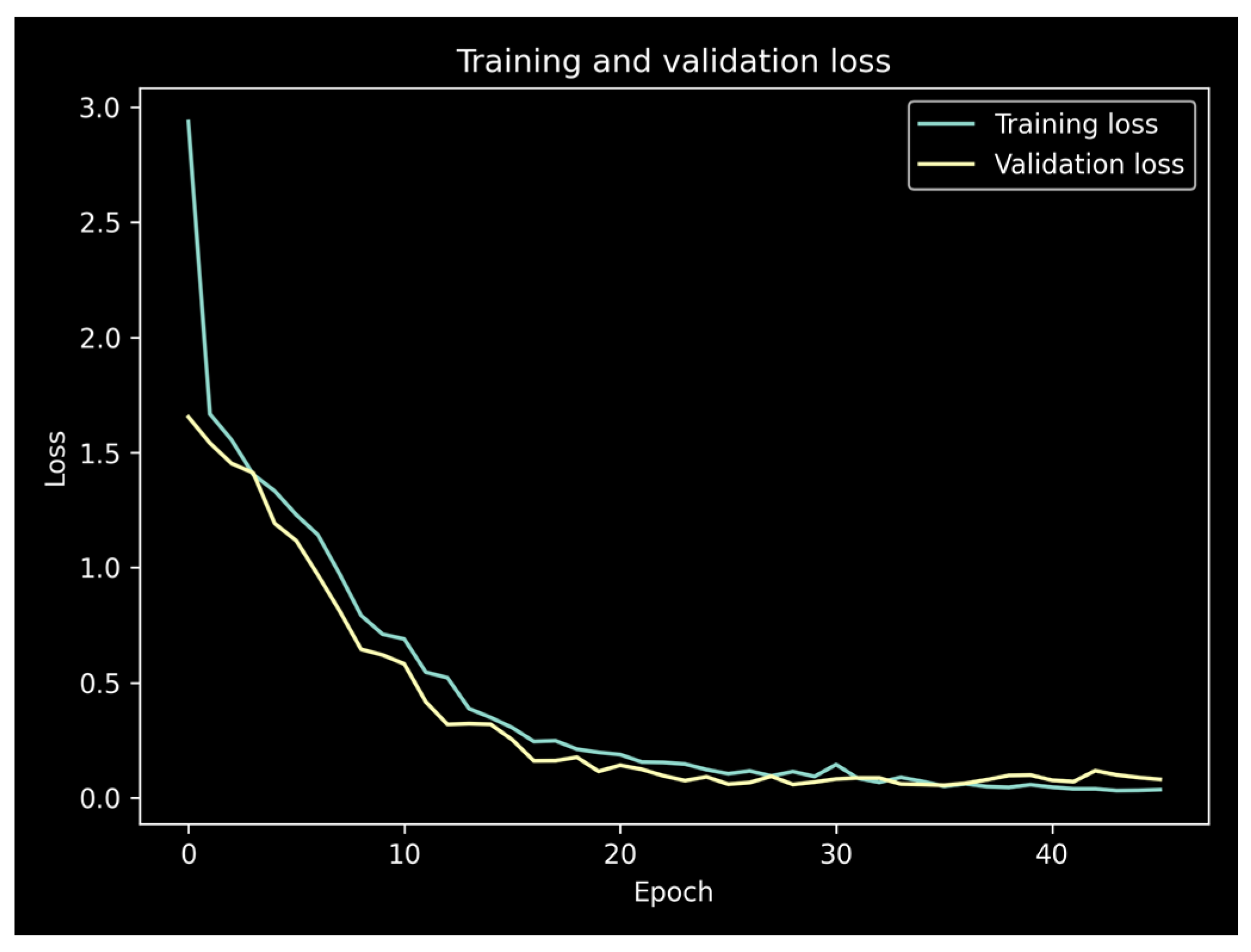

3.3. Model Training Parameters

4. Results and Discussion

4.1. K-Fold Cross Validation

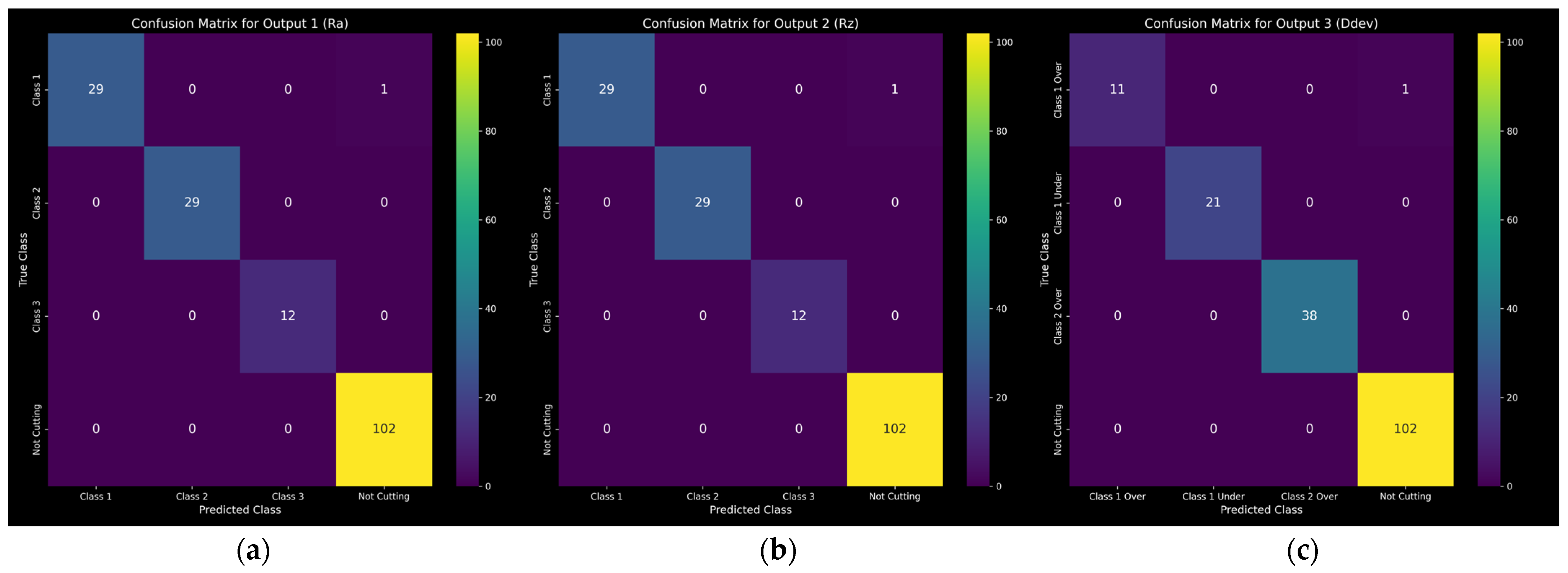

4.2. Detailed Performance Metrics

4.3. Sliding Window Parameters

4.4. Reduced Input

4.5. Alternative Definition of Classes

4.6. Datastream Simulation

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


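| DAQ channel | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|
| Axis | Spindle | Spindle | Z | Z | X | X | X | Z |
| Signal (V) | Torque | Speed | Velocity | Torque | Velocity | Torque | Vibration | Vibration |
| Source | Machine controller | Machine controller | Machine controller | Machine controller | Machine controller | Machine controller | Accelerometer | Accelerometer |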

| Experiment No | Workpiece Id | Depth of Cut (mm) | Feed (mm/rev) | Cutting Speed (m/min) | Final Diameter (mm) | Spindle Speed (RPM) |
|---|---|---|---|---|---|---|
| 1 | Part 1 | 0.5 | 0.2 | 160 | 31 | 1642.89 |
| 2 | Part 1 | 1 | 0.3 | 190 | 29 | 2085.48 |
| 3 | Part 1 | 1.5 | 0.4 | 220 | 26 | 2693.39 |
| 4 | Part 1 | 2 | 0.5 | 250 | 22 | 3617.16 |
| 5 | Part 1 | 0.5 | 0.3 | 220 | 21 | 3334.67 |
| 6 | Part 1 | 1 | 0.2 | 250 | 19 | 4188.29 |
| 7 | Part 1 | 1.5 | 0.5 | 160 | 16 | 3183.1 |
| 8 | Part 1 | 2 | 0.4 | 190 | 12 | 5039.91 |
| 9 | Part 2 | 0.5 | 0.4 | 250 | 31 | 2567.02 |
| 10 | Part 2 | 1 | 0.5 | 220 | 29 | 2414.76 |
| 11 | Part 2 | 1.5 | 0.2 | 190 | 26 | 2326.11 |
| 12 | Part 2 | 2 | 0.3 | 160 | 22 | 2314.98 |
| 13 | Part 2 | 0.5 | 0.5 | 190 | 21 | 2879.95 |
| 14 | Part 2 | 1 | 0.4 | 160 | 19 | 2680.5 |
| 15 | Part 2 | 1.5 | 0.3 | 250 | 16 | 4973.59 |
| 16 | Part 2 | 2 | 0.2 | 220 | 12 | 5835.68 |
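The spindle speeds in the table follow from the cutting speed and the final (machined) diameter through N = 1000 v_c / (π D), with v_c in m/min and D in mm, and consecutive final diameters of each part drop by twice the depth of cut. The short Python check below recomputes both relations from the tabulated values. The 32 mm starting diameter is an assumption inferred from the first pass (31 mm + 2 × 0.5 mm); it is not stated with the table.

```python
import math

# (experiment no, depth of cut a_p [mm], cutting speed v_c [m/min],
#  final diameter D [mm], reported spindle speed [RPM]) copied from the table.
# Rows 1-8 are Part 1, rows 9-16 are Part 2.
EXPERIMENTS = [
    (1, 0.5, 160, 31, 1642.89), (2, 1.0, 190, 29, 2085.48),
    (3, 1.5, 220, 26, 2693.39), (4, 2.0, 250, 22, 3617.16),
    (5, 0.5, 220, 21, 3334.67), (6, 1.0, 250, 19, 4188.29),
    (7, 1.5, 160, 16, 3183.10), (8, 2.0, 190, 12, 5039.91),
    (9, 0.5, 250, 31, 2567.02), (10, 1.0, 220, 29, 2414.76),
    (11, 1.5, 190, 26, 2326.11), (12, 2.0, 160, 22, 2314.98),
    (13, 0.5, 190, 21, 2879.95), (14, 1.0, 160, 19, 2680.50),
    (15, 1.5, 250, 16, 4973.59), (16, 2.0, 220, 12, 5835.68),
]


def spindle_speed_rpm(v_c_m_per_min, diameter_mm):
    """N = 1000 * v_c / (pi * D), with v_c in m/min and D in mm."""
    return 1000.0 * v_c_m_per_min / (math.pi * diameter_mm)


def check_table(start_diameter_mm=32.0):
    """True if every diameter step and reported RPM is consistent.

    start_diameter_mm is an assumed stock diameter (inferred, not stated).
    """
    ok = True
    for part_rows in (EXPERIMENTS[:8], EXPERIMENTS[8:]):
        d_prev = start_diameter_mm
        for _, a_p, v_c, d_final, rpm in part_rows:
            # A radial depth of cut a_p removes 2 * a_p from the diameter.
            ok &= math.isclose(d_prev - 2 * a_p, d_final)
            # Reported speeds are rounded to two decimals.
            ok &= abs(spindle_speed_rpm(v_c, d_final) - rpm) < 0.01
            d_prev = d_final
    return ok


print(check_table())  # True
```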

| Flatness Threshold | Minimum Segment Length | Amplitude Cutoff |
|---|---|---|
| 0.03 | 500 ms | 0.5 V |
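The three segmentation parameters are listed without their definitions alongside the table. A minimal sketch under one assumed reading: a block of samples counts as steady cutting when its mean magnitude exceeds the amplitude cutoff and its relative standard deviation stays below the flatness threshold, and only runs lasting at least the minimum segment length are kept. The block size, the sampling rate `fs_hz` and the function name are hypothetical; this is an illustration, not the authors' procedure.

```python
import statistics


def find_steady_segments(x, fs_hz, flatness=0.03, min_len_s=0.5,
                         amp_cutoff=0.5, block=10):
    """Return (start, end) sample indices of runs that look like steady cutting.

    Hypothetical rule (not taken from the paper): a block of `block` samples is
    steady when its mean magnitude exceeds `amp_cutoff` (V) and its relative
    standard deviation, std / |mean|, is below `flatness`. Consecutive steady
    blocks are merged and runs shorter than `min_len_s` seconds are dropped.
    """
    min_len = int(min_len_s * fs_hz)
    n_blocks = len(x) // block
    segments, start = [], None
    # One extra iteration (b == n_blocks) closes a run that reaches the end.
    for b in range(n_blocks + 1):
        steady = False
        if b < n_blocks:
            chunk = x[b * block:(b + 1) * block]
            mu = statistics.fmean(chunk)
            # Short-circuit avoids dividing by a near-zero mean.
            steady = (abs(mu) > amp_cutoff
                      and statistics.pstdev(chunk) / abs(mu) < flatness)
        if steady and start is None:
            start = b * block
        elif not steady and start is not None:
            if b * block - start >= min_len:
                segments.append((start, b * block))
            start = None
    return segments


# 1 kHz sampling is assumed purely for the example: a 1000-sample plateau is
# kept, a 300-sample (0.3 s) burst is discarded.
signal = [0.0] * 200 + [2.0] * 1000 + [0.0] * 300 + [2.0] * 300 + [0.0] * 100
print(find_steady_segments(signal, fs_hz=1000))  # [(200, 1200)]
```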

| Metric | Label | Range (μm) |
|---|---|---|
| Roughness Ra | Class 1 | [0, 2.5) |
| Roughness Ra | Class 2 | [2.5, 4.5) |
| Roughness Ra | Class 3 | [4.5, 10] |
| Roughness Rz | Class 1 | [0, 10) |
| Roughness Rz | Class 2 | [10, 16) |
| Roughness Rz | Class 3 | [16, 20] |
| Diameter deviation Ddev | Class 1 Under | [−20, 0) |
| Diameter deviation Ddev | Class 1 Over | [0, 20) |
| Diameter deviation Ddev | Class 2 Over | [20, 80] |
| All metrics | Not Cutting | N/A |
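The class table amounts to a binning rule on each measured quality value. A sketch, assuming the half-open intervals as written and a closed upper bound for the last class of each metric; `quality_label` and its `cutting` flag are hypothetical names, and how a window is judged to be Not Cutting lies outside this sketch.

```python
import bisect

# Class edges in micrometres, copied from the table. Every class is [low, high)
# except the last one, which also includes its upper bound.
CLASS_EDGES = {
    "Ra": ([0.0, 2.5, 4.5, 10.0], ["Class 1", "Class 2", "Class 3"]),
    "Rz": ([0.0, 10.0, 16.0, 20.0], ["Class 1", "Class 2", "Class 3"]),
    "Ddev": ([-20.0, 0.0, 20.0, 80.0],
             ["Class 1 Under", "Class 1 Over", "Class 2 Over"]),
}


def quality_label(metric, value, cutting=True):
    """Map a measured Ra, Rz or Ddev value (um) to its class label."""
    if not cutting:
        return "Not Cutting"
    edges, labels = CLASS_EDGES[metric]
    if not edges[0] <= value <= edges[-1]:
        raise ValueError(f"{metric}={value} outside [{edges[0]}, {edges[-1]}]")
    # bisect_right sends a value lying on an inner edge to the upper class.
    idx = bisect.bisect_right(edges, value) - 1
    # The top edge belongs to the last class (closed upper bound).
    return labels[min(idx, len(labels) - 1)]


print(quality_label("Ra", 2.5), quality_label("Ddev", -5.0))  # Class 2 Class 1 Under
```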

| Step Size (Samples) | Number of Data Points |
|---|---|
| 100 | 1153 |
| 250 | 461 |
| 400 | 289 |
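Each row of this table follows from how many full windows fit into the recorded signal. For one segment of n samples, window w and step s there are ⌊(n − w)/s⌋ + 1 windows, so the total is roughly inversely proportional to the step: 1153 × 100, 461 × 250 and 289 × 400 are all close to 1.15 × 10^5 samples. The sketch below assumes windows never cross a segment boundary; the segment lengths in the usage line are made up, since the actual ones are not given here.

```python
def sliding_windows(n_samples, window, step):
    """Start indices of every full window of `window` samples, advanced by `step`."""
    if n_samples < window:
        return []
    return list(range(0, n_samples - window + 1, step))


def count_windows(segment_lengths, window, step):
    """Total windows when each recorded segment is windowed separately."""
    return sum(len(sliding_windows(n, window, step)) for n in segment_lengths)


# Hypothetical segment lengths in samples.
segments = [1000, 450, 700]
print(count_windows(segments, window=500, step=100))  # 6 + 0 + 3 = 9
```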

| Layer | Parameters |
|---|---|
| Conv1 | kernel 30, filters 64, stride 1 |
| MaxPool1 | kernel 2, stride 1 |
| Conv2 | kernel 3, filters 128, stride 1 |
| MaxPool2 | kernel 2, stride 1 |
| Flatten | - |
| Dense | size 100 |
| Dropout | rate 0.2 |
| Output ×3 | 3 × size 4 |
| Total trainable parameters | ~1.5 M |
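The stated total of about 1.5 M trainable parameters can be checked layer by layer. The sketch assumes 'valid' (unpadded) layers, the 500-sample window from the training parameters and eight input signals (DAQ channels 2 to 9). Under those assumptions it yields 1,526,240 parameters only if each max-pooling layer halves the sequence (stride 2, which is also the Keras default for `MaxPooling1D` when `strides` is left unset); with the tabulated pooling stride of 1 the Dense layer alone would hold almost 6 M weights. Nearly all parameters sit in the Flatten-to-Dense connection, which is why the pooling stride dominates the count.

```python
def out_len(length, kernel, stride=1):
    """Output length of a 'valid' (unpadded) 1D convolution or pooling layer."""
    return (length - kernel) // stride + 1


def count_params(window=500, channels=8, pool_stride=2, heads=3, classes=4):
    """Trainable parameters of the tabulated network under stated assumptions."""
    length = out_len(window, 30)                 # Conv1: kernel 30, 64 filters
    conv1 = 30 * channels * 64 + 64              # weights + biases
    length = out_len(length, 2, pool_stride)     # MaxPool1: no weights
    length = out_len(length, 3)                  # Conv2: kernel 3, 128 filters
    conv2 = 3 * 64 * 128 + 128
    length = out_len(length, 2, pool_stride)     # MaxPool2: no weights
    dense = length * 128 * 100 + 100             # Flatten -> Dense(100)
    outputs = heads * (100 * classes + classes)  # Dropout has no weights
    return conv1 + conv2 + dense + outputs


print(count_params())               # 1526240 (pooling stride 2)
print(count_params(pool_stride=1))  # 6019040 (pooling stride 1, as tabulated)
```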

| Parameter | Value |
|---|---|
| Window size | 500 samples |
| Step size | 100 samples |
| Optimizer | Adam |
| Learning rate | 0.0005 |
| Loss | Categorical cross-entropy |
| Batch size | 128 |
| Max epochs | 100 |
| Validation split | 0.15 of training |
| Patience | 10 epochs |
| Min delta | 0.001 |
| Test split | 0.15 of total |
| Split random state | 42 |
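The train/test counts reported in the step-size and window-size tables are consistent with a 15% test share rounded up, which is how scikit-learn's `train_test_split` sizes the test set when `test_size` is a float (the use of that library is an assumption here). For instance, 1161 windows give ⌈0.15 × 1161⌉ = 175 test and 986 training windows, and the 1153 windows listed for a 100-sample step give 173 test windows, matching the total support of the detailed metrics table. The helper name is hypothetical.

```python
def split_sizes(n_samples, test_pct=15):
    """(train, test) sizes with the test share rounded up.

    Mirrors the rule n_test = ceil(test_size * n) for a float test_size.
    Integer arithmetic, -(-a // b) == ceil(a / b), avoids rounding surprises.
    """
    n_test = -(-n_samples * test_pct // 100)
    return n_samples - n_test, n_test


for n in (1161, 577, 231, 1153):
    print(n, split_sizes(n))
# 1161 (986, 175) / 577 (490, 87) / 231 (196, 35) / 1153 (980, 173)
```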

| Metric (F1 macro average) | Fold 1 | Fold 2 | Fold 3 | Fold 4 | Fold 5 | Fold 6 | Fold 7 | Fold 8 | Fold 9 | Fold 10 | Average (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Ra | 0.94 | 0.97 | 0.98 | 1.00 | 0.97 | 0.98 | 0.99 | 0.95 | 1.00 | 0.98 | 97.76 |
| Rz | 0.98 | 0.97 | 0.97 | 1.00 | 0.96 | 0.98 | 1.00 | 0.97 | 1.00 | 0.99 | 98.34 |
| Ddev | 0.97 | 0.97 | 1.00 | 0.96 | 0.98 | 1.00 | 0.99 | 0.97 | 1.00 | 1.00 | 98.46 |
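The per-fold scores come from a 10-fold cross validation. A minimal sketch of the index bookkeeping, assuming one shuffle with a fixed seed followed by contiguous folds whose sizes differ by at most one (the same scheme as scikit-learn's `KFold(n_splits=10, shuffle=True)`, though with a different random generator); the seed and fold assignment actually used are not given here. Note also that the Average column is in percent while the fold columns are fractions: the mean of the rounded Ra fold scores is 0.976, which agrees with the reported 97.76% to within the ±0.005 rounding of each fold value.

```python
import random


def kfold_indices(n_samples, k=10, seed=42):
    """Yield (train, test) index lists: one shuffle, then k contiguous folds.

    The first n_samples % k folds get one extra sample, so fold sizes differ
    by at most one.
    """
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        test = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, test
        start += size


# Ra fold scores from the table (F1 macro average, rounded to two decimals).
RA_FOLDS = [0.94, 0.97, 0.98, 1.00, 0.97, 0.98, 0.99, 0.95, 1.00, 0.98]
print(round(sum(RA_FOLDS) / len(RA_FOLDS), 3))  # 0.976
```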

| Metric | Label | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|---|
| Ra | Class 1 | 1.00 | 0.97 | 0.98 | 30 |
| Ra | Class 2 | 1.00 | 1.00 | 1.00 | 29 |
| Ra | Class 3 | 1.00 | 1.00 | 1.00 | 12 |
| Ra | Not Cutting | 0.99 | 1.00 | 1.00 | 102 |
| Rz | Class 1 | 1.00 | 0.97 | 0.98 | 30 |
| Rz | Class 2 | 1.00 | 1.00 | 1.00 | 29 |
| Rz | Class 3 | 1.00 | 1.00 | 1.00 | 12 |
| Rz | Not Cutting | 0.99 | 1.00 | 1.00 | 102 |
| Ddev | Class 1 Under | 1.00 | 1.00 | 1.00 | 21 |
| Ddev | Class 1 Over | 1.00 | 0.92 | 0.96 | 12 |
| Ddev | Class 2 Over | 1.00 | 1.00 | 1.00 | 38 |
| Ddev | Not Cutting | 0.99 | 1.00 | 1.00 | 102 |
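Macro and weighted averages differ only in how per-class F1 scores are combined: the macro average gives every class equal weight, while the weighted average weights each class by its support. The sketch below recomputes a per-class F1 from precision and recall and averages the Ddev rows. Because Not Cutting accounts for 102 of the 173 test windows (about 59%), a weighted score leans heavily on that class; this is consistent with the class-count table, where weighted F1 stays between 0.91 and 0.97 at 7 + 1 and 9 + 1 classes while macro F1 drops to 0.64 to 0.78.

```python
def f1(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def macro_and_weighted(f1_scores, supports):
    """Macro: plain mean over classes. Weighted: mean weighted by class support."""
    macro = sum(f1_scores) / len(f1_scores)
    weighted = sum(f * s for f, s in zip(f1_scores, supports)) / sum(supports)
    return macro, weighted


# Ddev rows: Class 1 Under, Class 1 Over, Class 2 Over, Not Cutting.
ddev_f1 = [1.00, round(f1(1.00, 0.92), 2), 1.00, 1.00]
ddev_support = [21, 12, 38, 102]
macro, weighted = macro_and_weighted(ddev_f1, ddev_support)
print(round(macro, 3), round(weighted, 3))  # 0.99 0.997
```

Since the tabulated precision and recall are themselves rounded, an F1 recomputed from them can differ from the table in the last digit.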

| Step Size (samples) | F1 Weighted Average (Ra) | F1 Weighted Average (Rz) | F1 Weighted Average (Ddev) | Data Points (Train/Test) |
|---|---|---|---|---|
| 200 | 0.99 | 1.00 | 0.99 | 490/87 |
| 300 | 0.97 | 0.97 | 0.95 | 327/58 |
| 400 | 1.00 | 0.98 | 0.92 | 245/44 |
| 500 | 0.91 | 0.91 | 0.85 | 196/35 |

| Window Size (samples) | F1 Macro Average (Ra) | F1 Macro Average (Rz) | F1 Macro Average (Ddev) | Data Points (Train/Test) |
|---|---|---|---|---|
| 100 | 0.98 | 0.98 | 0.98 | 986/175 |
| 200 | 0.98 | 0.98 | 0.98 | 985/174 |
| 400 | 0.97 | 0.98 | 0.98 | 981/174 |
| 600 | 0.99 | 1.00 | 0.99 | 978/173 |
| 800 | 1.00 | 1.00 | 0.99 | 974/173 |
| 1000 | 0.97 | 0.97 | 0.99 | 971/172 |

| Subset of Channels | F1 Macro Average (Ra) | F1 Macro Average (Rz) | F1 Macro Average (Ddev) |
|---|---|---|---|
| Only vibrations | 0.80 | 0.76 | 0.71 |
| Only torques | 0.94 | 0.94 | 0.96 |
| Only velocities | 0.99 | 0.99 | 0.93 |
| Only Z velocity | 0.89 | 0.90 | 0.79 |
| Z velocity and all torques | 0.99 | 0.99 | 0.98 |
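The reduced-input experiments amount to selecting columns of the eight-channel input. A sketch that derives the subsets from the DAQ channel table; `select_channels` and `column_positions` are hypothetical helpers, and the column order (channels 2 to 9 in sequence) is an assumption. Whether "Only velocities" also includes the spindle speed signal (channel 3) is not stated with the table, so the example keeps it separate.

```python
# Channel map copied from the DAQ table: channel -> (axis, signal).
DAQ_CHANNELS = {
    2: ("Spindle", "Torque"), 3: ("Spindle", "Speed"),
    4: ("Z", "Velocity"), 5: ("Z", "Torque"),
    6: ("X", "Velocity"), 7: ("X", "Torque"),
    8: ("X", "Vibration"), 9: ("Z", "Vibration"),
}


def select_channels(signals, axes=None):
    """DAQ channels whose signal type is in `signals` (and axis in `axes`, if given)."""
    return sorted(ch for ch, (axis, signal) in DAQ_CHANNELS.items()
                  if signal in signals and (axes is None or axis in axes))


def column_positions(channels, first_channel=2):
    """Array columns, assuming the input stores channels 2..9 in order."""
    return [ch - first_channel for ch in channels]


z_vel_and_torques = sorted(select_channels({"Torque"})
                           + select_channels({"Velocity"}, {"Z"}))
print(z_vel_and_torques, column_positions(z_vel_and_torques))  # [2, 4, 5, 7] [0, 2, 3, 5]
```

Only the input depth of the first convolution changes when a subset is used; the rest of the network is unaffected.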

| Number of Equally Distributed Classes | F1 Macro Average (Ra) | F1 Macro Average (Rz) | F1 Macro Average (Ddev) | F1 Weighted Average (Ra) | F1 Weighted Average (Rz) | F1 Weighted Average (Ddev) |
|---|---|---|---|---|---|---|
| 2 + 1 | 0.97 | 0.99 | 0.98 | 0.98 | 0.99 | 0.99 |
| 3 + 1 | 0.91 | 0.98 | 0.97 | 0.95 | 0.99 | 0.98 |
| 5 + 1 | 0.79 | 0.89 | 0.98 | 0.94 | 0.96 | 0.99 |
| 7 + 1 | 0.74 | 0.69 | 0.64 | 0.97 | 0.94 | 0.91 |
| 9 + 1 | 0.78 | 0.72 | 0.71 | 0.95 | 0.95 | 0.94 |
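How the class boundaries for the "equally distributed" variants were chosen is not specified alongside this table. One reading, assumed here for illustration only, is equal-frequency binning: each quality metric is cut at its quantiles so that every class holds roughly the same number of measurements, while Not Cutting remains the extra "+1" class. The sketch uses Python's `statistics.quantiles`; the helper names and the data are hypothetical, and equal-width bins would be an equally plausible alternative.

```python
import bisect
import statistics


def equal_frequency_edges(values, n_classes):
    """n_classes - 1 cut points that split `values` into groups of similar size."""
    return statistics.quantiles(values, n=n_classes)


def assign_classes(values, edges):
    """Class index 0..len(edges) for each value (a value on an edge goes up)."""
    return [bisect.bisect_right(edges, v) for v in values]


# Hypothetical measurements; the "+1" class (Not Cutting) is handled separately.
ra = [float(v) for v in range(1, 13)]
edges = equal_frequency_edges(ra, 3)
labels = assign_classes(ra, edges)
print([labels.count(c) for c in range(3)])  # [4, 4, 4]
```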


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Stathatos, E.; Tzimas, E.; Benardos, P.; Vosniakos, G.-C. Convolutional Neural Networks for Raw Signal Classification in CNC Turning Process Monitoring. Sensors 2024, 24, 1390. https://doi.org/10.3390/s24051390
