Proposal and Implementation of a Procedure for Compliance Recognition of Objects with Smart Tactile Sensors

Abstract

1. Introduction

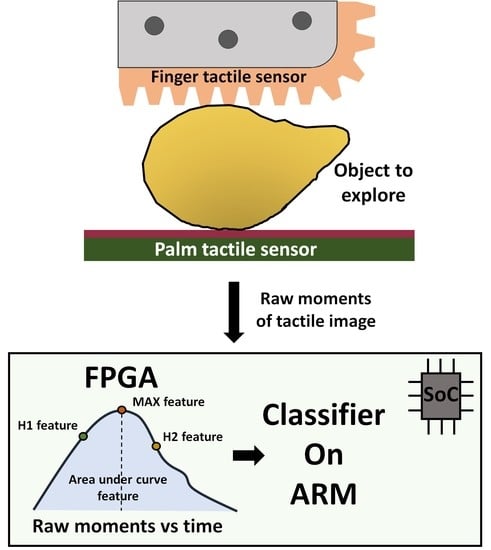

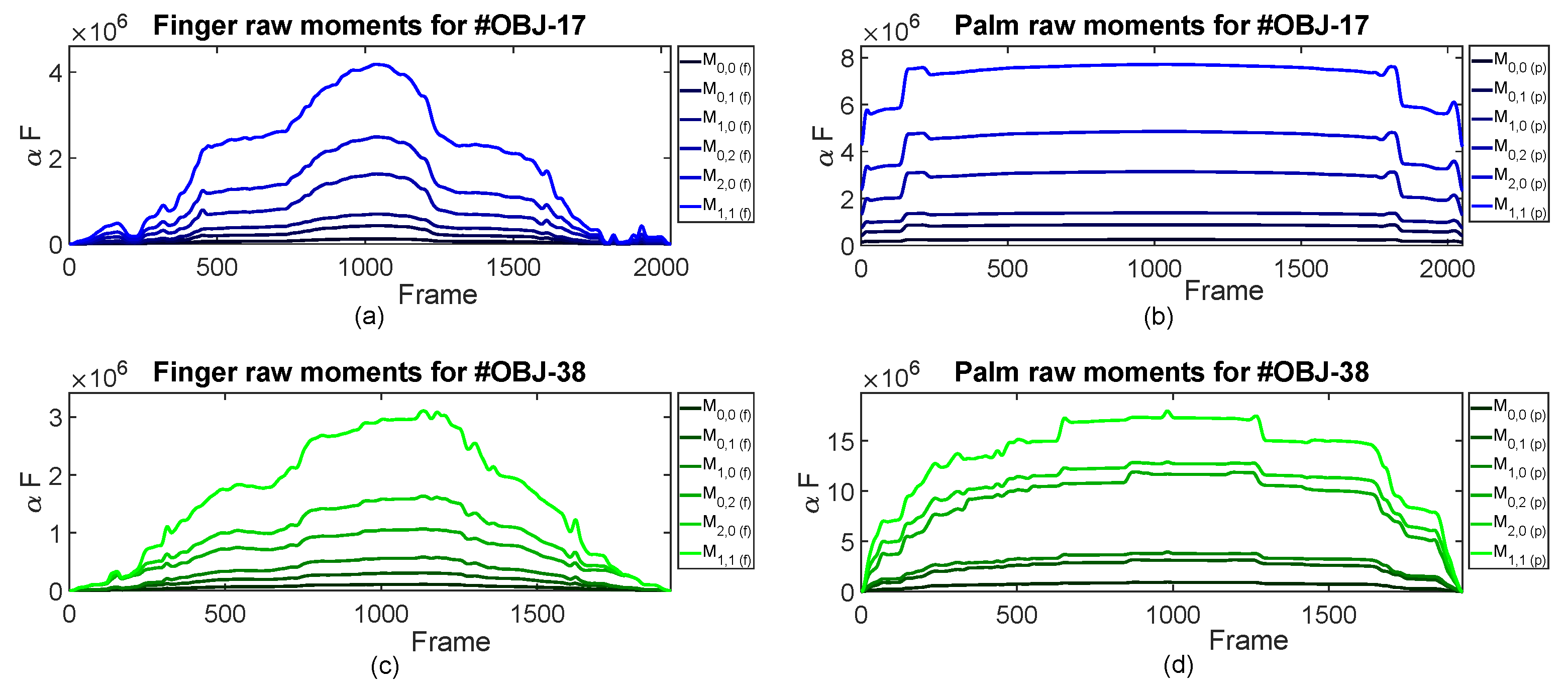

2. Proposed Features for Classification

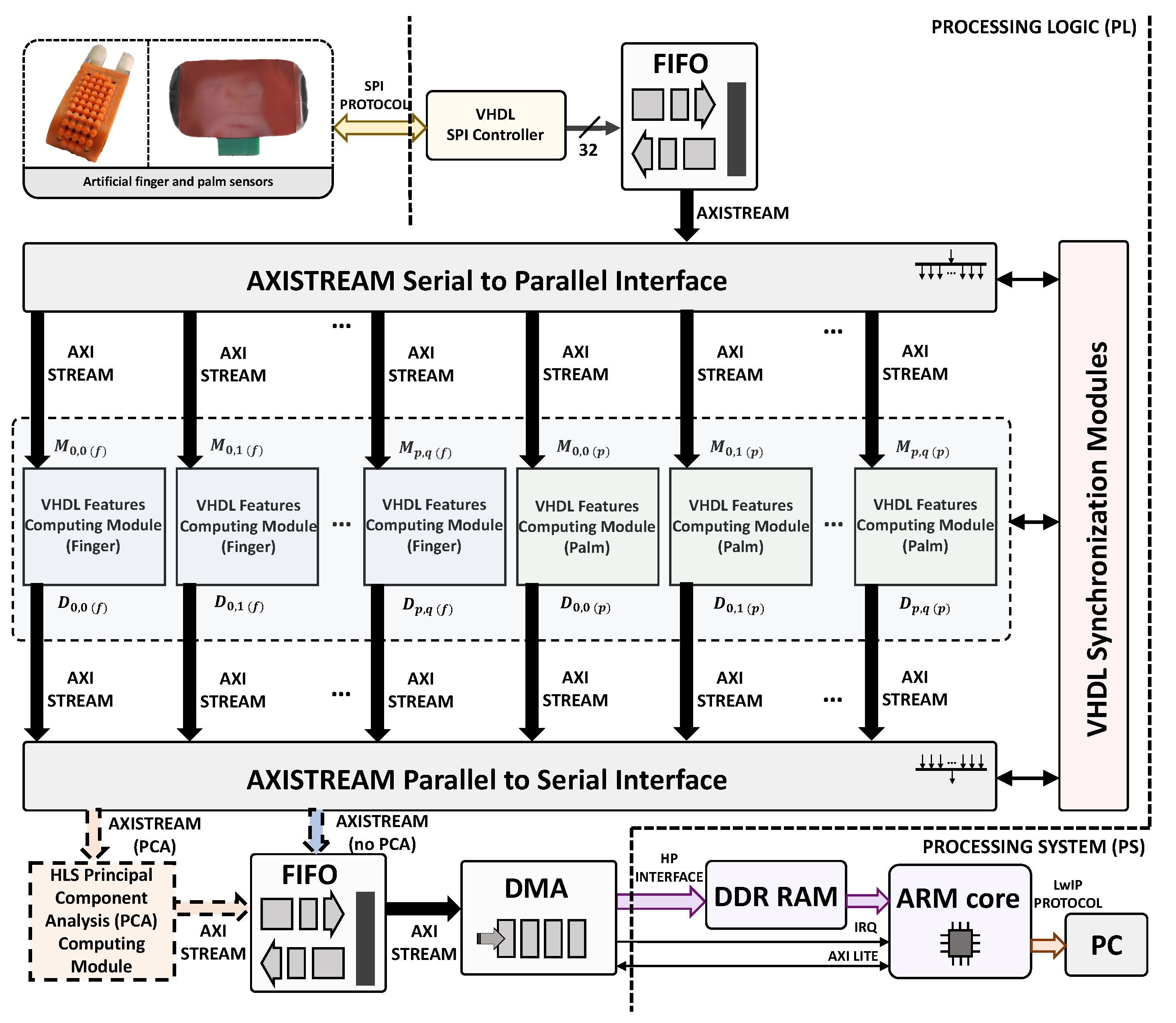

3. Materials and Methods

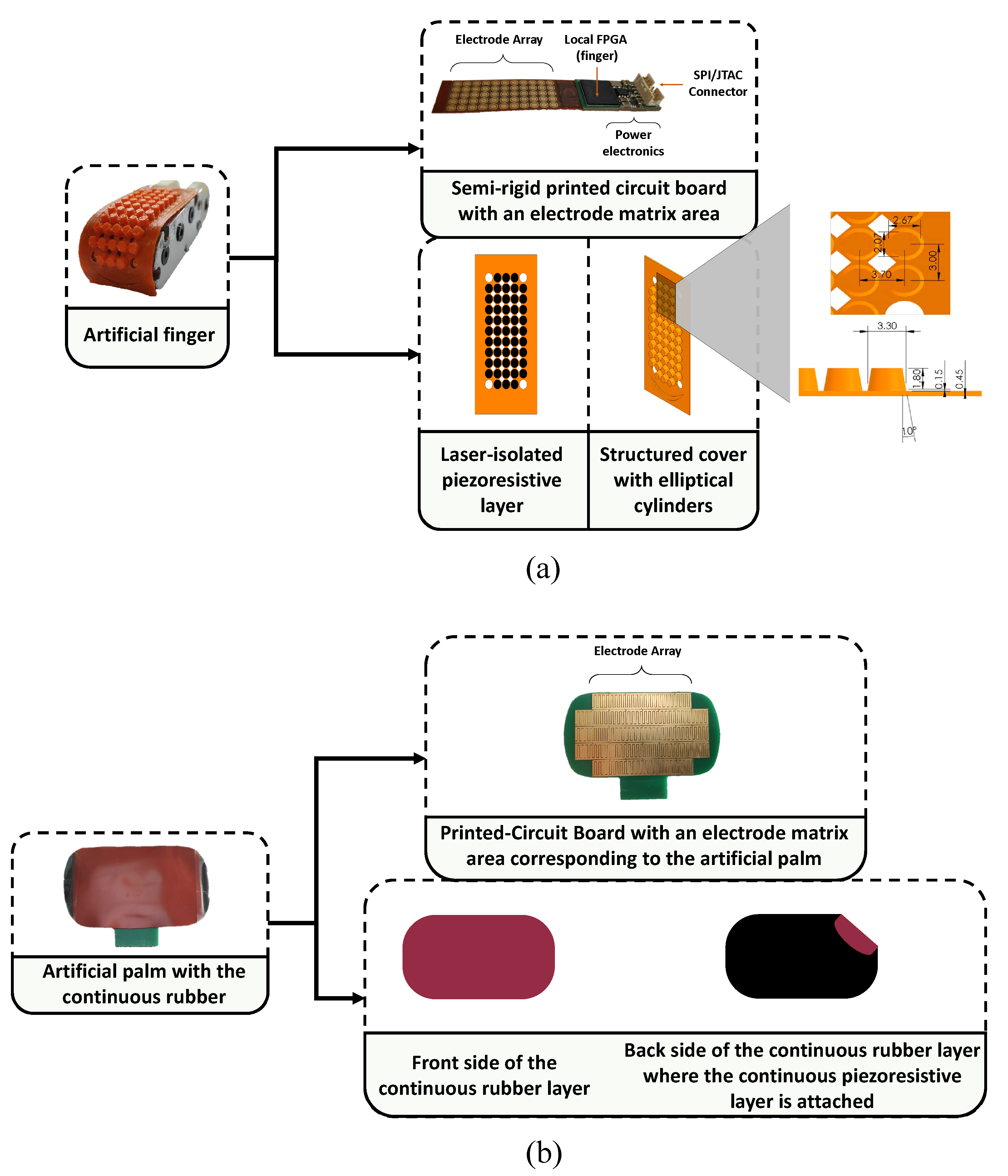

3.1. Sensors Technology

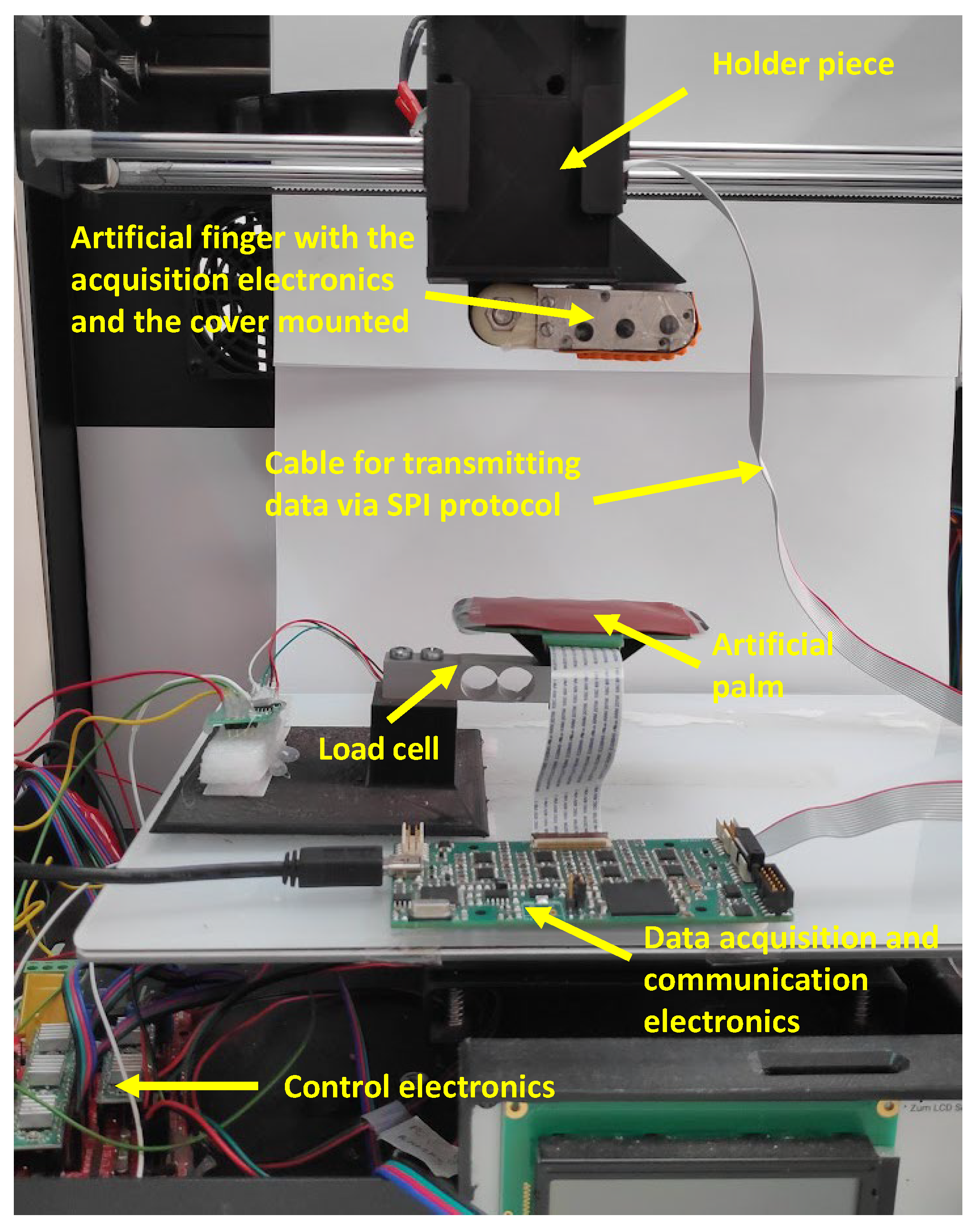

3.2. Experimental Setup

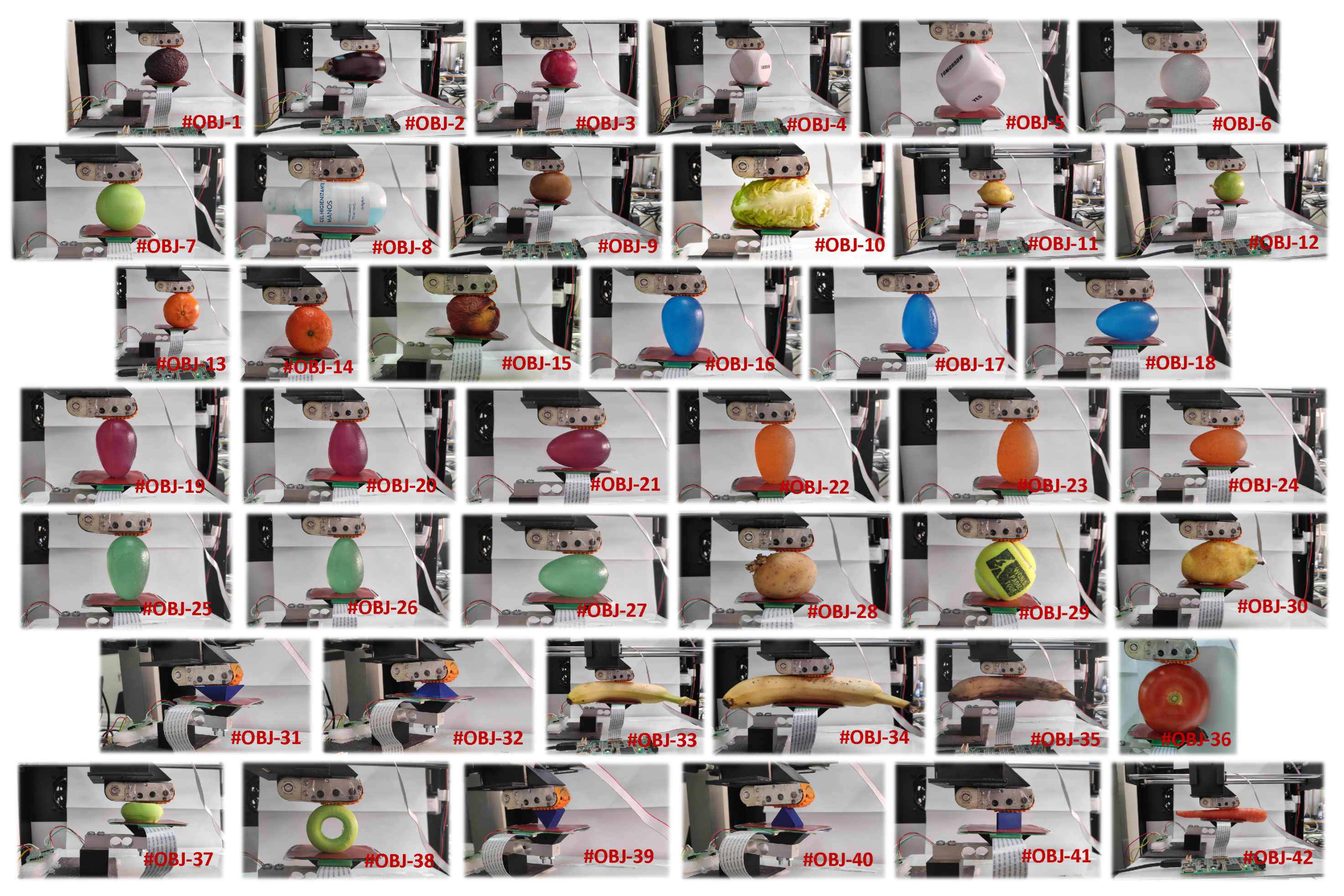

3.3. Objects to Explore

3.4. Data Gathering Procedure

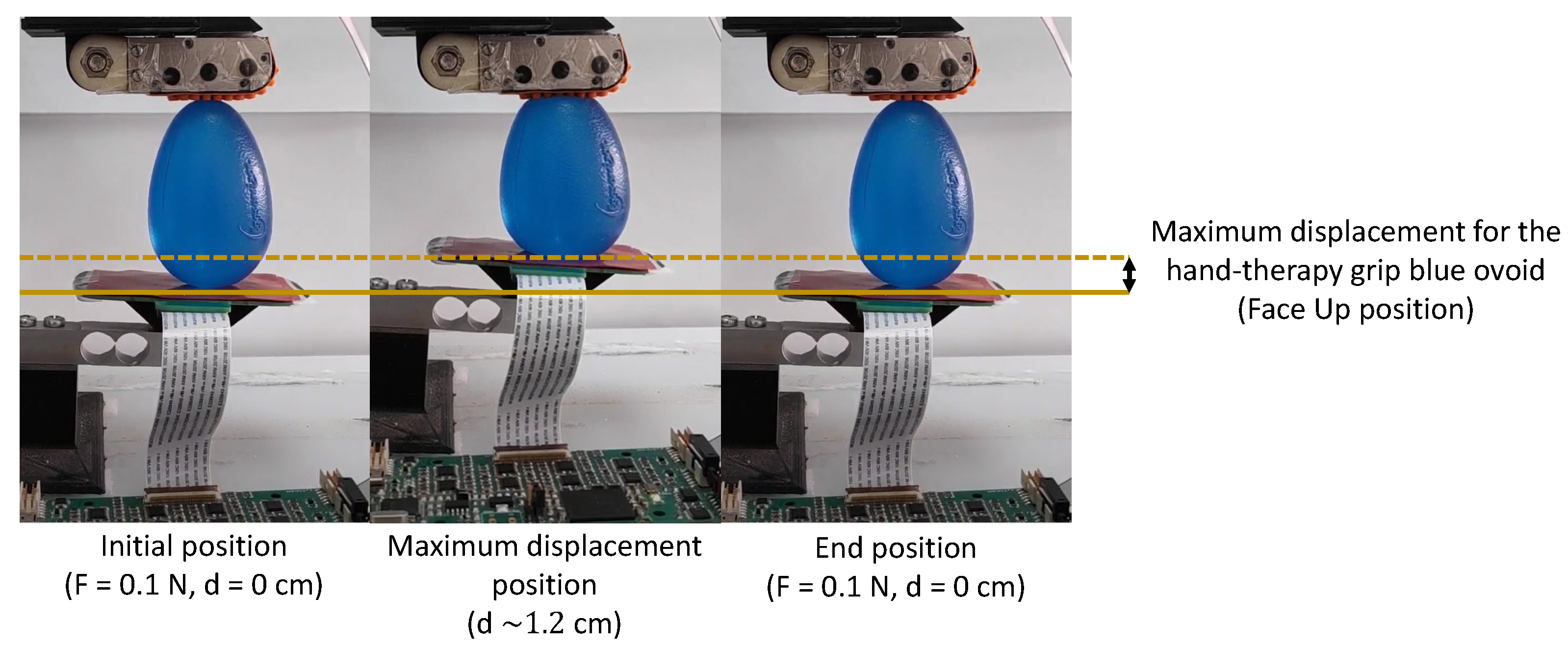

- Step 2: The palm was moved vertically to grasp the object until the load cell detected a low-level threshold force. This position was recorded as the initial point;

- Step 3: The palm was moved further vertically, compressing the object until the palm reached a maximum relative distance of approximately 1.2 cm from the initial point (although the palm–finger gripper had a certain compliance, this limit was imposed to avoid damage to the system when rigid objects were explored);

- Step 4: The palm was moved vertically in the reverse direction, decompressing the object until the initial position defined in Step 2 was reached.
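The compression and decompression cycle described in the steps above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' acquisition code: the `SimulatedGripper` class, its linear-spring object model, the 0.1 N threshold used in the usage lines, and the 0.05 cm step size are all assumptions introduced for the example. Only the approximately 1.2 cm travel limit comes from the text.

```python
from dataclasses import dataclass


@dataclass
class SimulatedGripper:
    """Hypothetical stand-in for the palm actuator and load cell."""
    contact_cm: float = 0.5          # palm travel at which the object is first touched (assumed)
    stiffness_n_per_cm: float = 2.0  # linear-spring model of the object (assumed)
    position_cm: float = 0.0

    def move_by(self, delta_cm: float) -> None:
        self.position_cm += delta_cm

    def read_force_n(self) -> float:
        # Zero force before contact, then force proportional to compression.
        return max(0.0, (self.position_cm - self.contact_cm) * self.stiffness_n_per_cm)


def explore(gripper, threshold_n, max_travel_cm=1.2, step_cm=0.05, max_approach_steps=1000):
    """Run one compress/decompress cycle and return (initial position, samples)."""
    # Step 2: approach until the load cell reports the threshold force.
    for _ in range(max_approach_steps):
        if gripper.read_force_n() >= threshold_n:
            break
        gripper.move_by(step_cm)
    else:
        raise RuntimeError("no contact detected during approach")
    initial_cm = gripper.position_cm

    n_steps = round(max_travel_cm / step_cm)
    samples = []  # (displacement from the initial point in cm, force in N)

    # Step 3: compress up to the maximum relative distance.
    for _ in range(n_steps):
        gripper.move_by(step_cm)
        samples.append((gripper.position_cm - initial_cm, gripper.read_force_n()))

    # Step 4: decompress back to the initial point recorded in Step 2.
    for _ in range(n_steps):
        gripper.move_by(-step_cm)
        samples.append((gripper.position_cm - initial_cm, gripper.read_force_n()))

    return initial_cm, samples


gripper = SimulatedGripper()
initial_cm, samples = explore(gripper, threshold_n=0.1)  # 0.1 N is a placeholder value
print(f"initial point at {initial_cm:.2f} cm, {len(samples)} samples recorded")
```

Counting steps rather than comparing floating-point positions keeps the compression and decompression phases symmetric, so the palm returns to the recorded initial point after the same number of moves.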

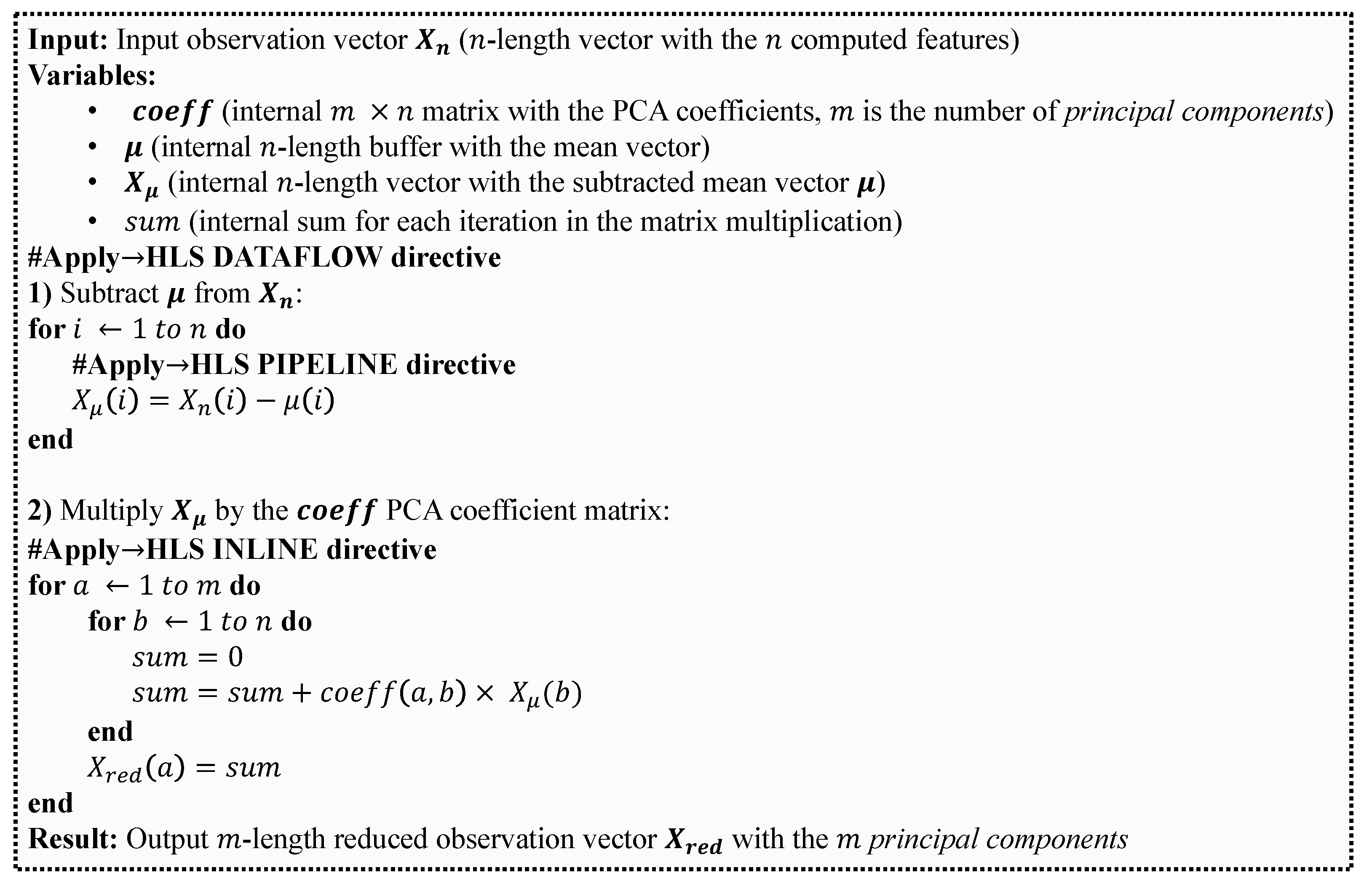

3.5. Training Algorithm

4. Implementation on the Zynq7000® SoC

5. Results and Discussion

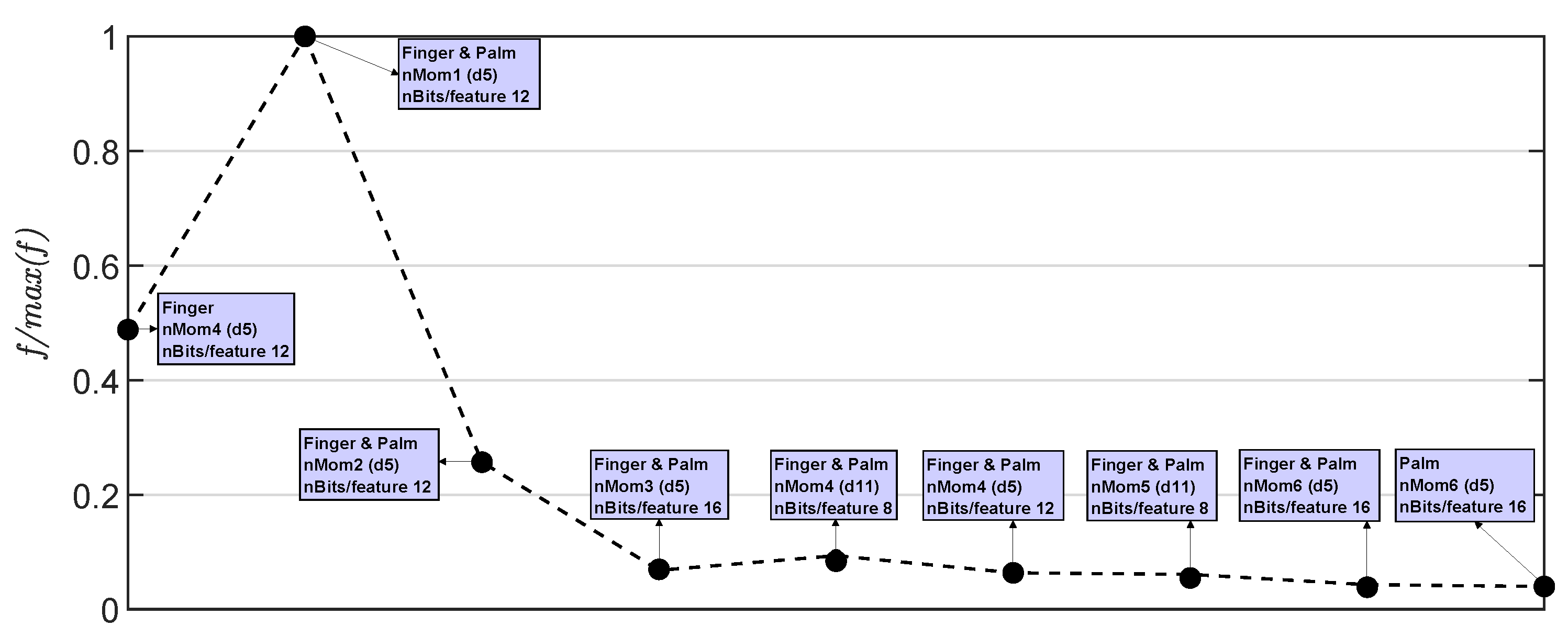

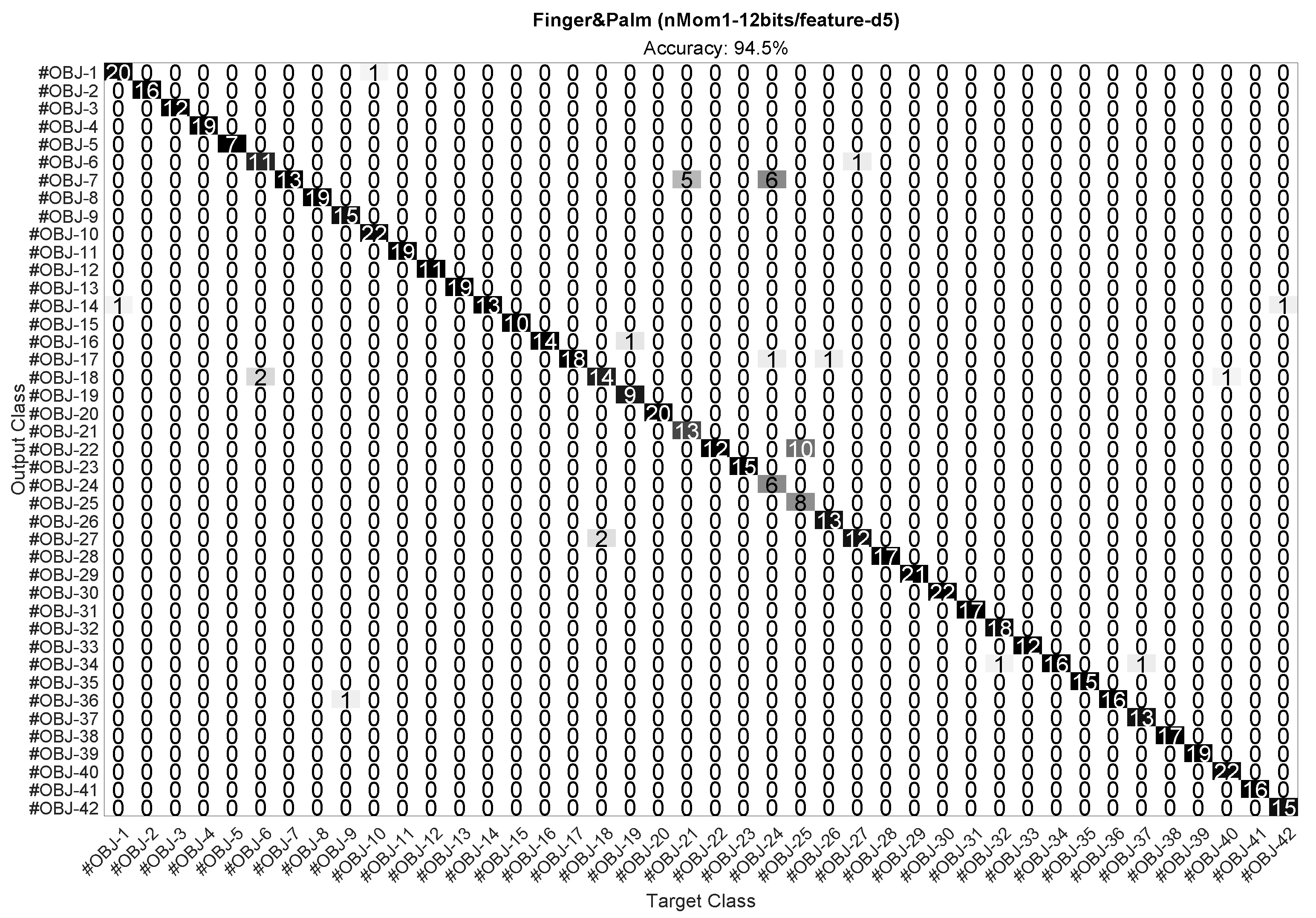

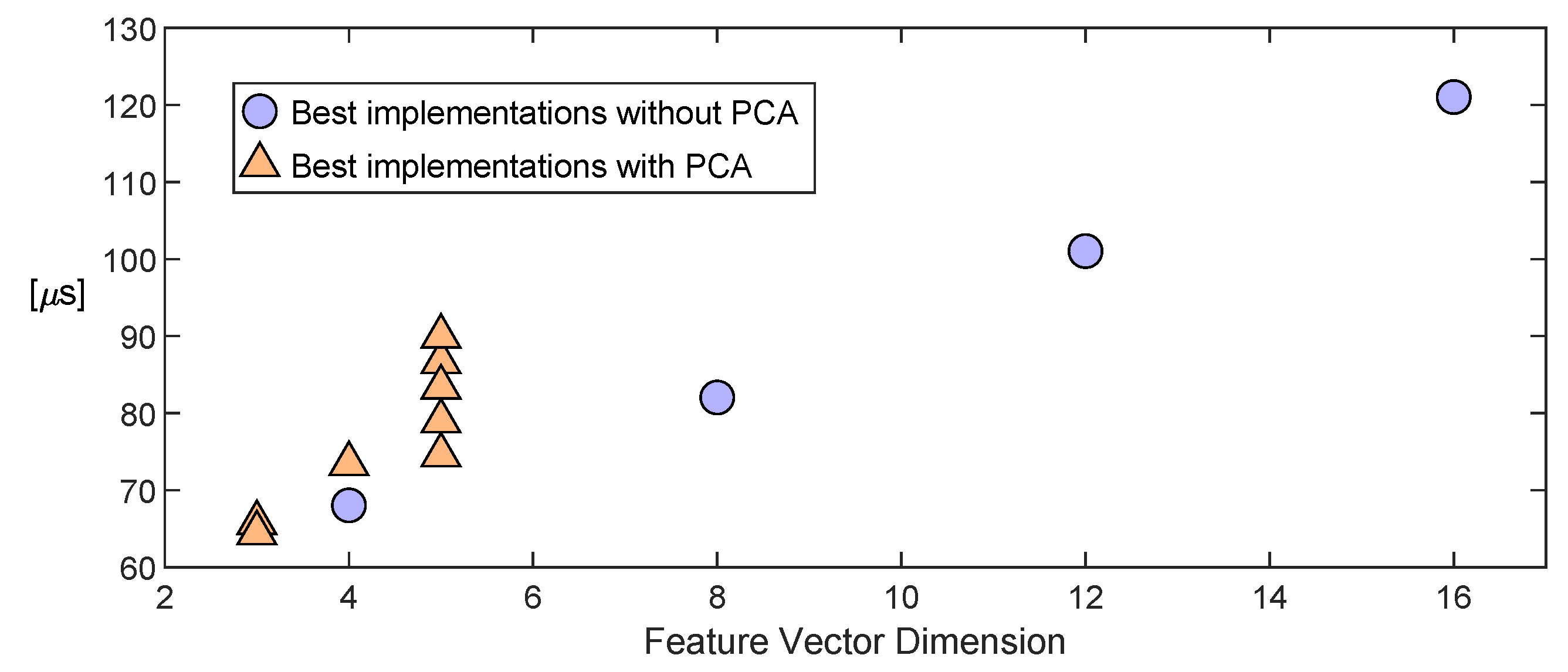

5.1. Results Obtained without PCA

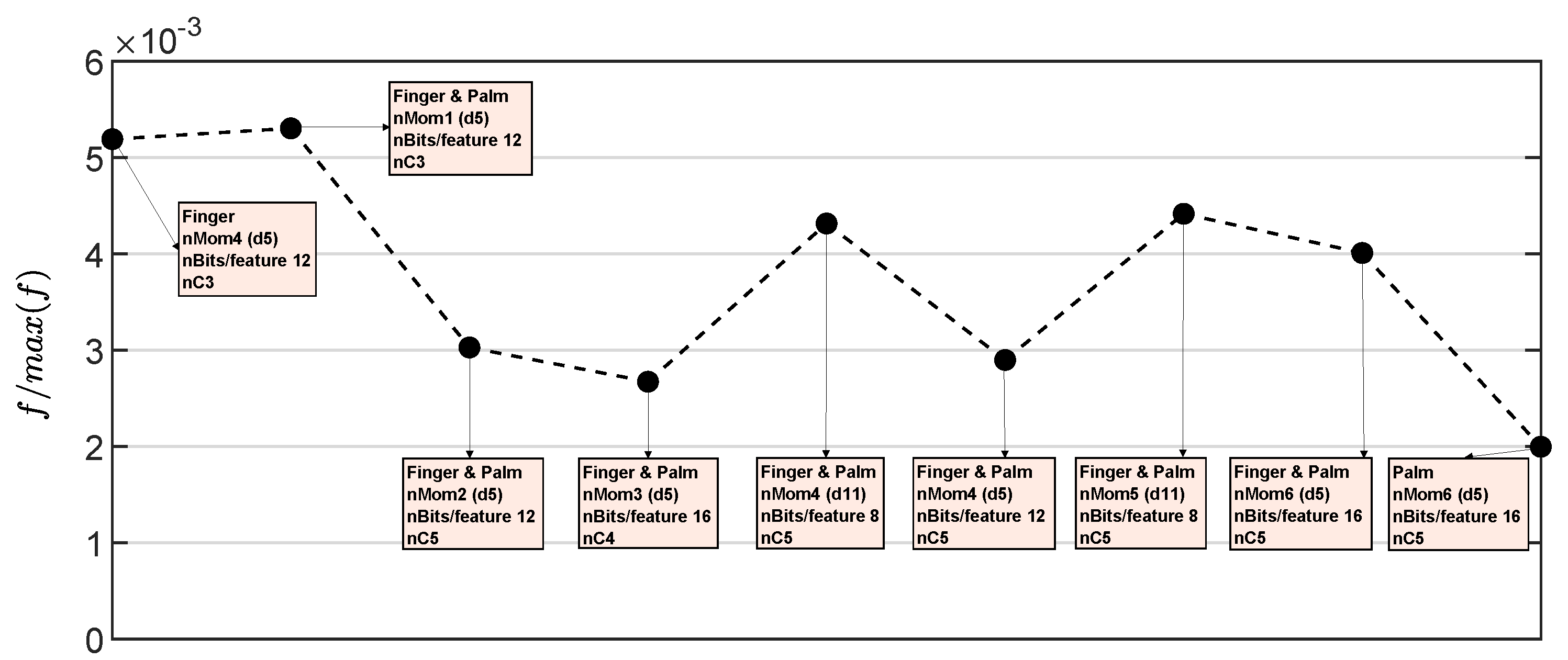

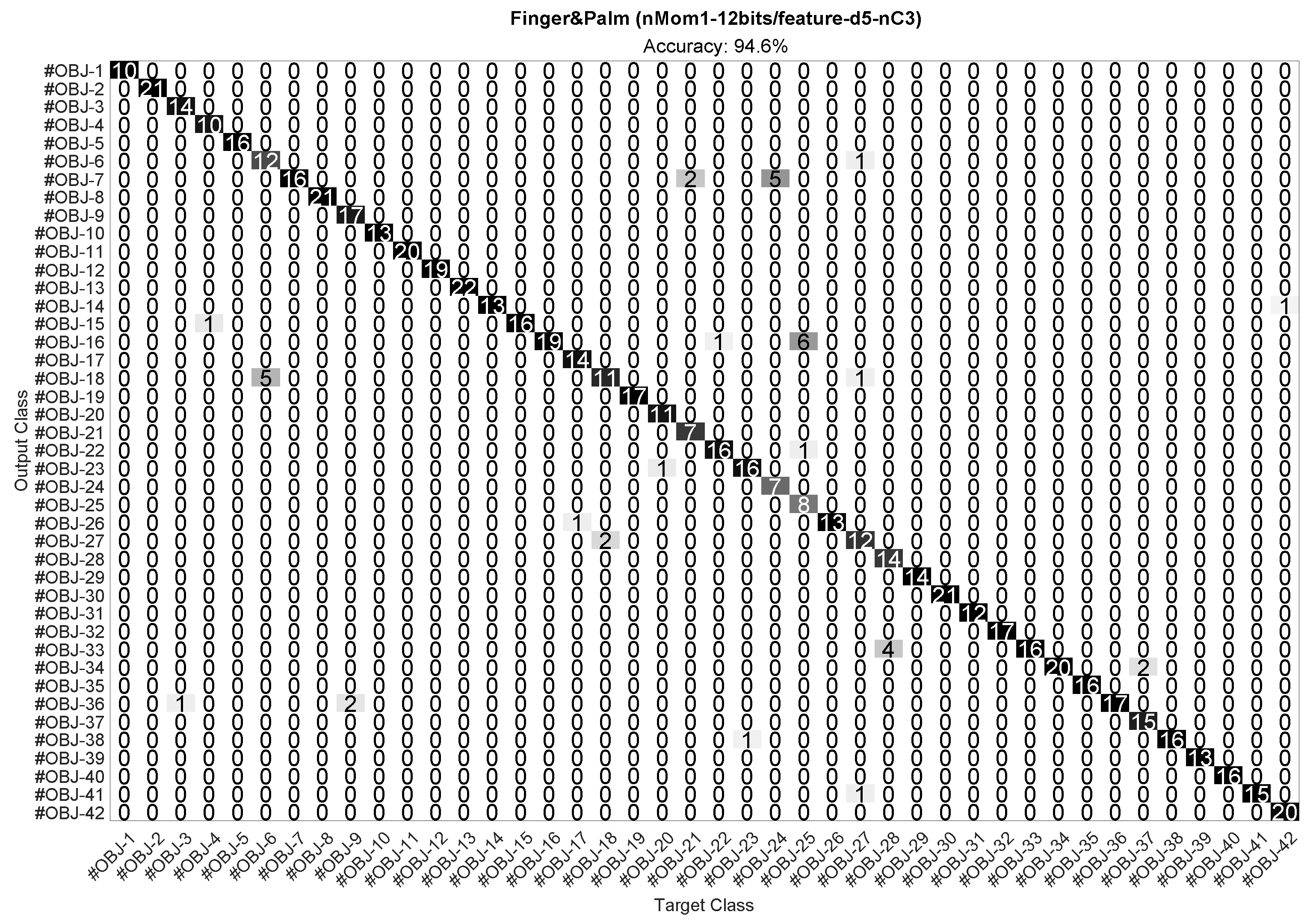

5.2. Results Obtained with PCA

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Object Label | Object Description | Exploring Position | Object Label | Object Description | Exploring Position |
|---|---|---|---|---|---|
| #OBJ-1 | Avocado | Horizontal | #OBJ-22 | Hand-therapy grip orange 3D ovoid | Face Down |
| #OBJ-2 | Eggplant | Horizontal | #OBJ-23 | Hand-therapy grip orange 3D ovoid | Face Up |
| #OBJ-3 | Plum | Standard | #OBJ-24 | Hand-therapy grip orange 3D ovoid | Horizontal |
| #OBJ-4 | Foam cube | Vertical | #OBJ-25 | Hand-therapy grip green 3D ovoid | Face Down |
| #OBJ-5 | Foam cube | Vertical | #OBJ-26 | Hand-therapy grip green 3D ovoid | Face Up |
| #OBJ-6 | Hand-therapy grip sphere | Standard | #OBJ-27 | Hand-therapy grip green 3D ovoid | Horizontal |
| #OBJ-7 | Green Filaflex 3D printed sphere | Standard | #OBJ-28 | Potato | Horizontal |
| #OBJ-8 | Hydro-alcoholic gel | Horizontal | #OBJ-29 | Paddle ball | Standard |
| #OBJ-9 | Kiwi | Horizontal | #OBJ-30 | Pear | Horizontal |
| #OBJ-10 | Lettuce | Horizontal | #OBJ-31 | TPU 3D printed pyramid | Face Down |
| #OBJ-11 | Ripe lemon | Horizontal | #OBJ-32 | TPU 3D printed pyramid | Face Up |
| #OBJ-12 | Green lemon | Horizontal | #OBJ-33 | Green banana | Horizontal |
| #OBJ-13 | Ripe tangerine | Horizontal | #OBJ-34 | Ripe banana | Horizontal |
| #OBJ-14 | Green tangerine | Horizontal | #OBJ-35 | Rotten banana | Horizontal |
| #OBJ-15 | Rotten nectarine | Horizontal | #OBJ-36 | Tomato | Horizontal |
| #OBJ-16 | Hand-therapy grip blue 3D ovoid | Face Down | #OBJ-37 | Filaflex 3D printed toroid | Horizontal |
| #OBJ-17 | Hand-therapy grip blue 3D ovoid | Face Up | #OBJ-38 | Filaflex 3D printed toroid | Vertical |
| #OBJ-18 | Hand-therapy grip blue 3D ovoid | Horizontal | #OBJ-39 | TPU 3D printed triangle | Face Down |
| #OBJ-19 | Hand-therapy grip purple 3D ovoid | Face Down | #OBJ-40 | TPU 3D printed triangle | Face Up |
| #OBJ-20 | Hand-therapy grip purple 3D ovoid | Face Up | #OBJ-41 | TPU 3D printed triangle | Horizontal |
| #OBJ-21 | Hand-therapy grip purple 3D ovoid | Horizontal | #OBJ-42 | Carrot | Horizontal |

| Combination of Features | Label |
|---|---|
| | d1 |
| | d2 |
| | d3 |
| | d4 |
| and | d5 |
| and | d6 |
| and | d7 |
| and | d8 |
| and | d9 |
| and | d10 |
| , and | d11 |
| , and | d12 |
| , and | d13 |
| , and | d14 |
| , , and | d15 |
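The labelling scheme in this table (four single features, six pairs, four triples and one set of all four, giving d1 to d15) matches an enumeration of every non-empty subset of four features, ordered by subset size. A minimal sketch of that enumeration follows. The feature names `f1` to `f4` are placeholders, and the ordering within each subset size is an assumption; the table only fixes how many features each label combines.

```python
from itertools import combinations


def label_feature_combinations(features):
    """Map labels d1, d2, ... to every non-empty subset of `features`, smallest subsets first."""
    labels = {}
    index = 1
    for size in range(1, len(features) + 1):
        for combo in combinations(features, size):
            labels[f"d{index}"] = combo
            index += 1
    return labels


# Placeholder feature names; the actual feature symbols are not given here.
labels = label_feature_combinations(["f1", "f2", "f3", "f4"])
for name, combo in labels.items():
    print(name, combo)
```

With four features the enumeration yields 4 + 6 + 4 + 1 = 15 labels, so d5 is the first pair, d11 the first triple and d15 the full set.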

| | | Combination of Moments | | | | | |
|---|---|---|---|---|---|---|---|
| Sensor | Nbits/Feature | , | ,, | ,, , | ,, ,, | ,, ,, , | |
| Finger | 8 | < (all cases) | < (all cases) | (d15) | (d15) | (d11) | (d5) |
| Finger | 12 | (d11) | (d5) | (d11) | (d5) | (d5) | (d5) |
| Finger | 16 | (d11) | (d11) | (d11) | (d11) | (d5) | (d5) |
| Palm | 8 | < (all cases) | (d11) | (d11) | (d11) | (d5) | (d5) |
| Palm | 12 | (d11) | (d5) | (d5) | (d5) | (d5) | (d5) |
| Palm | 16 | (d5) | (d11) | (d5) | (d5) | (d5) | (d5) |
| Finger and Palm | 8 | (d11) | (d11) | (d11) | (d11) | (d11) | (d11) |
| Finger and Palm | 12 | (d5) | (d5) | (d5) | (d5) | (d5) | (d5) |
| Finger and Palm | 16 | (d5) | (d5) | (d5) | (d5) | (d5) | (d8) |

| Sensor | Best Case | LUTRAM | BRAMs | FF Pairs | LUT Logic | DSPs | F-MUXES | Power Consumption (mW) | |
|---|---|---|---|---|---|---|---|---|---|
| Finger | ,,, d5, 12 bits/feature | 0 | 0 | 14 | 19 | 0 | 0 | 30 | 4 |
| Finger and Palm | d5, 12 bits/feature | 0 | 0 | 28 | 38 | 0 | 0 | 30 | 2 |
| Finger and Palm | , d5, 12 bits/feature | 0 | 0 | 56 | 76 | 0 | 0 | 30 | 4 |
| Finger and Palm | ,, d5, 16 bits/feature | 0 | 0 | 108 | 136 | 0 | 0 | 30 | 6 |
| Finger and Palm | ,, , d11, 8 bits/feature | 0 | 0 | 72 | 128 | 0 | 0 | 30 | 12 |
| Finger and Palm | ,, , d5, 12 bits/feature | 0 | 0 | 112 | 152 | 0 | 0 | 30 | 8 |
| Finger and Palm | ,, ,, d11, 8 bits/feature | 0 | 0 | 90 | 160 | 0 | 0 | 30 | 15 |
| Finger and Palm | ,, ,, , d11, 8 bits/feature | 0 | 0 | 108 | 192 | 0 | 0 | 30 | 18 |
| Palm | ,, ,, , d5, 16 bits/feature | 0 | 0 | 84 | 114 | 0 | 0 | 30 | 6 |

| | | Combination of Moments | | | | | |
|---|---|---|---|---|---|---|---|
| Sensor | Nbits/Feature | , | ,, | ,, , | ,, ,, | ,, ,, , | |
| Finger | 8 | < (all cases) | < (all cases) | (d15, nC5) | (d11, nC5) | (d11, nC5) | (d11, nC6) |
| Finger | 12 | (d15, nC4) | (d5, nC3) | (d5, nC4) | (d5, nC3) | (d5, nC6) | (d5, nC5) |
| Finger | 16 | (d5, nC2) | (d5, nC4) | (d5, nC4) | (d5, nC6) | (d11, nC6) | (d5, nC4) |
| Palm | 8 | < (all cases) | (d11, nC4) | (d5, nC6) | (d5, nC6) | (d5, nC6) | (d5, nC5) |
| Palm | 12 | (d11, nC3) | (d5, nC4) | (d5, nC6) | (d5, nC5) | (d5, nC5) | (d5, nC6) |
| Palm | 16 | (d11, nC3) | (d5, nC3) | (d11, nC6) | (d5, nC5) | (d5, nC5) | (d5, nC5) |
| Finger and Palm | 8 | (d15, nC4) | (d11, nC5) | (d11, nC4) | (d11, nC5) | (d11, nC5) | (d11, nC3) |
| Finger and Palm | 12 | (d5, nC3) | (d5, nC5) | (d5, nC5) | (d5, nC5) | (d5, nC5) | (d8, nC3) |
| Finger and Palm | 16 | (d5, nC4) | (d5, nC5) | (d11, nC5) | (d5, nC6) | (d5, nC6) | (d8, nC5) |

| Sensor | Best PCA Case | LUTRAM | BRAMs | FF Pairs | LUT Logic | DSPs | F-MUXES | Power Consumption (mW) |
|---|---|---|---|---|---|---|---|---|
| Finger | ,, , d5, 12 bits/feature, nC3 | 40 | 2 | 441 | 1076 | 7 | 6 | 34 |
| Finger and Palm | d5, 12 bits/feature, nC3 | 36 | 2 | 443 | 1078 | 7 | 6 | 32 |
| Finger and Palm | , d5, 12 bits/feature, nC5 | 36 | 3 | 454 | 1110 | 7 | 6 | 37 |
| Finger and Palm | ,, d5, 16 bits/feature, nC4 | 48 | 3 | 516 | 1219 | 7 | 6 | 39 |
| Finger and Palm | ,, , d11, 8 bits/feature, nC5 | 36 | 3 | 478 | 1185 | 7 | 6 | 46 |
| Finger and Palm | ,, , d5, 12 bits/feature, nC5 | 36 | 3 | 518 | 1198 | 7 | 6 | 41 |
| Finger and Palm | ,, ,, d11, 8 bits/feature, nC5 | 36 | 3 | 507 | 1235 | 7 | 6 | 49 |
| Finger and Palm | ,, ,, , d11, 8 bits/feature, nC5 | 24 | 4 | 495 | 1310 | 7 | 6 | 54 |
| Palm | ,, ,, , d5, 16 bits/feature, nC5 | 48 | 3 | 506 | 1202 | 7 | 6 | 40 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lora-Rivera, R.; Oballe-Peinado, Ó.; Vidal-Verdú, F. Proposal and Implementation of a Procedure for Compliance Recognition of Objects with Smart Tactile Sensors. Sensors 2023, 23, 4120. https://doi.org/10.3390/s23084120