Abstract

This paper deals with the development of a localization methodology for autonomous vehicles using only a LIDAR sensor. In the context of this paper, localizing a vehicle in a known global map of the environment is equivalent to finding the vehicle’s global pose (position and orientation), in addition to other vehicle states, within this map. Once localized, the problem of tracking uses the sequential LIDAR scans to continuously estimate the states of the vehicle. While the proposed scan matching-based particle filters can be used for both localization and tracking, in this paper, we emphasize only the localization problem. Particle filters are a well-known solution for robot/vehicle localization, but they become computationally prohibitive as the states and the number of particles increases. Further, computing the likelihood of a LIDAR scan for each particle is in itself a computationally expensive task, thus limiting the number of particles that can be used for real-time performance. To this end, a hybrid approach is proposed that combines the advantages of a particle filter with a global-local scan matching method to better inform the resampling stage of the particle filter. We also use a pre-computed likelihood grid to speed up the computation of LIDAR scan likelihoods. Using simulation data of real-world LIDAR scans from the KITTI datasets, we show the efficacy of the proposed approach.

1. Introduction

Real-time localization and tracking using sensors such as LIDAR and vision are becoming essential elements to achieve autonomy for vehicles. While GPS sensors provide a means for accurate localization and tracking, they often produce erroneous measurements in dense urban areas and often no measurements altogether in areas such as underpasses, tunnels and parking lots. In the absence of GPS or GPS-denied regions, the problem of localization becomes challenging. Indoor localization is also essential for warehouse robotics where GPS signals are not available. In such cases, LIDAR sensors mounted on robots can be used to localize the robot. To this end, vision and LIDAR sensors provide a means for both localization and tracking. This paper primarily deals with the problem of localization when only a LIDAR sensor is available. By addressing this base case, it is anticipated that additional sensors, such as cameras, IMUs and GPS, can easily be integrated and further increase the accuracy and reduce computational complexity. For the problem of localization, it is assumed that a (three-dimensional) map of the environment is available. While this assumption of maps is demanding, we believe that with the growth in the number of autonomous vehicles with LIDAR sensors, these maps can be locally built in an offline manner.

Though particle filters (PF) have been extensively used for vehicle localization [1,2,3,4], they often require a large number of particles to have robust performance and thus have high computational demands. This paper addresses this challenge by proposing a novel improvement to conventional particle filters often used for localization. The primary contributions of this paper are: (i) The computations of LIDAR scan likelihoods are made more efficient using sparse pre-computed lookup tables. (ii) The resampling stage of the particle filter is improved with a scan-to-map matching algorithm. This matching algorithm significantly increases the convergence of the particles to the true vehicle pose. In addition, any loss of tracking is also subsequently corrected during the tracking phase. (iii) A complete algorithmic approach is described that leverages parallel processing to asynchronously run the scan-to-map matching algorithm and integrate its results with the particle filter.

The rest of the paper is organized as follows: The literature review is described in Section 2, along with the notations used in this paper. In Section 2.2, the conventional particle filtering approach using a LIDAR sensor is revised, following the initial approach in [2]. In Section 3, the matching algorithm is developed from the perspective of localization and tracking. The complete approach is described in Section 4. In Section 5, this approach is applied to the KITTI odometry dataset, which has the true ground poses for validation. While we assume the mounted LIDAR sensor is a mechanically rotating scanning LIDAR with 360 degrees field of view (FOV) (such as the Velodyne or Ouster), the proposed methodology can also be used for front-facing solid-state LIDARs with a smaller FOV, and stereo camera-based pseudo-LIDAR point clouds [5].

2. Literature Review

The problem of tracking corresponds to estimating the state of the vehicle from observational data of different sensing modalities. Often, at the core of this tracking process is a filtering algorithm that fuses measurement data with motion models of vehicles. While for linear systems with Gaussian uncertainty, the Kalman Filter [6] is the exact and optimal filter, for nonlinear systems, various approximations lead to different filters that trade off computational complexity with accuracy. Conventional nonlinear filtering algorithms include Extended Kalman Filter (EKF) [7], Unscented Kalman Filter (UKF) [8], Gaussian Quadrature Filters (GQF) [9], Conjugate Unscented Transform Filter [10] and particle filters (PF) [11]. Particle filters are often the popular choice for problems of localization, tracking and even Simultaneous Localization and Mapping (SLAM) [12,13]. Conventional PF algorithms are often modified to improve performance. For example, visual lane-marking and GPS can be used to update the weights of the particles in the PF used for localization [14]. The ease of implementation of PF and its ability to accommodate nonlinear models makes PF an apt choice. Alternatively to PF, many SLAM problems often use graph-based pose optimization approaches to localize the vehicle’s position [15,16].

An intuitive approach for LIDAR-only vehicle localization is to match the current LIDAR scan to an available map. Often the iterative closest point algorithm is used to match the scan or features of the scan to a pre-built map [17,18,19]. Feature-based matching can have lower computational complexity but requires the design of distinguishable features that can uniquely match the scan. A hybrid approach that uses the full grid and leverages the strengths of feature-based approaches is proposed in [20], where a Rao–Blackwellized particle filter (RBPF) has been used to utilize the location features. The proposed method in this paper also builds upon the strengths of the particle filter and scan matching without the need to build features for the map. This main approach is described in Section 4.

2.1. Notations

A vector is denoted using a bold letter, such as , where the subscript k indicates the discrete time instant . is used to represent a probability density function (PDF) and represents a multivariate Gaussian PDF for the random vector with mean and covariance matrix P. The superscript in is used to denote the ith particle/sample of the particle filter. The position vector of the vehicle is represented as , and the orientation of the vehicle is described by the yaw-pitch-roll angle vector . The pose of the vehicle is represented by the vector , and the corresponding homogeneous transformation matrix is represented as

where is the rotation matrix for the z-y-x (yaw-pitch-roll) Euler angles. It is assumed that the body-fixed x-axis is pointing in the forward direction of the vehicle, and the corresponding body-fixed z-axis is pointing vertically up. A LIDAR scan at time is denoted as , and a single point with index j in this scan is represented as . The global map is represented as , and the corresponding top view or bird’s eye view (BEV) of the global map is represented as . A point in a BEV LIDAR scan will be denoted as , which is computed by setting the z-coordinate (assuming the z-axis points vertically up) of to zero.

2.2. Particle Filtering

Consider the vehicle dynamical model and mounted sensor model as:

where is the state vector at time step k, and is the measurement vector at time step k. is the process noise, and is the measurement noise; both are modeled as Gaussian white noise sequences with covariances and , respectively. In general, this measurement vector can directly hold the raw LIDAR scans, IMU measurements or features extracted from LIDAR scans and images. The general filtering algorithm works by solving the two-step filter equations, namely the Chapman–Kolmogorov Equation (CKE) and the Bayes’ Rule [21]:

where is the a priori state probability density function (PDF), is the a posteriori PDF after the measurement update and is the sequence or history of measurements until time step . The particle filter [11] solves these equations by approximating the state PDF using a finite set of samples or particles as . Hence, the PF only keeps track of the set of samples and their corresponding weights at every time step k. Propagation of the particles by (4) reduces to sampling from the transition PDF [11],

where represents a multivariate Gaussian PDF for the random variable with mean and covariance matrix P. Here the proposal density is taken as , though not optimal, it is often the most pragmatic choice [11]. The weights remain the same during propagation. The a posteriori PDF is approximated by updating the weights of the samples using the measurement likelihood model of the sensors as [11]:

Hence, the resultant set is denoted as . The measurement likelihood is typically taken as when the sensor model is well-defined and simple to evaluate. Though simple, the practical implementation of PF requires the proper tuning of parameters such as the number of particles and the proposal density. Further, PFs are susceptible to the problem of sample degeneracy, where, after a few iterations, only a small fraction of the samples have significant weight. This problem is solved by using better proposal densities or resampling strategies [11]. The conventional resampling procedure is that of bootstrap filtering. Ideally, increasing the number of particles can reduce the effects of degeneracy and sample impoverishment, but it directly leads to large computational complexity. While other resampling procedures, such as regularized particle filters can also be used, they are computationally demanding [11]. Hence, it is imperative that we reduce the number of particles and improve resampling strategies to achieve better performance of particle filters.

2.3. Dynamic Model

The dynamical model used for tracking the vehicle is modeled as a constant acceleration and constant turn-rate model. The state vector for the vehicle is , where is the position (with units in meters) of the vehicle in the reference frame of the map, are the yaw-pitch-roll angles (measured in radians) of the bod-fixed frame of the vehicle with respect to the map reference frame. The body-fixed frame is centered at the origin of the LIDAR sensor, with the x-axis pointing in the forward direction of the vehicle. v is the magnitude of the velocity of the vehicle along the body-fixed x-axis. are the corresponding yaw-pitch-roll rates and a is the magnitude of acceleration of the vehicle. Given the state at time step , the discrete-time dynamical model propagates the state vector to time step k, denoted as is given by the approximate first-order hold model:

here is the rotation matrix computed using the yaw-pitch-roll angles. In this model, the angular rates and the acceleration are assumed to be constant for short discrete time steps.

2.4. Sensor Model

The next problem is that of evaluating the likelihood probability for LIDAR scans in a given map. We follow the model in [2] to compute the likelihood. For a sample vehicle state , we use the notation and for the corresponding rotation matrix and translation vectors, respectively, for the vehicle pose. The LIDAR scan at time step k is denoted as for , where is the number of points in the scan. The scan likelihood model (sensor model) is constructed as:

The pose of particle is used to transform the LIDAR scan points from the sensor reference frame to the world inertial frame and then their corresponding distance to their closest neighbors in the map are determined. The likelihood is simply constructed as a measure of how close the transformed LIDAR scan is to the map. This likelihood function is strongly influenced by the chosen parameters of maximum distance and standard deviation . is the distance between a point x and its closest neighbor in the map . The nearest distance can be efficiently computed by kdtrees and octrees. However, it is to be noted that a typical LIDAR scan contains more than 100 K points. When combined with a large number of particles ( 1000–10,000), the number of nearest neighbor searches () becomes computationally intractable. To this end, the authors of [2] propose to decimate the number of points in the LIDAR scan to achieve better real-time performance. In other words, the number of points in the LIDAR scan is reduced by taking every nth point from the set of points in the scan. Parameter n is chosen by the user to reduce the computational complexity. The benchmarking results in [2] show sufficient localization capability with a much smaller subset of points of the LIDAR scan but with a large number of particles. To this end, we use this benchmark as a guide and instead use voxel-based down-sampling to reduce the number of points uniformly over the space. In voxel-based down-sampling, only one sample is randomly chosen within each voxel. This allows the LIDAR scan to be uniformly distributed over the original LIDAR scan.

However, as the number of particles increases, the computational complexity remains significantly high. It can be observed that the nearest neighbor search routine, in (14), is the main computational bottleneck, particularly when the LIDAR scan has thousands of points. To ease this computational complexity, we propose the use of a pre-computed sparse tensor to store the nearest distance to a map . This way, the pre-computed nearest neighbor distance to the map can be used for all LIDAR scans. The grid is determined by estimating the lower bound point and upper bound point for the bounding box of the map , with the length along the axes denoted as . Let the desired resolution of this spatial grid be denoted as . Assuming a uniform grid of points on each axis, the points are simply constructed as a tensor product as , where the grid nodes along the x-axis are represented as = with . and are similarly defined for the y- and z-dimensions, respectively.

The map is constructed offline by fusing sequential LIDAR scans. In this process, even LIDAR points corresponding to moving objects such as vehicles and people are included in the map. As these objects are temporary, the measurement likelihood computations and the scan-to-map matching process are correspondingly affected during real-time operation. To this end, a probabilistic occupancy grid [13] approach can be used to sequentially fuse the LIDAR scans into the map. The underlying assumption is that the grid cells (or voxels), corresponding to stationary objects, such as buildings, light posts and road signs, will end up having a higher density of points in their voxels after fusing all the scans. On the contrary, moving objects will have a lower density of points as the points will be distributed across the voxels along their path. While robust, the probabilistic occupancy grid could be computationally intensive. As such, for simulation purposes in this paper, we propose to simply remove voxels with fewer points than a set threshold. This improves the robustness of measurement likelihood computations in (15). The nearest point in the map to a grid point is pre-computed along with its distance. Now, given any LIDAR scan point , it can be decimated to its corresponding grid node . This way, multiple points that fall within the cell located between corners and can be mapped to the same grid node and thus save on the number of nearest neighborhood searches. The underlying assumption is that the nearest distance of points in a cell to the map can be approximated by the nearest distance of its corner to the map. The error in the nearest neighborhood distance is a function of the grid resolution used to create the cells.

As a regular grid is used, not all the grid nodes are necessary, as the map is usually less dense in regions where the vehicle cannot navigate. One approach is to use an adaptive grid, where higher resolution grids are only used in dense regions of the map. Alternatively, in this work, we use a high-resolution regular grid but instead use a sparse lookup table, denoted as , to store the nearest neighbors to the map. The sparsity of the map is further increased by only saving grid points that have a neighbor in the map within a distance of . The sparse map is populated offline and offers fast retrieval of approximate nearest neighborhood distances. In the offline process, to construct the lookup table, the distance d of each grid node index , with , and is computed using the nearest neighborhood search as . Then, this value is inserted into the sparse map as when . Now, given any point , the retrieval of its approximate closest distance to the map is achieved by first computing its corresponding grid node index as:

If the index triplet exists in , it can be retrieved as . If does not exist, then the default is directly used. It can be observed that the closest distance retrieval is fast as it involves only a simple lookup of keys. In this work, we use the parallel sparse hash map in [22]. With this fast lookup, the likelihood of LIDAR scans with a large number of points can be quickly computed. In the next section, a scan-matching method to improve the particle filter is discussed.

3. Multi-Resolution Scan Matching

The multi-resolution scan matching method used in this work is adopted from [23]. Rather than using it for scan-to-scan matching, the approach is used for scan-to-map matching. Only a brief review of the approach is discussed here, and complete details can be found in [23]. A similar approach to the branch bound method for scan matching and identifying loop closures can be found in [24]. Henceforth, the scan and map will refer to a point cloud in , typically the top view or the bird’s eye view of the scan. This bird’s eye view map will be referred to as .

The points in a LIDAR scan are often given in the LIDAR sensor-fixed frame or even the body-fixed frame. The objective is then to find the homogeneous transformation T that takes these LIDAR scan points and aligns them to the map in the map-fixed reference frame. As the points are in , it is only required to compute the rotation matrix and the translation . Conventional algorithms such as iterative closest point ICP and GICP [25] are predominantly local in nature and usually work well in the immediate neighborhood of the initial guess. The cost of aligning the scan to the map, as in the iterative closest point, is defined as where is a point in the scan ( BEV projection) and is the closest point to the transformed point . This cost function is nonlinear and often results in local minima, which tends to be wrong matches. Alternatively, the only way is to use a brute force approach or an exhaustive search approach by enumerating all possible angles and translations to find the global minimum. However, this is computationally challenging and often intractable. To this end, the method in [23] uses a carefully designed exhaustive search method, where the infeasible or high-cost regions of search space are progressively eliminated using low-resolution grids of the search space, thus reducing the computational complexity.

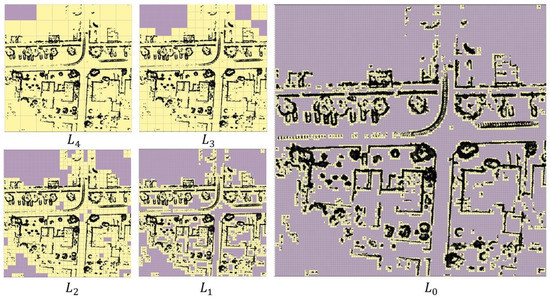

Starting with the highest desired match resolution , a regular grid is constructed for the map with spacing , which is denoted as level . A scan is assumed to be matched to the map if all the scan points lie within a distance of from the map. The value within a cell with index denoted as , with lower bound and upper bound is taken as the number of map points that fall within this cell. A simpler approach would be to just use a binary value to indicate if a cell contains at least one map point. Progressive levels for are then constructed using max-pooling operation with a stride of two, forming a sort of image pyramid with corresponding grid spacing denoted as at level l. Figure 1 shows an example of these progressive levels for a desired match resolution of at . Subsequent levels , , and have double the resolution at each level, as seen in Figure 1. The lighter shade grid cells (yellow in Figure 1) correspond to cells with at least one point, and the darker shade cells have no map points within them and hence have a value of zero.

Figure 1.

Examples of levels (–) for a section of the BEV map.Lighter yellow shaded grid cells correspond to cells with at least one point, and the darker shaded cells corresponding to cells with no points. The black points correspond to the map points.

The matching score for a given transformation at a particular level, l, is given as

Let be the index of the cell that contains the point , then in (17) is evaluated as and otherwise is zero. This cell index is directly computed as . Thus the total score computation in (17) corresponds to a simple lookup in . The objective is then to find the optimal transformation that maximizes this nonlinear score, as the highest score would correspond to the best alignment to the map. To avoid the nonlinearity due to the angular rotation, one can consider discrete angular positions as for , where is the desired angular resolution. The subsequent optimization problem is then to maximize (17) only with respect to as individually for each . While it can be observed that the cost is piece-wise linear in , local search algorithms that rely on gradients often result in local minima. A global search is generally required, and it tends to become computationally demanding for each . To this end, an intuitive approach for an exhaustive search over is to quickly eliminate cases that do not lead to the optimal solution. This exhaustive search for all can be performed sequentially, beginning at coarse resolutions of and progressing toward finer resolutions (). At low-resolution levels, the spatial grid is coarse, and configurations that do not lead to the optimal one can be quickly pruned at early stages leading to a significant speedup. At each level, it suffices to translate by the resolution of the grid, thus further speeding up the search process. As discussed in [23], a max-heap data structure, ordered using the score of a configuration, is most appropriate to refine the search over levels with higher resolution. In addition to the score, each entry in the heap also saves , the level l, the lower bound and the length of the rectangular search region. At each subsequent higher resolution level, this rectangular search region is split into four quadrants and pushed into the heap. When the top of the heap is at level corresponding to , the optimal solution is returned. While this matching process is more efficient than an exhaustive search, it is still computationally prohibitive when used to match a scan against the full map. In the next section, the main approach is discussed, which leverages this hierarchical scan-to-map matching approach in an efficient manner to improve the localization process of the particle filter (Section 4.4).

4. Main Approach

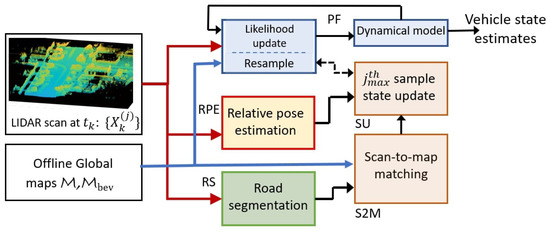

The overall approach is illustrated in Figure 2 for a single time step, where each block is used to designate a specific process or operation, and the arrows connecting them show the flow of information. The LIDAR scan at the current time step (shown on the left of Figure 2) is processed to result in the estimated vehicle pose shown on the right of Figure 2. The intermediate processing to estimate the vehicle pose is accomplished by various subsystems shown as blocks. These blocks (or processes) are labeled as particle filter block (PF), relative pose estimation block (RPE), road segmentation block (RS), scan-to-map matching block (S2M) and the sample update block (SU). The blocks are implemented in separate threads and run asynchronously. Specifically, the PF block running independently on a thread involves the computation of likelihood probabilities for the samples using the maps stored offline (shown on the left of Figure 2). The PF block is the primary block resulting in the vehicle state estimates. The convergence and robustness of the PF block are enhanced by the SU and S2M blocks, which enrich the samples/particles with high weights. Thereby, subsequent resampling will lead to better samples for the vehicle state. The SU and S2M blocks run on the same thread, updating the sample as soon as the scan-to-map matching is complete in the S2M block. The S2M block matches the LIDAR scan, where the road is segmented and removed by the RS block, to the offline map. The RPE block is run on a separate thread and computes the consecutive scan-to-scan poses and the corresponding linear and angular velocities. Finally, the RS block works in a background thread to generate LIDAR scans with the road removed. It is necessary to remove the road (and even flat surfaces) on the bird’s eye view of the map and the LIDAR scan as they do not contain features for alignment. The primary features in the top view of the map are often the building, wall and sidewalk edges that strongly aid the scan-to-map matching algorithm in Section 3. Though the S2M block is computationally intensive, it generates very accurate global pose alignment that outweighs its inherent complexity. The accurate global pose alignment significantly enhances the PF, making it accurate and even robust. The details of each block are further described below.

Figure 2.

Processing steps in one iteration of the proposed scan matching-based particle filtering approach. The dotted arrow between SU and PF indicates an update only when the computation is completed.

4.1. Particle Filter

The particle filter implements the sample propagation using the dynamical model in Section 2.3 and updates the sample weights using the likelihood approach as detailed in Section 2.4. The particle filter is based on the sequential importance sampling (SIS) [11] particle filtering algorithm, where a bootstrap resampling procedure is implemented. The resampling step is crucial to address the degeneracy problem of particle filters. The likelihood probability considered in Section 2.4 is highly dependent on , and the pose of the vehicle, particularly the and yaw states. In many cases where the vehicle suddenly makes a turn or accelerates, all but a few samples with a pose close to the true vehicle pose will have significant weights, thus rendering the rest of the samples useless. Resampling addresses this issue by generating samples close to the samples with significant weight. Further, the process noise matrix in Section 2.3 plays a crucial role in improving the diversity of the samples by adding randomness to the states of the particles and thus helps to capture vehicle turns and rapid change in accelerations (slowing, stopping and speeding). Further, by having adequate process noise, the problem of sample impoverishment [11] is reduced, without which the particles will collapse to a single particle after a few iterations. The resampling step is only performed when the effective sample size is less than [11].

4.2. Relative Pose Estimation

The relative pose estimation (RPE) block computes the relative poses between consecutive time steps. Scan-to-scan matching is performed in a separate thread to estimate these relative poses. As the current work focuses on the LIDAR-only approach, these relative pose estimates provide an alternate way to approximately compute velocity and angular rates that help in the overall tracking process using the particle filter. Ideally, an inertial measurement unit (IMU) sensor can directly provide angular rates and can replace this subsystem to further save computational resources. For LIDAR scans, we use the Generalized Iterative Closest Point (GICP) algorithm [25] for its robustness in assessing the full relative pose. While there is computational overhead in computing the covariance matrices for all points, the GICP can have better stability and accuracy compared to the conventional Iterative Closest Point/Plane algorithms that are often sensitive to outliers [26]. We use voxel-based downsampling to reduce computational complexity and, subsequently, the RANSAC algorithm to further introduce robustness against outliers. The GICP (or other scan-to-scan matching algorithms) computes the relative pose that aligns the LIDAR scan at time step k with the LIDAR scan at time step . The pose from the initial time is then computed as

where is the initial estimate of the vehicle’s pose at time step 0. As is unknown and is estimated later on through the main filter, one can assume to be the identity. The velocity is computed using a simple one-step Euler backward difference from the pose estimates as and , where and are the poses extracted from and , respectively. Better estimates can be achieved by using a cubic spline interpolation [27]. Note that these estimates are noisy on account of simple backward differences for derivative estimates. As such, these estimates can be assumed as pseudo measurements for the particle filter.

4.3. Road Segmentation

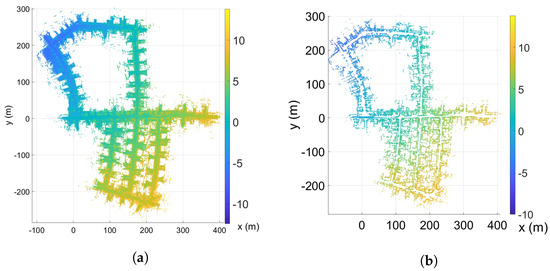

The scan-to-map matching procedure discussed in Section 3 is developed for scans. While developing a full scan-to-map matching procedure along similar lines is straightforward, the computational complexity renders the approach intractable. To this end, the LIDAR scans are matched to the global map in only . The success of the multi-resolution scan matching method is highly dependent on distinguishable spatial features in the map and the LIDAR scans. From the top view of the point cloud (by neglecting the z-coordinate), the lowest resolution grid will have points in almost every cell. The matching score over road regions will be smaller than the score for grid cells belonging to vertical walls or buildings. The LIDAR points corresponding to any temporary objects, such as a moving or parked vehicle, in the map could lead to degraded scan-to-map matching results. In the top view, the removal of flat horizontal surfaces (such as vehicle roofs) will reduce the affect of the remaining vertical surfaces of these temporary vehicles. As the scan-to-map matching cost in is aggregated over other stationary objects, such as buildings that have prominent features, such as vertical walls, corners and turns; therefore, the matching score becomes less sensitive to moving objects. Hence, to reduce the number of false positives, it would be advantageous to remove flat regions and improve the features in the top view that are dominated by vertical features, which are strongly captured by most LIDAR sensors. This is evident in Figure 3. To this end, the road segmentation block (RS) removes the roads that contribute to the major flat regions. Once removed, the top view will clearly show the turns and building borders as strong features for matching. Road segmentation methods are well studied and essential for road and lane detection. The approach of conditional random fields [28,29] provides effective means for road segmentation. Recently, deep learning models [30,31] provides alternative ways to segment road regions and, subsequently, remove them from the map and the scan. In this work, we simply choose to use surface normals to remove points belonging to flat regions. In particular, points with a z-coordinate of its unit normal vector greater than 0.75 are removed. This approach may not be appropriate for all environments, especially if the roads and surfaces are sloped, but it is computationally efficient and sufficient for enhancing walls and buildings, as shown in Figure 3 in our simulations. Ideally, a road segmentation algorithm, as mentioned above, should be used to improve the robustness of the entire localization approach. The color map in this figure corresponds to the height (z-coordinate), which varies by about 20 m. The dynamical model in Equations (8)–(12) is a model that also accounts for the z-coordinate of the vehicle.

Figure 3.

A sample map from the KITTI odometry dataset (sequence 5). (a) Top view, (b) Top view (road removed).

4.4. Scan-To-Map Matching

This block implements a two-stage scan-to-map matching (S2M) process that improves the overall performance of the particle filter. As the scan-to-map matching procedure in Section 3 is computationally demanding; it is only applied to the sample/particle with the highest weight. The assumption is that the particle with the highest weight has a better chance of being close to the true vehicle state. Let the index of particle with the highest weight be denoted as . The BEV LIDAR scan (transformed by the particle pose), with roads removed, is first matched to the global BEV map in using the multi-resolution scan matching algorithm for Section 3. To reduce computational time, the match is only performed in a small rectangular region, with side lengths , about the position of the particle . The corresponding lower bound and upper bound for the search region of are taken as and , respectively. The resultant match, denoted as , is accepted only when the match score is greater than the score of the original particle’s pose . As the scan is only matched in the BEV map, a subsequent match is performed in using GICP, which aligns the matched scan with the full map. The underlying assumption is that the aligned pose in is close enough to the true vehicle pose so that the GICP algorithm can locally correct the full pose in . The GICP-corrected pose in is denoted as = and can be used to directly update the corresponding pose values in to result in the corrected particle state .

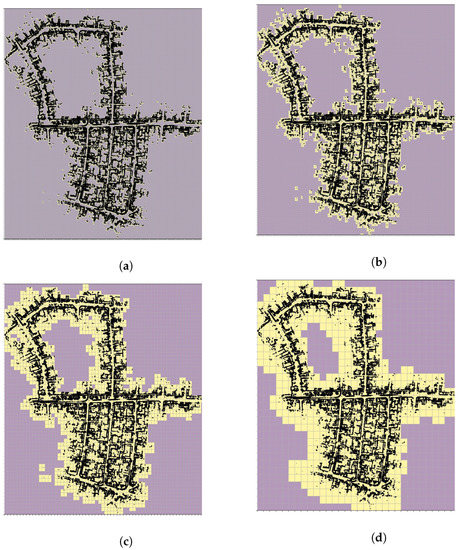

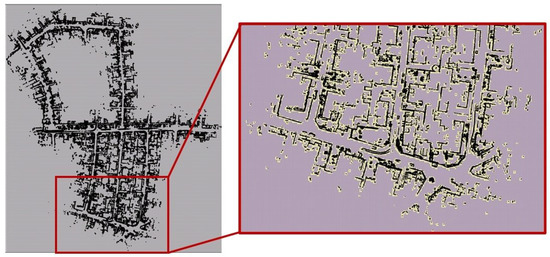

The multi-resolution levels for the map are pre-computed offline and directly used during the S2M block computations. For example, Figure 3b shows the BEV map, of sequence ‘05’ of the KITTI odometry dataset, with horizontal planes removed. The KITTI dataset also contains true ground pose data that are used to assemble all the individual LIDAR scans and form the map . The corresponding map is constructed by neglecting the z-coordinate. A few instances of the corresponding multi-resolution grids are shown in Figure 4 for levels –. In this figure, the black points indicate the LIDAR scan points of the map, and the lighter yellow regions represent the grid cells that have at least one map point. The level with the highest resolution, (not shown in figure), was constructed with the desired matching resolution of . The subsequent levels were constructed by the max pool operation, as described in Section 3. Figure 5 also shows the zoomed-in image of the resolution grid. It can be seen that by removing the horizontal surfaces (roads and roofs of buildings), the LIDAR features for matching become prominent.

Figure 4.

L9 resolution. (a) L6 resolution, (b) L7 resolution, (c) L8 resolution, (d) L9 resolution.

Figure 5.

Level resolution with zoomed-in view at a particular region.

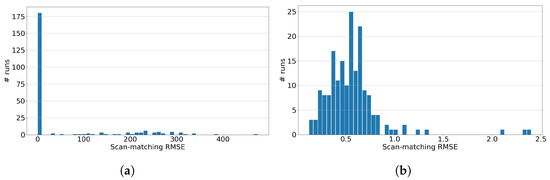

Figure 6a shows the Root-Mean-Squared-Error (RMSE) results of matching individual LIDAR scans from the KITTI odometry dataset sequence ‘05’ with the corresponding map . The histogram is constructed by randomly sampling 350 individual scans from the complete dataset of LIDAR scans of sequence ‘05’. This scan is matched to the map using the multi-resolution scan matching approach discussed in Section 3. The error used in the RMSE is calculated using the total Euclidean distance between all of the scan points transformed by the true pose and the same points transformed by the estimated pose, i.e., where , and are the number of points in the scan, the ground truth transformation matrix and the estimated transformation matrix, respectively. The angular resolution was taken as in the angular range of , the matching resolution was taken as = [1 m, 1 m] and the search space for the translation was taken as the complete range of the map , as in Figure 3b, which is about 400 m in both the x- and y-dimensions. Matching a scan against this full map is computationally demanding and time-consuming. The average wall-clock time for computing the match is 1.6 m, which is not acceptable for real-time performance. Yet the results show strong optimism in matching performance, with the majority of the RMSEs close to zero. Figure 6b shows the same histogram results but zoomed-in the interval [0 m, 2.5 m]; the RMSE is within the chosen matching resolution of 1 m. It can also be seen that the results in Figure 6a show large RMSE for some scans, indicating failed matches. This is often the case when a LIDAR scan is matched against the entire map with multiple sections that look very similar, especially at low-resolution levels. However, it can also be observed that scan-to-map matching succeeds for the majority of cases (>87%). We use OpenMP to parallelize the computation of the matches and use the priority queue from the C++ Standard Template Library (STL) to implement the max heap for the matching algorithm. Note that the implementation may not be optimized for execution time. GPU implementation can make the scan matching much faster.

Figure 6.

RMSE histogram for scan-to-map matching (x-axis units in meters). (a) RMSE histogram, (b) RMSE histogram for .

The computational time and the failed matches can be significantly reduced by searching in a local neighborhood rather than the full map. This reduces the search space for the translation variable . The computational complexity can also be reduced by using a down-sampled scan.

4.5. Particle State Correction

As seen in the previous section, the scan matching process is often computationally more expensive than the particle filter, which only entails simple likelihood computations for each particle. While the ideal case would be to apply scan-to-map matching (Section 3) for every particle, it would be computationally prohibitive and unnecessary. The particle filter thread (in Figure 2) often runs at a higher frame rate (limited by the LIDAR scan frame rate) compared to the slower frame rate of the scan-to-map matching thread. This might make the scan-to-map matching thread useless for any real-time implementation. Despite the slow frame rate of the scan matching process, its high success rate in exactly matching the scan to the map is highly advantageous and should be leveraged. To this end, we propose a two-stage scan-to-map matching process that accounts for the slower frame rate. In the first stage, only the pose for the particle at some time step is used to begin the scan matching process. When the matching process completes at some later time step , the second stage deals with the process of propagating the matched pose from time step to . To improve the success of matching the scan to the map and to reduce the computational time, only the pose of the particle is matched to the map. The scan-to-map matching process that is initiated at time step with pose of the particle will result in the corrected pose at some later time step where , with being the number of time steps required by the S2M procedure. One might be inclined to simply correct the pose of the same particle at time step with the S2M pose computed at time k. While this might be pragmatic when the vehicle is moving slowly, most often this will lead to a low likelihood weight for the particle at time as the vehicle could have moved a significant distance or could have made a significant turn, thus wasting all the computations of the S2M block. The appropriate solution would be to propagate the computed S2M pose at time to the current time . This is performed using relative poses computed from the RPE block from time to as

where is the homogeneous transformation matrix corresponding to the pose . can now be used to update the pose coordinates of at time . Further, the velocity and angular rate estimates from the RPE block are also used to directly replace the corresponding values in . During time no resampling is performed to keep the particle intact. The key assumption of this block is that the relative pose estimates during the duration can be computed reliably and accurately using the GICP algorithm of the RPE block. This allows one to use relative transformation to transform the accurate global pose correction at time to .

5. Simulation

In this section, the proposed approach is validated on the publicly available KITTI odometry dataset. These datasets have ground truth poses that allow for checking convergence and accuracy of the localization and tracking processes. As with most algorithms, the proposed approach is also dependent on tuning parameters such as , , , and resolution for voxel-based downsampling. As it is well established that a very large number of particles will improve the convergence of the particle filter [2], we only use particles in our preliminary simulations to test the performance of localization using fewer particles. The simulations help validate the hypothesis that scan-to-map matching can considerably increase the convergence of the particle filter with just a few particles. We fix the resolution for downsampling at 0.5 m for the LIDAR scans; is taken as 5 m and m. The process noise for state in the dynamical model, described in Section 2.3, is taken as , , , , where represents a diagonal matrix with given entries along the diagonal. The resolution of voxel-downsampling for the LIDAR scan can be chosen to achieve the desired frame rate of the particle filter thread. For the particle filter, the initial particles have positions uniformly sampled in the entire map region; the yaw-pitch-roll angles are sampled from , and , respectively. The angular rates are sampled from a Gaussian probability density function (PDF) with zero mean and variance (0.1 rad/s). The velocity is assumed to be uniformly sampled from , where is the known maximum velocity for the vehicle (used in sequence ‘05’; of the KITTI dataset [32]) or based on the location within the map (speed limits) or the average speed of the vehicle.

The simulation implements the parallel architecture of the particle filter and the scan-to-map matching procedure, as illustrated in Figure 2. As the particle filter is initiated with random particles over the entire map, only a few particles or none of the particles will be close to the true position of the vehicle. As the vehicle moves around in the map, the sequential LIDAR scans are processed by the particle filter thread block and the RPE thread block to estimate the vehicle’s state. Particles close enough to the true vehicle state will eventually be weighted higher by the particle filter. Hence, to achieve successful implementation of the particle filter, a large number of particles are required, especially in , to improve the chances of having particles close enough to the true vehicle state. The S2M block tries to place particles closer to the vehicle state, and thus, the particle filter is able to use fewer particles (just 1000 particles in the simulations). In essence, the computational complexity of using a large number of particles in a conventional particle filter is reduced by using the S2M block with fewer particles.

5.1. Initialization

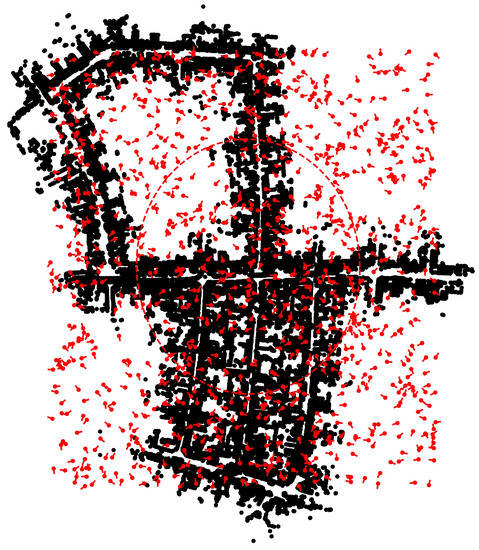

Figure 7 shows the initial time step where the particle filter is initialized over the whole map. The figure shows a top view or BEV of the map. While the positions of the particles are initialized in , only the projection is shown in Figure 7 as red circular markers (or dots) with a small line segment indicating the heading of the particle. As observed, all the particles have random headings and positions, and thus most particles have a very low likelihood. Only particles close enough to the true position of the particle will gain higher weights through higher relative likelihood values. However, with only 1000 particles, the probability of a particle falling close to the true position and also having a close enough heading (pitch and roll) to the true vehicle is very small. The only solution is to have a massive number of particles, especially in , to be close to the vehicle’s pose, which, in turn, makes the particle filter inefficient and even computationally intractable. The dotted red circle in the figure corresponds to the covariance ellipsoid of the position estimated by the particle filter.

Figure 7.

Snapshot at with particles shown as red dots. The red dots correspond to possible vehicle positions and the corresponding red line—segment from each dot represents the heading of the vehicle.

5.2. Simulation Snapshots

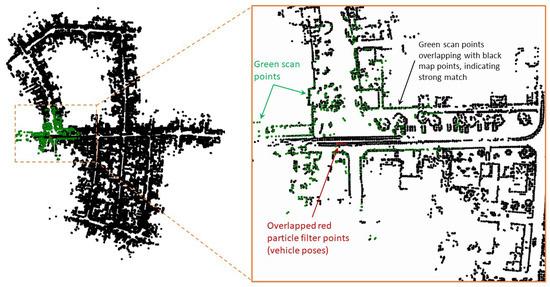

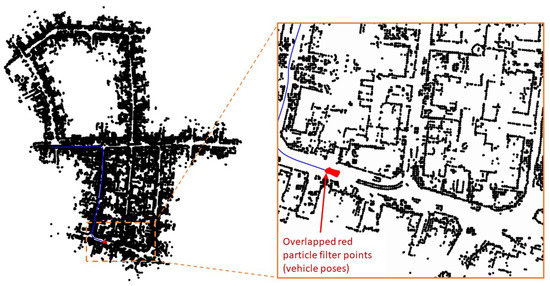

Figure 8 shows the snapshot of the simulation and its corresponding zoomed-in region around the initial position at time step . At time step , the S2M thread begins the scan-to-map matching process for the particle with the highest weight. At , S2M results in a match for the LIDAR scan at . The matched pose is then propagated to the current time step . This matched and propagated pose is used to transform the LIDAR scan at time step from the body-fixed frame to the initial (inertial) frame and is shown using green points in Figure 8. It can be observed that the green points overlap the black map points, indicating a very good match. This suggests that the LIDAR scan at time step was matched accurately, and the relative pose estimates were good enough to propagate the exact match to time step . Once the matched pose estimates are used to update the particle with the highest weight, the likelihood of this particle is recomputed to assign a new weight that is typically the highest on account of a very good match. As a result, the resampling process of the particle filter will resample the particles close to this particle. This can be observed in the zoomed section in Figure 8, where the particles represented by red points are overlapped. The S2M thread again begins at this time step and completes at some later time step ().

Figure 8.

Snapshots of a simulation at .

Figure 9 shows similar snapshots of the simulation at time step . The blue line is the ground truth trajectory of the vehicle. At this time step, there is no result from the S2M thread yet, in which case the conventional particle filter keeps track of the vehicle’s state. Figure 9 also shows a zoomed-in section, where all the particles are close together at the true position of the vehicle, thus providing reliable estimates between S2M thread computations. As such, the particle with the highest weight is often close to the vehicle’s state, thus leading to faster and more successful S2M thread results.

Figure 9.

Snapshots of a simulation at .

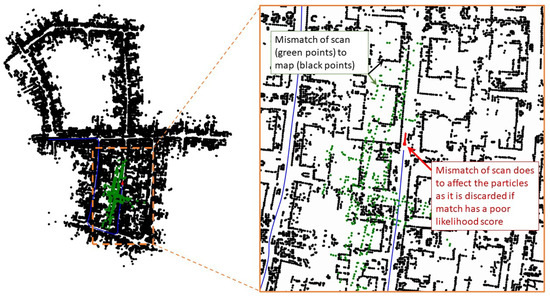

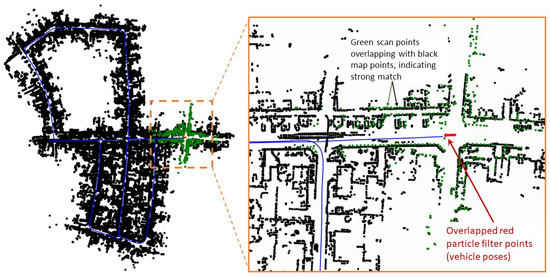

Figure 10 shows the snapshot at time step , where the S2M threads happen to return a result. The matched and propagated result to time step clearly failed, as seen in the zoomed-in section of Figure 10. The S2M matching process can occasionally fail in very dense regions or regions of the map that have similar-looking streets. This match is not accepted to update the pose of the particle, as the corresponding likelihood will significantly lower the weight of the particle compared to its original weight. This simple check can quickly disregard failed matches. Figure 11 shows the snapshot of the simulation toward the end of the map at time step . At this instant, the S2M block, which began at time step , resulted in a perfect match of the LIDAR scan and significantly improved the particle filter.

Figure 10.

Snapshots of a simulation at .

Figure 11.

Snapshots of a simulation at .

5.3. Localization Criteria

The proposed approach for localization can be used to continuously track the vehicle. This is often unnecessary due to the computational complexity of the localization process, and one can switch to conventional tracking filters once the vehicle has been sufficiently localized. In this paper, a vehicle is considered to be localizedif the standard deviation in the coordinates of the particle distribution is less than 10 m for (a) at least 10 consecutive time steps (LIDAR scans), and (b) the vehicle has moved a relative distance of at least 10 m or (c) has turned a relative angle of 30 degrees. Criteria (a) is useful to avoid spurious false positives, where the particle filter localizes to the wrong location with similar-looking LIDAR scans. Criteria (b) and (c) are further necessary to make sure that there is enough diversity in the sequential LIDAR scans. This helps in reducing false positives. If the standard deviation in the position coordinates reduces to less than 10 m but fails the joint criteria of ((a) and ((b) or (c))), the vehicle has localized to a false positive region and the particle distribution is reset to the full map at this time step. This way, the localization procedure continues till the vehicle is localized, after which one can move to tracking mode. Note that tracking is not considered in this paper, as the main focus is on localization. The threshold of 10 m is just chosen for simulation purposes and to indicate localization by bringing the uncertainty of the vehicle’s position from to m. While stricter localization criteria can be considered (<5 m), it is assumed that one can switch to tracking mode and thus avoid the computationally expensive S2M block within m of the true vehicle position. Efficient filters, such as Extended Kalman Filter (EKF) [7] or the Unscented Kalman Filter (UKF) [8], can then be used for tracking as they might be better suited from a computational standpoint than particle filters.

5.4. Localization Performance Metrics

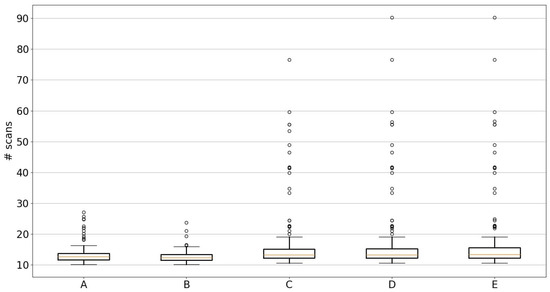

Figure 12 shows the results of the simulation on sequence ‘05’ of the KITTI odometry dataset. As the particles in the particle filter are randomly initialized, the simulations are repeated 250 times to generate statistical box plots of the localization performance. The localization performance is compared using five metrics (labeled as A, B, C, D and E in Figure 12), with units as the number of sequential LIDAR scans (y-axis in Figure 12) from time . Here, the number of time steps and the number of LIDAR scans are used interchangeably, as every time step of the simulation corresponds to a LIDAR scan. There are a total of 2750 LIDAR scans in sequence ‘05’ of the KITTI odometry dataset.

Figure 12.

Localization performance. Metric A: the first time step when vehicle remains localized for the whole simulation without track loss. Metric B: the very first time step at which the vehicle satisfies localization criteria. Metric C: the first time step when the vehicle satisfies localization criteria and is within m of the true vehicle position for 10 consecutive time steps. Metric D: Same as metric C but checks for 50 consecutive time steps. Metric E: Same as metric D but has stricter criteria of m of the true position.

5.4.1. Metric B

The box plot (box and whiskers plot) in Figure 12, labeled as metric ‘B’, shows the number of time steps (or LIDAR scans) required to first achieve localization, i.e., satisfy the localization criteria. It can be observed that the 75th percentile of the box plot is around 14 scans, and the largest outlier is about 24 scans. With 2750 total scans, the vehicle was localized within of the total scans. This indicates very good localization performance of the proposed approach. Once localized, tracking can be performed with more particles or other filters.

5.4.2. Metric A

In some cases, after the vehicle satisfies the localization criteria, the particle filter can occasionally diverge from the true vehicle state. This is because the 1000 particles tend to coalesce around the particle with the highest weight. At this point, any sudden vehicle turn will become difficult to track as there is a lack of diversity among the particles. A straightforward remedy is to significantly increase the number of particles and/or increase process noise in the dynamical model if necessary. Alternatively, to establish the efficacy of the proposed localization approach, we detect loss in localization and reset the process at the same time instant. The loss in localization is detected when the standard deviation of the position’s coordinates exceeds 50 m (indicating a loss in track), even if the S2M block returns an exact match to the true vehicle position. At this point, the particles of the particle filter are re-initialized over the whole map at this time step. This resetting approach will increase the time it takes or, equivalently, the number of scans for localization. Metric ‘A’ in Figure 12 captures this loss in localization over the complete 2750 time steps compared to metric ‘B’. Metric ‘A’ shows the number of scans it took to localize the vehicle according to the aforementioned localization criteria, including occasional resetting of the particle filter when it fails. Metric ‘A’ is slightly above metric ‘B’, indicating that there were a few instances where the particle filter was reset but eventually localized to the true vehicle position. The 75th percentile is about 17, which shows that 75% of cases were localized using only 15 scans (or time steps), though some outlier cases took about 28 scans. Nevertheless, the approach was still able to localize the vehicle after particle filter divergence. The S2M block is able to provide a good match, which subsequently makes the particle filter distribution converge to the true vehicle position.

5.4.3. Metric C

Note that the localization criteria are chosen to emulate real-time conditions and do not use the true ground pose data from the KITTI dataset. The localization criteria only look at the standard deviation of the vehicle’s position estimated by the particle filter. An ideal offline performance metric should check if the localized vehicle’s position is, in fact, close to the true vehicle position. To this end, metrics ‘C’, ‘D’ and ‘E’ in Figure 12 use ground truth data to measure the localization performance. In addition to the localization criteria of ((a) and ((b) or (c))), metric ‘C’ represents the number of scans before the mean position of the vehicle, estimated by the particle filter, is within ±5 m of the vehicle’s true position for 10 consecutive time steps. This is evaluated by finding the time step k for which m for all . The 75th percentile in the box and whiskers plot for metric ‘C’ shows that of the cases were localized to their true vehicle positions for 10 consecutive time steps within 16 time steps. There are some outliers that took 78 time steps (also counted as the number of scans) to reach true localization. It can be observed that there is a wide outlier disparity between metric ‘C’ and metrics ‘A’ or ‘B’. This is because the localization criteria in metric ‘A’ uses a threshold of 10 m for the standard deviation estimated by the particle filter, while metric ‘C’ uses stricter criteria of m of the true position of the vehicle. Even though the standard deviation of the particle filter is reduced, there is a small bias or lag in the mean compared to the true position. This is due to the S2M block, which matches the pose of the LIDAR scan at a previous time step and propagates it to the current time using the vehicle’s relative pose estimates. Once the S2M block corrects the particle with the highest weight, subsequent resampling stages of the particle filter add more particles around this particle. As more particles are pushed closer to the true pose of the vehicle, this bias in the mean estimate is eventually reduced, and thus some outlier cases take about 78 scans to get to the m error of the true position of the vehicle.

5.4.4. Metrics D and E

Metric ‘D’ is similar to metric ‘C’, but it uses 50 consecutive steps to verify that the localization by the particle filter is, in fact, stable, or in other words, time step k is determined for which for all . It can be observed from Figure 12 that both metrics ‘C’ and ‘D’ are similar, implying that the localization to the true position of the vehicle ( m error) was achieved and even remained stable for 50 consecutive time steps. Though the worst case took about 90 scans to reach an error of m. After which the S2M block can be switched off, or other vehicle tracking algorithms can be used efficiently. Finally, metric ‘E’, which is similar to metric ‘D’, has a stricter threshold of m for the error in the mean estimate. Time step k for metric ‘E’ is found when the condition m for all is satisfied. Metrics ‘D’ and ‘E’ look similar, except for the 75th percentile of metric ‘E’ being slightly higher than the 75th percentile of metric ‘D’. This implies that the proposed localization approach is cable of localizing the vehicle to within m of the true vehicle’s position.

5.5. Brief Note on Implementation

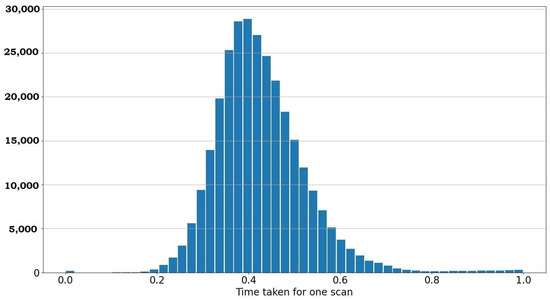

As described in Figure 2, the blocks run in parallel threads to provide fast particle filter estimates coupled with slower but accurate global pose estimates of the S2M block. In the simulations, an Intel i9 processor with eight cores was used to simulate the proposed approach with 32 GB of RAM. Point Cloud Library (PCL) -based implementation was used for the GICP in the RPE block and the second stage of the S2M block. The global nearest point to map distances is implemented using a parallel hash map [22] for fast retrieval. Alternatively, sparse tensors can be used to save and retrieve the distances. The simulation reads the scans from the KITTI database in a sequential loop and the wall-clock time is recorded between scans. This process is repeated over multiple runs using different initial conditions, and the recorded time between scans is shown using the histogram in Figure 13. As the threads run in parallel, the time also includes the time taken by the asynchronous S2M block. It can be observed that the average time to process a scan is 0.4 s, with a Gaussian-like distribution about the mean. It was observed that running the computationally intensive parallel routines on shared resources (CPU, RAM and caches) tends to make the overall process inefficient. It is to be noted that the objective of this paper is primarily to illustrate the validity of the proposed approach and not to establish high-performance implementation. Nevertheless, a distributed architecture using ROS2 [33] framework, where the computationally intensive scan-to-map matching routine is run on a separate machine, will significantly improve frame-per-second (FPS). Further, GPU implementation can significantly improve the scan-to-map matching block and the GICP computations.

Figure 13.

Latency performance for current implementation (x-axis time in units of seconds).

6. Discussion

The results show that the scan-to-map matching process is highly advantageous, even when the corresponding computational complexity is high. The localization approach proposed in this paper attempts to utilize the delayed result of the scan-to-map match by propagating the matched pose to the most recent time instant. Due to errors in relative pose estimation, the propagated match may not be close enough to be used directly for the pose of the vehicle. Hence, the matched pose is only used to update the pose in the particles of the particle filter, which subsequently estimates the vehicle’s full state. This symbiosis of the particle filter and the scan-to-map matching process can robustly localize the vehicle. This eliminates the need for an excessively large number of particles that would often be required in . The approximate frame rate achieved is only 2.5 Hz (1/0.4 s), which might be low for some applications. One approach is to decimate the LIDAR scan to a few 100 s or 1000 s of points to improve the likelihood of computational times. However, we observed that, in dense maps like the KITTI dataset, decimation, in fact, degraded performance and increased the failure of the particle filter. This is because the decimated LIDAR point cloud for dense maps tend to have large false positive neighrest neighbors. In our future work, we will implement a GPU version of scan-to-map matching running on separate machines with dedicated RAM and computing cores using the ROS2 framework.

7. Conclusions

The problem of the LIDAR-only localization and tracking approach was addressed by a hybrid approach of particle filtering and scan-to-map matching. It was observed that with fewer particles (∼1000), the approach was able to localize the vehicle to within m of the true vehicle position and within approximately 20 scans. Further, the intermittent loss in tracking by the particle filter was corrected by the matching block leading to robust tracking performance. Though scan-to-map matching is considerably slower than the conventional particle filter for real-time performance, the proposed asynchronous approach utilizes the scan-to-map matching results as they are often highly accurate in localizing the vehicle. This combined approach of particle filter and scan-to-map matching process had a latency of 0.4 s, suitable for most real-time applications. While the efficacy of the proposed approach was illustrated on well-structured KITTI odometry datasets, it is anticipated that these results provide strong optimism in developing high-performance GPU implementations that can be used for general urban areas and unstructured regions. Future work will assess the inclusion of IMU and vision sensors to further accelerate the localization process.

Funding

This research received no external funding.

Conflicts of Interest

The author declares no conflict of interest.

References

- Montemerlo, M.; Thrun, S.; Koller, D.; Wegbreit, B. FastSLAM 2.0: An improved particle filtering algorithm for simultaneous localization and mapping that provably converges. In Proceedings of the IJCAI, Acapulco, Mexico, 9–15 August 2003; Volume 3, pp. 1151–1156. [Google Scholar]

- Blanco-Claraco, J.L.; Mañas-Alvarez, F.; Torres-Moreno, J.L.; Rodriguez, F.; Gimenez-Fernandez, A. Benchmarking particle filter algorithms for efficient velodyne-based vehicle localization. Sensors 2019, 19, 3155. [Google Scholar] [CrossRef] [PubMed]

- Marchetti, L.; Grisetti, G.; Iocchi, L. A Comparative Analysis of Particle Filter Based Localization Methods. In RoboCup 2006: Robot Soccer World Cup X; Lakemeyer, G., Sklar, E., Sorrenti, D.G., Takahashi, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2007; pp. 442–449. [Google Scholar]

- Zhang, Q.B.; Wang, P.; Chen, Z.H. An improved particle filter for mobile robot localization based on particle swarm optimization. Expert Syst. Appl. 2019, 135, 181–193. [Google Scholar] [CrossRef]

- Wang, Y.; Chao, W.L.; Garg, D.; Hariharan, B.; Campbell, M.; Weinberger, K.Q. Pseudo-lidar from visual depth estimation: Bridging the gap in 3d object detection for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8445–8453. [Google Scholar]

- Kalman, R. A new approach to linear filtering and prediction problems. ASME J. Basic Eng. 1960, 82, 35–45. [Google Scholar] [CrossRef]

- Anderson, B.D.O.; Moore, J.B. (Eds.) Optimal Filtering; Prentice-Hall: Englewood Cliffs, NJ, USA, 1979. [Google Scholar]

- Julier, S.; Uhlmann, J.; Durrant-Whyte, H. A New Method for the Nonlinear Transformation of Means and Covariances in Filters and Estimators. IEEE Trans. Autom. Control 2000, AC-45, 477–482. [Google Scholar] [CrossRef]

- Ito, K.; Xiong, K. Gaussian filters for nonlinear filtering problems. IEEE Trans. Autom. Control 2000, 45, 910–927. [Google Scholar] [CrossRef]

- Adurthi, N.; Singla, P. Conjugate Unscented Transformation Based Approach for Accurate Conjunction Analysis. J. Guid. Control Dyn. 2015, 38, 1642–1658. [Google Scholar] [CrossRef]

- Arulampalam, M.; Maskell, S.; Gordon, N.; Clapp, T. A tutorial on particle filters for online nonlinear/non-Gaussian Bayesian tracking. Signal Process. IEEE Trans. 2002, 50, 174–188. [Google Scholar] [CrossRef]

- Levinson, J.; Montemerlo, M.; Thrun, S. Map-based precision vehicle localization in urban environments. In Proceedings of the Robotics: Science and Systems, Atlanta, GA, USA, 27–30 June 2007; Volume 4, p. 1. [Google Scholar]

- Thrun, S. Probabilistic robotics. Commun. ACM 2002, 45, 52–57. [Google Scholar] [CrossRef]

- Rabe, J.; Stiller, C. Robust particle filter for lane-precise localization. In Proceedings of the 2017 IEEE International Conference on Vehicular Electronics and Safety (ICVES), Vienna, Austria, 27–28 June 2017; pp. 127–132. [Google Scholar]

- Kümmerle, R.; Grisetti, G.; Strasdat, H.; Konolige, K.; Burgard, W. g 2 o: A general framework for graph optimization. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 3607–3613. [Google Scholar]

- Ila, V.; Polok, L.; Solony, M.; Svoboda, P. SLAM++-A highly efficient and temporally scalable incremental SLAM framework. Int. J. Robot. Res. 2017, 36, 210–230. [Google Scholar] [CrossRef]

- Yoneda, K.; Tehrani, H.; Ogawa, T.; Hukuyama, N.; Mita, S. Lidar scan feature for localization with highly precise 3-D map. In Proceedings of the 2014 IEEE Intelligent Vehicles Symposium Proceedings, Ypsilanti, MI, USA, 8–11 June 2014; pp. 1345–1350. [Google Scholar]

- Moosmann, F.; Stiller, C. Velodyne slam. In Proceedings of the 2011 IEEE Intelligent Vehicles Symposium (iv), Baden-Baden, Germany, 5–9 June 2011; pp. 393–398. [Google Scholar]

- Dubé, R.; Dugas, D.; Stumm, E.; Nieto, J.; Siegwart, R.; Cadena, C. Segmatch: Segment based place recognition in 3d point clouds. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 5266–5272. [Google Scholar]

- Choi, J. Hybrid map-based SLAM using a Velodyne laser scanner. In Proceedings of the 17th International IEEE Conference on Intelligent Transportation Systems (ITSC), Qingdao, China, 8–11 October 2014; pp. 3082–3087. [Google Scholar]

- Jazwinski, A.H. Stochastic Processes and Filtering Theory; Andrew, H.J., Ed.; Academic Press: New York, NY, USA, 1970. [Google Scholar]

- Popovitch, G. The Parallel Hashmap. Available online: https://github.com/greg7mdp/parallel-hashmap (accessed on 29 March 2023).

- Olson, E. M3RSM: Many-to-many multi-resolution scan matching. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 5815–5821. [Google Scholar]

- Hess, W.; Kohler, D.; Rapp, H.; Andor, D. Real-time loop closure in 2D LIDAR SLAM. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1271–1278. [Google Scholar]

- Segal, A.; Haehnel, D.; Thrun, S. Generalized-icp. In Proceedings of the Robotics: Science and Systems, Seattle, WA, USA, 28 June–2 July 2009; Volume 2, p. 435. [Google Scholar]

- Zhang, J.; Yao, Y.; Deng, B. Fast and Robust Iterative Closest Point. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3450–3466. [Google Scholar] [CrossRef] [PubMed]

- De Boor, C.; De Boor, C. A Practical Guide to Splines; Springer: New York, NY, USA, 1978; Volume 27. [Google Scholar]

- Xiao, L.; Wang, R.; Dai, B.; Fang, Y.; Liu, D.; Wu, T. Hybrid conditional random field based camera-LIDAR fusion for road detection. Inf. Sci. 2018, 432, 543–558. [Google Scholar] [CrossRef]

- Rummelhard, L.; Paigwar, A.; Nègre, A.; Laugier, C. Ground estimation and point cloud segmentation using spatiotemporal conditional random field. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 1105–1110. [Google Scholar]

- Oliveira, G.L.; Burgard, W.; Brox, T. Efficient deep models for monocular road segmentation. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 4885–4891. [Google Scholar]

- Caltagirone, L.; Scheidegger, S.; Svensson, L.; Wahde, M. Fast LIDAR-based road detection using fully convolutional neural networks. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (iv), Los Angeles, CA, USA, 11–14 June 2017; pp. 1019–1024. [Google Scholar]

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for Autonomous Driving? The KITTI Vision Benchmark Suite. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012. [Google Scholar]

- Macenski, S.; Foote, T.; Gerkey, B.; Lalancette, C.; Woodall, W. Robot Operating System 2: Design, architecture, and uses in the wild. Sci. Robot. 2022, 7, eabm6074. [Google Scholar] [CrossRef] [PubMed]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).