1. Introduction

In recent years, remote sensing data have been widely applied in various fields. For instance, MODIS data include information on vegetation coverage, atmospheric conditions, and surface temperature, providing researchers with a vast amount of spectral data for Earth studies. However, certain bands of the Level-1 and Level-2 MODIS products publicly available on NASA's official website exhibit prominent stripe noise. These stripe patterns can be broadly classified into two categories: periodic and non-periodic. Periodic stripes arise primarily because data from multiple detectors must be stitched together to obtain a sufficiently large focal plane, and the detectors differ in radiometric response [1]. In addition, the mechanical motion of the sensor itself introduces unavoidable interference and errors. Detector-to-detector and mirror-side stripes are two representative examples of periodic stripe noise [2]. Non-periodic stripe noise, on the other hand, appears as random patterns of uncertain length and position. It is strongly influenced by the spectral distribution and by temperature, which makes post-processing of the data more demanding. Beyond these factors, many other sources contribute to stripe noise in remote sensing imagery, and this makes its effective removal a challenging problem.
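The detector-to-detector and mirror-side mechanisms described above can be illustrated with a small simulation. This is a hypothetical sketch, not a sensor model. It assumes three things: row r is read by detector r mod n_det (10 detectors, as for the MODIS 1 km bands); each detector has its own gain and offset; and successive scans alternate between two mirror sides with a slight gain difference. All function names and parameter values are illustrative.

```python
import random

def simulate_detector_striping(image, n_det=10, gain_sd=0.02, offset_sd=2.0,
                               mirror_gain=1.01, seed=0):
    """Hypothetical periodic-stripe model for a clean image given as a list of rows.

    Row r is assumed to be read by detector r % n_det; consecutive scans of n_det
    rows alternate between two mirror sides, the second with a slightly higher gain.
    """
    rng = random.Random(seed)
    gains = [1.0 + rng.gauss(0.0, gain_sd) for _ in range(n_det)]
    offsets = [rng.gauss(0.0, offset_sd) for _ in range(n_det)]
    striped = []
    for r, row in enumerate(image):
        d = r % n_det               # detector that recorded this row
        side = (r // n_det) % 2     # mirror side used for this scan
        g = gains[d] * (mirror_gain if side == 1 else 1.0)
        striped.append([g * v + offsets[d] for v in row])
    return striped

# A flat 40 x 8 scene: the row means repeat every 2 * n_det = 20 rows.
flat = [[100.0] * 8 for _ in range(40)]
out = simulate_detector_striping(flat)
row_means = [sum(row) / len(row) for row in out]
print([round(m, 2) for m in row_means[:20]])
```

The gains and offsets repeat with the detector index, so the row means of a flat scene form a periodic pattern. This is the kind of structure that appears as distinct peaks in a column power spectrum.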

Hence, many researchers have worked on removing stripe noise to improve the quality of remote sensing images and have proposed numerous effective destriping methods. These methods can be grouped into four main categories: filter-based, statistical-based, model-optimization-based, and deep-learning-based methods. Filter-based methods primarily use filters of different sizes to eliminate stripe noise [3,4,5,6,7]. For example, in [3], Münch et al. proposed a filtering method for removing horizontal or vertical stripes based on a joint wavelet and Fourier analysis. In [5], Cao et al. used wavelet decomposition to separate remote sensing images into different scales and applied one-dimensional guided filtering for destriping. Statistical-based methods repair striped images from the perspective of detector response and rely on the assumption of data similarity [8,9,10,11]. In [10], Carfantan et al. established a linear relationship through the gain estimation of detectors and proposed a statistical linear destriping (SLD) method for push-broom satellite imaging systems. Additionally, to remove irregular stripes from MODIS data, Shen et al. introduced a method based on local statistics and expected information [11]. With the continuous development of deep learning, it has been widely applied across image processing, and deep-learning-based destriping algorithms have also made progress [12,13,14,15,16]. Chang et al. designed HSI-DeNet, a CNN that extracts spectral and spatial information to remove stripe noise from hyperspectral images [13]. Huang et al. proposed a dual-network fusion denoising convolutional neural network that simultaneously removes random noise and stripe noise [16].

Model-optimization-based methods are currently regarded as among the most effective approaches. These methods use the prior and texture features of images to construct energy functionals with different regularization terms, and they obtain the final clean image by solving the resulting optimization problem. In 2010, Bouali et al. proposed the unidirectional variational (UTV) model, which exploits the directional characteristics of stripe noise [2]. This method achieved significant results in destriping remote sensing images; however, it is prone to losing fine details. Consequently, researchers began to exploit the sparsity [17] and low rank [18] of the image itself to address the limitations of this method mathematically, and many improved variants of UTV were developed. In [19], Zhou et al. proposed an adaptive coefficient unidirectional variational optimization model by replacing the

norm in the regularization term with the

norm. In [20], Liu et al. utilized the

norm to characterize the global sparsity of stripe noise, effectively separating the stripe noise and achieving better destriping results. Chang et al. introduced the low-rank and sparse image decomposition (LRSID) model based on the low-rank property of a single image and extended the algorithm from 2D images to 3D hyperspectral images [21]. Compared with the original UTV method, these improved algorithms show significantly better destriping performance.
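For reference, the UTV functional of [2] is commonly written as below for horizontal stripes. Here x runs along the stripes and y across them (this orientation is our assumption), f is the observed image, u the destriped image, and λ a weight. The ℓp penalty discussed in this section is also shown for a stripe component s:

$$E(u)=\int_{\Omega}\left|\frac{\partial (u-f)}{\partial x}\right|\,\mathrm{d}x\,\mathrm{d}y+\lambda\int_{\Omega}\left|\frac{\partial u}{\partial y}\right|\,\mathrm{d}x\,\mathrm{d}y$$

$$\|\mathbf{s}\|_p^p=\sum_{i}\left|s_i\right|^p,\qquad 0<p<1$$

The first term keeps the removed stripe component f - u nearly constant along the stripes, and the second is a total variation term across them. As p approaches 0, the sum counts the non-zero entries of s (the ℓ0 "norm"), and p = 1 gives the ℓ1 norm. For 0 < p < 1 the triangle inequality fails, which is why ‖·‖p is called a quasinorm.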

In summary, existing destriping methods can remove stripe noise effectively, but there is still considerable room for improvement. Filter-based and statistical-based methods achieve good results on periodic stripes, but they often fail to deliver satisfactory results on complex non-periodic stripes. Deep-learning-based methods place high demands on dataset preparation and on the choice of loss function, and their applicability is limited to specific scenarios. Model-optimization-based methods are more broadly applicable, but they still have limitations. Many optimization models, for instance, consider the sparse structure of stripe noise but do not exploit it thoroughly enough. As a result, detailed information from the underlying image is lost during stripe removal, so it is essential to select norms that better characterize the relevant sparsity. In addition, some optimization models impose overly intricate regularization constraints. Although these can improve destriping, the large number of parameters makes parameter tuning remarkably difficult. Simpler and more reasonable regularization constraints are therefore important for effective parameter configuration.

In this paper, we propose a new destriping model based on the $\ell_p$ quasinorm and unidirectional variation to overcome the limitations of previous methods. The model fully considers the prior characteristics of remote sensing images, as well as the directional and structural properties of stripe noise. We introduce the $\ell_p$ quasinorm to characterize the global sparsity of stripe noise and the local sparsity of the vertical image gradients. This quasinorm yields sparser solutions than the

and

norms, which leads to better stripe noise removal. In addition, the model uses the

norm to capture the local sparsity of the horizontal gradient of stripe noise, which further prevents the loss of image details during destriping. To solve the model, we adopt the fast alternating direction method of multipliers (ADMM) [22], which transforms the complex non-convex problem into simple subproblems. The fast ADMM algorithm converges faster and requires less computation time than the traditional ADMM algorithm. The overall framework of the algorithm is presented in Figure 1. Finally, we conducted extensive experiments comparing the proposed method with six classical methods. The proposed method achieves superior stripe noise removal and demonstrates good robustness. The contributions of this work can be summarized as follows:

- (1) We use the gradient information obtained from remote sensing image decomposition to design regularization constraints in different directions, effectively avoiding the ripple effect during destriping.
- (2) The $\ell_p$ quasinorm is introduced into the proposed model to better capture the relevant sparsity properties, thereby preserving more fine details of the underlying image.
- (3) The fast ADMM algorithm is employed to solve the destriping model. It reduces the computation time, enabling efficient processing of large-scale data.
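Contributions (2) and (3) rest on two computational ingredients: an ℓp proximal step and the fast ADMM iteration. Both can be sketched on a 1D toy problem. This is not the model of this paper. It minimizes 0.5‖u - f‖² + μ‖Du‖p^p for a single cross-track profile f, with D the forward difference.

* The ℓp step uses the generalized soft-thresholding (GST) rule of Zuo et al. (2013).
* The acceleration with restart follows the fast ADMM of Goldstein et al. (2014).

Whether either matches the solver used here or in [22] is an assumption, as are all function names and parameter values (τ = 1, η = 0.999).

```python
import math

def gst(y, lam, p, iters=10):
    """Generalized soft-thresholding (Zuo et al.): argmin_x 0.5*(x - y)**2 + lam*|x|**p."""
    if lam == 0.0:
        return y
    base = 2.0 * lam * (1.0 - p)
    thresh = base ** (1.0 / (2.0 - p)) + lam * p * base ** ((p - 1.0) / (2.0 - p))
    if abs(y) <= thresh:
        return 0.0
    x = abs(y)
    for _ in range(iters):  # fixed-point iteration x = |y| - lam * p * x**(p - 1)
        x = abs(y) - lam * p * x ** (p - 1.0)
    return math.copysign(x, y)

def solve_tridiag(sub, diag, sup, rhs):
    """Thomas algorithm for a tridiagonal system (sub[0] and sup[-1] are unused)."""
    n = len(diag)
    cp, dp = [0.0] * n, [0.0] * n
    cp[0], dp[0] = sup[0] / diag[0], rhs[0] / diag[0]
    for i in range(1, n):
        m = diag[i] - sub[i] * cp[i - 1]
        cp[i] = sup[i] / m if i < n - 1 else 0.0
        dp[i] = (rhs[i] - sub[i] * dp[i - 1]) / m
    x = [0.0] * n
    x[-1] = dp[-1]
    for i in range(n - 2, -1, -1):
        x[i] = dp[i] - cp[i] * x[i + 1]
    return x

def dt(w):
    """Apply D^T, where (Du)_i = u[i+1] - u[i] is the forward difference."""
    n = len(w) + 1
    return [(w[i - 1] if i > 0 else 0.0) - (w[i] if i < n - 1 else 0.0) for i in range(n)]

def objective(u, f, mu, p=1.0):
    """0.5*||u - f||^2 + mu*sum_i |u[i+1] - u[i]|**p."""
    fit = 0.5 * sum((a - b) ** 2 for a, b in zip(u, f))
    return fit + mu * sum(abs(u[i + 1] - u[i]) ** p for i in range(len(u) - 1))

def fast_admm_tv(f, mu, p=1.0, tau=1.0, eta=0.999, iters=300):
    """Fast ADMM with restart for min_u 0.5*||u - f||^2 + mu*||Du||_p^p, split as v = Du."""
    n = len(f)
    diag = [1.0 + tau] + [1.0 + 2.0 * tau] * (n - 2) + [1.0 + tau]  # I + tau * D^T D
    off = [-tau] * n
    v_prev, lam_prev = [0.0] * (n - 1), [0.0] * (n - 1)
    v_hat, lam_hat = v_prev, lam_prev
    alpha, c_prev = 1.0, math.inf
    u = list(f)
    for _ in range(iters):
        # u-step: (I + tau D^T D) u = f + D^T (lam_hat + tau * v_hat)
        w = [l + tau * v for l, v in zip(lam_hat, v_hat)]
        u = solve_tridiag(off, diag, off, [a + b for a, b in zip(f, dt(w))])
        du = [u[i + 1] - u[i] for i in range(n - 1)]
        # v-step: l_p proximal step with weight mu / tau
        v = [gst(d - l / tau, mu / tau, p) for d, l in zip(du, lam_hat)]
        lam = [l + tau * (a - d) for l, a, d in zip(lam_hat, v, du)]
        c = (sum((a - b) ** 2 for a, b in zip(lam, lam_hat)) / tau
             + tau * sum((a - b) ** 2 for a, b in zip(v, v_hat)))
        if c < eta * c_prev:  # accept: Nesterov-style momentum
            alpha_next = (1.0 + math.sqrt(1.0 + 4.0 * alpha ** 2)) / 2.0
            beta = (alpha - 1.0) / alpha_next
            v_hat = [a + beta * (a - b) for a, b in zip(v, v_prev)]
            lam_hat = [a + beta * (a - b) for a, b in zip(lam, lam_prev)]
            c_prev = c
        else:  # restart: drop momentum and fall back to the previous iterate
            alpha_next = 1.0
            v_hat, lam_hat = v_prev, lam_prev
            c_prev = c_prev / eta
        v_prev, lam_prev, alpha = v, lam, alpha_next
    return u

# Toy cross-track profile: a step of height 5 plus an alternating "stripe" of +/-0.3.
f = [0.3 * (-1) ** i + (5.0 if i >= 10 else 0.0) for i in range(20)]
u = fast_admm_tv(f, mu=1.0)
print(objective(f, f, 1.0), objective(u, f, 1.0))
```

With p = 1 the toy problem is convex (1D total variation), and the iteration converges. For 0 < p < 1 it is non-convex, and the same loop is only a heuristic. The restart branch resets the momentum whenever the combined residual fails to decrease by the factor η.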

The remaining sections are organized as follows. Section 2 introduces the background and research related to the proposed method. Section 3 explains the proposed stripe noise removal model and its solution in detail. In Section 4, we conduct extensive experiments and compare the proposed method with six different approaches. The experimental results are analyzed and discussed in Section 5. Finally, Section 6 presents the conclusions of this study.

4. Experiment Results

To validate the effectiveness and generalizability of the proposed method, we tested it separately on simulated and real stripe noise. A large number of comparative experiments were carried out to evaluate its performance on different types of stripe noise. Six typical destriping methods were used for comparison. Among them, WAFT [3] and WLS [37] are filter-based and statistical-based methods. UTV [2] is a model optimization method based on the $\ell_1$ norm. SAUTV [19] is an earlier model optimization algorithm based on the

norm, while GSLV [27] and RBSUTV [38] are more advanced model optimization methods proposed in recent years. All experiments were conducted on a personal computer with an Intel(R) Core(TM) i7-6700 CPU @ 3.40 GHz and 16 GB RAM, using MATLAB R2022b.

To evaluate the methods, this study combined subjective and objective assessments, which makes the evaluation more convincing. For subjective evaluation, the effectiveness of each method in removing stripes can be observed directly from the restored remote sensing images and the corresponding stripe noise maps. We also zoomed in on some areas with noticeable differences and marked them with red boxes in the images. Furthermore, we plotted the mean cross-track profiles and mean column power spectra of the restored images to better show the differences among the methods. For objective evaluation, we used different metrics for simulated and real data. For the simulated data, the original images are available, so we used the peak signal-to-noise ratio (PSNR) and structural similarity (SSIM) [39] as reference metrics. For the real data, we employed two no-reference metrics commonly used in stripe noise removal: mean relative deviation (MRD) [40,41] and inverse coefficient of variation (ICV) [20,42].
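The two curves used for subjective comparison can be computed as below. This is a minimal sketch that assumes horizontal stripes, so each image row is one cross-track line. The mean cross-track profile is then the mean of each row. The mean column power spectrum averages |DFT|² of every column over all columns, with frequency in cycles per pixel. The function names are illustrative, and a naive DFT is used only to keep the example dependency-free.

```python
import cmath

def mean_cross_track_profile(image):
    """Mean of every row (one value per cross-track line; horizontal stripes assumed)."""
    return [sum(row) / len(row) for row in image]

def mean_column_power_spectrum(image):
    """Column-averaged one-sided power spectrum |DFT|^2 along the rows.

    Returns (frequencies in cycles/pixel, power). A naive O(rows^2) DFT is used
    for clarity; numpy.fft.rfft would normally replace the inner loops.
    """
    n_rows, n_cols = len(image), len(image[0])
    half = n_rows // 2
    power = [0.0] * (half + 1)
    for c in range(n_cols):
        col = [image[r][c] for r in range(n_rows)]
        for k in range(half + 1):
            s = sum(col[r] * cmath.exp(-2j * cmath.pi * k * r / n_rows)
                    for r in range(n_rows))
            power[k] += abs(s) ** 2 / n_cols
    freqs = [k / n_rows for k in range(half + 1)]
    return freqs, power

# 40 x 4 flat scene with square-wave stripes: 5 bright rows in every 10.
img = [[50.0 + (10.0 if r % 10 < 5 else 0.0)] * 4 for r in range(40)]
freqs, power = mean_column_power_spectrum(img)
peak = max(range(1, len(power)), key=lambda k: power[k])  # skip the DC term
print(freqs[peak])  # 0.1
```

In the example, the square-wave stripes have a period of 10 rows. The strongest non-DC component is therefore at 0.1 cycles/pixel, with weaker odd harmonics. This is the same kind of peak structure discussed for the periodic-stripe power spectra.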

4.1. Simulated Data Experiments

In this section, we conducted extensive simulation experiments to validate the superiority of the algorithm. As shown in Figure 4, we selected six remote sensing images captured by different sensors for the simulated stripe noise experiments. Figure 4a,b,f are MODIS Level-1B calibrated radiance products, which can be downloaded from NASA's official website [43]. Figure 4c is a hyperspectral image captured by the Tiangong-1 satellite. Figure 4d is a hyperspectral image of the Washington DC Mall, available from the relevant website [44]. Figure 4e was captured by the VIIRS sensor and is part of the “Earth at Night” collection, which can also be obtained from NASA's official website [45].

In the experiments, the simulated stripe noise falls into two main categories: periodic and non-periodic. Following the ideas presented in [31], we verified the effectiveness and robustness of the proposed method by adding stripes of different intensities and ratios. During destriping, the remote sensing image was processed in MATLAB as an 8-bit matrix, so the intensity of the stripe noise could be chosen within [0, 255]. The ratio is the number of rows containing stripe noise divided by the total number of rows in the image matrix, and it can be chosen within [0, 1]. Additionally, to allow a better comparison of the destriping effects of different methods, we normalized the stripe noise. In the simulated experiments, we employed two important full-reference metrics, PSNR and SSIM, computed as follows:

$$\mathrm{PSNR}=10\log_{10}\frac{255^{2}}{\frac{1}{N}\sum_{i=1}^{N}\left(g_i-u_i\right)^{2}},$$

where $g$ represents the original image, $u$ represents the destriped image, and $N$ represents the total number of pixels in the image.

$$\mathrm{SSIM}(g,u)=\frac{\left(2\mu_g\mu_u+C_1\right)\left(2\sigma_{gu}+C_2\right)}{\left(\mu_g^{2}+\mu_u^{2}+C_1\right)\left(\sigma_g^{2}+\sigma_u^{2}+C_2\right)},$$

where $\mu_g$ and $\mu_u$ denote the pixel means of images $g$ and $u$, respectively; $\sigma_{gu}$ denotes the covariance between $g$ and $u$; $\sigma_g^{2}$ and $\sigma_u^{2}$ denote the variances of $g$ and $u$, respectively; and $C_1$ and $C_2$ are small constants that stabilize the division.
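The simulation protocol above can be sketched as follows. The exact procedure of [31] is not given here, so the details are assumptions:

* In periodic mode, the first round(ratio × period) rows of every period each receive an offset drawn uniformly from [-intensity, intensity], and the same offsets repeat in every period.
* In non-periodic mode, round(ratio × rows) randomly chosen rows each get their own offset.

Values are clipped to [0, 255], and PSNR follows the formula above with a peak of 255. Function names and the period of 10 rows are illustrative.

```python
import math
import random

def add_stripes(image, intensity, ratio, periodic=True, period=10, seed=0):
    """Add horizontal stripes to an 8-bit image given as a list of rows.

    Returns the striped image (clipped to [0, 255]) and the sorted striped-row indices.
    """
    rng = random.Random(seed)
    n_rows = len(image)
    if periodic:
        k = round(ratio * period)                       # striped rows per period
        base = [rng.uniform(-intensity, intensity) for _ in range(k)]
        offsets = {r: base[r % period] for r in range(n_rows) if r % period < k}
    else:
        chosen = rng.sample(range(n_rows), round(ratio * n_rows))
        offsets = {r: rng.uniform(-intensity, intensity) for r in chosen}
    striped = [[min(255.0, max(0.0, v + offsets.get(r, 0.0))) for v in row]
               for r, row in enumerate(image)]
    return striped, sorted(offsets)

def psnr(g, u, peak=255.0):
    """PSNR in dB between the original image g and the destriped image u."""
    sq = [(a - b) ** 2 for row_g, row_u in zip(g, u) for a, b in zip(row_g, row_u)]
    mse = sum(sq) / len(sq)
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)

clean = [[128.0] * 16 for _ in range(40)]
noisy, rows = add_stripes(clean, intensity=20, ratio=0.3)
print(rows, round(psnr(clean, noisy), 2))
```

SSIM is usually computed with local windows rather than a single global window. In Python, `skimage.metrics.structural_similarity(g, u, data_range=255)` from scikit-image is one readily available implementation.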

4.1.1. Periodic Stripe Noise

For periodic stripe noise, we selected three remote sensing images and added stripe noise of varying intensities and ratios. The restored images in Figure 5 show that all methods effectively removed the stripe noise. However, WAFT introduced ripple artifacts into the denoised image, while UTV and SAUTV blurred the edge details of the recovered image. The other three methods and the proposed method performed well, with no significant visual differences among them.

In Figure 6, the denoising results of most methods are similar to those in Figure 5. The exception is WLS, whose denoised images still exhibit noticeable stripes. Because we added stripe noise with different ratios to the two images, the stripes have different frequencies. WLS could not accurately compute the local linear relationship between the image and the stripe noise through guided filtering, which led to incomplete stripe removal. This indicates that filter-based and statistical-based destriping algorithms are not universally applicable. Figure 7 presents the stripe noise estimated from Figure 6. For the model optimization algorithms, it can be observed that whether for UTV, which is based on the $\ell_1$ norm, or for SAUTV, GSLV, and RBSUTV, which are based on the

norm, a relatively large amount of underlying image information is removed along with the stripes. In contrast, the proposed method removes the least underlying image detail. This indicates that the $\ell_p$ quasinorm characterizes the sparse nature of stripe noise better than the

and

norms.

To distinguish the subtle differences between methods, we increased the ratio of periodic stripes in Figure 8 and plotted the corresponding metric curves. Figure 9 presents the mean cross-track profiles of the destriping results of each method. Some methods show no significant visual difference from the proposed method, yet the mean cross-track profile of the proposed method is noticeably closer to that of the original image. Except for the proposed method, the mean cross-track profiles of all methods deviate from the original image at the peaks. This also indicates that the

and

norms inadequately capture the sparse characteristics of stripe noise, resulting in the loss of detail information during the process of destriping.

Figure 10 displays the mean column power spectrum of each method. The main frequencies of the stripe noise are concentrated around 0.1, 0.2, 0.3, and 0.4, consistent with the periodic stripes that were added. Around a frequency of 0.05, the curve of the proposed method fits the original curve more closely than those of the other methods, indicating that the proposed method preserves details better.

Furthermore, Table 1 shows that WAFT and WLS perform well at low stripe levels, but their ability to remove the noise deteriorates rapidly as the stripe level increases. Consistent with Figure 6 and Figure 7, UTV, SAUTV, and GSLV all lose some details of the underlying image when removing stripe noise, which leads to lower PSNR and SSIM values than those of the proposed method. Owing to its specific characteristics, RBSUTV performs better than the proposed method on low-level stripe noise, but it performs poorly on high-level stripe noise. Overall, the proposed method outperforms the other methods in most cases and is robust. Where it does not surpass RBSUTV, the difference between the two is minimal.

4.1.2. Non-periodic Stripe Noise

For non-periodic stripe noise, we again selected three examples, with the results shown in Figure 11, Figure 12, Figure 13, Figure 14, Figure 15 and Figure 16. Among them, Figure 11, Figure 12 and Figure 14 show the results of removing different levels of non-periodic stripe noise. Overall, the results are similar to those for periodic stripe noise, but several points are worth noting. In Figure 11, SAUTV and RBSUTV mistakenly treat horizontal streets as stripe noise and remove them. These edge structures closely resemble stripe noise and are therefore easily misprocessed, so global sparsity must be taken into account during destriping. Additionally, because the simulated image in Figure 12 is smooth overall, the estimated stripe noise in Figure 13 contains less detail from the underlying image. This suggests that the image content itself also affects the destriping capability of an algorithm.

In Figure 15, the mean cross-track profiles under non-periodic stripe noise appear more chaotic than under periodic stripes. Additionally, the mean column power spectra in Figure 16 show that the frequencies of non-periodic stripe noise are dispersed rather than concentrated. As a result, non-periodic stripe noise strongly affects filter-based and statistical-based destriping methods. Furthermore, the PSNR and SSIM values in Table 2 show that the performance of WAFT and WLS drops noticeably compared with periodic stripes, and the other algorithms also decline slightly. This indicates that destriping becomes more difficult when non-periodic stripe noise is present. However, the overall trends remain consistent with those for periodic stripe noise, and the proposed method still outperforms the other algorithms.

4.2. Real Data Experiments

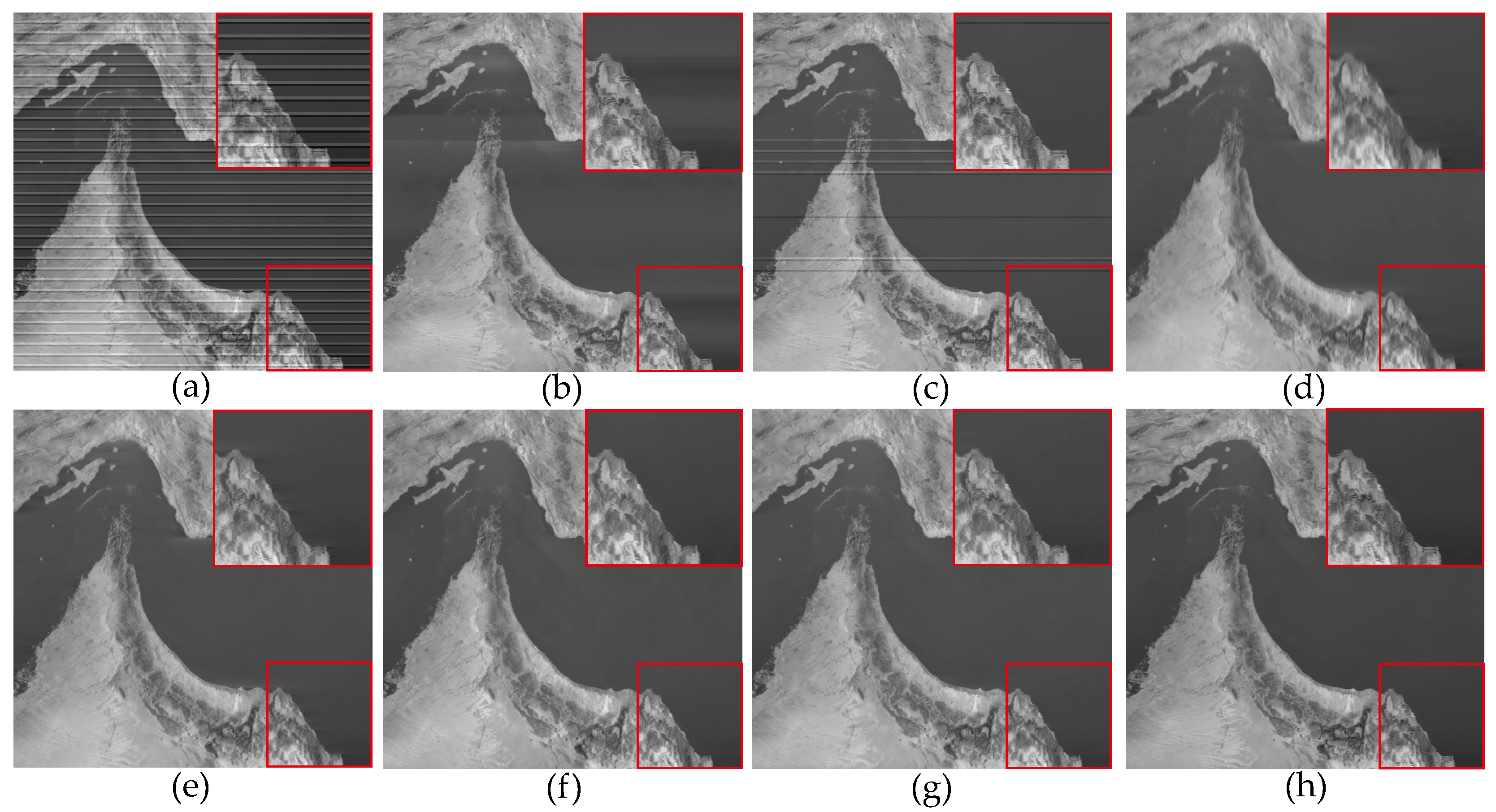

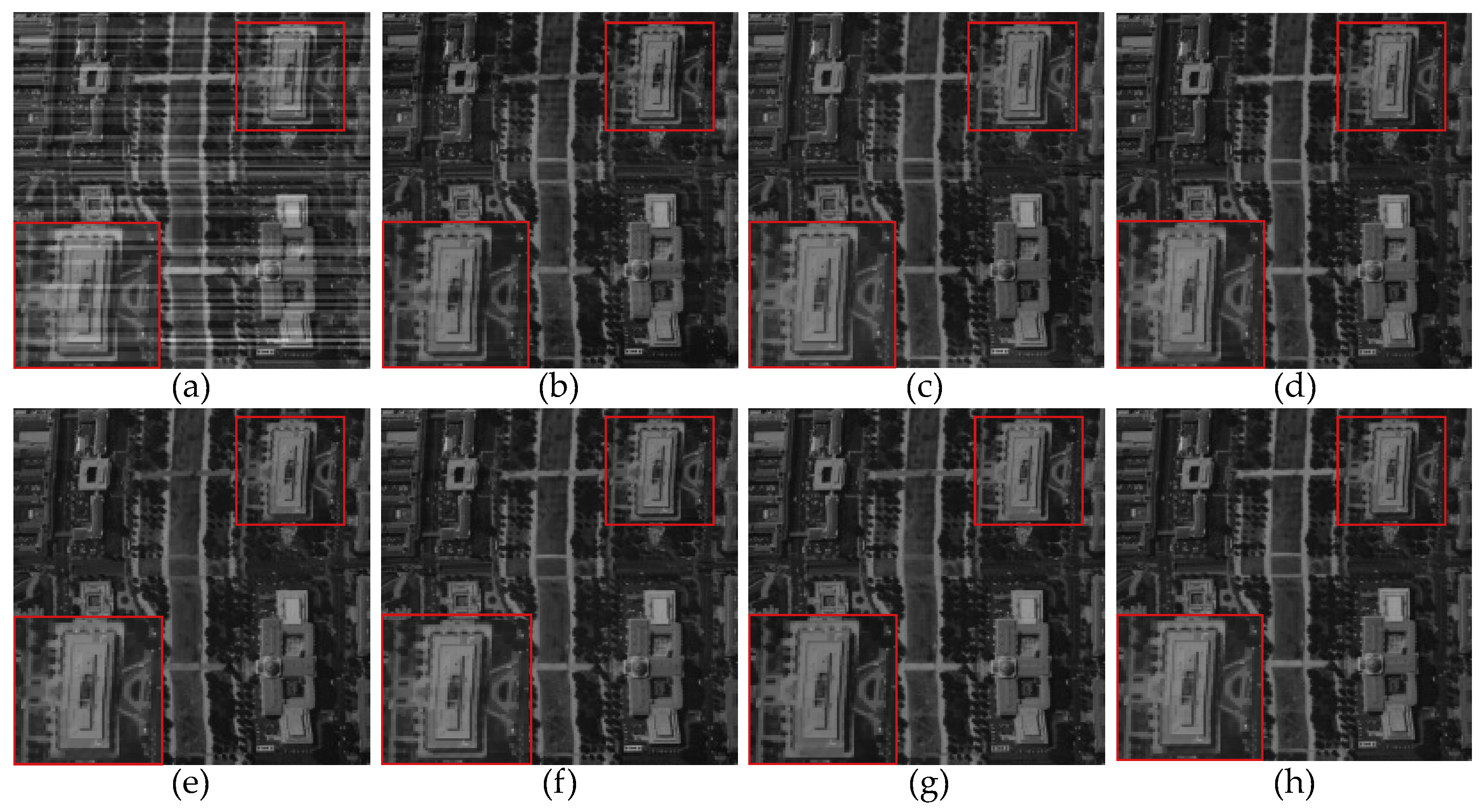

For the real-data experiments, we selected four remote sensing images from the MODIS dataset. Figure 17a was obtained from band 33 of the MODIS data and is primarily affected by non-periodic stripe noise. Figure 18a and Figure 19a were both obtained from band 27. Figure 18a is primarily contaminated by periodic stripes, whereas Figure 19a is primarily contaminated by non-periodic stripes. This indicates that the type of stripe noise within a band is not fixed. Figure 20a was obtained from band 31 and exhibits a high ratio of stripe noise but a relatively low intensity.

Since no original images are available for the real data, we selected two commonly used no-reference metrics, MRD and ICV, to quantitatively assess the performance of different methods. They are calculated as follows:

$$\mathrm{MRD}=\frac{1}{N}\sum_{i=1}^{N}\frac{\left|z_i-x_i\right|}{x_i}\times 100\%,$$

where $N$ represents the number of pixels in the region unaffected by stripes, $x_i$ represents the pixel value of the original image, and $z_i$ represents the pixel value of the destriped image. Considering the characteristics of stripe noise, we selected individual rows without stripe noise as the region unaffected by stripes when computing MRD. To reduce the randomness of the results, we took the mean of multiple calculations as the final result.

$$\mathrm{ICV}=\frac{R_a}{R_{sd}},$$

where $R_a$ represents the mean value of the pixels in the window and $R_{sd}$ represents their standard deviation. As suggested in [2], we used a 10 × 10 pixel window to compute ICV in homogeneous regions of the destriped image. To better differentiate the algorithms, we performed multiple calculations and selected the set of data with the highest value as the final result.
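Both no-reference metrics can be sketched directly from their definitions. This minimal sketch makes the following assumptions:

* Images are lists of rows.
* The MRD region is a caller-supplied list of stripe-free row indices, and pixel values in that region are non-zero.
* ICV uses the population standard deviation over a 10 × 10 window whose top-left corner is given by the caller.

Whether the population or sample standard deviation was used in the experiments is not stated.

```python
import statistics

def mrd(original, destriped, rows):
    """Mean relative deviation in percent over stripe-free rows (pixels assumed non-zero)."""
    ratios = [abs(z - x) / x
              for r in rows
              for x, z in zip(original[r], destriped[r])]
    return 100.0 * sum(ratios) / len(ratios)

def icv(destriped, top, left, size=10):
    """Inverse coefficient of variation R_a / R_sd over a size x size window."""
    window = [destriped[r][c]
              for r in range(top, top + size)
              for c in range(left, left + size)]
    # Population standard deviation; the sample version would use statistics.stdev.
    return statistics.mean(window) / statistics.pstdev(window)

# Example on a synthetic 20 x 20 checkerboard of 100s and 101s.
img = [[100.0 + (r + c) % 2 for c in range(20)] for r in range(20)]
print(mrd(img, img, rows=[0, 3]))   # 0.0
print(icv(img, top=0, left=0))      # 201.0
```

Following the procedure described above, `mrd` would be averaged over several choices of stripe-free rows, and `icv` would be evaluated over several homogeneous windows. A perfectly flat window has zero standard deviation, so `icv` raises `ZeroDivisionError`.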

Figure 17, Figure 18, Figure 19 and Figure 20 show the images restored by the different methods from the real data. Notably, Figure 17 contains extremely dark regions, which are highly challenging for destriping, and most methods produced artifacts in that area. The proposed method avoided these artifacts by incorporating a vertical sparsity constraint based on the $\ell_p$ quasinorm. Furthermore, Figure 19 shows that, in practice, non-periodic stripe noise appears with random location, length, and intensity, which significantly increases the difficulty of removing it. The magnified regions show that both WAFT and WLS leave residual stripe noise in their restored images. Even RBSUTV, which performs well in the simulated experiments, fails to achieve satisfactory results on this type of stripe. In this case, the proposed method still achieves excellent destriping results. This strongly suggests that the $\ell_p$ quasinorm better characterizes the sparse structure of the underlying image, enabling more complete removal of the stripe information mixed into it.

In Figure 21, the mean cross-track profiles of the results of WAFT, UTV, and SAUTV follow the trend of the original image, but their curves are overly smooth, indicating a loss of detail. Figure 21c shows some prominent spikes, indicating residual stripe noise in the WLS result. The RBSUTV result deviates significantly from the original image in certain row means, which may be attributable to its choice of regularization terms. In comparison, the curves of GSLV and the proposed method follow the trend of the original image most closely. Because the stripe noise has a relatively low intensity, all methods removed it effectively. Consequently, Figure 22 shows minimal differences among the normalized power spectra of the destriping results.

Additionally, Table 3 presents the objective evaluation metrics, MRD and ICV, for Figure 17, Figure 18, Figure 19 and Figure 20, with the best results highlighted. The table shows that the proposed method outperforms the other methods in most cases. Where it does not achieve the best result, the gap to the best result is small. This further demonstrates the effectiveness and robustness of the proposed method.

6. Conclusions

In this paper, we proposed a unidirectional variational model based on the $\ell_p$ quasinorm for removing stripe noise and applied it to different types of stripes in remote sensing data. The model considers the sparsity and low-rank properties of the stripe and image components separately. By introducing the $\ell_p$ quasinorm, it effectively avoids the loss of details during destriping. To solve the model, a fast ADMM algorithm was employed to speed up convergence. Finally, extensive simulated and real-data experiments compared the proposed method with six other methods. Both the subjective and objective results show that the proposed method not only removes stripes effectively but also preserves the details of the original image better than the other methods. Even under severe stripe contamination, the proposed method achieved good removal performance and strong robustness. Furthermore, the fast ADMM algorithm reduces the running time of the proposed method, making it well suited to large-scale data.

In addition, although this paper focused on remote sensing data, the applicability of the proposed method is not limited to this domain; it can be extended to stripe noise removal more broadly. To adapt it to a wider range of application scenarios, we will address the limitations of the proposed method in future work. We will also continue to explore additional characteristics of the stripe and image components to remove stripe noise more effectively. Moreover, as neural network methods continue to develop, we will consider integrating deep learning with traditional methods to obtain more effective destriping approaches.