Abstract

The colorimetric conversion of wide-color-gamut cameras plays an important role in the field of wide-color-gamut displays. However, it is difficult to establish conversion models with the desired approximation accuracy over a wide color gamut. In this paper, we propose an optimization method for establishing color conversion models that map the RGB space of cameras to the XYZ space of the CIEXYZ system. The method uses the Pearson correlation coefficient to evaluate the linear correlation between the RGB values and the XYZ values in a training group, so that a training group with optimal linear correlation can be obtained. With such a training group, the color conversion models can be established and the desired color conversion accuracy can be obtained in the whole color space. In the experiments, wide-color-gamut sample groups were designed and then divided into different groups according to their hue angles and chromas in the CIE1976L*a*b* space, with the Pearson correlation coefficient being used to evaluate the linearity between the RGB and XYZ spaces. In particular, two kinds of color conversion models, polynomial formulas with different numbers of terms and a BP artificial neural network (BP-ANN), were trained and tested with the same sample groups. The experimental results show that the color conversion errors (CIE1976L*a*b* color difference) of the polynomial transforms are effectively reduced when the training groups are divided by hue angle.

1. Introduction

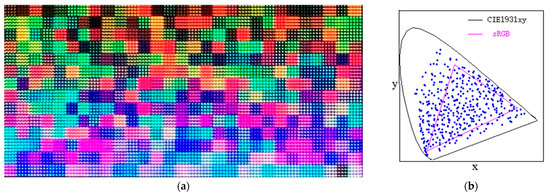

The colorimetric conversion of digital cameras plays an important role not only in the field of color display but also in many other fields such as color measurement, machine vision, and so on. Today, with the rapid development of wide-color-gamut display technology, a series of color display standards with wide color gamut have been put forward, such as Adobe-RGB, DCI-P3, and Rec.2020. Meanwhile, many kinds of multi-primary display systems with wide color gamut have been demonstrated [1,2,3,4,5,6,7,8,9]. Figure 1a shows the wide-color-gamut blocks displayed on a six-primary LED array display developed by our laboratory. It can be seen from Figure 1b that the color gamut of the six-primary LED array display is much wider than that of the sRGB standard. To meet the needs of wide-color-gamut display technology, it is necessary for us to develop a colorimetric characterization method for digital cameras in the case of wide color gamut.

Figure 1.

The 406 wide-color-gamut blocks displayed on a six-primary LED array display (a) and their (x, y) coordinates as shown by the blue dots distributed on a CIE1931xy chromaticity diagram (b).

Until now, different kinds of colorimetric characterization methods have been developed, including polynomial transforms [10,11,12,13,14,15,16], artificial neural networks (ANN) [17,18,19,20,21,22,23], and look-up tables [24]. In 2016, Gong et al. [12] demonstrated a color calibration method between different digital cameras with ColorChecker SG cards and the polynomial transform method and achieved an average color difference of 1.12 (CIEDE2000). In 2022, Liu et al. [18] proposed a colorimetric characterization method for color imaging systems with ColorChecker SG cards based on a multi-input PSO-BP neural network and achieved average color differences of 1.53 (CIEDE2000) and 2.06 (CIE1976L*a*b*). However, the methods mentioned above were mainly used for cameras with a standard color space such as sRGB. In 2023, Li et al. [17] employed multi-layer BP artificial neural networks (ML-BP-ANN) to realize colorimetric characterization for wide-color-gamut cameras, but the color conversion differences of some high-chroma samples were undesirable.

In addition to conventional multi-layer BP artificial neural networks, the prevailing deep learning neural networks have also been used for the color conversion of color imagers. For example, in 2022, Yeh et al. [19] proposed using a lightweight deep neural network model for the image color conversion of underwater object detection. However, the aim of the model proposed by Yeh et al. was to transform color images to corresponding grayscale images to solve the problem of underwater color absorption; therefore, it had nothing to do with colorimetric characterization. As another example, in 2019, Wang et al. [21] proposed a five-stage convolutional neural network (CNN) to model the colorimetric characterization of a color image sensor and achieved an average color difference of 0.48 (CIE1976L*a*b*) for 1300 color samples from IT8.7/4 cards. It should be noted that the color difference accuracy achieved by the CNN of Wang et al. is roughly equal to that achieved by the ML-BP-ANN of Li et al. [17] for samples from ColorChecker SG cards. However, in the architecture of the CNN proposed by Wang et al., a BP neural network (BPNN) with a single hidden layer of 2048 neurons was employed to perform the color conversion from the RGB space of the imager to the L*a*b* space of the CIELAB system. This indicates that the CNN proposed by Wang et al. cannot yet replace the BP neural network. Moreover, another interesting neural network model, the radial basis function neural network (RBFNN), was demonstrated for the colorimetric characterization of digital cameras by Ma et al. [20] in 2020. The architecture of the RBFNN used by Ma et al. was made up of an input layer, a hidden layer, and an output layer, and the error back-propagation (BP) algorithm was also used for the training of the RBFNN. In summary, different kinds of artificial neural networks have been put forward for color conversion; however, neural networks employing the BP algorithm continue to prevail.

In this paper, to improve the colorimetric characterization accuracy of wide-color-gamut cameras, an optimization method for training samples was proposed and studied. In the method, the training and testing samples were grouped according to hue angles and chromas, and by using the Pearson correlation coefficient as the evaluation standard, the linear correlation between the RGB and XYZ of the sample groups could be evaluated. Meanwhile, two colorimetric characterization methods—polynomial formulas with different terms and the multi-layer BP artificial neural network (ML-BP-ANN)—were used, respectively, to verify the efficiency of the proposed method.

2. The Problems of Wide Color Gamut

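Practically, the RGB values of a digital camera can be calculated with Formula (1), where $r(\lambda)$, $g(\lambda)$, and $b(\lambda)$ denote the spectral sensitivities of the RGB channels, respectively, $\rho(\lambda)$ denotes the spectral reflectance of the object, $S(\lambda)$ denotes the spectrum of the light source, and $k_R$, $k_G$, and $k_B$ are the normalization constants.

$$R = k_R \int r(\lambda)\,\rho(\lambda)\,S(\lambda)\,d\lambda, \quad G = k_G \int g(\lambda)\,\rho(\lambda)\,S(\lambda)\,d\lambda, \quad B = k_B \int b(\lambda)\,\rho(\lambda)\,S(\lambda)\,d\lambda \qquad (1)$$

Meanwhile, the tristimulus values of the object can be calculated with Formula (2), where $\bar{x}(\lambda)$, $\bar{y}(\lambda)$, and $\bar{z}(\lambda)$ denote the color matching functions of the CIE1931XYZ system, and $k$ is a normalization constant.

$$X = k \int \bar{x}(\lambda)\,\rho(\lambda)\,S(\lambda)\,d\lambda, \quad Y = k \int \bar{y}(\lambda)\,\rho(\lambda)\,S(\lambda)\,d\lambda, \quad Z = k \int \bar{z}(\lambda)\,\rho(\lambda)\,S(\lambda)\,d\lambda \qquad (2)$$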
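As a rough illustration of Formulas (1) and (2), the integrals can be approximated by sums over sampled wavelengths. The sketch below is not the authors' code: the Gaussian curves are hypothetical stand-ins for measured sensitivities, color matching functions, and reflectance, the illuminant is flat rather than D65, and the normalization (255 per camera channel, Y = 100 for a perfect white) is an assumption.

```python
import numpy as np

# Wavelength grid: 400-700 nm in 10 nm steps.
wl = np.arange(400, 701, 10, dtype=float)

def gaussian(center, width):
    """Hypothetical smooth curve standing in for measured spectral data."""
    return np.exp(-0.5 * ((wl - center) / width) ** 2)

# Placeholder spectra (assumptions, not measured values).
cam = np.stack([gaussian(600, 40), gaussian(540, 40), gaussian(460, 40)])  # camera r, g, b
cmf = np.stack([gaussian(595, 35) + 0.35 * gaussian(445, 20),   # stand-in for x-bar
                gaussian(555, 45),                              # stand-in for y-bar
                1.7 * gaussian(450, 25)])                       # stand-in for z-bar
illum = np.ones_like(wl)                # flat illuminant as a stand-in for D65
refl = 0.2 + 0.6 * gaussian(620, 50)    # a reddish reflectance curve

def camera_rgb(reflectance):
    """Discrete Formula (1): one constant per channel, perfect white maps to 255."""
    k = 255.0 / (cam @ illum)
    return k * (cam @ (reflectance * illum))

def tristimulus(reflectance):
    """Discrete Formula (2): a single constant k, chosen so that Y = 100 for a perfect white."""
    k = 100.0 / (cmf[1] @ illum)
    return k * (cmf @ (reflectance * illum))

rgb = camera_rgb(refl)
xyz = tristimulus(refl)
```

Replacing the placeholder arrays with measured curves sampled on the same wavelength grid leaves the two functions unchanged.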

It can be seen from Formulas (1) and (2) that there exists a nonlinear conversion between the (R, G, B) and the (X, Y, Z). In fact, different methods have been used to model the conversion from the RGB space to the XYZ space [10,11,12,13,14,15,16,17,18,19,20,21,22,23,24]. Among them, polynomial transforms and the BP artificial neural network (BP-ANN) are the most popular.

2.1. Polynomial Transforms

When the color sample sets $(R_i, G_i, B_i)$ and $(X_i, Y_i, Z_i)$ of digital cameras are given, where i = 1 to N, and N is the total number of samples, a polynomial transformation model can be established for converting the [R, G, B] space to the [X, Y, Z] space for the cameras.

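The simplest method is a linear model, as follows:

$$\begin{bmatrix} X \\ Y \\ Z \end{bmatrix} = A \begin{bmatrix} R \\ G \\ B \end{bmatrix} \qquad (3)$$

where A is a 3 × 3 matrix:

$$A = \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix} \qquad (4)$$

Usually, by applying the least squares method to the color sample sets $(R_i, G_i, B_i)$ and $(X_i, Y_i, Z_i)$ of the cameras, the elements of matrix A can be estimated, and then the linear transformation model of Formula (3) can be established.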

However, for digital cameras, the conversion between the (R, G, B) space and the (X, Y, Z) space is nonlinear, so it is necessary to adopt the nonlinear models as follows.

The square model:

The cubic model:

The quartic model:

In summary, polynomial transforms can be expressed with Formulas (8) and (9), where A is a constant matrix of three rows by n columns, and n is the number of terms in a polynomial formula.

The elements of matrix A can be determined by means of the least squares method, the Wiener estimation method, principal component analysis, and so on. In this paper, the least squares method was used to estimate the elements of matrix A.
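A minimal sketch of such a polynomial transform fitted by least squares is given below. The term set is an assumption: it uses all monomials of R, G, and B up to a chosen total degree without a constant term, which need not match the exact terms of the paper's square, cubic, and quartic models. The function names and the synthetic data are hypothetical.

```python
import numpy as np
from itertools import combinations_with_replacement

def poly_terms(rgb, degree):
    """Expand (N, 3) RGB values into all monomials of total degree 1..degree."""
    rgb = np.asarray(rgb, dtype=float)
    cols = []
    for d in range(1, degree + 1):
        for idx in combinations_with_replacement(range(3), d):
            cols.append(np.prod(rgb[:, list(idx)], axis=1))
    return np.stack(cols, axis=1)            # shape (N, n)

def fit_matrix(rgb, xyz, degree):
    """Least-squares estimate of the 3 x n matrix A with xyz ~ terms @ A.T."""
    terms = poly_terms(rgb, degree)
    coef, *_ = np.linalg.lstsq(terms, np.asarray(xyz, dtype=float), rcond=None)
    return coef.T                             # shape (3, n)

def apply_matrix(A, rgb, degree):
    """Convert RGB values to XYZ with a fitted matrix A."""
    return poly_terms(rgb, degree) @ A.T

# Synthetic check: data generated by a known quadratic model is recovered.
rng = np.random.default_rng(0)
rgb = rng.uniform(0.0, 1.0, size=(200, 3))
A_true = rng.normal(size=(3, 9))              # 3 linear + 6 quadratic terms
xyz = poly_terms(rgb, 2) @ A_true.T
A_fit = fit_matrix(rgb, xyz, degree=2)
```

With this term set, n is 3, 9, 19, 34, and 55 for degrees 1 to 5, so the higher-degree models need correspondingly more training samples to stay well conditioned.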

2.2. Artificial Neural Network ML-BP-ANN

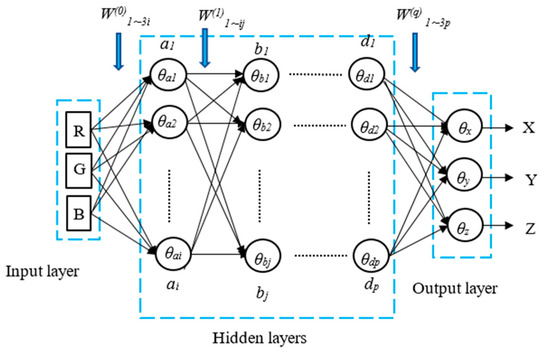

The multi-layer BP artificial neural network (ML-BP-ANN) can also be used to perform the conversion from the [R, G, B] space to the [X, Y, Z] space in the case of wide color gamut [17]. The architecture of an ML-BP-ANN is shown in Figure 2, where W(0), W(1), and W(q) denote the link weights, θ denotes the thresholds of the neurons, and $a_i$, $b_j$, and $d_p$ denote the output values of the neurons in each layer, respectively. It should be noted that the number of hidden layers and the number of neurons in each hidden layer can be decided based on training and testing experiments [17], and the values of the link weights and thresholds are determined through training. More importantly, the color sample sets $(R_i, G_i, B_i)$ and $(X_i, Y_i, Z_i)$ used for training should be uniformly distributed in the whole color space of the cameras.

Figure 2.

The architecture of the multi-layer BP neural network.
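To make the roles of the link weights and thresholds concrete, the following sketch implements only the forward pass of a small fully connected network. It is an illustration under stated assumptions: the tanh activation, the linear output layer, the sign convention of the threshold, and the random initial weights are all hypothetical choices, and the back-propagation training step is omitted.

```python
import numpy as np

def init_network(n_in=3, n_hidden=20, n_layers=4, n_out=3, seed=0):
    """Random link weights and zero thresholds for n_layers hidden layers."""
    rng = np.random.default_rng(seed)
    sizes = [n_in] + [n_hidden] * n_layers + [n_out]
    weights = [rng.normal(0.0, 0.5, size=(m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
    thresholds = [np.zeros(n) for n in sizes[1:]]
    return weights, thresholds

def forward(rgb, weights, thresholds):
    """Propagate (N, 3) inputs layer by layer; the last layer is left linear."""
    a = np.asarray(rgb, dtype=float)
    last = len(weights) - 1
    for k, (W, theta) in enumerate(zip(weights, thresholds)):
        z = a @ W - theta                  # weighted sum minus the neuron threshold
        a = z if k == last else np.tanh(z)
    return a

weights, thresholds = init_network()       # 20 neurons x 4 hidden layers
out = forward(np.array([[0.1, 0.2, 0.3], [0.9, 0.8, 0.7]]), weights, thresholds)
```

Calling `init_network(n_layers=8)` gives the deeper 20 × 8 layout; in practice a library with automatic differentiation would handle the training.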

In order to obtain the desired conversion accuracy for all color samples, the training samples should be uniformly distributed in the whole color space. For instance, when the chromaticity coordinates of the color samples are distributed within a limited color gamut area such as the sRGB gamut triangle, the desired conversion accuracy can be easily obtained using polynomial formulas with cubic, quartic, or quintic terms [11,12,13,16].

However, in the case of wide color gamut, the chromaticity coordinates of the samples are distributed over the whole color space of, for example, the CIE1931XYZ system, and the chromas of the samples are usually larger than those of conventional samples such as the IT8 chart, the Munsell or NCS system, the Professional Colour Communicator (PCC), the X-rite ColorChecker SG, and so on. Therefore, in the case of wide color gamut, it is difficult to establish color conversions with the desired conversion accuracy.

To solve the problem above, we propose using an optimal method to facilitate color conversions for wide-color-gamut cameras. The method makes use of the Pearson correlation coefficient to evaluate the linear correlation between the RGB values and the XYZ values in a training group, so that a training group with optimal linear correlation can be obtained and color conversion models with desired approximation accuracy can be established.

3. Evaluating the Samples Using Linear Correlation

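Theoretically, the Pearson correlation coefficient can be used to describe the linear correlation between two random variables. Similarly, we can use the Pearson correlation coefficient to evaluate the linear correlation between the RGB space of a digital camera and the XYZ space of the CIE1931XYZ system. Therefore, in a sample group, $(R_i, G_i, B_i)$ and $(X_i, Y_i, Z_i)$ are treated as two random variables, the vectors $\mathbf{p}_i$ and $\mathbf{q}_i$ are used to describe the coordinates $(R_i, G_i, B_i)$ and $(X_i, Y_i, Z_i)$, respectively, and $p_i$ and $q_i$ are used to express the moduli of $\mathbf{p}_i$ and $\mathbf{q}_i$, respectively, as shown in Formula (10).

$$p_i = |\mathbf{p}_i| = \sqrt{R_i^2 + G_i^2 + B_i^2}, \quad q_i = |\mathbf{q}_i| = \sqrt{X_i^2 + Y_i^2 + Z_i^2} \qquad (10)$$

Hence, the Pearson correlation coefficient (PCC) between $(R_i, G_i, B_i)$ and $(X_i, Y_i, Z_i)$ can be calculated using Formula (11).

$$r = \frac{\sum_{i=1}^{n} (p_i - \bar{p})(q_i - \bar{q})}{\sqrt{\sum_{i=1}^{n} (p_i - \bar{p})^2}\,\sqrt{\sum_{i=1}^{n} (q_i - \bar{q})^2}} \qquad (11)$$

where $\bar{p}$ and $\bar{q}$ are the mean values of $p_i$ and $q_i$, and n is the number of samples in a sample group.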
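The evaluation described above can be sketched in a few lines, assuming the coefficient is computed between the moduli of the RGB vectors and the moduli of the XYZ vectors of a sample group. The function name and the synthetic data are hypothetical.

```python
import numpy as np

def pearson_rgb_xyz(rgb, xyz):
    """Pearson correlation between the moduli of (N, 3) RGB and XYZ vectors."""
    p = np.linalg.norm(np.asarray(rgb, dtype=float), axis=1)
    q = np.linalg.norm(np.asarray(xyz, dtype=float), axis=1)
    dp, dq = p - p.mean(), q - q.mean()
    return float((dp @ dq) / np.sqrt((dp @ dp) * (dq @ dq)))

rng = np.random.default_rng(0)
rgb = rng.uniform(0.0, 255.0, size=(500, 3))

# A purely linear scaling gives a coefficient of 1 (up to rounding);
# a nonlinear relation between the two spaces gives a smaller value.
r_linear = pearson_rgb_xyz(rgb, 0.4 * rgb)
r_nonlinear = pearson_rgb_xyz(rgb, (rgb / 255.0) ** 3)
```

Because the coefficient is invariant to scaling and offsets of either variable, groups of different brightness ranges can be compared directly.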

Besides the Pearson correlation coefficient mentioned above, there are many other statistical methods to analyze the relationship between $(R_i, G_i, B_i)$ and $(X_i, Y_i, Z_i)$. For example, the standard deviations shown in Formula (12) can be used to estimate the similarity between the RGB vectors and the XYZ vectors. However, these standard deviations vary with the values of $(R_i, G_i, B_i)$ and $(X_i, Y_i, Z_i)$ themselves, so they are not suitable for solving our problem.

Besides Formula (12), many other methods can be used to calculate the similarity between $(R_i, G_i, B_i)$ and $(X_i, Y_i, Z_i)$. For example, both wavelet analysis and the gray-level co-occurrence matrix can be used to evaluate the similarity between the chromaticity coordinate patterns of $(R_i, G_i, B_i)$ and $(X_i, Y_i, Z_i)$. However, such methods are much more complicated than the Pearson correlation coefficient method.

4. Experiment

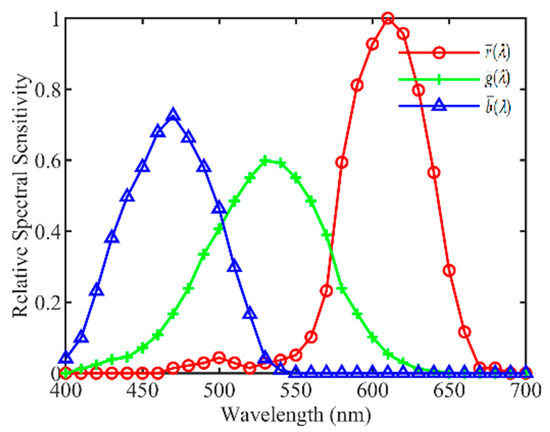

4.1. The Spectral Sensitivity of the Camera

In order to calculate the RGB values with Formula (1), it is necessary to obtain the spectral sensitivity curves of the camera. Figure 3 shows the spectral sensitivity curves of a Canon1000D camera (Canon Inc., Tokyo, Japan) from 400 nm to 700 nm at an interval of 10 nm. The experimental setup for measuring the spectral sensitivity functions of the R, G, and B channels consisted of a monochromator (SOFN 71SW151, SOFN Instruments Co., Ltd., Beijing, China), a xenon lamp (SOFN 71LX150A, SOFN Instruments Co., Ltd., Beijing, China), an integrating sphere light equalizer, and a set of reference detectors (Thorlabs PDA100A, Thorlabs Inc., Newton, NJ, USA) whose spectral sensitivity was characterized by the National Institute of Metrology, China. Note that, in the experiment, the white point of the camera was set to D65.

Figure 3.

The relative spectral sensitivity curves of the RGB channels of Canon1000D.

4.2. Preparing the Sample Groups

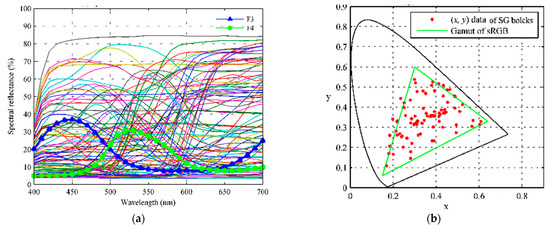

4.2.1. The Basic Samples

In order to calculate the RGB values with Formula (1) and the XYZ values with Formula (2), the spectral reflectance functions of the samples are required. In our experiment, the real color blocks of ColorChecker-SG were selected as the basic sample group. Figure 4a shows the spectral reflectance curves of the 96 ColorChecker-SG blocks measured using the spectrometer X-rite 7000A (de, 8°) (X-rite Inc., Grand Rapids, MI, USA), and Figure 4b shows their CIE1931xy chromaticity coordinates under illuminant D65. It can be seen from Figure 4b that the chromaticity coordinates of the 96 ColorChecker-SG blocks are almost all distributed within the sRGB gamut triangle; therefore, this sample group alone is not suitable for the colorimetric characterization of digital cameras in the case of wide color gamut.

Figure 4.

(a) The spectral reflectance curves of 96 ColorChecker-SG blocks measured via X-rite 7000A (de, 8°); (b) the (x, y) coordinates (CIE1931xy) of 96 ColorChecker-SG blocks, where the illuminant is D65.

4.2.2. Extending the Samples

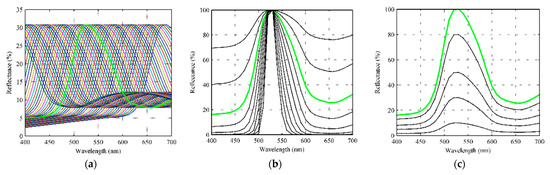

In order to obtain samples with wide color gamut, the spectral reflectance curves of ColorChecker-SG F3 and F4, as shown in Figure 4a, were each used to generate samples distributed over the whole CIE1931XYZ space. The method for the generation of wide-color-gamut samples can be found in reference [17]. Figure 5a,d show examples of hue extension obtained by shifting the dominant wavelength of the spectrum, Figure 5b,e show examples of chroma extension obtained by sharpening or flattening the spectrum, and Figure 5c,f show examples of lightness extension obtained by scaling the peak value of the spectrum. In this way, 5529 spectral reflectance curves were generated. Combining these 5529 curves with the 96 real curves of ColorChecker-SG gave 5625 spectral reflectance curves in total. Then, 2813 spectral reflectance curves for the training groups were uniformly drawn from the 5625 curves, and the remaining 2812 curves were used for the testing groups.

Figure 5.

Illustrations of the spectral reflectance curves extended from ColorChecker-SG F4 and F3, respectively: (a) Hue extension of F4. (b) Chroma extension of F4. (c) Lightness extension of F4. (d) Hue extension of F3. (e) Chroma extension of F3. (f) Lightness extension of F3.
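The sample counts stated in the text can be checked with a short sketch. It assumes that "uniformly drawn" means taking every second curve from the ordered set of 5625, which is only one plausible reading; the variable names are hypothetical.

```python
# Counts taken from the text: generated curves plus real ColorChecker-SG curves.
n_generated, n_real = 5529, 96
n_total = n_generated + n_real

indices = list(range(n_total))
train_idx = indices[0::2]   # every second curve, starting with the first (assumed rule)
test_idx = indices[1::2]    # the remaining curves
```

An alternating split of this kind keeps the training and testing sets interleaved along whatever ordering the curves were generated in.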

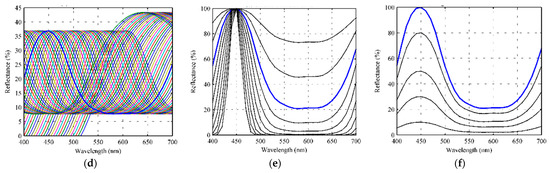

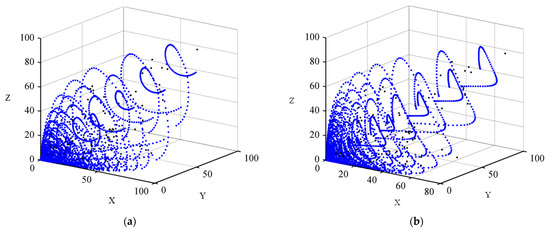

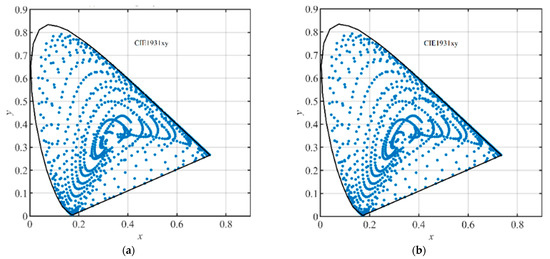

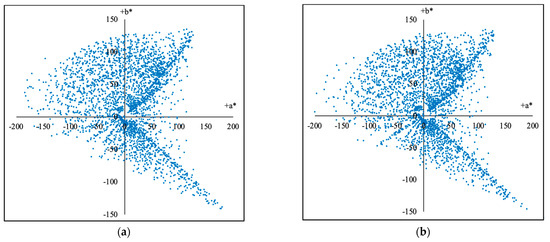

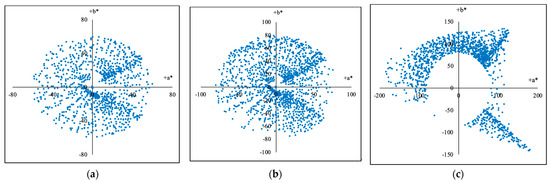

By using Formulas (1) and (2), the $(R_i, G_i, B_i)$ and the $(X_i, Y_i, Z_i)$ of the 2813 training samples and the 2812 testing samples were calculated, respectively. As a result, the (X, Y, Z) distributions of the samples extended from F3 and F4 in the standard CIEXYZ color space are shown in Figure 6a,b, respectively, the (x, y) coordinates (CIE1931xy) of the 2813 training samples and the 2812 testing samples are shown in Figure 7a,b, respectively, and their (a*, b*) (CIELAB) coordinates are shown in Figure 8a,b, respectively. It can be seen from Figure 6 and Figure 7 that the generated samples are distributed over most of the CIE1931XYZ color space.

Figure 6.

The (X, Y, Z) standard chromaticity distribution of the wide-color-gamut samples extended from F3 and F4 as shown in blue dots: (a) The wide-color-gamut samples extended from F3. (b) The wide-color-gamut samples extended from F4.

Figure 7.

The (x, y) coordinates (CIE1931xy) of total training and testing samples as shown in blue dots: (a) 2813 training samples; (b) 2812 testing samples.

Figure 8.

The (a*, b*) (CIELAB) coordinates of total training samples and testing samples as shown in blue dots: (a) 2813 training samples; (b) 2812 testing samples.

It should be noted that, in the experiment, the CIE illuminant D65 was used as the light source in both Formulas (1) and (2), and the calculated values were normalized to 255 according to the white point. However, it can be seen from Formulas (1) and (2) that the relationship between the $(R_i, G_i, B_i)$ and the $(X_i, Y_i, Z_i)$ depends on the spectrum of the light source; if the light source is changed, the $(R_i, G_i, B_i)$ and the $(X_i, Y_i, Z_i)$ of the samples must be recalculated.

4.2.3. Dividing the Samples

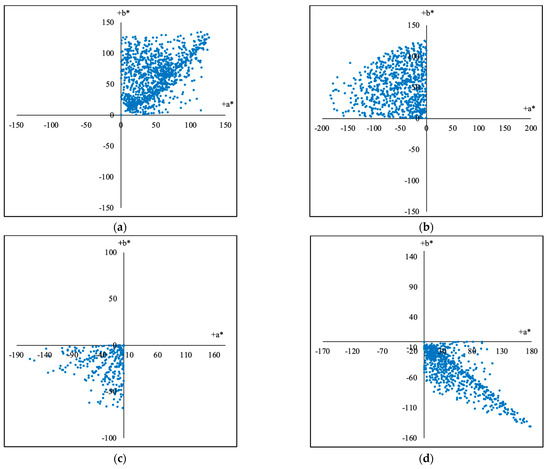

In order to find the optimal training groups and testing groups, different division methods were tried. In the first method, the samples were divided according to their hue angles in the CIELAB color space into 4 groups, 0°–90°, 90°–180°, 180°–270°, and 270°–360°, where the hue angle is calculated with Equation (13). As a result, the 2813 training samples were divided into 4 training groups according to their hue angles ($h_{ab}$), as shown in Figure 9a–d and Table 1, and the 2812 testing samples were accordingly divided into 4 testing groups according to their hue angles ($h_{ab}$), as described in Table 2.

Figure 9.

The (a*, b*) (CIELAB) coordinates of the training groups as shown in blue dots divided by the hue angles (hab) from 2813 training samples: (a) The division Q1 with the hue angles from 0 to 90. (b) The division Q2 with the hue angles from 90 to 180. (c) The division Q3 with the hue angles from 180 to 270. (d) The division Q4 with the hue angles from 270 to 360.

Table 1.

Pearson correlation coefficients (PCC) of different training groups divided by hue angles.

Table 2.

Pearson correlation coefficients (PCC) of different testing groups divided by hue angles.

In the second method, the samples were divided according to their chromas ($C^*_{ab}$) into 3 groups, 0–60, 0–70, and greater than 70, where the chroma is calculated with Equation (14). As a result, the 2813 training samples were divided into 3 training groups according to their chromas ($C^*_{ab}$), as shown in Figure 10a–c and Table 3, and the 2812 testing samples were accordingly divided into 3 testing groups according to their chromas ($C^*_{ab}$), as described in Table 4.

Figure 10.

The (a*, b*) coordinates (CIELAB) of the training groups divided by the chromas (C*ab) from 2813 training samples: (a) The division C1 with the chromas from 0 to 60. (b) The division C2 with the chromas from 0 to 70. (c) The division C3 with the chromas above 70.

Table 3.

Pearson correlation coefficients (PCC) of different training groups divided by chromas.

Table 4.

Pearson correlation coefficients (PCC) of different testing groups divided by chromas.
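The two division rules can be sketched as follows, using the standard CIELAB definitions of hue angle, $h_{ab} = \arctan(b^*/a^*)$ taken over the full circle, and chroma, $C^*_{ab} = \sqrt{a^{*2} + b^{*2}}$. How samples lying exactly on a boundary are assigned is an assumption, and the function names are hypothetical.

```python
import numpy as np

def hue_angle_deg(a, b):
    """CIELAB hue angle in degrees, in the range [0, 360)."""
    h = np.degrees(np.arctan2(b, a)) % 360.0
    return np.where(h >= 360.0, 0.0, h)    # guard against rounding up to 360

def chroma(a, b):
    """CIELAB chroma."""
    return np.hypot(a, b)

def hue_group(a, b):
    """Labels 1..4 for Q1..Q4 (0-90, 90-180, 180-270, 270-360 degrees)."""
    return (hue_angle_deg(a, b) // 90.0).astype(int) + 1

def chroma_masks(a, b):
    """Boolean masks for C1 (0-60), C2 (0-70), and C3 (above 70), as listed in the text."""
    c = chroma(a, b)
    return {"C1": c <= 60.0, "C2": c <= 70.0, "C3": c > 70.0}

# Five illustrative (a*, b*) pairs, one per quadrant plus a low-chroma sample.
a_star = np.array([50.0, -50.0, -50.0, 50.0, 10.0])
b_star = np.array([50.0, 50.0, -50.0, -50.0, 0.0])
groups = hue_group(a_star, b_star)
masks = chroma_masks(a_star, b_star)
```

Note that, as stated in the text, the C1 and C2 ranges overlap, so the chroma masks are not mutually exclusive.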

4.3. The Correlation Coefficients

By using Formulas (10) and (11), the Pearson correlation coefficients between the (R, G, B) and the (X, Y, Z) of the different sample groups were calculated, and the results are listed in Table 1, Table 2, Table 3 and Table 4. It can be seen from Table 1 that the Pearson correlation coefficients of the training groups Q1, Q2, Q3, and Q4, which were divided by the hue angles ($h_{ab}$), are all larger than that of the whole group of 2813 training samples. Similarly, it can be seen from Table 2 that the Pearson correlation coefficients of the testing groups Q1, Q2, Q3, and Q4 are all larger than that of the whole group of 2812 testing samples. This indicates that sample groups with better linear correlation can be obtained by dividing the samples by hue angle ($h_{ab}$). However, it can be seen from Table 3 that the Pearson correlation coefficients of the training groups C1, C2, and C3, which were divided by the chromas ($C^*_{ab}$), decrease as the chroma increases. This indicates that the linear correlation gets worse as the chroma increases.

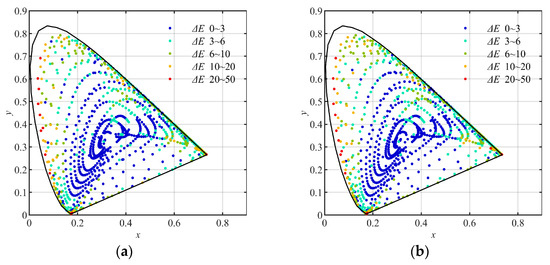

4.4. Training and Testing

Using the training groups and the testing groups above, the multi-layer BP artificial neural network (ML-BP-ANN) with the architectures of 20 (neurons) × 4 (layers) and 20 (neurons) × 8 (layers), and the polynomial formulas with different numbers of terms, including the quadratic, the cubic, the quartic, and the quintic, which were described in Section 2, were trained and tested, respectively. In the training, the least squares method was used to determine the elements of matrix A in Formula (9). As a result, the training errors and the testing errors expressed as CIE1976 L*a*b* color differences are listed in Table 5, Table 6, Table 7 and Table 8, respectively. The distributions of the (x, y) coordinates (CIE1931xy) of the training and testing samples converted using the quintic polynomial formula, marked with different color difference (CIE1976 L*a*b*) levels, are shown in Figure 11, Figure 12 and Figure 13, respectively.

Table 5.

The CIE1976L*a*b* color differences in the training groups corresponding to Table 1.

Table 6.

The CIE1976L*a*b* color differences in the testing groups corresponding to Table 2.

Table 7.

The CIE1976L*a*b* color differences in the training groups corresponding to Table 3.

Table 8.

The CIE1976L*a*b* color differences in the testing groups corresponding to Table 4.

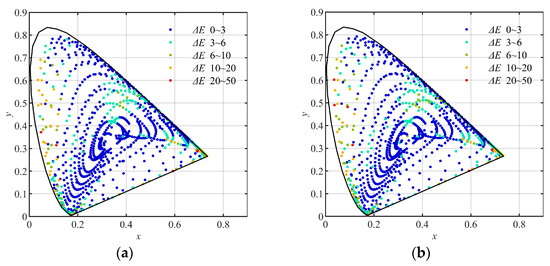

Figure 11.

The (x, y) coordinates (CIE1931xy) of the samples converted using the quintic polynomial formula, and marked with different color difference levels: (a) the 2813 training samples, ungrouped; (b) the 2812 testing samples, ungrouped.

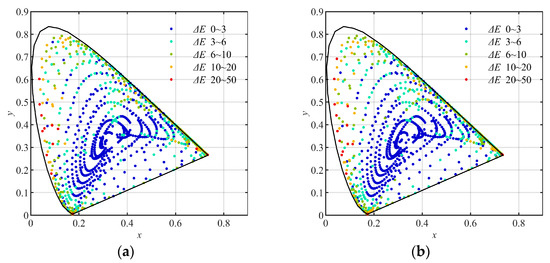

Figure 12.

The (x, y) coordinates (CIE1931xy) of the samples converted using the quintic polynomial formula, and marked with different color difference levels: (a) the 2813 training samples grouped by hue angle (hab); (b) the 2812 testing samples grouped by hue angle (hab).

Figure 13.

The (x, y) coordinates (CIE1931xy) of the samples converted using the quintic polynomial formula, and marked with different color difference levels: (a) the 2813 training samples grouped by chromas (C*ab); (b) the 2812 testing samples grouped by chromas (C*ab).

It can be seen from Table 5, Table 6, Table 7 and Table 8 that the color approximation accuracy of the polynomial formulas depends on the number of terms in the polynomial formulas, and desirable color conversion performance was achieved using the ML-BP-ANN with the architecture of 20 × 8 and the quintic polynomial formula.
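For reference, the CIE1976 L*a*b* color difference used as the error measure can be computed from reference and converted XYZ values as sketched below. The white point is an assumption: the values are those of D65 for the CIE 1931 2° observer with Y = 100, and the function names are hypothetical.

```python
import numpy as np

# Assumed reference white: D65, CIE 1931 2-degree observer, Y normalized to 100.
D65_WHITE = np.array([95.047, 100.0, 108.883])

def xyz_to_lab(xyz, white=D65_WHITE):
    """Convert XYZ (last axis of length 3) to CIE1976 L*a*b*."""
    t = np.asarray(xyz, dtype=float) / white
    delta = 6.0 / 29.0
    # Cube root above the threshold, linear segment below it.
    f = np.where(t > delta ** 3, np.cbrt(t), t / (3.0 * delta ** 2) + 4.0 / 29.0)
    L = 116.0 * f[..., 1] - 16.0
    a = 500.0 * (f[..., 0] - f[..., 1])
    b = 200.0 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)

def delta_e_ab(xyz_ref, xyz_est, white=D65_WHITE):
    """CIE1976 color difference: Euclidean distance in L*a*b*."""
    d = xyz_to_lab(xyz_ref, white) - xyz_to_lab(xyz_est, white)
    return np.linalg.norm(d, axis=-1)

# Sanity check: the white point against an ideal black differs by 100 units of L*.
white_vs_black = delta_e_ab(D65_WHITE, np.zeros(3))
```

Applied to arrays of shape (N, 3), `delta_e_ab` returns one difference per sample, from which the average and maximum errors of a group follow with `mean()` and `max()`.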

4.5. Discussion

(1) By comparing Table 1 with Table 5, Table 2 with Table 6, Table 3 with Table 7, and Table 4 with Table 8, respectively, we can see that for each training group or testing group, the average and the maximum color differences (CIE1976L*a*b*) decrease as the Pearson correlation coefficient of the sample group increases. This indicates that the color approximation accuracy of both the polynomial formulas and the ML-BP-ANN can be improved by selecting training groups with optimal linear correlation.

(2) It can be seen from Table 1 and Table 5 that the average and maximum color differences of the group Q96 are much smaller than those of the other groups because the Pearson correlation coefficient of the group Q96 is much larger than those of the other groups. This indicates that when the chromaticity coordinates of the color samples are limited to an ordinary color-gamut area, such as the sRGB gamut triangle, the desired color approximation accuracy can be easily obtained.

(3) It can be seen from Table 5 and Table 6 that the average and maximum color differences of the groups Q1, Q2, Q3, and Q4 are clearly smaller than those of the groups Q2813 or Q2812. This indicates that dividing the samples by hue angle ($h_{ab}$) is an efficient method to improve color conversion accuracy in the whole color space.

(4) It can be seen from Table 5, Table 6, Table 7 and Table 8 that the average and maximum color differences of the groups C1 and C2 are much smaller than those of the groups Q2813 or Q2812, whereas the average color differences of the group C3 are larger than those of the groups Q2813 or Q2812. This indicates that dividing the training groups by chroma ($C^*_{ab}$) is not a suitable choice for setting up color conversion models for the whole color space.

(5) By comparing Figure 11 with Figure 12 and Figure 13, we can see that in the low-chroma area of the CIE1931xy chromaticity diagram, the color difference levels of the samples in Figure 12a,b and Figure 13a,b are correspondingly similar to those in Figure 11a,b. This indicates that for the samples with ordinary chromas such as the group Q96, it is unnecessary to divide them into sub-groups for training.

(6) By comparing Figure 11 with Figure 12, we can see that, in the high-chroma area of the CIE1931xy chromaticity diagram, the color difference levels of the samples in Figure 12a,b are clearly smaller than those in Figure 11a,b. This indicates again that sample groups divided by hue angle ($h_{ab}$) can be used to improve the color conversion accuracy in the whole color space.

(7) By comparing Figure 11 with Figure 13, we can see that in the high-chroma area of the CIE1931xy chromaticity diagram, the color difference levels in the samples in Figure 13a,b are correspondingly similar to those in Figure 11a,b. This indicates that the sample groups using chromas (C*ab) are unable to improve the color conversion accuracy in the high-chroma area of the color space.
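The grouped-training idea discussed in points (3) and (6) can be illustrated with a small sketch that fits one conversion model per group and applies each model only to the samples of its own group. For brevity it uses a linear 3 × 3 model rather than the quintic polynomial, assumes the group label of every sample is already known, and runs on synthetic data; none of the numbers it produces are results from the experiments.

```python
import numpy as np

def fit_grouped(rgb, xyz, groups):
    """Fit one least-squares 3 x 3 matrix per group label; returns {label: A}."""
    rgb, xyz, groups = np.asarray(rgb, float), np.asarray(xyz, float), np.asarray(groups)
    models = {}
    for g in np.unique(groups):
        m = groups == g
        coef, *_ = np.linalg.lstsq(rgb[m], xyz[m], rcond=None)
        models[int(g)] = coef.T
    return models

def predict_grouped(models, rgb, groups):
    """Apply each group's matrix to its own samples; unmatched rows stay NaN."""
    rgb, groups = np.asarray(rgb, float), np.asarray(groups)
    out = np.full((len(rgb), 3), np.nan)
    for g, A in models.items():
        m = groups == g
        out[m] = rgb[m] @ A.T
    return out

# Synthetic data in which every group follows its own (hypothetical) matrix.
rng = np.random.default_rng(1)
rgb = rng.uniform(0.0, 1.0, size=(400, 3))
groups = rng.integers(1, 5, size=400)                 # labels 1..4, e.g. Q1..Q4
true_A = {g: rng.normal(size=(3, 3)) for g in range(1, 5)}
xyz = np.stack([true_A[int(g)] @ v for g, v in zip(groups, rgb)])

# One global model versus one model per group.
coef_all, *_ = np.linalg.lstsq(rgb, xyz, rcond=None)
err_global = np.linalg.norm(rgb @ coef_all - xyz, axis=1).mean()
err_grouped = np.linalg.norm(predict_grouped(fit_grouped(rgb, xyz, groups), rgb, groups) - xyz, axis=1).mean()
```

In this constructed case the grouped fit is exact while the single global fit is not, which mirrors the qualitative argument for grouping but says nothing about the size of the effect on real camera data.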

5. Conclusions

In this paper, we demonstrate that the Pearson correlation coefficient (PCC) can be used to evaluate the linear correlation between the RGB space of digital cameras and the XYZ space of the CIE1931XYZ system, and to select training groups with better linear correlation. The experimental results show that, for polynomial transforms with a given number of terms, the color approximation accuracy improves as the Pearson correlation coefficient of the training group increases. The experimental results also show that using training groups divided by hue angle ($h_{ab}$) is an efficient method to improve color conversion accuracy in the whole color space. Therefore, it can be expected that better color approximation accuracy could be achieved if the training samples are divided into more groups according to their hue angles.

Author Contributions

Conceptualization, Y.L. (Yumei Li) and N.L.; Methodology, Y.L. (Yasheng Li) and N.L.; Software, Y.L. (Yasheng Li) and H.L.; Validation, Y.L. (Yumei Li); Formal Analysis, Y.L. (Yasheng Li) and H.L.; Data Curation, Y.L. (Yumei Li); Writing—Original Draft Preparation, Y.L. (Yasheng Li); Writing—Review and Editing, Y.L. (Yumei Li) and N.L.; Supervision, W.W. All authors have read and agreed to the published version of the manuscript.

Funding

National Natural Science Foundation of China (Grant No. 61975012).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data underlying the results presented in this paper are available on reasonable request from the authors.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Sun, Y.; Xi, Y.; Zhang, X. Research on color conversion model of multi-primary-color display. SID Int. Symp. 2021, 52, 982–984.

2. Hexley, A.C.; Yöntem, A.; Spitschan, M.; Smithson, H.E.; Mantiuk, R. Demonstrating a multi-primary high dynamic range display system for vision experiments. J. Opt. Soc. Am. A 2020, 37, 271–284.

3. Huraibat, K.; Perales, E.; Viqueira, V.; Martínez-Verdú, F.M. A multi-primary empirical model based on a quantum dots display technology. Color Res. Appl. 2020, 45, 393–400.

4. Lin, S.; Tan, G.; Yu, J.; Chen, E.; Weng, Y.; Zhou, X.; Xu, S.; Ye, Y.; Yan, Q.F.; Guo, T. Multi-primary-color quantum-dot down-converting films for display applications. Opt. Express 2019, 27, 28480–28493.

5. Kim, H.-J.; Shin, M.-H.; Lee, J.-Y.; Kim, J.-H.; Kim, Y.-J. Realization of 95% of the Rec. 2020 color gamut in a highly efficient LCD using a patterned quantum dot film. Opt. Express 2017, 25, 10724–10734.

6. Xiong, Y.; Deng, F.; Xu, S.; Gao, S. Performance analysis of multi-primary color display based on OLEDs/PLEDs. Opt. Commun. 2017, 398, 49–55.

7. Kim, G. Optical Design Optimization for LED Chip Bonding and Quantum Dot Based Wide Color Gamut Displays; University of California: Irvine, CA, USA, 2017.

8. Masaoka, K.; Nishida, Y.; Sugawara, M.; Nakasu, E. Design of Primaries for a Wide-Gamut Television Colorimetry. IEEE Trans. Broadcast. 2010, 56, 452–457.

9. Zhang, X.; Qin, H.; Zhou, X.; Liu, M. Comparative evaluation of color reproduction ability and energy efficiency between different wide-color-gamut LED display approaches. Opt. Int. J. Light Electron Opt. 2021, 225, 165894.

10. Rowlands, D.A. Color conversion matrices in digital cameras: A tutorial. Opt. Eng. 2020, 59, 110801.

11. Ji, J.; Fang, S.; Shi, Z.; Xia, Q.; Li, Y. An efficient nonlinear polynomial color characterization method based on interrelations of color spaces. Color Res. Appl. 2020, 45, 1023–1039.

12. Gong, R.; Wang, Q.; Shao, X.; Liu, J. A color calibration method between different digital cameras. Optik 2016, 127, 3281–3285.

13. Molada-Tebar, A.; Lerma, J.L.; Marqués-Mateu, Á. Camera characterization for improving color archaeological documentation. Color Res. Appl. 2018, 43, 47–57.

14. Wu, X.; Fang, J.; Xu, H.; Wang, Z. High dynamic range image reconstruction in device-independent color space based on camera colorimetric characterization. Optik 2017, 140, 776–785.

15. Cheung, V.; Westland, S.; Connah, D.; Ripamonti, C. A comparative study of the characterization of colour cameras by means of neural networks and polynomial transforms. Color. Technol. 2004, 120, 19–25.

16. Hong, G.; Luo, M.R.; Rhodes, P.A. A study of digital camera colorimetric characterization based on polynomial modeling. Color Res. Appl. 2001, 26, 76–84.

17. Li, Y.; Li, Y.; Liao, N.; Li, H.; Lv, N.; Wu, W. Colorimetric characterization of the wide-color-gamut camera using the multilayer artificial neural network. J. Opt. Soc. Am. A 2023, 40, 629.

18. Liu, L.; Xie, X.; Zhang, Y.; Cao, F.; Liang, J.; Liao, N. Colorimetric characterization of color imaging systems using a multi-input PSO-BP neural network. Color Res. Appl. 2022, 47, 855–865.

19. Yeh, C.-H.; Lin, C.-H.; Kang, L.-W.; Huang, C.-H.; Lin, M.-H.; Chang, C.-Y.; Wang, C.-C. Lightweight deep neural network for Joint learning of underwater object detection and color conversion. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 6129–6143.

20. Ma, K.; Shi, J. Colorimetric Characterization of digital camera based on RBF neural network. Optoelectron. Imaging Multimed. Technol. VII. SPIE 2020, 11550, 282–288.

21. Wang, P.T.; Tseng, C.W.; Chou, J.J. Colorimetric characterization of color image sensors based on convolutional neural network modeling. Sens. Mater. 2019, 31, 1513–1522.

22. Miao, H.; Zhang, L. The color characteristic model based on optimized BP neural network. Lect. Notes Electr. Eng. 2016, 369, 55–63.

23. Li, X.; Zhang, T.; Nardell, C.A.; Smith, D.D.; Lu, H. New color management model for digital camera based on immune genetic algorithm and neural network. Proc. SPIE 2007, 6786, 678632.

24. Hung, P.-C. Colorimetric calibration in electronic imaging devices using a look-up-table model and interpolations. J. Electron. Imaging 1993, 2, 53–61.


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).