Text Recognition Model Based on Multi-Scale Fusion CRNN

Abstract

1. Introduction

- (1)

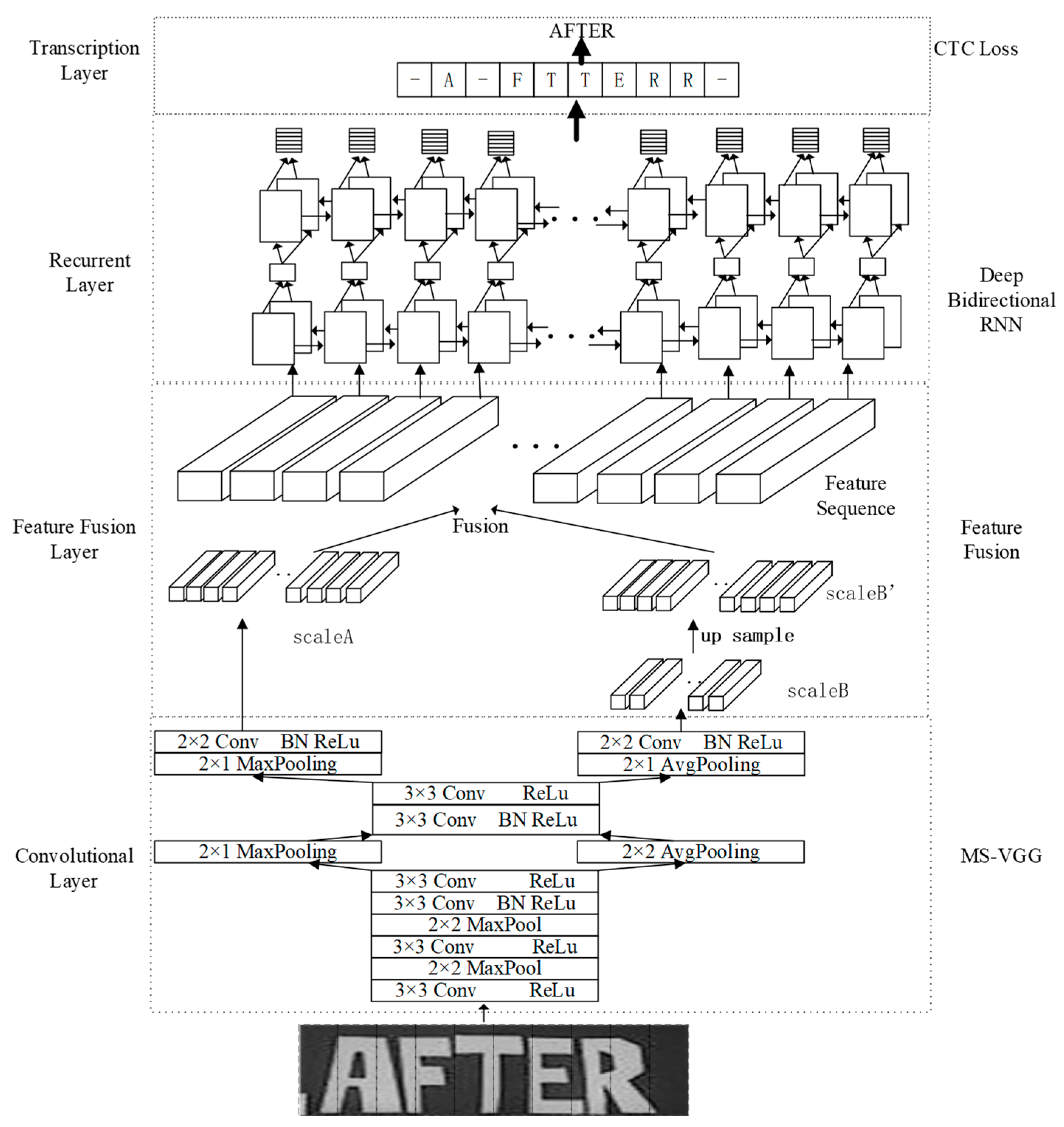

- The proposed MSF-CRNN model uses a new multi-scale output CNN in the convolutional layer. The use of two different scales of downsampling when extracting image features allows for more image features and outputs two different scales of results.

- (2)

- The MSF-CRNN model uses a new fusion approach in the feature fusion layer, which fuses the outputs of the different scales of the convolutional layer to better represent the features of the image and improve the accuracy of the recognized text.

- (3)

- The proposed model was evaluated on datasets, such as IIIT5k [18], SVT [1], ICDAR2003 [19] and ICDAR2013 [20]. Quantitative and qualitative analyses were conducted, and the experimental results demonstrate that the accuracy of the MSF-CRNN model with multiscale fusion is higher than that of the model without multiscale fusion.
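The fusion idea in contributions (1) and (2) can be illustrated with a minimal sketch. It is not the authors' implementation: the names `scale_a` and `scale_b`, the `[channel][row][column]` nested-list layout, and the use of nearest-neighbour upsampling are all assumptions made for illustration. The coarser map is upsampled to the finer map's spatial size and then fused, either by element-wise addition or by channel concatenation, the two variants compared in the fusion table.

```python
from typing import List, Tuple

# Hypothetical layout: feature_map[channel][row][column]
FeatureMap = List[List[List[float]]]


def shape(fmap: FeatureMap) -> Tuple[int, int, int]:
    """Return (channels, height, width) of a nested-list feature map."""
    return (len(fmap), len(fmap[0]), len(fmap[0][0]))


def upsample_nearest(fmap: FeatureMap, factor: int) -> FeatureMap:
    """Repeat every row and column `factor` times (assumed interpolation)."""
    out = []
    for channel in fmap:
        rows = []
        for row in channel:
            wide = [value for value in row for _ in range(factor)]
            rows.extend(list(wide) for _ in range(factor))
        out.append(rows)
    return out


def fuse(scale_a: FeatureMap, scale_b: FeatureMap, mode: str = "add") -> FeatureMap:
    """Upsample the coarser map `scale_b` and fuse it with `scale_a`."""
    factor = shape(scale_a)[1] // shape(scale_b)[1]
    up_b = upsample_nearest(scale_b, factor)
    if shape(up_b)[1:] != shape(scale_a)[1:]:
        raise ValueError("spatial sizes do not match after upsampling")
    if mode == "concat":
        # Stack along the channel axis: the channel count grows.
        return list(scale_a) + up_b
    if mode == "add":
        if len(scale_a) != len(up_b):
            raise ValueError("element-wise addition needs equal channel counts")
        return [
            [[a + b for a, b in zip(row_a, row_b)] for row_a, row_b in zip(ch_a, ch_b)]
            for ch_a, ch_b in zip(scale_a, up_b)
        ]
    raise ValueError(f"unknown fusion mode: {mode}")


# Toy inputs: one channel each, 2x4 (finer) and 1x2 (coarser).
scale_a = [[[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]]]
scale_b = [[[10.0, 20.0]]]

fused_add = fuse(scale_a, scale_b, mode="add")
fused_cat = fuse(scale_a, scale_b, mode="concat")
print(shape(fused_add), shape(fused_cat))
```

Addition keeps the channel count of the finer map, while concatenation doubles it here, so whichever layer consumes the fused features must be sized to match.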

2. Related Work

2.1. Traditional Text Recognition Algorithms

2.2. Text Recognition Algorithms Based on Attention Mechanisms

2.3. Text Recognition Algorithms Based on CTC

3. Methodology

3.1. Overall Architecture of the MSF-CRNN Model

3.2. Convolutional Layer

3.2.1. Extraction of Features at Different Scales

3.2.2. Different Downsampling Methods

3.3. Feature Fusion Layer

3.3.1. Upsampling of scaleB

3.3.2. Fusion of scaleA and scaleB

3.4. The Recurrent Layer

3.5. The Transcript Layer and Loss Function

3.5.1. The Transcript Layer

3.5.2. The Loss Function

4. Experimental Results

4.1. Benchmark Dataset

4.2. Model Performance Comparison

4.2.1. Qualitative Comparison

4.2.2. Quantitative Comparison

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Wang, K.; Babenko, B.; Belongie, S. End-to-end scene text recognition. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 1457–1464.

- [2] Cruz-Mota, J.; Bogdanova, I.; Paquier, B.; Bierlaire, M.; Thiran, J. Scale invariant feature transform on the sphere: Theory and applications. Int. J. Comput. Vis. 2012, 98, 217–241.

- [3] Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; pp. 886–893.

- [4] Ojala, T.; Pietikäinen, M.; Harwood, D. A comparative study of texture measures with classification based on featured distributions. Pattern Recognit. 1996, 29, 51–59.

- [5] Gray, R. Vector quantization. IEEE Assp Mag. 1984, 1, 4–29.

- [6] Wang, J.; Yang, J.; Yu, K.; Lv, F. Locality-constrained linear coding for image classification. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 3360–3367.

- [7] Perronnin, F.; Dance, C. Fisher kernels on visual vocabularies for image categorization. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

- [8] He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- [9] Chen, P.H.; Lin, C.J.; Schölkopf, B. A tutorial on ν-support vector machines. Appl. Stoch. Models Bus. Ind. 2005, 21, 111–136.

- [10] Liaw, A.; Wiener, M. Classification and regression by randomForest. R News 2002, 2, 18–22.

- [11] Tseng, H.; Chang, P.-C.; Andrew, G.; Jurafsky, D.; Manning, C. A conditional random field word segmenter for sighan bakeoff 2005. In Proceedings of the Fourth SIGHAN Workshop on Chinese Language Processing, Jeju Island, Korea, 14–15 October 2005; pp. 1021–1033.

- [12] Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- [13] Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- [14] Kim, J.; Lee, J.K.; Lee, K.M. Accurate image super-resolution using very deep convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 30 June 2016; pp. 1646–1654.

- [15] He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 30 June 2016; pp. 770–778.

- [16] Shi, B.; Bai, X.; Yao, C. An end-to-end trainable neural network for image-based sequence recognition and its application to scene text recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 2298–2304.

- [17] Shi, B.; Wang, X.; Lyu, P.; Yao, C.; Bai, X. Robust scene text recognition with automatic rectification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 30 June 2016; pp. 4168–4176.

- [18] Mishra, A.; Alahari, K.; Jawahar, C. Scene text recognition using higher order language priors. In Proceedings of the BMVC-British Machine Vision Conference, Surrey, UK, 3–7 September 2012; pp. 2130–2145.

- [19] Lucas, S.M.; Panaretos, A.; Sosa, L.; Tang, A. ICDAR 2003 robust reading competitions: Entries, results, and future directions. Int. J. Doc. Anal. Recognit. 2005, 7, 105–122.

- [20] Karatzas, D.; Shafait, F.; Uchida, S. ICDAR 2013 robust reading competition. In Proceedings of the 2013 12th International Conference on Document Analysis and Recognition, Washington, DC, USA, 25–28 August 2013; pp. 1484–1493.

- [21] Ye, Q.; Doermann, D. Text detection and recognition in imagery: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 1480–1500.

- [22] Wang, K.; Belongie, S. Word spotting in the wild. In Proceedings of the Computer Vision–ECCV 2010: 11th European Conference on Computer Vision, Heraklion, Crete, Greece, 5–11 September 2010; pp. 591–604.

- [23] Yao, C.; Bai, X.; Shi, B.; Liu, W. Strokelets: A learned multi-scale representation for scene text recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 4042–4049.

- [24] Neumann, L.; Matas, J. Real-time scene text localization and recognition. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3538–3545.

- [25] Almazán, J.; Gordo, A.; Fornés, A.; Valveny, E. Word spotting and recognition with embedded attributes. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 2552–2566.

- [26] Jaderberg, M.; Simonyan, K.; Vedaldi, A.; Zisserman, A. Reading text in the wild with convolutional neural networks. Int. J. Comput. Vis. 2016, 116, 1–20.

- [27] Cheng, Z.; Bai, F.; Xu, Y.; Zheng, G.; Pu, S.; Zhou, S. Focusing attention: Towards accurate text recognition in natural images. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5076–5084.

- [28] Su, B.; Lu, S. Accurate scene text recognition based on recurrent neural network. In Proceedings of the Computer Vision–ACCV 2014: 12th Asian Conference on Computer Vision, Singapore, 1–5 November 2014; pp. 35–48.

- [29] Ranjitha, P.; Rajashekar, K. Multi-oriented text recognition and classification in natural images using MSER. In Proceedings of the 2020 International Conference for Emerging Technology (INCET), Belgaum, India, 5–7 June 2020; pp. 1–5.

- [30] Graves, A.; Fernández, S.; Gomez, F.; Schmidhuber, J. Connectionist temporal classification: Labelling unsegmented sequence data with recurrent neural networks. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; pp. 369–376.

- [31] Li, Y.; Wang, Q.; Xiao, T.; Liu, T.; Zhu, J. Neural machine translation with joint representation. Proc. AAAI Conf. Artif. Intell. 2020, 34, 8285–8292.

- [32] Lee, C.-Y.; Osindero, S. Recursive recurrent nets with attention modeling for ocr in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 1 July 2016; pp. 2231–2239.

- [33] Bai, F.; Cheng, Z.; Niu, Y.; Pu, S.; Zhou, S. Edit probability for scene text recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1508–1516.

- [34] Liu, Z.; Li, Y.; Ren, F.; Goh, W.; Yu, H. Squeezedtext: A real-time scene text recognition by binary convolutional encoder-decoder network. Proc. AAAI Conf. Artif. Intell. 2018, 32, 1052–1060.

- [35] Shi, B.; Yang, M.; Wang, X.; Lyu, P.; Yao, C.; Bai, X. Aster: An attentional scene text recognizer with flexible rectification. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 2035–2048.

- [36] Lin, C.-H.; Lucey, S. Inverse compositional spatial transformer networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2568–2576.

- [37] Cheng, Z.; Xu, Y.; Bai, F.; Niu, Y.; Pu, S.; Zhou, S. Aon: Towards arbitrarily-oriented text recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5571–5579.

- [38] Liu, W.; Chen, C.; Wong, K.-Y. Char-net: A character-aware neural network for distorted scene text recognition. Proc. AAAI Conf. Artif. Intell. 2018, 32, 1330–1342.

- [39] Liao, M.; Zhang, J.; Wan, Z.; Xie, F.; Liang, J.; Lyu, P.; Yao, C.; Bai, X. Scene text recognition from two-dimensional perspective. Proc. AAAI Conf. Artif. Intell. 2019, 33, 8714–8721.

- [40] Xu, K.; Ba, J.; Kiros, R.; Cho, K.; Courville, A.; Salakhudinov, R.; Zemel, R.; Bengio, Y. Show, attend and tell: Neural image caption generation with visual attention. Int. Conf. Mach. Learn. 2015, 37, 2048–2057.

- [41] Li, H.; Wang, P.; Shen, C.; Zhang, G. Show, attend and read: A simple and strong baseline for irregular text recognition. Proc. AAAI Conf. Artif. Intell. 2019, 33, 8610–8617.

- [42] Qiao, Z.; Zhou, Y.; Yang, D.; Zhang, G. Seed: Semantics enhanced encoder-decoder framework for scene text recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 13528–13537.

- [43] Zhao, X.; Zhang, Y.; Zhang, T.; Zou, X. Channel splitting network for single MR image super-resolution. IEEE Trans. Image Process. 2019, 28, 5649–5662.

- [44] Graves, A.; Liwicki, M.; Bunke, H.; Schmidhuber, J.; Fernández, S. Unconstrained on-line handwriting recognition with recurrent neural networks. Adv. Neural Inf. Process. Syst. 2007, 20, 3120–3133.

- [45] Liu, W.; Chen, C.; Wong, K.Y.K.; Su, Z.; Han, J. Star-net: A spatial attention residue network for scene text recognition. In Proceedings of the British Machine Vision Conference, York, UK, 19–22 September 2016; pp. 7–17.

- [46] Fang, S.; Xie, H.; Zha, Z.J.; Sun, N.; Tan, J.; Zhang, Y. Attention and language ensemble for scene text recognition with convolutional sequence modeling. In Proceedings of the 26th ACM International Conference on Multimedia, New York, NY, USA, 22–26 October 2018; pp. 248–256.

- [47] Zhao, X.; Zhang, Y.; Qin, Y.; Wang, Q.; Zhang, T.; Li, T. Single MR image super-resolution via channel splitting and serial fusion network. Knowl.-Based Syst. 2022, 246, 108669.

- [48] Al-Saffar, A.; Awang, S.; Al-Saiagh, W.; Al-Khaleefa, A.; Abed, S. A Sequential Handwriting Recognition Model Based on a Dynamically Configurable CRNN. Sensors 2021, 21, 7306.

- [49] Na, B.; Kim, Y.; Park, S. Multi-modal text recognition networks: Interactive enhancements between visual and semantic features. Eur. Conf. Comput. Vis. 2022, 13688, 446–463.

- [50] Fu, Z.; Xie, H.; Jin, G.; Guo, J. Look back again: Dual parallel attention network for accurate and robust scene text recognition. In Proceedings of the 2021 International Conference on Multimedia Retrieval, New York, NY, USA, 21–24 August 2021; pp. 638–644.

- [51] Zhao, S.; Wang, X.; Zhu, L.; Yang, Y. CLIP4STR: A Simple Baseline for Scene Text Recognition with Pre-trained Vision-Language Model. arXiv 2023, arXiv:2305.14014.

- [52] Bautista, D.; Atienza, R. Scene text recognition with permuted autoregressive sequence models. Eur. Conf. Comput. Vis. 2022, 13688, 178–196.

- [53] He, Y.; Chen, C.; Zhang, J.; Liu, J.; He, F.; Wang, C.; Du, B. Visual semantics allow for textual reasoning better in scene text recognition. Proc. AAAI Conf. Artif. Intell. 2022, 36, 888–896.

- [54] Zheng, T.; Chen, Z.; Fang, S.; Xie, H.; Jiang, Y. Cdistnet: Perceiving multi-domain character distance for robust text recognition. arXiv 2021, arXiv:2111.11011.

- [55] Cui, M.; Wang, W.; Zhang, J.; Wang, L. Representation and correlation enhanced encoder-decoder framework for scene text recognition. In Proceedings of the Document Analysis and Recognition–ICDAR 2021: 16th International Conference, Lausanne, Switzerland, 5–10 September 2021; pp. 156–170.

- [56] Graves, A. Long short-term memory. Supervised Seq. Label. Recurr. Neural Netw. 2012, 385, 37–45.

- [57] Chollampatt, S.; Ng, H.T. A multilayer convolutional encoder-decoder neural network for grammatical error correction. Proc. AAAI Conf. Artif. Intell. 2018, 32, 1220–1230.

- [58] Sheng, F.; Chen, Z.; Xu, B. NRTR: A no-recurrence sequence-to-sequence model for scene text recognition. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, NSW, Australia, 20–25 September 2019; pp. 781–786.

- [59] Luo, C.; Jin, L.; Sun, Z. Moran: A multi-object rectified attention network for scene text recognition. Pattern Recognit. 2019, 90, 109–118.

- [60] Litman, R.; Anschel, O.; Tsiper, S.; Litman, R.; Mazor, S.; Manmatha, R. Scatter: Selective context attentional scene text recognizer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11962–11972.

| Scale setting | Average Precision (AP) | Average Precision of Character (APc) |
|---|---|---|
| S4 | 86.3 | 97.4 |
| S8 | 65.3 | 82.1 |
| S16 | 45.3 | 74.0 |

| Model | Average Precision (AP) | Average Precision of Character (APc) |
|---|---|---|
| MSF-CRNN + concat | 31.1 | 78.3 |
| MSF-CRNN + add | 80.4 | 91.4 |

| Models | Weights | ICDAR2003 | ICDAR2013 | IIIT5K | SVT |
|---|---|---|---|---|---|
| CRNN (BiGRU) | 29.3M | 87.3 | 84.2 | 80.1 | 79.1 |
| MSF-CRNN (BiGRU) | 31.3M | 91.2 | 92.9 | 83.3 | 83.5 |
| Improvement | | +3.9 | +8.7 | +3.2 | +4.4 |

| Models | Weights | ICDAR2003 | ICDAR2013 | IIIT5K | SVT |
|---|---|---|---|---|---|
| CRNN (BiLSTM) | 31.8M | 90.4 | 86.7 | 83.2 | 82.8 |
| MSF-CRNN (BiLSTM) | 33.9M | 96.3 | 94.9 | 91.3 | 90.1 |
| Improvement | | +5.9 | +8.2 | +8.1 | +7.3 |
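The improvement rows in the two tables above are the per-dataset difference between MSF-CRNN and the CRNN baseline with the same recurrent layer. The short check below recomputes them from the reported scores; the dictionary layout and the `gains` helper are illustrative names, not part of the paper.

```python
# Scores copied from the BiGRU and BiLSTM comparison tables.
DATASETS = ("ICDAR2003", "ICDAR2013", "IIIT5K", "SVT")
RESULTS = {
    "BiGRU": {
        "CRNN": (87.3, 84.2, 80.1, 79.1),
        "MSF-CRNN": (91.2, 92.9, 83.3, 83.5),
    },
    "BiLSTM": {
        "CRNN": (90.4, 86.7, 83.2, 82.8),
        "MSF-CRNN": (96.3, 94.9, 91.3, 90.1),
    },
}


def gains(recurrent_layer: str) -> dict:
    """Per-dataset gain of MSF-CRNN over CRNN, rounded to one decimal."""
    baseline = RESULTS[recurrent_layer]["CRNN"]
    fused = RESULTS[recurrent_layer]["MSF-CRNN"]
    return {
        name: round(f - b, 1) for name, b, f in zip(DATASETS, baseline, fused)
    }


for layer in RESULTS:
    print(layer, gains(layer))
```

Rounding to one decimal avoids floating-point noise in the subtraction, so the printed values can be compared directly with the table rows.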

| Models | Inference Time | Training Time, ICDAR2003 (min/epoch) | Training Time, ICDAR2013 (min/epoch) | Training Time, IIIT5K (min/epoch) | Training Time, SVT (min/epoch) |
|---|---|---|---|---|---|
| CRNN (BiGRU) | 42 ms | 20 min | 30 min | 18 min | 17 min |
| MSF-CRNN (BiGRU) | 46 ms | 22 min | 32 min | 20 min | 19 min |
| CRNN (BiLSTM) | 53 ms | 25 min | 35 min | 23 min | 22 min |
| MSF-CRNN (BiLSTM) | 58 ms | 26 min | 36 min | 23 min | 24 min |

| Models | ICDAR2003 | ICDAR2013 | IIIT5K | SVT |
|---|---|---|---|---|
| R2AM (2016) [32] | 97.0 | 90 | 78.4 | 80.7 |
| RARE (2016) [17] | 90.1 | 88.6 | 81.9 | 81.9 |
| FAN (2017) [27] | 94.2 | 93.3 | 87.4 | 85.9 |
| NRTR (2017) [58] | 95.4 | 94.7 | 86.5 | 88.3 |
| Char-Net (2018) [38] | 93.3 | 91.1 | 92 | 87.6 |
| ASTER (2018) [35] | 94.5 | 91.8 | 93.4 | 89.5 |
| MORAN (2019) [59] | 95.0 | 92.4 | 91.2 | 88.3 |
| SCATTER (2020) [60] | 96.1 | 93.8 | 92.9 | 89.2 |
| RCEED (2022) [55] | - | 94.7 | 94.9 | 91.8 |
| MSF-CRNN (ours) | 96.3 | 94.9 | 91.3 | 90.1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zou, L.; He, Z.; Wang, K.; Wu, Z.; Wang, Y.; Zhang, G.; Wang, X. Text Recognition Model Based on Multi-Scale Fusion CRNN. Sensors 2023, 23, 7034. https://doi.org/10.3390/s23167034
