A Review of Multi-Modal Learning from the Text-Guided Visual Processing Viewpoint

Abstract

1. Introduction

- We comparatively analyze previous and new state-of-the-art (SOTA) approaches using conventional evaluation criteria [11] and datasets, paying particular attention to models missed by earlier studies.

- We critically examine different methodologies and their problem-solving approaches to help researchers better understand present limitations and to set the stage for future research.

2. Foundation

2.1. Language and Vision AI

2.1.1. Language Models

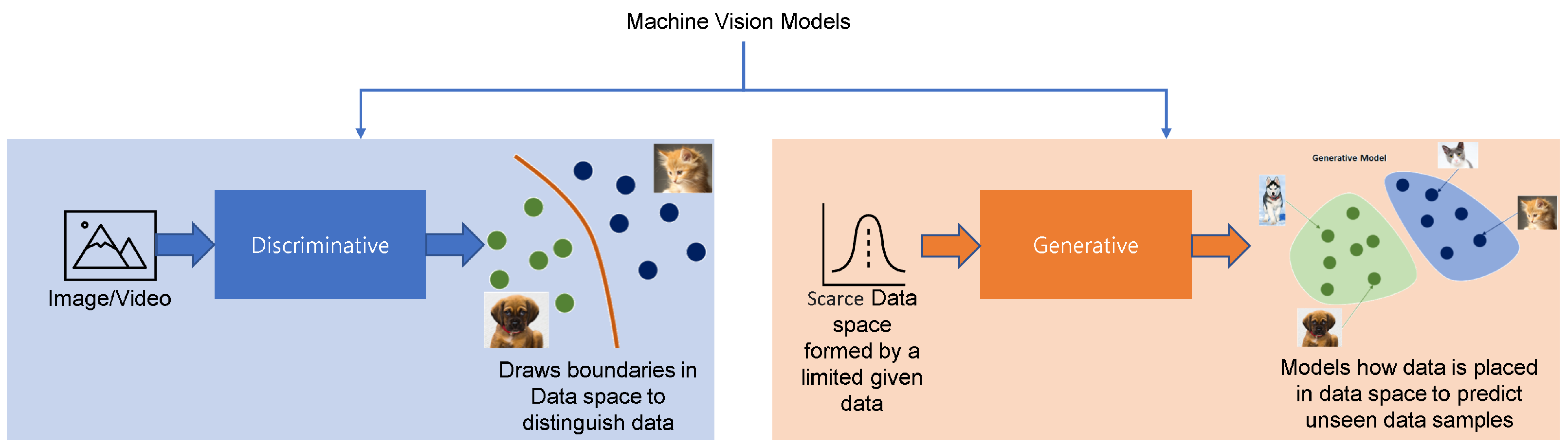

2.1.2. Visual Models

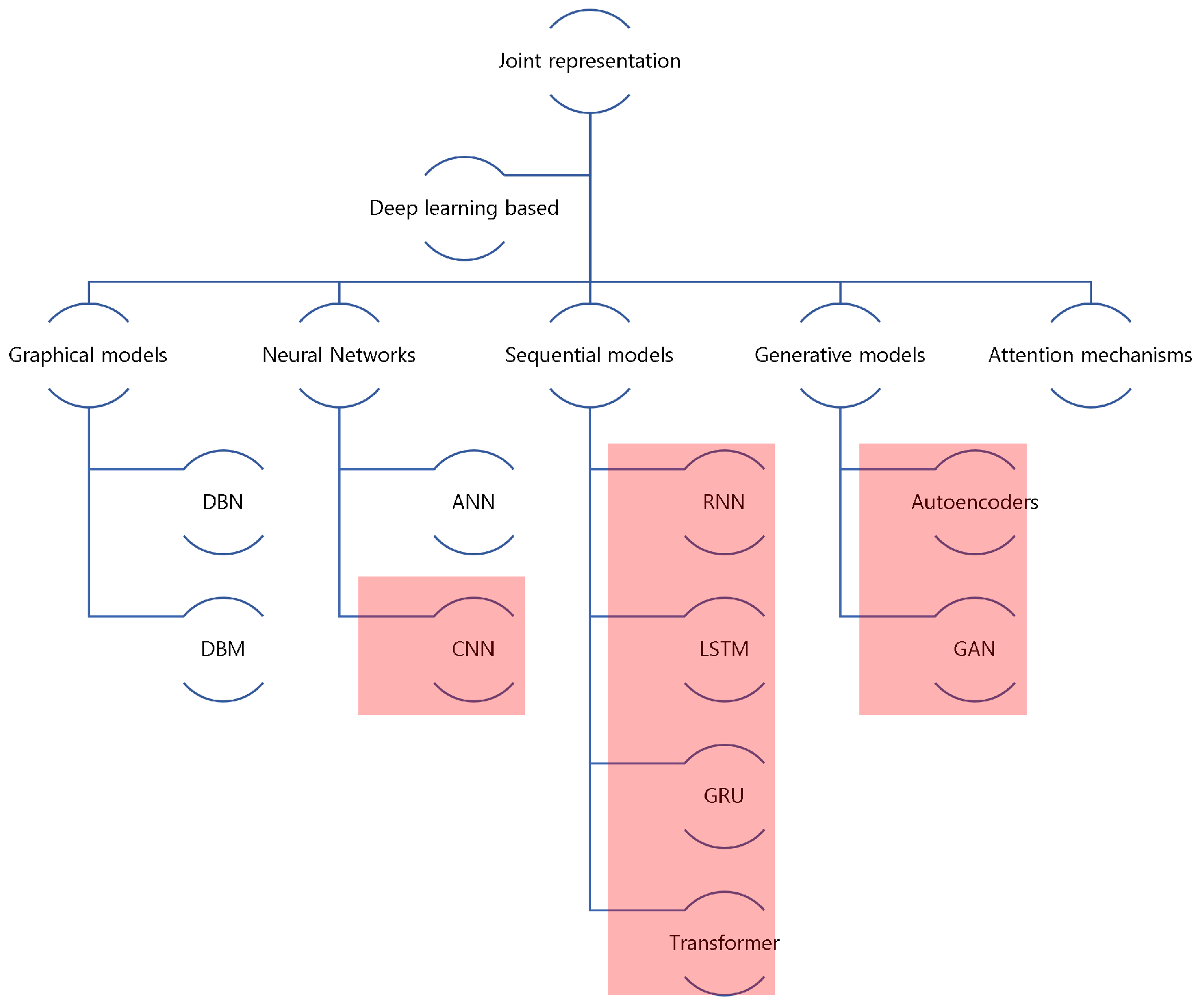

2.2. Joint Representation

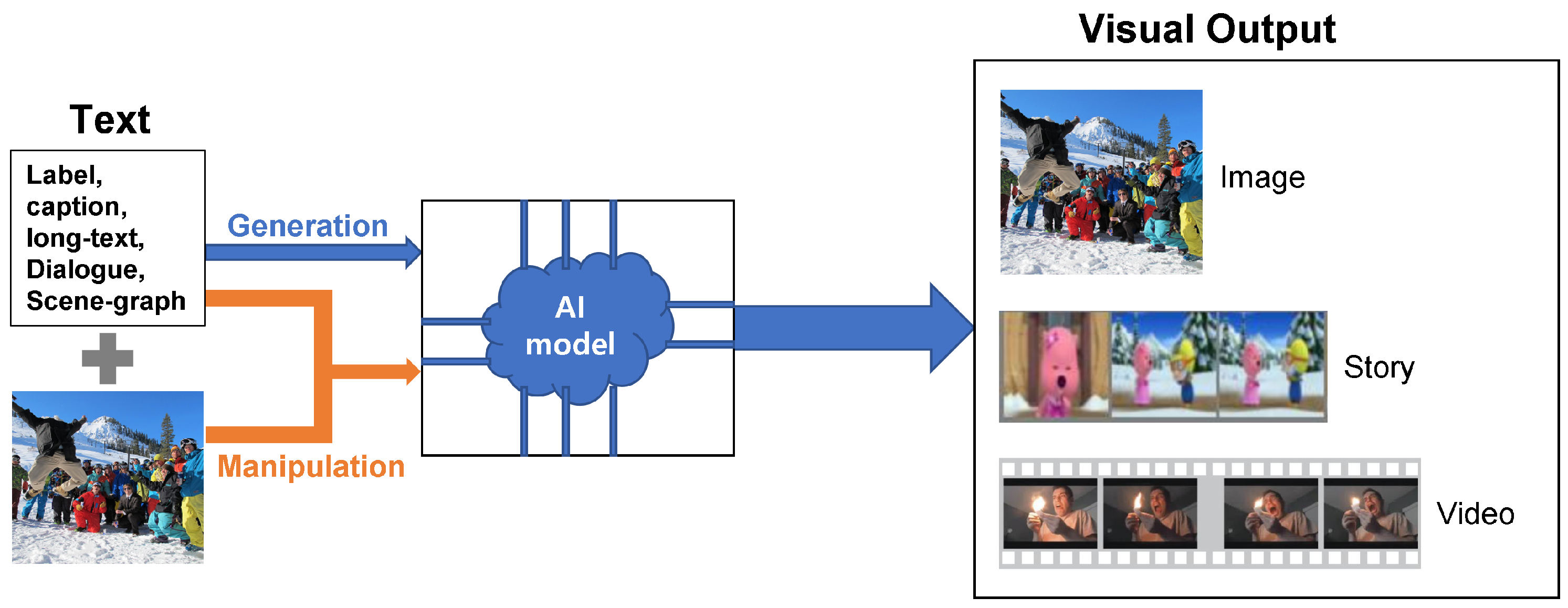

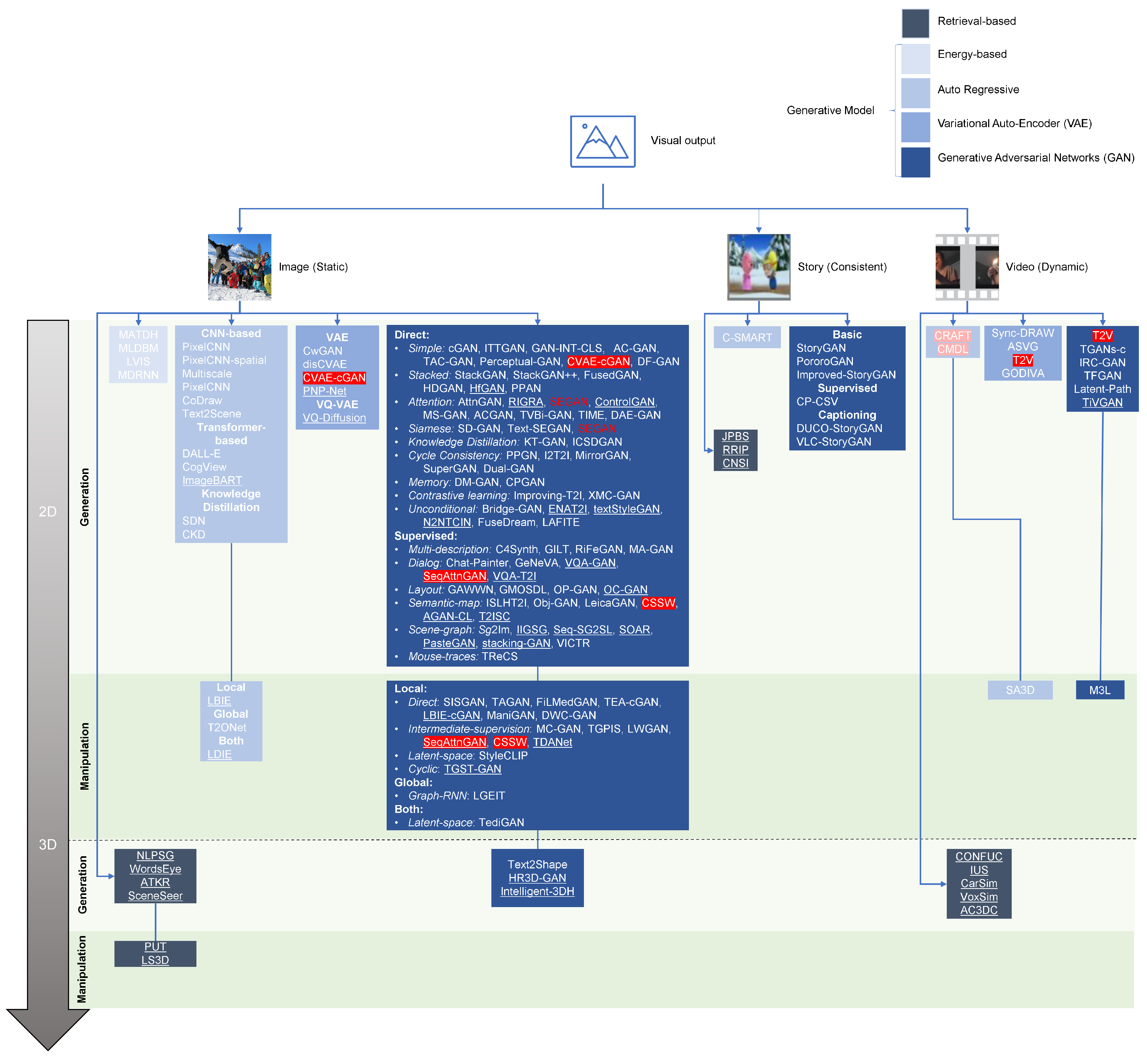
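As a rough illustration of how a joint representation is used, the sketch below assumes that a text encoder and an image encoder have already mapped a caption and several images into one shared embedding space. The vectors are hypothetical toy values, not outputs of any model discussed in this review, and the function names are illustrative. Cross-modal retrieval then reduces to choosing the image whose embedding has the highest cosine similarity with the caption embedding.

```python
import math
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same dimension")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("a zero vector has no direction")
    return dot / (norm_u * norm_v)


def retrieve_image(text_emb: Sequence[float],
                   image_embs: Sequence[Sequence[float]]) -> int:
    """Return the index of the image embedding closest to the text embedding."""
    if not image_embs:
        raise ValueError("need at least one image embedding")
    scores = [cosine_similarity(text_emb, img) for img in image_embs]
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical 3-D embeddings in a shared text-image space.
caption = [0.9, 0.1, 0.0]
images = [[0.0, 1.0, 0.0], [0.8, 0.2, 0.1], [-1.0, 0.0, 0.0]]
print(retrieve_image(caption, images))  # prints 1
```

In practice the embeddings would come from trained encoders whose training objective pulls matching text-image pairs together; the sketch covers only the scoring step.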

3. Text-Guided Visual-Output

4. Image (Static)

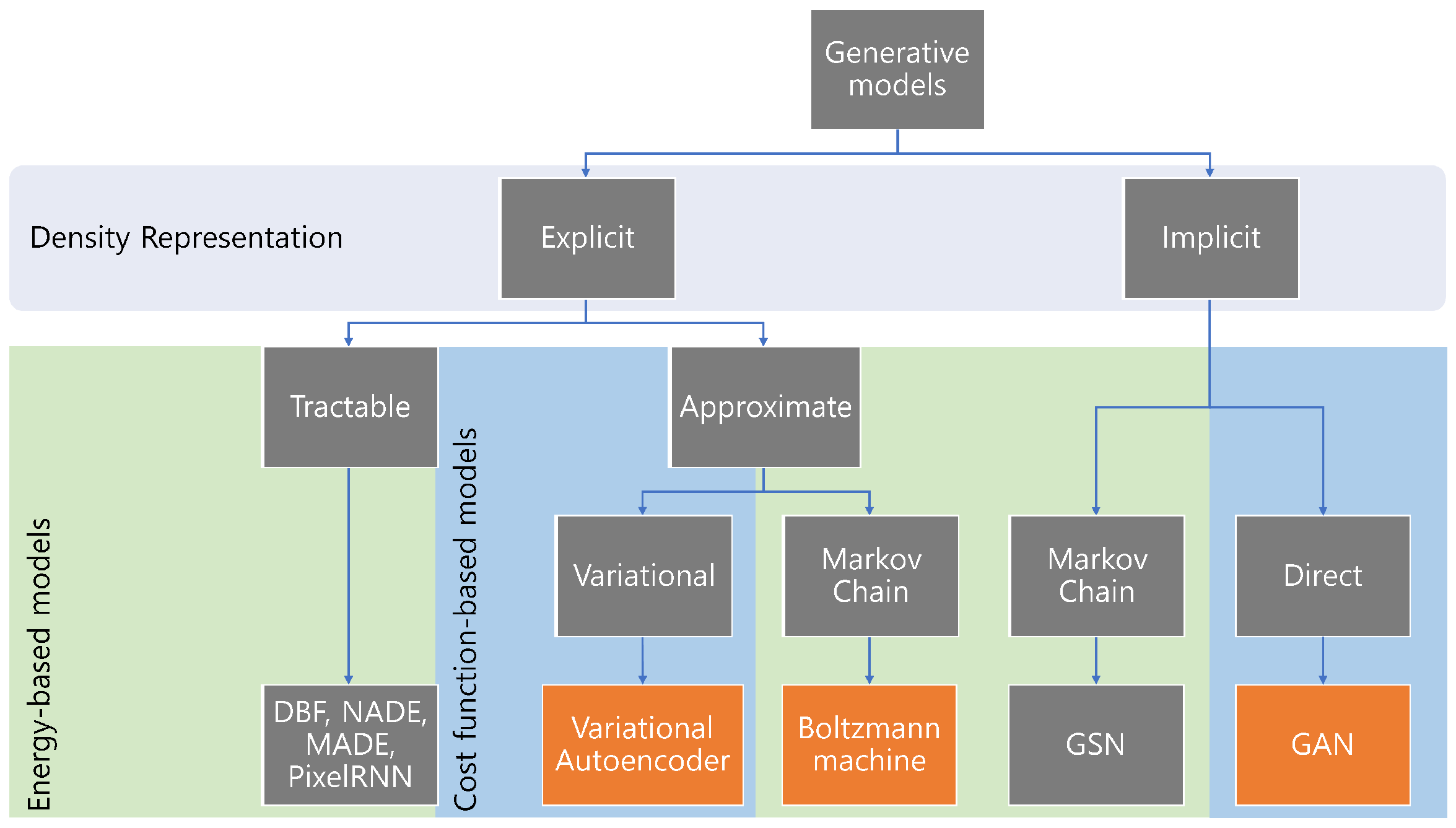

4.1. Energy-Based Models
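Energy-based models such as the Boltzmann machines cited in this review assign each configuration a scalar energy and turn it into a probability through the Boltzmann distribution. For the standard restricted Boltzmann machine with visible units $\mathbf{v}$, hidden units $\mathbf{h}$, biases $\mathbf{a}, \mathbf{b}$ and weight matrix $W$ (notation assumed here for illustration), this reads:

$$
E(\mathbf{v}, \mathbf{h}) = -\mathbf{a}^{\top}\mathbf{v} - \mathbf{b}^{\top}\mathbf{h} - \mathbf{v}^{\top} W \mathbf{h},
\qquad
p(\mathbf{v}, \mathbf{h}) = \frac{\exp\bigl(-E(\mathbf{v}, \mathbf{h})\bigr)}{Z},
\qquad
Z = \sum_{\mathbf{v}', \mathbf{h}'} \exp\bigl(-E(\mathbf{v}', \mathbf{h}')\bigr)
$$

Low-energy configurations are more probable. Because the partition function $Z$ sums over all configurations, it is intractable for realistic sizes, which is why these models are trained with approximate methods. The multimodal deep Boltzmann machine of Srivastava and Salakhutdinov stacks such layers into separate image and text pathways joined by a shared top hidden layer.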

4.2. Auto-Regressive Models
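Auto-regressive generators factorize the distribution of an image, represented as a sequence of $n$ pixels or discrete visual tokens $x_1, \dots, x_n$, into a product of conditionals, each predicted from the elements generated before it. Writing the text condition as $\mathbf{t}$ (notation assumed here), a common text-conditioned form of this chain rule is:

$$
p(\mathbf{x} \mid \mathbf{t}) = \prod_{i=1}^{n} p\left(x_i \mid x_1, \dots, x_{i-1}, \mathbf{t}\right)
$$

Training maximizes the logarithm of this product, which is a sum of per-position log-likelihoods, and sampling proceeds one element at a time. Transformer-based systems such as DALL-E instead model text and image tokens as a single stream, which amounts to applying the same chain rule to the concatenated sequence.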

4.2.1. Generation

4.2.2. Manipulation

4.3. Variational Auto-Encoder (VAE)
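A VAE is trained by maximizing the evidence lower bound (ELBO) introduced by Kingma and Welling. The version below is one common conditional form, assumed here for illustration: $q_\phi(\mathbf{z} \mid \mathbf{x}, \mathbf{c})$ is the encoder, $p_\theta(\mathbf{x} \mid \mathbf{z}, \mathbf{c})$ the decoder, $p(\mathbf{z} \mid \mathbf{c})$ the prior, and $\mathbf{c}$ a text or attribute condition. Dropping $\mathbf{c}$ recovers the unconditional bound.

$$
\log p_\theta(\mathbf{x} \mid \mathbf{c}) \ge
\mathbb{E}_{q_\phi(\mathbf{z} \mid \mathbf{x}, \mathbf{c})}\left[\log p_\theta(\mathbf{x} \mid \mathbf{z}, \mathbf{c})\right]
- D_{\mathrm{KL}}\left(q_\phi(\mathbf{z} \mid \mathbf{x}, \mathbf{c}) \,\|\, p(\mathbf{z} \mid \mathbf{c})\right)
$$

The first term rewards accurate reconstruction and the second keeps the approximate posterior close to the prior, so new images can be generated by sampling $\mathbf{z}$ from the prior and decoding it.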

4.4. Generative Adversarial Networks (GAN)

- First, we expand the previous list by adding further papers and categorizing them within the existing taxonomy.

- Second, we separate these models into generation and manipulation models based on the model input.

- Third, we consider not only 2D images but also studies that go beyond them, such as 3D images, stories, and videos.

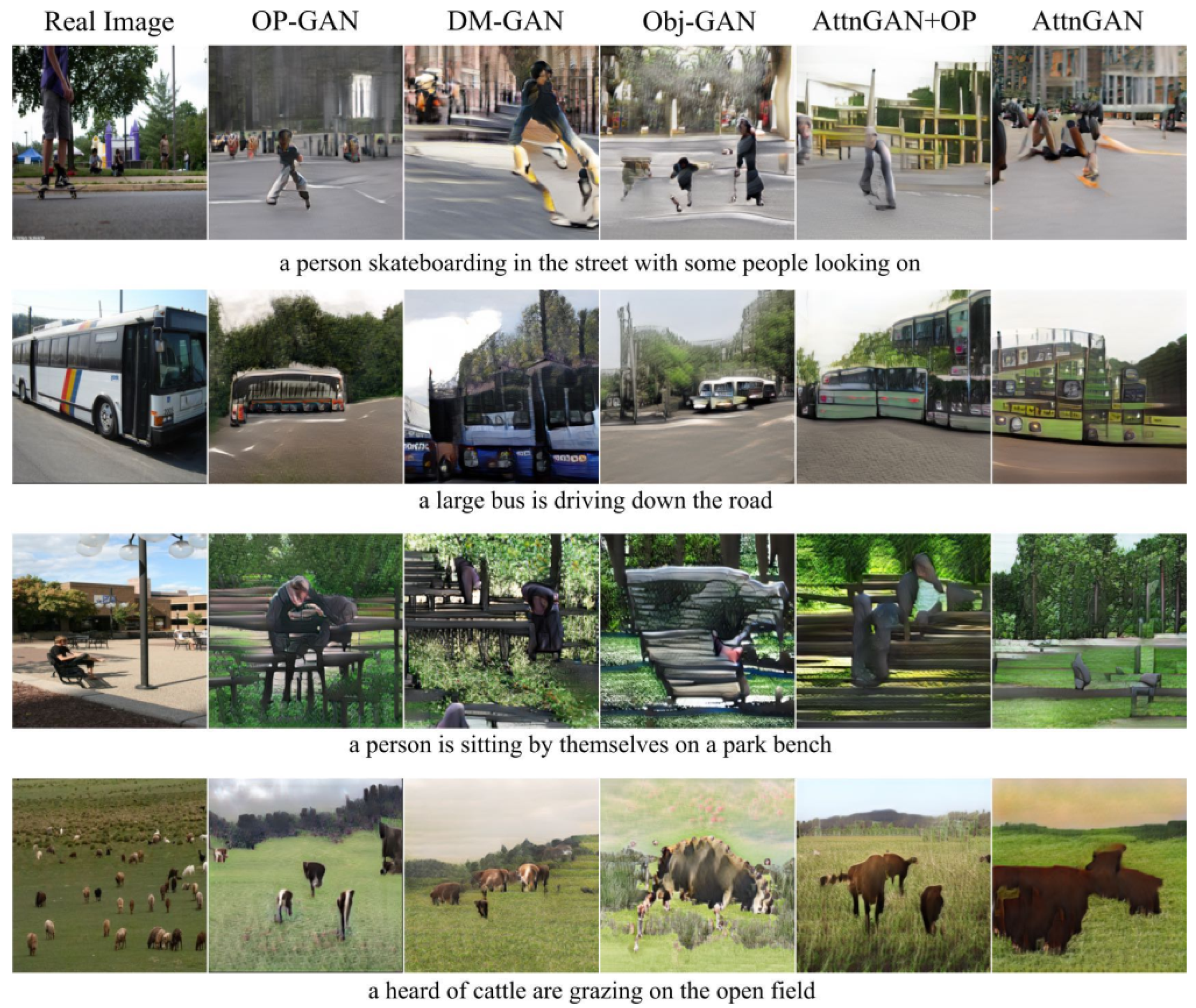
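GAN-based text-to-image models build on the conditional GAN of Mirza and Osindero, in which both the generator $G$ and the discriminator $D$ receive a condition $\mathbf{y}$ (for T2I, typically a sentence embedding) and $\mathbf{z}$ is a noise vector. The two networks play the minimax game:

$$
\min_G \max_D \; V(D, G) =
\mathbb{E}_{\mathbf{x} \sim p_{\text{data}}(\mathbf{x})}\left[\log D(\mathbf{x} \mid \mathbf{y})\right]
+ \mathbb{E}_{\mathbf{z} \sim p_{\mathbf{z}}(\mathbf{z})}\left[\log\left(1 - D\left(G(\mathbf{z} \mid \mathbf{y}) \mid \mathbf{y}\right)\right)\right]
$$

Many T2I GANs keep this basic objective and vary how $\mathbf{y}$ is injected into the networks or which auxiliary losses are added to it.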

4.4.1. Direct T2I

4.4.2. Supervised T2I

5. Story (Consistent)

5.1. GAN Model

5.2. Autoregressive Model

6. Video (Dynamic)

6.1. VAE Models

6.2. Auto-Regressive Models

6.2.1. Generation

6.2.2. Manipulation

6.3. GAN Models

6.3.1. Generation

6.3.2. Manipulation

7. Datasets

8. Evaluation Metrics and Comparisons

8.1. Automatic

8.1.1. T2I
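The Inception Score (IS) of Salimans et al. is one of the conventional automatic T2I metrics. For generated images $x$, it compares each class distribution $p(y \mid x)$ produced by a pretrained classifier with the marginal $p(y)$: $\mathrm{IS} = \exp\left(\mathbb{E}_x\, D_{\mathrm{KL}}(p(y \mid x) \,\|\, p(y))\right)$. The sketch below assumes the per-image class probabilities have already been computed (conventionally with Inception-v3). The function name and toy inputs are hypothetical, and the usual practice of averaging the score over several splits is omitted.

```python
import math
from typing import Sequence


def inception_score(probs: Sequence[Sequence[float]]) -> float:
    """Compute exp(mean_x KL(p(y|x) || p(y))) from per-image class probabilities.

    Each row of `probs` is assumed to be p(y|x) for one generated image:
    non-negative entries that sum to 1.
    """
    n = len(probs)
    if n == 0:
        raise ValueError("need at least one image")
    k = len(probs[0])
    # Marginal class distribution p(y), averaged over all generated images.
    marginal = [sum(row[j] for row in probs) / n for j in range(k)]
    total_kl = 0.0
    for row in probs:
        # KL(p(y|x) || p(y)); terms with p(y|x) = 0 contribute nothing,
        # and p > 0 guarantees marginal[j] > 0.
        total_kl += sum(
            p * math.log(p / marginal[j]) for j, p in enumerate(row) if p > 0.0
        )
    return math.exp(total_kl / n)


# Confident and diverse: each of 4 images is a different class (IS close to 4).
diverse = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
# Identical, uncertain predictions for every image (IS of 1).
collapsed = [[0.25, 0.25, 0.25, 0.25]] * 4
print(inception_score(diverse), inception_score(collapsed))
```

A high IS rewards confident and diverse class predictions, but it never looks at the caption, so it cannot tell whether an image actually matches its text description.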

8.1.2. T2S and T2V

8.2. Human Evaluation

9. Applications

Open-Source Tools

- PyTorch, TensorFlow, Keras, and scikit-learn, for deep learning (DL) and machine learning (ML);

- NumPy, for data analysis and high-performance scientific computing;

- OpenCV, for computer vision;

- NLTK and spaCy, for natural language processing (NLP);

- SciPy, for scientific and technical computing;

- pandas, for general-purpose data analysis;

- Seaborn, for data visualization.

- Kaggle (https://www.kaggle.com, accessed on 30 May 2022);

- Google Dataset Search (https://datasetsearch.research.google.com, accessed on 30 May 2022);

- UCI Machine Learning Repository (https://archive.ics.uci.edu/ml/index.php, accessed on 30 May 2022);

- OpenML (https://www.openml.org, accessed on 30 May 2022);

- DataHub (https://datahubproject.io, accessed on 30 May 2022);

- Papers with Code (https://paperswithcode.com, accessed on 30 May 2022);

- VisualData (https://visualdata.io, accessed on 30 May 2022).

10. Existing Challenges

10.1. Data Limitations

10.2. High-Dimensionality

10.3. Framework Limitations

10.4. Misleading Evaluation

11. Discussion and Future Directions

11.1. Visual Tasks

11.2. Generative Models

11.3. Cross-Modal Datasets

11.4. Evaluation Techniques

- Evaluate the correctness between the image and caption;

- Evaluate the presence of defined objects in the image;

- Evaluate how clearly the foreground is distinguished from the background;

- Evaluate the overall consistency between the previous output and the successive caption;

- Evaluate the consistency between the frames considering the spatio-temporal dynamics inherent in videos [415].
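The last criterion can be approximated, for instance, by comparing consecutive frames in a feature space. The sketch below is a hypothetical illustration, not a metric taken from the cited works: it assumes each frame has already been encoded into a feature vector by some image encoder and reports the mean cosine similarity between consecutive frames, so abrupt changes lower the score. The same cosine scoring could serve the first criterion, provided caption and image embeddings live in a joint space.

```python
import math
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same dimension")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("a zero vector has no direction")
    return dot / (norm_u * norm_v)


def temporal_consistency(frame_embs: Sequence[Sequence[float]]) -> float:
    """Mean cosine similarity between each pair of consecutive frame embeddings."""
    if len(frame_embs) < 2:
        raise ValueError("need at least two frames")
    sims = [
        cosine_similarity(frame_embs[i], frame_embs[i + 1])
        for i in range(len(frame_embs) - 1)
    ]
    return sum(sims) / len(sims)


# Hypothetical 2-D frame features.
steady = [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0]]
flicker = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
print(temporal_consistency(steady), temporal_consistency(flicker))  # 1.0 0.0
```

On its own this score can mislead: a completely static video reaches the maximum, so it would need to be combined with measures of motion and of agreement with the caption.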

12. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Kosslyn, S.M.; Ganis, G.; Thompson, W.L. Neural foundations of imagery. Nat. Rev. Neurosci. 2001, 2, 635–642.

- Zhu, X.; Goldberg, A.; Eldawy, M.; Dyer, C.; Strock, B. A Text-to-Picture Synthesis System for Augmenting Communication; AAAI Press: Vancouver, BC, Canada, 2007; p. 1590. ISBN 9781577353232.

- Srivastava, N.; Salakhutdinov, R.R. Multimodal Learning with Deep Boltzmann Machines. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2012; Volume 25.

- Mirza, M.; Osindero, S. Conditional Generative Adversarial Nets. arXiv 2014, arXiv:1411.1784.

- Mansimov, E.; Parisotto, E.; Ba, J.L.; Salakhutdinov, R. Generating Images from Captions with Attention. arXiv 2016, arXiv:1511.02793.

- Gregor, K.; Danihelka, I.; Graves, A.; Rezende, D.J.; Wierstra, D. DRAW: A Recurrent Neural Network For Image Generation. arXiv 2015, arXiv:1502.04623.

- Reed, S.; Akata, Z.; Yan, X.; Logeswaran, L.; Schiele, B.; Lee, H. Generative Adversarial Text to Image Synthesis. arXiv 2016, arXiv:1605.05396.

- Wu, X.; Xu, K.; Hall, P. A Survey of Image Synthesis and Editing with Generative Adversarial Networks. Tsinghua Sci. Technol. 2017, 22, 660–674.

- Huang, H.; Yu, P.S.; Wang, C. An Introduction to Image Synthesis with Generative Adversarial Nets. arXiv 2018, arXiv:1803.04469.

- Agnese, J.; Herrera, J.; Tao, H.; Zhu, X. A Survey and Taxonomy of Adversarial Neural Networks for Text-to-Image Synthesis. arXiv 2019, arXiv:1910.09399.

- Frolov, S.; Hinz, T.; Raue, F.; Hees, J.; Dengel, A. Adversarial Text-to-Image Synthesis: A Review. arXiv 2021, arXiv:2101.09983.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. arXiv 2014, arXiv:1406.2661.

- A Survey on Deep Multimodal Learning for Computer Vision: Advances, Trends, Applications, and Datasets; Springer: Berlin/Heidelberg, Germany, 2021.

- Baltrušaitis, T.; Ahuja, C.; Morency, L.P. Multimodal Machine Learning: A Survey and Taxonomy. arXiv 2017, arXiv:1705.09406.

- Jurafsky, D.; Martin, J.H.; Kehler, A.; Linden, K.V.; Ward, N. Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics and Speech Recognition; Amazon.com: Bellevue, WA, USA, 1999; ISBN 9780130950697.

- Weizenbaum, J. ELIZA—A computer program for the study of natural language communication between man and machine. Commun. ACM 1966, 9, 36–45.

- Khan, W.; Daud, A.; Nasir, J.A.; Amjad, T. A survey on the state-of-the-art machine learning models in the context of NLP. Kuwait J. Sci. 2016, 43, 95–113.

- Torfi, A.; Shirvani, R.A.; Keneshloo, Y.; Tavaf, N.; Fox, E.A. Natural Language Processing Advancements By Deep Learning: A Survey. arXiv 2020, arXiv:2003.01200.

- Krallinger, M.; Leitner, F.; Valencia, A. Analysis of Biological Processes and Diseases Using Text Mining Approaches. In Bioinformatics Methods in Clinical Research; Matthiesen, R., Ed.; Methods in Molecular Biology; Humana Press: Totowa, NJ, USA, 2010; pp. 341–382.

- Sutskever, I.; Martens, J.; Hinton, G. Generating text with recurrent neural networks. In Proceedings of the 28th International Conference on International Conference on Machine Learning, ICML’11, Bellevue, WA, USA, 28 June–2 July 2011; Omnipress: Madison, WI, USA, 2011; pp. 1017–1024.

- Socher, R.; Lin, C.C.Y.; Ng, A.Y.; Manning, C.D. Parsing natural scenes and natural language with recursive neural networks. In Proceedings of the 28th International Conference on International Conference on Machine Learning, ICML’11, Bellevue, WA, USA, 28 June–2 July 2011; Omnipress: Madison, WI, USA, 2011; pp. 129–136, ISBN 9781450306195.

- Le, Q.V.; Mikolov, T. Distributed Representations of Sentences and Documents. arXiv 2014, arXiv:1405.4053.

- Zhang, X.; Zhao, J.; LeCun, Y. Character-level Convolutional Networks for Text Classification. In Advances in Neural Information Processing Systems; Cortes, C., Lawrence, N., Lee, D., Sugiyama, M., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2015; Volume 28, Available online: https://proceedings.neurips.cc/paper/2015/file/250cf8b51c773f3f8dc8b4be867a9a02-Paper.pdf (accessed on 30 May 2022).

- Harris, Z.S. Distributional Structure. WORD 1954, 10, 146–162.

- Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient Estimation of Word Representations in Vector Space. arXiv 2013, arXiv:1301.3781.

- Lebanon, G. Riemannian Geometry and Statistical Machine Learning; Carnegie Mellon University: Pittsburgh, PA, USA, 2015; ISBN 978-0-496-93472-0.

- Leskovec, J.; Rajaraman, A.; Ullman, J.D. Mining of Massive Datasets, 2nd ed.; Cambridge University Press: Cambridge, UK, 2014.

- Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.; Dean, J. Distributed Representations of Words and Phrases and their Compositionality. arXiv 2013, arXiv:1310.4546.

- Peters, M.E.; Neumann, M.; Iyyer, M.; Gardner, M.; Clark, C.; Lee, K.; Zettlemoyer, L. Deep contextualized word representations. arXiv 2018, arXiv:1802.05365.

- Bojanowski, P.; Grave, E.; Joulin, A.; Mikolov, T. Enriching Word Vectors with Subword Information. arXiv 2017, arXiv:1607.04606.

- Zeng, G.; Li, Z.; Zhang, Y. Pororogan: An improved story visualization model on pororo-sv dataset. In Proceedings of the 2019 3rd International Conference on Computer Science and Artificial Intelligence, Normal, IL, USA, 6–8 December 2019; pp. 155–159.

- Zhang, H.; Xu, T.; Li, H.; Zhang, S.; Wang, X.; Huang, X.; Metaxas, D. StackGAN: Text to Photo-realistic Image Synthesis with Stacked Generative Adversarial Networks. arXiv 2017, arXiv:1612.03242.

- Zhang, H.; Xu, T.; Li, H.; Zhang, S.; Wang, X.; Huang, X.; Metaxas, D. StackGAN++: Realistic Image Synthesis with Stacked Generative Adversarial Networks. arXiv 2018, arXiv:1710.10916.

- Xu, T.; Zhang, P.; Huang, Q.; Zhang, H.; Gan, Z.; Huang, X.; He, X. AttnGAN: Fine-Grained Text to Image Generation with Attentional Generative Adversarial Networks. arXiv 2017, arXiv:1711.10485.

- Rumelhart, D.; Hinton, G.E.; Williams, R.J. Learning Internal Representations by Error Propagation; MIT Press: Cambridge, MA, USA, 1986.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Cho, K.; van Merrienboer, B.; Bahdanau, D.; Bengio, Y. On the Properties of Neural Machine Translation: Encoder-Decoder Approaches. arXiv 2014, arXiv:1409.1259.

- Fukushima, K. Neocognitron. Scholarpedia 2007, 2, 1717.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762.

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. arXiv 2014, arXiv:1412.3555.

- Kalchbrenner, N.; Espeholt, L.; Simonyan, K.; Oord, A.v.d.; Graves, A.; Kavukcuoglu, K. Neural Machine Translation in Linear Time. arXiv 2017, arXiv:1610.10099.

- Gehring, J.; Auli, M.; Grangier, D.; Yarats, D.; Dauphin, Y.N. Convolutional Sequence to Sequence Learning. arXiv 2017, arXiv:1705.03122.

- Reed, S.; Akata, Z.; Schiele, B.; Lee, H. Learning Deep Representations of Fine-grained Visual Descriptions. arXiv 2016, arXiv:1605.05395.

- Tang, G.; Müller, M.; Rios, A.; Sennrich, R. Why Self-Attention? A Targeted Evaluation of Neural Machine Translation Architectures. arXiv 2018, arXiv:1808.08946.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2019, arXiv:1810.04805.

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training; Technical Report; OpenAI: San Francisco, CA, USA, 2018; p. 12.

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models Are Unsupervised Multitask Learners; OpenAI: San Francisco, CA, USA, 2019; Volume 1, p. 24.

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. arXiv 2020, arXiv:2005.14165.

- Bengio, S.; Vinyals, O.; Jaitly, N.; Shazeer, N. Scheduled Sampling for Sequence Prediction with Recurrent Neural Networks. arXiv 2015, arXiv:1506.03099.

- Banerjee, S.; Lavie, A. METEOR: An Automatic Metric for MT Evaluation with Improved Correlation with Human Judgments. In Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization, Ann Arbor, MI, USA, 29 June 2005; Association for Computational Linguistics: Ann Arbor, MI, USA, 2005; pp. 65–72.

- Lin, C.Y. ROUGE: A Package for Automatic Evaluation of Summaries. In Text Summarization Branches Out; Association for Computational Linguistics: Barcelona, Spain, 2004; pp. 74–81.

- Keneshloo, Y.; Shi, T.; Ramakrishnan, N.; Reddy, C.K. Deep Reinforcement Learning For Sequence to Sequence Models. arXiv 2018, arXiv:1805.09461.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction, 2nd ed.; Adaptive Computation and Machine Learning Series; A Bradford Book: Cambridge, MA, USA, 2018.

- Watkins, C.J.C.H.; Dayan, P. Q-learning. Mach. Learn. 1992, 8, 279–292.

- Zaremba, W.; Sutskever, I. Reinforcement Learning Neural Turing Machines. arXiv 2015, arXiv:1505.00521.

- Williams, R.J. Simple statistical gradient-following algorithms for connectionist reinforcement learning. Mach. Learn. 1992, 8, 229–256.

- Daumé, H.; Langford, J.; Marcu, D. Search-based Structured Prediction. arXiv 2009, arXiv:0907.0786.

- Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional neural networks: An overview and application in radiology. Insights Imaging 2018, 9, 611–629.

- Sharma, N.; Jain, V.; Mishra, A. An Analysis of Convolutional Neural Networks for Image Classification. Procedia Comput. Sci. 2018, 132, 377–384.

- Liu, L.; Ouyang, W.; Wang, X.; Fieguth, P.; Chen, J.; Liu, X.; Pietikäinen, M. Deep Learning for Generic Object Detection: A Survey. Int. J. Comput. Vis. 2020, 128, 261–318.

- Nasr Esfahani, S.; Latifi, S. Image Generation with Gans-based Techniques: A Survey. Int. J. Comput. Sci. Inf. Technol. 2019, 11, 33–50.

- Li, Z.; Yang, W.; Peng, S.; Liu, F. A Survey of Convolutional Neural Networks: Analysis, Applications, and Prospects. arXiv 2020, arXiv:2004.02806.

- Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2012; Volume 25.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. arXiv 2014, arXiv:1409.4842.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. arXiv 2015, arXiv:1502.03167.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. arXiv 2015, arXiv:1512.00567.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. arXiv 2016, arXiv:1602.07261.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv 2015, arXiv:1512.03385.

- Zagoruyko, S.; Komodakis, N. Wide Residual Networks. arXiv 2017, arXiv:1605.07146.

- Targ, S.; Almeida, D.; Lyman, K. Resnet in Resnet: Generalizing Residual Architectures. arXiv 2016, arXiv:1603.08029.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. arXiv 2017, arXiv:1610.02357.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv 2020, arXiv:1905.11946.

- Xie, Q.; Luong, M.T.; Hovy, E.; Le, Q.V. Self-training with Noisy Student improves ImageNet classification. arXiv 2020, arXiv:1911.04252.

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the Knowledge in a Neural Network. arXiv 2015, arXiv:1503.02531.

- Sabour, S.; Frosst, N.; Hinton, G.E. Dynamic Routing Between Capsules. arXiv 2017, arXiv:1710.09829.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. arXiv 2020, arXiv:2005.12872.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929.

- Khan, A.; Sohail, A.; Zahoora, U.; Qureshi, A.S. A Survey of the Recent Architectures of Deep Convolutional Neural Networks. Artif. Intell. Rev. 2020, 53, 5455–5516.

- Wu, Y.N.; Gao, R.; Han, T.; Zhu, S.C. A Tale of Three Probabilistic Families: Discriminative, Descriptive and Generative Models. arXiv 2018, arXiv:1810.04261.

- Goodfellow, I. NIPS 2016 Tutorial: Generative Adversarial Networks. arXiv 2017, arXiv:1701.00160.

- Oussidi, A.; Elhassouny, A. Deep generative models: Survey. In Proceedings of the 2018 International Conference on Intelligent Systems and Computer Vision (ISCV), Fez, Morocco, 2–4 April 2018; pp. 1–8.

- Fahlman, S.; Hinton, G.E.; Sejnowski, T. Massively Parallel Architectures for AI: NETL, Thistle, and Boltzmann Machines; AAAI: Washington, DC, USA, 1983; pp. 109–113.

- Ackley, D.H.; Hinton, G.E.; Sejnowski, T.J. A learning algorithm for boltzmann machines. Cogn. Sci. 1985, 9, 147–169.

- Rumelhart, D.E.; McClelland, J.L. Information Processing in Dynamical Systems: Foundations of Harmony Theory. In Parallel Distributed Processing: Explorations in the Microstructure of Cognition: Foundations; MIT Press: Cambridge, MA, USA, 1987; pp. 194–281.

- Hinton, G.E.; Osindero, S.; Teh, Y.W. A Fast Learning Algorithm for Deep Belief Nets. Neural Comput. 2006, 18, 1527–1554.

- Salakhutdinov, R.; Hinton, G. Deep Boltzmann Machines. In Proceedings of the Twelfth International Conference on Artificial Intelligence and Statistics, Hilton Clearwater Beach Resort, Clearwater Beach, FL, USA, 16–18 April 2009; Volume 5.

- Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. arXiv 2014, arXiv:1312.6114.

- Ballard, D.H. Modular Learning in Neural Networks; AAAI Press: Seattle, WA, USA, 1987; pp. 279–284.

- Bayoudh, K.; Knani, R.; Hamdaoui, F.; Mtibaa, A. A survey on deep multimodal learning for computer vision: Advances, trends, applications, and datasets. Vis. Comput. 2022, 38, 5–7.

- Xing, E.P.; Yan, R.; Hauptmann, A.G. Mining Associated Text and Images with Dual-Wing Harmoniums. arXiv 2012, arXiv:1207.1423.

- Srivastava, N.; Salakhutdinov, R. Multimodal Learning with Deep Boltzmann Machines. J. Mach. Learn. Res. 2014, 15, 2949–2980.

- Zitnick, C.L.; Parikh, D.; Vanderwende, L. Learning the Visual Interpretation of Sentences. In Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 1681–1688.

- Sohn, K.; Shang, W.; Lee, H. Improved Multimodal Deep Learning with Variation of Information. In Advances in Neural Information Processing Systems; Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N., Weinberger, K.Q., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2014; Volume 27.

- Oord, A.V.D.; Kalchbrenner, N.; Vinyals, O.; Espeholt, L.; Graves, A.; Kavukcuoglu, K. Conditional Image Generation with PixelCNN Decoders. arXiv 2016, arXiv:1606.05328.

- Reed, S. Generating Interpretable Images with Controllable Structure. 2017, p. 13. Available online: https://openreview.net/forum?id=Hyvw0L9el (accessed on 30 May 2022).

- Reed, S.; Oord, A.V.D.; Kalchbrenner, N.; Colmenarejo, S.G.; Wang, Z.; Belov, D.; de Freitas, N. Parallel Multiscale Autoregressive Density Estimation. arXiv 2017, arXiv:1703.03664.

- Kim, J.H.; Kitaev, N.; Chen, X.; Rohrbach, M.; Zhang, B.T.; Tian, Y.; Batra, D.; Parikh, D. CoDraw: Collaborative drawing as a testbed for grounded goal-driven communication. arXiv 2017, arXiv:1712.05558.

- Tan, F.; Feng, S.; Ordonez, V. Text2Scene: Generating Compositional Scenes from Textual Descriptions. arXiv 2019, arXiv:1809.01110.

- Ramesh, A.; Pavlov, M.; Goh, G.; Gray, S.; Voss, C.; Radford, A.; Chen, M.; Sutskever, I. Zero-Shot Text-to-Image Generation. arXiv 2021, arXiv:2102.12092.

- Child, R.; Gray, S.; Radford, A.; Sutskever, I. Generating Long Sequences with Sparse Transformers. arXiv 2019, arXiv:1904.10509.

- Ding, M.; Yang, Z.; Hong, W.; Zheng, W.; Zhou, C.; Yin, D.; Lin, J.; Zou, X.; Shao, Z.; Yang, H.; et al. CogView: Mastering Text-to-Image Generation via Transformers. arXiv 2021, arXiv:2105.13290.

- Kudo, T.; Richardson, J. SentencePiece: A simple and language independent subword tokenizer and detokenizer for Neural Text Processing. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Brussels, Belgium, 31 October–4 November 2018; Association for Computational Linguistics: Brussels, Belgium, 2018; pp. 66–71.

- Esser, P.; Rombach, R.; Blattmann, A.; Ommer, B. ImageBART: Bidirectional Context with Multinomial Diffusion for Autoregressive Image Synthesis. arXiv 2021, arXiv:2108.08827.

- Yuan, M.; Peng, Y. Text-to-image Synthesis via Symmetrical Distillation Networks. arXiv 2018, arXiv:1808.06801.

- Yuan, M.; Peng, Y. CKD: Cross-Task Knowledge Distillation for Text-to-Image Synthesis. IEEE Trans. Multimed. 2020, 22, 1955–1968.

- Vinyals, O.; Toshev, A.; Bengio, S.; Erhan, D. Show and Tell: Lessons learned from the 2015 MSCOCO Image Captioning Challenge. arXiv 2016, arXiv:1609.06647.

- Yan, X.; Yang, J.; Sohn, K.; Lee, H. Attribute2Image: Conditional Image Generation from Visual Attributes. arXiv 2016, arXiv:1512.00570.

- Zhang, C.; Peng, Y. Stacking VAE and GAN for Context-aware Text-to-Image Generation. In Proceedings of the 2018 IEEE Fourth International Conference on Multimedia Big Data (BigMM), Xi’an, China, 13–16 September 2018; pp. 1–5.

- Deng, Z.; Chen, J.; Fu, Y.; Mori, G. Probabilistic Neural Programmed Networks for Scene Generation. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2018; Volume 31.

- Andreas, J.; Rohrbach, M.; Darrell, T.; Klein, D. Deep Compositional Question Answering with Neural Module Networks. arXiv 2015, arXiv:1511.02799.

- Gu, S.; Chen, D.; Bao, J.; Wen, F.; Zhang, B.; Chen, D.; Yuan, L.; Guo, B. Vector Quantized Diffusion Model for Text-to-Image Synthesis. arXiv 2022, arXiv:2111.14822.

- Sennrich, R.; Haddow, B.; Birch, A. Neural Machine Translation of Rare Words with Subword Units. arXiv 2015, arXiv:1508.07909.

- Ho, J.; Jain, A.; Abbeel, P. Denoising Diffusion Probabilistic Models. arXiv 2020, arXiv:2006.11239.

- Salimans, T.; Goodfellow, I.; Zaremba, W.; Cheung, V.; Radford, A.; Chen, X. Improved Techniques for Training GANs. arXiv 2016, arXiv:1606.03498.

- Odena, A.; Olah, C.; Shlens, J. Conditional Image Synthesis With Auxiliary Classifier GANs. arXiv 2017, arXiv:1610.09585.

- Dash, A.; Gamboa, J.C.B.; Ahmed, S.; Liwicki, M.; Afzal, M.Z. TAC-GAN - Text Conditioned Auxiliary Classifier Generative Adversarial Network. arXiv 2017, arXiv:1703.06412.

- Cha, M.; Gwon, Y.; Kung, H.T. Adversarial nets with perceptual losses for text-to-image synthesis. arXiv 2017, arXiv:1708.09321.

- Chen, K.; Choy, C.B.; Savva, M.; Chang, A.X.; Funkhouser, T.; Savarese, S. Text2Shape: Generating Shapes from Natural Language by Learning Joint Embeddings. arXiv 2018, arXiv:1803.08495.

- Fukamizu, K.; Kondo, M.; Sakamoto, R. Generation High resolution 3D model from natural language by Generative Adversarial Network. arXiv 2019, arXiv:1901.07165.

- Chen, Q.; Wu, Q.; Tang, R.; Wang, Y.; Wang, S.; Tan, M. Intelligent Home 3D: Automatic 3D-House Design From Linguistic Descriptions Only. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12625–12634.

- Schuster, S.; Krishna, R.; Chang, A.; Fei-Fei, L.; Manning, C.D. Generating Semantically Precise Scene Graphs from Textual Descriptions for Improved Image Retrieval. In Proceedings of the Fourth Workshop on Vision and Language, Lisbon, Portugal, 18 September 2015; Association for Computational Linguistics: Lisbon, Portugal, 2015; pp. 70–80.

- Tao, M.; Tang, H.; Wu, S.; Sebe, N.; Jing, X.Y.; Wu, F.; Bao, B. DF-GAN: Deep fusion generative adversarial networks for text-to-image synthesis. arXiv 2020, arXiv:2008.05865.

- Bodla, N.; Hua, G.; Chellappa, R. Semi-supervised FusedGAN for Conditional Image Generation. arXiv 2018, arXiv:1801.05551.

- Zhang, Z.; Xie, Y.; Yang, L. Photographic Text-to-Image Synthesis with a Hierarchically-nested Adversarial Network. arXiv 2018, arXiv:1802.09178.

- Gao, L.; Chen, D.; Song, J.; Xu, X.; Zhang, D.; Shen, H.T. Perceptual Pyramid Adversarial Networks for Text-to-Image Synthesis. Proc. AAAI Conf. Artif. Intell. 2019, 33, 8312–8319.

- Huang, X.; Wang, M.; Gong, M. Hierarchically-Fused Generative Adversarial Network for Text to Realistic Image Synthesis. In Proceedings of the 2019 16th Conference on Computer and Robot Vision (CRV), Kingston, QC, Canada, 29–31 May 2019; pp. 73–80.

- Huang, W.; Xu, Y.; Oppermann, I. Realistic Image Generation using Region-phrase Attention. arXiv 2019, arXiv:1902.05395.

- Tan, H.; Liu, X.; Li, X.; Zhang, Y.; Yin, B. Semantics-enhanced adversarial nets for text-to-image synthesis. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 10501–10510.

- Li, B.; Qi, X.; Lukasiewicz, T.; Torr, P.H.S. Controllable Text-to-Image Generation. arXiv 2019, arXiv:1909.07083.

- Mao, F.; Ma, B.; Chang, H.; Shan, S.; Chen, X. MS-GAN: Text to Image Synthesis with Attention-Modulated Generators and Similarity-Aware Discriminators. BMVC 2019, 150. Available online: https://bmvc2019.org/wp-content/uploads/papers/0413-paper.pdf (accessed on 30 May 2022).

- Li, L.; Sun, Y.; Hu, F.; Zhou, T.; Xi, X.; Ren, J. Text to Realistic Image Generation with Attentional Concatenation Generative Adversarial Networks. Discret. Dyn. Nat. Soc. 2020, 2020, 6452536.

- Wang, Z.; Quan, Z.; Wang, Z.J.; Hu, X.; Chen, Y. Text to Image Synthesis with Bidirectional Generative Adversarial Network. In Proceedings of the 2020 IEEE International Conference on Multimedia and Expo (ICME), London, UK, 6–10 July 2020; pp. 1–6.

- Liu, B.; Song, K.; Zhu, Y.; de Melo, G.; Elgammal, A. TIME: Text and image mutual-translation adversarial networks. arXiv 2020, arXiv:2005.13192.

- Ruan, S.; Zhang, Y.; Zhang, K.; Fan, Y.; Tang, F.; Liu, Q.; Chen, E. DAE-GAN: Dynamic aspect-aware GAN for text-to-image synthesis. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 13960–13969.

- Cha, M.; Gwon, Y.L.; Kung, H.T. Adversarial Learning of Semantic Relevance in Text to Image Synthesis. arXiv 2019, arXiv:1812.05083. [Google Scholar] [CrossRef]

- Yin, G.; Liu, B.; Sheng, L.; Yu, N.; Wang, X.; Shao, J. Semantics Disentangling for Text-to-Image Generation. arXiv 2019, arXiv:1904.01480. [Google Scholar]

- Tan, H.; Liu, X.; Liu, M.; Yin, B.; Li, X. KT-GAN: Knowledge-transfer generative adversarial network for text-to-image synthesis. IEEE Trans. Image Process. 2020, 30, 1275–1290. [Google Scholar] [CrossRef] [PubMed]

- Mao, F.; Ma, B.; Chang, H.; Shan, S.; Chen, X. Learning efficient text-to-image synthesis via interstage cross-sample similarity distillation. Sci. China Inf. Sci. 2020, 64, 120102. [Google Scholar] [CrossRef]

- Nguyen, A.; Clune, J.; Bengio, Y.; Dosovitskiy, A.; Yosinski, J. Plug & Play Generative Networks: Conditional Iterative Generation of Images in Latent Space. arXiv 2017, arXiv:1612.00005. [Google Scholar]

- Dong, H.; Zhang, J.; McIlwraith, D.; Guo, Y. I2T2I: Learning Text to Image Synthesis with Textual Data Augmentation. arXiv 2017, arXiv:1703.06676. [Google Scholar]

- Qiao, T.; Zhang, J.; Xu, D.; Tao, D. MirrorGAN: Learning Text-to-image Generation by Redescription. arXiv 2019, arXiv:1903.05854. [Google Scholar]

- Chen, Z.; Luo, Y. Cycle-Consistent Diverse Image Synthesis from Natural Language. In Proceedings of the 2019 IEEE International Conference on Multimedia Expo Workshops (ICMEW), Shanghai, China, 8–12 July 2019; pp. 459–464. [Google Scholar] [CrossRef]

- Lao, Q.; Havaei, M.; Pesaranghader, A.; Dutil, F.; Di Jorio, L.; Fevens, T. Dual Adversarial Inference for Text-to-Image Synthesis. arXiv 2019, arXiv:1908.05324.

- Zhu, M.; Pan, P.; Chen, W.; Yang, Y. DM-GAN: Dynamic Memory Generative Adversarial Networks for Text-to-Image Synthesis. arXiv 2019, arXiv:1904.01310.

- Miller, A.H.; Fisch, A.; Dodge, J.; Karimi, A.; Bordes, A.; Weston, J. Key-Value Memory Networks for Directly Reading Documents. arXiv 2016, arXiv:1606.03126.

- Liang, J.; Pei, W.; Lu, F. CPGAN: Full-Spectrum Content-Parsing Generative Adversarial Networks for Text-to-Image Synthesis. arXiv 2020, arXiv:1912.08562.

- Anderson, P.; He, X.; Buehler, C.; Teney, D.; Johnson, M.; Gould, S.; Zhang, L. Bottom-Up and Top-Down Attention for Image Captioning and VQA. arXiv 2017, arXiv:1707.07998.

- Ye, H.; Yang, X.; Takac, M.; Sunderraman, R.; Ji, S. Improving Text-to-Image Synthesis Using Contrastive Learning. arXiv 2021, arXiv:2107.02423.

- Zhang, H.; Koh, J.Y.; Baldridge, J.; Lee, H.; Yang, Y. Cross-Modal Contrastive Learning for Text-to-Image Generation. arXiv 2022, arXiv:2101.04702.

- Yuan, M.; Peng, Y. Bridge-GAN: Interpretable representation learning for text-to-image synthesis. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 4258–4268.

- Souza, D.M.; Wehrmann, J.; Ruiz, D.D. Efficient Neural Architecture for Text-to-Image Synthesis. arXiv 2020, arXiv:2004.11437.

- Brock, A.; Donahue, J.; Simonyan, K. Large Scale GAN Training for High Fidelity Natural Image Synthesis. arXiv 2018, arXiv:1809.11096.

- Stap, D.; Bleeker, M.; Ibrahimi, S.; ter Hoeve, M. Conditional Image Generation and Manipulation for User-Specified Content. arXiv 2020, arXiv:2005.04909.

- Zhang, Y.; Lu, H. Deep Cross-Modal Projection Learning for Image-Text Matching. In Computer Vision—ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 707–723.

- Karras, T.; Laine, S.; Aila, T. A Style-Based Generator Architecture for Generative Adversarial Networks. arXiv 2018, arXiv:1812.04948.

- Rombach, R.; Esser, P.; Ommer, B. Network-to-network translation with conditional invertible neural networks. Adv. Neural Inf. Process. Syst. 2020, 33, 2784–2797.

- Liu, X.; Gong, C.; Wu, L.; Zhang, S.; Su, H.; Liu, Q. FuseDream: Training-Free Text-to-Image Generation with Improved CLIP+GAN Space Optimization. arXiv 2021, arXiv:2112.01573.

- Zhou, Y.; Zhang, R.; Chen, C.; Li, C.; Tensmeyer, C.; Yu, T.; Gu, J.; Xu, J.; Sun, T. LAFITE: Towards Language-Free Training for Text-to-Image Generation. arXiv 2022, arXiv:2111.13792.

- Zhang, P.; Li, X.; Hu, X.; Yang, J.; Zhang, L.; Wang, L.; Choi, Y.; Gao, J. VinVL: Making Visual Representations Matter in Vision-Language Models. arXiv 2021, arXiv:2101.00529.

- Joseph, K.J.; Pal, A.; Rajanala, S.; Balasubramanian, V.N. C4Synth: Cross-Caption Cycle-Consistent Text-to-Image Synthesis. arXiv 2018, arXiv:1809.10238.

- El, O.B.; Licht, O.; Yosephian, N. GILT: Generating Images from Long Text. arXiv 2019, arXiv:1901.02404.

- Wang, H.; Sahoo, D.; Liu, C.; Lim, E.; Hoi, S.C.H. Learning Cross-Modal Embeddings with Adversarial Networks for Cooking Recipes and Food Images. arXiv 2019, arXiv:1905.01273.

- Cheng, J.; Wu, F.; Tian, Y.; Wang, L.; Tao, D. RiFeGAN: Rich feature generation for text-to-image synthesis from prior knowledge. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 10911–10920.

- Yang, R.; Zhang, J.; Gao, X.; Ji, F.; Chen, H. Simple and Effective Text Matching with Richer Alignment Features. arXiv 2019, arXiv:1908.00300.

- Yang, Y.; Wang, L.; Xie, D.; Deng, C.; Tao, D. Multi-Sentence Auxiliary Adversarial Networks for Fine-Grained Text-to-Image Synthesis. IEEE Trans. Image Process. 2021, 30, 2798–2809.

- Sharma, S.; Suhubdy, D.; Michalski, V.; Kahou, S.E.; Bengio, Y. ChatPainter: Improving Text to Image Generation using Dialogue. arXiv 2018, arXiv:1802.08216.

- El-Nouby, A.; Sharma, S.; Schulz, H.; Hjelm, D.; Asri, L.E.; Kahou, S.E.; Bengio, Y.; Taylor, G.W. Tell, draw, and repeat: Generating and modifying images based on continual linguistic instruction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 10304–10312.

- Pennington, J.; Socher, R.; Manning, C.D. GloVe: Global Vectors for Word Representation. In Proceedings of the Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

- Niu, T.; Feng, F.; Li, L.; Wang, X. Image Synthesis from Locally Related Texts. In Proceedings of the 2020 International Conference on Multimedia Retrieval, Dublin, Ireland, 8–11 June 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 145–153.

- Cheng, Y.; Gan, Z.; Li, Y.; Liu, J.; Gao, J. Sequential Attention GAN for Interactive Image Editing. arXiv 2020, arXiv:1812.08352.

- Frolov, S.; Jolly, S.; Hees, J.; Dengel, A. Leveraging Visual Question Answering to Improve Text-to-Image Synthesis. arXiv 2020, arXiv:2010.14953.

- Kazemi, V.; Elqursh, A. Show, Ask, Attend, and Answer: A Strong Baseline For Visual Question Answering. arXiv 2017, arXiv:1704.03162.

- Hinz, T.; Heinrich, S.; Wermter, S. Generating Multiple Objects at Spatially Distinct Locations. arXiv 2019, arXiv:1901.00686.

- Jaderberg, M.; Simonyan, K.; Zisserman, A.; Kavukcuoglu, K. Spatial Transformer Networks. arXiv 2015, arXiv:1506.02025.

- Hinz, T.; Heinrich, S.; Wermter, S. Semantic object accuracy for generative text-to-image synthesis. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 1552–1565.

- Sylvain, T.; Zhang, P.; Bengio, Y.; Hjelm, R.D.; Sharma, S. Object-Centric Image Generation from Layouts. arXiv 2020, arXiv:2003.07449.

- Goller, C.; Kuchler, A. Learning task-dependent distributed representations by backpropagation through structure. In Proceedings of the International Conference on Neural Networks (ICNN’96), Washington, DC, USA, 3–6 June 1996; Volume 1, pp. 347–352.

- Hong, S.; Yang, D.; Choi, J.; Lee, H. Inferring Semantic Layout for Hierarchical Text-to-Image Synthesis. arXiv 2018, arXiv:1801.05091.

- Ha, D.; Eck, D. A Neural Representation of Sketch Drawings. arXiv 2017, arXiv:1704.03477.

- Shi, X.; Chen, Z.; Wang, H.; Yeung, D.; Wong, W.; Woo, W. Convolutional LSTM Network: A Machine Learning Approach for Precipitation Nowcasting. arXiv 2015, arXiv:1506.04214.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Li, W.; Zhang, P.; Zhang, L.; Huang, Q.; He, X.; Lyu, S.; Gao, J. Object-driven Text-to-Image Synthesis via Adversarial Training. arXiv 2019, arXiv:1902.10740.

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. arXiv 2014, arXiv:1409.0473.

- Qiao, T.; Zhang, J.; Xu, D.; Tao, D. Learn, imagine and create: Text-to-image generation from prior knowledge. Adv. Neural Inf. Process. Syst. 2019, 32, 3–5.

- Pavllo, D.; Lucchi, A.; Hofmann, T. Controlling Style and Semantics in Weakly-Supervised Image Generation. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 482–499.

- Park, T.; Liu, M.; Wang, T.; Zhu, J. Semantic Image Synthesis with Spatially-Adaptive Normalization. arXiv 2019, arXiv:1903.07291.

- Wang, M.; Lang, C.; Liang, L.; Lyu, G.; Feng, S.; Wang, T. Attentive Generative Adversarial Network To Bridge Multi-Domain Gap For Image Synthesis. In Proceedings of the 2020 IEEE International Conference on Multimedia and Expo (ICME), London, UK, 6–10 July 2020; pp. 1–6.

- Zhu, J.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks. arXiv 2017, arXiv:1703.10593.

- Wang, M.; Lang, C.; Liang, L.; Feng, S.; Wang, T.; Gao, Y. End-to-End Text-to-Image Synthesis with Spatial Constrains. ACM Trans. Intell. Syst. Technol. 2020, 11, 47:1–47:19.

- Johnson, J.; Gupta, A.; Fei-Fei, L. Image Generation from Scene Graphs. arXiv 2018, arXiv:1804.01622.

- Chen, Q.; Koltun, V. Photographic Image Synthesis with Cascaded Refinement Networks. arXiv 2017, arXiv:1707.09405.

- Mittal, G.; Agrawal, S.; Agarwal, A.; Mehta, S.; Marwah, T. Interactive Image Generation Using Scene Graphs. arXiv 2019, arXiv:1905.03743.

- Johnson, J.; Krishna, R.; Stark, M.; Li, L.J.; Shamma, D.A.; Bernstein, M.S.; Fei-Fei, L. Image retrieval using scene graphs. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3668–3678.

- Li, B.; Zhuang, B.; Li, M.; Gu, J. Seq-SG2SL: Inferring Semantic Layout from Scene Graph Through Sequence to Sequence Learning. arXiv 2019, arXiv:1908.06592.

- Krishna, R.; Zhu, Y.; Groth, O.; Johnson, J.; Hata, K.; Kravitz, J.; Chen, S.; Kalantidis, Y.; Li, L.; Shamma, D.A.; et al. Visual Genome: Connecting Language and Vision Using Crowdsourced Dense Image Annotations. arXiv 2016, arXiv:1602.07332.

- Ashual, O.; Wolf, L. Specifying Object Attributes and Relations in Interactive Scene Generation. arXiv 2019, arXiv:1909.05379.

- Li, Y.; Ma, T.; Bai, Y.; Duan, N.; Wei, S.; Wang, X. PasteGAN: A Semi-Parametric Method to Generate Image from Scene Graph. arXiv 2019, arXiv:1905.01608.

- Vo, D.M.; Sugimoto, A. Visual-Relation Conscious Image Generation from Structured-Text. arXiv 2020, arXiv:1908.01741.

- Han, C.; Long, S.; Luo, S.; Wang, K.; Poon, J. VICTR: Visual Information Captured Text Representation for Text-to-Vision Multimodal Tasks. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 3107–3117.

- Chen, D.; Manning, C. A Fast and Accurate Dependency Parser using Neural Networks. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; Association for Computational Linguistics: Doha, Qatar, 2014; pp. 740–750.

- Koh, J.Y.; Baldridge, J.; Lee, H.; Yang, Y. Text-to-image generation grounded by fine-grained user attention. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 237–246.

- Chen, J.; Shen, Y.; Gao, J.; Liu, J.; Liu, X. Language-Based Image Editing with Recurrent Attentive Models. arXiv 2018, arXiv:1711.06288.

- Shi, J.; Xu, N.; Bui, T.; Dernoncourt, F.; Wen, Z.; Xu, C. A Benchmark and Baseline for Language-Driven Image Editing. arXiv 2020, arXiv:2010.02330.

- Yu, L.; Lin, Z.; Shen, X.; Yang, J.; Lu, X.; Bansal, M.; Berg, T.L. MAttNet: Modular Attention Network for Referring Expression Comprehension. arXiv 2018, arXiv:1801.08186.

- Shi, J.; Xu, N.; Xu, Y.; Bui, T.; Dernoncourt, F.; Xu, C. Learning by Planning: Language-Guided Global Image Editing. arXiv 2021, arXiv:2106.13156.

- Dong, H.; Yu, S.; Wu, C.; Guo, Y. Semantic Image Synthesis via Adversarial Learning. arXiv 2017, arXiv:1707.06873.

- Kiros, R.; Salakhutdinov, R.; Zemel, R.S. Unifying Visual-Semantic Embeddings with Multimodal Neural Language Models. arXiv 2014, arXiv:1411.2539.

- Nam, S.; Kim, Y.; Kim, S.J. Text-Adaptive Generative Adversarial Networks: Manipulating Images with Natural Language. arXiv 2018, arXiv:1810.11919.

- Günel, M.; Erdem, E.; Erdem, A. Language Guided Fashion Image Manipulation with Feature-wise Transformations. arXiv 2018, arXiv:1808.04000.

- Perez, E.; Strub, F.; de Vries, H.; Dumoulin, V.; Courville, A.C. FiLM: Visual Reasoning with a General Conditioning Layer. arXiv 2017, arXiv:1709.07871.

- Zhu, D.; Mogadala, A.; Klakow, D. Image Manipulation with Natural Language using Two-sided Attentive Conditional Generative Adversarial Network. arXiv 2019, arXiv:1912.07478.

- Mao, X.; Chen, Y.; Li, Y.; Xiong, T.; He, Y.; Xue, H. Bilinear Representation for Language-based Image Editing Using Conditional Generative Adversarial Networks. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 2047–2051.

- Li, B.; Qi, X.; Lukasiewicz, T.; Torr, P.H.S. ManiGAN: Text-Guided Image Manipulation. arXiv 2020, arXiv:1912.06203.

- Liu, Y.; De Nadai, M.; Cai, D.; Li, H.; Alameda-Pineda, X.; Sebe, N.; Lepri, B. Describe What to Change: A Text-guided Unsupervised Image-to-Image Translation Approach. arXiv 2020, arXiv:2008.04200.

- Liu, Y.; De Nadai, M.; Yao, J.; Sebe, N.; Lepri, B.; Alameda-Pineda, X. GMM-UNIT: Unsupervised Multi-Domain and Multi-Modal Image-to-Image Translation via Attribute Gaussian Mixture Modeling. arXiv 2020, arXiv:2003.06788.

- Park, H.; Yoo, Y.; Kwak, N. MC-GAN: Multi-conditional Generative Adversarial Network for Image Synthesis. arXiv 2018, arXiv:1805.01123.

- Zhou, X.; Huang, S.; Li, B.; Li, Y.; Li, J.; Zhang, Z. Text Guided Person Image Synthesis. arXiv 2019, arXiv:1904.05118.

- Ma, L.; Sun, Q.; Georgoulis, S.; Van Gool, L.; Schiele, B.; Fritz, M. Disentangled Person Image Generation. arXiv 2017, arXiv:1712.02621.

- Li, B.; Qi, X.; Torr, P.H.S.; Lukasiewicz, T. Lightweight Generative Adversarial Networks for Text-Guided Image Manipulation. arXiv 2020, arXiv:2010.12136.

- Zhang, L.; Chen, Q.; Hu, B.; Jiang, S. Neural Image Inpainting Guided with Descriptive Text. arXiv 2020, arXiv:2004.03212.

- Patashnik, O.; Wu, Z.; Shechtman, E.; Cohen-Or, D.; Lischinski, D. StyleCLIP: Text-Driven Manipulation of StyleGAN Imagery. arXiv 2021, arXiv:2103.17249.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. arXiv 2021, arXiv:2103.00020.

- Togo, R.; Kotera, M.; Ogawa, T.; Haseyama, M. Text-Guided Style Transfer-Based Image Manipulation Using Multimodal Generative Models. IEEE Access 2021, 9, 64860–64870.

- Wang, H.; Williams, J.D.; Kang, S. Learning to Globally Edit Images with Textual Description. arXiv 2018, arXiv:1810.05786.

- Manning, C.; Surdeanu, M.; Bauer, J.; Finkel, J.; Bethard, S.; McClosky, D. The Stanford CoreNLP Natural Language Processing Toolkit. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics: System Demonstrations, Baltimore, MD, USA, 23–24 June 2014; Association for Computational Linguistics: Baltimore, MD, USA, 2014; pp. 55–60.

- Chen, D.; Yuan, L.; Liao, J.; Yu, N.; Hua, G. StyleBank: An Explicit Representation for Neural Image Style Transfer. arXiv 2017, arXiv:1703.09210.

- Xia, W.; Yang, Y.; Xue, J.H.; Wu, B. TediGAN: Text-Guided Diverse Face Image Generation and Manipulation. arXiv 2021, arXiv:2012.03308.

- Anonymous. Generating a Temporally Coherent Image Sequence for a Story by Multimodal Recurrent Transformers. 2021. Available online: https://openreview.net/forum?id=L99I9HrEtEm (accessed on 30 May 2022).

- Li, Y.; Gan, Z.; Shen, Y.; Liu, J.; Cheng, Y.; Wu, Y.; Carin, L.; Carlson, D.; Gao, J. StoryGAN: A sequential conditional GAN for story visualization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 6329–6338.

- Cer, D.; Yang, Y.; Kong, S.; Hua, N.; Limtiaco, N.; John, R.S.; Constant, N.; Guajardo-Cespedes, M.; Yuan, S.; Tar, C.; et al. Universal Sentence Encoder. arXiv 2018, arXiv:1803.11175.

- Li, C.; Kong, L.; Zhou, Z. Improved-StoryGAN for sequential images visualization. J. Vis. Commun. Image Represent. 2020, 73, 102956.

- Yu, F.; Koltun, V. Multi-Scale Context Aggregation by Dilated Convolutions. arXiv 2015, arXiv:1511.07122.

- Song, Y.Z.; Tam, Z.R.; Chen, H.J.; Lu, H.H.; Shuai, H.H. Character-Preserving Coherent Story Visualization. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 18–33.

- Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep High-Resolution Representation Learning for Human Pose Estimation. arXiv 2019, arXiv:1902.09212.

- Maharana, A.; Hannan, D.; Bansal, M. Improving generation and evaluation of visual stories via semantic consistency. arXiv 2021, arXiv:2105.10026.

- Lei, J.; Wang, L.; Shen, Y.; Yu, D.; Berg, T.L.; Bansal, M. MART: Memory-Augmented Recurrent Transformer for Coherent Video Paragraph Captioning. arXiv 2020, arXiv:2005.05402.

- Maharana, A.; Bansal, M. Integrating Visuospatial, Linguistic and Commonsense Structure into Story Visualization. arXiv 2021, arXiv:2110.10834.

- Bauer, L.; Wang, Y.; Bansal, M. Commonsense for Generative Multi-Hop Question Answering Tasks. arXiv 2018, arXiv:1809.06309.

- Koncel-Kedziorski, R.; Bekal, D.; Luan, Y.; Lapata, M.; Hajishirzi, H. Text Generation from Knowledge Graphs with Graph Transformers. arXiv 2019, arXiv:1904.02342.

- Yang, L.; Tang, K.D.; Yang, J.; Li, L. Dense Captioning with Joint Inference and Visual Context. arXiv 2016, arXiv:1611.06949.

- Gupta, T.; Schwenk, D.; Farhadi, A.; Hoiem, D.; Kembhavi, A. Imagine This! Scripts to Compositions to Videos. arXiv 2018, arXiv:1804.03608.

- Liu, Y.; Wang, X.; Yuan, Y.; Zhu, W. Cross-Modal Dual Learning for Sentence-to-Video Generation. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 1239–1247.

- Conneau, A.; Kiela, D.; Schwenk, H.; Barrault, L.; Bordes, A. Supervised Learning of Universal Sentence Representations from Natural Language Inference Data. arXiv 2017, arXiv:1705.02364.

- Ji, S.; Xu, W.; Yang, M.; Yu, K. 3D Convolutional Neural Networks for Human Action Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 221–231.

- Huq, F.; Ahmed, N.; Iqbal, A. Static and Animated 3D Scene Generation from Free-form Text Descriptions. arXiv 2020, arXiv:2010.01549.

- Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; et al. Transformers: State-of-the-Art Natural Language Processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Online, 16–20 November 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 38–45.

- Introduction—Blender Manual. Available online: https://www.blender.org/ (accessed on 30 May 2022).

- Mittal, G.; Marwah, T.; Balasubramanian, V.N. Sync-DRAW: Automatic video generation using deep recurrent attentive architectures. In Proceedings of the 25th ACM International Conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017; pp. 1096–1104.

- Kiros, R.; Zhu, Y.; Salakhutdinov, R.; Zemel, R.S.; Torralba, A.; Urtasun, R.; Fidler, S. Skip-Thought Vectors. arXiv 2015, arXiv:1506.06726.

- Marwah, T.; Mittal, G.; Balasubramanian, V.N. Attentive Semantic Video Generation using Captions. arXiv 2017, arXiv:1708.05980.

- Li, Y.; Min, M.R.; Shen, D.; Carlson, D.; Carin, L. Video Generation From Text. arXiv 2017, arXiv:1710.00421.

- Wu, C.; Huang, L.; Zhang, Q.; Li, B.; Ji, L.; Yang, F.; Sapiro, G.; Duan, N. GODIVA: Generating Open-DomaIn Videos from nAtural Descriptions. arXiv 2021, arXiv:2104.14806.

- Pan, Y.; Qiu, Z.; Yao, T.; Li, H.; Mei, T. To Create What You Tell: Generating Videos from Captions. arXiv 2018, arXiv:1804.08264.

- Deng, K.; Fei, T.; Huang, X.; Peng, Y. IRC-GAN: Introspective Recurrent Convolutional GAN for Text-to-Video Generation. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence, IJCAI-19, Macao, China, 10–16 August 2019; pp. 2216–2222.

- Balaji, Y.; Min, M.R.; Bai, B.; Chellappa, R.; Graf, H.P. Conditional GAN with Discriminative Filter Generation for Text-to-Video Synthesis. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; pp. 1995–2001.

- Mazaheri, A.; Shah, M. Video Generation from Text Employing Latent Path Construction for Temporal Modeling. arXiv 2021, arXiv:2107.13766.

- Kim, D.; Joo, D.; Kim, J. TiVGAN: Text to Image to Video Generation with Step-by-Step Evolutionary Generator. arXiv 2021, arXiv:2009.02018.

- Fu, T.J.; Wang, X.E.; Grafton, S.T.; Eckstein, M.P.; Wang, W.Y. M3L: Language-based Video Editing via Multi-Modal Multi-Level Transformers. arXiv 2022, arXiv:2104.01122.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019, arXiv:1907.11692.

- Lafferty, J.D.; McCallum, A.; Pereira, F.C.N. Conditional Random Fields: Probabilistic Models for Segmenting and Labeling Sequence Data. In Proceedings of the Eighteenth International Conference on Machine Learning, San Francisco, CA, USA, 28 June–1 July 2001; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2001; pp. 282–289.

- van den Oord, A.; Kalchbrenner, N.; Kavukcuoglu, K. Pixel Recurrent Neural Networks. arXiv 2016, arXiv:1601.06759.

- van den Oord, A.; Vinyals, O.; Kavukcuoglu, K. Neural Discrete Representation Learning. In Advances in Neural Information Processing Systems; Guyon, I., von Luxburg, U., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: New York, NY, USA, 2018; Volume 30, Available online: https://proceedings.neurips.cc/paper/2017/file/7a98af17e63a0ac09ce2e96d03992fbc-Paper.pdf (accessed on 30 May 2022).

- Sohl-Dickstein, J.; Weiss, E.A.; Maheswaranathan, N.; Ganguli, S. Deep Unsupervised Learning using Nonequilibrium Thermodynamics. arXiv 2015, arXiv:1503.03585.

- Hu, Y.; He, H.; Xu, C.; Wang, B.; Lin, S. Exposure: A White-Box Photo Post-Processing Framework. arXiv 2017, arXiv:1709.09602.

- Park, J.; Lee, J.; Yoo, D.; Kweon, I.S. Distort-and-Recover: Color Enhancement using Deep Reinforcement Learning. arXiv 2018, arXiv:1804.04450.

- Shinagawa, S.; Yoshino, K.; Sakti, S.; Suzuki, Y.; Nakamura, S. Interactive Image Manipulation with Natural Language Instruction Commands. arXiv 2018, arXiv:1802.08645.

- Laput, G.P.; Dontcheva, M.; Wilensky, G.; Chang, W.; Agarwala, A.; Linder, J.; Adar, E. PixelTone: A Multimodal Interface for Image Editing. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Paris, France, 27 April–2 May 2013; Association for Computing Machinery: New York, NY, USA, 2013; pp. 2185–2194.

- Denton, E.L.; Chintala, S.; Szlam, A.; Fergus, R. Deep Generative Image Models using a Laplacian Pyramid of Adversarial Networks. arXiv 2015, arXiv:1506.05751.

- Lin, Z.; Feng, M.; dos Santos, C.N.; Yu, M.; Xiang, B.; Zhou, B.; Bengio, Y. A Structured Self-attentive Sentence Embedding. arXiv 2017, arXiv:1703.03130.

- Li, S.; Bak, S.; Carr, P.; Wang, X. Diversity Regularized Spatiotemporal Attention for Video-based Person Re-identification. arXiv 2018, arXiv:1803.09882.

- Lin, T.; Goyal, P.; Girshick, R.B.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. arXiv 2017, arXiv:1708.02002.

- Wang, X.; Chen, Y.; Zhu, W. A Comprehensive Survey on Curriculum Learning. arXiv 2020, arXiv:2010.13166.

- Hadsell, R.; Chopra, S.; LeCun, Y. Dimensionality Reduction by Learning an Invariant Mapping. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; Volume 2, pp. 1735–1742.

- Nguyen, A.M.; Dosovitskiy, A.; Yosinski, J.; Brox, T.; Clune, J. Synthesizing the preferred inputs for neurons in neural networks via deep generator networks. arXiv 2016, arXiv:1605.09304.

- Vinyals, O.; Toshev, A.; Bengio, S.; Erhan, D. Show and Tell: A Neural Image Caption Generator. arXiv 2014, arXiv:1411.4555.

- Donahue, J.; Krähenbühl, P.; Darrell, T. Adversarial Feature Learning. arXiv 2016, arXiv:1605.09782.

- Dumoulin, V.; Belghazi, I.; Poole, B.; Mastropietro, O.; Lamb, A.; Arjovsky, M.; Courville, A. Adversarially Learned Inference. arXiv 2016, arXiv:1606.00704.

- Saunshi, N.; Ash, J.; Goel, S.; Misra, D.; Zhang, C.; Arora, S.; Kakade, S.; Krishnamurthy, A. Understanding Contrastive Learning Requires Incorporating Inductive Biases. arXiv 2022, arXiv:2202.14037.

- Karras, T.; Aila, T.; Laine, S.; Lehtinen, J. Progressive Growing of GANs for Improved Quality, Stability, and Variation. arXiv 2017, arXiv:1710.10196.

- Dinh, L.; Krueger, D.; Bengio, Y. NICE: Non-linear Independent Components Estimation. arXiv 2014, arXiv:1410.8516.

- Dinh, L.; Sohl-Dickstein, J.; Bengio, S. Density estimation using Real NVP. arXiv 2016, arXiv:1605.08803.

- Karras, T.; Laine, S.; Aittala, M.; Hellsten, J.; Lehtinen, J.; Aila, T. Analyzing and Improving the Image Quality of StyleGAN. arXiv 2019, arXiv:1912.04958.

- Das, A.; Kottur, S.; Gupta, K.; Singh, A.; Yadav, D.; Lee, S.; Moura, J.M.F.; Parikh, D.; Batra, D. Visual Dialog. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 1242–1256.

- Johnson, J.; Hariharan, B.; van der Maaten, L.; Hoffman, J.; Fei-Fei, L.; Zitnick, C.L.; Girshick, R. Inferring and executing programs for visual reasoning. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2989–2998.

- Ben-younes, H.; Cadène, R.; Cord, M.; Thome, N. MUTAN: Multimodal Tucker Fusion for Visual Question Answering. arXiv 2017, arXiv:1705.06676.

- Goyal, Y.; Khot, T.; Summers-Stay, D.; Batra, D.; Parikh, D. Making the V in VQA Matter: Elevating the Role of Image Understanding in Visual Question Answering. arXiv 2016, arXiv:1612.00837.

- Zhao, B.; Meng, L.; Yin, W.; Sigal, L. Image Generation from Layout. arXiv 2018, arXiv:1811.11389.

- Sun, W.; Wu, T. Image Synthesis From Reconfigurable Layout and Style. arXiv 2019, arXiv:1908.07500.

- Sun, W.; Wu, T. Learning Layout and Style Reconfigurable GANs for Controllable Image Synthesis. arXiv 2020, arXiv:2003.11571.

- Girshick, R.B. Fast R-CNN. arXiv 2015, arXiv:1504.08083.

- Wu, Z.; Pan, S.; Chen, F.; Long, G.; Zhang, C.; Yu, P.S. A Comprehensive Survey on Graph Neural Networks. arXiv 2019, arXiv:1901.00596.

- Pont-Tuset, J.; Uijlings, J.; Changpinyo, S.; Soricut, R.; Ferrari, V. Connecting vision and language with localized narratives. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 647–664.

- Pose-Normalized Image Generation for Person Re-identification. arXiv 2017, arXiv:1712.02225.

- Adorni, G.; Di Manzo, M. Natural Language Input for Scene Generation. In Proceedings of the First Conference of the European Chapter of the Association for Computational Linguistics, Pisa, Italy, 1–2 September 1983; Association for Computational Linguistics: Pisa, Italy, 1983.

- Coyne, B.; Sproat, R. WordsEye: An automatic text-to-scene conversion system. In Proceedings of the 28th Annual Conference on Computer Graphics and Interactive Techniques—SIGGRAPH ’01, Los Angeles, CA, USA, 12–17 August 2001; ACM Press: New York, NY, USA, 2001; pp. 487–496.

- Chang, A.X.; Eric, M.; Savva, M.; Manning, C.D. SceneSeer: 3D Scene Design with Natural Language. arXiv 2017, arXiv:1703.00050.

- Häusser, P.; Mordvintsev, A.; Cremers, D. Learning by Association—A versatile semi-supervised training method for neural networks. arXiv 2017, arXiv:1706.00909.

- Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein GAN. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; Volume 70, pp. 214–223. Available online: https://proceedings.mlr.press/v70/arjovsky17a.html (accessed on 30 May 2022).

- Kim, G.; Moon, S.; Sigal, L. Joint photo stream and blog post summarization and exploration. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3081–3089.

- Kim, G.; Moon, S.; Sigal, L. Ranking and retrieval of image sequences from multiple paragraph queries. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1993–2001.

- Ravi, H.; Wang, L.; Muniz, C.M.; Sigal, L.; Metaxas, D.N.; Kapadia, M. Show Me a Story: Towards Coherent Neural Story Illustration. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7613–7621.

- Chen, J.; Chen, J.; Yu, Z. Incorporating Structured Commonsense Knowledge in Story Completion. arXiv 2018, arXiv:1811.00625.

- Ma, M.; Mc Kevitt, P. Virtual human animation in natural language visualisation. Artif. Intell. Rev. 2006, 25, 37–53.

- Åkerberg, O.; Svensson, H.; Schulz, B.; Nugues, P. CarSim: An Automatic 3D Text-to-Scene Conversion System Applied to Road Accident Reports. In Proceedings of the Research Notes and Demonstrations of the 10th Conference of the European Chapter of the Association of Computational Linguistics, Budapest, Hungary, 12–17 April 2003; Association of Computational Linguistics: Stroudsburg, PA, USA, 2003; pp. 191–194.

- Krishnaswamy, N.; Pustejovsky, J. VoxSim: A Visual Platform for Modeling Motion Language. In Proceedings of the COLING 2016, the 26th International Conference on Computational Linguistics: System Demonstrations, Osaka, Japan, 11–16 December 2016; The COLING 2016 Organizing Committee: Osaka, Japan, 2016; pp. 54–58.

- Hayashi, M.; Inoue, S.; Douke, M.; Hamaguchi, N.; Kaneko, H.; Bachelder, S.; Nakajima, M. T2V: New Technology of Converting Text to CG Animation. ITE Trans. Media Technol. Appl. 2014, 2, 74–81.

- El-Mashad, S.Y.; Hamed, E.H.S. Automatic creation of a 3D cartoon from natural language story. Ain Shams Eng. J. 2022, 13, 101641.

- Miech, A.; Zhukov, D.; Alayrac, J.; Tapaswi, M.; Laptev, I.; Sivic, J. HowTo100M: Learning a Text-Video Embedding by Watching Hundred Million Narrated Video Clips. arXiv 2019, arXiv:1906.03327.

- Saito, M.; Matsumoto, E.; Saito, S. Temporal Generative Adversarial Nets with Singular Value Clipping. arXiv 2017, arXiv:1611.06624.

- Tulyakov, S.; Liu, M.; Yang, X.; Kautz, J. MoCoGAN: Decomposing Motion and Content for Video Generation. arXiv 2017, arXiv:1707.04993.

- Gavrilyuk, K.; Ghodrati, A.; Li, Z.; Snoek, C.G.M. Actor and Action Video Segmentation from a Sentence. arXiv 2018, arXiv:1803.07485.

- Soomro, K.; Zamir, A.R.; Shah, M. UCF101: A Dataset of 101 Human Actions Classes From Videos in The Wild. arXiv 2012, arXiv:1212.0402.

- Clark, A.; Donahue, J.; Simonyan, K. Efficient Video Generation on Complex Datasets. arXiv 2019, arXiv:1907.06571.

- Xian, Y.; Lampert, C.H.; Schiele, B.; Akata, Z. Zero-Shot Learning—A Comprehensive Evaluation of the Good, the Bad and the Ugly. arXiv 2017, arXiv:1707.00600.

- Lampert, C.H.; Nickisch, H.; Harmeling, S. Learning to detect unseen object classes by between-class attribute transfer. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 951–958.

- Choi, Y.; Uh, Y.; Yoo, J.; Ha, J. StarGAN v2: Diverse Image Synthesis for Multiple Domains. arXiv 2019, arXiv:1912.01865.

- Netzer, Y.; Wang, T.; Coates, A.; Bissacco, A.; Wu, B.; Ng, A.Y. Reading Digits in Natural Images with Unsupervised Feature Learning. In Proceedings of the NIPS Workshop on Deep Learning and Unsupervised Feature Learning, Granada, Spain, 16–17 December 2011.

- Gonzalez-Garcia, A.; van de Weijer, J.; Bengio, Y. Image-to-image translation for cross-domain disentanglement. arXiv 2018, arXiv:1805.09730.

- Eslami, S.M.A.; Heess, N.; Weber, T.; Tassa, Y.; Kavukcuoglu, K.; Hinton, G.E. Attend, Infer, Repeat: Fast Scene Understanding with Generative Models. arXiv 2016, arXiv:1603.08575.

- Nilsback, M.E.; Zisserman, A. Automated Flower Classification over a Large Number of Classes. In Proceedings of the 2008 Sixth Indian Conference on Computer Vision, Graphics & Image Processing, Bhubaneswar, India, 16–19 December 2008; pp. 722–729.

- Wah, C.; Branson, S.; Welinder, P.; Perona, P.; Belongie, S. The Caltech-UCSD Birds-200-2011 Dataset; Technical Report CNS-TR-2011-001; California Institute of Technology: Pasadena, CA, USA, 2011.

- Finn, C.; Goodfellow, I.J.; Levine, S. Unsupervised Learning for Physical Interaction through Video Prediction. arXiv 2016, arXiv:1605.07157.

- Abolghasemi, P.; Mazaheri, A.; Shah, M.; Bölöni, L. Pay attention!—Robustifying a Deep Visuomotor Policy through Task-Focused Attention. arXiv 2018, arXiv:1809.10093.

- Huang, G.B.; Ramesh, M.; Berg, T.; Learned-Miller, E. Labeled Faces in the Wild: A Database for Studying Face Recognition in Unconstrained Environments; Technical Report 07-49; University of Massachusetts: Amherst, MA, USA, 2007.

- Berg, T.; Berg, A.; Edwards, J.; Maire, M.; White, R.; Teh, Y.W.; Learned-Miller, E.; Forsyth, D. Names and faces in the news. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004; Volume 2, pp. 2–4.

- Viola, P.; Jones, M. Robust Real-Time Face Detection. Int. J. Comput. Vis. 2004, 57, 137–154.

- Liu, Z.; Luo, P.; Wang, X.; Tang, X. Deep Learning Face Attributes in the Wild. arXiv 2014, arXiv:1411.7766.

- Sun, Y.; Wang, X.; Tang, X. Deep Learning Face Representation by Joint Identification-Verification. arXiv 2014, arXiv:1406.4773.

- Kazemi, V.; Sullivan, J. One millisecond face alignment with an ensemble of regression trees. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1867–1874.

- Antol, S.; Agrawal, A.; Lu, J.; Mitchell, M.; Batra, D.; Zitnick, C.L.; Parikh, D. VQA: Visual Question Answering. arXiv 2015, arXiv:1505.00468.

- Zhang, P.; Goyal, Y.; Summers-Stay, D.; Batra, D.; Parikh, D. Yin and Yang: Balancing and Answering Binary Visual Questions. arXiv 2015, arXiv:1511.05099.

- Salvador, A.; Hynes, N.; Aytar, Y.; Marin, J.; Ofli, F.; Weber, I.; Torralba, A. Learning Cross-Modal Embeddings for Cooking Recipes and Food Images. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3068–3076.

- Zitnick, C.L.; Parikh, D. Bringing Semantics into Focus Using Visual Abstraction. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 3009–3016.

- Kim, J.; Parikh, D.; Batra, D.; Zhang, B.; Tian, Y. CoDraw: Visual Dialog for Collaborative Drawing. arXiv 2017, arXiv:1712.05558.

- Johnson, J.; Hariharan, B.; van der Maaten, L.; Fei-Fei, L.; Zitnick, C.L.; Girshick, R.B. CLEVR: A Diagnostic Dataset for Compositional Language and Elementary Visual Reasoning. arXiv 2016, arXiv:1612.06890.

- Gwern, B.; Anonymous; Danbooru Community. Danbooru2019 Portraits: A Large-Scale Anime Head Illustration Dataset. 2019. Available online: https://www.gwern.net/Crops#danbooru2019-portraits (accessed on 30 May 2022).

- Anonymous; Danbooru Community; Gwern, B. Danbooru2021: A Large-Scale Crowdsourced and Tagged Anime Illustration Dataset. Available online: https://www.gwern.net/Danbooru2021 (accessed on 30 May 2022).

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes Challenge 2007 (VOC2007) Results. Available online: http://www.pascal-network.org/challenges/VOC/voc2007/workshop/index.html (accessed on 30 May 2022).

- Guillaumin, M.; Verbeek, J.; Schmid, C. Multimodal semi-supervised learning for image classification. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 902–909.

- Huiskes, M.J.; Lew, M.S. The MIR Flickr Retrieval Evaluation. In Proceedings of the 1st ACM International Conference on Multimedia Information Retrieval, Vancouver, BC, Canada, 30–31 October 2008; Association for Computing Machinery: New York, NY, USA, 2008; pp. 39–43.

- Huiskes, M.J.; Thomee, B.; Lew, M.S. New Trends and Ideas in Visual Concept Detection: The MIR Flickr Retrieval Evaluation Initiative. In Proceedings of the International Conference on Multimedia Information Retrieval, Philadelphia, PA, USA, 29–31 March 2010; Association for Computing Machinery: New York, NY, USA, 2010; pp. 527–536.

- Bosch, A.; Zisserman, A.; Munoz, X. Image Classification using Random Forests and Ferns. In Proceedings of the 2007 IEEE 11th International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–21 October 2007; pp. 1–8.

- Oliva, A.; Torralba, A. Modeling the Shape of the Scene: A Holistic Representation of the Spatial Envelope. Int. J. Comput. Vis. 2001, 42, 145–175.

- Manjunath, B.; Ohm, J.R.; Vasudevan, V.; Yamada, A. Color and texture descriptors. IEEE Trans. Circuits Syst. Video Technol. 2001, 11, 703–715.

- Krizhevsky, A. Learning Multiple Layers of Features from Tiny Images; Technical Report; University of Toronto: Toronto, ON, Canada, 2009.

- Fellbaum, C. (Ed.) WordNet: An Electronic Lexical Database; Language, Speech, and Communication; A Bradford Book: Cambridge, MA, USA, 1998.

- Yu, F.; Seff, A.; Zhang, Y.; Song, S.; Funkhouser, T.; Xiao, J. LSUN: Construction of a Large-scale Image Dataset using Deep Learning with Humans in the Loop. arXiv 2016, arXiv:1506.03365.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes Challenge 2012 (VOC2012) Results. Available online: http://www.pascal-network.org/challenges/VOC/voc2012/workshop/index.html (accessed on 30 May 2022).

- Xiao, J.; Hays, J.; Ehinger, K.A.; Oliva, A.; Torralba, A. SUN database: Large-scale scene recognition from abbey to zoo. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 3485–3492.

- Thomee, B.; Shamma, D.A.; Friedland, G.; Elizalde, B.; Ni, K.; Poland, D.; Borth, D.; Li, L. The New Data and New Challenges in Multimedia Research. arXiv 2015, arXiv:1503.01817.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Lin, T.; Maire, M.; Belongie, S.J.; Bourdev, L.D.; Girshick, R.B.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. arXiv 2014, arXiv:1405.0312.

- Caesar, H.; Uijlings, J.R.R.; Ferrari, V. COCO-Stuff: Thing and Stuff Classes in Context. arXiv 2016, arXiv:1612.03716.

- Sharma, P.; Ding, N.; Goodman, S.; Soricut, R. Conceptual Captions: A Cleaned, Hypernymed, Image Alt-text Dataset For Automatic Image Captioning. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; pp. 2556–2565.

- Chambers, C.; Raniwala, A.; Perry, F.; Adams, S.; Henry, R.R.; Bradshaw, R.; Weizenbaum, N. FlumeJava: Easy, Efficient Data-Parallel Pipelines. In Proceedings of the 31st ACM SIGPLAN Conference on Programming Language Design and Implementation, Toronto, ON, Canada, 5–10 June 2010; Association for Computing Machinery: New York, NY, USA, 2010; pp. 363–375.

- Kuznetsova, A.; Rom, H.; Alldrin, N.; Uijlings, J.R.R.; Krasin, I.; Pont-Tuset, J.; Kamali, S.; Popov, S.; Malloci, M.; Duerig, T.; et al. The Open Images Dataset V4: Unified image classification, object detection, and visual relationship detection at scale. arXiv 2018, arXiv:1811.00982.

- Schuhmann, C.; Vencu, R.; Beaumont, R.; Kaczmarczyk, R.; Mullis, C.; Katta, A.; Coombes, T.; Jitsev, J.; Komatsuzaki, A. LAION-400M: Open Dataset of CLIP-Filtered 400 Million Image-Text Pairs. arXiv 2021, arXiv:2111.02114.

- Common Crawl. Available online: https://commoncrawl.org/ (accessed on 30 May 2022).

- Chang, A.X.; Funkhouser, T.A.; Guibas, L.J.; Hanrahan, P.; Huang, Q.; Li, Z.; Savarese, S.; Savva, M.; Song, S.; Su, H.; et al. ShapeNet: An Information-Rich 3D Model Repository. arXiv 2015, arXiv:1512.03012.

- Kazemzadeh, S.; Ordonez, V.; Matten, M.; Berg, T. ReferItGame: Referring to Objects in Photographs of Natural Scenes. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; Association for Computational Linguistics: Doha, Qatar, 2014; pp. 787–798.

- Grubinger, M.; Clough, P.; Müller, H.; Deselaers, T. The IAPR TC12 Benchmark: A New Evaluation Resource for Visual Information Systems. In Proceedings of the International Workshop OntoImage, Genova, Italy, 22 May 2006; Volume 2.

- Escalante, H.J.; Hernández, C.A.; Gonzalez, J.A.; López-López, A.; Montes, M.; Morales, E.F.; Enrique Sucar, L.; Villaseñor, L.; Grubinger, M. The Segmented and Annotated IAPR TC-12 Benchmark. Comput. Vis. Image Underst. 2010, 114, 419–428.

- Zhu, S.; Fidler, S.; Urtasun, R.; Lin, D.; Loy, C.C. Be Your Own Prada: Fashion Synthesis with Structural Coherence. arXiv 2017, arXiv:1710.07346.

- Bychkovsky, V.; Paris, S.; Chan, E.; Durand, F. Learning Photographic Global Tonal Adjustment with a Database of Input/Output Image Pairs. In Proceedings of the Twenty-Fourth IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011.

- Yu, A.; Grauman, K. Fine-Grained Visual Comparisons with Local Learning. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 192–199.

- Liu, Z.; Luo, P.; Qiu, S.; Wang, X.; Tang, X. DeepFashion: Powering Robust Clothes Recognition and Retrieval with Rich Annotations. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1096–1104.

- Zhopped—The First, Free Image Editing Community. Available online: http://zhopped.com/ (accessed on 30 May 2022).

- Reddit—Dive into Anything. Available online: https://www.reddit.com/ (accessed on 30 May 2022).

- Huang, T.H.K.; Ferraro, F.; Mostafazadeh, N.; Misra, I.; Agrawal, A.; Devlin, J.; Girshick, R.; He, X.; Kohli, P.; Batra, D.; et al. Visual Storytelling. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; Association for Computational Linguistics: San Diego, CA, USA, 2016; pp. 1233–1239.

- Kim, K.; Heo, M.; Choi, S.; Zhang, B. DeepStory: Video Story QA by Deep Embedded Memory Networks. arXiv 2017, arXiv:1707.00836.