Indoor Visual Exploration with Multi-Rotor Aerial Robotic Vehicles

Abstract

1. Introduction

2. Related Work

2.1. State-of-the-Art on Autonomous Robot Exploration

2.2. State-of-the-Art on Multi-Robot Exploration

2.3. Proposed Method

- Developing an exploration algorithm based on artificial harmonic potential fields (AHPF) for a multi-rotor platform,

- Extending this scheme to the multi-robot exploration problem,

- Integrating the resulting exploration framework with a scheme for single-agent visual map-building of unknown workspaces, combined with inter-agent information exchange.

3. Problem Formulation

Proposed Sub-Problems

- Localization and Mapping,

- Path Planning,

- Tracking Problem.

4. Materials and Methods

4.1. Multirotor Kinematics and Dynamics

- denotes the drag forces and the drag coefficient matrix;

- denotes the gravitational force, where g is the gravitational acceleration;

- denotes the total thrust generated by the motors;

- denotes the torque produced by the motors;

- are the drag moments with denoting the drag moment coefficient matrix;
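As a reading aid, the terms listed above can be placed in one common body-frame rigid-body model. This is a sketch under stated assumptions, not necessarily the formulation used in the paper: every symbol here ($m$, $\mathbf{I}$, $\mathbf{v}$, $\boldsymbol{\omega}$, $\mathbf{R}$, $\mathbf{F}_D$, $\mathbf{D}$, $\mathbf{F}_g$, $\mathbf{T}$, $\boldsymbol{\tau}$, $\mathbf{M}_D$, $\mathbf{D}_M$) is chosen for illustration, drag is assumed linear in velocity, and the body z-axis is assumed to point downward.

```latex
% Illustrative notation only; symbols and frame conventions are assumptions.
% v, omega: body-frame linear and angular velocity; m: mass; I: inertia matrix;
% R: rotation from the body frame to the inertial frame.
\begin{align}
m\,\dot{\mathbf{v}} + \boldsymbol{\omega} \times (m\,\mathbf{v})
  &= \mathbf{F}_D + \mathbf{F}_g + \mathbf{T}, \\
\mathbf{I}\,\dot{\boldsymbol{\omega}} + \boldsymbol{\omega} \times (\mathbf{I}\,\boldsymbol{\omega})
  &= \boldsymbol{\tau} + \mathbf{M}_D,
\end{align}
% Term definitions under the linear-drag, z-down assumptions:
\begin{align}
\mathbf{F}_D &= -\mathbf{D}\,\mathbf{v}, &
\mathbf{F}_g &= \mathbf{R}^{\top}\,[0,\ 0,\ m g]^{\top}, \\
\mathbf{T} &= [0,\ 0,\ -T]^{\top}, &
\mathbf{M}_D &= -\mathbf{D}_M\,\boldsymbol{\omega}.
\end{align}
```

In this form the drag coefficient matrices $\mathbf{D}$ and $\mathbf{D}_M$ act only as damping terms, while the thrust magnitude $T$ and the torque $\boldsymbol{\tau}$ are the inputs produced by the motors.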

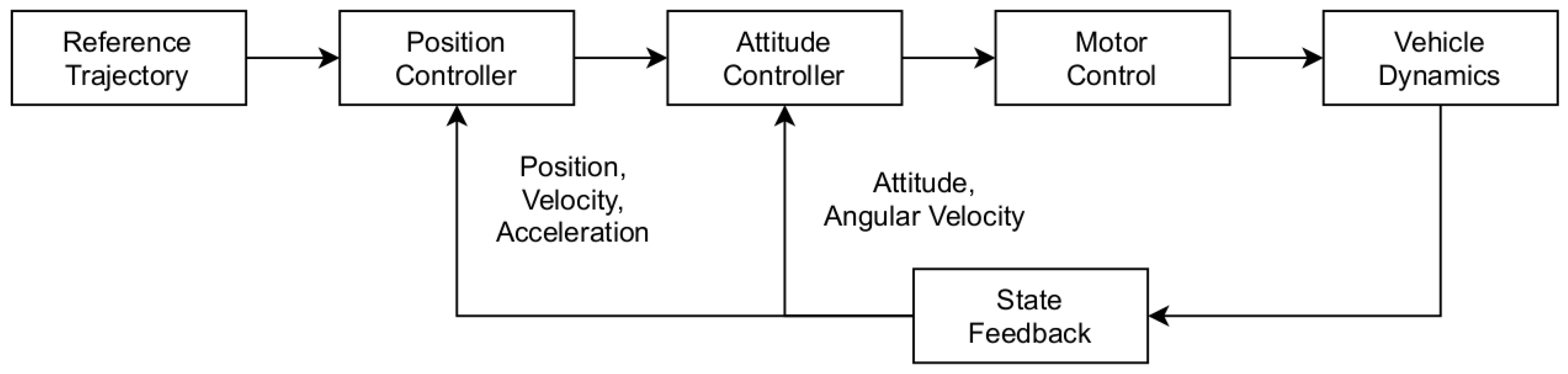

4.2. Autopilot and On-Board Sensors

4.3. Localization and Mapping

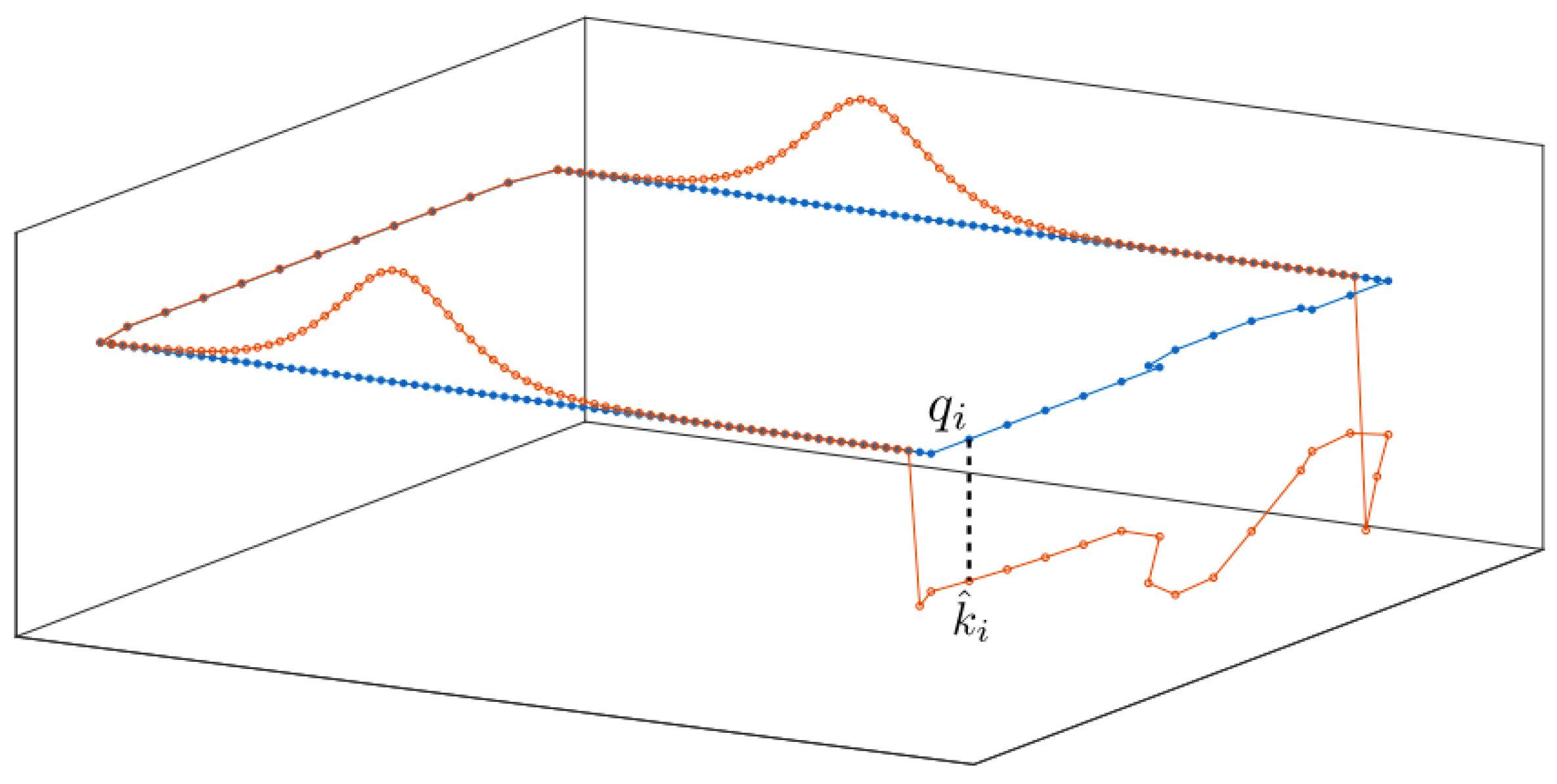

4.4. Path Planning

4.4.1. Velocity Field

4.4.2. Boundary Discretization

4.4.3. Fast Multipole Boundary Elements

4.4.4. A Brief Discussion about the Algorithm

4.4.5. Technical Results

4.5. Tracking Controller

- The vectors point to different directions, and

- The vectors point to different directions.

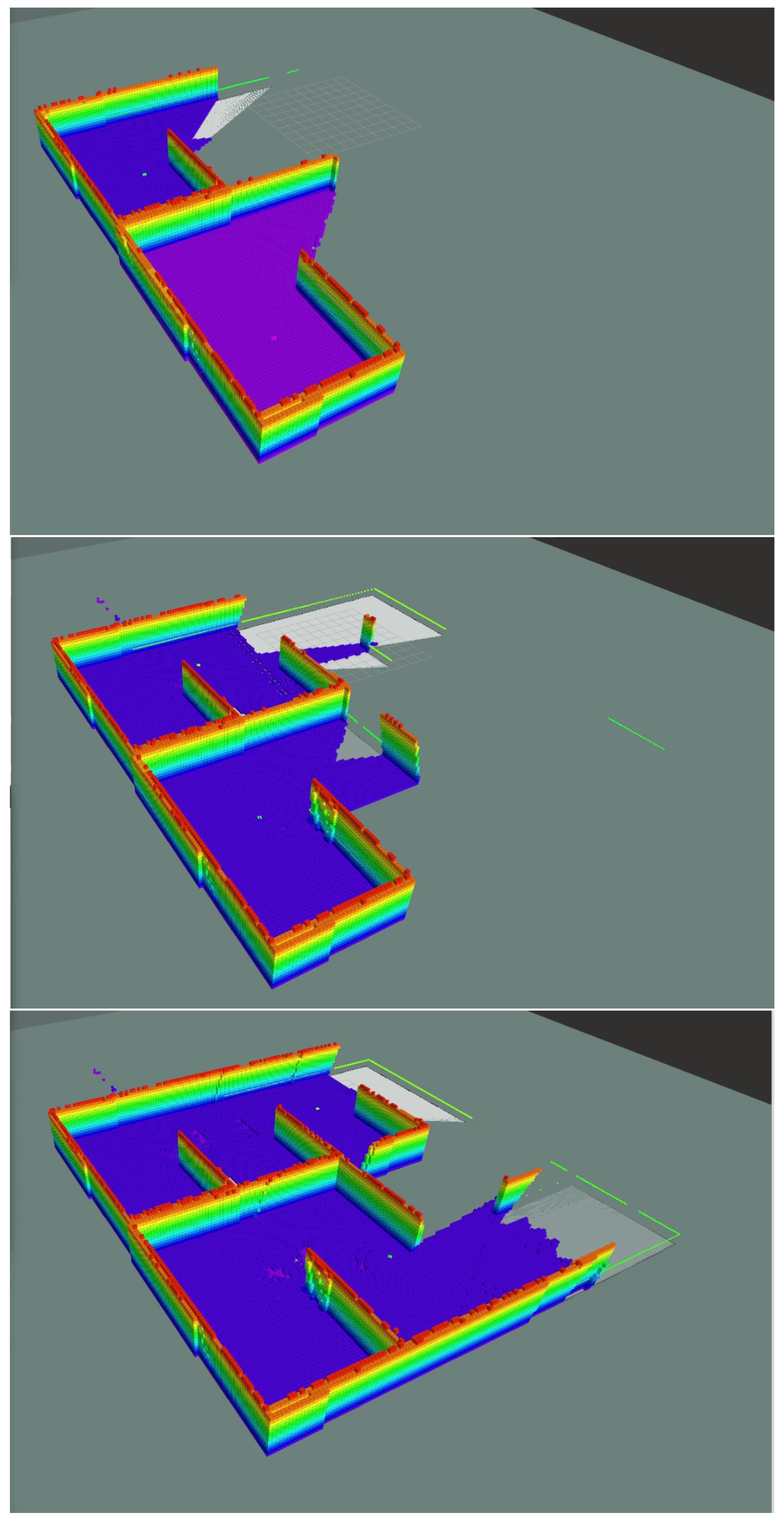

4.6. OctoMap Building

4.7. Drone Communication and Map Merging

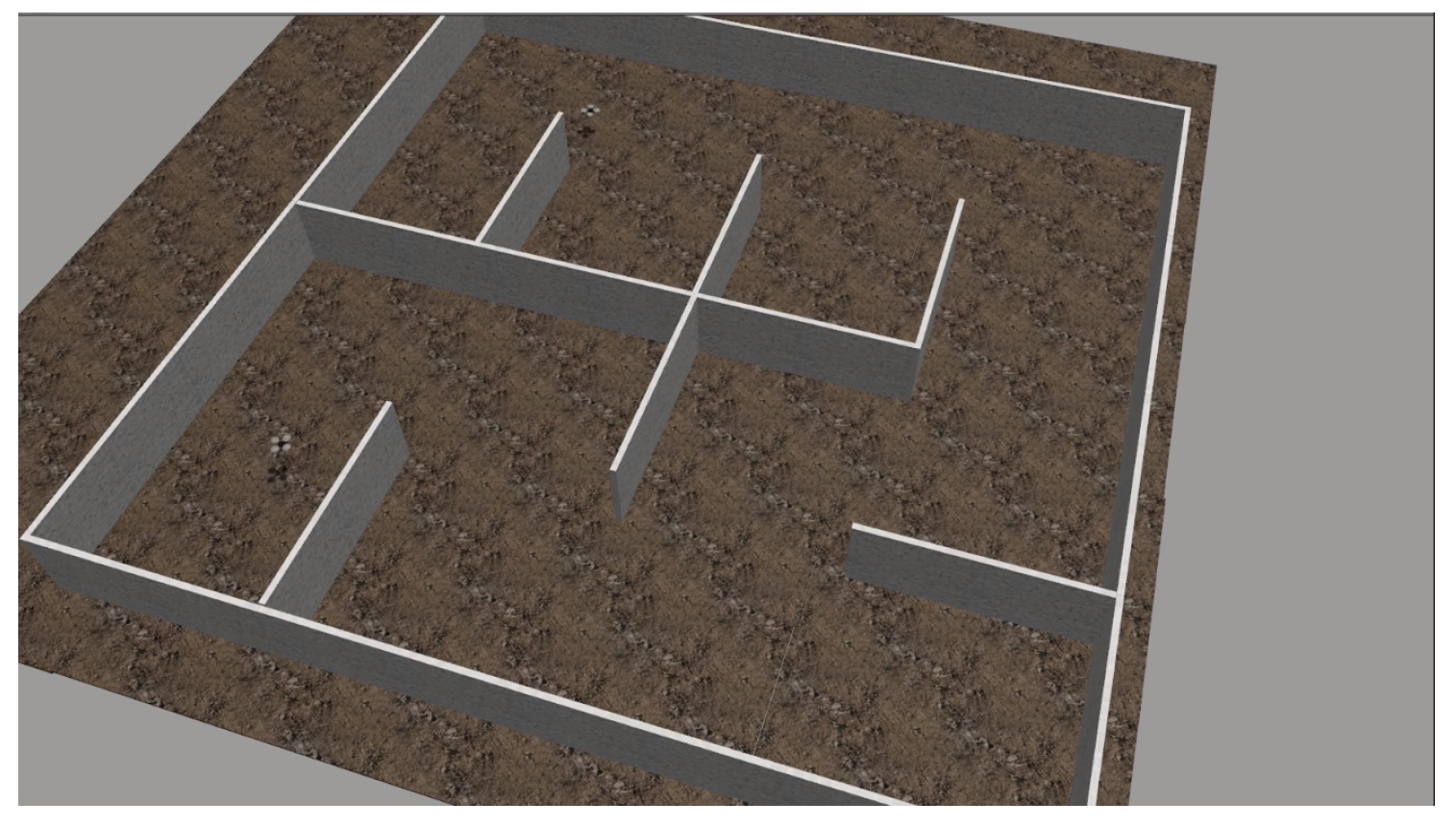

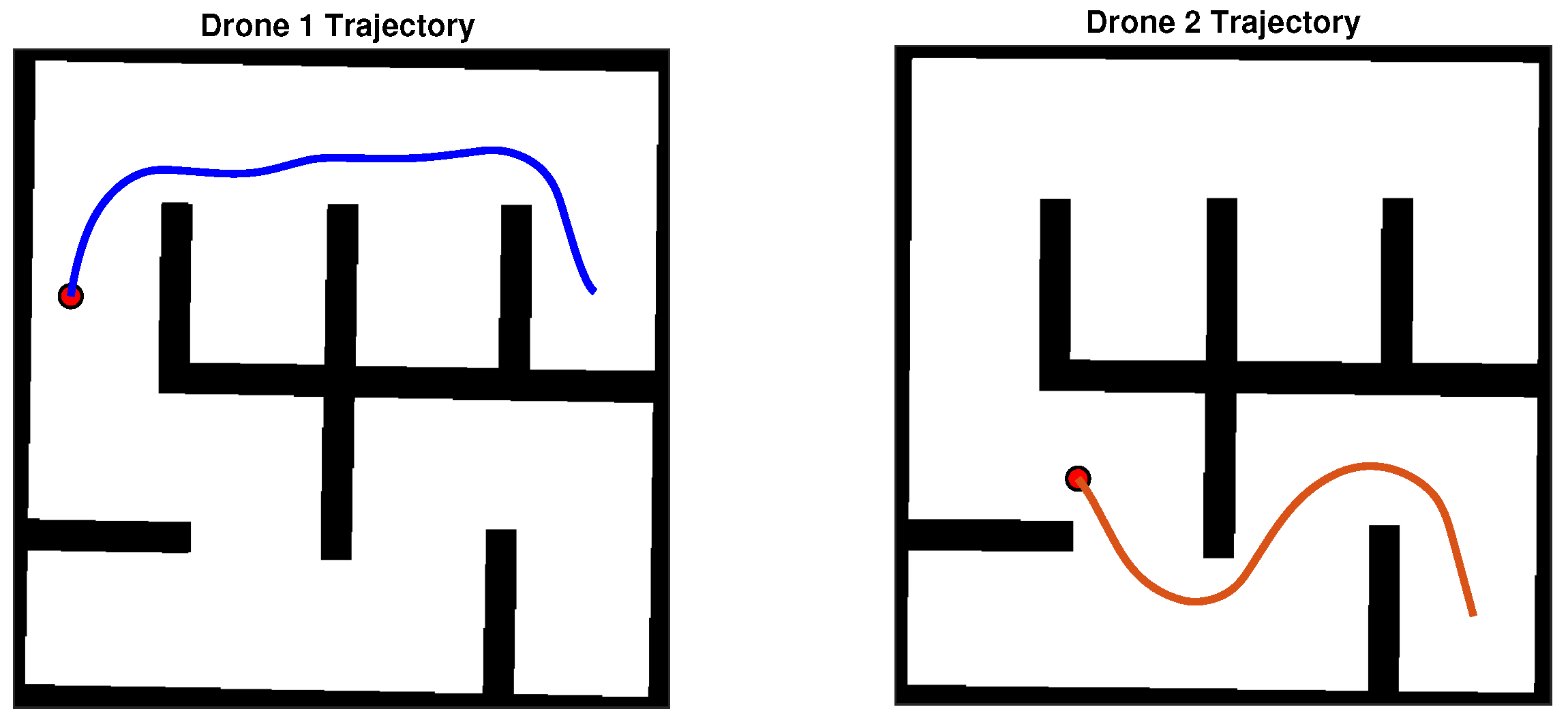

5. Results

6. Limitations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation: Rousseas, P.; Karras, G.C.; Bechlioulis, C.P.; Kyriakopoulos, K.J. Indoor Visual Exploration with Multi-Rotor Aerial Robotic Vehicles. Sensors 2022, 22, 5194. https://doi.org/10.3390/s22145194