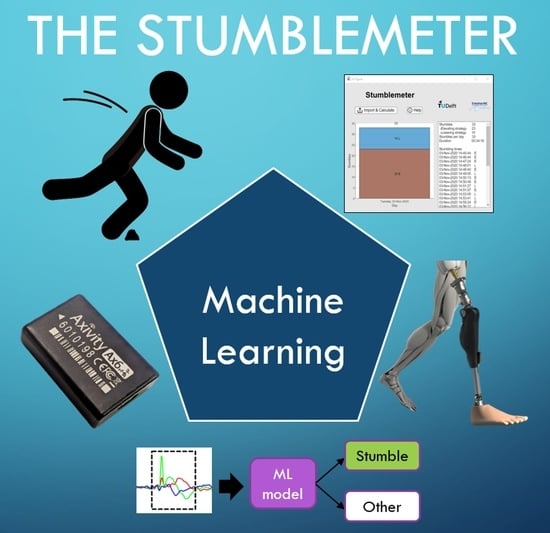

The Stumblemeter: Design and Validation of a System That Detects and Classifies Stumbles during Gait

Abstract

1. Introduction

1.1. Stumbling in Individuals with Impaired Gait

1.2. Automatic Stumble Detection for an Objective Evaluation of Fall Risk

1.3. Wearable Sensors and Machine Learning

2. Materials and Methods

2.1. Participants, Experimental Setup, and Protocol


- Elevating strategy: After impact with the obstacle, the perturbed foot lifts up and over the obstacle, landing past the obstacle. This strategy is used when the foot is perturbed in the early swing phase (5–50% of the entire swing phase).


- Lowering strategy: After impact with the obstacle, the perturbed foot lowers in front of the obstacle, while the other foot performs a recovery step and lands past the obstacle. This strategy is used when the foot is perturbed in the late swing phase (40–75% of the entire swing phase).
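
The two reported ranges overlap between 40% and 50% of swing. A perturbation in that window is therefore compatible with either recovery strategy. The sketch below expresses the ranges as a simple lookup. The gait-event timestamps (toe-off, heel strike, obstacle impact) and the function names are hypothetical, and the study's own gait-event detection is not described here.

```python
from typing import List, Tuple

# Swing-phase ranges from the two strategy descriptions, as fractions of swing.
ELEVATING_RANGE: Tuple[float, float] = (0.05, 0.50)
LOWERING_RANGE: Tuple[float, float] = (0.40, 0.75)


def swing_fraction(t_perturbation: float, t_toe_off: float, t_heel_strike: float) -> float:
    """Position of the perturbation within swing (0 = toe-off, 1 = heel strike)."""
    return (t_perturbation - t_toe_off) / (t_heel_strike - t_toe_off)


def expected_strategies(fraction: float) -> List[str]:
    """Recovery strategies whose reported swing-phase range contains `fraction`."""
    strategies = []
    if ELEVATING_RANGE[0] <= fraction <= ELEVATING_RANGE[1]:
        strategies.append("elevating")
    if LOWERING_RANGE[0] <= fraction <= LOWERING_RANGE[1]:
        strategies.append("lowering")
    return strategies  # an empty list means: outside both reported ranges


# Hypothetical stride: toe-off at 0.60 s, heel strike at 1.00 s, obstacle hit at 0.78 s.
print(expected_strategies(swing_fraction(0.78, 0.60, 1.00)))  # ['elevating', 'lowering']
```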

2.2. Dataset and Software

2.3. Data Pre-Processing

2.4. Window Segmentation and Labelling

2.5. Feature Selection and Extraction

- An objective function, called the criterion, which the method seeks to minimize over all feasible feature subsets. For our classification problem, the misclassification rate was set as the criterion.

- A sequential search algorithm, which adds or removes features from a candidate subset while evaluating the criterion. The search can proceed in two directions:

    - Sequential forward selection (SFS), in which features are sequentially added to an empty candidate set until the addition of further features no longer decreases the criterion.

    - Sequential backward selection (SBS), in which features are sequentially removed from a full candidate set until the removal of further features increases the criterion.
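
The two components of sequential feature selection can be sketched as a greedy forward loop. This is a minimal illustration, not the authors' implementation. A hold-out nearest-centroid classifier (an assumption) stands in for the study's classifiers when computing the misclassification rate. The toy data are made up.

```python
from typing import Callable, Dict, List, Sequence

Vector = Sequence[float]


def sequential_forward_selection(
    n_features: int, criterion: Callable[[List[int]], float]
) -> List[int]:
    """Start from an empty candidate set and repeatedly add the feature that
    lowers the criterion most; stop once no addition lowers it any further."""
    selected: List[int] = []
    best = float("inf")  # treat the empty set as having the worst possible score
    remaining = list(range(n_features))
    while remaining:
        # Evaluate the criterion for every one-feature extension of the current set.
        scores = {f: criterion(selected + [f]) for f in remaining}
        candidate = min(scores, key=scores.get)
        if scores[candidate] >= best:
            break  # the criterion no longer decreases: stop
        selected.append(candidate)
        remaining.remove(candidate)
        best = scores[candidate]
    return selected


def misclassification_rate(
    train_X: Sequence[Vector], train_y: Sequence[int],
    test_X: Sequence[Vector], test_y: Sequence[int], features: List[int],
) -> float:
    """Hold-out error of a nearest-centroid classifier that sees only `features`."""
    centroids: Dict[int, List[float]] = {}
    for label in sorted(set(train_y)):
        rows = [x for x, y in zip(train_X, train_y) if y == label]
        centroids[label] = [sum(r[f] for r in rows) / len(rows) for f in features]

    def predict(x: Vector) -> int:
        # Squared Euclidean distance to each centroid; ties go to the lowest label.
        return min(
            centroids,
            key=lambda c: sum((x[f] - m) ** 2 for f, m in zip(features, centroids[c])),
        )

    errors = sum(predict(x) != y for x, y in zip(test_X, test_y))
    return errors / len(test_y)


# Made-up toy data: feature 0 separates the classes, features 1 and 2 are noise.
train_X = [[0.0, 5.0, 1.0], [0.2, -5.0, 0.0], [1.0, 5.0, 0.0], [1.2, -5.0, 1.0]]
train_y = [0, 0, 1, 1]
test_X = [[0.1, -5.0, 1.0], [1.1, 5.0, 0.0]]
test_y = [0, 1]


def crit(features: List[int]) -> float:
    return misclassification_rate(train_X, train_y, test_X, test_y, features)


print(sequential_forward_selection(3, crit))  # [0]
```

SBS is the mirror image. It starts from all features and removes one at a time, stopping when a removal would increase the criterion.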

2.6. Machine Learning Algorithms

2.7. Training, Validating, and Testing

3. Results

3.1. Three-Class Classification Approach

3.2. Double Binary Classification Approach

3.3. Final Model

3.4. Stumblemeter App

4. Discussion

4.1. Recap

4.2. Internal Validity

4.3. Comparison with Previous Studies

4.4. Practical Application in Clinical Research

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| ML Model | Maximum Number of Splits | Split Criterion | Surrogate Decision Splits |
|---|---|---|---|
| Decision Tree (D1) | 59 | Maximum deviance reduction | Off |
| Decision Tree (D2) | 38 | Gini’s diversity index | Off |
| Decision Tree (D3) | 19 | Gini’s diversity index | Off |

| ML Model | Discriminant Type |
|---|---|
| Discriminant Analysis (D1) | Linear |
| Discriminant Analysis (D2) | Linear |
| Discriminant Analysis (D3) | Linear |

| ML Model |
|---|
| Logistic Regression (D2) |
| Logistic Regression (D3) |

| ML Model | Distribution Type | Kernel Type | Support |
|---|---|---|---|
| Naïve Bayes (D1) | Kernel | Gaussian | Unbounded |
| Naïve Bayes (D2) | Kernel | Triangle | Unbounded |
| Naïve Bayes (D3) | Kernel | Gaussian | Unbounded |

| ML Model | Kernel Function | Box Constraint Level | Kernel Scale |
|---|---|---|---|
| SVM (D1) | Linear | 0.0010 | 0.0025 |
| SVM (D2) | Linear | 976.7 | 7.5492 |
| SVM (D3) | Linear | 0.0001 | 0.0293 |

| ML Model | Number of Neighbors | Distance Metric | Distance Weight |
|---|---|---|---|
| KNN (D1) | 1 | Cosine | Squared inverse |
| KNN (D2) | 5 | Spearman | Squared inverse |
| KNN (D3) | 1 | Correlation | Inverse |

| ML Model | Ensemble Method | Learner Type | Max Number of Splits | Number of Learners | Learning Rate | Subspace Dimension |
|---|---|---|---|---|---|---|
| Ensemble Learner (D1) | Bag | Decision Tree | 320 | 12 | - | 53 |
| Ensemble Learner (D2) | GentleBoost | Decision Tree | 5 | 485 | 0.001864 | 38 |
| Ensemble Learner (D3) | Bag | Decision Tree | 182 | 27 | - | 35 |


| ADL | Repetitions/Duration | Instructions |
|---|---|---|
| Walking straight | 5 min | Walk on a treadmill at 1, 2, 3, 4, and 5 km/h (1 min each). |
| Walking around corners | 10× | Walk 90-degree and 180-degree corners. |
| Coming to a halt | 10× | Stand still after walking. Repeat 10 times. |
| Sitting and rising | 10× | Sit down on a chair and rise from it in different ways and at different speeds. Repeat 10 times. |
| Picking up an object from the ground | 10× | Throw a small ball on the ground and then pick it up in different ways and at different speeds. Repeat 10 times. |
| Walking upstairs and downstairs | 5× | Walk up and down the stairs in different ways and at different speeds. Repeat 5 times. |

| Dataset | Class | Number of Windows (Validation) | Number of Windows (Test) |
|---|---|---|---|
| (D1) Three-class classification | Stumble (elevating) | 132 | 12 |
| | Stumble (lowering) | 114 | 18 |
| | Other | 329 | 77 |
| (D2) Stumble detection | Stumble | 246 | 30 |
| | Other | 329 | 77 |
| (D3) Stumble type classification | Elevating | 132 | 12 |
| | Lowering | 114 | 18 |
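
The window counts show that D2 and D3 are relabelled views of the D1 windows. D2's 246 validation stumble windows equal 132 + 114, and its 30 test stumble windows equal 12 + 18. D3 keeps only the stumble windows. The sketch below rebuilds both derived datasets from D1 labels and reproduces these counts. It assumes each window carries one of the string labels `"elevating"`, `"lowering"`, or `"other"`; the label strings and function names are hypothetical.

```python
from collections import Counter
from typing import List


def to_stumble_detection(labels: List[str]) -> List[str]:
    """D1 -> D2: merge both stumble types into a single 'stumble' class."""
    return ["other" if lab == "other" else "stumble" for lab in labels]


def to_stumble_type(labels: List[str]) -> List[str]:
    """D1 -> D3: keep only the stumble windows, retaining their type."""
    return [lab for lab in labels if lab != "other"]


# D1 label lists rebuilt from the window counts in the table above.
d1_validation = ["elevating"] * 132 + ["lowering"] * 114 + ["other"] * 329
d1_test = ["elevating"] * 12 + ["lowering"] * 18 + ["other"] * 77

print(Counter(to_stumble_detection(d1_validation)))  # Counter({'other': 329, 'stumble': 246})
print(Counter(to_stumble_type(d1_validation)))       # Counter({'elevating': 132, 'lowering': 114})
print(Counter(to_stumble_detection(d1_test)))        # Counter({'other': 77, 'stumble': 30})
```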

| No. | Feature Class |
|---|---|
| 1 | Interquartile range |
| 2 | Kurtosis |
| 3 | Mean |
| 4 | Median |
| 5 | Mean absolute deviation |
| 6 | Maximum |
| 7 | Minimum |
| 8 | Peak-magnitude-to-RMS ratio |
| 9 | Spectral entropy |
| 10 | Prominence |
| 11 | Root-mean-square level |
| 12 | Root-sum-of-squares level |
| 13 | Range |
| 14 | Skewness |
| 15 | Standard deviation |
| 16 | Sum of local maxima and minima |
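
Most of the listed features are standard summaries of one window of one sensor signal. The sketch below computes 13 of them in plain Python for a single channel. Its conventions are assumptions chosen to resemble common MATLAB defaults:

- biased skewness and kurtosis, with normal data giving a kurtosis of about 3;
- mean absolute deviation about the mean;
- a sample (N-1) standard deviation.

The software used in the study may differ, particularly in how quartiles are interpolated. Spectral entropy, prominence, and the sum of local maxima and minima are omitted because their exact definitions are not given here.

```python
import math
import statistics
from typing import Dict, Sequence


def _central_moment(x: Sequence[float], order: int) -> float:
    """Biased (divide-by-N) central moment of the given order."""
    mu = statistics.fmean(x)
    return sum((v - mu) ** order for v in x) / len(x)


def window_features(x: Sequence[float]) -> Dict[str, float]:
    """Subset of the listed features for one window of one sensor channel.
    Assumes a non-constant window with at least two samples."""
    mean = statistics.fmean(x)
    q1, _, q3 = statistics.quantiles(x, n=4)  # default 'exclusive' quartile method
    m2 = _central_moment(x, 2)
    rms = math.sqrt(sum(v * v for v in x) / len(x))
    return {
        "interquartile_range": q3 - q1,
        "kurtosis": _central_moment(x, 4) / m2 ** 2,  # non-excess: normal data give about 3
        "mean": mean,
        "median": statistics.median(x),
        "mean_absolute_deviation": sum(abs(v - mean) for v in x) / len(x),  # about the mean
        "maximum": max(x),
        "minimum": min(x),
        "peak_magnitude_to_rms_ratio": max(abs(v) for v in x) / rms,
        "rms_level": rms,
        "root_sum_of_squares_level": math.sqrt(sum(v * v for v in x)),
        "range": max(x) - min(x),
        "skewness": _central_moment(x, 3) / m2 ** 1.5,  # biased estimator
        "standard_deviation": statistics.stdev(x),  # sample (N-1) estimator
    }


features = window_features([1.0, 2.0, 3.0, 4.0])
print(features["interquartile_range"], features["mean_absolute_deviation"])  # 2.5 1.0
```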

| ML Model | Sensitivity (%) | Specificity (%) | Accuracy (%) |
|---|---|---|---|
| SVM | 98.4 | 99.4 | 98.5 |
| Ensemble Learning | 98.0 | 98.5 | 93.4 |
| Discriminant Analysis | 97.2 | 97.0 | 90.0 |
| KNN | 97.2 | 95.1 | 74.1 |
| Naïve Bayes | 91.1 | 95.4 | 75.4 |
| Decision Tree | 87.8 | 93.3 | 77.8 |

| ML Model | Sensitivity (%) | Specificity (%) | Accuracy (%) |
|---|---|---|---|
| SVM | 96.7 | 100 | 96.6 |
| Ensemble Learning | 93.3 | 98.7 | 92.9 |
| Discriminant Analysis | 93.3 | 97.4 | 89.3 |
| KNN | 90 | 93.5 | 88.9 |
| Naïve Bayes | 90 | 93.5 | 77.8 |
| Decision Tree | 83.3 | 94.8 | 72.0 |

| ML Model 1 (Stumble vs. Other) | Sensitivity (%) (Validation) | Specificity (%) (Validation) |
|---|---|---|
| SVM | 98.8 | 100 |
| Discriminant Analysis | 98.0 | 96.3 |
| Ensemble Learner | 97.6 | 98.2 |
| Logistic Regression | 97.6 | 94.8 |
| KNN | 96.3 | 92.4 |
| Naïve Bayes | 88.2 | 90.4 |
| Decision Tree | 88.0 | 93.6 |

| ML Model 1 (Stumble vs. Other) | Sensitivity (%) (Testing) | Specificity (%) (Testing) |
|---|---|---|
| SVM | 100 | 100 |
| Discriminant Analysis | 96.7 | 97.4 |
| Ensemble Learner | 96.7 | 97.4 |
| Logistic Regression | 90.0 | 93.5 |
| KNN | 90.0 | 92.2 |
| Naïve Bayes | 86.7 | 89.6 |
| Decision Tree | 83.3 | 88.3 |
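
Sensitivity is TP/(TP + FN) over the stumble windows. Specificity is TN/(TN + FP) over the other windows. The D2 test set contains 30 stumble and 77 other windows, so every reported test percentage should correspond to a whole number of windows. For example, 96.7% and 97.4% match 29 of 30 stumbles and 75 of 77 other windows. The sketch below back-calculates these counts from the reported percentages and checks that they reproduce the table. The counts are inferred here, not reported in the study.

```python
def sensitivity(tp: int, fn: int) -> float:
    """Percentage of stumble windows detected as stumbles."""
    return 100.0 * tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """Percentage of other windows correctly labelled as other."""
    return 100.0 * tn / (tn + fp)


N_STUMBLE, N_OTHER = 30, 77  # D2 test windows, from the dataset table

# Test-set (sensitivity %, specificity %) for ML model 1, copied from the table above.
REPORTED = {
    "SVM": (100.0, 100.0),
    "Discriminant Analysis": (96.7, 97.4),
    "Ensemble Learner": (96.7, 97.4),
    "Logistic Regression": (90.0, 93.5),
    "KNN": (90.0, 92.2),
    "Naïve Bayes": (86.7, 89.6),
    "Decision Tree": (83.3, 88.3),
}

for model, (sens, spec) in REPORTED.items():
    tp = round(sens / 100 * N_STUMBLE)  # implied number of detected stumbles
    tn = round(spec / 100 * N_OTHER)    # implied number of correctly rejected other windows
    # The integer counts reproduce the reported percentages to one decimal place.
    assert round(sensitivity(tp, N_STUMBLE - tp), 1) == sens
    assert round(specificity(tn, N_OTHER - tn), 1) == spec
    print(f"{model}: {tp}/{N_STUMBLE} stumbles, {tn}/{N_OTHER} other")
```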

| ML Model 2 (Elevating vs. Lowering) | Accuracy (%) (Validation) |
|---|---|
| SVM | 95.5 |
| Ensemble Learner | 91.9 |
| Discriminant Analysis | 87.0 |
| KNN | 86.6 |
| Logistic Regression | 85.4 |
| Naïve Bayes | 84.2 |
| Decision Tree | 81.3 |

| ML Model 2 (Elevating vs. Lowering) | Accuracy (%) (Testing) |
|---|---|
| SVM | 96.7 |
| Ensemble Learner | 93.3 |
| Discriminant Analysis | 83.3 |
| KNN | 80.0 |
| Logistic Regression | 80.0 |
| Naïve Bayes | 76.7 |
| Decision Tree | 73.3 |
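
The D3 test set contains only 30 windows (12 elevating, 18 lowering). Each misclassified window therefore moves test accuracy by 100/30 ≈ 3.3 percentage points. The sketch below back-calculates the number of correctly classified windows implied by each reported test accuracy. These counts are inferred, not reported in the study.

```python
N_TEST = 12 + 18  # D3 test windows: elevating + lowering


def accuracy(correct: int, total: int) -> float:
    """Percentage of windows assigned the correct stumble type."""
    return 100.0 * correct / total


# ML model 2 test accuracies (%), copied from the table above.
REPORTED_ACCURACY = {
    "SVM": 96.7, "Ensemble Learner": 93.3, "Discriminant Analysis": 83.3,
    "KNN": 80.0, "Logistic Regression": 80.0, "Naïve Bayes": 76.7, "Decision Tree": 73.3,
}

print(f"one error = {100 / N_TEST:.1f} percentage points")
for model, acc in REPORTED_ACCURACY.items():
    correct = round(acc / 100 * N_TEST)  # implied number of correct windows
    assert round(accuracy(correct, N_TEST), 1) == acc
    print(f"{model}: {correct}/{N_TEST} windows correct")
```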

| ML Model | Type | Features | Kernel Function | Box Constraint Level | Kernel Scale |
|---|---|---|---|---|---|
| ML Model 1 (stumble vs. other) | SVM | Median, Maximum, Minimum, Minimum, Spectral entropy, Peak-magnitude-to-RMS ratio, Peak-magnitude-to-RMS ratio | Linear | 976.7 | 7.5492 |
| ML Model 2 (elevating vs. lowering) | SVM | Interquartile range, Kurtosis, Mean, Mean, Maximum, Minimum, Skewness | Linear | 0.0001 | 0.0293 |
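
The final model chains two binary classifiers. Model 1 decides stumble vs. other, and only windows flagged as stumbles are passed to model 2 for elevating vs. lowering. Both are linear SVMs, so each prediction reduces to the sign of a linear decision function w · x + b on that model's own feature vector. The box constraint and kernel scale affect how w and b are learned, not this form. The sketch below assumes made-up two-feature weights and the sign convention "positive score means stumble / elevating"; neither comes from the study.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class LinearDecision:
    """f(x) = w . x + b, the decision function of a trained linear SVM."""
    w: Sequence[float]
    b: float

    def score(self, x: Sequence[float]) -> float:
        return sum(wi * xi for wi, xi in zip(self.w, x)) + self.b


def classify_window(
    x_model1: Sequence[float],
    x_model2: Sequence[float],
    model1: LinearDecision,
    model2: LinearDecision,
) -> str:
    """Stage 1: stumble vs. other. Stage 2 runs only on windows flagged as stumbles."""
    if model1.score(x_model1) <= 0:  # assumed sign convention: positive = stumble
        return "other"
    # Assumed sign convention: positive = elevating.
    return "elevating" if model2.score(x_model2) > 0 else "lowering"


# Hypothetical weights for illustration only (the real models use seven features each).
model1 = LinearDecision(w=(1.0, -1.0), b=0.0)
model2 = LinearDecision(w=(0.5, 0.5), b=-1.0)
print(classify_window((2.0, 1.0), (3.0, 1.0), model1, model2))  # elevating
print(classify_window((0.0, 1.0), (3.0, 1.0), model1, model2))  # other
print(classify_window((2.0, 1.0), (0.0, 0.0), model1, model2))  # lowering
```

Running model 2 only on detected stumbles means it never has to handle non-stumble windows. That matches the D3 dataset, which contains no "other" class.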


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hartog, D.d.; Harlaar, J.; Smit, G. The Stumblemeter: Design and Validation of a System That Detects and Classifies Stumbles during Gait. Sensors 2021, 21, 6636. https://doi.org/10.3390/s21196636
