1. Introduction

Aircraft guidance [1,2,3,4], especially high-dimensional aircraft guidance, has gradually emerged as a significant research focus, owing to its application prospects in complex flight tasks under realistic conditions. In military exercises, pilots performing complex flight tasks usually need to fly to a series of 4D waypoints [5], which are arranged in advance by air traffic controllers (ATCOs). For example, in team aircraft landing tasks, aircraft arrive sequentially at the landing 4D waypoints, and a specific arrival heading angle is required. Aircraft guidance becomes complicated when the arrival heading angle is taken into account, especially when the arrival time is also considered. Thus, it is essential to seek an approach to the multi-dimensional goal aircraft guidance problem, so as to guide an aircraft to 4D waypoints at a certain heading.

Researchers have made significant contributions to aircraft guidance. New local quadratic-biquadratic quality functions [6] were used to obtain more general linear-cubic control laws for aircraft guidance. For altitude and position control, incremental nonlinear dynamic inversion control was proposed in a previous study [7]; it is able to track the desired acceleration of the vehicle across the flight envelope. In another study [8], a visual/inertial integrated carrier landing guidance algorithm was proposed for aircraft carrier landing, and the simulation results showed satisfactory accuracy and high efficiency in carrier landing guidance.

The aforementioned research is of positive significance for aircraft guidance. In actual flight, pilots conduct flight tasks by drawing on rich experience and skills. In ATC simulators, however, the aircraft is controlled by an autopilot rather than a real pilot, and the autopilot is usually not well trained to guide the aircraft on 4D flight tasks. The main issue is that the autopilot is unable to generate a 4D trajectory [9,10] that meets the requirement of the multi-dimensional goal task, wherein 4D waypoints of certain latitude, longitude, altitude, heading, and arrival time must be reached.

Consequently, ATCOs call for an intelligent approach to solve the aforementioned problem. In this paper, a possible reinforcement learning (RL) [11] approach with a shaped reward function is proposed to achieve the multi-dimensional goal aircraft guidance task, by formulating the problem as a Markov decision process (MDP).

RL solves sequential decision-making problems by iteratively estimating value functions and optimal control strategies, which represent the long-term optimal performance of the system. RL has attracted much attention owing to its performance in a wide range of applications, and its capabilities have stimulated research on aircraft guidance tasks.

As a result of RL development, researchers have proposed deep reinforcement learning (DRL) approaches to aircraft guidance problems. In a previous study [12], the author explored the possibilities of applying RL techniques to 'time in trail' tasks in order to solve the aircraft sequencing and separation problem. A similar approach was proposed in another study [13], which used DRL to train an agent that guides the aircraft with heading commands at constant speed. A trajectory generation method based on a DQN algorithm was proposed to perform a perched landing on the ground [14]. In that DQN algorithm, noise is considered by the model during training, which is more in line with the actual scenario.

In previous studies, RL has been shown to have promising application prospects in aircraft guidance. However, some results are inconsistent and require further verification, and a number of limitations remain. First, RL learns by constantly trying and exploring in the environment, so the complexity of the state space directly affects the difficulty of the task. In prior research, aircraft heading was not taken into account in guidance, which made convergence easier because the state space was low-dimensional. When aircraft heading and velocity are considered, convergence is usually difficult because the guidance task is performed in a high-dimensional state space. Second, the reward function [15,16,17] is vital and directly affects the convergence efficiency; however, it is usually hard to define and requires reward shaping methods.

The high-dimensional state space affects RL training efficiency, leading to a considerable amount of computation and sparse rewards. To reduce the state space dimensions and the task difficulty, multi-level hierarchical RL methods and nested policies [18] were proposed. Researchers [19] proposed a nested RL model capable of determining both aircraft route and velocity, using an air traffic controller simulator created by NASA. The simulator was employed as a testing environment to evaluate RL techniques that provide tactical decision support to an air traffic controller by selecting the proper route and changing the velocity of each aircraft. Ultimately, RL methods were evaluated in this testing environment to solve the autonomous air traffic control problem for aircraft sequencing and separation. The results revealed that, over the whole training process, the total score tended to oscillate while rising, which limited the application of the method in practice. Another disadvantage of this approach is that it restricts the aircraft to fixed positions and limited routes without considering the effect of aircraft aerodynamics on the flight path.

To speed up convergence, it is necessary to design an effective reward function through reward shaping algorithms. Two types of reward functions [20] were proposed to assist ATCOs in ensuring the safety and fairness of airlines, by solving the problems of holding both on the ground and in the air. To solve the problem of aircraft guidance, a new reward function was proposed in [21] to improve the performance of the generated trajectories and the training efficiency.

Recent RL development for nonlinear control systems has implications for aircraft guidance tasks. A Virtual State-feedback Reference Feedback Tuning (VSFRT) method [22] was applied to the control of unknown observable systems. In [23], a hierarchical soft actor–critic algorithm was proposed for task allocation, which significantly improved the efficiency of the intelligent system. In another study [24], a strategy based on heuristic dynamic programming (HDP) was used to solve the event-triggered control problem in a nonlinear system and improve the system stability, where the one-step-return value was approximated by an actor–critic neural network structure.

In the present paper, a multi-layer RL approach with a reward shaping algorithm is proposed for the multi-dimensional goal aircraft guidance flight task, wherein an aircraft is guided to waypoints at certain latitude, longitude, altitude, heading angle, and arrival time. In the proposed approach, a trained agent is adopted to control the aircraft by selecting the heading, changing the vertical velocity, and altering the horizontal velocity, based on an improved multi-layer RL algorithm with a shaped reward function. The present solution can solve the aircraft guidance problem intelligently and efficiently, and thus is applicable in a continuous environment where an aircraft moves in a continuous expanse of space.

The key contributions of the proposed deep RL approach are multifold:

a. A multi-layer RL model and an intelligent aircraft guidance approach are presented to perform the multi-dimensional goal aircraft guidance flight task, by reducing the state space dimensions and simplifying the neural network structure.

b. A shaped reward function is proposed to enhance the performance of the aircraft trajectory, while taking the Dubins path method into account.

c. The proposed work provides possible application prospects for the research on aircraft guidance while considering arrival time.

The remainder of the present study is organized as follows: in Section 2, the background concepts on Dubins path and RL are introduced, along with the variants used in the present work; in Section 3, the RL formulation of the aircraft guidance task is presented; in Section 4, the environment settings and the structure of the model are introduced in detail; in Section 5, numerical simulation results and discussion are given; and, in Section 6, the conclusions of the present study are provided.

3. RL Formulation

To guide an aircraft to 4D waypoints at a certain heading, it is necessary first to design the series of 4D waypoints and then to guide the aircraft to each of them at the required heading.

3.1. 4D Waypoints Design

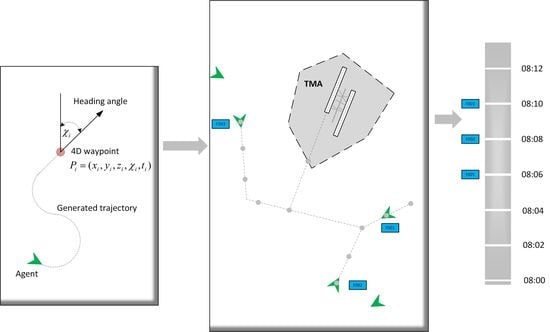

4D waypoints are waypoints with the attributes of coordinates (latitude, longitude, altitude) and arrival time, as shown in Figure 2, wherein an aircraft is flying through a series of 4D waypoints. A 4D waypoint can be defined by four values: the longitude, latitude, altitude, and arrival time of the aircraft at that 4D waypoint.

Assume that a number of 4D waypoints lie on the flight route from the departure airport to the arrival airport, as shown in Figure 3, and that they are sequenced and numbered from the arrival airport to the departure airport. The 4D waypoints divide the route L into n segments, each of which is the segment between two consecutive 4D waypoints. The time from each position to the landing site can then be calculated for every waypoint.

The time from one 4D waypoint to the next is obtained from the distance between the two waypoints and the velocity of the aircraft.

The flight segment can only be a straight line or an arc. The type of a segment is determined by its two end 4D waypoints and the connection attribute between them, namely the radius of the flight segment between the two waypoints. The segment is further characterized by the distance between its two waypoints. When the radius is zero, the flight segment is a straight line, whereas, when the radius is nonzero, the flight segment is an arc. Each 4D waypoint of the route has unique inherent attributes, which respectively represent the longitude, latitude, altitude, and arrival time. The arrival time is the time at which the aircraft arrives at the waypoint from its current position.

When the flight segment is a straight line, the radius is 0. The length of the flight segment is then the straight-line distance between its two end waypoints, computed from (X, Y, Z), the geocentric coordinates of (x, y, z).
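The straight-segment length can be illustrated with a short Python sketch. It assumes a spherical Earth with a mean radius of 6371 km for the conversion to geocentric coordinates and a Euclidean distance between the two end waypoints; both the Earth model and the function names `to_geocentric` and `straight_segment_length` are assumptions made for illustration only.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # assumption: spherical Earth, mean radius


def to_geocentric(lon_deg, lat_deg, alt_m):
    """Convert (longitude, latitude, altitude) to geocentric (X, Y, Z) in metres."""
    lon, lat = math.radians(lon_deg), math.radians(lat_deg)
    r = EARTH_RADIUS_M + alt_m
    return (r * math.cos(lat) * math.cos(lon),
            r * math.cos(lat) * math.sin(lon),
            r * math.sin(lat))


def straight_segment_length(wp_a, wp_b):
    """Euclidean distance between two waypoints given as (lon, lat, alt)."""
    return math.dist(to_geocentric(*wp_a), to_geocentric(*wp_b))


# Two waypoints directly above each other are separated by their altitude gap.
length = straight_segment_length((10.0, 20.0, 0.0), (10.0, 20.0, 1000.0))
```

A higher-fidelity conversion would replace the spherical model with an ellipsoidal one without changing the structure of the calculation.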

When the flight segment is an arc, it is determined by four known points. The first and last parts of the segment are straight line segments, and the middle part is a circular arc with point O as the center and the segment radius as its radius; the arc subtends a central angle at O. The length of the flight segment follows from the lengths of the two straight parts and the arc.
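As a rough illustration of the arc case, the sketch below assumes that the segment length is the sum of the two straight parts and the arc length, with the arc length taken as the radius multiplied by the central angle. This decomposition and the function name `arc_segment_length` are assumptions, not the original expression.

```python
import math


def arc_segment_length(straight_in_m, radius_m, central_angle_deg, straight_out_m):
    """Length of a segment made of a straight part, a circular arc, and a straight part."""
    arc_m = radius_m * math.radians(central_angle_deg)  # arc length = r * theta
    return straight_in_m + arc_m + straight_out_m


# A half circle of radius 1000 m with no straight parts.
half_circle = arc_segment_length(0.0, 1000.0, 180.0, 0.0)
```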

From (8)–(10), an observation can be made that, when the velocity distribution of a route is determined, the arrival time of an aircraft moving from one 4D waypoint to another can be determined from the current time at the starting waypoint and the intervals between the 4D waypoints along the way. The interval between 4D waypoints is determined according to the actual airline requirements.

Figure 4 shows a series of 4D waypoints. For example, if the current time at the starting waypoint is 08:00:00 and the interval between waypoints is 2 min, the arrival time at a waypoint six intervals away, calculated by (11), is 08:12:00.
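The arithmetic of this example can be checked with a few lines of Python. The sketch assumes that six equal intervals separate the two waypoints, which is the count implied by the 12 min difference; the function name `arrival_time` and the calendar date are arbitrary choices for illustration.

```python
from datetime import datetime, timedelta


def arrival_time(current_time, interval, n_intervals):
    """Arrival time after n equal intervals, starting from current_time."""
    return current_time + n_intervals * interval


start = datetime(2021, 1, 1, 8, 0, 0)  # the date is arbitrary; only the time matters
eta = arrival_time(start, timedelta(minutes=2), 6)
print(eta.strftime("%H:%M:%S"))  # prints 08:12:00
```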

3.2. Fly to Waypoints

Flying to 4D waypoints is an aircraft guidance problem. Figure 5 shows the kinematic model of the aircraft, wherein an agent guides an aircraft from its current position to the target position. Each position is described by the longitude x, latitude y, altitude z, heading angle, and arrival time t, and the model also involves the pitch angle of the aircraft. The subscripts a and g denote the aircraft and goal, respectively. During movement, velocity and heading directly affect the position. The kinematic equations therefore relate the deltas of the 3D coordinates of the aircraft to the velocity v, the pitch angle, the heading angle (with respect to geographical north), the Earth radius, and the current altitude z of the aircraft (with respect to sea level).
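A minimal Python sketch of one kinematic update step is given below. It assumes a commonly used point-mass model on a spherical Earth, in which the horizontal speed is the velocity times the cosine of the pitch angle and the heading is measured clockwise from north. The exact equations used here, the Earth radius value, and the function name `kinematic_step` are assumptions and may differ from the formulation of the present study.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # assumption: spherical Earth, mean radius


def kinematic_step(lon_deg, lat_deg, alt_m, v, pitch_deg, heading_deg, dt=1.0):
    """Advance longitude, latitude, and altitude by one time step of dt seconds."""
    pitch = math.radians(pitch_deg)
    heading = math.radians(heading_deg)
    lat = math.radians(lat_deg)
    r = EARTH_RADIUS_M + alt_m          # distance from the Earth's center
    v_h = v * math.cos(pitch)           # horizontal speed
    dlat = v_h * math.cos(heading) / r  # northward angular rate (rad/s)
    dlon = v_h * math.sin(heading) / (r * math.cos(lat))  # eastward angular rate
    dalt = v * math.sin(pitch)          # climb rate (m/s)
    return (lon_deg + math.degrees(dlon) * dt,
            lat_deg + math.degrees(dlat) * dt,
            alt_m + dalt * dt)


# Level flight due north: only the latitude changes.
lon, lat, alt = kinematic_step(116.0, 40.0, 3000.0, 200.0, 0.0, 0.0)
```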

In the actual environment, the above variables can be obtained from multiple sensors; however, in ATC simulators, the aircraft information can be obtained directly without error. (13) defines the change rates of velocity and heading angle in terms of the deltas of horizontal velocity and vertical velocity and the heading angle turn rate. Each of these three quantities is bounded by a minimum and a maximum value, namely the limits on the acceleration of horizontal velocity, of vertical velocity, and of heading angle, respectively.
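The bounding of the three change rates can be sketched as a simple clamp. The numerical limits in `LIMITS` are illustrative placeholders only, and the names `clamp`, `LIMITS`, and `limit_rates` are hypothetical.

```python
def clamp(value, lower, upper):
    """Restrict value to the closed interval [lower, upper]."""
    return max(lower, min(upper, value))


# Hypothetical (minimum, maximum) limits; the values are placeholders.
LIMITS = {
    "horizontal_accel": (-2.0, 2.0),
    "vertical_accel": (-1.0, 1.0),
    "turn_rate": (-3.0, 3.0),
}


def limit_rates(d_v_h, d_v_z, d_heading, limits=LIMITS):
    """Clamp the horizontal, vertical, and heading change rates to their limits."""
    return (clamp(d_v_h, *limits["horizontal_accel"]),
            clamp(d_v_z, *limits["vertical_accel"]),
            clamp(d_heading, *limits["turn_rate"]))


limited = limit_rates(5.0, -0.5, -10.0)
```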

For aircraft guidance, the generated trajectory is required to be smooth, and other factors should be considered, which will be analyzed in the reward shaping subsection.

In the present study, the decision-making problem can be formulated as a finite-horizon MDP, which can be defined as the tuple $(S, A, P, \gamma, R)$. $S$ and $A$ denote the state space and action space, respectively, and both are defined as high-dimensional continuous spaces. For example, the aircraft state and the destination state together are composed of 10 dimensions. Because the arrival time of the goal state is generally zero, the state space dimension can be reduced to 9. Additionally, because the actions performed by the agent are expressed in terms of heading, velocity, and altitude commands, the action space also becomes a multi-dimensional continuous space. $P$ denotes the state transition probability function, $\gamma$ is the discount factor, and $R$ denotes the set of rewards that the agent obtains from the environment.

Figure 6 shows the flow chart of the training process. The sequence of steps from the initialization state to the termination state is referred to as an episode. In the initialization process, information such as the position and movement model of the aircraft and the goal state is initialized, in addition to the reward shaping value, which will be explained in detail in the next section. After the initialization of the environment, in each step that does not reach the termination state, the agent selects an action $a_t$ from the current state $s_t$; the environment then steps into the next state $s_{t+1}$ and returns the reward $r_t$. The tuple $(s_t, a_t, r_t, s_{t+1})$ is stored in the replay buffer, and this continues until the environment reaches the termination state; the stored data are used to update the policy while training the agent.
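The interaction loop described above can be sketched in Python as follows. The toy environment `LineEnv`, the `ReplayBuffer` class, and the function `run_episode` are hypothetical stand-ins written for illustration; they are not the simulator or the agent of the present study. The step limit of 300 follows the termination condition given later in this section.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity store of (s, a, r, s_next, done) transitions."""

    def __init__(self, capacity=100_000):
        self.buffer = deque(maxlen=capacity)

    def store(self, s, a, r, s_next, done):
        self.buffer.append((s, a, r, s_next, done))

    def sample(self, batch_size):
        """Draw a random mini-batch without replacement."""
        return random.sample(list(self.buffer), min(batch_size, len(self.buffer)))


class LineEnv:
    """Toy stand-in environment: walk along a line until position 5 is reached."""

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):  # action 0/1/2 moves by -1/0/+1
        self.pos += action - 1
        done = self.pos >= 5
        return self.pos, (10.0 if done else -1.0), done


def run_episode(env, select_action, buffer, max_steps=300):
    """Run one episode, storing every transition; return the number of steps."""
    state = env.reset()
    steps = 0
    for _ in range(max_steps):
        action = select_action(state)
        next_state, reward, done = env.step(action)
        buffer.store(state, action, reward, next_state, done)
        state = next_state
        steps += 1
        if done:
            break
    return steps


buffer = ReplayBuffer()
steps = run_episode(LineEnv(), lambda s: 2, buffer)  # always move forward
```

After the episode, a learning step would draw a mini-batch with `buffer.sample(...)` and use it to update the policy.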

3.3. Training Optimization

3.3.1. Multi-Layer RL Algorithm

Because the flight task is a multi-dimensional goal task, a multi-layer RL algorithm is adopted. The multi-layer RL algorithm divides the flight task into several sub tasks, which decreases the dimensions of both the state space and the action space. The sub control layers are divided as follows:

Position control layer: control the heading angle of aircraft;

Altitude control layer: control the vertical velocity of aircraft;

Velocity control layer: control the horizontal velocity of aircraft.

The three sub control layers each have their own structure; they can be seen as three single neural networks that are integrated into a main neural network. Sub layers are updated by updating the main neural network, so they run sequentially.

Figure 7 shows the framework of the algorithm, from which it can be seen that the main neural network consists of an “Actor Model” and a “Critic Model”. Both models have three sub layers, and they update the parameters in the same training step. The algorithm is shown in Algorithm 1. In every training step of an episode, the agent selects three actions through the “Actor Model” to control the position, altitude, and velocity of the aircraft. Then, the environment receives the selected actions and steps into the next state, during which the rewards of the three sub control tasks are calculated by (22)–(27). Finally, the agent learns from the data in the replay buffer.

Algorithm 1 Multi-layer RL algorithm.

- 1: // Each of the three sub layers has its own policy parameters
- 2: // Each of the three sub layers has its own critic parameters
- 3: // Each of the three sub layers has its own step reward
- 4: Initialize the environment
- 5: Initialize the policy and critic parameters of the three sub layers
- 6: if in training mode then
- 7: for each episode do
- 8: Randomly initialize the environment parameters
- 9: for each step of the episode do
- 10: obtain the position control action according to the position control policy
- 11: obtain the altitude control action according to the altitude control policy
- 12: obtain the velocity control action according to the velocity control policy
- 13: step the environment with the three actions and get the transition tuple
- 14: store the tuple data in the replay buffer
- 15: if done then
- 16: break
- 17: end if
- 18: end for
- 19: update the critic parameters of the three sub layers by minimizing the loss in (7)
- 20: update the policy parameters of the three sub layers by maximizing the objective in (6)
- 21: end for
- 22: else
- 23: for each testing episode do
- 24: for each step of the episode do
- 25: run the environment
- 26: end for
- 27: end for
- 28: end if
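The action-selection part of Algorithm 1 can be illustrated with the sketch below, in which three independent sub layers each choose one of three discrete actions. For brevity, each sub layer is a randomly initialized linear softmax policy rather than a neural network, and no learning update is shown; the class names `SubLayer` and `MultiLayerActor` are hypothetical. The state dimensions (4, 3, and 3) follow the state spaces described in the State Space subsection.

```python
import numpy as np


class SubLayer:
    """One control layer: a linear softmax policy over three discrete actions."""

    def __init__(self, state_dim, n_actions=3, seed=0):
        self.rng = np.random.default_rng(seed)
        self.weights = self.rng.normal(scale=0.1, size=(state_dim, n_actions))

    def act(self, state):
        logits = np.asarray(state, dtype=float) @ self.weights
        probs = np.exp(logits - logits.max())  # numerically stable softmax
        probs /= probs.sum()
        return int(self.rng.choice(len(probs), p=probs))


class MultiLayerActor:
    """Holds the position, altitude, and velocity sub layers."""

    def __init__(self):
        self.position = SubLayer(state_dim=4, seed=1)
        self.altitude = SubLayer(state_dim=3, seed=2)
        self.velocity = SubLayer(state_dim=3, seed=3)

    def act(self, s_position, s_altitude, s_velocity):
        """Return one action per sub layer, each in {0, 1, 2}."""
        return (self.position.act(s_position),
                self.altitude.act(s_altitude),
                self.velocity.act(s_velocity))


actor = MultiLayerActor()
actions = actor.act([0.1, -0.2, 90.0, 180.0], [0.5, 3.0, 3.5], [0.2, 0.6, 0.4])
```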

3.3.2. State Space

In the present experiment, all possible states have an impact on the final results in the RL environment. Therefore, it is important to consider all parameters that may have an impact on the experimental results when setting the state space. The present experimental goal was to reach a target position (latitude, longitude, altitude, and heading) at the correct time. For the above purpose, a multi-layer RL model was introduced with three layers: a position control layer to select the heading, a velocity control layer to change the velocity, and an altitude control layer to alter the aircraft altitude. The state space was designed separately for each of the layers.

For the position control layer, which aimed to lead the aircraft towards the target position (latitude, longitude) with a goal heading, the state space consisted of four components: Δx, the delta longitude between the target and the aircraft; Δy, the delta latitude between the target and the aircraft; the aircraft heading; and the goal heading. The domains of these components are specified in degrees.

For the velocity control layer, which aimed to reach the target position (latitude, longitude, and heading) at a certain time, the state space consisted of three components: the horizontal velocity of the aircraft, the arrival time, and the distance from the aircraft to the goal. The corresponding domain will be introduced in the “Numerical Experiment” section.

For the altitude control layer, which aimed to reach the target altitude, the state space consisted of three components: the delta between the current altitude and the goal altitude, the current altitude z, and the goal altitude. The domain of the altitude z is from 0 m to 10,000 m.
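The three per-layer state vectors can be assembled as in the following sketch. The dictionary keys, the sign convention of the deltas (goal minus aircraft), the ordering of the components, and the function names are assumptions made for illustration.

```python
def position_state(aircraft, goal):
    """[delta longitude, delta latitude, aircraft heading, goal heading]."""
    return [goal["lon"] - aircraft["lon"],
            goal["lat"] - aircraft["lat"],
            aircraft["heading"],
            goal["heading"]]


def velocity_state(aircraft, arrival_time_s, distance_m):
    """[horizontal velocity, arrival time, distance from aircraft to goal]."""
    return [aircraft["v_h"], arrival_time_s, distance_m]


def altitude_state(aircraft, goal):
    """[delta altitude, current altitude, goal altitude]."""
    return [goal["alt"] - aircraft["alt"], aircraft["alt"], goal["alt"]]


# Hypothetical aircraft and goal records.
aircraft = {"lon": 116.0, "lat": 40.0, "alt": 3000.0, "heading": 90.0, "v_h": 200.0}
goal = {"lon": 117.5, "lat": 39.5, "alt": 1000.0, "heading": 180.0}

s_position = position_state(aircraft, goal)
s_velocity = velocity_state(aircraft, 600.0, 150_000.0)
s_altitude = altitude_state(aircraft, goal)
```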

3.3.3. Action Space

The multi-layer RL algorithm includes three layers, whose output actions control the heading angle, vertical velocity, and horizontal velocity, respectively.

The action space of the position control layer can be defined as the discrete set {0, 1, 2}, where 1 means the aircraft remains at the current heading; 0 and 2 represent the left turn and right turn of the aircraft, respectively.

The action space of the vertical velocity control layer can be defined as the discrete set {0, 1, 2}, where 1 means the aircraft remains at the current altitude and the vertical velocity is zero; 0 and 2 represent descending and climbing, respectively.

The action space of the horizontal velocity control layer can be defined as the discrete set {0, 1, 2}, where 1 means the aircraft remains at the current horizontal velocity; 0 and 2 represent deceleration and acceleration, respectively.
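One way to turn the three discrete actions into control changes is sketched below. The mapping of action indices 0, 1, 2 to the directions -1, 0, +1 follows the description above, whereas the step sizes (`turn_step_deg`, `climb_rate`, `accel_step`), the convention that a right turn increases the heading, and the function names are assumptions.

```python
def decode(action):
    """Map an action index in {0, 1, 2} to a direction in {-1, 0, +1}."""
    if action not in (0, 1, 2):
        raise ValueError(f"invalid action: {action}")
    return action - 1


def apply_actions(heading_deg, v_h, actions,
                  turn_step_deg=3.0, climb_rate=5.0, accel_step=1.0):
    """Return (new heading, vertical velocity, new horizontal velocity)."""
    a_heading, a_vertical, a_horizontal = actions
    new_heading = (heading_deg + decode(a_heading) * turn_step_deg) % 360.0
    v_z = decode(a_vertical) * climb_rate            # zero when holding altitude
    new_v_h = v_h + decode(a_horizontal) * accel_step
    return new_heading, v_z, new_v_h


# Right turn, descend, hold horizontal velocity.
result = apply_actions(359.0, 200.0, (2, 0, 1))
```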

3.3.4. Termination State

The environment resets when entering a termination state. The following termination states were designed:

Running out of time. Each episode is limited to 300 steps; if the agent runs for more than 300 steps, time runs out and the environment resets.

Reaching the goal. If the agent-goal distance is less than 2 km and the delta of the heading angle is lower than 28°, the aircraft is assumed to have reached the goal.
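The two termination conditions can be expressed as a small check. The thresholds (300 steps, 2 km, 28°) are taken from the text above; the function names, the returned labels, and the wrap-around handling of the heading difference are assumptions.

```python
def heading_error_deg(heading_a, heading_b):
    """Smallest absolute difference between two headings, in degrees."""
    return abs((heading_a - heading_b + 180.0) % 360.0 - 180.0)


def check_termination(steps_taken, distance_km, heading_deg, goal_heading_deg,
                      max_steps=300, goal_distance_km=2.0, heading_tol_deg=28.0):
    """Return "goal", "timeout", or None if the episode should continue."""
    close_enough = distance_km < goal_distance_km
    aligned = heading_error_deg(heading_deg, goal_heading_deg) < heading_tol_deg
    if close_enough and aligned:
        return "goal"
    if steps_taken > max_steps:
        return "timeout"
    return None


status = check_termination(10, 1.5, 350.0, 10.0)
```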

3.3.5. Reward Function Design

To learn a policy for an MDP $M = (S, A, P, \gamma, R)$, the reinforcement learning algorithm can instead be run on a transformed MDP $M' = (S, A, P, \gamma, R')$, where $R' = R + F$ is the transformed reward function and $F$ is the shaping reward function.

A potential function [32,33] can be applied to reward shaping; it modifies the reward function so that the agent learns more quickly to move straight towards the goal. For each state s, the difference of potentials is added to the reward of a transition. Figure 8 shows an agent learning to reach the goal, with a +3 reward for going up to a state of higher potential value, a −3 reward for going down to a state of lower potential value, and an additional −1 reward for losing time at each step.

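A shaping reward function $F$ is potential-based if there exists a real-valued function $\Phi$ on the state space such that $F(s, a, s') = \gamma \Phi(s') - \Phi(s)$ for all states $s$, $s'$ and actions $a$.

Owing to $F$ being a potential-based shaping function, every optimal policy in the transformed MDP $M'$ will also be an optimal policy in the original MDP $M$.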
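The effect of a potential-based shaping function can be seen in a small Python sketch. It uses the standard form in which the shaping reward is the discounted potential of the next state minus the potential of the current state, and a hypothetical potential equal to the negative distance to a goal on a one-dimensional line. With a discount factor of 1, the shaping rewards along any path sum to the potential difference between its end points, so detours earn no extra shaping reward.

```python
def shaping_reward(phi, s, s_next, gamma):
    """Potential-based shaping: gamma * phi(s_next) - phi(s)."""
    return gamma * phi(s_next) - phi(s)


GOAL = 3  # hypothetical goal position on a 1D line


def phi(s):
    """Hypothetical potential: higher (less negative) when closer to the goal."""
    return -abs(GOAL - s)


# A path with one step backwards still collects only phi(end) - phi(start).
path = [0, 1, 2, 1, 2, 3]
total = sum(shaping_reward(phi, s, s_next, 1.0)
            for s, s_next in zip(path, path[1:]))
```

The same function can be added to the environment reward at each step to obtain the transformed reward.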

At every step, the agent takes an action from the current state and transits to the next state. The reward function for this transition includes a terminal state reward, which is defined separately.

The aircraft guidance task involves five demands: latitude, longitude, altitude, arrival time, and heading angle. Thus, the reward is composed of the following terms: the horizontal distance reward function, which reflects the distance to the target; the direction reward function, which reflects the heading angle to the target; the altitude reward function, which reflects the distance in altitude to the target; the arrival time reward function, which reflects the arrival time to the target; the length of the Dubins path, which can be calculated by (1) and (2); and weighting coefficients.

However, considering too many factors in the reward function leads to low convergence efficiency and a narrow range of application. There are four sub functions in (25); in the present study, a shaped reward function is proposed, wherein the form of the reward function is simplified using the multi-layer reward function.

In (25), two of the sub functions are merged into one function. Thus, the reward can be redefined by three parts, with an associated coefficient.

Table 1 shows all the coefficients of reward functions.