Efficient Sky Dehazing by Atmospheric Light Fusion

Abstract

1. Introduction

- efficient estimation of the scalar atmospheric light, used as the central value for the fusion,

- optimization of two regularization terms of the transmission,

- radiance computed by one of two methods:

  - total fusion of all possible values of the atmospheric light, weighted by Gaussian coefficients,

  - limited, unweighted fusion of the values neighboring the atmospheric light estimated in the first step.
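Dehazing methods of this kind invert Koschmieder's atmospheric scattering model, I = J·t + A·(1 − t), so for a given atmospheric light A and transmission t the radiance is J = (I − A)/max(t, t0) + A. The sketch below shows one plausible reading of the two fusion modes as direct pixel-wise averages, assuming grayscale images in [0, 1], a lower bound t0 = 0.1, and a discrete set of candidate A values: Gaussian weights centred on the estimated A over all candidates, and an unweighted mean over the candidates nearest to it. The names `recover_radiance`, `total_fusion`, `limited_fusion`, `sigma`, and `k` are hypothetical, and the paper itself performs the fusion through image pyramids rather than a plain average.

```python
import numpy as np


def recover_radiance(I, t, A, t0=0.1):
    """Invert I = J*t + A*(1 - t) for J, clamping t below at t0 and clipping J to [0, 1]."""
    t = np.maximum(t, t0)
    return np.clip((I - A) / t + A, 0.0, 1.0)


def total_fusion(I, t, A_hat, candidates, sigma=0.05):
    """Average the radiances of all candidate A values, Gaussian-weighted around A_hat."""
    candidates = np.asarray(candidates, dtype=float)
    w = np.exp(-((candidates - A_hat) ** 2) / (2.0 * sigma ** 2))
    w /= w.sum()  # normalise so the weights sum to 1
    return sum(wi * recover_radiance(I, t, a) for wi, a in zip(w, candidates))


def limited_fusion(I, t, A_hat, candidates, k=3):
    """Unweighted mean of the radiances for the k candidates closest to A_hat."""
    candidates = np.asarray(candidates, dtype=float)
    nearest = candidates[np.argsort(np.abs(candidates - A_hat))[:k]]
    return np.mean([recover_radiance(I, t, a) for a in nearest], axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    I = rng.uniform(0.4, 0.9, size=(4, 4))  # hazy grayscale patch
    t = np.full((4, 4), 0.6)                # constant transmission
    cands = np.linspace(0.7, 1.0, 7)        # candidate atmospheric lights
    print(total_fusion(I, t, 0.85, cands).round(3))
    print(limited_fusion(I, t, 0.85, cands).round(3))
```

With a single candidate equal to the true A, both fusion rules reduce to the plain model inversion, which is a convenient sanity check.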

2. Contributions

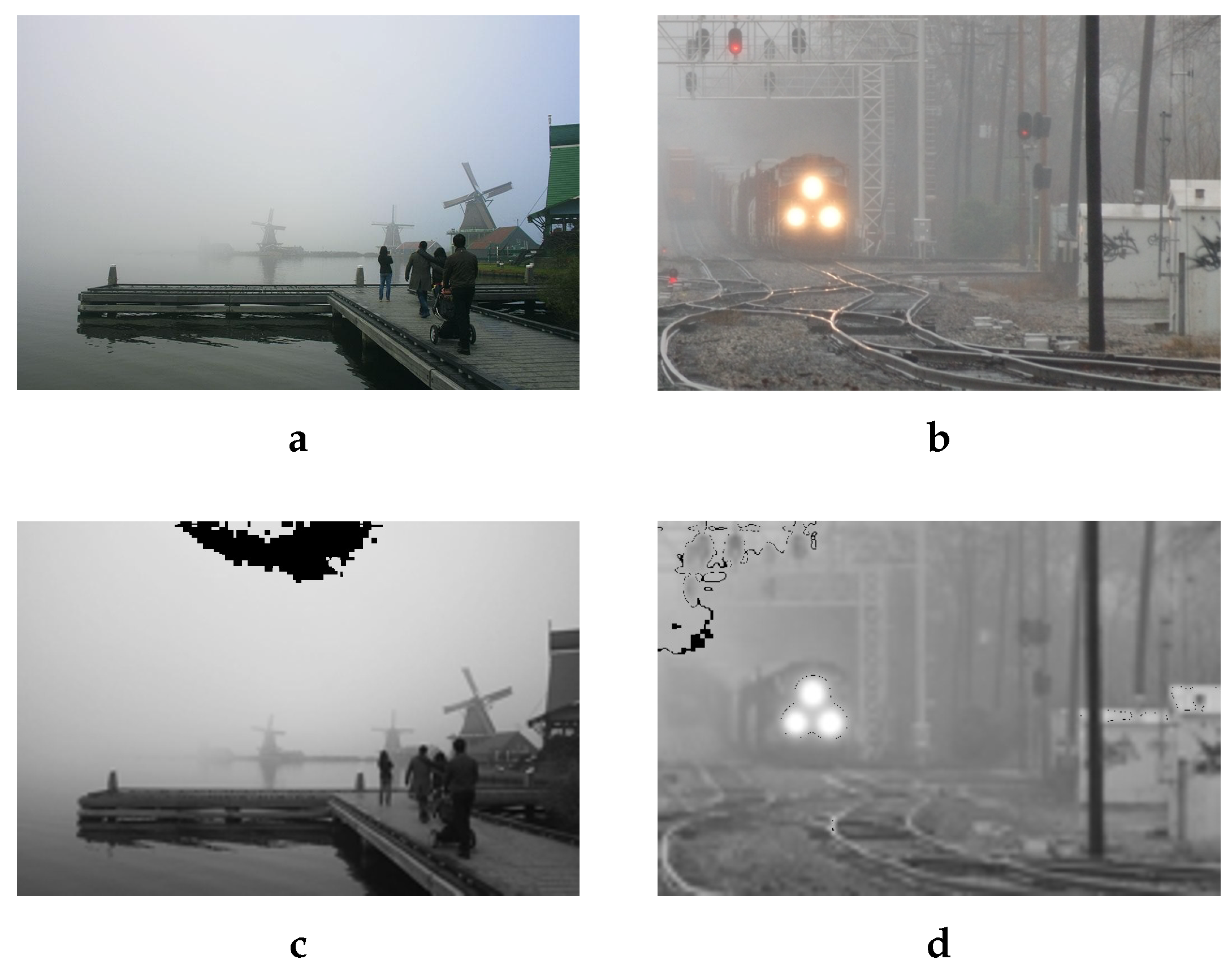

2.1. Estimation of Atmospheric Light

- the fog is best represented in the grayscale image, because hazy images contain few colors and have very low contrast. We therefore propose to estimate the atmospheric light on this single channel, which gives a homogeneous rendering, especially in areas where the haze is densest.

- “burned” (saturated) areas of the image take the maximum pixel value (e.g., 255), which is often wrongly taken as the haze color. For this reason, the grayscale channel is downscaled, so that merging several pixels together further smooths the burned (highlight) areas.
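A minimal sketch of this estimation, assuming an RGB image stored as a NumPy array, ITU-R BT.601 luma weights for the grayscale conversion, block averaging for the downscaling, and the maximum of the downscaled image as the scalar atmospheric light. The paper does not specify these choices here; `to_gray`, `block_mean_downscale`, `estimate_atmospheric_light`, and the `factor` parameter are hypothetical.

```python
import numpy as np


def to_gray(rgb):
    """Luma with ITU-R BT.601 weights (an assumed grayscale conversion)."""
    return rgb[..., 0] * 0.299 + rgb[..., 1] * 0.587 + rgb[..., 2] * 0.114


def block_mean_downscale(gray, factor):
    """Downscale by averaging non-overlapping factor x factor blocks (ragged edges cropped)."""
    h = (gray.shape[0] // factor) * factor
    w = (gray.shape[1] // factor) * factor
    g = gray[:h, :w]
    return g.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))


def estimate_atmospheric_light(rgb, factor=8):
    """Scalar A: brightest value of the downscaled grayscale image (assumed selection rule)."""
    small = block_mean_downscale(to_gray(rgb.astype(float)), factor)
    return float(small.max())
```

Averaging 8 × 8 blocks dilutes an isolated saturated pixel to 1/64 of its value within its block, so a large bright hazy region outweighs a few burned pixels.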

2.2. Estimation of the Transmission

- keeping only the main contours, defined by the largest depth difference between two regions of the image. Since no depth information is available, our estimate relies on the intensity difference between two homogeneous regions.

- using a Gaussian filter to smooth the areas where the depth variation (approximated here by the intensity variation) is small.
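Purely as an illustration, the two regularizations above can be approximated by running them one after the other on a coarse transmission map: an L0 gradient minimization in the style of Xu et al. (2011), solved by half-quadratic splitting with FFTs, flattens regions of weak intensity change while keeping the strongest edges, and a separable Gaussian filter then smooths what remains. This is a swapped-in sequential stand-in, not the paper's joint optimization; `l0_gradient_smooth`, `gaussian_smooth`, and the defaults for `lam`, `kappa`, `beta_max`, and `sigma` are hypothetical, and periodic borders are assumed for the FFT step.

```python
import numpy as np


def l0_gradient_smooth(t, lam=0.02, kappa=2.0, beta_max=1e5):
    """L0 gradient minimisation (Xu et al., 2011) via half-quadratic splitting.

    Approximately minimises ||S - t||^2 + lam * ||grad S||_0 with periodic
    borders: weak intensity changes are flattened, strong edges are kept.
    """
    S = t.astype(float)
    H, W = S.shape
    # Kernels of the circular forward differences S[i, j+1] - S[i, j] and S[i+1, j] - S[i, j].
    fx = np.zeros((H, W))
    fx[0, 0], fx[0, -1] = -1.0, 1.0
    fy = np.zeros((H, W))
    fy[0, 0], fy[-1, 0] = -1.0, 1.0
    grad_energy = np.abs(np.fft.fft2(fx)) ** 2 + np.abs(np.fft.fft2(fy)) ** 2
    F_t = np.fft.fft2(S)
    beta = 2.0 * lam
    while beta < beta_max:
        # (gx, gy) subproblem: drop gradients too weak to be worth their L0 cost.
        gx = np.roll(S, -1, axis=1) - S
        gy = np.roll(S, -1, axis=0) - S
        weak = gx ** 2 + gy ** 2 < lam / beta
        gx[weak] = 0.0
        gy[weak] = 0.0
        # S subproblem: (1 + beta * D^T D) S = t + beta * D^T g, solved with FFTs.
        dtg = (np.roll(gx, 1, axis=1) - gx) + (np.roll(gy, 1, axis=0) - gy)
        S = np.real(np.fft.ifft2((F_t + beta * np.fft.fft2(dtg)) / (1.0 + beta * grad_energy)))
        beta *= kappa
    return S


def gaussian_smooth(img, sigma=2.0):
    """Separable Gaussian filter with reflected borders."""
    r = int(3 * sigma)
    x = np.arange(-r, r + 1)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    k /= k.sum()
    p = np.pad(img.astype(float), r, mode="reflect")
    p = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, p)  # rows
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, p)  # columns


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical coarse transmission: two depth planes plus noise.
    t_coarse = np.clip(0.4 + 0.4 * (np.arange(64) >= 32) + rng.normal(0, 0.03, (64, 64)), 0, 1)
    t_reg = gaussian_smooth(l0_gradient_smooth(t_coarse), sigma=1.5)
    print(t_reg.min(), t_reg.max())
```

On a clean two-level map every gradient survives the threshold, so the L0 step returns its input unchanged; only weak, noise-like variations are removed.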

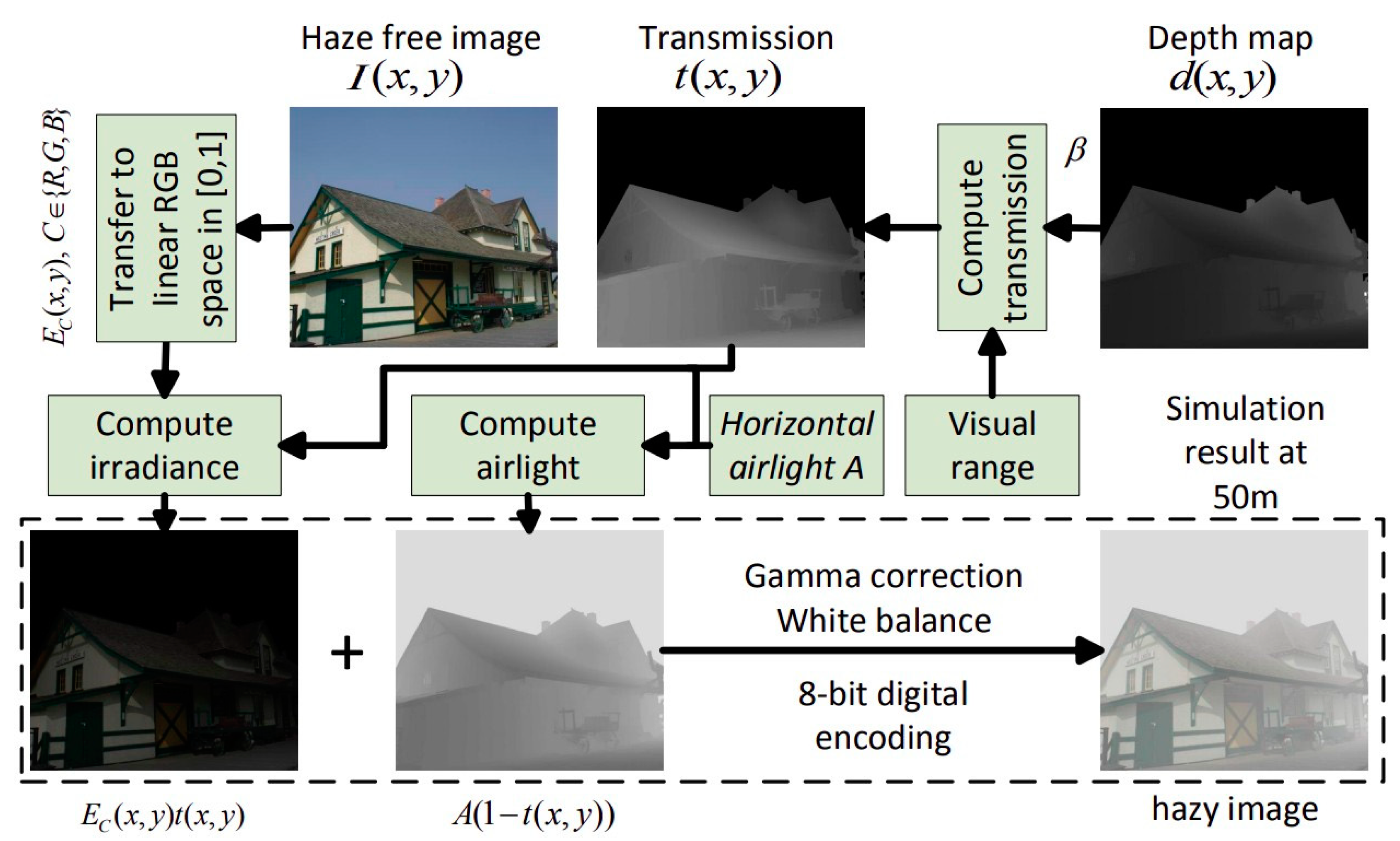

2.3. Formulation

- is the regularization parameter.

- : the ℓ0 “norm”, which counts the number of pixels where the gradient magnitude is nonzero (i.e., not black).

- : the Gaussian filter H applied to the transmission t, which smooths areas with a low gradient.
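To make the ℓ0 term concrete: for a transmission map it simply counts the pixels whose gradient magnitude is nonzero, so weighting it by the regularization parameter penalizes the number of retained edges. The helper below is a hypothetical sketch that uses forward differences (one of several possible gradient discretizations) and an optional tolerance `eps` for floating-point data.

```python
import numpy as np


def l0_gradient_norm(t, eps=0.0):
    """Count pixels whose forward-difference gradient magnitude exceeds eps.

    With eps = 0 this is the l0 "norm": the number of non-black pixels
    in the gradient-magnitude image.
    """
    gx = np.diff(t, axis=1, append=t[:, -1:])  # zero gradient on the last column
    gy = np.diff(t, axis=0, append=t[-1:, :])  # zero gradient on the last row
    return int(np.count_nonzero(np.hypot(gx, gy) > eps))
```

A vertical step edge in a 4 × 4 map contributes one nonzero gradient per row, so its ℓ0 count is 4, while a constant map scores 0.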

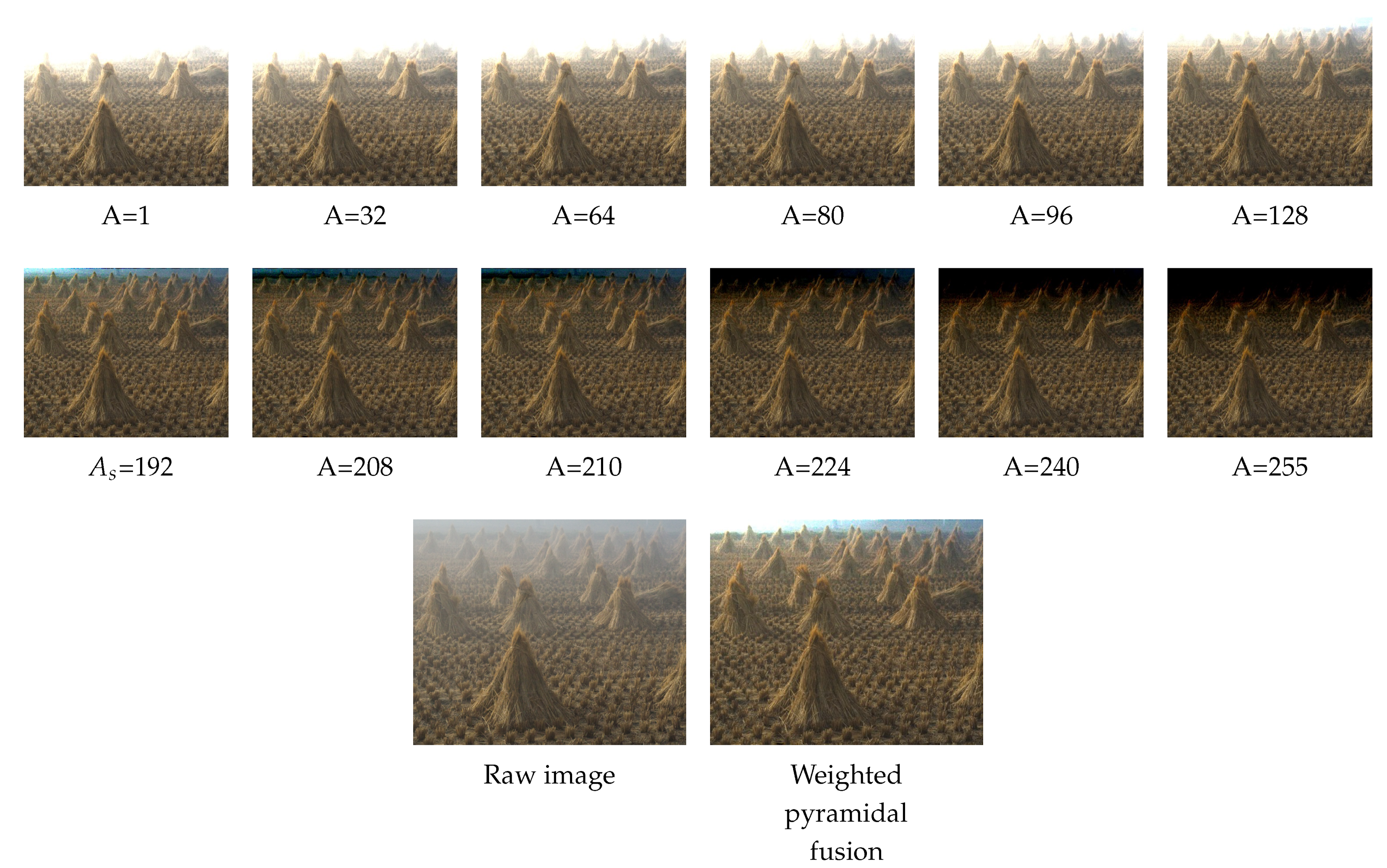

3. Fusion

3.1. Gaussian Pyramid

3.2. Laplacian Pyramid

3.3. Fusion Process

3.3.1. Pyramidal Fusion
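The fusion builds on the Gaussian and Laplacian pyramids of Burt and Adelson. As a sketch only, assuming single-channel float images and per-pixel weight maps supplied by the caller (the weight maps the paper actually uses are not reproduced here), the code below builds both pyramids with a 5-tap binomial kernel, blends the Laplacian levels with the Gaussian pyramids of the normalized weights, and collapses the result. All function names and the `levels` default are hypothetical.

```python
import numpy as np

_K = np.array([1, 4, 6, 4, 1], dtype=float) / 16.0  # 5-tap binomial kernel


def _blur(img):
    """Separable 5-tap binomial blur with reflected borders."""
    p = np.pad(img, 2, mode="reflect")
    p = (_K[0] * p[:, :-4] + _K[1] * p[:, 1:-3] + _K[2] * p[:, 2:-2]
         + _K[3] * p[:, 3:-1] + _K[4] * p[:, 4:])
    return (_K[0] * p[:-4] + _K[1] * p[1:-3] + _K[2] * p[2:-2]
            + _K[3] * p[3:-1] + _K[4] * p[4:])


def _down(img):
    """Blur, then keep every second row and column."""
    return _blur(img)[::2, ::2]


def _up(img, shape):
    """Nearest-neighbour upsampling cropped to `shape`, then blur."""
    up = np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)[: shape[0], : shape[1]]
    return _blur(up)


def gaussian_pyramid(img, levels):
    pyr = [img.astype(float)]
    for _ in range(levels - 1):
        pyr.append(_down(pyr[-1]))
    return pyr


def laplacian_pyramid(img, levels):
    g = gaussian_pyramid(img, levels)
    return [g[i] - _up(g[i + 1], g[i].shape) for i in range(levels - 1)] + [g[-1]]


def reconstruct(lap):
    """Collapse a Laplacian pyramid from the coarsest level upwards."""
    img = lap[-1]
    for level in reversed(lap[:-1]):
        img = level + _up(img, level.shape)
    return img


def pyramid_fusion(images, weights, levels=4):
    """Blend Laplacian pyramids of `images` using Gaussian pyramids of `weights`."""
    w = np.stack(weights).astype(float)
    w /= w.sum(axis=0, keepdims=True) + 1e-12  # per-pixel normalisation
    fused = None
    for img, wk in zip(images, w):
        terms = [gw * lp for gw, lp in zip(gaussian_pyramid(wk, levels),
                                            laplacian_pyramid(img, levels))]
        fused = terms if fused is None else [f + t for f, t in zip(fused, terms)]
    return reconstruct(fused)
```

Because every step is linear, fusing two images with uniform weights returns their average, and fusing an image with itself returns it unchanged for any positive weights.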

3.3.2. Reduced Pyramidal Fusion

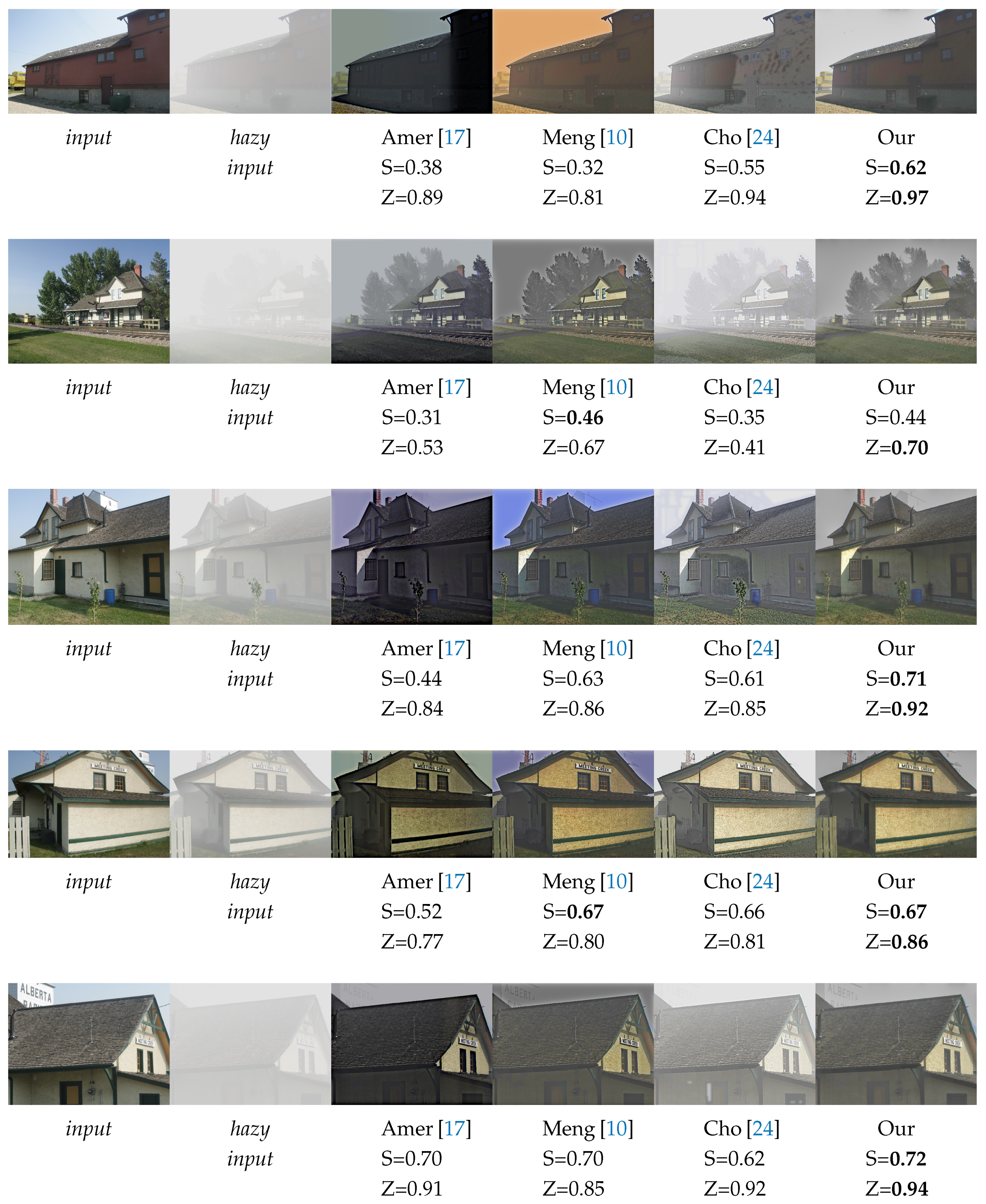

4. Results

4.1. Quantitative Results

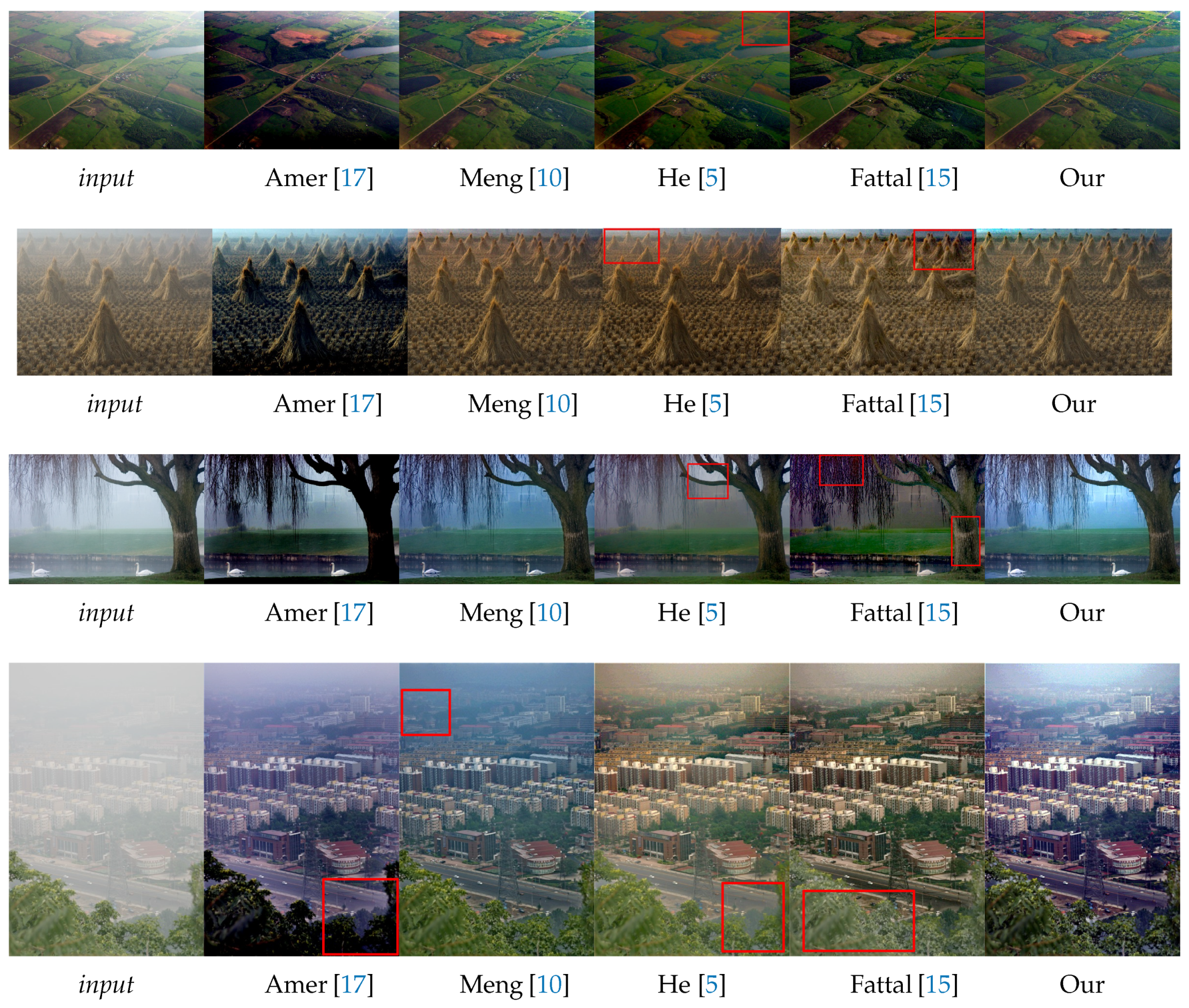

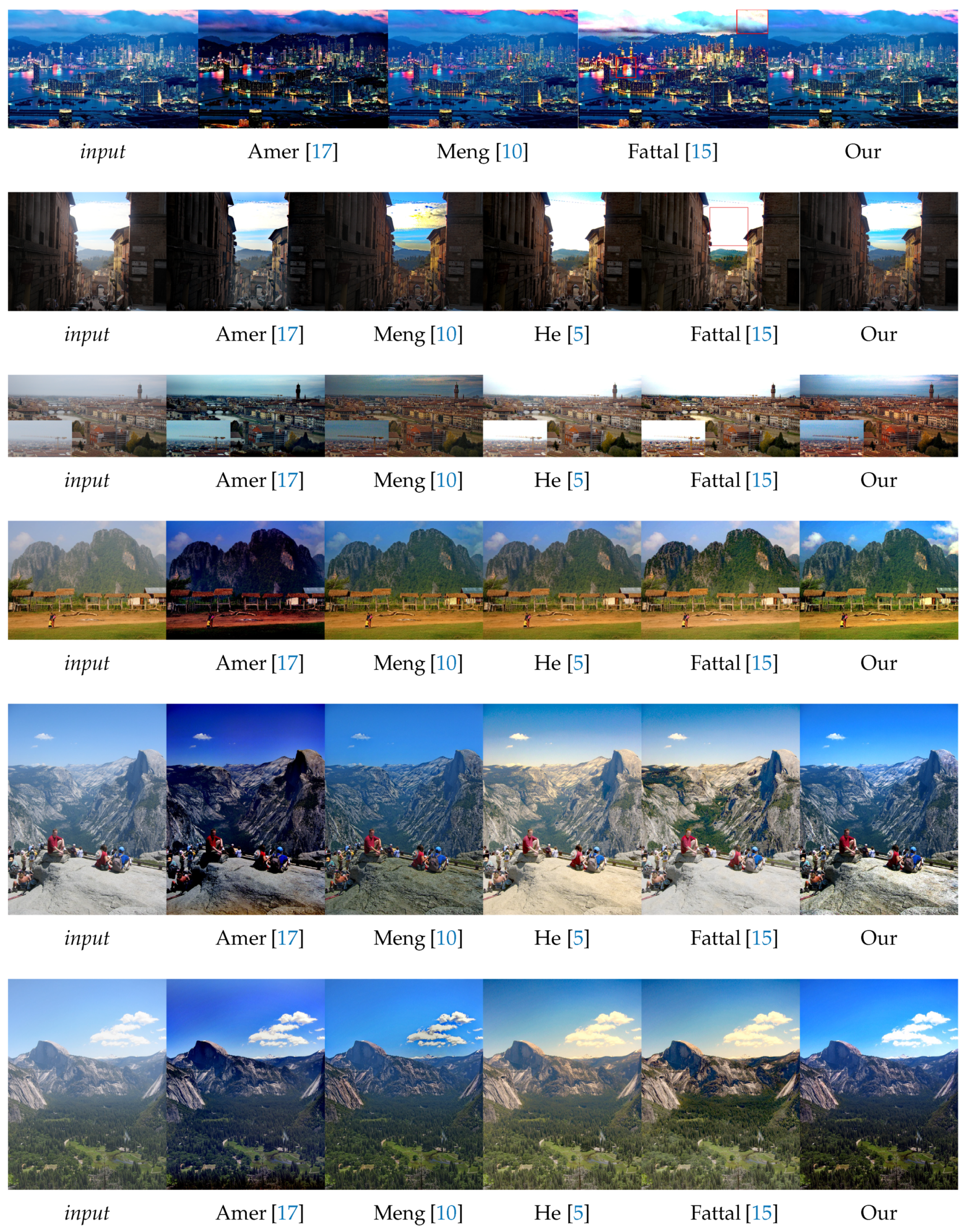

4.2. Qualitative Results

4.2.1. Landscape Images

4.2.2. Sky Images

4.2.3. Haze-Free Images

4.3. Computation Time

5. Conclusions and Perspective

Author Contributions

Funding

Conflicts of Interest

References

- Dabass, J.; Arora, S.; Vig, R.; Hanmandlu, M. Mammogram Image Enhancement Using Entropy and CLAHE Based Intuitionistic Fuzzy Method. In Proceedings of the 6th International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 7–8 March 2019.

- Hitam, M.S.; Awalludin, E.A.; Yussof, W.N.J.H.W.; Bachok, Z. Mixture contrast limited adaptive histogram equalization for underwater image enhancement. In Proceedings of the 2013 International Conference on Computer Applications Technology, Sousse, Tunisia, 20–22 January 2013.

- Zuiderveld, K. Contrast limited adaptive histogram equalization. Graph. Gems 1994, 1, 474–485.

- Koschmieder, H. Theorie der horizontalen Sichtweite. Beitr. Zur Phys. Der Freien Atmosphäre 1924, 640, 33–53.

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.

- He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1397–1409.

- Gibson, K.B.; Vo, D.T.; Nguyen, T.Q. An investigation of dehazing effects on image and video coding. IEEE Trans. Image Process. 2011, 21, 662–673.

- Tarel, J.-P.; Hautière, N. Fast visibility restoration from a single color or gray level image. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009.

- Yu, J.; Xiao, C.; Li, D. Physics-based fast single image fog removal. In Proceedings of the IEEE 10th International Conference on Signal Processing, Beijing, China, 24–28 October 2010.

- Meng, G.; Wang, Y.; Duan, J.; Xiang, S.; Pan, C. Efficient image dehazing with boundary constraint and contextual regularization. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013.

- Ancuti, C.O.; Ancuti, C. Single image dehazing by multi-scale fusion. IEEE Trans. Image Process. 2013, 22, 3271–3282.

- Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M.H. Single image dehazing via multi-scale convolutional neural networks. In Proceedings of the 14th European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016.

- Narasimhan, S.G.; Nayar, S.K. Chromatic framework for vision in bad weather. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2000), Hilton Head Island, SC, USA, 15 June 2000.

- Shwartz, S.; Namer, E.; Schechner, Y.Y. Blind haze separation. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006.

- Fattal, R. Dehazing using color-lines. ACM Trans. Graph. 2014, 34, 13.

- Maragos, P.; Schafer, R.W. Morphological filters–Part II: Their relations to median, order-statistic, and stack filters. IEEE Trans. Acoust. Speech Signal Process. 1987, 35, 1170–1184.

- Amer, K.O.; Elbouz, M.; Alfalou, A.; Brosseau, C.; Hajjami, J. Enhancing underwater optical imaging by using a low-pass polarization filter. Opt. Express 2019, 27, 621–643.

- Wang, Y.; Yang, J.; Yin, W.; Zhang, Y. A new alternating minimization algorithm for total variation image reconstruction. SIAM J. Imaging Sci. 2008, 1, 248–272.

- Zhang, Y.; Ding, L.; Sharma, G. HazeRD: An outdoor scene dataset and benchmark for single image dehazing. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017.

- Burt, P.; Adelson, E. The Laplacian pyramid as a compact image code. IEEE Trans. Commun. 1983, 31, 532–540.

- Hore, A.; Ziou, D. Image quality metrics: PSNR vs. SSIM. In Proceedings of the 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010.

- Di Stefano, L.; Mattoccia, S.; Tombari, F. ZNCC-based template matching using bounded partial correlation. Pattern Recognit. Lett. 2005, 26, 2129–2134.

- McCartney, E.J. Optics of the atmosphere: Scattering by molecules and particles. IEEE J. Quantum Electron. 1976, 14, 698–699.

- Cho, Y.; Jeong, J.; Kim, A. Model-assisted multiband fusion for single image enhancement and applications to robot vision. IEEE Robot. Autom. Lett. 2018, 3, 2822–2829.

| Resolution | Proposed Method | Meng [10] | He [5], Amer [17] |
|---|---|---|---|
| | 0.005205 | 0.036404 | 0.02140 |
| | 0.208691 | 8.541274 | 3.8477 |

| Resolution (RGB) | Computation Time (s) |
|---|---|
| | 1.5746 |
| | 3.7901 |
| | 5.1347 |

| Methods | Computation Time (s) |
|---|---|
| Amer [17] | 0.28 |
| Fattal [15] | 1.54 * |
| Ours | 1.96 |
| Meng [10] | 4.25 |
| Cho [24] | 8.20 |
| He [5] | 18.06 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hajjami, J.; Napoléon, T.; Alfalou, A. Efficient Sky Dehazing by Atmospheric Light Fusion. Sensors 2020, 20, 4893. https://doi.org/10.3390/s20174893
