Single-Pixel Imaging and Its Application in Three-Dimensional Reconstruction: A Brief Review

Abstract

1. Introduction

2. Three-Dimensional Single-Pixel Imaging

2.1. Mathematical Interpretation of Single-Pixel Imaging

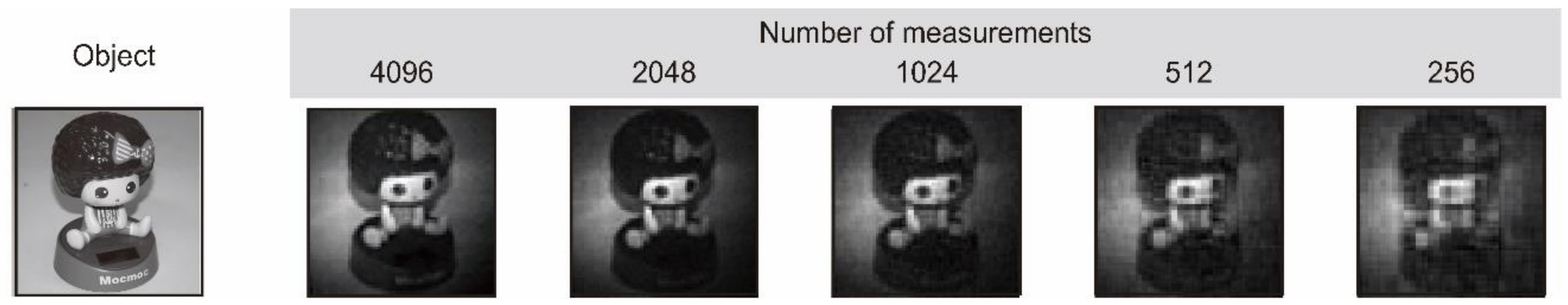

2.2. Performance of Single-Pixel Imaging

2.2.1. SLM

- Spatial resolution

- Data acquisition time

- Spectrum

2.2.2. Single-Pixel Detector

2.3. From 2D to 3D

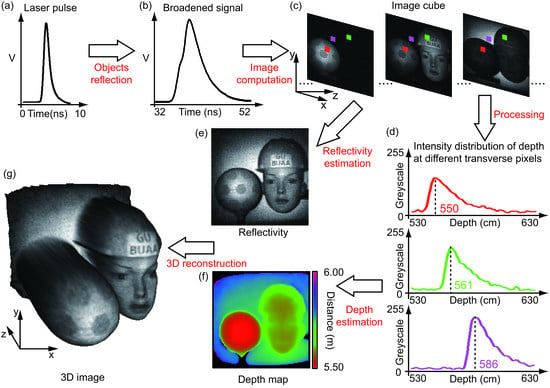

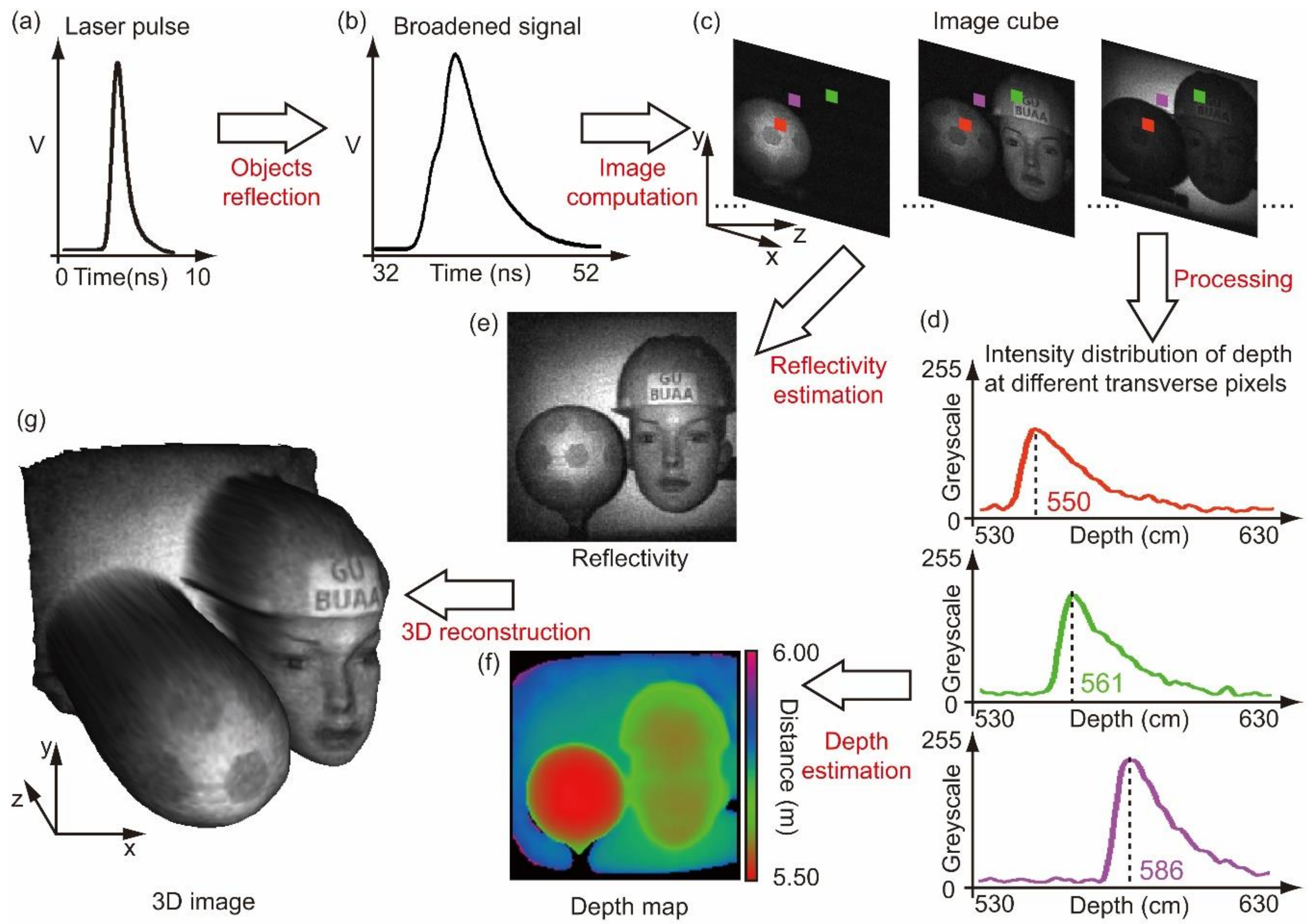

2.3.1. Time-of-Flight Approach

- Repetition rate of the pulsed light: Each pulse corresponds to one mask measurement, so the higher the repetition rate, the faster the SLM can step through the set of masks.

- Pulse width of the pulsed light: A narrower pulse means a smaller uncertainty in the time-of-flight measurement and less overlap between back-scattered signals from objects at different depths, which in turn improves the depth resolution of the system.

- Type of single-pixel detector: The choice between a conventional photodiode and one operated at a higher reverse bias (e.g., a single-photon counting detector) depends on the application. A single-photon counting detector, which can resolve single-photon arrivals with a faster response time, is well suited to low-light-level imaging. However, its total detection efficiency is very low, since only one photon is detected per measuring pulse. Furthermore, its inherent dead time, often tens of nanoseconds, prevents information about a farther object from being retrieved if a closer one has a relatively higher detection probability. In contrast, a high-speed photodiode can record the temporal response from a single illumination pulse, which can be advantageous in applications with relatively strong illumination.

- Time bin and time jitter of the electronics: These two parameters are usually closely related, and the smaller they are, the better the depth resolution. However, a smaller time bin also produces more data, which increases the computational burden of reconstructing the 3D image.
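
The trade-offs listed above can be made concrete with a few lines of arithmetic. The following is a minimal sketch, not part of the reviewed systems: it assumes the standard round-trip relation between time and depth (depth = c·t/2), and the function names and numerical values are hypothetical, chosen only for illustration.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def depth_resolution(timing_uncertainty_s: float) -> float:
    """Depth uncertainty for a given round-trip timing uncertainty: dz = c * dt / 2."""
    return C * timing_uncertainty_s / 2.0


def dead_time_blind_range(dead_time_s: float) -> float:
    """Depth interval behind a detected return that falls inside the detector dead time."""
    return C * dead_time_s / 2.0


def num_time_bins(depth_range_m: float, time_bin_s: float) -> int:
    """Time bins needed to cover a depth range (light travels the range twice)."""
    round_trip_s = 2.0 * depth_range_m / C
    return math.ceil(round_trip_s / time_bin_s)


def acquisition_time(num_masks: int, repetition_rate_hz: float) -> float:
    """Time to display all masks when one pulse corresponds to one mask measurement."""
    return num_masks / repetition_rate_hz


if __name__ == "__main__":
    # Hypothetical system: 1 ns timing uncertainty, 50 ns dead time,
    # 15 m depth range, 4096 masks, 20 kHz pulse repetition rate.
    bins = num_time_bins(15.0, 1e-9)
    print(f"depth resolution  : {depth_resolution(1e-9):.3f} m")
    print(f"dead-time range   : {dead_time_blind_range(50e-9):.2f} m")
    print(f"time bins         : {bins}")
    print(f"samples per frame : {4096 * bins}")
    print(f"acquisition time  : {acquisition_time(4096, 20_000.0):.4f} s")
```

Halving the time bin in this sketch doubles the number of samples per frame, which is the data burden mentioned in the last item of the list.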

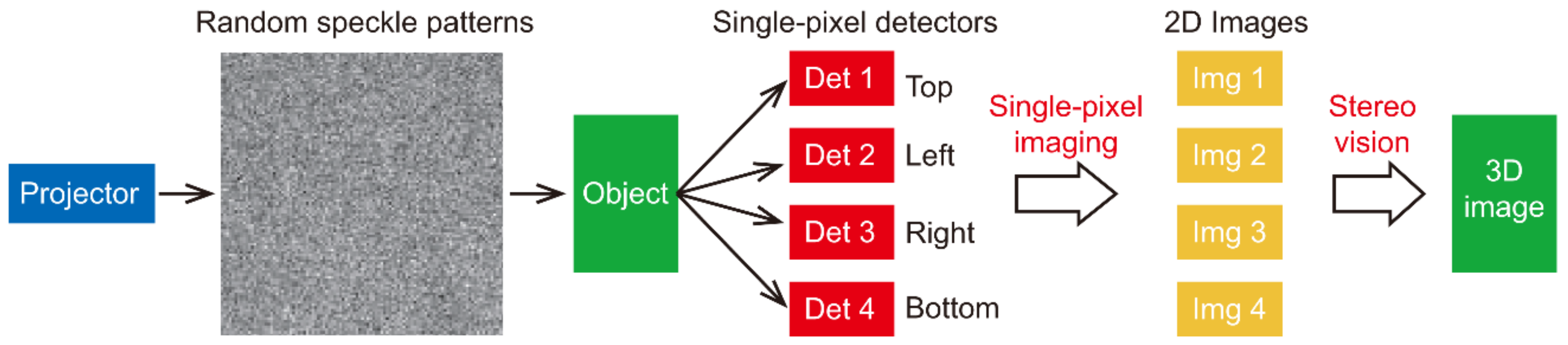

2.3.2. Stereo Vision Approach

3. Conclusions and Discussions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Element | Choices | Advantages (*) and Disadvantages (^) |
|---|---|---|
| System architecture | Focal plane modulation | * Active or passive imaging. ^ Limited choice of modulation. |
| | Structured light illumination | * More choices for active illumination. ^ Active imaging only. |
| Modulation method | Rotating ground glass | * High power endurance; cheap. ^ Not programmable; random modulation only. |
| | Customized diffuser | * High power endurance; can be customized. ^ Not programmable; complicated manufacturing. |
| | LCD | * Greyscale modulation; programmable. ^ Slow modulation; low power endurance. |
| | DMD | * Faster than LCD; programmable. ^ Binary modulation; not fast enough. |
| | LED array | * Much faster than DMD; programmable. ^ Binary modulation; structured illumination only. |
| | OPA | * Much faster than DMD; controllable. ^ Random modulation; complicated manufacturing. |
| Reconstruction algorithm | Orthogonal sub-sampling | * Not computationally demanding. ^ Requires a specific prior. |
| | Compressive sensing | * Needs only a general sparsity assumption. ^ A computational overhead. |
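
To illustrate the "orthogonal sub-sampling" row of the table, here is a minimal sketch under stated assumptions. The table does not name a basis, so the Hadamard basis used here is an assumption. The one-dimensional scene, the idealized ±1 patterns, and the helper names `hadamard`, `measure`, and `reconstruct` are all hypothetical. The sketch shows why reconstruction is cheap (a weighted sum of patterns) and why a prior is needed (one must know in advance which patterns to measure).

```python
def hadamard(n):
    """Sylvester-construction Hadamard matrix of order n (n must be a power of two)."""
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a power of two")
    h = [[1]]
    while len(h) < n:
        h = [row + row for row in h] + [row + [-v for v in row] for row in h]
    return h


def measure(scene, patterns, keep):
    """Simulate one single-pixel (bucket) signal per selected pattern index."""
    return {i: sum(p * s for p, s in zip(patterns[i], scene)) for i in keep}


def reconstruct(signals, patterns):
    """Weighted sum of the measured patterns; unmeasured coefficients count as zero."""
    n = len(patterns)
    return [sum(y * patterns[i][j] for i, y in signals.items()) / n
            for j in range(n)]


# Hypothetical 8-pixel scene: two flat regions of different reflectivity.
scene = [3, 3, 3, 3, 1, 1, 1, 1]
H = hadamard(8)

# Full sampling: 8 measurements, exact recovery.
full = reconstruct(measure(scene, H, range(8)), H)

# Sub-sampling with a correct prior: only patterns 0 and 4 carry energy here.
good_prior = reconstruct(measure(scene, H, [0, 4]), H)

# Sub-sampling with a wrong prior: the step between the two regions is lost.
bad_prior = reconstruct(measure(scene, H, [0, 1]), H)

print(full)
print(good_prior)
print(bad_prior)
```

With the right two patterns the scene is recovered from a quarter of the measurements, whereas the wrong pair returns only the mean level. Compressive sensing avoids committing to a pattern subset in advance, at the cost of an iterative solver.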

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Sun, M.-J.; Zhang, J.-M. Single-Pixel Imaging and Its Application in Three-Dimensional Reconstruction: A Brief Review. Sensors 2019, 19, 732. https://doi.org/10.3390/s19030732
