Abstract

Lane detection plays an important role in improving the safety of autopilot systems. In this paper, a novel lane-division line detection method is proposed that performs well under abnormal illumination and lane occlusion. It includes three major components. First, the captured image is converted to an aerial view to make full use of the parallel characteristics of lanes. Second, a ridge detector is proposed to extract each lane’s feature points, and noise points are removed with an adaptable neural network (ANN). Last, the lane-division lines are accurately fitted by an improved random sample consensus (RANSAC), termed the (regional) Gaussian distribution random sample consensus (G-RANSAC). To test the performance of this lane detection method, we propose a new index, named the lane departure index (LDI), that describes the degree of departure between the true lane and the predicted lane. Experimental results verify the superior performance of the proposed method over other methods in four different types of testing scenarios, with true-positive rates (TPR) of 99.02%, 96.92%, 96.65% and 91.61% and LDIs of 66.16, 54.85, 55.98 and 52.61, respectively.

1. Introduction

Lane-division line detection plays a critical role in improving the safety of intelligent electric vehicles (IEVs). Currently, there are two main approaches to detecting lane-division lines: feature-based detection and model-based detection. Feature-based detection extracts edge location distributions, connected shadow areas, and color and texture differences from images to detect lane-division lines [1,2,3]. Zhang proposed a Hough-transform-based lane-fitting method for tracking [4]. Yoo used a gradient-enhancing conversion for illumination-robust lane detection [5]. Geiger designed a Bayes model to discriminate lane-division line pixels from other pixels [6]. Peng proposed a lane-division line detection method using the statistical Hough transform with a gradient constraint [7]. To extract significant features, Ma converted the RGB color space to the CIELab color model and detected lane-division lines using k-means clustering [8]. Son proposed a lane detection method based on color features and clustering [9]. Jung proposed a lane-division line detection method based on Haar features [10]. Wang performed lane detection by combining a self-clustering algorithm, fuzzy C-means and fuzzy rules to process spatial information, with the Canny algorithm to extract edge features [11]. Other feature-based detection methods [12,13,14,15,16,17] also perform well under normal conditions; however, the main drawback of this approach is that it is easily disturbed by noise, because it ignores the model of the lane-division lines.

To address this shortcoming, many model-based detection methods have been proposed. Zhou proposed a novel lane detection method based on a geometrical model and a Gabor filter [18]. Wang proposed a lane detection approach based on inverse perspective mapping [19]. Baofeng proposed a detection system with a linear approximation method [20]. Although lane-division line detection technology has developed greatly [21,22,23,24,25,26], feature extraction is still heavily influenced by complex road situations, such as light variation, road markings and shadow interference [27]. Hence, more researchers are focusing on deep learning-based detection methods [28,29,30,31,32], which show better results, but these models depend heavily on the quality of the training samples [33]. Artificial neural networks have also been applied to remove noise from images or feature maps. Rashidha proposed an adaptive-size median filter for impulse noise removal based on a neural network [34]. As computing power has grown, deeper neural networks have been applied to reduce noise in samples. Kim proposed an adaptive-filter-based convolutional neural network [35]. Kuang removed noise with a deep convolutional neural network, which performed well in various scenarios with unknown noise [36].

In this paper, we address this problem by combining the advantages of feature-based and model-based detection methods. In particular, we propose a ridge detector to extract ridges from the aerial view map. Then, we remove noise according to the lane-division line model. A sample set is generated by combining ridge points as positive samples and noise points as negative samples. To improve the robustness of the ridge detector, we fully utilize the information between frames. Specifically, we design a six-dimensional feature for each sample point and use it to retrain a three-layer backpropagation (BP) neural network every ten frames [37]. The six-dimensional feature consists of the abscissa and ordinate; the feature values of 3 × 3, 7 × 7 and 11 × 11 convolution filters; and the frequency within ten frames. We use the detection data set to update the neural network weights in real time for detection in the next ten frames. The confidence level of each ridge point is derived from the output of the ridge detector, and it determines the covariance matrix of the two-dimensional Gaussian distribution associated with that point. To robustly fit the ridge points, we sample three ridge points from three different areas and then generate k new points for each selected ridge point by two-dimensional Gaussian inverse-transformation sampling. Last, we fit the 3k + 3 points with the random sample consensus (RANSAC) algorithm, in which the least-squares objective function is solved by stochastic sub-gradient descent (SGD). In summary, we use an adaptable neural network (ANN) to discriminate noise from ridge points, and we improve the traditional RANSAC algorithm by taking into account the confidence of the remaining ridge-feature points.

The remainder of this paper is organized as follows. In Section 2, related work is introduced. In Section 3, the lane-division line feature extraction method, based on an adaptable ridge detector and the between-frames neural network, is proposed, together with the regional Gaussian distribution random sample consensus (G-RANSAC) fitting method. The experimental results are shown in Section 4, and finally, the conclusion is drawn in Section 5.

Nomenclature: Let stand for a real matrix of an image; stands for the row column element of matrix . and represent the height and width of an image, respectively. Symbol ⊗ represents the convolution operation; and represent the minimum and maximum elements of matrix , respectively.

2. Related Work

Lane-division lines can be detected using a static camera sensor or a vehicle-mounted camera sensor. For static camera sensors, the application scenarios include precise vehicle positioning and intelligent transportation, and the main methods are based on vehicle trajectories [38,39] and pixel entropy [40]. For vehicle-mounted camera sensors, the application scenarios include intelligent vehicles and advanced driver assistance systems (ADAS) [41,42]. In this paper, we focus on lane-division line detection based on a vehicle-mounted camera sensor.

Lane detection methods based on a moving camera sensor consist of two steps: lane feature extraction and feature-point fitting. For feature extraction, Sobel and Canny edge features are usually used as lane-division line features, but edge features are susceptible to noise interference. To solve this problem, a ridge detection method based on ridge features was proposed [43]; it requires two matrix-convolution operations, two matrix-differential operations, three matrix point-product operations, and a divergence calculation. In this paper, we propose a simpler ridge detection method based on lane width that runs about 2.5 times faster, as shown in Section 4.1.

For feature-point fitting, the Hough transform is commonly used [15], but it is inefficient; thus, Guo proposed a RANSAC-based method for fitting lane-division line features [44]. The main steps of this method are as follows: (1) a small number of fitting points are selected randomly from the whole point set; (2) a model is fitted to the points selected in step 1 by the least-squares method; (3) steps 1 and 2 are repeated until the maximum number of iterations is reached; and (4) the model with the lowest error over the whole point set is selected as the best fit. Compared with the Hough transform, this method improves fitting efficiency. However, randomly selecting fitting points from all feature points is a poor strategy for lane detection, for the reason illustrated in Figure 9b. Therefore, in Section 3.4, we divide the ridge-feature map into three regions and then resample points based on a Gaussian distribution determined by the ridge confidence. The experimental results show significantly improved fitting efficiency.
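The four steps above can be sketched as follows. This is a minimal illustration, not the implementation of [44]: it assumes a straight-line model x = m·y + b in aerial-view pixel coordinates, two points per hypothesis, and, for step 4, an error in which each squared residual is capped at a threshold so that distant noise points cannot dominate. The names `fit_line_ls` and `ransac_line` and all default values are hypothetical.

```python
import random


def fit_line_ls(points):
    """Least-squares fit of x = m*y + b to (x, y) points (step 2)."""
    n = len(points)
    sx = sum(x for x, _ in points)
    sy = sum(y for _, y in points)
    syy = sum(y * y for _, y in points)
    sxy = sum(x * y for x, y in points)
    den = n * syy - sy * sy
    if den == 0:
        return None  # degenerate sample: all points lie in one image row
    m = (n * sxy - sx * sy) / den
    b = (sx - m * sy) / n
    return m, b


def ransac_line(points, iterations=60, sample_size=2, thr=2.0, seed=0):
    """Baseline RANSAC: sample from the WHOLE point set, keep the lowest-error model."""
    rng = random.Random(seed)
    best, best_err = None, float("inf")
    for _ in range(iterations):                      # step 3: repeat
        sample = rng.sample(points, sample_size)     # step 1: random subset
        model = fit_line_ls(sample)                  # step 2: least squares
        if model is None:
            continue
        m, b = model
        # Step 4: error over the whole set; squared residuals are capped at
        # thr**2 (an assumed robust choice) so outliers cannot dominate.
        err = sum(min((x - (m * y + b)) ** 2, thr ** 2) for x, y in points)
        if err < best_err:
            best, best_err = model, err
    return best
```

Because every hypothesis is drawn from the whole point set, it can mix noise points or points from distant parts of the lane, which is the weakness that motivates the regional sampling of Section 3.4.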

3. Materials and Methods

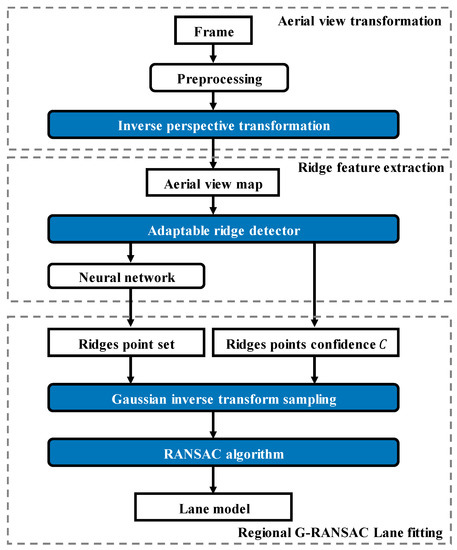

The proposed detection algorithm is described in five subsections, as shown in Figure 1. In Section 3.1, a graphical preprocessing method based on inverse perspective transformation is presented to obtain the aerial view map. In Section 3.2, a feature extraction method based on an adaptable ridge detector is developed to extract the ridge features of lane-division lines. In Section 3.3, we extract a six-dimensional feature for each pixel to train the neural network, which is used to discriminate between noise and ridge points. In Section 3.4, the regional G-RANSAC is proposed to robustly fit the lane-division lines. In Section 3.5, we propose a new index, named the lane departure index (LDI), to evaluate the performance of the lane detection method.

Figure 1.

Detection algorithm block diagram.

3.1. A Graphical Preprocessing Method Based on Inverse Perspective Transformation

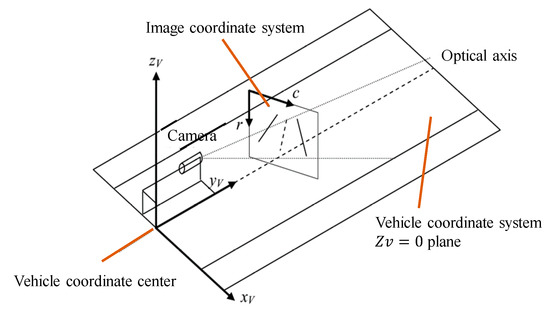

Because the frame captured by the camera is a noisy RGB image, preprocessing consists of grayscale conversion followed by a 5 × 5 median filter to reduce image noise. However, the image background still introduces many additional interferences. Therefore, we select the region of interest (ROI) by inverse perspective transformation to obtain the aerial view map, which eliminates perspective effects. According to the lane-division line standard [43], we choose a 35 m by 11.5 m region in the Cartesian coordinate system. The principle of the inverse perspective transformation is shown in Figure 2; represents the vehicle coordinate system and represents the image coordinate system. In the inverse perspective transformation, we convert the image coordinate system into a real-world, three-dimensional coordinate system . The transformation is described as follows:

where represent the camera’s center coordinates in the vehicle coordinate system; and denote the translation and rotation matrices, respectively; and stand for the image resolution; is the angle between the optical axis and the = 0 plane; is the angle between the optical axis and the vehicle coordinate system’s axes, ; and denotes half of the camera view angle.

Figure 2.

The principles of the inverse perspective transformation.

We convert the image to an aerial view map with Equation (1).
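As an illustration of the geometry behind Equation (1), the sketch below maps a pixel to the ground plane under the classical inverse perspective mapping formulation of Bertozzi and Broggi, which uses the same kinds of parameters listed above (camera position, image resolution, tilt and pan of the optical axis, half view angle). This formulation is an assumption and may differ from the paper's Equation (1) in indexing and sign conventions; the function name and argument order are hypothetical.

```python
import math


def ipm_pixel_to_ground(u, v, cam_x, cam_y, cam_h,
                        tilt, pan, half_fov, rows, cols):
    """Map image pixel (row u, column v) to a ground point (x, y, 0).

    Assumed model (Bertozzi-Broggi style IPM): the ray through a pixel has an
    angle below the horizon and an azimuth that vary linearly across the
    image, each spanning 2 * half_fov.
    """
    below_horizon = tilt - half_fov + u * 2.0 * half_fov / (rows - 1)
    azimuth = pan - half_fov + v * 2.0 * half_fov / (cols - 1)
    if below_horizon <= 0.0:
        raise ValueError("pixel ray does not hit the ground plane")
    ground_dist = cam_h / math.tan(below_horizon)  # h * cot(angle)
    x = ground_dist * math.cos(azimuth) + cam_x
    y = ground_dist * math.sin(azimuth) + cam_y
    return x, y, 0.0
```

In practice the aerial view is usually filled by the inverse lookup (for each ground cell of the 35 m by 11.5 m region, find the source pixel and interpolate), but the forward map shows the key effect: rays with a larger angle below the horizon land closer to the camera.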

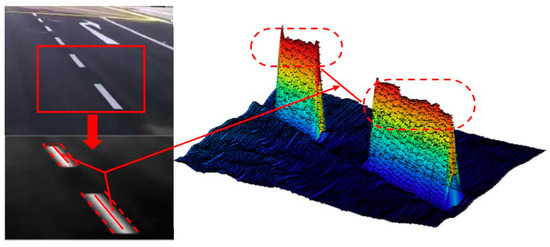

3.2. Ridge Detector

In general, ridges are low-level features in gray images. Compared with edge features, ridge features are more suitable for describing lane-division lines in situations such as vehicle shadow interference, worn-out ground markings and insufficient illumination [19]. As shown in Figure 3, the ridge is the center line of a lane-division line. In the traditional ridge detection method described in Section 2, the gradient-vector calculation and multiple matrix operations incur a high computational cost.

Figure 3.

Ridge-feature illustration.

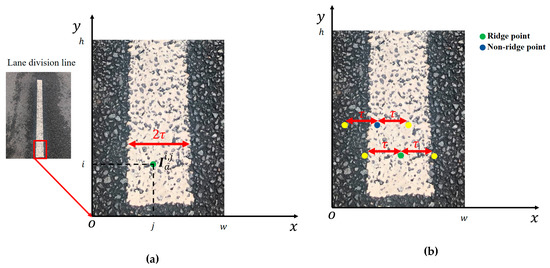

According to the geometry of lane-division lines in the aerial view map , we propose a simple ridge detector based on lane width. As shown in Figure 4a, we define the green point as the value of the pixel at row column in the image coordinate system. Row denotes the ordinate and column denotes the abscissa; we define as the number of transverse pixels of a lane-division line in the aerial view map. stands for the ridge-feature map, which is defined by Equation (2).

where denotes half the number of transverse pixels of a lane-division line.

Figure 4.

Ridge detector illustration. (a) ridge point illustration. (b) Equation (2) illustration.

As shown in Figure 4b, is defined as the pixel value of a point inside a lane-division line and is defined as the pixel value of a point outside a lane-division line, where . The green point represents a ridge point with value ; its left and right yellow points are both outside the lane-division line, with value . The blue point represents a non-ridge point with value ; its left yellow point is outside the lane-division line, with value , and its right yellow point is inside the lane-division line, with value . The term of the green point is larger than the term of the blue point, and the term of the green point is equal to the term of the blue point. According to Equation (2), the ridge-feature value of the green point is therefore larger than that of the blue point.

To increase the difference between the ridge-feature values of a ridge point and a non-ridge point, we add a punishment term to Equation (2), as shown in Equation (3). At a ridge point the punishment term equals zero, whereas at a non-ridge point it is larger than zero.

We simplify Equation (3) to obtain the following ridge detector mathematical model:

According to Equation (4), we calculate the row column ridge-feature value for each pixel , which is shown in matrix form:

We redefine ridge-feature map :

aerial view map :

and one-dimensional ridge filters and :

where denotes half the number of the lane-division line’s transverse pixels.

According to Equations (6)–(9), we simplify Equation (5):

According to Equation (10), we can convert the aerial view map to ridge-feature map .

We obtain the normalized ridge-feature map by Equation (11).
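A minimal sketch of the ridge detector is given below, under an assumed reading of Equations (2)–(11) that matches the description above: the base response is the horizontal second difference 2A(i,j) - A(i,j-w) - A(i,j+w), the punishment term is |A(i,j-w) - A(i,j+w)| (zero when both neighbours lie outside the marking), and the result is min-max normalized. These filter forms and the name `ridge_map` are assumptions; the code evaluates the two one-dimensional filter responses directly instead of calling a convolution routine.

```python
def ridge_map(aerial, w):
    """Normalised ridge-feature map of a grey aerial-view image (list of rows).

    Assumed per-pixel response (w = half the marking width in pixels):
        R(i, j) = [2A(i,j) - A(i,j-w) - A(i,j+w)] - |A(i,j-w) - A(i,j+w)|
    i.e. a 1-D second-difference filter minus the absolute output of a 1-D
    difference (punishment) filter, both applied along image rows.
    Border pixels without both neighbours keep a raw response of 0.
    """
    rows, cols = len(aerial), len(aerial[0])
    raw = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        a = aerial[i]
        for j in range(w, cols - w):
            left, right = a[j - w], a[j + w]
            second_diff = 2.0 * a[j] - left - right  # filter [-1, 0.., 2, ..0, -1]
            punishment = abs(left - right)           # |filter [1, 0.., 0, ..0, -1]|
            raw[i][j] = second_diff - punishment
    lo = min(min(r) for r in raw)
    hi = max(max(r) for r in raw)
    if hi == lo:  # flat image: no ridges
        return [[0.0] * cols for _ in range(rows)]
    # Min-max normalisation to [0, 1] (assumed form of Equation (11)).
    return [[(v - lo) / (hi - lo) for v in r] for r in raw]
```

Under this reading the response simplifies to 2[A(i,j) - max(A(i,j-w), A(i,j+w))], so a pixel scores highest only when it is brighter than both neighbours at distance w, which is what separates the green ridge point from the blue off-centre point in Figure 4b.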

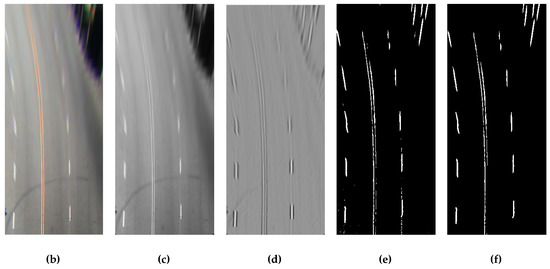

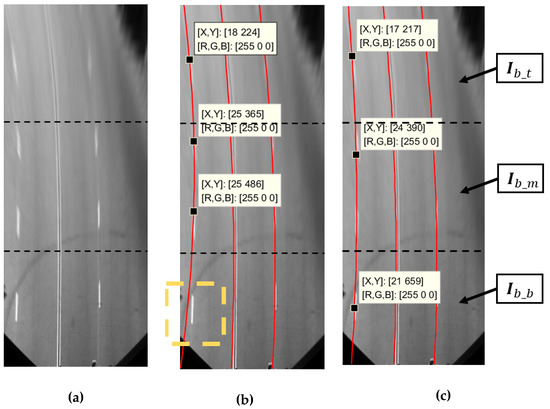

Pictorial examples of ridge-feature extraction are shown in Figure 5.

Figure 5.

Ridge-feature extraction illustration. (a) Original map . (b) Aerial view map . (c) Gray map. (d) Ridge map . (e) Ridge binary map . (f) Map after removing areas with fewer than 30 pixels.

describes the likelihood that a pixel belongs to the ridge point set; we use the value of as the confidence level for the regional G-RANSAC algorithm in Section 3.4.

We then compute the histogram of and take the value corresponding to its highest bin as the threshold to convert into the binary ridge image . Based on the experimental results, morphological processing is applied to image to remove areas with fewer than 30 pixels.
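The thresholding and small-area removal can be sketched as follows. Only the 30-pixel minimum area comes from the text; the number of histogram bins, the use of the upper edge of the most populated bin as the threshold, and 8-connectivity for the area filter are assumptions, and `binarize_ridge_map` is a hypothetical name.

```python
from collections import deque


def binarize_ridge_map(r_norm, bins=32, min_area=30):
    """Threshold a normalised ridge map at its histogram peak, then drop small blobs."""
    rows, cols = len(r_norm), len(r_norm[0])
    # Histogram of normalised ridge values in [0, 1].
    counts = [0] * bins
    for row in r_norm:
        for v in row:
            counts[min(int(v * bins), bins - 1)] += 1
    peak = max(range(bins), key=counts.__getitem__)
    thr = (peak + 1) / bins  # upper edge of the most populated (background) bin
    binary = [[1 if v > thr else 0 for v in row] for row in r_norm]

    # Remove 8-connected components with fewer than min_area pixels.
    seen = [[False] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            if not binary[i][j] or seen[i][j]:
                continue
            comp, queue = [], deque([(i, j)])
            seen[i][j] = True
            while queue:
                a, b = queue.popleft()
                comp.append((a, b))
                for da in (-1, 0, 1):
                    for db in (-1, 0, 1):
                        na, nb = a + da, b + db
                        if (0 <= na < rows and 0 <= nb < cols
                                and binary[na][nb] and not seen[na][nb]):
                            seen[na][nb] = True
                            queue.append((na, nb))
            if len(comp) < min_area:
                for a, b in comp:
                    binary[a][b] = 0
    return binary, thr
```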

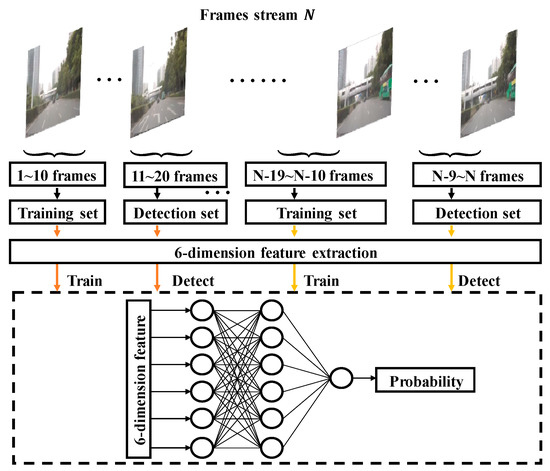

3.3. 6-Dimensional Feature Extraction and Retraining a BP Neural Network for Removing Noise

In this section, we propose an adaptable classification method for the binary ridge-feature image to discriminate noise from ridge points. Noise points comprise object noise points and imaging noise points. Object noise is caused by signs on the ground, tree shadows or other vehicles; therefore, it appears in particular areas. We remove object noise points using pixel position information according to the lane-division line model. Imaging noise points are caused by the camera sensor and are randomly distributed; each occurrence is isolated and accidental. Therefore, we remove imaging noise points by counting the surrounding feature points and computing their frequency over 10 consecutive frames. The number of frames affects the real-time performance and adaptability of the neural network; the value of 10 was selected based on experimental results.

A six-dimensional feature is proposed for the pixel at row column to discriminate between noise and ridge points, as follows:

where stands for the binary ridge-feature image; denotes the element at row column of ; , and denote the convolution feature matrices; is a square matrix of order whose elements all equal 1; is the row column element of the convolution feature matrix ; is the row column element of the convolution feature matrix ; is the row column element of the convolution feature matrix ; and represents the frequency of pixel within 10 frames.
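A sketch of the feature vector for one pixel follows. It assumes that the three convolution features are sums of the binary ridge image over all-ones 3 × 3, 7 × 7 and 11 × 11 windows with zero padding, and that the frequency feature counts how many of the last 10 binary frames have the pixel set; whether the paper normalizes these values is not stated. The helper names are hypothetical.

```python
def box_sum(binary, i, j, k):
    """Sum of a k x k window of a binary image centred at (i, j), zero-padded."""
    h = k // 2
    rows, cols = len(binary), len(binary[0])
    total = 0
    for a in range(max(0, i - h), min(rows, i + h + 1)):
        for b in range(max(0, j - h), min(cols, j + h + 1)):
            total += binary[a][b]
    return total


def six_dim_feature(history, i, j):
    """Feature [abscissa, ordinate, 3x3 sum, 7x7 sum, 11x11 sum, frequency].

    `history` is a list of binary ridge frames; the last one is the current frame.
    """
    current = history[-1]
    freq = sum(frame[i][j] for frame in history[-10:])  # occurrences in last 10 frames
    return [j, i,                       # abscissa = column, ordinate = row
            box_sum(current, i, j, 3),
            box_sum(current, i, j, 7),
            box_sum(current, i, j, 11),
            freq]
```

A ridge pixel on a continuous marking gets large window sums and a high frequency, whereas an isolated imaging-noise pixel gets sums near 1 and a frequency near 1, which is the contrast the classifier relies on.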

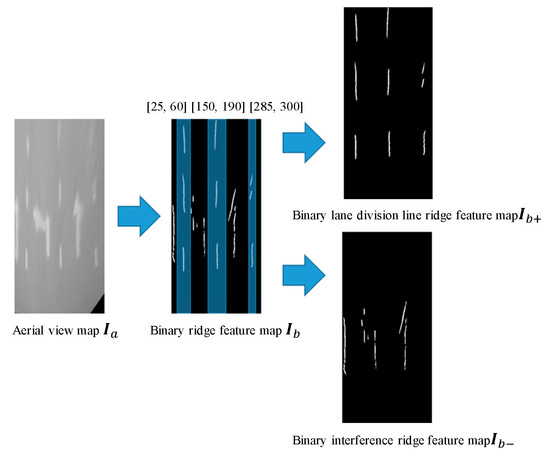

According to the lane-division line model, the binary ridge image can be divided into two sets. The ridge point set is expressed as , in which the abscissa satisfies , with bounds obtained from the experimental results in Figure 6. However, constant bounds are ineffective when the vehicle changes lanes; variable bounds could handle this scenario and will be explored in future work. The noise point set is expressed as . Point set is processed by a well-trained BP neural network and further divided into a ridge point subset and a non-ridge point subset . The ridge subset is then fitted to the line.

Figure 6.

Pictorial example for dividing binary ridge map .

To improve detection robustness, we retrain the BP neural network every 10 frames with a positive sample set and negative sample sets and . Cross-entropy is used as the loss function, and the sigmoid function is adopted as the activation function. The structure of the BP neural network is shown in Figure 7.

Figure 7.

Retraining the backpropagation (BP) neural network every 10 frames.
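A minimal three-layer network (input, sigmoid hidden layer, sigmoid output) trained on the cross-entropy loss is sketched below. The hidden-layer size, learning rate, epoch count, weight initialization, per-sample gradient descent, and warm-starting each 10-frame retraining from the previous weights are assumptions; the text specifies only the three-layer structure, the sigmoid activation, the cross-entropy loss and the 10-frame retraining period. Features are assumed to be scaled to roughly [0, 1] before training, since raw pixel coordinates would saturate the sigmoids.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class BPNet:
    """Three-layer BP network: inputs -> sigmoid hidden layer -> sigmoid output."""

    def __init__(self, n_in=6, n_hidden=8, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                   for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = sigmoid(sum(w * hk for w, hk in zip(self.w2, h)) + self.b2)
        return h, y

    def retrain(self, samples, labels, lr=0.5, epochs=200):
        """Continue training from the current weights on one 10-frame sample set.

        labels: 1 for ridge (positive) samples, 0 for noise (negative) samples.
        """
        for _ in range(epochs):
            for x, t in zip(samples, labels):
                h, y = self.forward(x)
                d_out = y - t  # cross-entropy gradient w.r.t. output pre-activation
                for k, hk in enumerate(h):
                    # Back-propagate through w2[k] before updating it.
                    d_hid = d_out * self.w2[k] * hk * (1.0 - hk)
                    self.w2[k] -= lr * d_out * hk
                    for m, xm in enumerate(x):
                        self.w1[k][m] -= lr * d_hid * xm
                    self.b1[k] -= lr * d_hid
                self.b2 -= lr * d_out

    def predict(self, x):
        """Return 1 (ridge) if the output probability is at least 0.5, else 0 (noise)."""
        return 1 if self.forward(x)[1] >= 0.5 else 0
```

Calling `retrain` on each new 10-frame sample set, rather than building a fresh `BPNet`, is what lets the classifier track gradual changes in illumination and pavement between retrainings.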

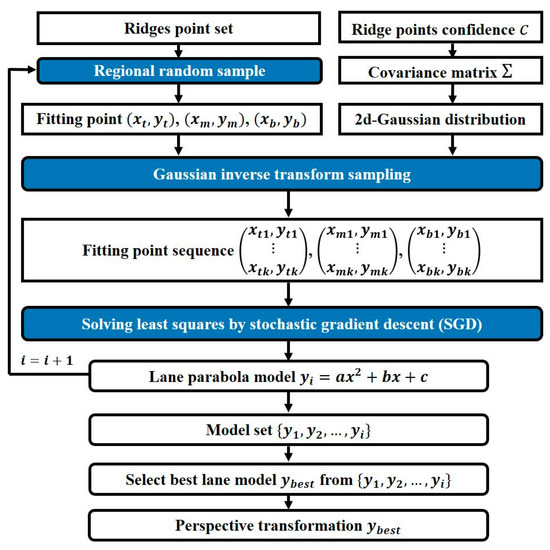

3.4. Regional G-RANSAC

The RANSAC algorithm is applied to fit a point set that contains a number of noise points [43]. However, random sampling from the whole point set is not a good strategy for lane model fitting. As shown in Figure 8, we improve the RANSAC algorithm by considering ridge point confidence and sampling areas. The binary ridge-feature map is divided into three areas , and , as shown in Figure 9a. We randomly select one ridge point from each area, denoted , and , respectively. As Figure 9c shows, this improves fitting efficiency compared with Figure 9b.

Figure 8.

Regional Gaussian distribution random sample consensus (G-RANSAC) lane fitting block diagram.

Figure 9.

Regional fitting-point selection illustration. (a) Aerial map. (b) Fitting points selected randomly from the whole map. (c) Fitting points selected randomly from three areas.
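The regional selection step can be sketched as follows, assuming that the three areas are equal-height horizontal bands of the aerial-view map and that ridge points are given as (row, column) pairs; the actual area boundaries in Figure 9a may differ, and `pick_regional_points` is a hypothetical name.

```python
import random


def pick_regional_points(points, height, rng=None):
    """Pick one ridge point from each of three equal-height horizontal bands.

    points: list of (row, col) ridge pixels; height: number of rows in the map.
    Returns the picks ordered from the top band to the bottom band, or None if
    a band contains no ridge points.
    """
    rng = rng or random.Random()
    bands = [[], [], []]
    for r, c in points:
        bands[min(3 * r // height, 2)].append((r, c))
    if any(not band for band in bands):
        return None  # the caller can skip this RANSAC iteration
    return [rng.choice(band) for band in bands]
```

Drawing one point per band spreads each hypothesis along the whole lane, which keeps a quadratic fit well conditioned, whereas sampling from the whole map can return three points clustered in one part of the lane.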

This feature-point selection method is suitable for lane fitting, but noise points may still be selected. Therefore, we assume that the selected ridge point at row , column , with coordinate , , follows a two-dimensional Gaussian distribution whose probability density function is defined as follows:

where is the mean vector, and and are elements of the covariance matrix . We assume that the variables and are uncorrelated; hence, the covariance matrix is defined as .
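For reference, with mean vector (μ_x, μ_y) and the diagonal covariance diag(σ_x², σ_y²) implied by the uncorrelated-coordinates assumption, the standard two-dimensional Gaussian density factors into two one-dimensional densities. The symbols are illustrative and may differ from the paper's notation.

```latex
p(x, y) = \frac{1}{2\pi\sigma_x\sigma_y}
  \exp\!\left(-\frac{(x-\mu_x)^2}{2\sigma_x^2}-\frac{(y-\mu_y)^2}{2\sigma_y^2}\right)
= \underbrace{\frac{1}{\sqrt{2\pi}\,\sigma_x}\,e^{-\frac{(x-\mu_x)^2}{2\sigma_x^2}}}_{p(x)}
  \cdot
  \underbrace{\frac{1}{\sqrt{2\pi}\,\sigma_y}\,e^{-\frac{(y-\mu_y)^2}{2\sigma_y^2}}}_{p(y)}
```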

The covariance matrix describes the uncertainty about whether the selected point belongs to the ridge point set. If the selected point is in the ridge point set, it is a fitting target, so we set the covariance matrix to a small value to limit the resampling range of the inverse transformation. Otherwise, if the selected point is not in the ridge point set, we set a large covariance matrix , giving this point a chance to generate ridge points at the inverse-transformation sampling stage.

We then define the confidence level as the probability that the selected point belongs to the ridge point set. denotes the row , column element of the ridge points’ confidence matrix , which is defined in Equation (14):

where is a square matrix of order whose elements all equal 1, and stands for the normalized ridge-feature map. The covariance matrix is calculated from the confidence of the point in the binary ridge-feature map , as shown in Equation (15):

where denotes scale coefficient.
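One reading of Equations (14) and (15) that reproduces the behaviour described above (confident points get a small covariance, doubtful points a large one) is sketched below. The local-mean form of the confidence (an all-ones k × k kernel divided by the number of pixels in the window), the linear rule var = scale · (1 - c) + floor, and all names and default values are assumptions.

```python
def point_confidence(r_norm, i, j, k=3):
    """Local mean of the normalised ridge map in a k x k window (clipped at borders)."""
    h = k // 2
    rows, cols = len(r_norm), len(r_norm[0])
    window = [r_norm[a][b]
              for a in range(max(0, i - h), min(rows, i + h + 1))
              for b in range(max(0, j - h), min(cols, j + h + 1))]
    return sum(window) / len(window)


def covariance_from_confidence(conf, scale=25.0, floor=0.25):
    """Diagonal covariance whose variance shrinks as confidence grows.

    Assumed rule: var = scale * (1 - conf) + floor, so a confident ridge point
    is resampled tightly and a doubtful one is spread widely; the positive
    floor keeps the variance strictly above zero.
    """
    var = scale * (1.0 - conf) + floor
    return [[var, 0.0], [0.0, var]]
```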

In summary, the selected point defines a two-dimensional Gaussian distribution with mean vector and covariance matrix . Because the abscissa and ordinate are uncorrelated (and jointly Gaussian, hence independent), the two-dimensional Gaussian distribution can be factored into two one-dimensional Gaussian distributions, . This yields the abscissa distribution and the ordinate distribution ; their probability density functions are shown in Equations (16) and (17), respectively.

The corresponding cumulative distribution functions are shown in Equations (18) and (19).

where denotes an exponential function, denotes a scale coefficient and is the coordinate point of ridge-feature map . In Equations (18) and (19), represents the mean vector of the Gaussian distribution.

We describe Equations (18) and (19) by the error function , as shown in Equations (20) and (21), respectively.

The inverse functions of and are shown in Equations (22) and (23).

where denotes scale coefficient, and is a coordinate point of ridge-feature map , in Equations (18) and (19). denotes the mean vector of the Gaussian distribution.
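For reference, the standard relations behind Equations (18)–(23) for a one-dimensional Gaussian with mean μ and standard deviation σ are given below, where u is a uniform random number in (0, 1); the notation is illustrative.

```latex
F(x) = \frac{1}{2}\left[1 + \operatorname{erf}\!\left(\frac{x-\mu}{\sqrt{2}\,\sigma}\right)\right],
\qquad
F^{-1}(u) = \mu + \sqrt{2}\,\sigma\,\operatorname{erf}^{-1}(2u - 1), \quad u \in (0, 1).
```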

Using inverse transformation sampling [45], we obtain the selected point sequence by , where represents the number of inverse transformation samples. and are drawn from the uniform distribution , and the resulting coordinates and follow .
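Inverse-transform sampling of the k extra points around one selected point can be sketched with the standard library's `statistics.NormalDist.inv_cdf`, which evaluates the Gaussian inverse CDF. Sampling the abscissa and ordinate independently relies on the uncorrelated-coordinates assumption stated above; the name `resample_around` and the variances passed in are illustrative, and the variances are assumed to be strictly positive.

```python
import random
from statistics import NormalDist


def resample_around(point, var_x, var_y, k, rng=None):
    """Draw k points around `point` = (x, y) by inverse-transform sampling.

    Each coordinate is F^{-1}(u) for a uniform u, where F is the Gaussian CDF
    centred on the point's coordinate with the given (positive) variance.
    """
    rng = rng or random.Random()
    dist_x = NormalDist(point[0], var_x ** 0.5)
    dist_y = NormalDist(point[1], var_y ** 0.5)
    tiny = 1e-12  # inv_cdf requires 0 < u < 1, but random() can return 0.0
    out = []
    for _ in range(k):
        u1 = max(rng.random(), tiny)
        u2 = max(rng.random(), tiny)
        out.append((dist_x.inv_cdf(u1), dist_y.inv_cdf(u2)))
    return out
```

Calling it once for each of the three regionally selected points and keeping the three originals yields the 3k + 3 fitting points.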

The traditional RANSAC algorithm fits randomly selected points by least squares, with the objective function , shown in Equation (24).

where represents the number of fitting points, denotes the th fitting point, and denotes the fitting model. We define , for each , where belongs to (0,]. In this paper, we use the stochastic sub-gradient descent (SGD) method [46] to solve Equation (24) because the selected point sequence becomes long when the inverse-transform sampling parameter is large. The partial derivatives of the objective function with respect to , and are as follows:

where represents the number of randomly selected points and represents the number of inverse transform sampling.

The stochastic sub-gradient descent for solving the least-squares problem receives four input parameters: (i) the step size , (ii) the number of iterations , (iii) the number of examples used to calculate the sub-gradient , and (iv) the fitting point sequence . Algorithm 1 describes the proposed method in pseudocode.

| Algorithm 1 The stochastic sub-gradient descent for solving least-squares |

| 1: Input: 2: Initialize: , , ; 3: For t = 1, 2, …, do 4: Choose , where 5: Set 6: , , 7: End for. |
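The sketch below illustrates the technique of Algorithm 1 under stated assumptions: mini-batch stochastic gradient descent on the mean squared error of a quadratic lane model x = a·y² + b·y + c (Section 3.5 treats lanes as second-degree polynomials), with zero initialization, a constant step size, and a fixed number of points drawn per iteration. For the squared loss the sub-gradient is simply the gradient. The name `sgd_quadratic_fit` and the default values are hypothetical, not the paper's settings.

```python
import random


def sgd_quadratic_fit(points, eta=0.3, iters=20000, batch=16, seed=0):
    """Fit x = a*y**2 + b*y + c to (x, y) points by mini-batch SGD.

    Minimises the mean squared residual; y is assumed to be scaled to about
    [0, 1] (with raw pixel rows the y**2 term makes a fixed step unstable).
    """
    rng = random.Random(seed)
    a = b = c = 0.0                    # zero initialisation
    for _ in range(iters):
        sample = rng.sample(points, min(batch, len(points)))
        ga = gb = gc = 0.0
        for x, y in sample:
            r = a * y * y + b * y + c - x  # residual of the current model
            ga += 2.0 * r * y * y          # d(r**2)/da
            gb += 2.0 * r * y              # d(r**2)/db
            gc += 2.0 * r                  # d(r**2)/dc
        n = len(sample)
        a -= eta * ga / n
        b -= eta * gb / n
        c -= eta * gc / n
    return a, b, c
```

Each iteration touches only `batch` points, so its cost does not grow with the length of the resampled point sequence, which is the motivation given above for using SGD when the inverse-transform sampling parameter is large.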

3.5. Lane Departure Index (LDI)

The true-positive rate (TPR) and false-positive rate (FPR) are common indices in lane detection [16]; they measure the proportions of correctly and wrongly predicted lane detections relative to the total, as follows:

where NTP (number of true positives) is the number of correctly predicted lane detections; NLT (number of lanes positive) is the number of real lanes in the test video; and NFP (number of false positives) is the number of wrongly predicted lane detections.
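A common form of these indices in the lane-detection literature normalizes both counts by the number of real lanes, TPR = NTP/NLT and FPR = NFP/NLT. The sketch assumes this form; `lane_rates` is a hypothetical name.

```python
def lane_rates(n_tp, n_fp, n_lt):
    """Return (TPR, FPR), assuming both counts are normalised by NLT."""
    if n_lt <= 0:
        raise ValueError("NLT must be positive")
    return n_tp / n_lt, n_fp / n_lt
```

For example, 190 correct and 6 wrong detections over 200 real lanes give a TPR of 0.95 and an FPR of 0.03.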

However, for a lane-division line, the TPR and FPR judgments are binary: a prediction is either correct or wrong. Predicting a lane-division line inaccurately is very dangerous for an autopilot system, so an index is needed that describes the degree of departure between the predicted lane and the real lane in a single frame.

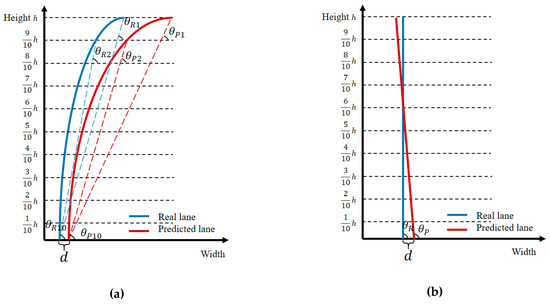

Herein, we propose a new index, termed the lane departure index (LDI), to describe the degree of departure. The curved lane model is simplified to ten straight lines defined by ten points on the curved lane, as shown in Figure 10a and defined in Equation (30).

where denotes the abscissa error between the th real lane and the th predicted lane of the th frame when the ordinate is zero; to are the weights of the ten simplified straight lines; denotes the first simplified straight line of the th real lane in the th frame; denotes the first simplified straight line of the th predicted lane in the th frame; denotes the second simplified straight line of the th real lane in the th frame; denotes the second simplified straight line of the th predicted lane in the th frame; denotes the tenth simplified straight line of the th real lane in the th frame; denotes the tenth simplified straight line of the th predicted lane in the th frame; denotes the video frame number; and denotes the number of real lane-division lines in the th frame.

Figure 10.

Illustration of lane departure index (LDI). (a) Curved lane in an aerial view map. (b) Straight lane in aerial view map.

As shown in Figure 10b, a straight lane is a special case of a second-degree polynomial lane, so Equation (30) also holds for straight lanes, in which case the terms are equal to each other.

4. Results

In this section, four experiments are carried out. In Section 4.1, the operating speed of the proposed ridge-feature extraction method is compared with that of the ridge-feature model [43], and the computational complexity is analyzed. In Section 4.2, the median filter, the regional noise-removal method and the BP neural network are applied to remove noise, and their effectiveness is verified. In Section 4.3, the effectiveness of the regional G-RANSAC fitting method is verified by comparison with the traditional RANSAC and the Hough transform. Lastly, in Section 4.4, the complete proposed method is compared with other lane detection methods to verify its improvement in challenging scenarios.

4.1. An Analysis of the Ridge-Feature Extraction Method’s Operating Speed

The traditional ridge-feature extraction method [43] contains two matrix convolution operations, two matrix differential operations, three matrix point product operations and a divergence calculation. The proposed method in Equation (10) contains two matrix convolution operations and a matrix subtraction operation.

We compared the running speed of the traditional ridge-feature extraction method and the proposed method. The software platform was MATLAB R2018b, and the hardware platform comprised an Intel Core i5-4570 CPU (3.20 GHz), 32 GB of memory and an NVIDIA GeForce GTX 1080 Ti GPU. The testing videos are listed in Table 3.

As shown in Table 1, compared with method [43], the proposed method runs 2.56, 2.70, 2.55 and 2.50 times faster in the four testing scenario videos, respectively.

Table 1.

Comparison of the operating speeds of the traditional method and the proposed method.

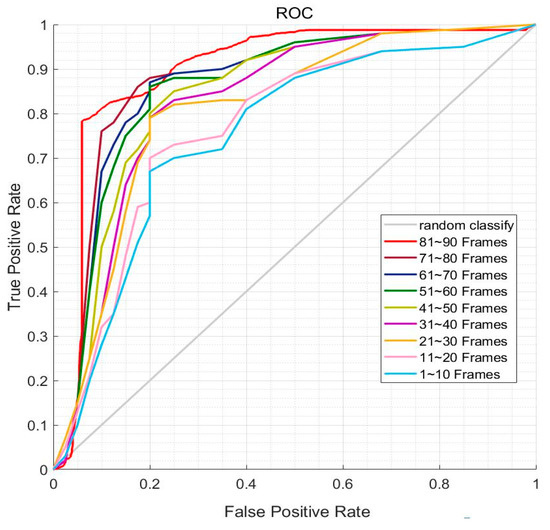

4.2. A BP Neural Network Applied to Remove Noise

We chose 100 frames from a driving video, retrained the BP neural network on every 10 frames, and tested the neural network on the following 10 frames. The neural network’s receiver operating characteristic (ROC) curves are shown in Figure 11. The nine ROC curves in Figure 11 correspond to the nine successive 10-frame retrainings of the neural network, and its performance improves with retraining. More specifically, the classification performance of the BP neural network on the next 10 frames is listed in Table 2. The results show that the accuracy of the neural network is 0.830 at the beginning and increases to 0.889 after nine retrainings, which verifies that the neural network adapts to the current detection scenario.

Figure 11.

Receiver operating characteristic (ROC) curve of the BP neural network.

Table 2.

Result of the BP neural network for different frames.

To measure the improvement gained by applying the BP neural network to noise removal, we compared the proposed method with a hybrid median filter [47] and the regional noise-removal method [48] in four different types of testing scenarios, listed in Table 3: normal illumination and good pavement; intense illumination and shadow interruption; normal illumination and sign-on-the-ground interruption; and poor illumination and vehicle interference. The traditional RANSAC algorithm is used for fitting.

Table 3.

Different testing video scenarios.

As shown in Table 4, in the normal illumination and good pavement scenario, the performance of the proposed method is similar to that of the hybrid median filter and regional noise removal, but the proposed method outperforms both under abnormal illumination and bad pavement conditions. Furthermore, the proposed method achieves more stable and better performance across scenarios. Because this experiment aims to verify the effectiveness of the proposed de-noising method, a general fitting method, the traditional RANSAC algorithm, is applied to fit the ridge-feature points for all results in Table 4; results in bold indicate the best performance in the corresponding scenario.

Table 4.

Comparative results of noise removing methods.

4.3. Regional G-RANSAC Fitting Method Verification

The experimental parameters are as follows: scale coefficient , fitting point number , inverse transformation sampling number , RANSAC iteration number 60, SGD step size , SGD iterations , and the number of examples used to calculate the sub-gradient .

To compare the proposed method with the Hough transform and the traditional RANSAC algorithm, we tested it in four different types of testing scenarios: normal illumination and good pavement; intense illumination and shadow interruption; normal illumination and sign-on-the-ground interruption; and poor illumination and vehicle interference (as listed in Table 5). The de-noising method described in Section 3.3 is applied in all experiments in Table 5; results in bold indicate the best performance in the corresponding scenario.

Table 5.

Comparative results of fitting methods in different test scenarios.

The proposed method achieved 99.02%, 96.92%, 96.65% and 91.61% TPR in the four testing scenarios, respectively. In addition, the LDI of the proposed method was 20.55% and 26.48% higher than those of the Hough transform and the traditional RANSAC, respectively, in normal illumination and good pavement conditions; 46.41% and 35.66% higher in the intense illumination and shadow interruption scenario; 68.51% and 13.80% higher in the normal illumination and sign-on-the-ground interruption scenario; and 74.78% and 33.16% higher in the poor illumination and vehicle interference scenario.

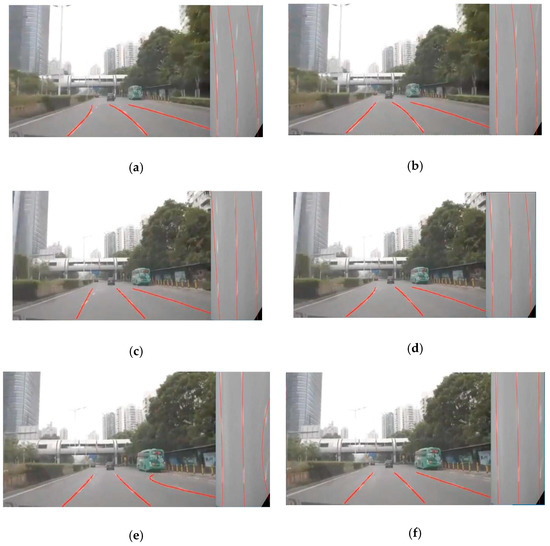

Figure 12 compares traditional RANSAC with regional G-RANSAC. Figure 12a,c,e show the fitting results of the traditional RANSAC algorithm, and Figure 12b,d,f show those of the regional G-RANSAC. As shown in Figure 12a–d, the traditional RANSAC algorithm selects fitting points from the whole ridge-feature map, which increases the probability of selecting noise points; the proposed method instead selects fitting points from three divided areas to improve fitting effectiveness. As shown in Figure 12e,f, the traditional RANSAC algorithm misses key feature points, so its fit in Figure 12e is poor, whereas the proposed method models the feature points with Gaussian distributions based on their ridge confidence, which improves the robustness of lane fitting.

Figure 12.

The comparative result of traditional RANSAC and regional G-RANSAC. (a,c,e) fitting results of the traditional RANSAC, (b,d,f) fitting results of the regional G-RANSAC.

4.4. Lane Detection Framework Verification Experiment

Here, tests of the complete proposed lane detection framework are presented. We compared it with recent lane detection methods, listed in Table 6, to verify its effectiveness. The testing videos are the same as above and cover normal illumination and good pavement; intense illumination and shadow interruption; normal illumination and sign-on-the-ground interruption; and poor illumination and vehicle interference.

Table 6.

Comparison of lane detection methods.

As shown in Table 7 (results in bold indicate the best performance in the corresponding scenario), the proposed method performs better than method 1 in all four types of testing scenario videos; the improvement is significant in scenarios with occlusion interruption, including sign interruption and vehicle interruption. Method 1, which fits lanes with the Hough transform, achieved 98.13% TPR and 64.10 LDI in the normal illumination and good pavement scenario, but only 82.75% TPR with 30.32 LDI and 85.14% TPR with 33.17 LDI in the sign interruption and vehicle interference scenarios, respectively. These results indicate that the Hough transform performs poorly in occlusion scenarios.

Table 7.

Comparative results of lane detection methods.

Method 3, which is based on vehicle trajectories, performed poorly, achieving 50.64%, 48.81%, 50.37% and 47.32% TPR and 30.94, 21.96, 29.40 and 27.16 LDI in the four types of testing scenarios, respectively. In addition, method 3 fails to detect lane-division-lines in some situations, for example, when there is no preceding vehicle or when the preceding vehicle changes lanes. However, from scenario 1 to scenario 3, the TPR of method 3 dropped by only 0.27 percentage points, whereas that of the proposed method dropped by 2.37 percentage points. This is because method 3 relies on vehicle trajectories rather than on the lane-division lines themselves, so its fit is not affected by sign markings on the ground.

The proposed method performs satisfactorily in all four types of testing scenarios, achieving 99.02%, 96.92%, 96.65% and 91.61% TPR and 66.16, 54.85, 55.98 and 52.61 LDI, respectively. These results show that the proposed method remains effective in challenging scenarios.
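The relative improvements quoted in the Conclusions can be checked against the method 1 figures given above. The short Python sketch below assumes that "improvement" means the relative change (new − old)/old × 100; the dictionary names are illustrative only. Under that assumption it reproduces 0.91% and 3.21% (scenario 1), 84.63% LDI (scenario 3), and 7.60% and 58.61% (scenario 4). For scenario 3 TPR it gives 16.80% rather than 10.57%, so that figure presumably compares against a different method in Table 7.

```python
# TPR (%) and LDI values quoted in the text for scenarios 1, 3 and 4
PROPOSED = {1: (99.02, 66.16), 3: (96.65, 55.98), 4: (91.61, 52.61)}
METHOD_1 = {1: (98.13, 64.10), 3: (82.75, 30.32), 4: (85.14, 33.17)}


def relative_gain(new: float, old: float) -> float:
    """Relative improvement of `new` over `old`, in percent."""
    return 100.0 * (new - old) / old


for s in sorted(PROPOSED):
    (tpr_p, ldi_p), (tpr_m, ldi_m) = PROPOSED[s], METHOD_1[s]
    print(f"scenario {s}: TPR {relative_gain(tpr_p, tpr_m):+.2f}%, "
          f"LDI {relative_gain(ldi_p, ldi_m):+.2f}%")
```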

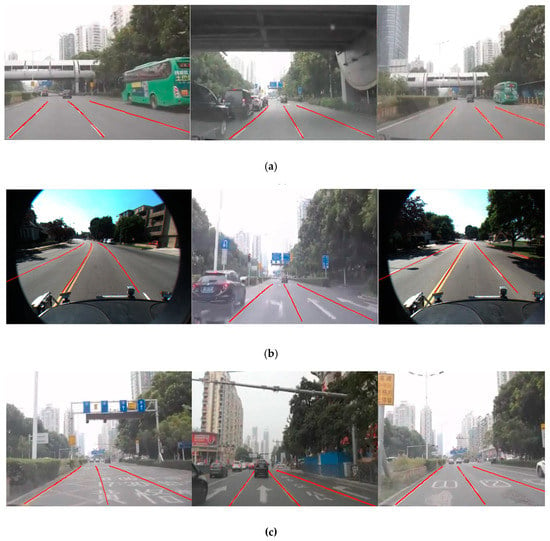

In Figure 13a, the good lighting and road conditions leave few noise points in the ridge feature map, so the detection is good. In Figure 13b, strong lighting causes shadow interruption, yet the proposed method removes the shadow noise points and fits the lane-division line well. In Figure 13c, the sign on the ground is the main interference, but the lane-division line model and the BP neural network can discriminate ridge pixel points from sign pixel points, which yields a good fit. In Figure 13d, the illumination is poor, vehicle interference generates many noise points, and key ridge points are missing; however, thanks to the G-RANSAC algorithm, the proposed method can still fit the lane-division line from a small number of ridge points.

Figure 13.

Lane detection by the proposed method in four types of scenarios. (a) Scenario 1 with normal illumination and good pavement. (b) Scenario 2 with intense illumination and shadow interruption. (c) Scenario 3 with normal illumination and sign-on-the-ground interruption. (d) Scenario 4 with poor illumination and vehicle interference.

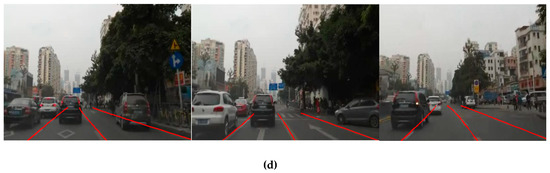

The drawbacks of the proposed method are as follows. First, because the proposed method uses a parabola as its fitting model, it cannot accurately fit a lane-division line that bends continuously, as shown in Figure 14a. Second, the proposed method fails to detect lane-division-lines when the abscissa of a line crosses the three ranges (25, 60), (150, 190) and (285, 300) at the same time, as shown in Figure 14b. Third, the proposed method fails to detect lane-division-lines when the vehicle itself changes lanes, as shown in Figure 14c,d.
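The first limitation follows directly from the fitting model. Assume, for illustration, that each line is modeled in the aerial view as a parabola in the longitudinal coordinate $y$. This parameterization is an assumption here, not taken from the text above. Under that assumption the second derivative is the same everywhere along the line:

$$
x(y) = a y^{2} + b y + c, \qquad \frac{\mathrm{d}^{2}x}{\mathrm{d}y^{2}} = 2a .
$$

A single parabola therefore bends in only one direction, with a fixed second derivative over its whole length. It cannot follow a line whose bending direction reverses (an S-shaped curve) or whose bending changes appreciably within the field of view.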

Figure 14.

Incorrect detection with the proposed method. (a) The left lane-division line is not well fitted. (b) Detection failure of the left lane division. (c) Detection failure of the lane division. (d) The predicted left lane division does not match the real left lane-division line.

5. Conclusions

In this paper, we proposed a lane-division-lines detection method based on a ridge detector and regional G-RANSAC. The main contributions are as follows. First, we removed noise points with an adaptable neural network; the experimental results verified that it achieves better detection performance than a hybrid median filter and regional noise removal in challenging scenarios. Second, we improved the traditional RANSAC by considering the confidence levels of candidate fitting points; the experimental results indicate that regional G-RANSAC achieves better TPR and LDI than traditional RANSAC and the Hough transform in different scenarios. Last, we compared the complete proposed method with other lane detection methods on four types of testing scenario videos: normal illumination and good pavement; intense illumination and shadow interruption; normal illumination and a sign-on-the-ground interruption; and poor illumination and vehicle interference. The experimental results show that, in both normal and challenging scenarios, the proposed method achieves relative improvements of 0.91%, 9.85%, 10.57% and 7.60% in TPR and of 3.21%, 43.47%, 84.63% and 58.61% in LDI in the four types of testing scenarios compared with the other lane detection methods, especially in the sign-interference and vehicle-interference scenarios. Because the proposed method cannot adaptively separate the lane-division line regions along the abscissa, it cannot fit a lane-division line well when the line bends continuously or when the vehicle changes lanes. Variable region bounds are an effective way to solve this problem [49] and will be studied in future work.

Author Contributions

Conceptualization, Z.L. and Y.X.; methodology, X.S. and Y.X.; software, X.S.; validation, Z.L.; formal analysis, L.L.; investigation, Y.X.; resources, X.S.; data curation, X.S.; writing—original draft preparation, Z.L.; writing—review and editing, L.L.; visualization, X.W.; supervision, X.W. and J.S.; project administration, Y.X.; funding acquisition, Y.X.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 61403259; the National Natural Science Foundation of China, grant number 51577120; and the Science and Technology Research and Development Foundation of Shenzhen, grant number JCYJ20170302142107025.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hillel, A.B.; Lerner, R.; Levi, D.; Raz, G. Recent progress in road and lane detection: A survey. Mach. Vis. Appl. 2014, 25, 727–745.

- Ramer, C.; Lichtenegger, T.; Sessner, J.; Landgraf, M.; Franke, J. An adaptive, color based lane detection of a wearable jogging navigation system for visually impaired on less structured paths. In Proceedings of the 2016 6th IEEE International Conference on Biomedical Robotics and Biomechatronics (BioRob), Singapore, 26–29 June 2016; pp. 741–746.

- Dang, H.S.; Guo, C.J. Structure Lane Detection Based on Saliency Feature of Color and Direction. In Applied Mechanics and Materials; Trans Tech Publications: Zurich, Switzerland, 2014; pp. 2876–2879.

- Zhang, X.; Zhu, X. Autonomous path tracking control of intelligent electric vehicles based on lane detection and optimal preview method. Expert Syst. Appl. 2019, 121, 38–48.

- Yoo, H.; Yang, U.; Sohn, K. Gradient-enhancing conversion for illumination-robust lane detection. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1083–1094.

- Geiger, A.; Lauer, M.; Wojek, C.; Stiller, C.; Urtasun, R. 3d traffic scene understanding from movable platforms. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 1012–1025.

- Peng, Y.; Gao, H. Lane detection method of statistical Hough transform based on gradient constraint. J. Intell. Inf. Syst. 2015, 4, 40–45.

- Ma, C.; Xie, M. A method for lane detection based on color clustering. In Proceedings of the 2010 Third International Conference on Knowledge Discovery and Data Mining, Phuket, Thailand, 9–10 January 2010; pp. 200–203.

- Son, J.; Yoo, H.; Kim, S.; Sohn, K. Real-time illumination invariant lane detection for lane departure warning system. Expert Syst. Appl. 2015, 42, 1816–1824.

- Jung, H.; Min, J.; Kim, J. An efficient lane detection algorithm for lane departure detection. In Proceedings of the 2013 IEEE Intelligent Vehicles Symposium (IV), Gold Coast, Australia, 23–26 June 2013; pp. 976–981.

- Wang, J.G.; Lin, C.J.; Chen, S.M. Applying fuzzy method to vision-based lane detection and departure warning system. Expert Syst. Appl. 2010, 37, 113–126.

- Song, W.; Yang, Y.; Fu, M.; Li, Y.; Wang, M. Lane detection and classification for forward collision warning system based on stereo vision. IEEE Sens. J. 2018, 18, 5151–5163.

- Li, Q.; Zhou, J.; Li, B.; Guo, Y.; Xiao, J. Robust Lane-Detection Method for Low-Speed Environments. Sensors 2018, 18, 4274.

- Wu, C.B.; Wang, L.H.; Wang, K.C.; Technology, S.F.V. Ultra-low Complexity Block-based Lane Detection and Departure Warning System. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 582–593.

- Sun, Y.; Li, J.; Sun, Z. Multi-Stage Hough Space Calculation for Lane Markings Detection via IMU and Vision Fusion. Sensors 2019, 19, 2305.

- Lee, C.; Moon, J.H. Robust lane detection and tracking for real-time applications. IEEE Trans. Intell. Transp. Syst. 2018, 19, 4043–4048.

- Xing, Y.; Lv, C.; Wang, H.; Cao, D.; Velenis, E. Dynamic integration and online evaluation of vision-based lane detection algorithms. IET Intell. Transp. Syst. 2018, 13, 55–62.

- Zhou, S.; Jiang, Y.; Xi, J.; Gong, J.; Xiong, G.; Chen, H. A novel lane detection based on geometrical model and gabor filter. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium, San Diego, CA, USA, 21–24 June 2010; pp. 59–64.

- Wang, J.; Mei, T.; Kong, B.; Wei, H. An approach of lane detection based on inverse perspective mapping. In Proceedings of the 17th International IEEE Conference on Intelligent Transportation Systems (ITSC), Qingdao, China, 8–11 October 2014; pp. 35–38.

- Baofeng, W. Curve of Lane Line Detected method based on Linear Approximation. J. Beijing Univ. Technol. 2016, 36, 470–474.

- Küçükmanisa, A.; Akbulut, O.; Urhan, O. Robust and real-time lane detection filter based on adaptive neuro-fuzzy inference system. IET Image Process. 2019, 13, 1181–1190.

- Gupta, A.; Choudhary, A. A Framework for Camera-Based Real-Time Lane and Road Surface Marking Detection and Recognition. IEEE Trans. Intell. Veh. 2018, 3, 476–485.

- Wang, H.; Wang, Y.; Zhao, X.; Wang, G.; Huang, H.; Zhang, J. Lane Detection of Curving Road for Structural High-way with Straight-curve Model on Vision. IEEE Trans. Veh. Technol. 2019, 68, 5321–5330.

- Ozgunalp, U. Robust lane-detection algorithm based on improved symmetrical local threshold for feature extraction and inverse perspective mapping. IET Image Process. 2019, 13, 975–982.

- Cáceres Hernández, D.; Kurnianggoro, L.; Filonenko, A.; Jo, K. Real-time lane region detection using a combination of geometrical and image features. Sensors 2016, 16, 1935.

- Cao, J.; Song, C.; Song, S.; Xiao, F.; Peng, S. Lane detection algorithm for intelligent vehicles in complex road conditions and dynamic environments. Sensors 2019, 19, 3166.

- Yurtsever, E.; Yamazaki, S.; Miyajima, C.; Takeda, K.; Mori, M. Integrating driving behavior and traffic context through signal symbolization for data reduction and risky lane change detection. IEEE Trans. Intell. Veh. 2018, 3, 242–253.

- Li, J.; Mei, X.; Prokhorov, D.; Tao, D. Deep neural network for structural prediction and lane detection in traffic scene. IEEE Trans. Neural Netw. Learn. Syst. 2016, 28, 690–703.

- Nan, Z.; Wei, P.; Xu, L.; Zheng, N. Efficient lane boundary detection with spatial-temporal knowledge filtering. Sensors 2016, 16, 1276.

- Ma, Y.; Havyarimana, V.; Bai, J.; Xiao, Z. Vision-Based Lane Detection and Lane-Marking Model Inference: A Three-Step Deep Learning Approach. In Proceedings of the 2018 9th International Symposium on Parallel Architectures, Algorithms and Programming (PAAP), Taipei, Taiwan, 26–28 December 2018; pp. 183–190.

- Neven, D.; De Brabandere, B.; Georgoulis, S.; Proesmans, M.; Van Gool, L. Towards end-to-end lane detection: An instance segmentation approach. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Suzhou, China, 26–30 June 2018; pp. 286–291.

- Hoang, T.M.; Baek, N.R.; Cho, S.W.; Kim, K.W.; Park, K.R. Road lane detection robust to shadows based on a fuzzy system using a visible light camera sensor. Sensors 2017, 17, 2475.

- Chalapathy, R.; Chawla, S. Deep learning for anomaly detection: A survey. arXiv 2019, arXiv:1901.03407.

- Rashidha, R.; Simon, P. An adaptive-size median filter for impulse noise removal using neural network-based detector. Int. J. Signal Imaging Syst. Eng. 2016, 9, 305–310.

- Kim, Y.; Soh, J.W.; Cho, N.I. Adaptively Tuning a Convolutional Neural Network by Gate Process for Image Denoising. IEEE Access 2019, 7, 63447–63456.

- Kuang, X.; Sui, X.; Liu, Y.; Chen, Q.; Guohua, G. Single infrared image optical noise removal using a deep convolutional neural network. IEEE Photonics J. 2017, 10, 1–15.

- Hansen, L.K.; Salamon, P. Neural network ensembles. IEEE Trans. Pattern Anal. Mach. Intell. 1990, 12, 993–1001.

- Melo, J.; Naftel, A.; Bernardino, A.; Santos-Victor, J. Detection and classification of highway lanes using vehicle motion trajectories. IEEE Trans. Intell. Transp. Syst. 2006, 7, 188–200.

- Ren, J.; Chen, Y.; Xin, L.; Shi, J. Lane detection in video-based intelligent transportation monitoring via fast extracting and clustering of vehicle motion trajectories. Math. Probl. Eng. 2014, 2014, 156296.

- Hermosillo-Reynoso, F.; Torres-Roman, D.; Santiago-Paz, J.; Ramirez-Pacheco, J. A Novel Algorithm Based on the Pixel-Entropy for Automatic Detection of Number of Lanes, Lane Centers, and Lane Division Lines Formation. Entropy 2018, 20, 725.

- Yi, S.C.; Chen, Y.C.; Chang, C.H. A lane detection approach based on intelligent vision. Comput. Electr. Eng. 2015, 42, 23–29.

- Bertozzi, M.; Broggi, A. GOLD: A parallel real-time stereo vision system for generic obstacle and lane detection. IEEE Trans. Image Process. 1998, 7, 62–81.

- López, A.; Serrat, J.; Canero, C.; Lumbreras, F.; Graf, T. Robust lane markings detection and road geometry computation. Int. J. Automot. Technol. 2010, 11, 395–407.

- Guo, J.; Wei, Z.; Miao, D. Lane detection method based on improved RANSAC algorithm. In Proceedings of the 2015 IEEE Twelfth International Symposium on Autonomous Decentralized Systems, Taiwan, China, 25–27 March 2015; pp. 285–288.

- Miller Frederic, P.; Vandome, A.F.; Mcbrewster, J. Inverse Transform Sampling; Alphascript Publishing: Saarbrücken, Germany, 2010; Volume 2, pp. 22–24.

- Dorigo, M.; Blum, C. Ant colony optimization theory: A survey. Theor. Comput. Sci. 2005, 344, 243–278.

- Srivastava, S.; Lumb, M.; Singal, R. Improved lane detection using hybrid median filter and modified Hough transform. Int. J. Adv. Res. Comput. Sci. Softw. Eng. 2014, 4, 30–37.

- Aly, M. Real time detection of lane markers in urban streets. In Proceedings of the 2008 IEEE Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008; pp. 7–12.

- Izquierdo, A.; Lopez-Guede, J.M.; Graña, M. Road Lane Landmark Extraction: A State-of-the-art Review. In Proceedings of the International Conference on Hybrid Artificial Intelligence Systems, León, Spain, 4–6 September 2019; pp. 625–635.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).