A Cancelable Iris- and Steganography-Based User Authentication System for the Internet of Things

Abstract

1. Introduction

2. Related Work

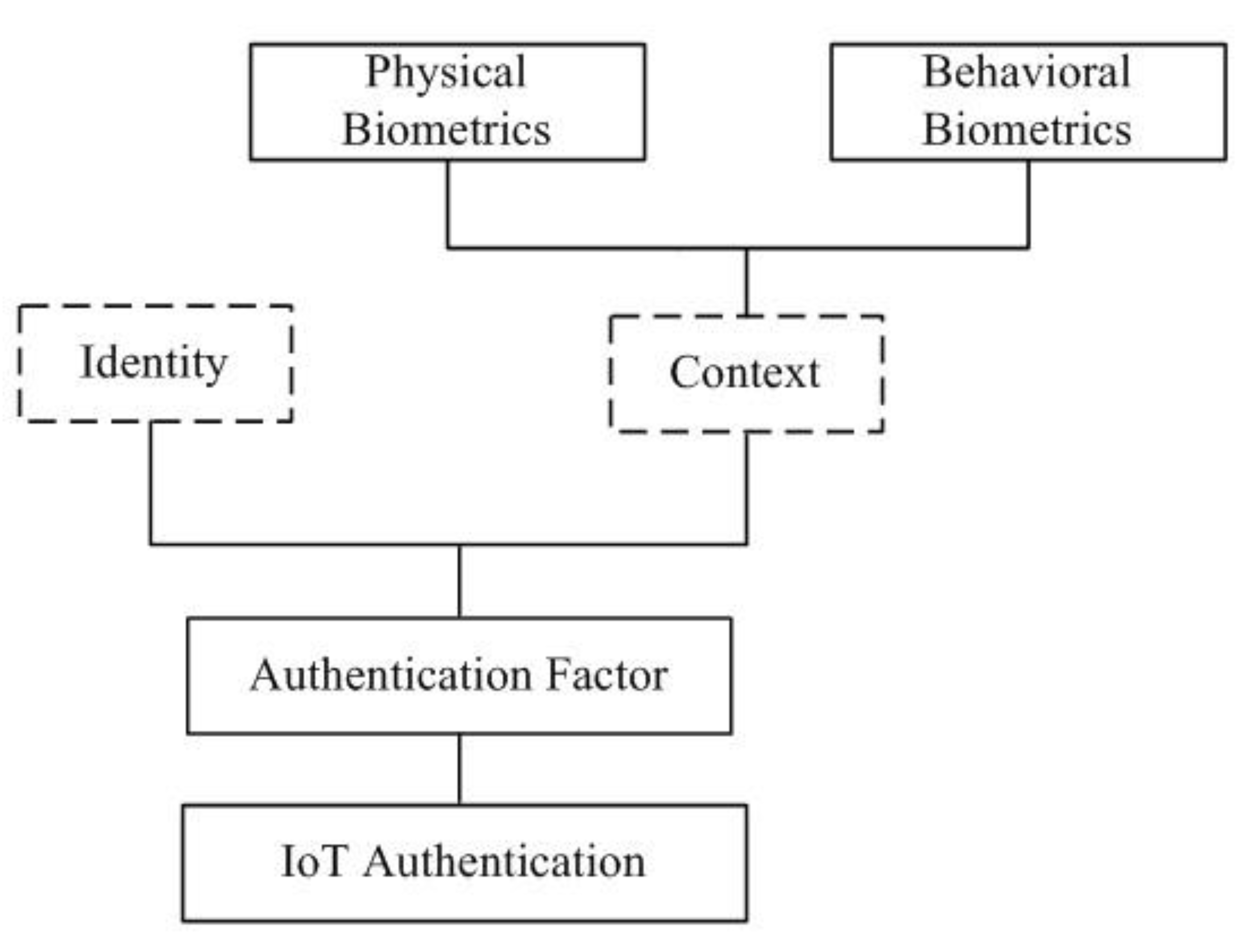

2.1. Biometric-Based IoT Networks

2.2. Cancelable Iris-Based Biometrics

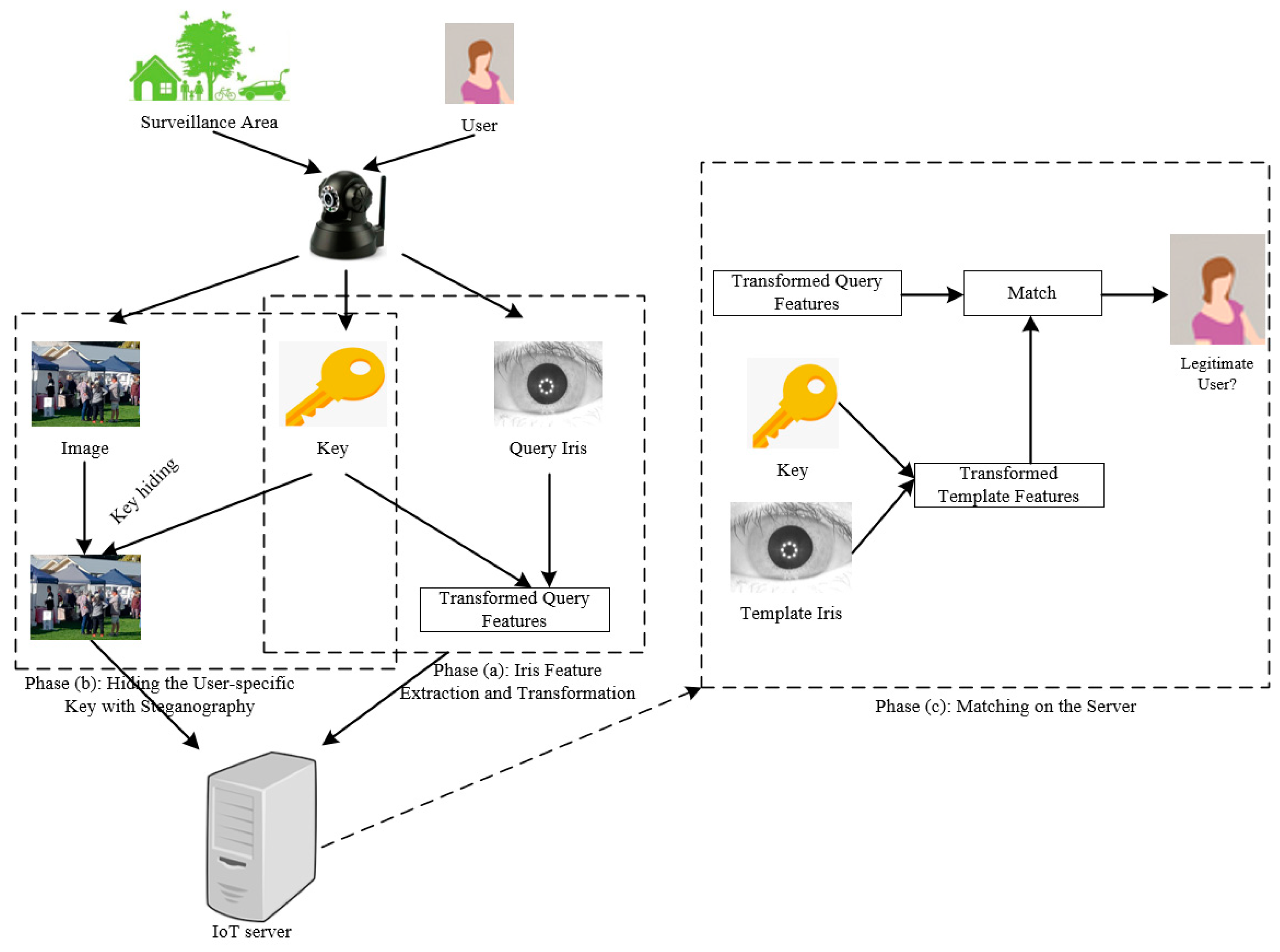

3. The Proposed Cancelable Iris- and Steganography-Based System

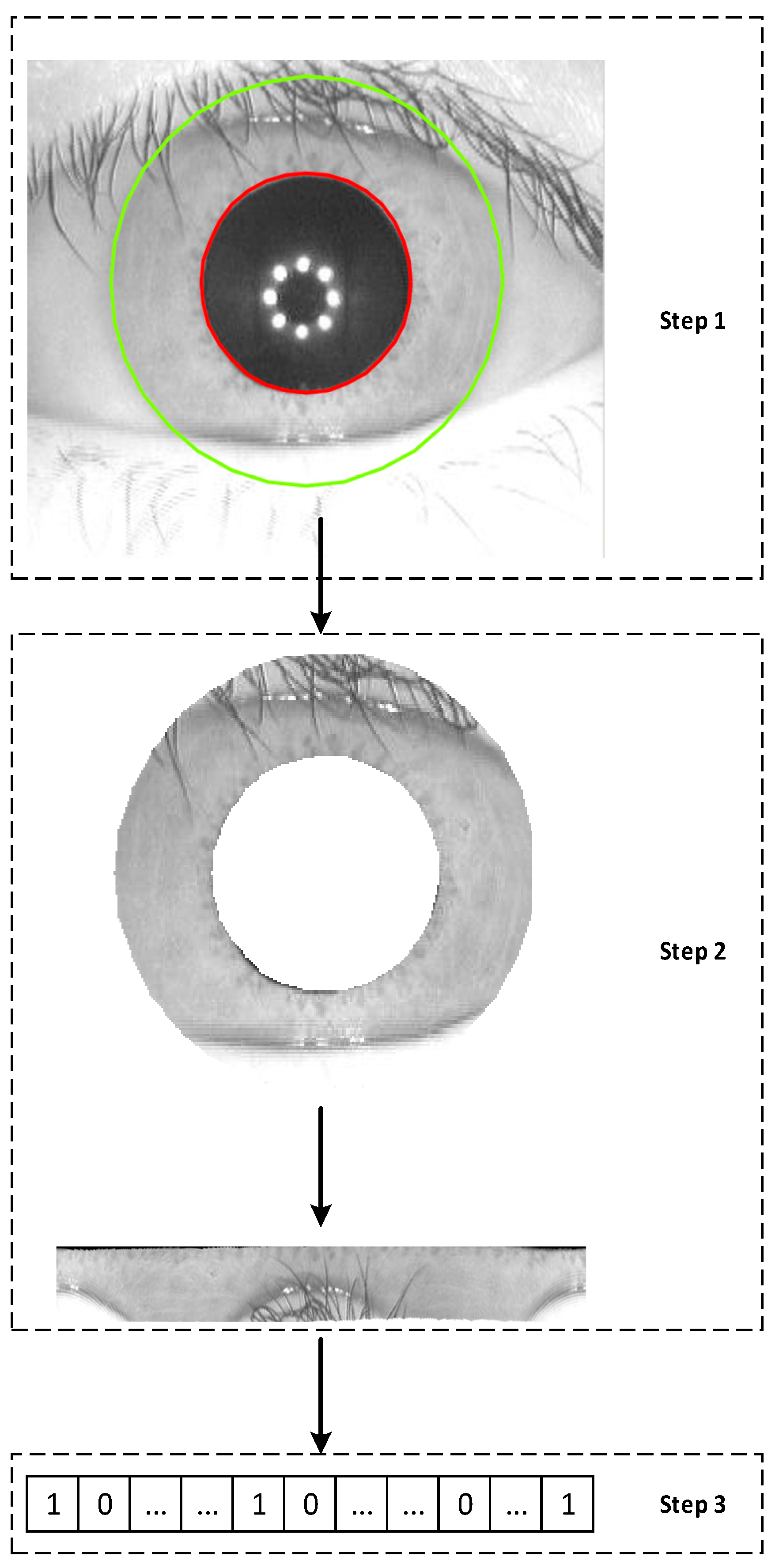

3.1. Iris Feature Extraction and Transformation

3.2. Hiding the User-Specific Key with Steganography

3.3. Matching on the Server

4. Experimental Results

4.1. Database Selection and Experimental Environment

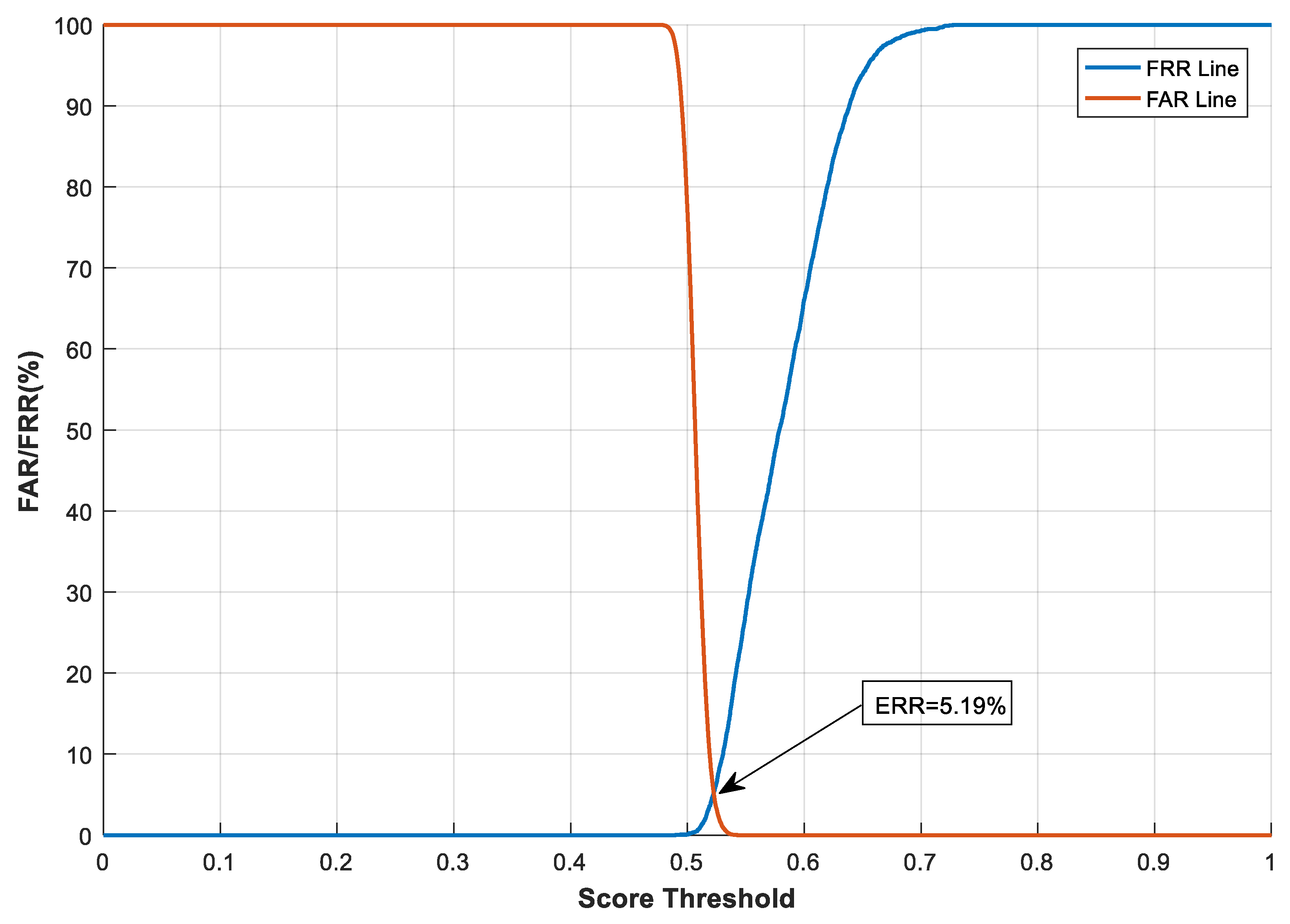

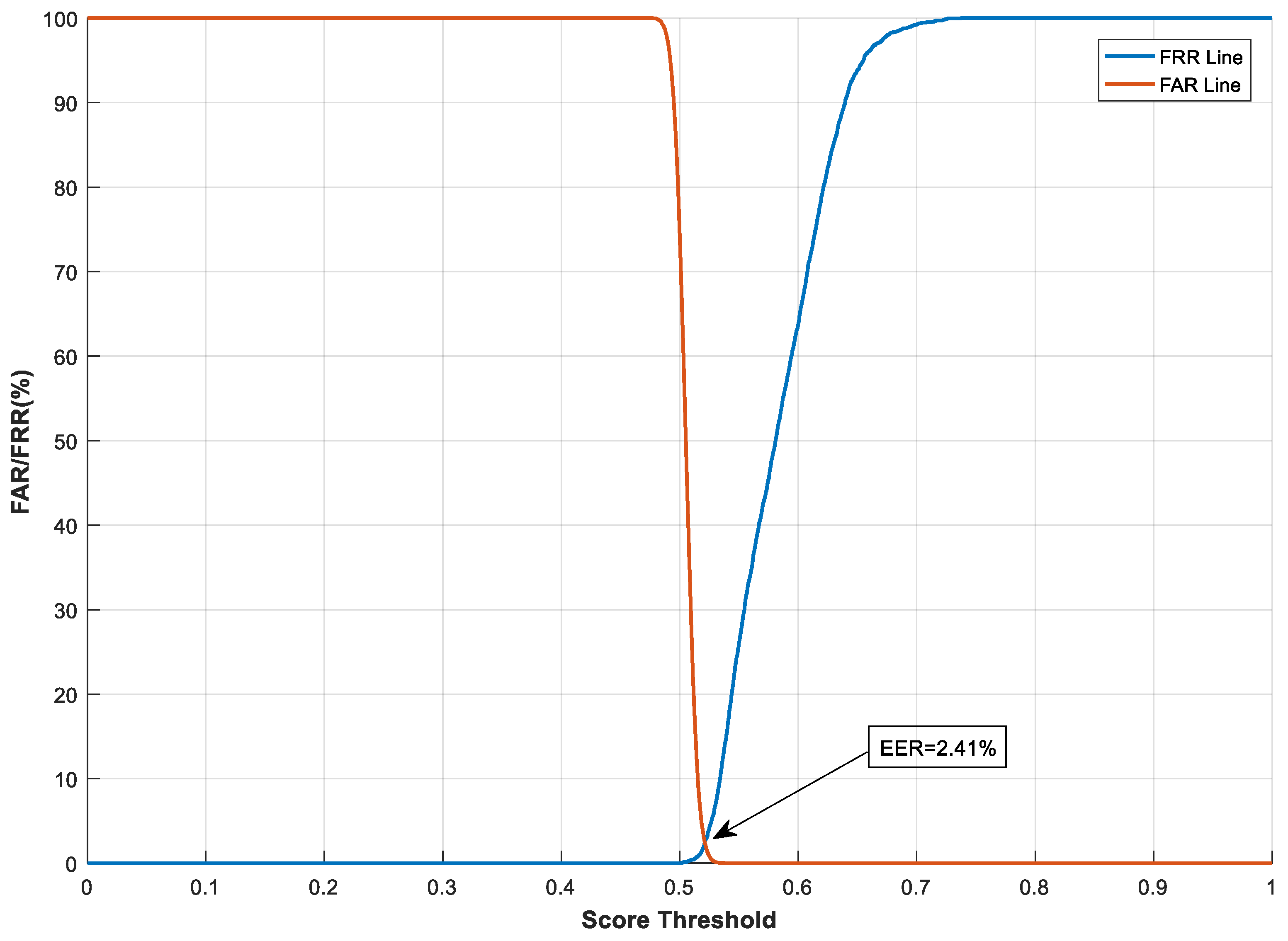

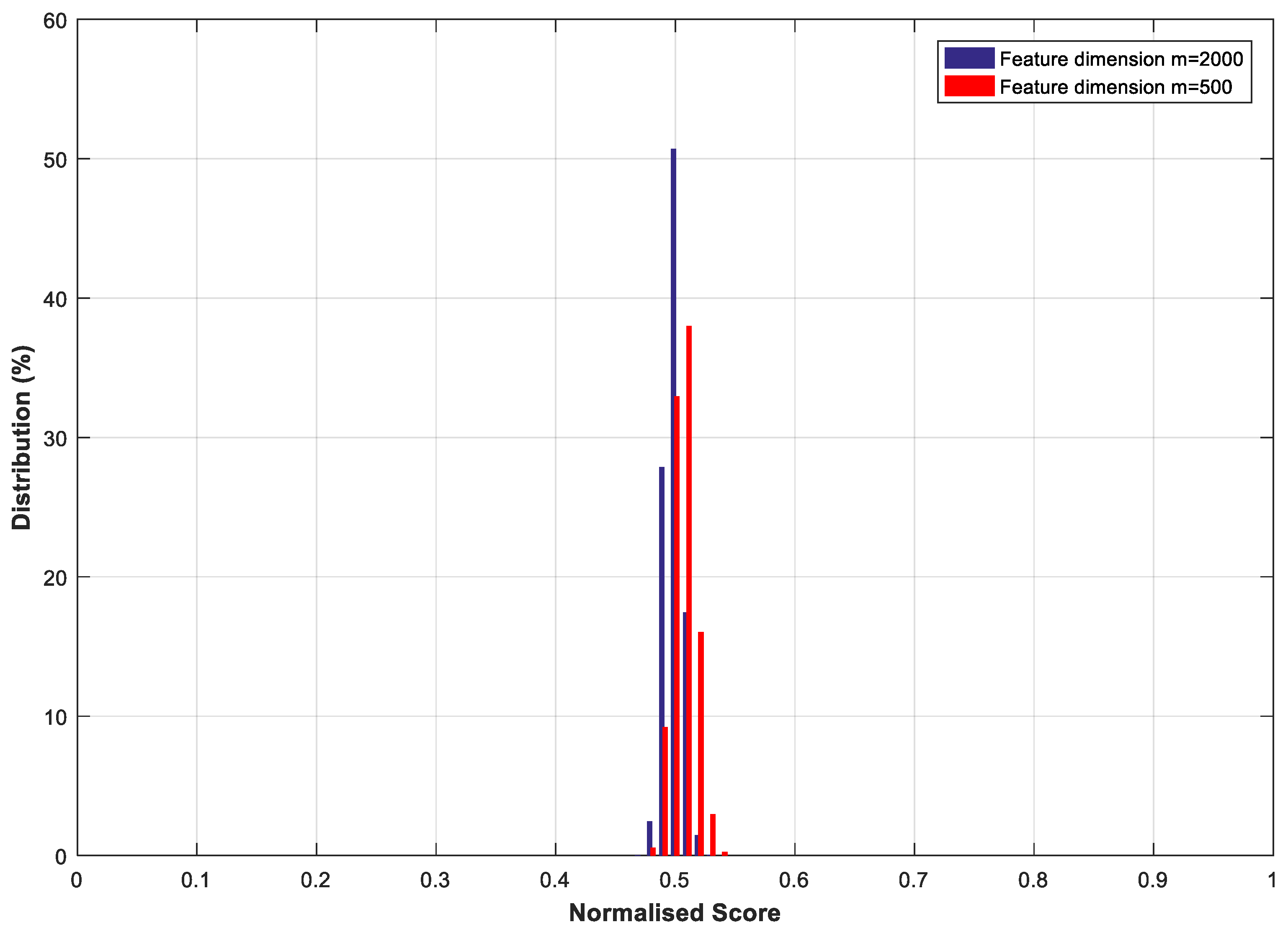

4.2. Performance Evaluation

4.2.1. The Effect of Transformation Parameters on System Performance

4.2.2. Comparison with Other Similar Systems

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Ashton, K. That ‘internet of things’ thing. RFID J. 2009, 22, 97–114.
2. Habib, K.; Torjusen, A.; Leister, W. A novel authentication framework based on biometric and radio fingerprinting for the IoT in eHealth. In Proceedings of the 2014 International Conference on Smart Systems, Devices and Technologies (SMART), Paris, France, 20–24 July 2014; pp. 32–37.
3. Macedo, M.J.; Yang, W.; Zheng, G.; Johnstone, M.N. A comparison of 2D and 3D Delaunay triangulations for fingerprint authentication. In Proceedings of the 2017 Australian Information Security Management Conference, Perth, Australia, 5–6 December 2017; pp. 108–115.
4. Lai, Y.-L.; Jin, Z.; Teoh, A.B.J.; Goi, B.-M.; Yap, W.-S.; Chai, T.-Y.; Rathgeb, C. Cancellable iris template generation based on Indexing-First-One hashing. Pattern Recognit. 2017, 64, 105–117.
5. Masek, L. Iris Recognition. Available online: https://www.peterkovesi.com/studentprojects/libor/ (accessed on 19 April 2019).
6. El-hajj, M.; Fadlallah, A.; Chamoun, M.; Serhrouchni, A. A Survey of Internet of Things (IoT) Authentication Schemes. Sensors 2019, 19, 1141.
7. Blasco, J.; Peris-Lopez, P. On the Feasibility of Low-Cost Wearable Sensors for Multi-Modal Biometric Verification. Sensors 2018, 18, 2782.
8. Arjona, R.; Prada-Delgado, M.; Arcenegui, J.; Baturone, I. A PUF- and Biometric-Based Lightweight Hardware Solution to Increase Security at Sensor Nodes. Sensors 2018, 18, 2429.
9. Kantarci, B.; Erol-Kantarci, M.; Schuckers, S. Towards secure cloud-centric internet of biometric things. In Proceedings of the 2015 IEEE 4th International Conference on Cloud Networking (CloudNet), Niagara Falls, ON, Canada, 5–7 October 2015; pp. 81–83.
10. Karimian, N.; Wortman, P.A.; Tehranipoor, F. Evolving authentication design considerations for the internet of biometric things (IoBT). In Proceedings of the Eleventh IEEE/ACM/IFIP International Conference on Hardware/Software Codesign and System Synthesis, Pittsburgh, PA, USA, 1–7 October 2016; p. 10.
11. Maček, N.; Franc, I.; Bogdanoski, M.; Mirković, A. Multimodal Biometric Authentication in IoT: Single Camera Case Study. In Proceedings of the 8th International Conference on Business Information Security, Belgrade, Serbia, 15 October 2016; pp. 33–38.
12. Shahim, L.-P.; Snyman, D.; du Toit, T.; Kruger, H. Cost-Effective Biometric Authentication using Leap Motion and IoT Devices. In Proceedings of the Tenth International Conference on Emerging Security Information, Systems and Technologies (SECURWARE 2016), Nice, France, 24–28 July 2016; pp. 10–13.
13. Dhillon, P.K.; Kalra, S. A lightweight biometrics based remote user authentication scheme for IoT services. J. Inf. Secur. Appl. 2017, 34, 255–270.
14. Punithavathi, P.; Geetha, S.; Karuppiah, M.; Islam, S.H.; Hassan, M.M.; Choo, K.-K.R. A Lightweight Machine Learning-based Authentication Framework for Smart IoT Devices. Inf. Sci. 2019.
15. Yang, W.; Hu, J.; Wang, S. A Delaunay Quadrangle-Based Fingerprint Authentication System with Template Protection Using Topology Code for Local Registration and Security Enhancement. IEEE Trans. Inf. Forensics Sec. 2014, 9, 1179–1192.
16. Yang, W.; Hu, J.; Wang, S.; Stojmenovic, M. An Alignment-free Fingerprint Bio-cryptosystem based on Modified Voronoi Neighbor Structures. Pattern Recognit. 2014, 47, 1309–1320.
17. Wang, S.; Yang, W.; Hu, J. Design of Alignment-Free Cancelable Fingerprint Templates with Zoned Minutia Pairs. Pattern Recognit. 2017, 66, 295–301.
18. Ratha, N.K.; Connell, J.H.; Bolle, R.M. Enhancing security and privacy in biometrics-based authentication systems. IBM Syst. J. 2001, 40, 614–634.
19. Ratha, N.K.; Chikkerur, S.; Connell, J.H.; Bolle, R.M. Generating cancelable fingerprint templates. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 561–572.
20. Zuo, J.; Ratha, N.K.; Connell, J.H. Cancelable iris biometric. In Proceedings of the 2008 19th International Conference on Pattern Recognition, Tampa, FL, USA, 8–11 December 2008; p. 4.
21. Hämmerle-Uhl, J.; Pschernig, E.; Uhl, A. Cancelable iris biometrics using block re-mapping and image warping. In Proceedings of the 12th International Conference on Information Security, Pisa, Italy, 7–9 September 2009; pp. 135–142.
22. Kanade, S.; Petrovska-Delacrétaz, D.; Dorizzi, B. Cancelable iris biometrics and using error correcting codes to reduce variability in biometric data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 120–127.
23. Pillai, J.K.; Patel, V.M.; Chellappa, R.; Ratha, N.K. Sectored random projections for cancelable iris biometrics. In Proceedings of the IEEE International Conference on Acoustics Speech and Signal Processing (ICASSP), Dallas, TX, USA, 14–19 March 2010; pp. 1838–1841.
24. Jenisch, S.; Uhl, A. Security analysis of a cancelable iris recognition system based on block remapping. In Proceedings of the 2011 18th IEEE International Conference on Image Processing (ICIP), Brussels, Belgium, 11–14 September 2011; pp. 3213–3216.
25. Hämmerle-Uhl, J.; Pschernig, E.; Uhl, A. Cancelable iris-templates using key-dependent wavelet transforms. In Proceedings of the 2013 International Conference on Biometrics (ICB), Madrid, Spain, 4–7 June 2013; p. 8.
26. Rathgeb, C.; Breitinger, F.; Busch, C. Alignment-free cancelable iris biometric templates based on adaptive bloom filters. In Proceedings of the 2013 International Conference on Biometrics (ICB), Madrid, Spain, 4–7 June 2013; p. 8.
27. Quan, F.; Fei, S.; Anni, C.; Feifei, Z. Cracking cancelable fingerprint template of Ratha. In Proceedings of the 2008 International Symposium on Computer Science and Computational Technology, Shanghai, China, 20–22 December 2008; pp. 572–575.
28. Li, C.; Hu, J. Attacks via record multiplicity on cancelable biometrics templates. Concurr. Comput. Pract. Exp. 2014, 26, 1593–1605.
29. Tran, Q.N.; Wang, S.; Ou, R.; Hu, J. Double-layer secret-sharing system involving privacy preserving biometric authentication. In User-Centric Privacy and Security in Biometrics; Institution of Engineering and Technology: London, UK, 2017; pp. 153–170.
30. Johnson, N.F.; Jajodia, S. Exploring steganography: Seeing the unseen. Computer 1998, 31, 26–34.
31. Ma, L.; Tan, T.; Wang, Y.; Zhang, D. Efficient iris recognition by characterizing key local variations. IEEE Trans. Image Process. 2004, 13, 739–750.
32. Neurotechnology. VeriEye SDK. Available online: http://www.neurotechnology.com/verieye.html (accessed on 19 April 2019).
33. Yang, W.; Wang, S.; Hu, J.; Zheng, G.; Valli, C. A Fingerprint and Finger-vein Based Cancelable Multi-biometric System. Pattern Recognit. 2018, 78, 242–251.
34. Wang, S.; Deng, G.; Hu, J. A partial Hadamard transform approach to the design of cancelable fingerprint templates containing binary biometric representations. Pattern Recognit. 2017, 61, 447–458.
35. Boncelet, C.G.J.; Marvel, L.M.; Retter, C.T. Spread Spectrum Image Steganography. U.S. Patent No. 6,557,103, 29 April 2003.
36. Agrawal, N.; Gupta, A. DCT domain message embedding in spread-spectrum steganography system. In Proceedings of the Data Compression Conference, Snowbird, UT, USA, 16–18 March 2009; p. 433.
37. Dumitrescu, S.; Wu, X.; Wang, Z. Detection of LSB steganography via sample pair analysis. IEEE Trans. Signal Process. 2003, 51, 1995–2007.
38. Qi, X.; Wong, K. An adaptive DCT-based mod-4 steganographic method. In Proceedings of the 2005 IEEE International Conference on Image Processing, Genova, Italy, 11–14 September 2005.
39. Online Steganography Program. Available online: https://stylesuxx.github.io/steganography/ (accessed on 19 April 2019).
40. Yang, W.; Hu, J.; Wang, S.; Chen, C. Mutual dependency of features in multimodal biometric systems. Electron. Lett. 2015, 51, 234–235.
41. Yang, W.; Wang, S.; Zheng, G.; Chaudhry, J.; Valli, C. ECB4CI: An enhanced cancelable biometric system for securing critical infrastructures. J. Supercomput. 2018.
42. CASIA-IrisV3. Available online: http://www.cbsr.ia.ac.cn/IrisDatabase.htm (accessed on 15 April 2019).
43. MMU-V1 Iris Database. Available online: https://www.cs.princeton.edu/~andyz/irisrecognition (accessed on 10 June 2019).
44. Proença, H.; Alexandre, L.A. UBIRIS: A noisy iris image database. In Proceedings of the 13th International Conference on Image Analysis and Processing, Cagliari, Italy, 6–8 September 2005; pp. 970–977.
45. Yang, W.; Wang, S.; Zheng, G.; Valli, C. Impact of feature proportion on matching performance of multi-biometric systems. ICT Express 2018, 5, 37–40.
46. Yang, W.; Hu, J.; Wang, S.; Wu, Q. Biometrics based Privacy-Preserving Authentication and Mobile Template Protection. Wirel. Commun. Mob. Comput. 2018, 2018, 7107295.
47. Zhao, D.; Luo, W.; Liu, R.; Yue, L. Negative iris recognition. IEEE Trans. Dependable Secur. Comput. 2015.
48. Daugman, J.; Downing, C. Searching for doppelgängers: Assessing the universality of the IrisCode impostors distribution. IET Biom. 2016, 5, 65–75.
49. Ouda, O.; Tsumura, N.; Nakaguchi, T. Tokenless cancelable biometrics scheme for protecting iris codes. In Proceedings of the 2010 20th International Conference on Pattern Recognition (ICPR), Istanbul, Turkey, 23–26 August 2010; pp. 882–885.
50. Zhao, D.; Fang, S.; Xiang, J.; Tian, J.; Xiong, S. Iris Template Protection Based on Local Ranking. Secur. Commun. Netw. 2018, 2018, 4519548.
51. Radman, A.; Jumari, K.; Zainal, N. Fast and reliable iris segmentation algorithm. IET Image Process. 2013, 7, 42–49.

| Database / Shifting parameter | N = 2 | N = 4 | N = 8 |
|---|---|---|---|
| CASIA-IrisV3-Interval | EER = 0.62% | EER = 0.22% | EER = 0.22% |
| MMU-V1 | EER = 2.11% | EER = 1.89% | EER = 1.77% |
| UBIRIS-V1-Session 1 | EER = 2.43% | EER = 2.52% | EER = 2.53% |
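The table above reports equal error rates (EER): the error rate at the decision threshold where the false acceptance rate (FAR) equals the false rejection rate (FRR). The following is a minimal, generic sketch of how an EER can be estimated from genuine and impostor matching scores by sweeping the threshold over every observed score. It assumes similarity scores (higher means more similar) and accepts a comparison when its score is at least the threshold; it is not the authors' evaluation code, and the function names and sample scores are hypothetical.

```python
from typing import Sequence, Tuple


def far_frr(genuine: Sequence[float], impostor: Sequence[float], t: float) -> Tuple[float, float]:
    """Return (FAR, FRR) at threshold t.

    Assumption: higher score = more similar; a comparison is accepted when score >= t.
    """
    far = sum(s >= t for s in impostor) / len(impostor)  # impostors wrongly accepted
    frr = sum(s < t for s in genuine) / len(genuine)     # genuine users wrongly rejected
    return far, frr


def equal_error_rate(genuine: Sequence[float], impostor: Sequence[float]) -> float:
    """Approximate the EER as the mean of FAR and FRR where |FAR - FRR| is smallest."""
    if not genuine or not impostor:
        raise ValueError("need at least one genuine and one impostor score")
    # Candidate thresholds: every observed score, plus +inf ("reject everything").
    thresholds = sorted(set(genuine) | set(impostor)) + [float("inf")]
    best_gap, best_eer = float("inf"), 1.0
    for t in thresholds:
        far, frr = far_frr(genuine, impostor, t)
        gap = abs(far - frr)
        if gap < best_gap:
            best_gap, best_eer = gap, (far + frr) / 2
    return best_eer


if __name__ == "__main__":
    # Hypothetical toy scores, not data from this paper.
    genuine = [0.91, 0.85, 0.78, 0.88, 0.60]
    impostor = [0.30, 0.42, 0.65, 0.25, 0.38]
    print(f"EER = {equal_error_rate(genuine, impostor):.2%}")  # EER = 20.00%
```

With the toy scores, one genuine score (0.60) falls below and one impostor score (0.65) sits at the threshold 0.65, so FAR = FRR = 20% there. For distance-based scores such as Hamming distances, where lower means more similar, the comparisons would need to be flipped (or the scores negated) before applying this sketch.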

| Methods | CASIA-IrisV3-Interval | MMU-V1 | UBIRIS-V1-Session 1 |
|---|---|---|---|
| Bin-combo in Zuo et al. [20] | 4.41% | - | - |
| Jenisch and Uhl [24] | 1.22% | - | - |
| Hämmerle-Uhl et al. [25] | 1.07% | - | - |
| Rathgeb et al. [26] | 1.54% | - | - |
| Ouda et al. [49] | 6.27% | - | - |
| Lai et al. [4] | 0.54% | - | - |
| Radman et al. [51] | - | - | 9.48% |
| Zhao et al. [50] | 1.06% | 5.50% | 13.44% |
| Proposed (m = 2000) | 1.66% | 4.78% | 3.00% |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yang, W.; Wang, S.; Hu, J.; Ibrahim, A.; Zheng, G.; Macedo, M.J.; Johnstone, M.N.; Valli, C. A Cancelable Iris- and Steganography-Based User Authentication System for the Internet of Things. Sensors 2019, 19, 2985. https://doi.org/10.3390/s19132985