Sub-Diffraction Visible Imaging Using Macroscopic Fourier Ptychography and Regularization by Denoising

Abstract

1. Introduction

2. Related Work

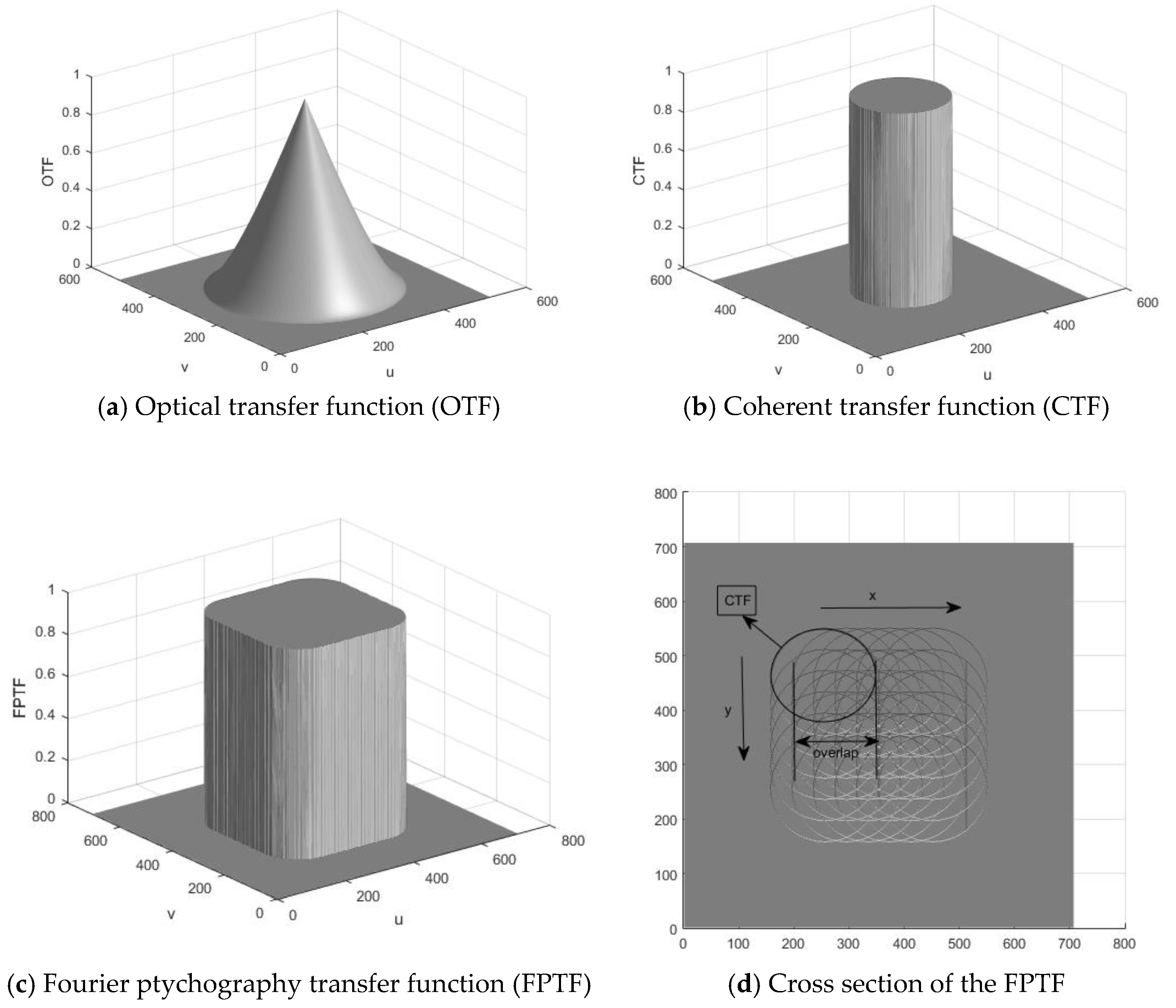

2.1. Macroscopic Fourier Ptychography

2.2. Coherent Illumination and Speckle Phenomena

2.3. Phase Retrieval

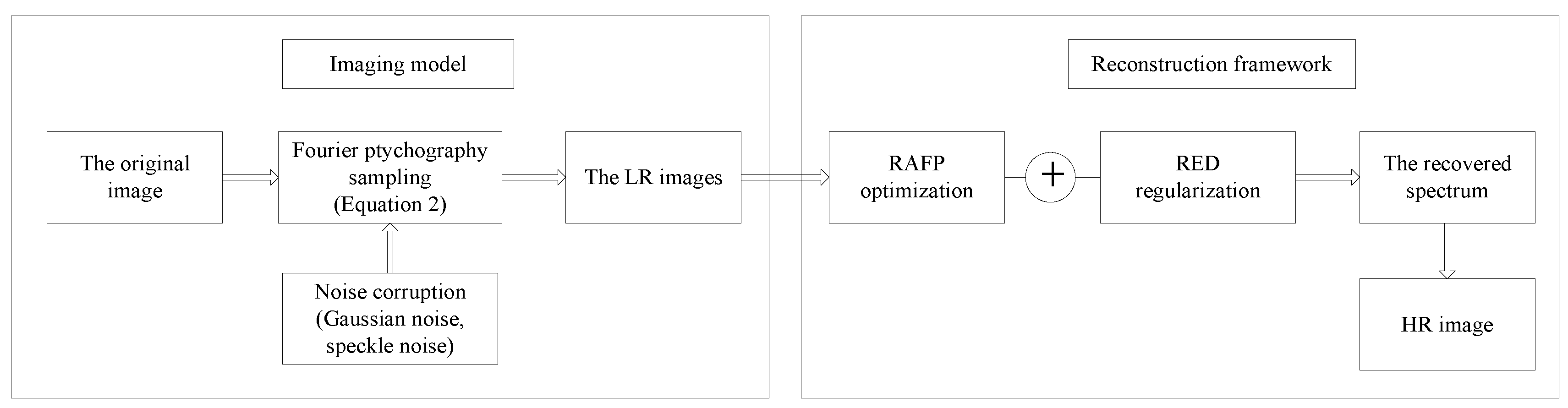

3. Methods

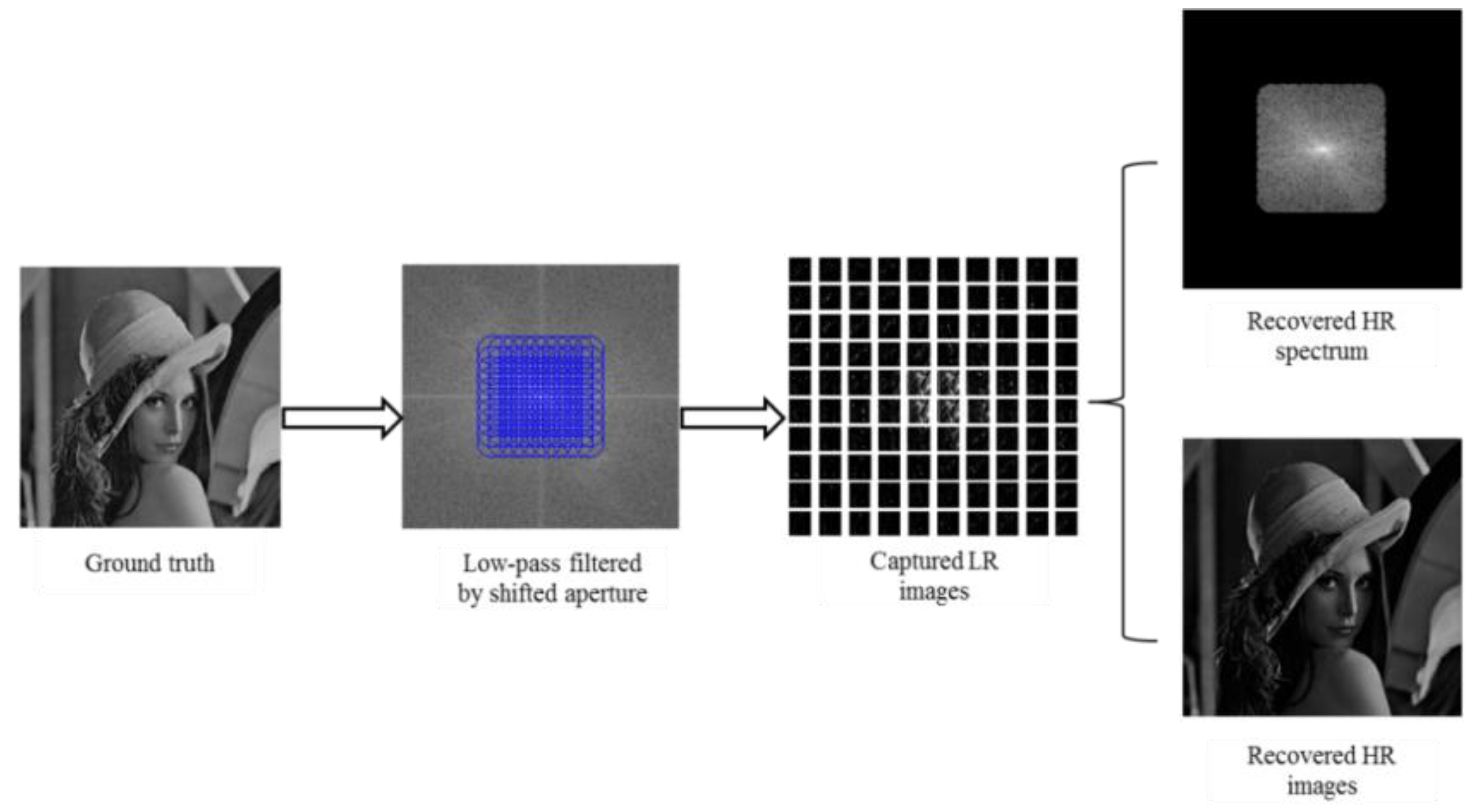

3.1. Image Formation Model

3.2. Optimization Framework

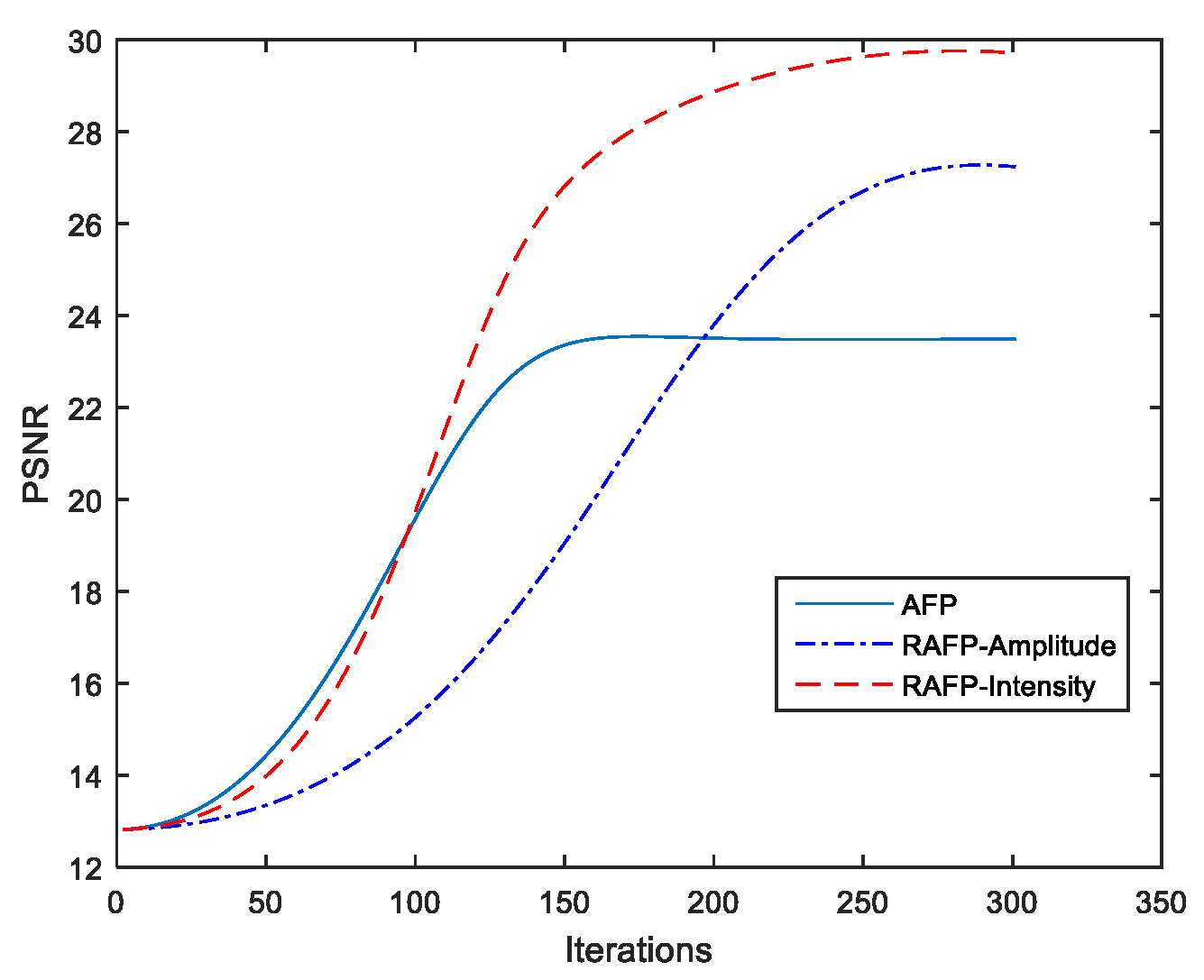

3.2.1. Review of Reweighted Amplitude Flow Algorithm

3.2.2. Reweighted Amplitude Flow for Fourier Ptychography (RAFP)

3.2.3. Fourier Ptychography via Regularization by Denoising

| Algorithm 1 The image reconstruction framework |
|---|
| Input: Captured LR images ; sampling matrix . |
| Output: Recovered spectrum . |
| 1: Parameters: Maximum number of iterations T; step size ; weighting parameters ; regularization parameter . |
| 2: Initialization: . |
| 3: Loop: for to |
| , |
| where for all . |
| 4: end |
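The update equations of Algorithm 1 did not survive extraction. As a rough, non-authoritative illustration of the general idea, the NumPy sketch below combines an amplitude-flow gradient step with RAF-style weights (weights that shrink where the predicted amplitude is much smaller than the measured one, as in reweighted amplitude flow) and a RED gradient term lam * (x - D(x)) acting on the high-resolution image. Everything specific here is an assumption, not taken from the paper: the helper names (`rafp_red`, `init_spectrum`, `median3x3`, `simulate`), the binary circular pupil, the 3x3 camera grid, initializing from the spectrum of the central LR image, applying the denoiser to the image amplitude while keeping its phase, and all parameter values (`mu`, `beta`, `lam`). The RED gradient form is used heuristically; a median filter does not satisfy all of RED's formal conditions.

```python
import numpy as np

# Centered, unitary 2-D FFT pair; with norm="ortho" each is the adjoint of the other.
def fft2c(x):
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(x), norm="ortho"))


def ifft2c(X):
    return np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(X), norm="ortho"))


def median3x3(img):
    """3x3 median filter with edge padding, used here as the denoiser D(.)."""
    h, w = img.shape
    p = np.pad(img, 1, mode="edge")
    windows = [p[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)]
    return np.median(np.stack(windows), axis=0)


def forward(O, pupil, corners):
    """LR complex fields psi_i = IFFT(P * crop_i(O)), one per camera position."""
    n = pupil.shape[0]
    return [ifft2c(pupil * O[r:r + n, c:c + n]) for r, c in corners]


def misfit(O, y, pupil, corners):
    """Unweighted amplitude loss (1/2K) * sum_i || |psi_i| - y_i ||^2."""
    psis = forward(O, pupil, corners)
    return sum(np.sum((np.abs(p) - yi) ** 2) for p, yi in zip(psis, y)) / (2 * len(y))


def init_spectrum(y, pupil, corners, N, center_idx):
    """Hypothetical init: spectrum of the central LR amplitude image, zero elsewhere."""
    O = np.zeros((N, N), dtype=complex)
    r, c = corners[center_idx]
    n = pupil.shape[0]
    O[r:r + n, c:c + n] = fft2c(y[center_idx])
    return O


def rafp_red(y, pupil, corners, N, center_idx, iters=100, mu=1.0, beta=1.0,
             lam=0.05, denoiser=median3x3, eps=1e-12):
    """Hypothetical RAF-style amplitude flow on the spectrum plus a RED term."""
    O = init_spectrum(y, pupil, corners, N, center_idx)
    n, K = pupil.shape[0], len(corners)
    for _ in range(iters):
        grad = np.zeros_like(O)
        for (r, c), yi in zip(corners, y):
            psi = ifft2c(pupil * O[r:r + n, c:c + n])
            amp = np.abs(psi)
            ratio = amp / (yi + eps)
            w = ratio / (ratio + beta + eps)  # small weight where |psi| << y_i
            resid = w * (psi - yi * psi / (amp + eps))
            grad[r:r + n, c:c + n] += np.conj(pupil) * fft2c(resid)  # adjoint of forward
        grad /= K
        if lam > 0:
            x = ifft2c(O)  # current HR complex image
            x_den = denoiser(np.abs(x)) * np.exp(1j * np.angle(x))
            grad += fft2c(lam * (x - x_den))  # RED gradient lam * (x - D(x))
        O = O - mu * grad
    return O


def simulate(N=64, n=32, radius=12, step=8, seed=0):
    """Toy 3x3 camera array observing a rough (random-phase) object."""
    rng = np.random.default_rng(seed)
    yy, xx = np.mgrid[:n, :n] - n // 2
    pupil = (yy ** 2 + xx ** 2 <= radius ** 2).astype(float)
    offsets = (-step, 0, step)
    corners = [(N // 2 + dr - n // 2, N // 2 + dc - n // 2)
               for dr in offsets for dc in offsets]
    amp = 0.5 + 0.5 * rng.random((N, N))
    x_true = amp * np.exp(2j * np.pi * rng.random((N, N)))
    y = [np.abs(p) for p in forward(fft2c(x_true), pupil, corners)]
    return y, pupil, corners, N, len(corners) // 2


y, pupil, corners, N, center = simulate()
O_hat = rafp_red(y, pupil, corners, N, center, iters=50)
x_hat = np.abs(ifft2c(O_hat))  # recovered HR amplitude
```

With `beta=0` the weights are numerically 1 and the update reduces to plain amplitude flow. Because the pupil magnitude is at most 1, at most K pupils overlap at any spectral sample, so `mu=1` combined with the 1/K normalization is a conservative step for that case.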

4. Experiments and Results

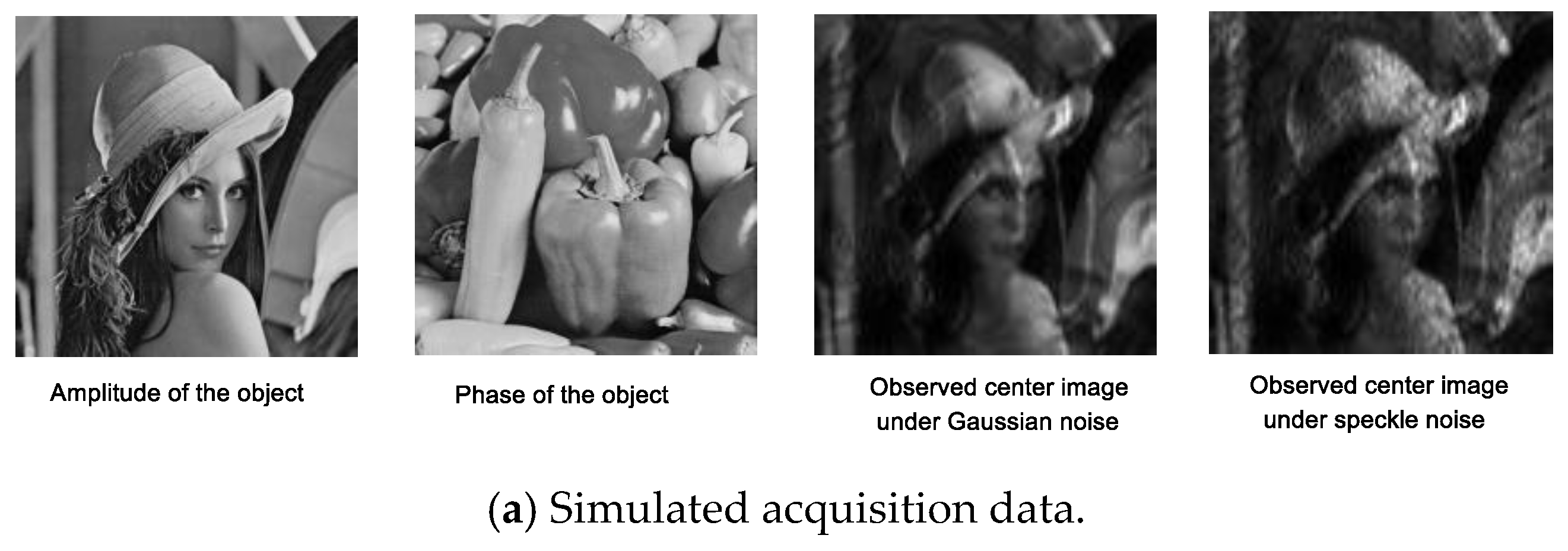

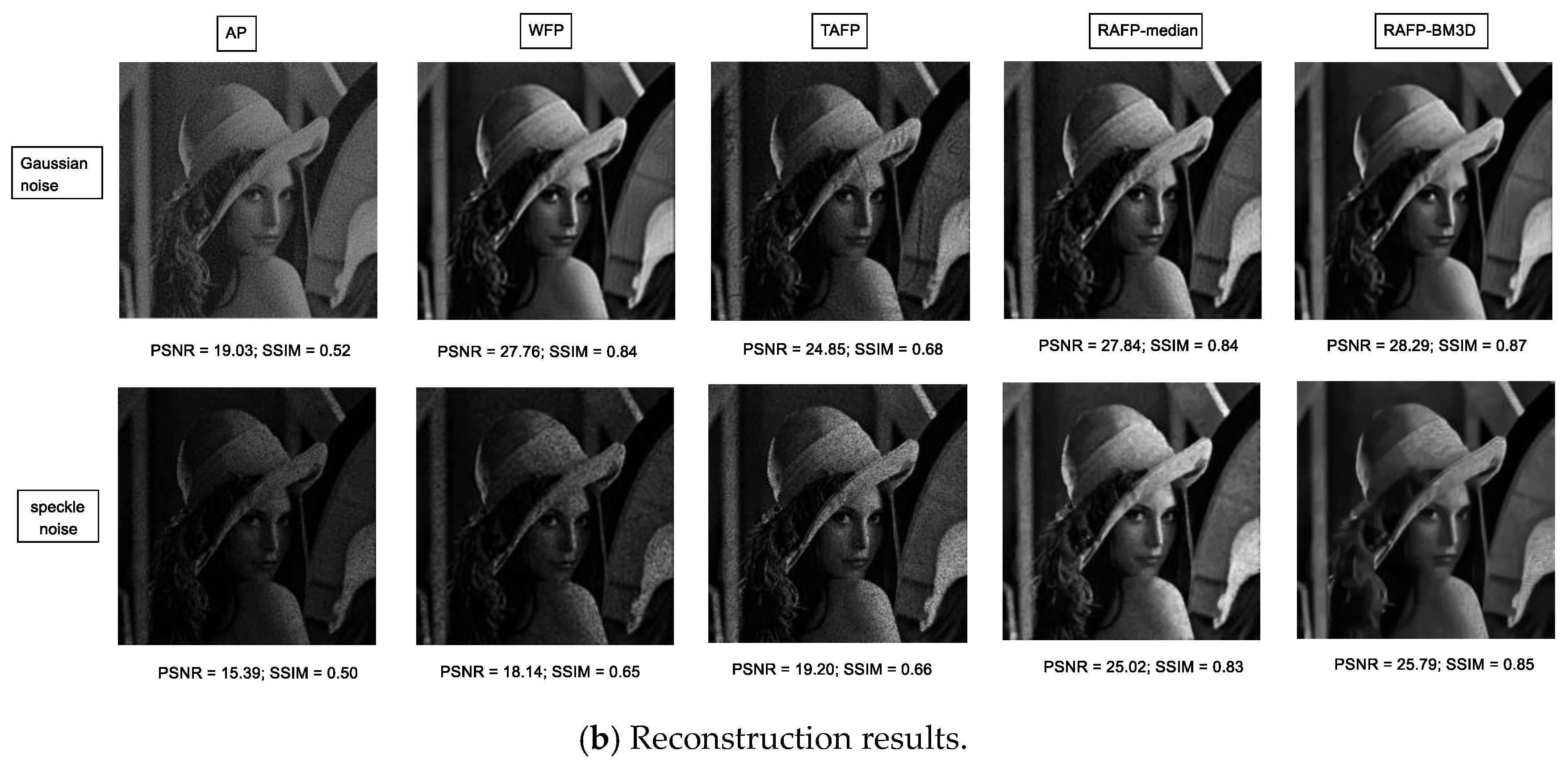

4.1. Numerical Simulation

4.2. Criterion

4.3. Results

5. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest


| Method | PSNR (dB) | SSIM | PSNR (dB) | SSIM | PSNR (dB) | SSIM | PSNR (dB) | SSIM | PSNR (dB) | SSIM |
|---|---|---|---|---|---|---|---|---|---|---|
| Without RED | 29.72 | 0.90 | 28.93 | 0.88 | 28.43 | 0.87 | 28.10 | 0.86 | 27.86 | 0.85 |
| RED-median | 30.10 | 0.91 | 29.32 | 0.89 | 28.85 | 0.88 | 28.47 | 0.87 | 28.15 | 0.86 |
| RED-wavelet | 30.12 | 0.90 | 28.98 | 0.88 | 28.57 | 0.87 | 28.18 | 0.86 | 27.92 | 0.85 |
| RED-BM3D | 30.15 | 0.90 | 29.54 | 0.89 | 29.21 | 0.88 | 28.94 | 0.88 | 28.76 | 0.87 |

| Method | PSNR (dB) | SSIM | PSNR (dB) | SSIM | PSNR (dB) | SSIM | PSNR (dB) | SSIM | PSNR (dB) | SSIM |
|---|---|---|---|---|---|---|---|---|---|---|
| Without RED | 24.08 | 0.82 | 21.36 | 0.74 | 19.20 | 0.66 | 17.78 | 0.60 | 16.70 | 0.54 |
| RED-median | 27.31 | 0.91 | 26.93 | 0.89 | 26.09 | 0.86 | 25.03 | 0.83 | 24.07 | 0.81 |
| RED-Lee filter | 26.93 | 0.89 | 26.69 | 0.88 | 25.38 | 0.84 | 24.46 | 0.81 | 23.52 | 0.78 |
| RED-BM3D | 28.45 | 0.91 | 27.25 | 0.88 | 26.30 | 0.86 | 25.91 | 0.84 | 25.52 | 0.82 |
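The tables above report PSNR and SSIM, but the text of Section 4.2 describing the criteria is missing from this copy. A minimal sketch of the standard PSNR definition follows, assuming both images are normalized to a peak value of 1; the paper's own normalization is not stated in this excerpt, and the helper name `psnr` is illustrative.

```python
import numpy as np


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(peak**2 / MSE)."""
    ref = np.asarray(reference, dtype=float)
    est = np.asarray(estimate, dtype=float)
    mse = np.mean((ref - est) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(peak ** 2 / mse)


# A uniform error of 0.1 on a [0, 1] image gives MSE = 0.01, i.e. about 20 dB.
example = psnr(np.zeros((4, 4)), np.full((4, 4), 0.1))

# With a common peak, a PSNR gap of d dB means an MSE ratio of 10**(d / 10).
# RED-BM3D vs. without RED, last column of the speckle table above:
mse_ratio = 10 ** ((25.52 - 16.70) / 10)
```

Read this way, the 8.82 dB gap in the last column of the second table corresponds to roughly a 7.6-fold lower mean squared error.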

| | AP | WFP | TAFP | RAFP-median | RAFP-BM3D |
|---|---|---|---|---|---|
| Iteration | 100 | 350 | 300 | 200 | 140 |
| Running time (s) | 25 | 332 | 294 | 206 | 630 |
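Dividing each running time by its iteration count separates how quickly a method converges from how expensive a single step is. The short sketch below does this for the table above, assuming the "Iteration" row is the number of iterations each method ran; the variable names are illustrative.

```python
# (iterations, total running time in seconds), copied from the table above.
runs = {
    "AP": (100, 25),
    "WFP": (350, 332),
    "TAFP": (300, 294),
    "RAFP-median": (200, 206),
    "RAFP-BM3D": (140, 630),
}

# Average cost of one iteration for each method.
per_iteration = {name: seconds / iters for name, (iters, seconds) in runs.items()}

for name, t in per_iteration.items():
    print(f"{name:<12} {t:.2f} s/iteration")
```

This gives about 0.25, 0.95, 0.98, 1.03 and 4.50 s per iteration. Apart from AP, RAFP-BM3D needs the fewest iterations but costs roughly four times as much per iteration as RAFP-median, which is consistent with BM3D being a heavier denoiser than a median filter; the hardware used is not stated in this excerpt.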

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Li, Z.; Wen, D.; Song, Z.; Liu, G.; Zhang, W.; Wei, X. Sub-Diffraction Visible Imaging Using Macroscopic Fourier Ptychography and Regularization by Denoising. Sensors 2018, 18, 3154. https://doi.org/10.3390/s18093154

