A Scene Recognition and Semantic Analysis Approach to Unhealthy Sitting Posture Detection during Screen-Reading

Abstract

1. Introduction

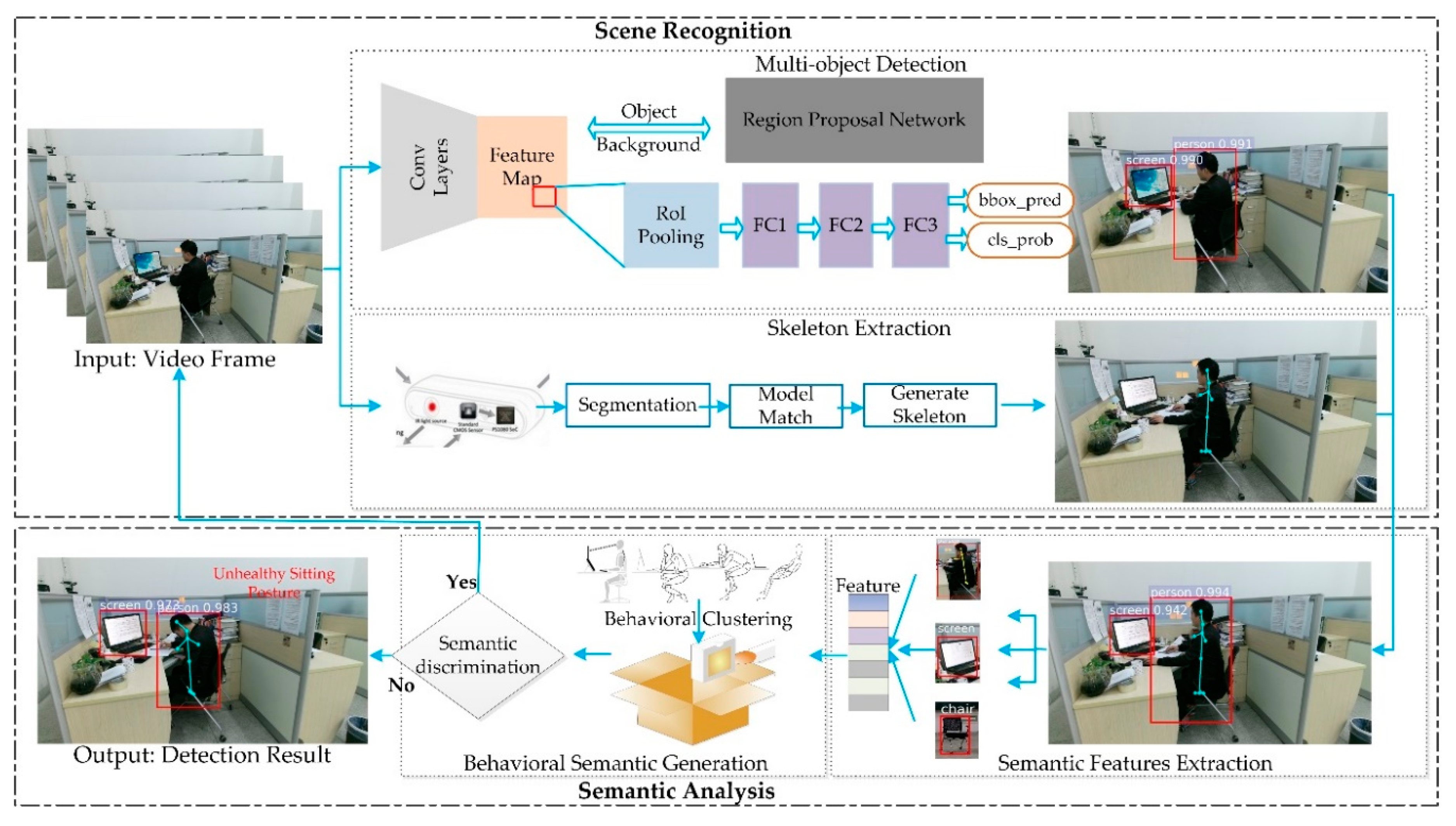

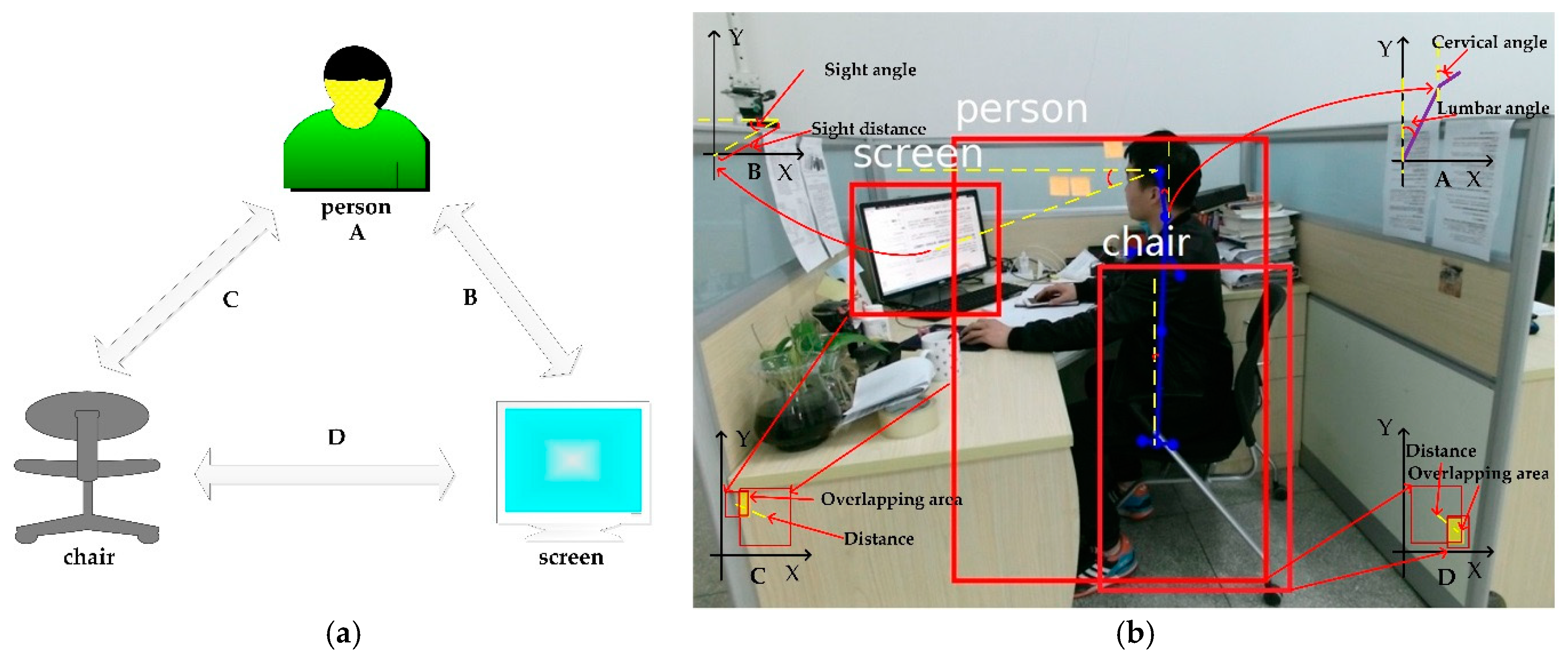

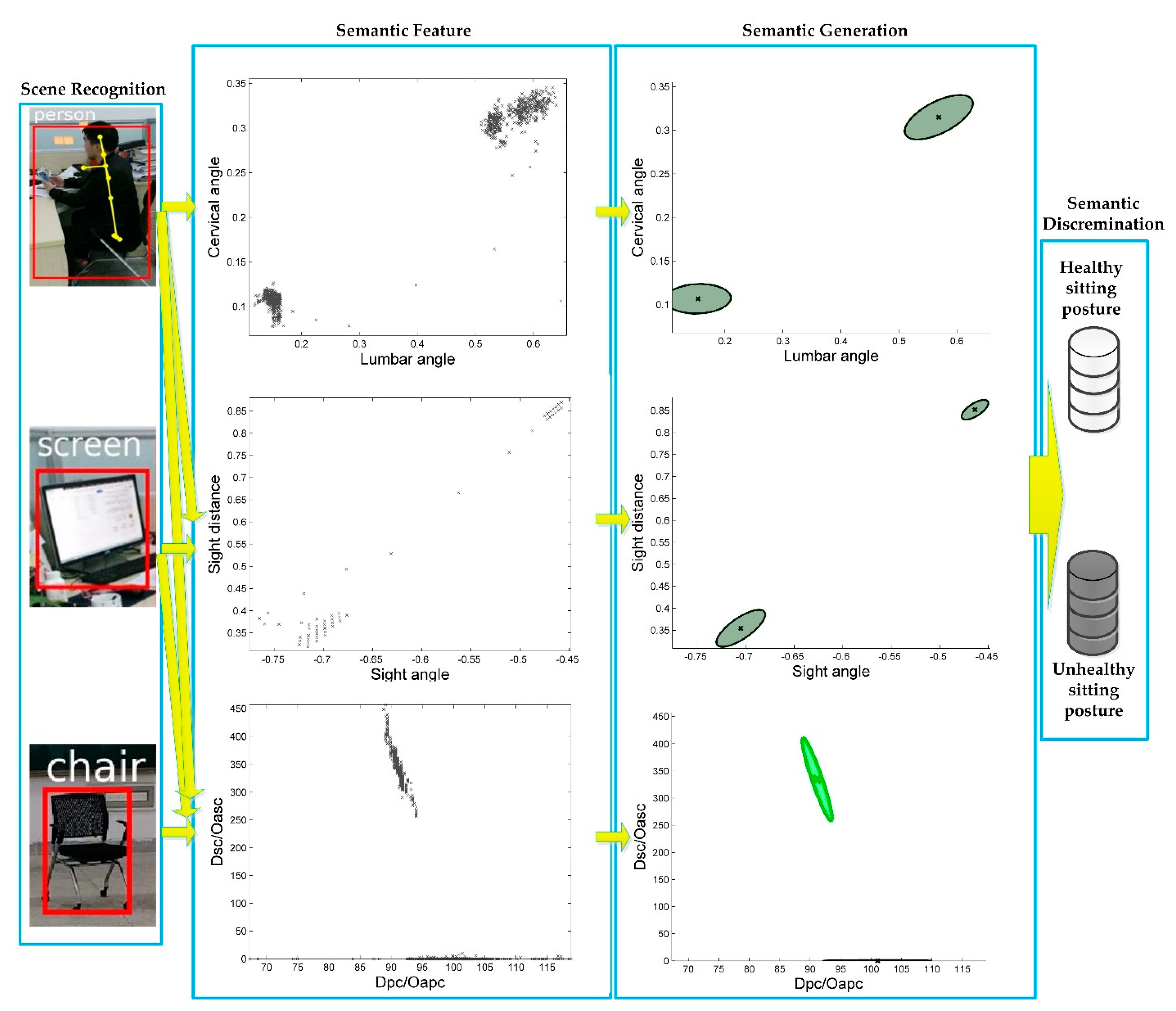

2. Framework of Our Proposed Method for Unhealthy Sitting Posture Detection

3. Our Proposed Method Based on Scene Recognition and Semantic Analysis

3.1. Scene Recognition

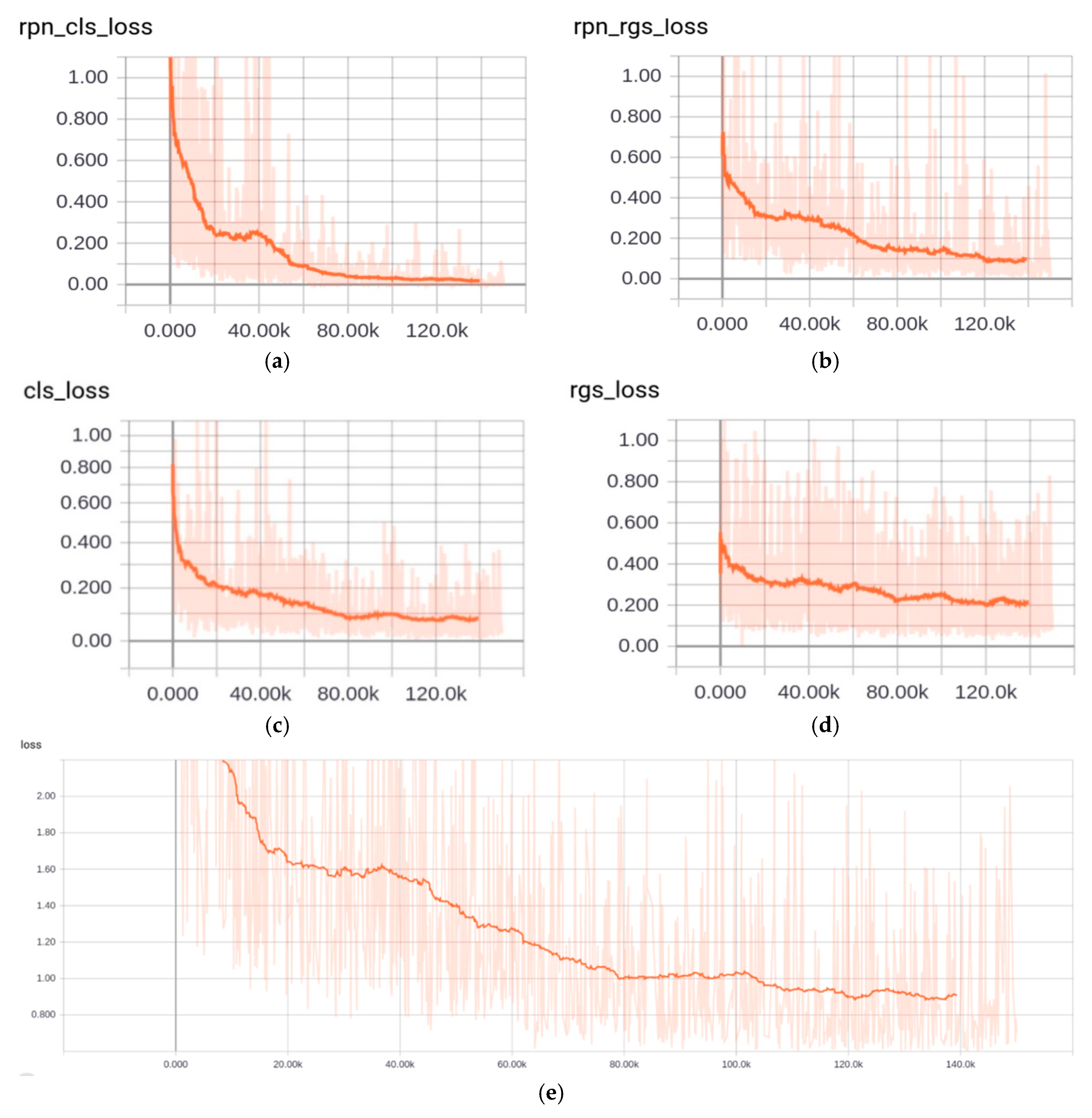

3.1.1. Multi-object Detection Using Faster R-CNN

- $i$ is the index of an anchor in a mini-batch.

- $p_i$ is the predicted probability of anchor $i$ being an object.

- $p_i^*$ is the ground-truth label, whose value is 1 if the anchor is positive and 0 if the anchor is negative.

- $t_i$ is a vector representing the 4 parameterized coordinates of the predicted bounding box.

- $t_i^*$ is the ground-truth box associated with a positive anchor.

- The classification loss $L_{cls}$ is log loss over two classes (object versus not object).

- The regression loss is $L_{reg}(t_i, t_i^*) = R(t_i - t_i^*)$, where $R$ is the robust loss function (smooth $L_1$).

- The outputs of the cls and reg layers consist of $\{p_i\}$ and $\{t_i\}$, respectively.

- The regression term is weighted by a balancing parameter $\lambda$.

- The cls term is normalized by the mini-batch size (i.e., $N_{cls} = 256$) and the reg term is normalized by the number of anchor locations (i.e., $N_{reg} = 2400$). By default we set $\lambda = 10$, thus both cls and reg terms are roughly equally weighted.
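The definitions above match the multi-task loss of the region proposal network in Faster R-CNN [31], $L = \frac{1}{N_{cls}} \sum_i L_{cls}(p_i, p_i^*) + \lambda \frac{1}{N_{reg}} \sum_i p_i^* L_{reg}(t_i, t_i^*)$. The following is a minimal NumPy sketch of that loss for a single mini-batch, not the authors' training code. The function names `smooth_l1` and `rpn_loss` and the array layout are illustrative assumptions.

```python
import numpy as np


def smooth_l1(x):
    """Robust loss R: 0.5 * x^2 where |x| < 1, and |x| - 0.5 elsewhere."""
    ax = np.abs(x)
    return np.where(ax < 1.0, 0.5 * ax ** 2, ax - 0.5)


def rpn_loss(p, p_star, t, t_star, n_cls=256, n_reg=2400, lam=10.0):
    """Multi-task loss over one mini-batch of anchors (illustrative sketch).

    p      : (N,)   predicted probability of each anchor being an object
    p_star : (N,)   ground-truth labels (1 = positive anchor, 0 = negative)
    t      : (N, 4) predicted parameterized box coordinates
    t_star : (N, 4) ground-truth parameterized box coordinates
    """
    eps = 1e-12  # keeps log() finite when a probability is exactly 0 or 1
    p = np.clip(p, eps, 1.0 - eps)
    # Log loss over two classes (object versus not object).
    l_cls = -(p_star * np.log(p) + (1.0 - p_star) * np.log(1.0 - p))
    # Smooth L1 summed over the 4 box coordinates of each anchor.
    l_reg = smooth_l1(t - t_star).sum(axis=1)
    # The factor p_star switches the regression term on for positive anchors only.
    return l_cls.sum() / n_cls + lam * (p_star * l_reg).sum() / n_reg
```

With the defaults, $\lambda / N_{reg} = 10 / 2400 \approx 1/240$ is close to $1 / N_{cls} = 1/256$, which is why the two terms end up roughly equally weighted.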

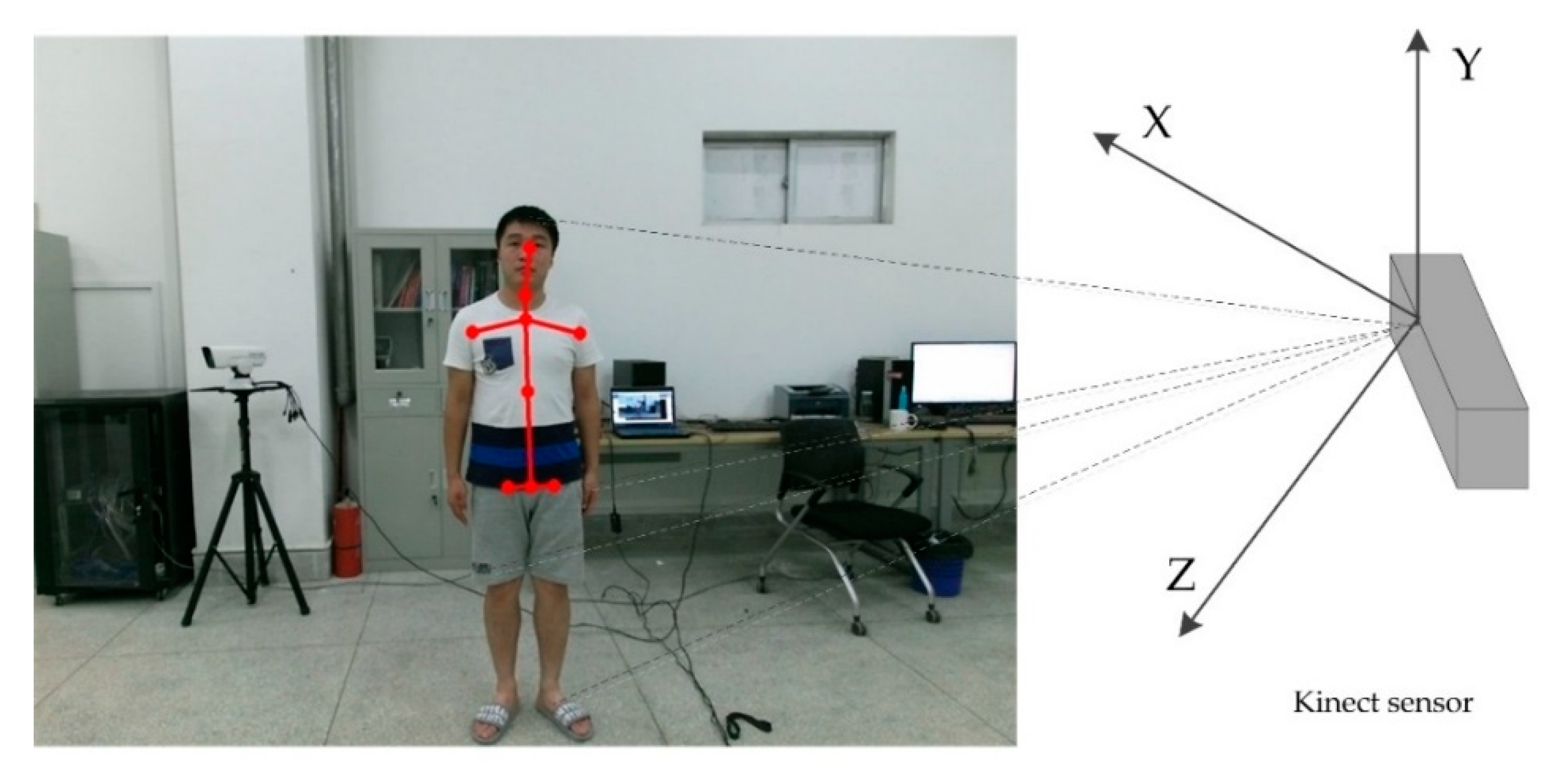

3.1.2. Skeleton Extraction Using Microsoft Kinect Sensor

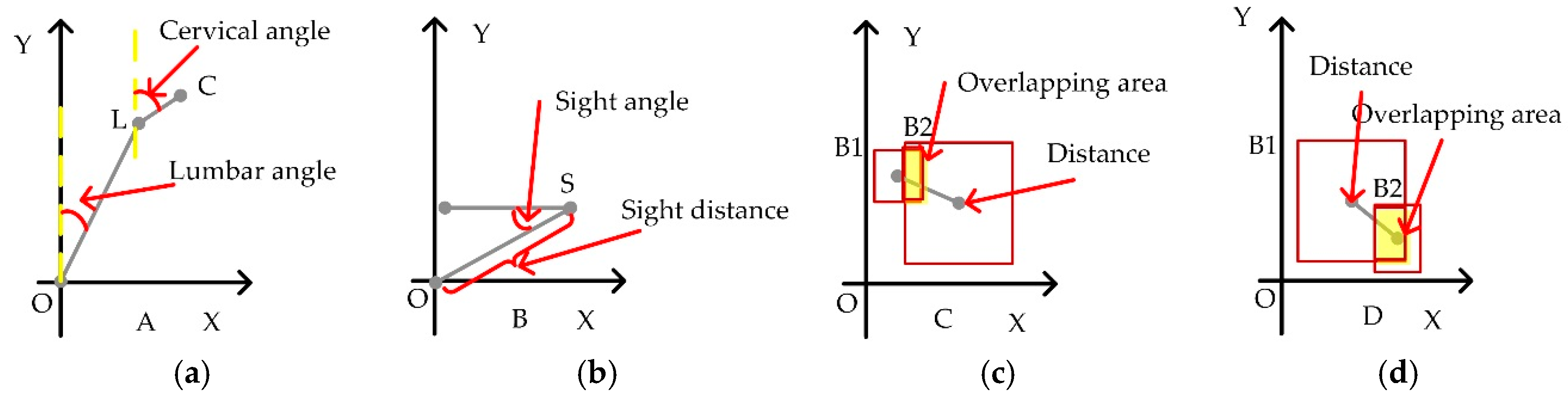

3.2. Semantic Analysis

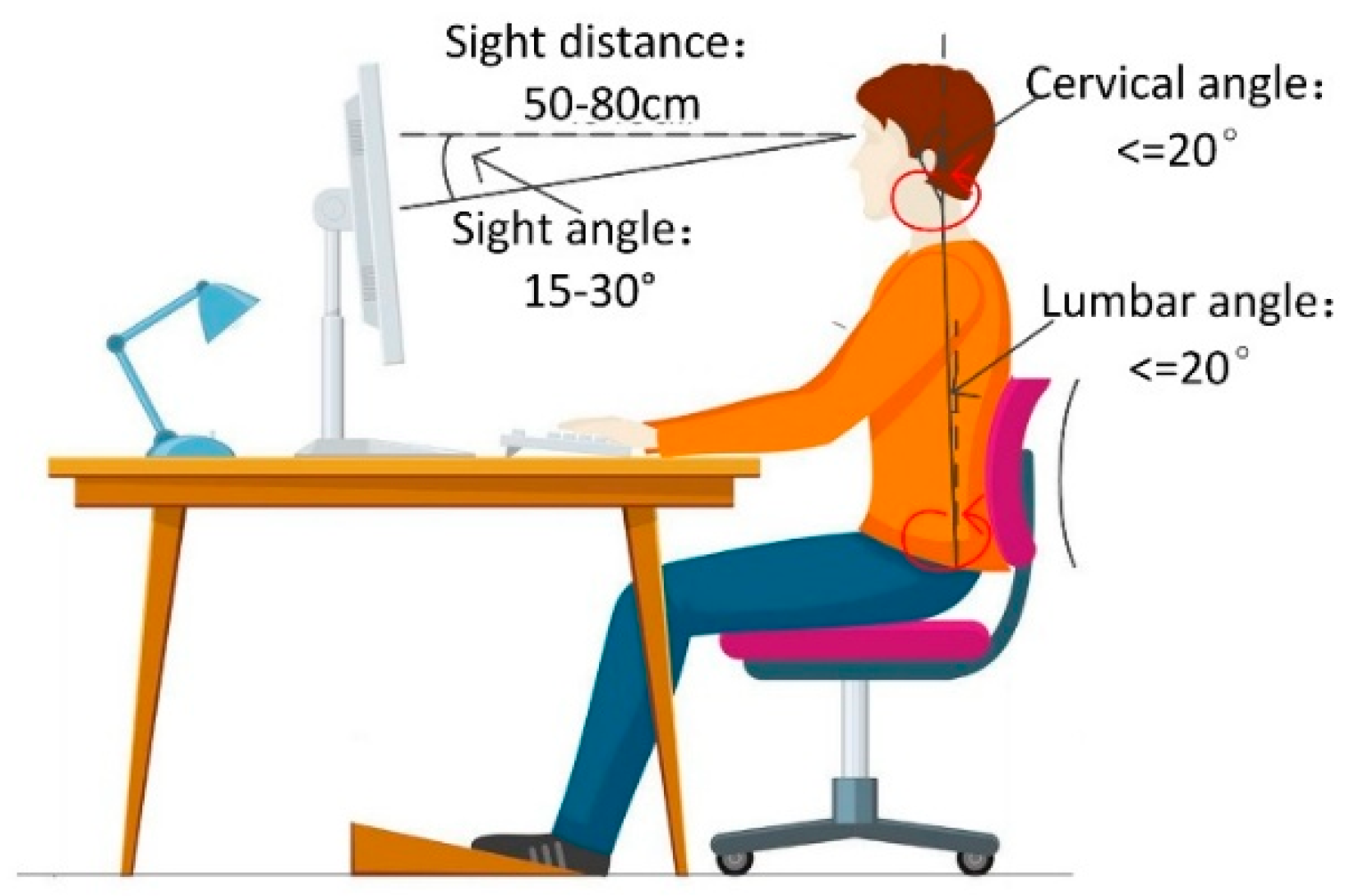

3.2.1. The Definition of Healthy Sitting Posture

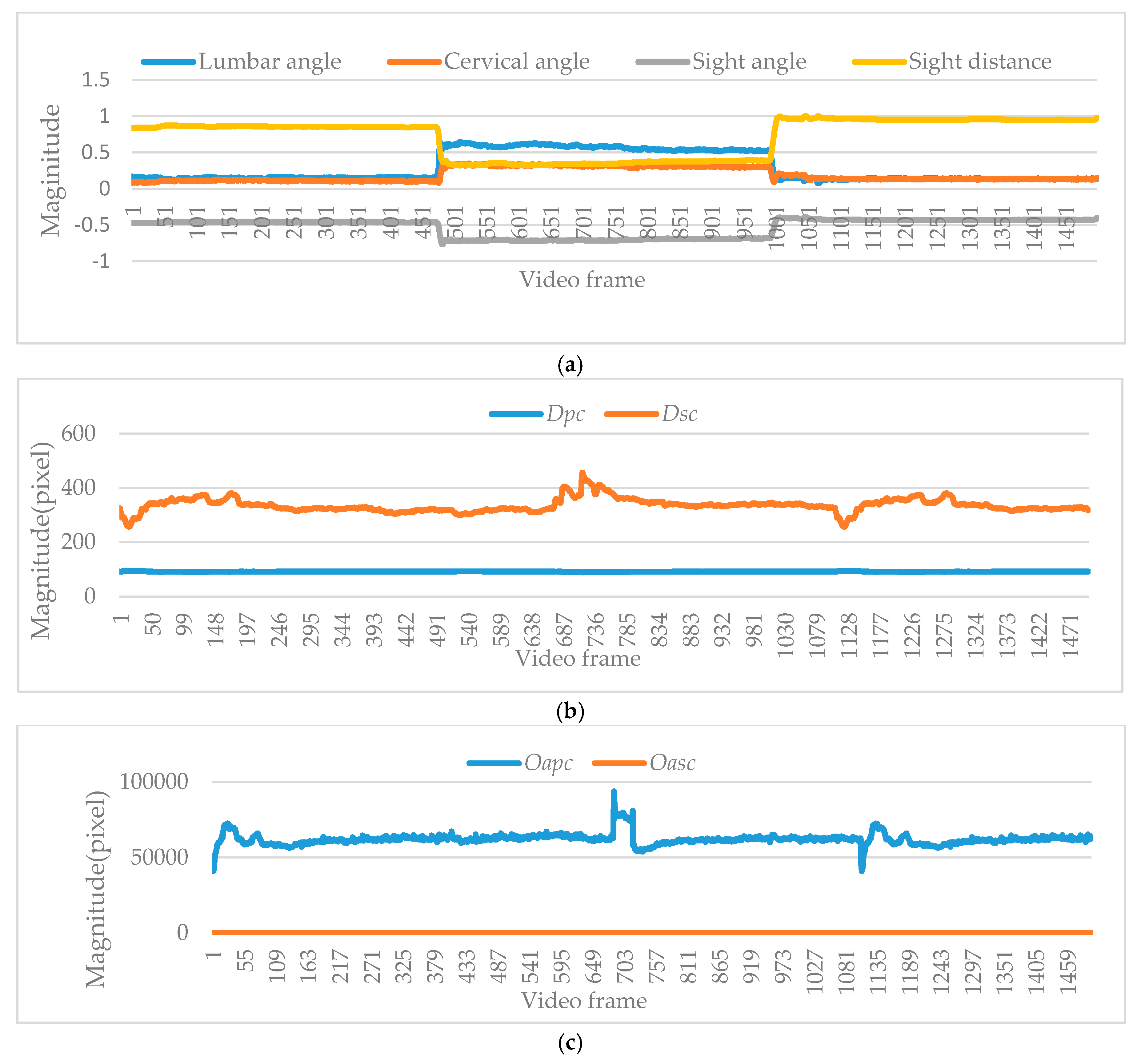

3.2.2. Semantic Feature Calculation

3.2.3. Semantic Generation using Gaussian-Mixture Clustering

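| Algorithm 1. The process of generating behavioral semantic clusters. |
|---|
| Input: the semantic features of all samples; the number of categories |
| Output: the behavioral semantic clusters |
| Repeat |
| for each sample do { |
| According to Bayes' theorem, calculate the posterior probability of the sample being generated by each Gaussian mixture component } |
| for each mixture component do { |
| Calculate the new mean vector |
| Calculate the new covariance matrix |
| Calculate the new mixture coefficient } |
| Update the semantic parameters (mean vectors, covariance matrices and mixture coefficients) |
| Until the optimal parameters are found |
| for each sample do { |
| According to the largest posterior probability, assign the sample to its behavioral semantic cluster |
| } |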
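Algorithm 1 follows the usual expectation-maximization loop for a Gaussian mixture. The sketch below is one possible NumPy rendering of those steps, written under assumptions rather than taken from the authors' implementation: the names `gaussian_pdf` and `gmm_cluster`, the diagonal covariance initialisation, the small regularisation term and the log-likelihood stopping rule are all illustrative choices.

```python
import numpy as np


def gaussian_pdf(X, mean, cov):
    """Multivariate normal density evaluated at every row of X."""
    d = X.shape[1]
    diff = X - mean
    inv = np.linalg.inv(cov)
    expo = -0.5 * np.einsum("ij,jk,ik->i", diff, inv, diff)
    norm = np.sqrt((2.0 * np.pi) ** d * np.linalg.det(cov))
    return np.exp(expo) / norm


def gmm_cluster(X, k, init_means=None, n_iter=200, tol=1e-6, seed=0):
    """Cluster the rows of X (samples x features) with a k-component mixture."""
    X = np.asarray(X, dtype=float)
    m, d = X.shape
    reg = 1e-6 * np.eye(d)  # assumed regulariser, keeps covariances invertible
    if init_means is None:
        rng = np.random.default_rng(seed)
        means = X[rng.choice(m, size=k, replace=False)].copy()
    else:
        means = np.asarray(init_means, dtype=float).copy()
    covs = np.array([np.diag(X.var(axis=0)) + reg for _ in range(k)])
    weights = np.full(k, 1.0 / k)
    prev_ll = -np.inf
    for _ in range(n_iter):
        # E-step: posterior probability of each component for each sample.
        dens = np.stack(
            [w * gaussian_pdf(X, mu, c) for w, mu, c in zip(weights, means, covs)],
            axis=1,
        )
        total = dens.sum(axis=1, keepdims=True)
        gamma = dens / total
        # M-step: new mean vector, covariance matrix and mixture coefficient.
        nk = gamma.sum(axis=0)
        means = (gamma.T @ X) / nk[:, None]
        for i in range(k):
            diff = X - means[i]
            covs[i] = (gamma[:, i, None] * diff).T @ diff / nk[i] + reg
        weights = nk / m
        # Stop when the log-likelihood no longer improves noticeably.
        ll = np.log(total).sum()
        if abs(ll - prev_ll) < tol:
            break
        prev_ll = ll
    # Each sample joins the cluster with the largest posterior probability.
    return gamma.argmax(axis=1), means, covs, weights
```

A call such as `labels, means, covs, weights = gmm_cluster(features, k)` returns one cluster index per row of `features`. Passing `init_means` makes the result reproducible, since EM only finds a local optimum and random starts can differ.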

3.2.4. Semantic Discrimination

4. Results

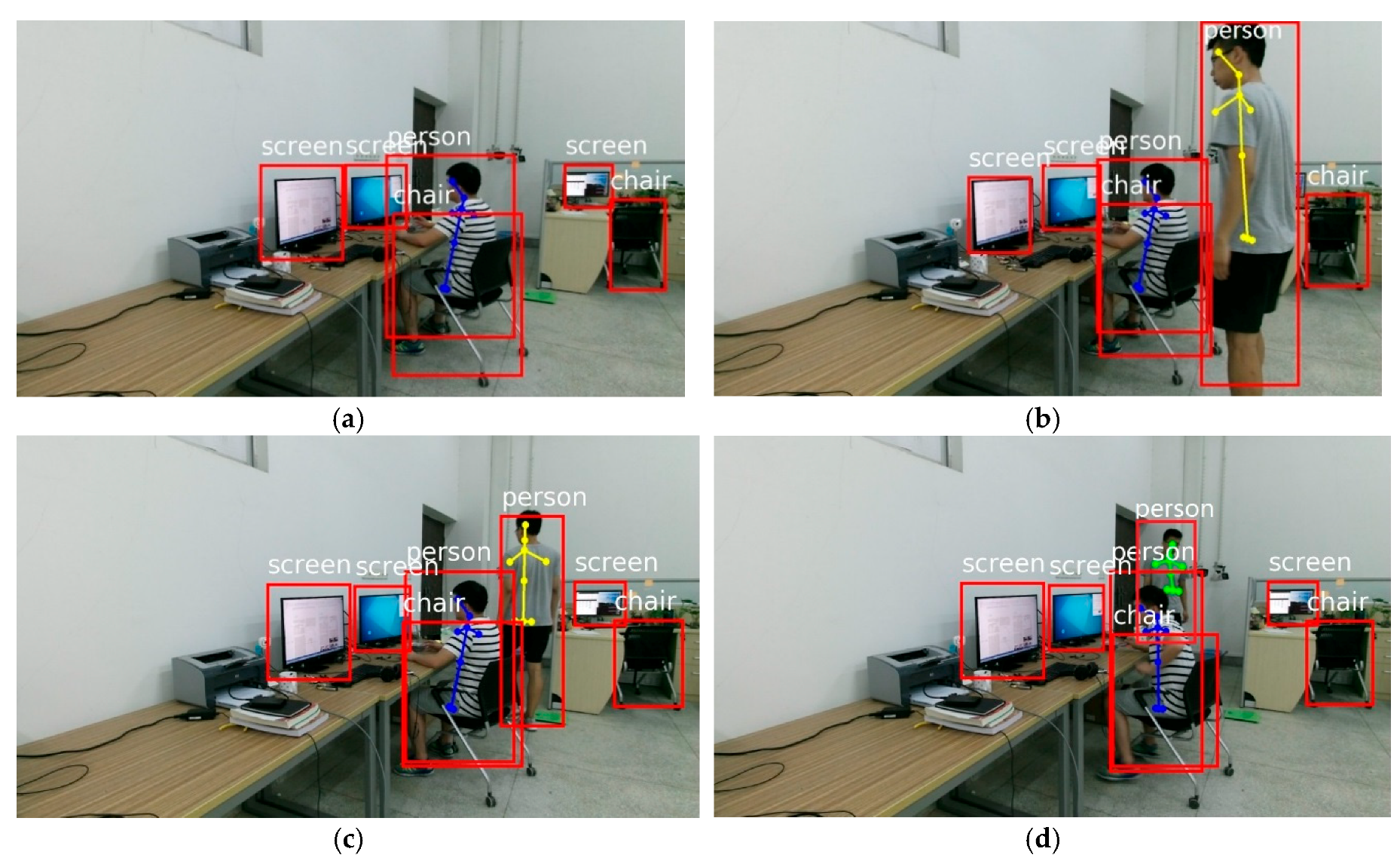

4.1. Self-Collected Test Dataset and Some Detection Results

- Healthy sitting posture as shown in Figure 10a. The lumbar angle and cervical angle are both less than 20°, the sight distance is about 80 cm, and the sight angle is between 15° and 30°.

- Leaning forward as shown in Figure 10b. When a person leans toward the screen to read it, the posture produces an excessive lumbar angle and cervical angle and a small sight distance.

- Holding the head as shown in Figure 10c. When a person rests his head on a hand propped on the desk, the leaning body results in an unhealthy sitting posture.

- Leaning backward as shown in Figure 10d. When a person leans back on the chair, the excessive lumbar angle, cervical angle and sight distance cause an unhealthy sitting posture.

- Bent over as shown in Figure 10e. When a person slumps over the desk, the excessive bending angle results in an unhealthy sitting posture.

- Looking up as shown in Figure 10f. Because the screen is placed too high or the chair is too low, looking up at the screen causes an unhealthy sitting posture.

- Body sideways as shown in Figure 10g. When a person leans on the side of the chair, the excessive lumbar angle and cervical angle cause an unhealthy sitting posture.

- Small sight distance as shown in Figure 10h. Here the eyes are too close to the screen.

- Holding the head in a complicated environment as shown in Figure 10i. A person is holding his head with a hand on the desk, and the scene contains multiple objects (i.e., multiple persons, multiple screens and multiple chairs).
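The numeric description of the healthy class in the list above can be written as a simple range check. This is only an illustration of that description, not the discrimination step of the method, which relies on Gaussian-mixture clustering. The class `PostureFeatures`, the function `matches_healthy_description` and the ±20 cm tolerance around the 80 cm sight distance are assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass
class PostureFeatures:
    """Four of the semantic features named in the text (hypothetical container)."""
    lumbar_angle_deg: float
    cervical_angle_deg: float
    sight_distance_cm: float
    sight_angle_deg: float


def matches_healthy_description(f, distance_tolerance_cm=20.0):
    """Return True when the features fall inside the healthy ranges listed above.

    The text says the sight distance is "about 80 cm"; the tolerance that turns
    this into an interval is an assumed value, not one given in the paper.
    """
    return (
        f.lumbar_angle_deg < 20.0
        and f.cervical_angle_deg < 20.0
        and abs(f.sight_distance_cm - 80.0) <= distance_tolerance_cm
        and 15.0 <= f.sight_angle_deg <= 30.0
    )
```

For example, `matches_healthy_description(PostureFeatures(10.0, 12.0, 80.0, 20.0))` is true, while a leaning-forward sample with a 35° lumbar angle and a 40 cm sight distance fails the check.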

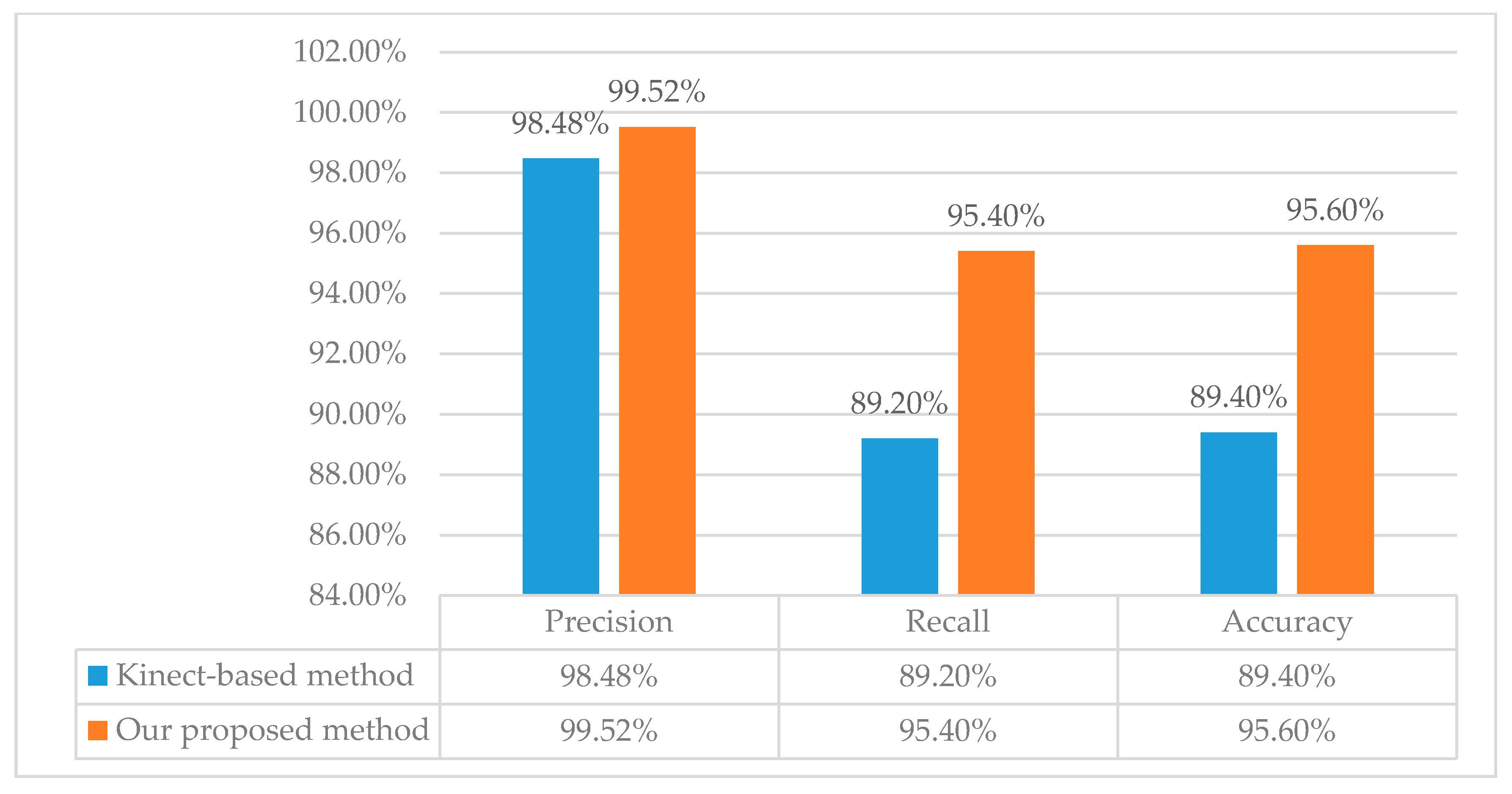

4.2. Quantitative Analysis

4.3. Qualitative Analysis

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Zhang, S.; Gao, C.; Zhang, J.; Chen, F.; Sang, N. Discriminative Part Selection for Human Action Recognition. IEEE Trans. Multimed. 2018, 20, 769–780.

2. Guo, S.; Xiong, H.; Zheng, X. Activity Recognition and Semantic Description for Indoor Mobile Localization. Sensors 2017, 17, 649.

3. Zhang, Y.; Pan, G.; Jia, K.; Lu, M.; Wang, Y.; Wu, Z. Accelerometer-Based Gait Recognition by Sparse Representation of Signature Points with Clusters. IEEE Trans. Cybern. 2015, 45, 1864–1875.

4. Luo, M.; Chang, X.; Nie, L.; Yang, Y.; Hauptmann, A.G.; Zheng, Q. An Adaptive Semisupervised Feature Analysis for Video Semantic Recognition. IEEE Trans. Cybern. 2018, 48, 648–660.

5. Liu, J.; Wang, G.; Hu, P.; Duan, L. Global Context-Aware Attention LSTM Networks for 3D Action Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3671–3680.

6. Hoogendoorn, W.E.; Bongers, P.M.; Vet, H.C. Flexion and Rotation of the Trunk and Lifting at Work are Risk Factors for Low Back Pain: Results of a Prospective Cohort Study. Spine 2014, 25, 3087–3092.

7. Chandna, S.; Wang, W. Bootstrap Averaging for Model-Based Source Separation in Reverberant Conditions. IEEE Trans. Audio Speech Lang. Process. 2018, 26, 806–819.

8. Lis, A.M.; Black, K.; Korn, H.; Nordin, M. Association between Sitting and Occupational LBP. Eur. Spine J. 2007, 16, 283–298.

9. O’Sullivan, P.B.; Grahamslaw, K.M.; Lapenskie, S.C. The Effect of Different Standing and Sitting Posture on Trunk Muscle Activity in a Pain-free Population. Spine 2002, 27, 1238–1244.

10. Straker, L.; Mekhora, K. An Evaluation of Visual Display Unit Placement by Electromyography, Posture, Discomfort and Preference. Int. J. Ind. Ergon. 2000, 26, 389–398.

11. Grandjean, E.; Hünting, W. Ergonomics of Posture-review of Various Problems of Standing and Sitting Posture. Appl. Ergon. 1977, 8, 135–140.

12. Meyer, J.; Arnrich, B.; Schumm, J.; Troster, G. Design and Modeling of a Textile Pressure Sensor for Sitting Posture Classification. IEEE Sens. J. 2010, 10, 1391–1398.

13. Mattmann, C.; Amft, O.; Harms, H.; Troster, G.; Clemens, F. Recognizing Upper Body Postures using Textile Strain Sensors. In Proceedings of the 11th IEEE International Symposium on Wearable Computers, Boston, MA, USA, 11–13 October 2007; pp. 29–36.

14. Ma, S.; Cho, W.H.; Quan, C.H.; Lee, S. A Sitting Posture Recognition System Based on 3 Axis Accelerometer. In Proceedings of the IEEE Conference on Computational Intelligence in Bioinformatics and Computational Biology (CIBCB), Chiang Mai, Thailand, 5–7 October 2016; pp. 1–3.

15. Ma, C.; Li, W.; Gravina, R.; Fortino, G. Posture Detection Based on Smart Cushion for Wheelchair Users. Sensors 2017, 17, 719.

16. Foubert, N.; McKee, A.M.; Goubran, R.A.; Knoefel, F. Lying and Sitting Posture Recognition and Transition Detection Using a Pressure Sensor Array. In Proceedings of the 2012 IEEE International Symposium on Medical Measurements and Applications Proceedings, Budapest, Hungary, 18–19 May 2012; pp. 1–6.

17. Liang, G.; Cao, J.; Liu, X. Smart Cushion: A Practical System for Fine-grained Sitting Posture Recognition. In Proceedings of the IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Kona, HI, USA, 13–17 March 2017; pp. 419–424.

18. Huang, Y.R.; Ouyang, X.F. Sitting Posture Detection and Recognition Using Force Sensor. In Proceedings of the 5th International Conference on BioMedical Engineering and Informatics, Chongqing, China, 16–18 October 2012; pp. 1117–1121.

19. Wu, S.-L.; Cui, R.-Y. Human Behavior Recognition Based on Sitting Postures. In Proceedings of the 2010 International Symposium on Computer, Communication, Control and Automation (3CA), Tainan, Taiwan, 5–7 May 2010; pp. 138–141.

20. Mu, L.; Li, K.; Wu, C. A Sitting Posture Surveillance System Based on Image Processing Technology. In Proceedings of the 2nd International Conference on Computer Engineering and Technology, Chengdu, China, 16–18 April 2010; pp. V1-692–V1-695.

21. Zhang, B.C.; Gao, Y.; Zhao, S. Local Derivative Pattern Versus Local Binary Pattern: Face Recognition with High-Order Local Pattern Descriptor. IEEE Trans. Image Process. 2009, 19, 533–544.

22. Zhang, B.C.; Yang, Y.; Chen, C.; Yang, L. Action Recognition Using 3D Histograms of Texture and a Multi-Class Boosting Classifier. IEEE Trans. Image Process. 2017, 26, 4648–4660.

23. Wang, W.J.; Chang, J.W.; Huang, S.F. Human Posture Recognition Based on Images Captured by the Kinect Sensor. Int. J. Adv. Robot. Syst. 2016, 13, 54.

24. Yao, L.; Min, W.; Cui, H. A New Kinect Approach to Judge Unhealthy Sitting Posture Based on Neck Angle and Torso Angle. In Proceedings of the International Conference on Image and Graphics (ICIG), Shanghai, China, 13–15 September 2017; Springer: Cham, Switzerland, 2017; pp. 340–350.

25. Tariqa, M.; Majeeda, H.; Omer, M.; Farrukh, B.; Khanb, A.; Derhabb, A. Accurate Detection of Sitting Posture Activities in a Secure IoT Based Assisted Living Environment. Future Gener. Comput. Syst. 2018, in press.

26. Zhang, B.C.; Perina, A.; Li, Z. Bounding Multiple Gaussians Uncertainty with Application to Object Tracking. Int. J. Comput. Vis. 2018, 27, 4357–4366.

27. Ponglangka, W.; Theera-Umpon, N.; Auephanwiriyakul, S. Eye-gaze Distance Estimation Based on Gray-level Intensity of Image Patch. In Proceedings of the International Symposium on Intelligent Signal Processing and Communications Systems (ISPACS), Chiang Mai, Thailand, 7–9 December 2011; pp. 1–5.

28. Zhang, B.C.; Luan, S.; Chen, C. Latent Constrained Correlation Filter. IEEE Trans. Image Process. 2018, 27, 1038–1048.

29. Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

30. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

31. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 24–27 June 2014; pp. 580–587.

32. He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

33. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 13–16 December 2015; pp. 1440–1448.

34. He, K.; Zhang, X.; Ren, S. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

35. Luan, S.; Zhang, B.C.; Zhou, S. Gabor Convolutional Networks. IEEE Trans. Image Process. 2018, 27, 4357–4366.

36. Everingham, M.; Zisserman, A.; Williams, C.K.I.; Van Gool, L.; Allan, M. The PASCAL Visual Object Classes Challenge 2007 (VOC2007) Results. Int. J. Comput. Vis. 2006, 111, 98–136.

37. Wasenmüller, O.; Stricker, D. Comparison of Kinect V1 and V2 Depth Images in Terms of Accuracy and Precision. In Proceedings of the Asian Conference on Computer Vision, Taipei, Taiwan, 20–24 November 2016; Springer: Cham, Switzerland, 2016; pp. 34–45.

38. McAtamney, L.; Corlett, E.N. RULA: A Survey Method for The Investigation of Work-related Upper Limb Disorders. Appl. Ergon. 1993, 24, 91–99.

39. Burgess-Limerick, R.; Plooy, A.; Ankrum, D.R. The Effect of Imposed and Self-selected Computer Monitor Height on Posture and Gaze Angle. Clin. Biomech. 1998, 13, 584–592.

40. Springer, T.J. VDT Workstations: A Comparative Evaluation of Alternatives. Appl. Ergon. 1982, 13, 211–212.

41. Shikdar, A.A.; Al-Kindi, M.A. Office Ergonomics: Deficiencies in Computer Workstation Design. Int. J. Occup. Saf. Ergon. 2007, 13, 215–223.

42. Min, W.; Cui, H.; Rao, H.; Li, Z.; Yao, L. Detection of Human Falls on Furniture Using Scene Analysis Based on Deep Learning and Activity Characteristics. IEEE Access 2018, 6, 9324–9335.

| Method | Device | Model | Region Proposal | Test Time (Region Proposal + Detection) |
|---|---|---|---|---|
| R-CNN | CPU & GPU | Multi-ConvNet, Multi-Classifier | Selective Search | 2 s + 47 s |
| SPP-net | CPU & GPU | Multi-ConvNet, SPP Pooling | Selective Search | 2 s + 2.3 s |
| Fast R-CNN | CPU & GPU | Shared ConvNet, RoI Pooling | Selective Search | 2 s + 0.32 s |
| Faster R-CNN | GPU | End-to-End, Shared ConvNet, RoI Pooling | Region Proposal Network | 0.01 s + 0.2 s |

| Object | Real Result | Detection Result: Positive | Detection Result: Negative | Recall | Precision | Accuracy |
|---|---|---|---|---|---|---|
| Person | TRUE | 2562 | 817 | 93.33% | 85.40% | 84.48% |
| | FALSE | 438 | 183 | | | |
| Chair | TRUE | 2151 | 693 | 87.51% | 71.70% | 71.60% |
| | FALSE | 849 | 307 | | | |
| Screen | TRUE | 2212 | 759 | 90.18% | 73.73% | 74.28% |
| | FALSE | 788 | 241 | | | |
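The recall, precision and accuracy columns can be recomputed from the four counts of each object. The sketch below assumes the TRUE row holds true positives and true negatives and the FALSE row holds false positives and false negatives; that reading is an inference from the table, since it reproduces the recall and precision printed for all three objects. The function name `detection_metrics` is illustrative.

```python
def detection_metrics(tp, tn, fp, fn):
    """Return (recall, precision, accuracy) from the four confusion counts."""
    recall = tp / (tp + fn)        # share of real objects that were detected
    precision = tp / (tp + fp)     # share of detections that were correct
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    return recall, precision, accuracy


# Counts for the "Person" rows of the table, under the cell mapping assumed above.
person_recall, person_precision, person_accuracy = detection_metrics(
    tp=2562, tn=817, fp=438, fn=183
)
print(f"recall={person_recall:.4f} "
      f"precision={person_precision:.4f} "
      f"accuracy={person_accuracy:.4f}")
```

The same call with the Chair or Screen counts gives the remaining rows.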

| Different Sitting Postures | Total Number of Videos | Detected as Unhealthy: Kinect-Based Method Using Neck Angle and Torso Angle [24] | Detected as Unhealthy: Our Proposed Method |
|---|---|---|---|
| Healthy sitting posture | 65 | 6 | 2 |
| Lean forward | 65 | 59 | 62 |
| Hold head | 60 | 57 | 57 |
| Lean backward | 60 | 52 | 56 |
| Bend over | 60 | 58 | 58 |
| Looking up | 60 | 49 | 57 |
| Body side | 65 | 59 | 63 |
| Too small sight distance | 65 | 54 | 62 |

| Method | Features | Classifier | Dataset | TP | TN | FP | FN |
|---|---|---|---|---|---|---|---|
| Kinect-based method [24] | Torso angle, Neck angle | Threshold | Self-collected dataset (435 positive samples and 65 negative samples) | 388 | 59 | 6 | 47 |
| Our proposed method | Lumbar angle, Cervical angle, Sight angle, Sight distance, Spatial distance, Overlapping area | Scene Recognition using Faster R-CNN and Semantic Analysis using Gaussian-Mixture Model | Self-collected dataset (435 positive samples and 65 negative samples) | 415 | 63 | 2 | 20 |
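The TP, TN, FP and FN counts above follow from the per-posture table that lists, for each posture, the number of videos and the number detected as unhealthy. The sketch below shows that bookkeeping, treating the healthy class as the negative class and every other posture as positive. The dictionary layout and the helper name `aggregate` are illustrative, and the overall accuracy it derives is simple arithmetic on the table rather than a figure quoted from the text.

```python
# posture -> (videos, detected as unhealthy by [24], detected as unhealthy by ours)
ROWS = {
    "Healthy sitting posture": (65, 6, 2),
    "Lean forward": (65, 59, 62),
    "Hold head": (60, 57, 57),
    "Lean backward": (60, 52, 56),
    "Bend over": (60, 58, 58),
    "Looking up": (60, 49, 57),
    "Body side": (65, 59, 63),
    "Too small sight distance": (65, 54, 62),
}


def aggregate(rows, column, negative_class="Healthy sitting posture"):
    """Sum per-posture detections into (tp, tn, fp, fn) for one method column."""
    tp = tn = fp = fn = 0
    for posture, counts in rows.items():
        total, detected = counts[0], counts[column]
        if posture == negative_class:
            fp += detected           # healthy video flagged as unhealthy
            tn += total - detected   # healthy video left unflagged
        else:
            tp += detected           # unhealthy video flagged
            fn += total - detected   # unhealthy video missed
    return tp, tn, fp, fn


for name, column in (("Kinect-based method [24]", 1), ("Our proposed method", 2)):
    tp, tn, fp, fn = aggregate(ROWS, column)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    print(name, (tp, tn, fp, fn), f"accuracy={accuracy:.3f}")
```

Running it gives (388, 59, 6, 47) for the Kinect-based method and (415, 63, 2, 20) for the proposed method, matching the table rows.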

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Min, W.; Cui, H.; Han, Q.; Zou, F. A Scene Recognition and Semantic Analysis Approach to Unhealthy Sitting Posture Detection during Screen-Reading. Sensors 2018, 18, 3119. https://doi.org/10.3390/s18093119
