A Robust Method for Detecting Parking Areas in Both Indoor and Outdoor Environments

Abstract

1. Introduction

2. Related Work

- (i) A method for calibrating the surrounding cameras to form a bird's-eye view of the environment around the vehicle;

- (ii)

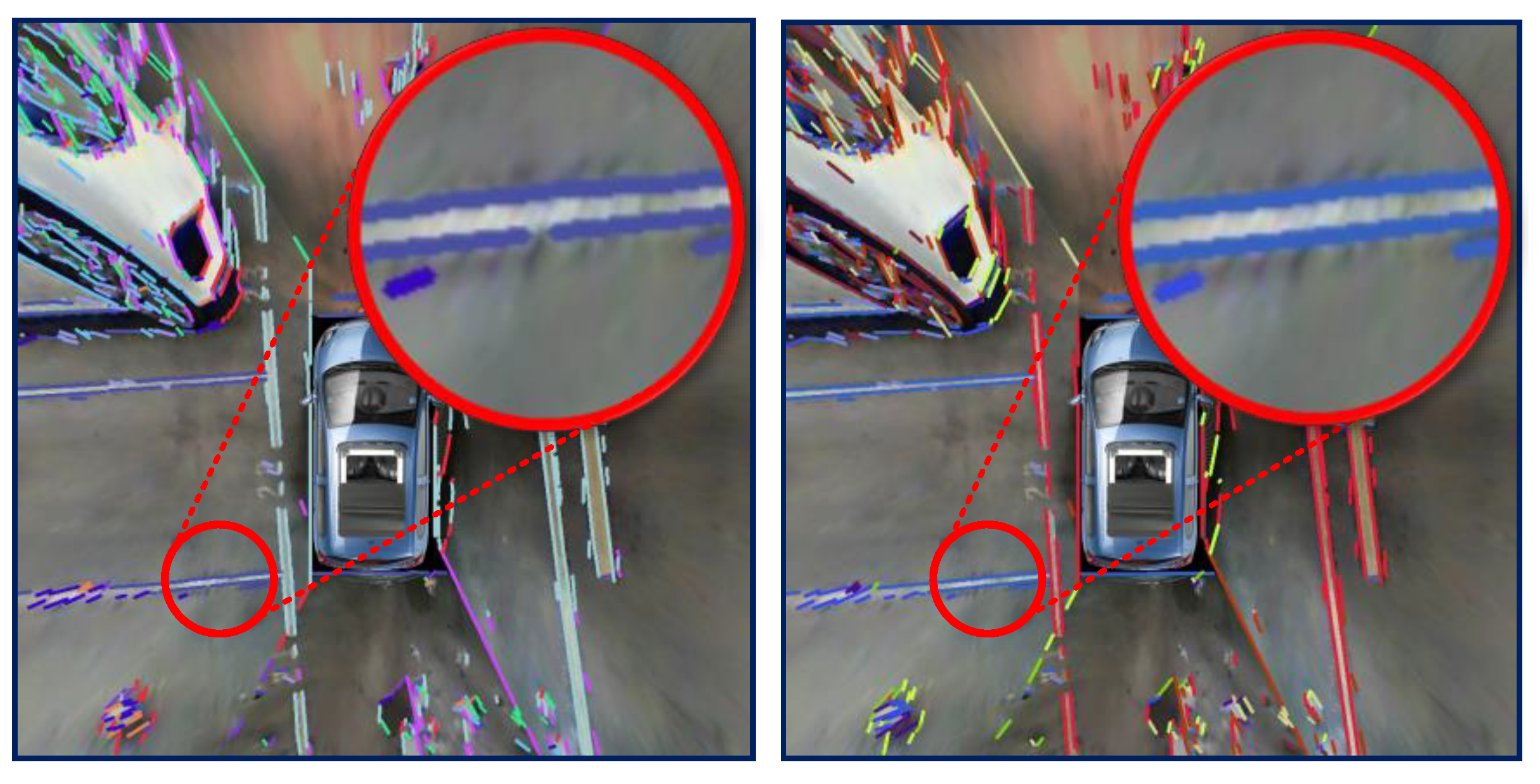

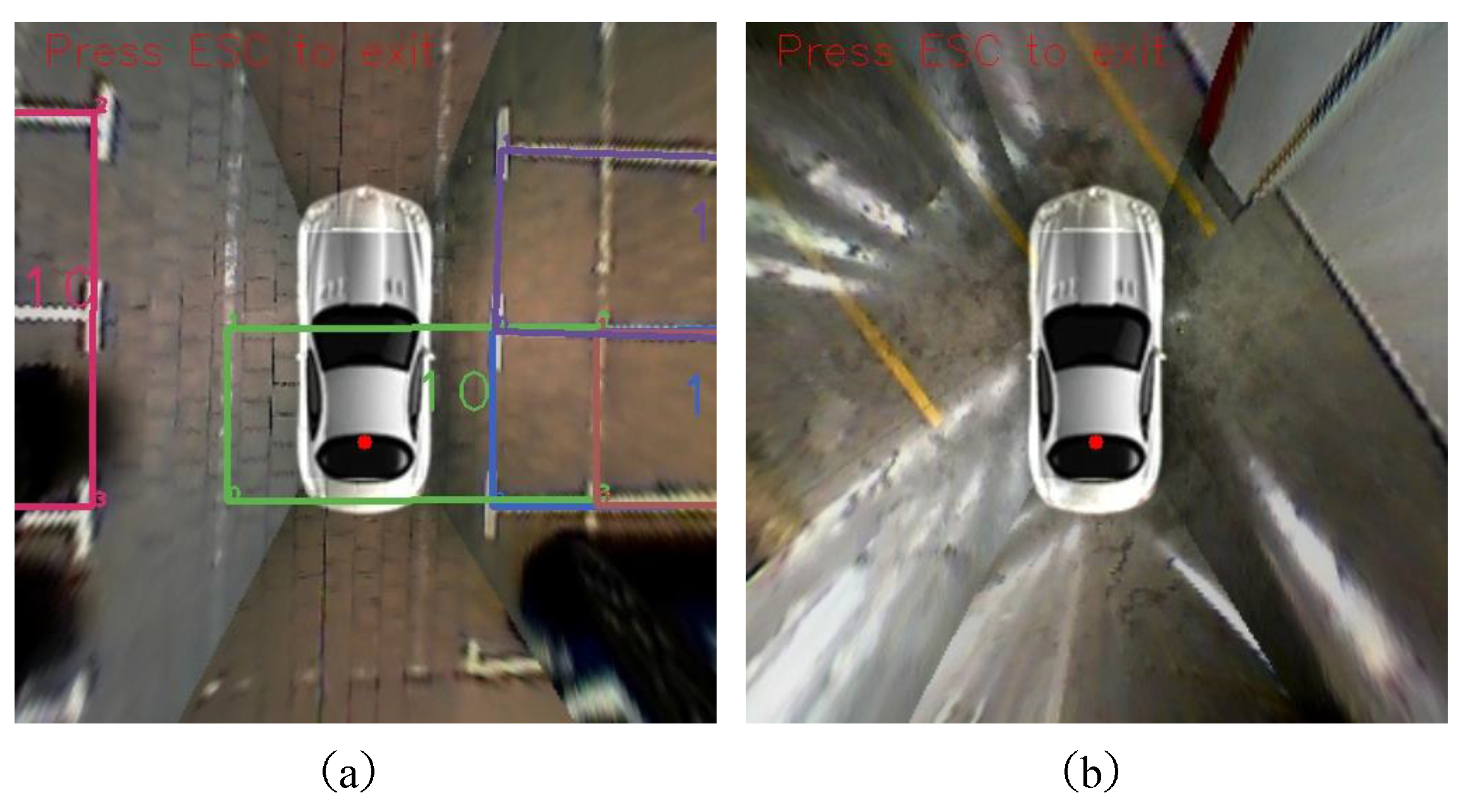

- Due to the severe change of the color and luminance caused by reflection of the ground in garages, it is very hard to segment the image using RGB color.

- (iii) Because indoor and outdoor parking-lot environments differ greatly, it is very hard to train a learning-based classifier or to match against a template. For example, the parking-area lines can be any color that is bright relative to the ground; the ground material and texture may differ greatly from one parking lot to another; and shadows on the ground degrade training accuracy.

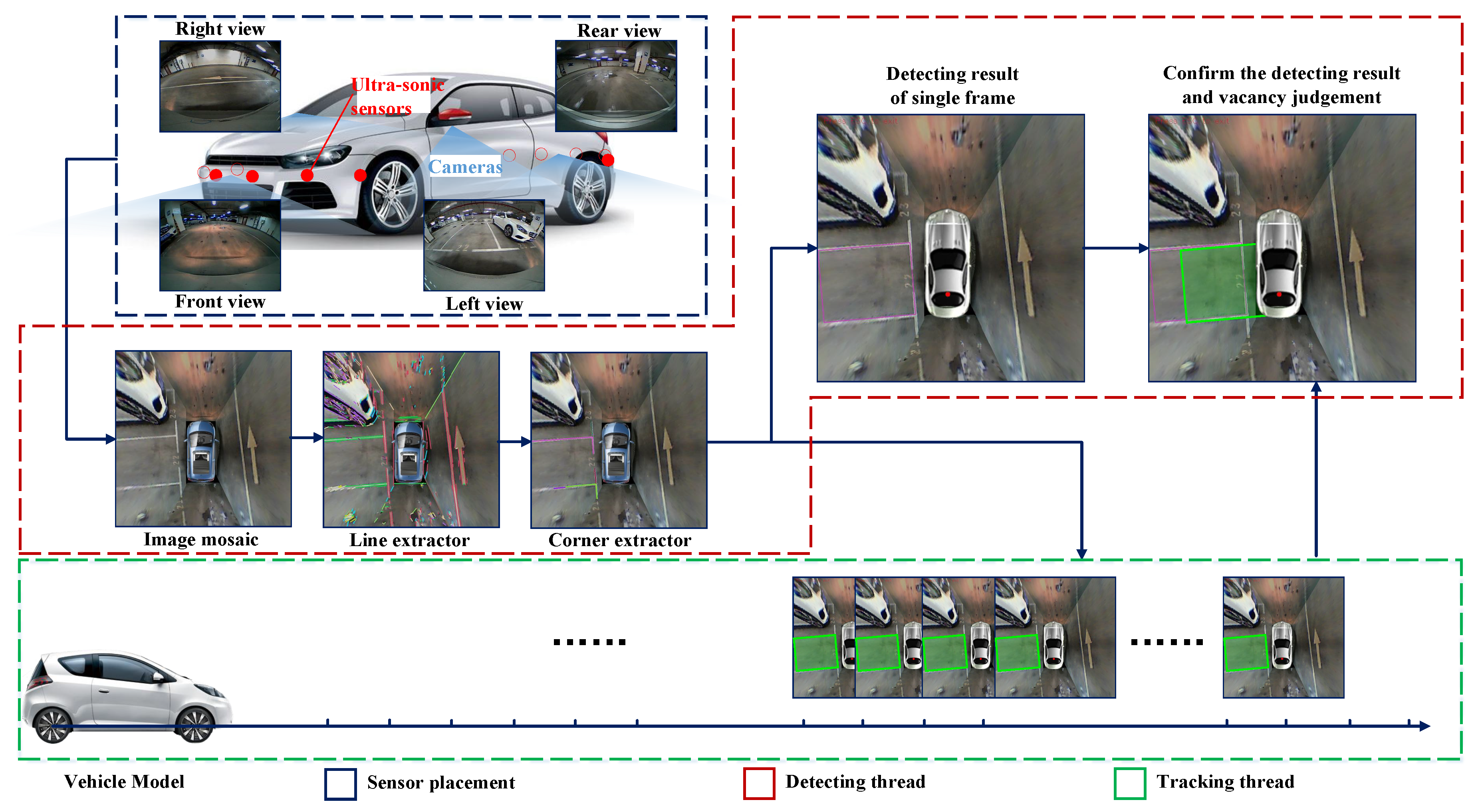

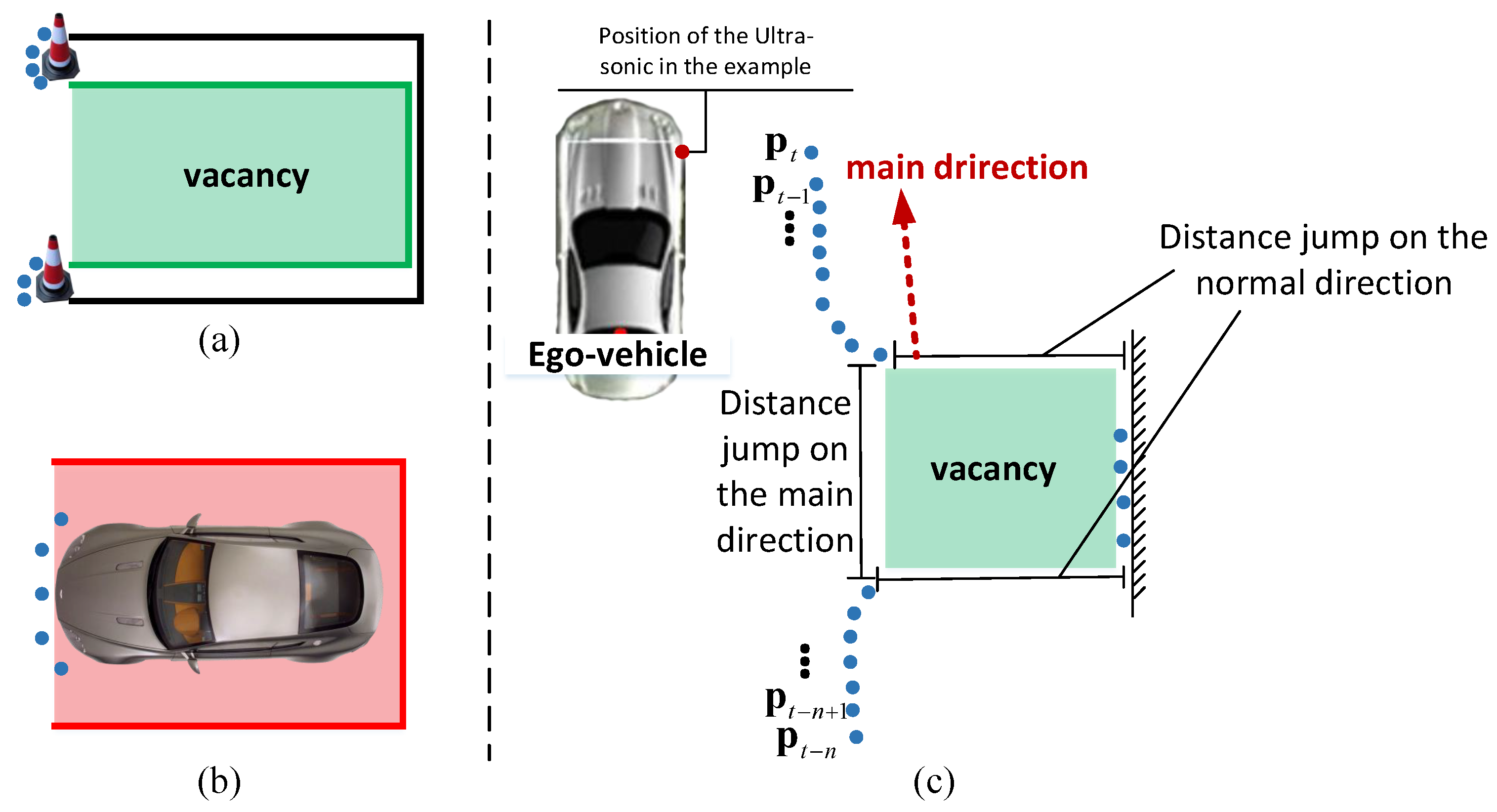

3. System Overview

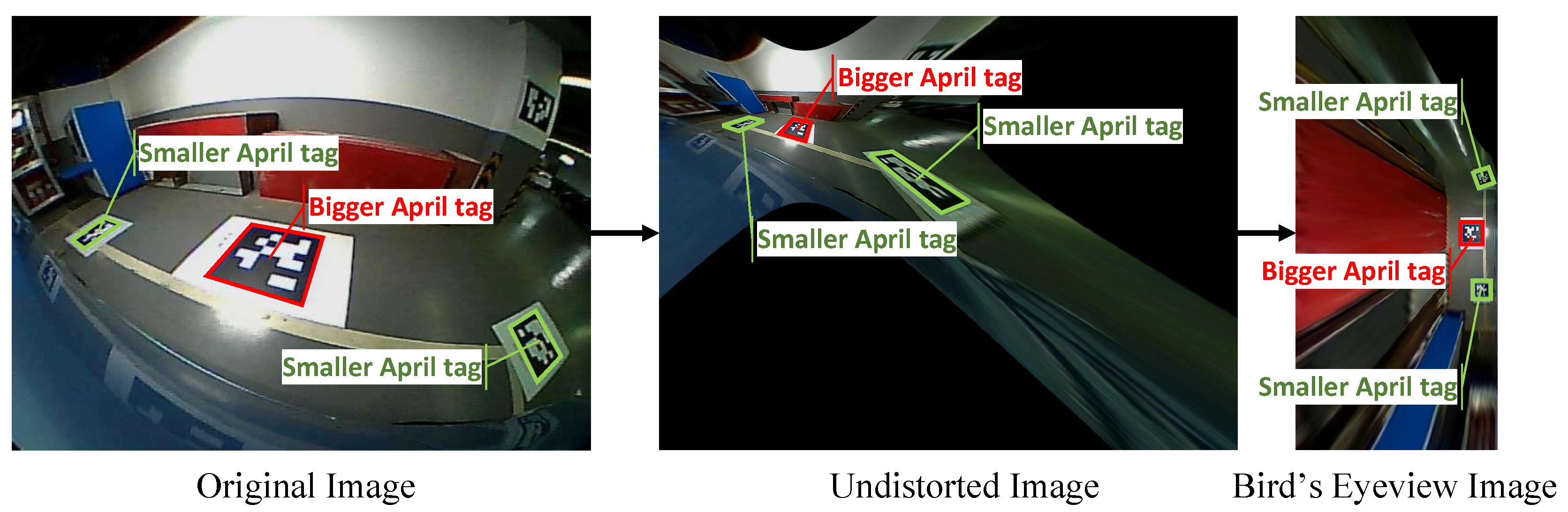

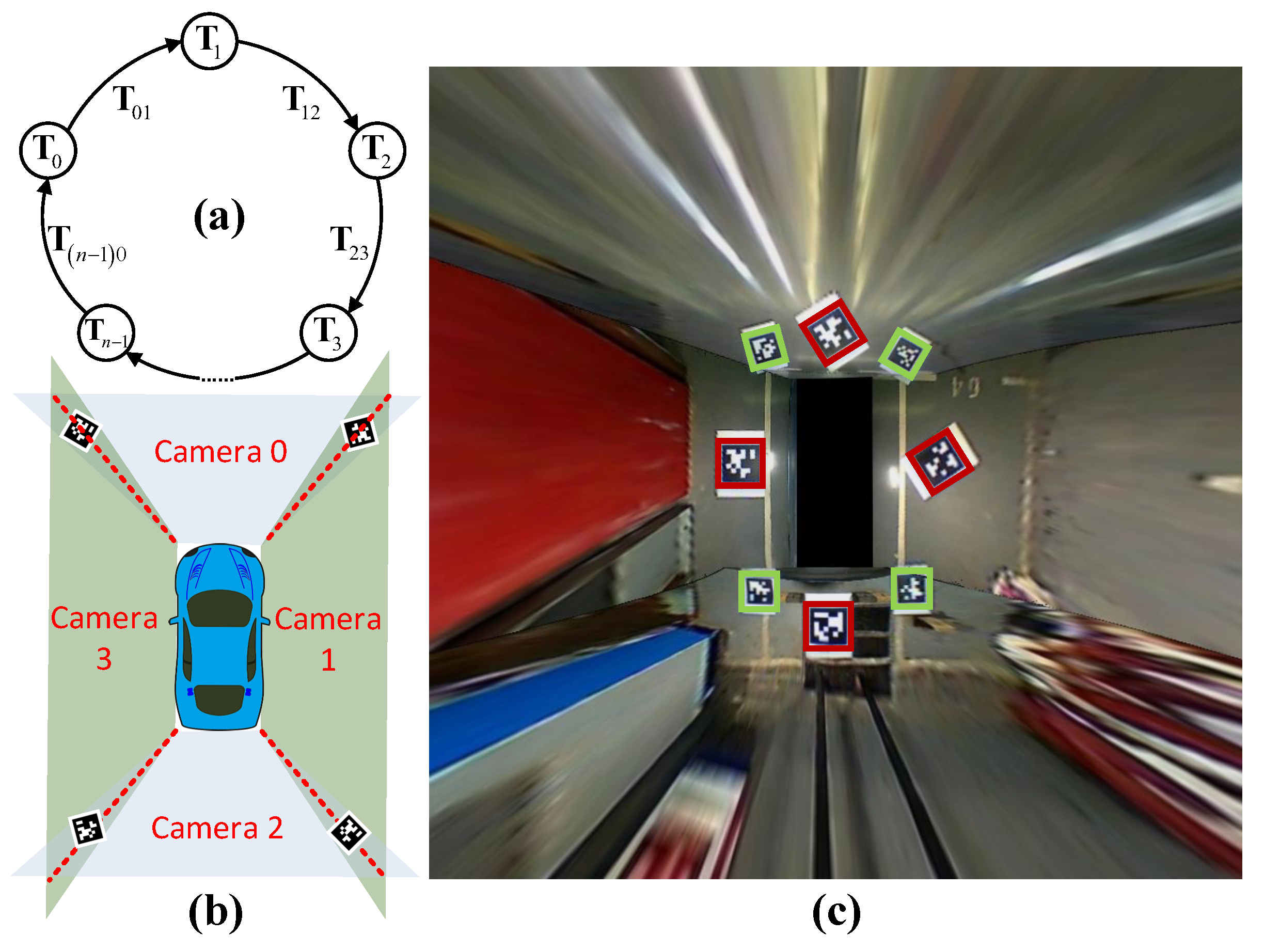

4. Surrounding Camera Image Stitching

4.1. AprilTag

4.2. Image Stitching

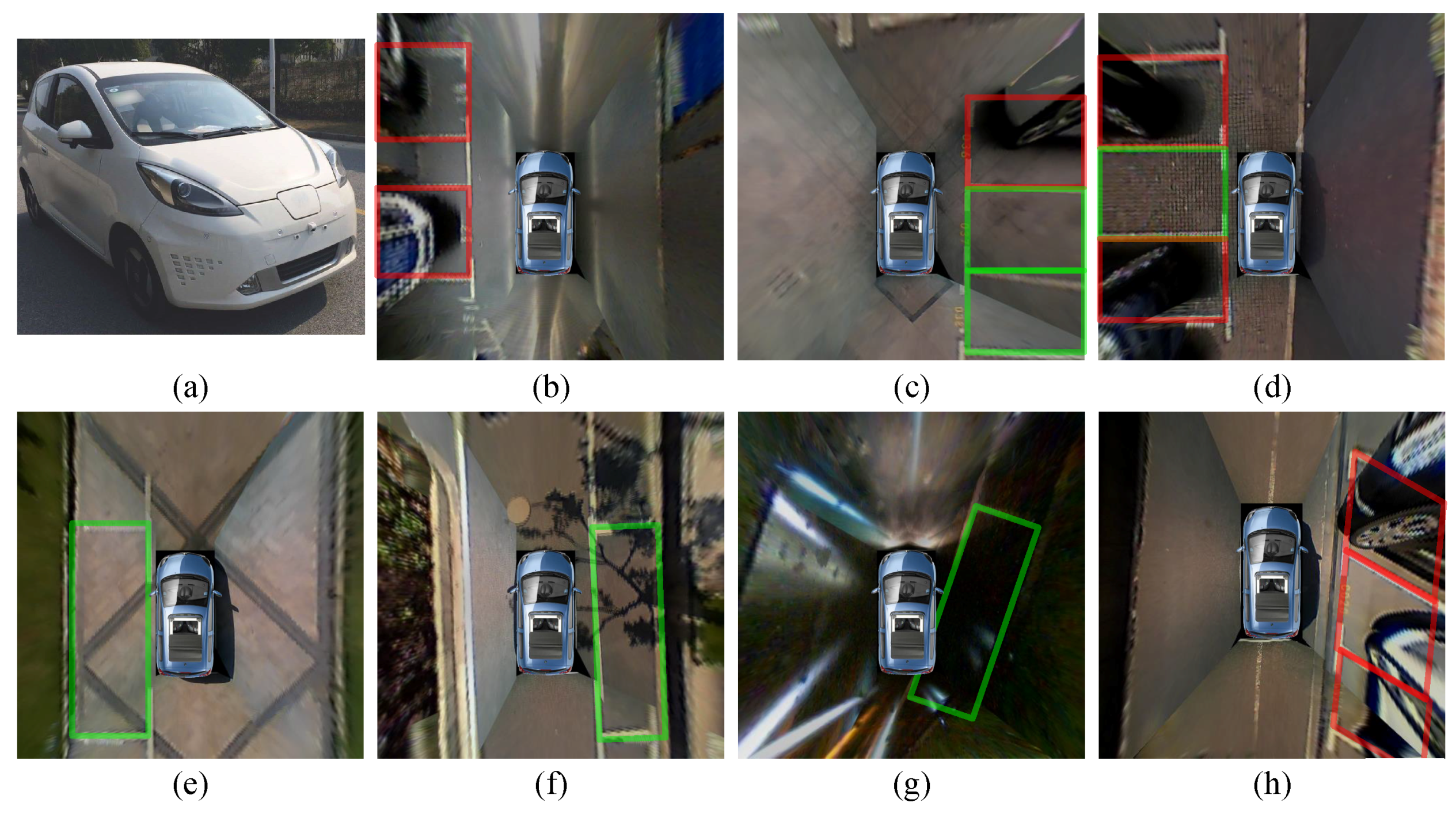
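The text of Section 4 is not reproduced in this version. As a hedged illustration of the geometry behind a bird's-eye view: once a camera's fisheye distortion has been removed, points on the flat ground plane in its image are related to their top-down positions by a 3×3 homography, and four or more known correspondences (for example, the corners of a fiducial tag laid on the floor) are enough to estimate it. The sketch below uses the standard direct linear transform (DLT) with NumPy; `estimate_homography` and `apply_homography` are hypothetical names, and this is not claimed to be the authors' exact calibration procedure.

```python
import numpy as np


def estimate_homography(src, dst):
    """Estimate a 3x3 homography H with dst ~ H @ src via the DLT.

    src: N x 2 undistorted image points of ground-plane features (N >= 4).
    dst: N x 2 target positions of the same features in the bird's-eye image.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    if src.shape != dst.shape or src.ndim != 2 or src.shape[1] != 2 or src.shape[0] < 4:
        raise ValueError("need at least 4 matching 2D point pairs")
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence gives two linear constraints on the 9 entries of H.
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    a = np.array(rows)
    # The least-squares solution is the right singular vector
    # belonging to the smallest singular value.
    _, _, vt = np.linalg.svd(a)
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]  # fix the projective scale so that H[2, 2] == 1


def apply_homography(h, pts):
    """Map N x 2 points through H and divide out the homogeneous coordinate."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ h.T
    return mapped[:, :2] / mapped[:, 2:3]


# Example: four tag corners seen in one camera -> a 50 x 50 px square in the top view.
image_pts = [(100, 200), (300, 210), (320, 400), (80, 390)]
ground_pts = [(0, 0), (50, 0), (50, 50), (0, 50)]
H = estimate_homography(image_pts, ground_pts)
```

Each camera would get its own H, and the warped images would then be blended into one surround view; in practice OpenCV's `cv2.findHomography` and `cv2.warpPerspective` cover the estimation and warping steps.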

5. Parking Space Detection in a Single Frame

5.1. Line Extractor

5.2. L-Shaped Corner Extractor

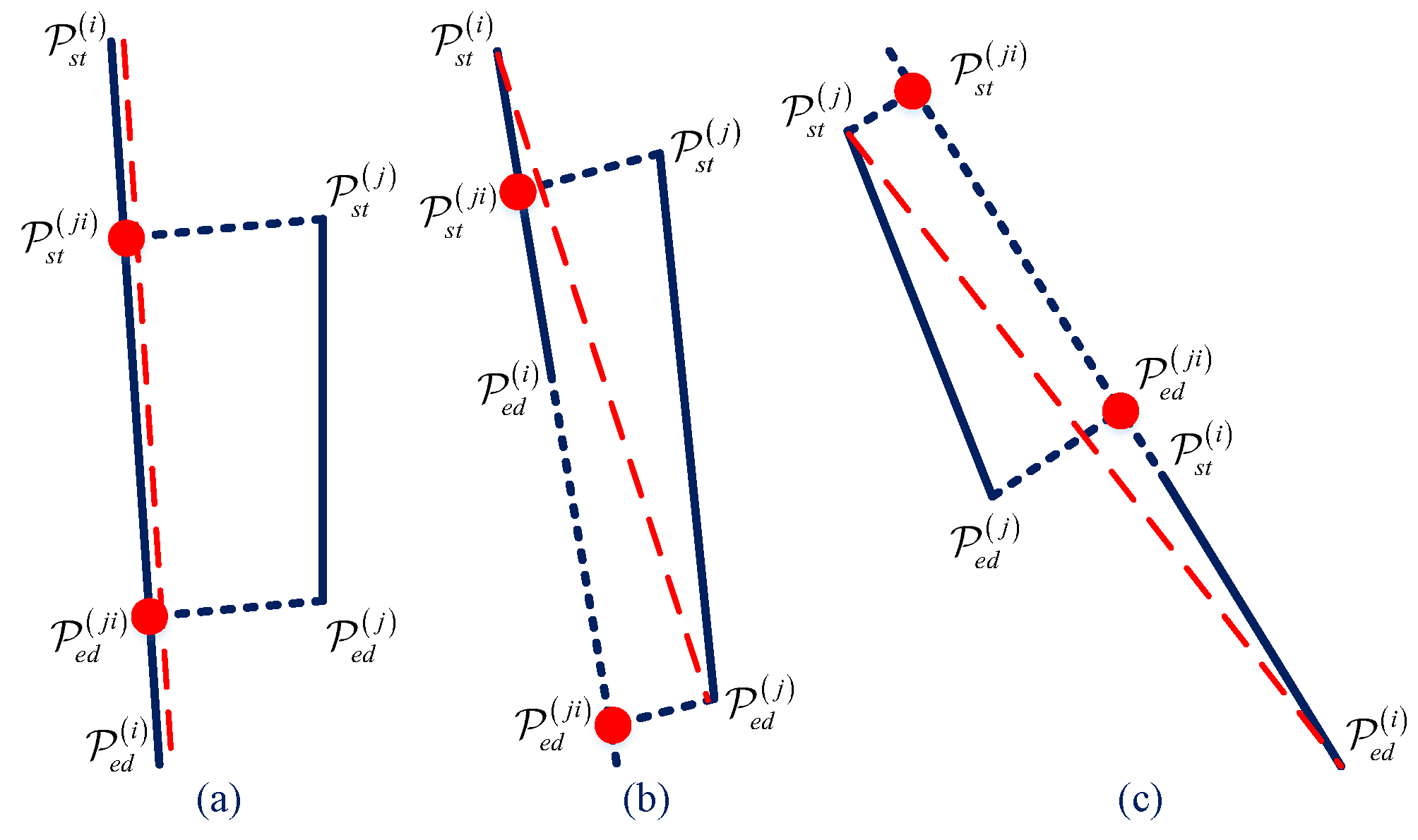

- In situation (a), and . If , judging whether , make an L-shaped tuple , where is the minimum length of one parking side. This value is usually set smaller than the actual side length, because not all four sides of a parking area are closed. stands for the maximum gap tolerance from the intersection point to the nearest end point of .

- Situation (b) is similar to (a).

- In situation (c), and . If , add a new tuple to the L-shaped set.

- In situation (d), and . The distance from to each end point of and must be calculated. If and , add a new tuple to the L-shaped set.
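The exact symbols in the conditions above were lost in extraction. Based only on what survives (a minimum side length, a maximum gap from the intersection point to the nearest end point, and an angle tolerance listed in the parameter table), a minimal sketch of a situation (a)-style corner test could look like the following. All function names are hypothetical; the 10° angle tolerance is an assumption because its value is missing, and the 10 px gap and 15 px length defaults are borrowed, as an assumption, from the parameter table's "maximum distance for treating two lines as intersecting" and "minimum length of a valid LSD line" entries.

```python
import math
from typing import Optional, Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point]


def seg_length(seg: Segment) -> float:
    (x1, y1), (x2, y2) = seg
    return math.hypot(x2 - x1, y2 - y1)


def undirected_angle_deg(a: Segment, b: Segment) -> float:
    """Angle between the two segment directions, folded into [0, 90] degrees."""
    (x1, y1), (x2, y2) = a
    (x3, y3), (x4, y4) = b
    diff = abs(math.degrees(math.atan2(y2 - y1, x2 - x1) - math.atan2(y4 - y3, x4 - x3))) % 180.0
    return min(diff, 180.0 - diff)


def line_intersection(a: Segment, b: Segment) -> Optional[Point]:
    """Intersection of the infinite lines through a and b; None if parallel."""
    (x1, y1), (x2, y2) = a
    (x3, y3), (x4, y4) = b
    denom = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    if abs(denom) < 1e-12:
        return None
    det_a = x1 * y2 - y1 * x2
    det_b = x3 * y4 - y3 * x4
    px = (det_a * (x3 - x4) - (x1 - x2) * det_b) / denom
    py = (det_a * (y3 - y4) - (y1 - y2) * det_b) / denom
    return (px, py)


def make_l_tuple(a: Segment, b: Segment, min_len: float = 15.0,
                 max_gap: float = 10.0, angle_tol_deg: float = 10.0):
    """Return (corner, a, b) if a and b form an L-shaped corner, else None."""
    if seg_length(a) < min_len or seg_length(b) < min_len:
        return None  # too short to be a parking-area side
    if abs(undirected_angle_deg(a, b) - 90.0) > angle_tol_deg:
        return None  # not close enough to perpendicular
    corner = line_intersection(a, b)
    if corner is None:
        return None
    for seg in (a, b):
        # The corner must lie within max_gap of one end point of each segment.
        if min(math.dist(corner, end) for end in seg) > max_gap:
            return None
    return (corner, a, b)
```

The end-point gap test is what lets a corner be accepted even when the painted lines do not quite touch; situations (c) and (d), where the intersection falls inside a segment, would need a different distance test.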

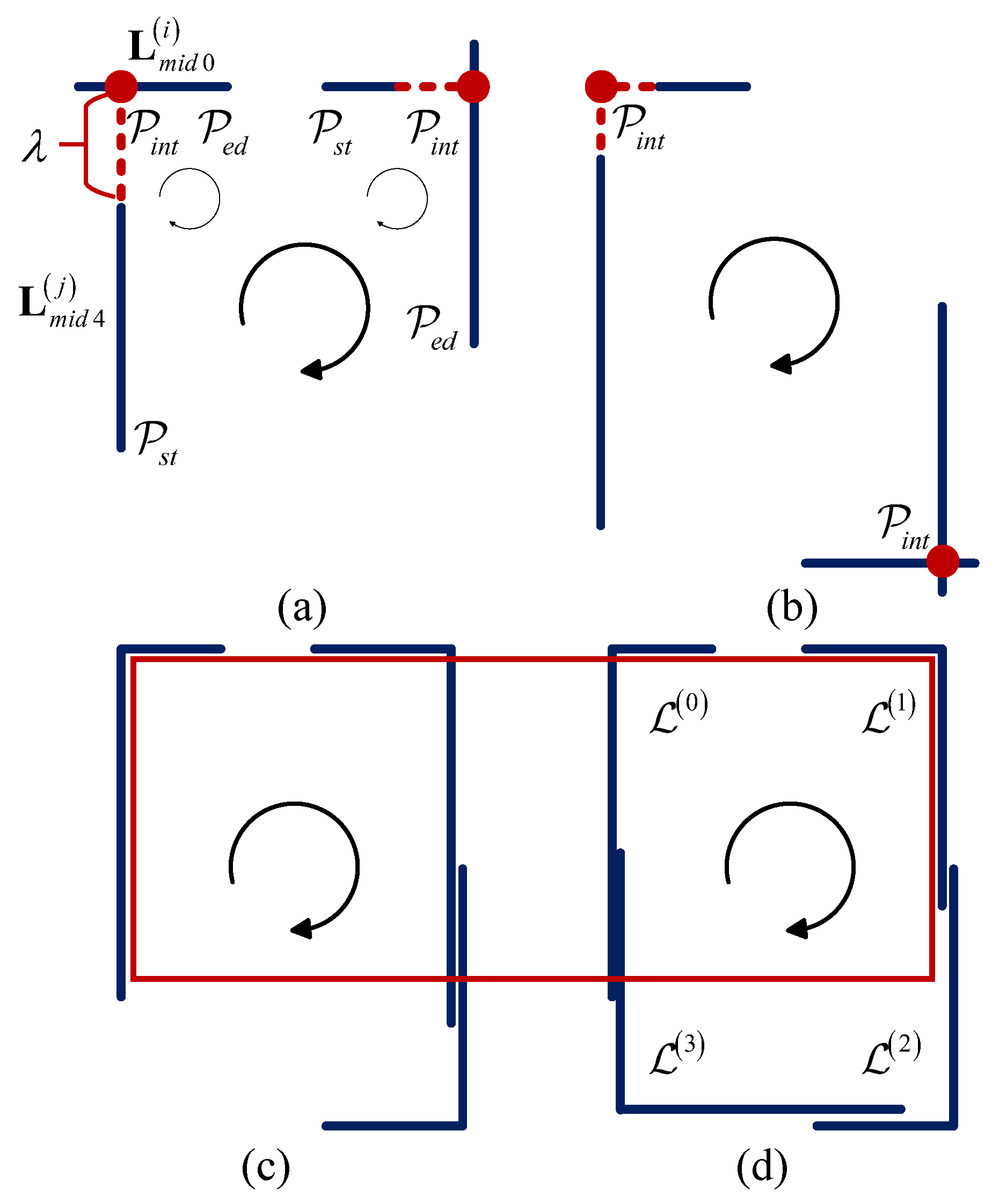

5.3. Candidate Parking Area Searching Method

- Adjacent situation

- Opposite situation
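Any step that pairs L-shaped corners into a candidate parking area needs, at minimum, to check whether the distance between two corners is a plausible side length. The sketch below tests a distance against the width and length ranges given in the parameter table for vertical and parallel parking areas. It assumes corner coordinates have already been converted from bird's-eye pixels to metres; the function names are hypothetical, and how the authors combine this test with the adjacent and opposite situations is not recoverable from this text.

```python
import math
from typing import Dict, List, Tuple

# Width and length ranges in metres, copied from the parameter table.
SIDE_RANGES: Dict[str, Dict[str, Tuple[float, float]]] = {
    "vertical": {"width": (2.2, 3.5), "length": (5.1, 6.5)},
    "parallel": {"width": (2.1, 2.7), "length": (5.3, 7.0)},
}


def matching_sides(distance_m: float) -> List[str]:
    """List every 'type side' label whose [min, max] range contains distance_m."""
    hits = []
    for kind, sides in SIDE_RANGES.items():
        for side, (lo, hi) in sides.items():
            if lo <= distance_m <= hi:
                hits.append(f"{kind} {side}")
    return hits


def corner_pair_sides(p: Tuple[float, float], q: Tuple[float, float]) -> List[str]:
    """Classify the distance between two corner points given in metres."""
    return matching_sides(math.dist(p, q))
```

The ranges overlap (2.5 m fits both the vertical and the parallel width), so distance alone cannot settle the parking type.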

Algorithm 1: Parking space search.

Algorithm 2: IsNewTempPkSp(L, , n).
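The body and exact arguments of Algorithm 2 are not recoverable. In its spirit, and using only the parameter table's "maximum point distance for treating two parking areas as the same" (0.7 m), a minimal sketch of a new-candidate test could compare a candidate's four corners against every stored candidate. The names `same_space` and `is_new_space` are hypothetical, corners are assumed to be in metres and listed in order around the boundary, and any starting corner or traversal direction is accepted.

```python
import math
from typing import Iterator, List, Sequence, Tuple

Point = Tuple[float, float]
Space = Sequence[Point]  # four corners in metres, listed around the boundary

SAME_SPACE_DIST_M = 0.7  # from the parameter table


def _orderings(corners: Space) -> Iterator[List[Point]]:
    """All starting corners and both traversal directions of a closed boundary."""
    pts = list(corners)
    for seq in (pts, pts[::-1]):
        for k in range(len(seq)):
            yield seq[k:] + seq[:k]


def same_space(a: Space, b: Space, tol: float = SAME_SPACE_DIST_M) -> bool:
    """True if, for some corner correspondence, every corner pair is within tol."""
    return any(
        all(math.dist(p, q) <= tol for p, q in zip(a, order))
        for order in _orderings(b)
    )


def is_new_space(candidate: Space, known: List[Space],
                 tol: float = SAME_SPACE_DIST_M) -> bool:
    """A candidate is new if it matches none of the stored parking spaces."""
    return not any(same_space(candidate, s, tol) for s in known)
```

Requiring all four corners to agree, rather than just one, keeps two neighbouring spaces that share an edge from being merged.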

6. Parking Space Tracking and Parkable Confirmation

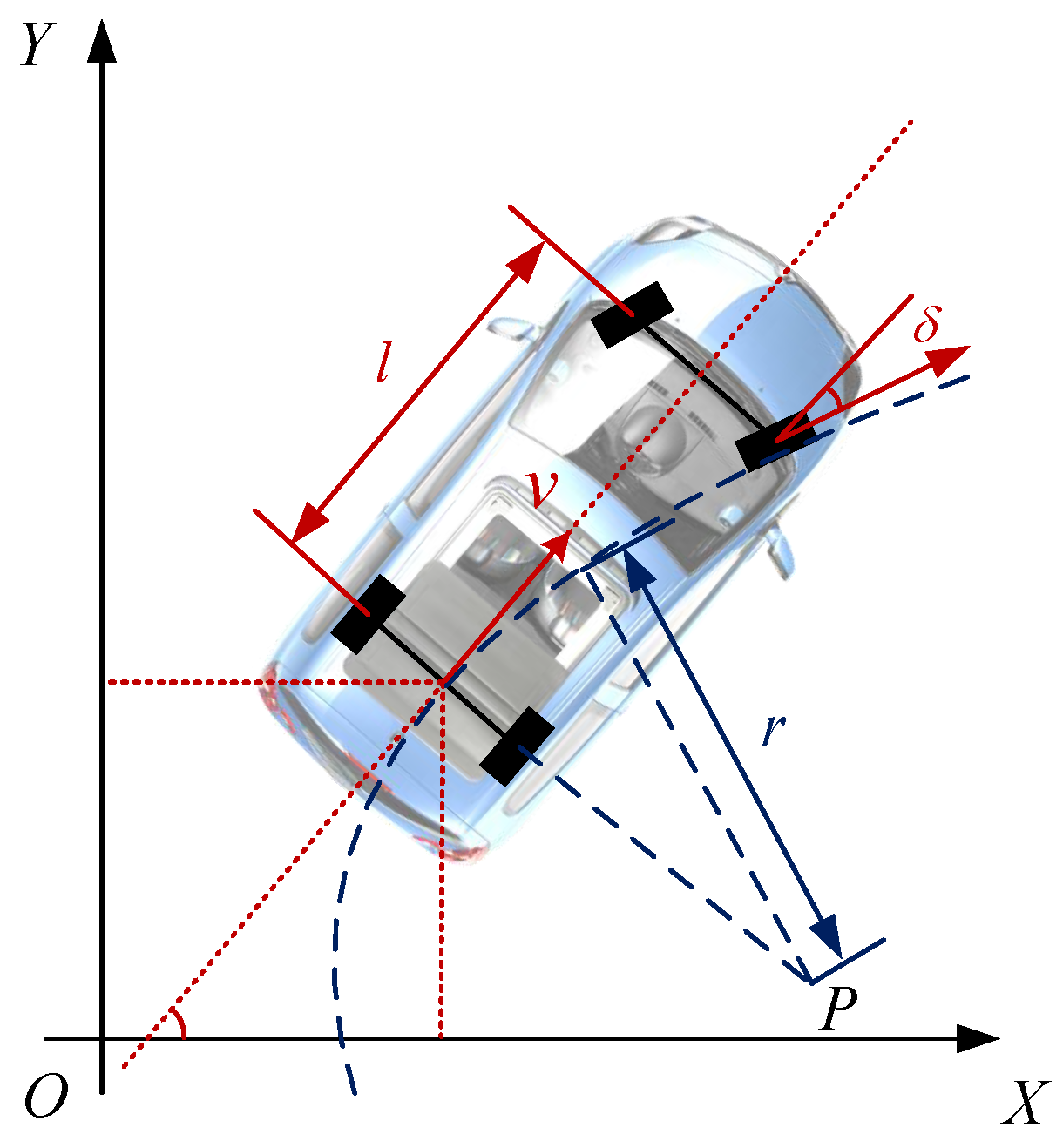

6.1. Vehicle Model
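The content of Section 6.1 is not reproduced here. Tracking a parking space across frames requires predicting how the vehicle moved between them; as an assumption, the sketch below uses the kinematic bicycle model referenced to the rear axle, a common choice for low-speed maneuvering, integrated with a forward Euler step. `Pose`, `bicycle_step`, and `world_to_vehicle` are hypothetical names, and the authors' actual vehicle model and inputs may differ.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float      # metres, world frame (rear-axle centre)
    y: float      # metres, world frame
    theta: float  # heading in radians, world frame


def bicycle_step(pose: Pose, v: float, steer: float, wheelbase: float, dt: float) -> Pose:
    """Advance a rear-axle kinematic bicycle model by dt seconds (forward Euler).

    v: speed in m/s, steer: front-wheel steering angle in radians.
    """
    theta_new = pose.theta + v / wheelbase * math.tan(steer) * dt
    x_new = pose.x + v * math.cos(pose.theta) * dt
    y_new = pose.y + v * math.sin(pose.theta) * dt
    return Pose(x_new, y_new, theta_new)


def world_to_vehicle(pose: Pose, px: float, py: float) -> tuple:
    """Express a world-frame point in the vehicle frame (x forward, y left)."""
    dx, dy = px - pose.x, py - pose.y
    c, s = math.cos(pose.theta), math.sin(pose.theta)
    return (c * dx + s * dy, -s * dx + c * dy)
```

`world_to_vehicle` is one way a tracker could predict where a stored parking-area corner should reappear in the current frame after the pose has been propagated.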

6.2. Parking Space Tracker

6.3. Parkable Area Detection

7. Experiment

8. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Wiesbaden, S.F. Around View Monitor. Auto Tech Rev. 2013, 2, 64.
2. Choi, D.Y.; Choi, J.H.; Choi, J.; Song, B.C. Sharpness Enhancement and Super-Resolution of Around-View Monitor Images. IEEE Trans. Intell. Transp. Syst. 2017, 1–13.
3. Yu, H.S.; Jeoung, E.B. The Lane Recognition Enhancement Algorithms of Around View Monitoring System Based on Automotive Black Boxes. J. KIIT 2017, 15, 45.
4. Wang, S.; Yue, J.; Dong, Y. Obstacle detection on around view monitoring system. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, Banff, AB, Canada, 5–8 October 2017; pp. 1564–1569.
5. Liu, Y.; Zhang, B. Photometric alignment for surround view camera system. In Proceedings of the IEEE International Conference on Image Processing, Paris, France, 27–30 October 2014; pp. 1827–1831.
6. Dabral, S.; Kamath, S.; Appia, V.; Mody, M.; Zhang, B.; Batur, U. Trends in camera based Automotive Driver Assistance Systems (ADAS). In Proceedings of the IEEE International Midwest Symposium on Circuits and Systems, College Station, TX, USA, 3–6 August 2014; pp. 1110–1115.
7. Zhang, B.; Appia, V.; Pekkucuksen, I.; Liu, Y.; Batur, A.U.; Shastry, P.; Liu, S.; Sivasankaran, S.; Chitnis, K. A Surround View Camera Solution for Embedded Systems. In Proceedings of the Computer Vision and Pattern Recognition Workshops, Columbus, OH, USA, 23–28 June 2014; pp. 676–681.
8. Harris, C. A combined corner and edge detector. In Proceedings of the Fourth Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; pp. 147–151.
9. Calonder, M.; Lepetit, V.; Strecha, C.; Fua, P. BRIEF: binary robust independent elementary features. In Proceedings of the 11th European Conference on Computer Vision (ECCV 2010), Crete, Greece, 10–11 September 2010; pp. 778–792.
10. Yu, M.; Ma, G. 360 surround view system with parking guidance. SAE Int. J. Commer. Veh. 2014, 7, 19–24.
11. Houben, S.; Komar, M.; Hohm, A.; Luke, S. On-vehicle video-based parking lot recognition with fisheye optics. In Proceedings of the International IEEE Conference on Intelligent Transportation Systems, The Hague, The Netherlands, 6–9 October 2013; pp. 7–12.
12. Hamada, K.; Hu, Z.; Fan, M.; Chen, H. Surround view based parking lot detection and tracking. In Proceedings of the Intelligent Vehicles Symposium, Seoul, Korea, 28 June–1 July 2015; pp. 1106–1111.
13. Suhr, J.K.; Jung, H.G. Automatic Parking Space Detection and Tracking for Underground and Indoor Environments. IEEE Trans. Ind. Electron. 2016, 63, 5687–5698.
14. Chen, J.Y.; Hsu, C.M. A visual method for the detection of available parking slots. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, Banff, AB, Canada, 5–8 October 2017; pp. 2980–2985.
15. Kim, S.H.; Kim, J.S.; Kim, W.Y. A method of detecting parking slot in Hough space and pose estimation using rear view image for autonomous parking system. In Proceedings of the IEEE International Conference on Network Infrastructure and Digital Content, Beijing, China, 23–25 September 2016.
16. Olson, E. AprilTag: A robust and flexible visual fiducial system. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 3400–3407.
17. Gioi, R.G.V.; Jakubowicz, J.; Morel, J.M.; Randall, G. LSD: A line segment detector. IPOL J. 2012, 2, 35–55.
18. Pyo, J.; Hyun, S.; Jeong, Y. Auto-image calibration for AVM system. In Proceedings of the 2015 International SoC Design Conference (ISOCC), Gyungju, Korea, 2–5 November 2015; pp. 307–308.
19. Lo, W.J.; Lin, D.T. Embedded system implementation for vehicle around view monitoring. In Proceedings of the International Conference on Advanced Concepts for Intelligent Vision Systems, Catania, Italy, 26–29 October 2015; Springer: Berlin, Germany, 2015; pp. 181–192.
20. Makarov, A.S.; Bolsunovskaya, M.V. The 360° Around View System for Large Vehicles, the Methods of Calibration and Removal of Barrel Distortion for Omnidirectional Cameras; AIST (Supplement): Tokyo, Japan, 2016; pp. 182–190.
21. Pekkucuksen, I.E.; Batur, A.U. Method, Apparatus and System for Performing Geometric Calibration for Surround View Camera Solution. U.S. Patent 9,892,493, 13 February 2018.
22. Neunert, M.; Bloesch, M.; Buchli, J. An Open Source, Fiducial Based, Visual-Inertial Motion Capture System. In Proceedings of the 19th International Conference on Information Fusion (FUSION), Heidelberg, Germany, 5–8 July 2016.
23. Sementille, A.C.; Rodello, I. A motion capture system using passive markers. In Proceedings of the International Conference on Virtual Reality Continuum and its Applications in Industry, Singapore, 16–18 June 2004; pp. 440–447.
24. Fiala, M. Vision Guided Control of Multiple Robots. In Proceedings of the Conference on Computer & Robot Vision, London, ON, Canada, 17–19 May 2004; pp. 241–246.
25. Fiala, M. ARTag, a fiducial marker system using digital techniques. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; Volume 2, pp. 590–596.
26. Chu, C.H.; Yang, D.N.; Chen, M.S. Image stablization for 2D barcode in handheld devices. In Proceedings of the International Conference on Multimedia 2007, Augsburg, Germany, 25–29 September 2007; pp. 697–706.
27. Illingworth, J.; Kittler, J. A survey of the Hough transform. Comput. Vis. Graph. Image Process. 1988, 43, 87–116.
28. Singh, K. Automobile Engineering; Standard Publishers: New Delhi, India, 1994.
29. Crassidis, J.L.; Junkins, J.L. Optimal Estimation of Dynamic Systems; CRC Press: Boca Raton, FL, USA, 2011.
30. Kaehler, A.; Bradski, G. Learning OpenCV 3: Computer Vision in C++ with the OpenCV Library; O'Reilly Media, Inc.: Sebastopol, CA, USA, 2016.

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Minimum width of vertical parking area | 2.2 m | Maximum width of vertical parking area | 3.5 m |
| Minimum length of vertical parking area | 5.1 m | Maximum length of vertical parking area | 6.5 m |
| Minimum width of parallel parking area | 2.1 m | Maximum width of parallel parking area | 2.7 m |
| Minimum length of parallel parking area | 5.3 m | Maximum length of parallel parking area | 7.0 m |
| Scale of LSD API in OpenCV [30] | 0.5 | Sigma_scale of LSD API in OpenCV | 0.375 |
| Shape angle of vertical parking area | | Number of line angle groups | 10 |
| Minimum width of parking edge | 4 px | Maximum width of parking edge | 13 px |
| Maximum line distance for combining two LSD results | 3 px | Angle tolerance of L-shaped extractor | |
| Minimum length of a valid LSD line after combination | 15 px | Maximum length of a valid LSD line after combination | 250 px |
| Color threshold in Section 5.1 | 5 | Color threshold in Section 5.1 | 150 |
| Maximum distance for treating two lines as intersecting | 10 px | Maximum point distance for treating two parking areas as the same | 0.7 m |

| Method | No. of Vacant Parking Areas | No. of Correct Detections | No. of False Detections | Recall | Precision |
|---|---|---|---|---|---|
| Ultrasonic sensor-based method | 227 | 90 | 19 | 0.3965 | 0.8257 |
| Pillar-based method in [13] | 227 | 182 | 4 | 0.8018 | 0.9785 |
| Proposed fusion method | 227 | 197 | 13 | 0.8678 | 0.9381 |

| Method | No. of Vacant Parking Areas | No. of Correct Detections | No. of False Detections | Recall | Precision |
|---|---|---|---|---|---|
| Ultrasonic sensor-based method | 144 | 62 | 7 | 0.4306 | 0.8986 |
| Pillar-based method in [13] | 114 | 88 | 3 | 0.7719 | 0.9670 |
| Proposed fusion method | 144 | 131 | 5 | 0.9097 | 0.9632 |

| Method | No. of Vacant Parking Areas | No. of Correct Detections | No. of False Detections | Recall | Precision |
|---|---|---|---|---|---|
| Ultrasonic sensor-based method | 98 | 41 | 1 | 0.4184 | 0.8238 |
| Pillar-based method in [13] | 98 | 43 | 1 | 0.4388 | 0.9773 |
| Proposed fusion method | 98 | 78 | 5 | 0.7959 | 0.9398 |
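The recall and precision values in the first results table match recall = correct detections / vacant parking areas and precision = correct detections / (correct detections + false detections). For example, the proposed fusion method's 197 correct and 13 false detections out of 227 vacant areas give 197/227 ≈ 0.8678 and 197/210 ≈ 0.9381. A small helper applying these two formulas (the function name is hypothetical) is:

```python
def recall_precision(vacant: int, correct: int, false_detections: int) -> tuple:
    """Recall = correct / vacant; precision = correct / (correct + false)."""
    if vacant <= 0 or correct + false_detections <= 0:
        raise ValueError("counts must give non-zero denominators")
    recall = correct / vacant
    precision = correct / (correct + false_detections)
    # Round to four decimals, as in the tables.
    return round(recall, 4), round(precision, 4)


# Proposed fusion method, first table: 227 vacant areas, 197 correct, 13 false.
print(recall_precision(227, 197, 13))  # -> (0.8678, 0.9381)
```

Recall penalizes missed vacant areas, while precision penalizes false detections, which is why the ultrasonic baseline can score reasonable precision despite low recall.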

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zong, W.; Chen, Q. A Robust Method for Detecting Parking Areas in Both Indoor and Outdoor Environments. Sensors 2018, 18, 1903. https://doi.org/10.3390/s18061903


Chicago/Turabian Style: Zong, Wenhao, and Qijun Chen. 2018. "A Robust Method for Detecting Parking Areas in Both Indoor and Outdoor Environments." Sensors 18, no. 6: 1903. https://doi.org/10.3390/s18061903
