1. Introduction

A smart home system monitors and controls various electronic devices to provide convenience to users in a private residential environment. For instance, a smart home system monitors and controls the climate in a residential setting, such as temperature, humidity, and illumination, in real time using various sensor devices [1,2]. In recent times, technology has proliferated into various aspects of daily life. The widespread use of smartphones, tablet PCs, and high-speed internet has led to active research on spoken dialog systems, which act as an interface between users and a smart home system. The integration of a spoken dialog system with smart homes can provide a more convenient experience to the user. In particular, if the spoken dialog system can recognize user behaviors based on input from motion sensors, it can initiate dialog actively. For example, to set the temperature and illumination in a house just before the user returns, the system uses sensors to recognize that the user is leaving home and initiates a conversation such as “What time will you come home?” If the user replies, “I will come home at about seven p.m.”, the system can respond, “Alright, I will turn the heater on at six thirty in the evening.” Thus, based on the user’s response, the system can adjust the conditions in the house to suit the user’s preferences.

In particular, a task-oriented dialog system recognizes a user intended task from a user’s speech in a smart home environment and provides core functions to accomplish the intended purpose. Such a dialog system is designed to achieve specific objectives and tasks such as climate control, schedule management, hotel reservation, and text-based communication [

3,

4,

5,

6,

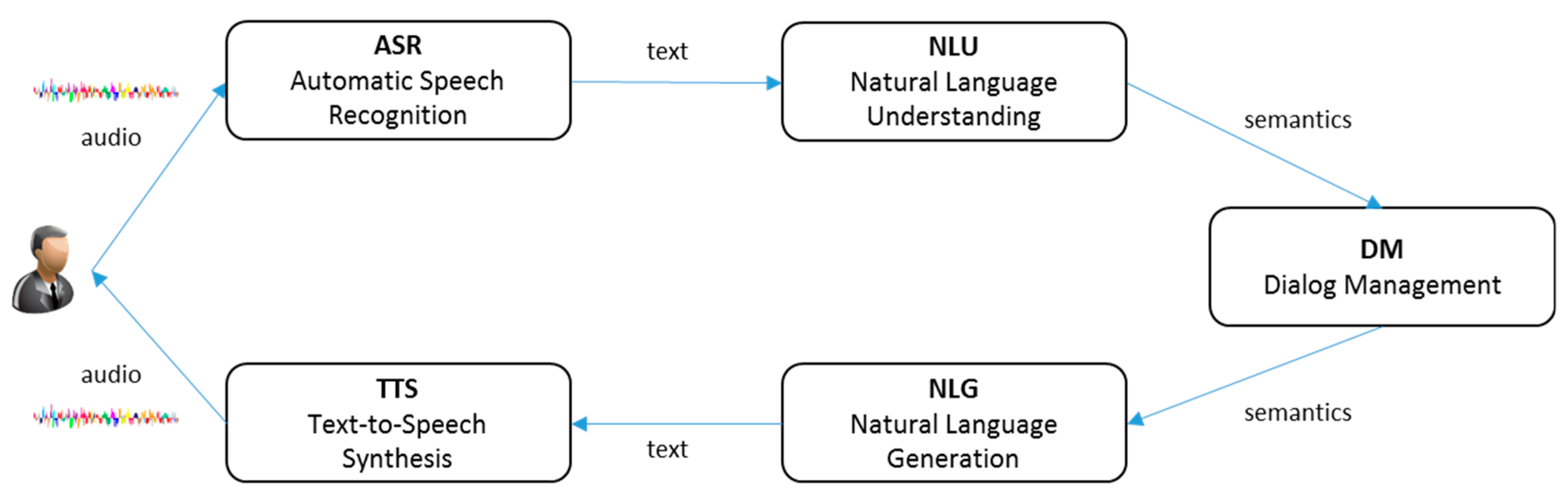

7]. A task-oriented dialog system comprises the following five components: automatic speech recognition (ASR), natural language understanding (NLU), dialog management (DM), natural language generation (NLG), and text-to-speech synthesis (TTS). Each component must be configured to achieve the objectives of the dialog system. Depending on the services to be provided, the dialog system may require integration with other functional modules, because certain applications, in addition to the basic modules, require other functional modules to successfully perform operations. Without expertise in dialog models and natural language processing, it is rather challenging to effectively design such tasks, as developers will be required to implement each module separately for accomplishing different operations.

Most previous studies on task-oriented dialog systems have discussed specific modules in the proposed systems [3]. Although these individual studies have contributed to improving the quality of such dialog systems, they cannot be directly applied to other dialog systems [8]. To address some of these issues, researchers are actively studying end-to-end dialog systems using deep neural networks [9,10,11]. Nevertheless, this approach cannot be directly used for developing a practical task-oriented dialog system because of scalability issues. Thus, there is a need for a dialog framework that integrates and manages each component of the system to develop a task-oriented dialog system. Unfortunately, relevant studies on this topic are scarce.

Considering this, we propose a dialog framework that helps developers who are not experts in dialog systems or natural language processing implement a task-oriented dialog system. The proposed dialog framework provides the following differentiated functions:

A rule-based approach to building the basic components of the dialog system (NLU, DM, and NLG), with the rules expressed in the dialog knowledge. The dialog knowledge can be easily understood because it is structured in an ontological style, and basic conversational agents can be implemented by editing it.

A module router that can link the system with external modules to add various functions without accessing the source code of the dialog system. The module router calls external modules based on conditions set by the developers.

A hierarchical argument structure (HAS) to manage various argument representations of natural language sentences.

The remainder of this paper is structured as follows: Section 2 reviews the research related to task-oriented dialog systems. The structure and features of our proposed dialog framework are described in Section 3, while Section 4 introduces the dialog models and dialog knowledge that are the core components of our research. Section 5 explains the NLU and NLG methods used in the proposed dialog system. Section 6 describes the experimental procedures and results, which evaluate the usability of the proposed dialog framework. Lastly, Section 7 presents the conclusions and directions for future research.

2. Related Work

This section discusses previous work on the components of a dialog system as well as on frameworks for building an effective dialog system.

Figure 1 shows the typical structure of a task-oriented dialog system. Because covering every component of this structure is cumbersome, most studies have addressed individual components. Previous research primarily focused on improving system performance by applying machine learning (ML) models such as the Bayes classifier [12,13], support vector machine (SVM) [14], maximum entropy (ME) [15], and conditional random fields (CRF) [16]; some studies have also used feature engineering. More recently, the application of deep neural networks (DNNs) to improve the performance of such task-oriented dialog systems has gained momentum [17].

Studies related to domain/intent detection primarily involved the application of machine learning-based classification models such as SVM and ME [18,19]. More recently, the application of DNN models has also gained interest. In [20], the authors describe the use of a large amount of unlabeled data in the initialization of a deep belief network; the results showed improved accuracy compared with existing classification models. Since then, the application of recurrent neural network (RNN) models and convolutional neural network (CNN) models to domain/intent detection has been regarded as the state of the art [21,22]. Further, research related to slot-value detection primarily involved sequence labeling that attaches begin, inside, and outside (BIO) tags to the corresponding word positions. These studies assumed that values such as date, time, and location, which are required to perform domain/intent detection, are present verbatim in a given sentence. In [23], the authors proposed a slot-value detection method using conditional random fields, which showed excellent performance in sequence labeling; in addition, they introduced a feature engineering method to improve slot-value detection accuracy. Subsequently, slot-value detection using long short-term memory (LSTM) was proposed [24]. In recent studies, sequence-to-sequence models have been widely applied for machine translation [25,26]. In addition, studies have combined domain/intent detection and slot-value detection to reduce the propagation of errors [27] and have used contexts that reflect dialog history information to address ambiguity effectively [28].

The role of DM involves dialog state tracking (DST), which updates the dialog state while a conversation is in progress, and a dialog policy (DP), which creates a dialog act to respond to the user in the corresponding dialog state. Rule-based DM manually defines the possible states of a dialog system and the behavior of the system in each state. Finite state (FS)-based DM [29,30], a typical rule-based DM, is the simplest and most widely used method to date. An FS-based DM component transitions the state of a finite state automaton (FSA) based on the output of the NLU and generates a dialog act from the resulting state; this requires manually designing an FSA for the conversation scenario. Meanwhile, information state (IS)-based DM [31] provides more generalized and flexible functions than FS-based DM. An IS contains various dialog context sets (such as task, slot-value, dialog history, and ASR confidence score) and update rules. IS-based DM selects the update rule that matches the context set and updates the IS during the conversation. Rule-based DM is highly intuitive; its advantages include easy addition of new domains and easy maintenance. However, rule-based DM is vulnerable to errors in ASR and NLU because it depends entirely on the NLU result to recognize the intent of the user. It is therefore important to complete the conversation analysis robustly even for semantically ambiguous input. To address this issue, active research is being conducted on tracking the state in such an FSA while maintaining the dialog state as a probability distribution. In particular, Young [32] showed that an FS-based DM component could be made robust against errors in ASR and NLU by applying a partially observable Markov decision process (POMDP) to the DM. The dialog state tracking challenge (DSTC) [33] is held annually; researchers compete on the performance of their proposed systems on newly selected DST problems. In [34], Henderson improved DST accuracy by building a multi-layer perceptron and applying rich feature representations. Another study [35] used both NLU and ASR results as features to supplement the information lost in the NLU process. Moreover, methods using reinforcement learning (RL) have been primarily proposed for DP optimization [36]. In such methods, a reward must be assigned to the output of the model. For this, either a user simulator that plays the role of a user is developed, or rewards are obtained from actual users [37]. In [38], a POMDP-based dialog manager was proposed that simultaneously applied rule-based and probabilistic dialog management.

The most widely used method in natural language generation (NLG) is template-based NLG, which defines the sentences that can be output for all the possible output dialog acts of the DM. Appropriate rules that allow certain changes based on the values of dialog acts are applied to the sentences [39]. The main advantages of template-based NLG are its low number of errors and the ease with which developers can control the system. However, building such systems is cumbersome when there are many dialog act types, and undefined dialog acts cannot be processed. To address these problems, machine learning-based NLG has recently been actively researched. In [40], Mairesse proposed a method to perform NLG using a Bayesian network. This study defined a new tag, called a semantic stack, by dividing a natural language sentence into phrase units, and created a sentence using the Bayesian network. In addition, it was shown that, with active learning, the method could achieve higher performance even when only a small amount of data is annotated. However, recent research has primarily focused on using a corpus consisting of only dialog acts and sentences because tagging the semantic stack is a relatively expensive annotation task. In [41], Wen proposed a method to generate a sentence using the one-hot encoded representation of the dialog act as the initial hidden state of the RNN, in a manner similar to image captioning [42]. A subsequent study [43] showed improved performance by proposing a semantically conditioned LSTM, which prevents both the repetition of words with the same meaning and the omission of specific dialog act values from the generated sentence. Then, in [44], Press showed that the generative adversarial network (GAN), which had been used quite effectively in image generation, could also be used in NLG.

Studies on dialog frameworks that support the development of task-oriented dialog systems have not been widely conducted because such studies are considerably difficult. Nevertheless, studies on effective dialog frameworks have been steadily underway. Of these, VoiceXML [45], RavenClaw [46], and DialogStudio [47,48] are frameworks that have been used to develop actual commercial dialog systems. VoiceXML is a markup language, specified by the World Wide Web Consortium (W3C), that can define and express the dialog flow; thus, dialog management can be implemented based on VoiceXML. In [49], a dialog system was implemented based on VoiceXML and Galaxy HUB. However, VoiceXML is very inflexible, and implementing a relatively complex dialog system with it is challenging. RavenClaw primarily involves plan-based dialog management and represents its knowledge as a dialog task tree, which indicates the purpose of a dialog; a dialog engine then plans the tasks to be executed based on the content of the dialog task tree when interacting with the user. In addition, various implementation cases have shown that this method can be used in commercial services. However, to build a dialog system based on RavenClaw, it is necessary to manage a relatively complex knowledge grammar and build many rules. DialogStudio focuses on providing a corpus-based dialog system using an example-based dialog management method; in other words, given a sufficiently large dialog corpus, a dialog system can be implemented without building complex knowledge. However, the example-based dialog management method has the drawback that it is difficult to control the dialog system accurately and to guarantee performance when the corpus is not sufficiently large. Studies on frameworks that help implement dialog systems have continued until recently. Owlspeak [50] is an ontology-based dialog framework that uses Web Ontology Language (OWL) to generate VoiceXML documents dynamically. Owlspeak follows a model-view-presenter (MVP) design pattern: its model represents dialog knowledge, and its presenter updates the dialog state and generates the next system dialog act. The system dialog act is then rendered in the view as a VoiceXML document. The framework proposed in [51] focused on helping quickly implement machine learning-based dialog management and natural language understanding, and provided a special function called a story graph that visualized the flow of dialogue scenarios in advance. In [52], the authors proposed a framework for expressing dialogue behaviors as probabilistic rules. These probabilistic rules consist of conditional statements and actions with probabilities; they can be written manually or generated automatically by supervised learning or reinforcement learning. In [53], a very high-quality framework is proposed that provides all the necessary tools, such as natural language understanding, dialog management, language generation, evaluation, and user simulation.

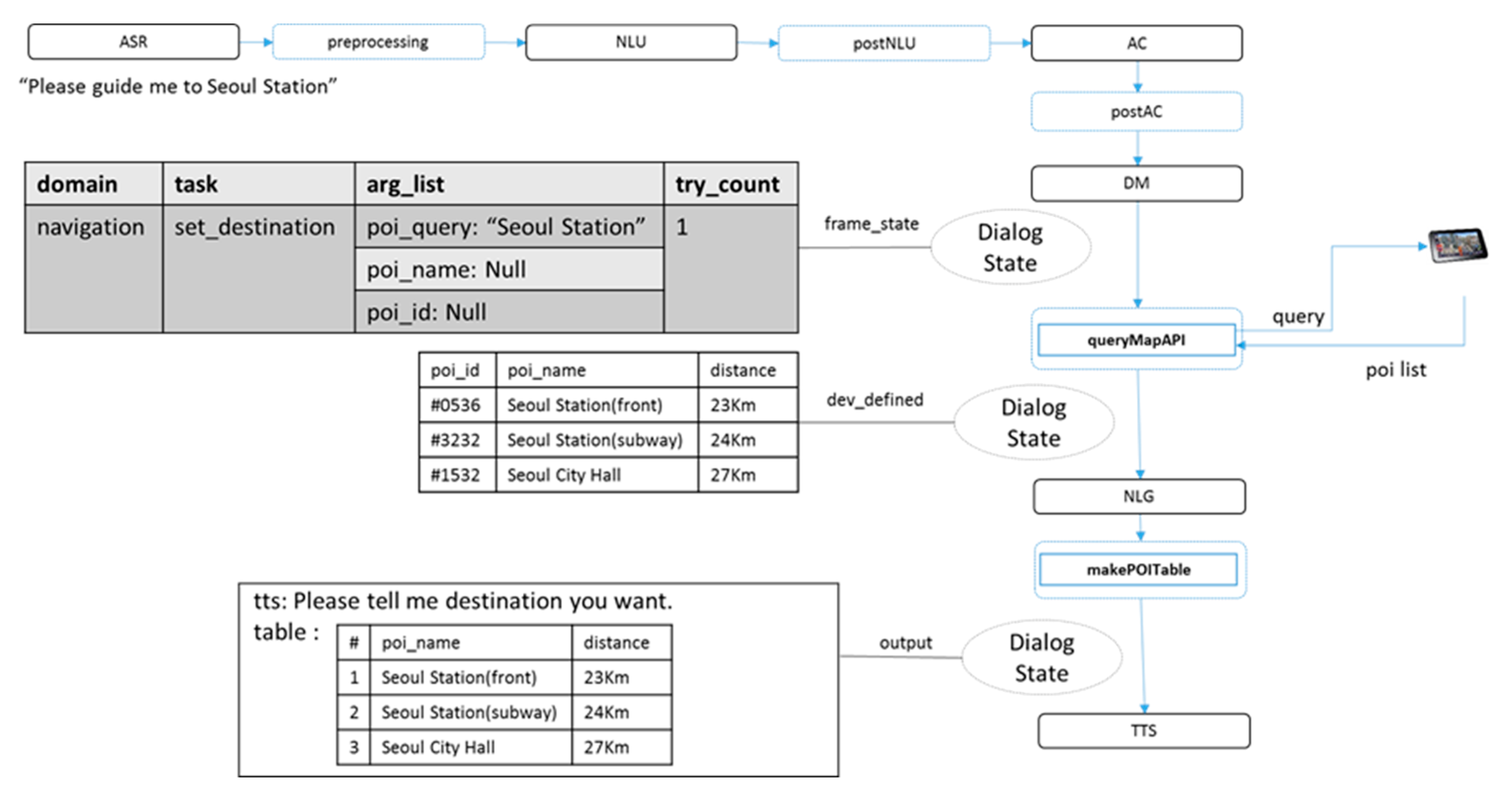

4. Dialog Manager

A dialog manager consists of a dialog state tracker, which updates the contents of the frame state based on the result of the NLU analysis, an argument generator, and a dialog policy. The dialog policy generates dialog actions with which the system can respond to the user based on the current frame state. The dialog manager in the proposed framework uses FS-based DM [29], one of the rule-based DMs. With FS-based DM, developers can create models intuitively and can easily extend or modify them for maintenance. Although some rule-based DMs offer more advanced features, FS-based DM combined with the module router can implement functionality similar to that of other rule-based DMs. Thus, the simplest FS-based DM is used to prioritize development convenience and robustness. Reinforcement learning and end-to-end models require a large quantity of data to create models, and it is also difficult to verify the usability of the resulting dialog system. For these reasons, FS-based DM is actively applied in current dialog systems. With the FS-based DM component, the proposed framework makes the dialog system easy to manage.
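The interplay between the dialog state tracker and the dialog policy can be illustrated with a minimal sketch. It is not the framework's implementation (which is written in Java); the names `FrameState`, `REQUIRED_ARGUMENTS`, `track_state`, and `dialog_policy`, the task `set_alarm`, and the dictionary layout of the NLU result are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical task definition: the arguments each task needs before it can run.
REQUIRED_ARGUMENTS = {"set_alarm": ["time", "date"]}


@dataclass
class FrameState:
    """Frame state kept by the dialog manager across turns."""
    task: Optional[str] = None
    arguments: Dict[str, str] = field(default_factory=dict)


def track_state(state: FrameState, nlu_result: dict) -> FrameState:
    """Dialog state tracker: fold one NLU result into the frame state."""
    if nlu_result.get("task"):
        state.task = nlu_result["task"]
    state.arguments.update(nlu_result.get("arguments", {}))
    return state


def dialog_policy(state: FrameState) -> dict:
    """Dialog policy: choose the next dialog action from the frame state."""
    if state.task is None:
        return {"act": "ask_task"}
    for name in REQUIRED_ARGUMENTS[state.task]:
        if name not in state.arguments:
            # A required argument is missing: request it from the user.
            return {"act": "request", "argument": name}
    # Every required argument is filled: the task can be executed.
    return {"act": "execute", "task": state.task,
            "arguments": dict(state.arguments)}
```

Each user turn calls `track_state` and then `dialog_policy`, so the behavior in every state can be read directly from the rules, which is the property that makes FS-based DM easy to maintain.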

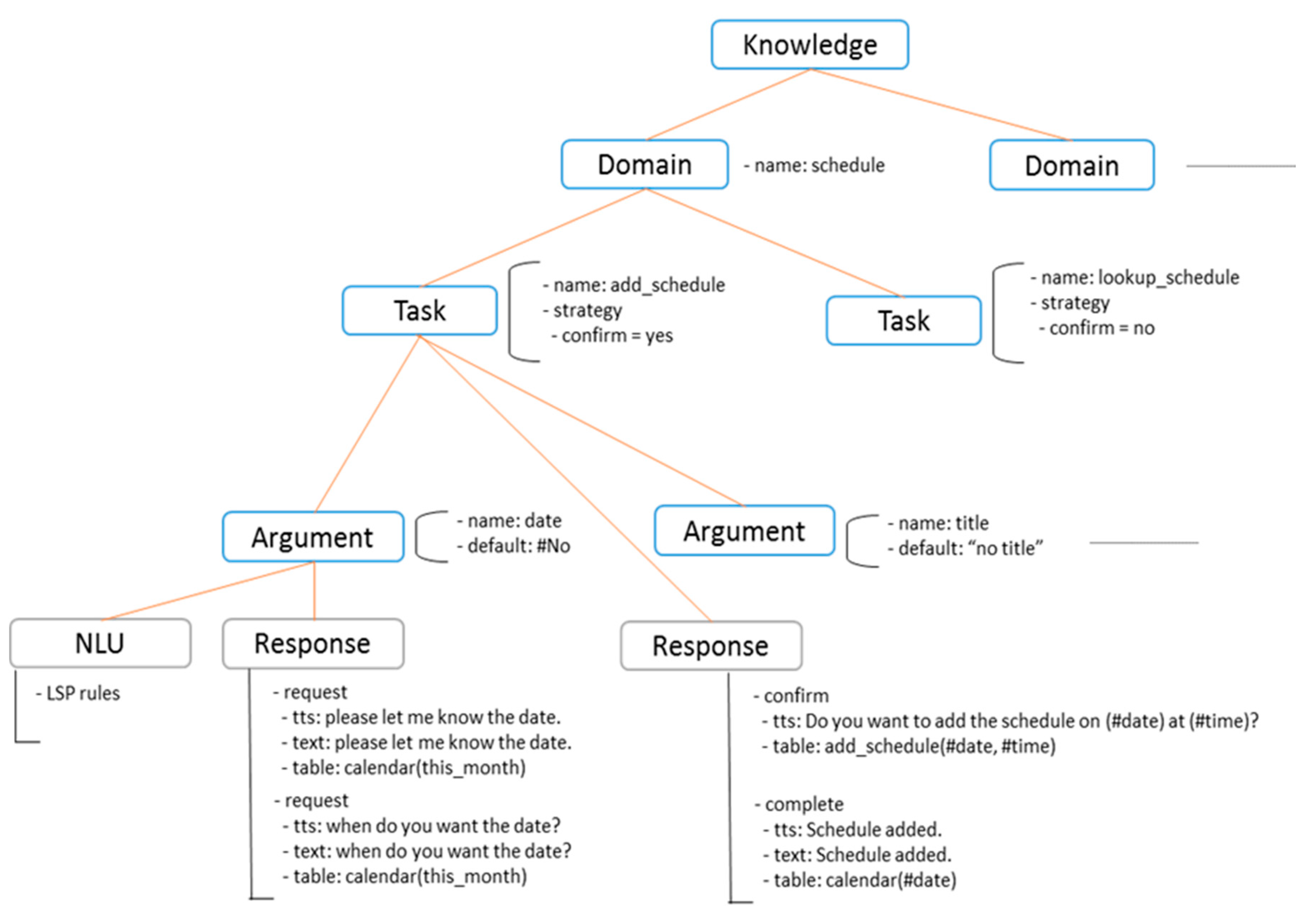

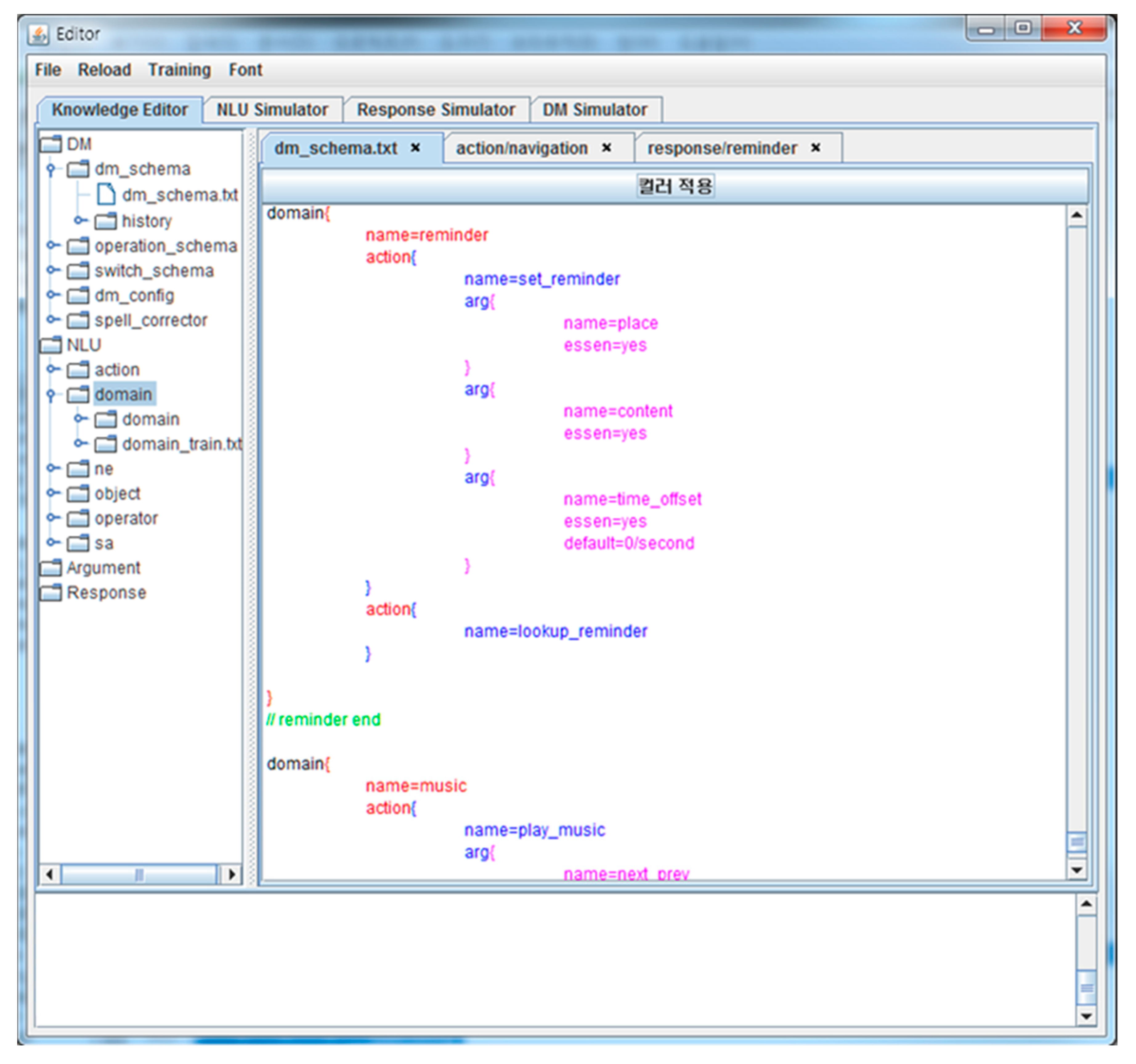

4.1. Dialog Knowledge

In a task-oriented dialog system, dialog knowledge expresses information about the objective and strategy of the dialog system. Using this dialog knowledge expression, developers can construct the operational structure of the dialog system. Therefore, dialog knowledge should be easy for developers to understand and manage and should be as autonomous as possible. In general, dialog knowledge expresses information required to construct the DM component; however, in our proposed dialog framework, the rules used in NLU and NLG are included in the dialog knowledge. The proposed knowledge expression follows an ontology-based structure and can be managed independently according to the domain. An example of dialog knowledge is shown in Figure 5. The dialog knowledge consists of multiple domains, each of which consists of multiple tasks. Each task has multiple arguments, which are the information needed to perform the task.
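The domain, task, and argument hierarchy described above can be sketched as a small data structure. This is an illustrative assumption and not the framework's knowledge format: the class names, the task name `add_schedule`, and the template strings are hypothetical, and only the domain names are taken from the experiments in Section 6.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Task:
    name: str
    arguments: List[str]  # information needed to perform the task
    nlu_rules: List[str] = field(default_factory=list)           # rules used by NLU
    nlg_templates: Dict[str, str] = field(default_factory=dict)  # dialog act -> sentence


@dataclass
class Domain:
    name: str
    tasks: Dict[str, Task] = field(default_factory=dict)


# Each domain is kept as an independent entry, so it can be managed separately.
knowledge: Dict[str, Domain] = {
    "schedule": Domain("schedule", {
        "add_schedule": Task(
            name="add_schedule",
            arguments=["date", "time"],
            nlg_templates={"request(date)": "On which date?"},
        ),
    }),
    "weather": Domain("weather", {
        "ask_weather": Task(name="ask_weather", arguments=["location", "date"]),
    }),
}


def arguments_of(kb: Dict[str, Domain], domain: str, task: str) -> List[str]:
    """Look up the arguments that a task in a given domain requires."""
    return kb[domain].tasks[task].arguments
```

Keeping NLU rules and NLG templates next to the task they belong to mirrors the idea that a basic conversational agent can be built by editing the dialog knowledge alone.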

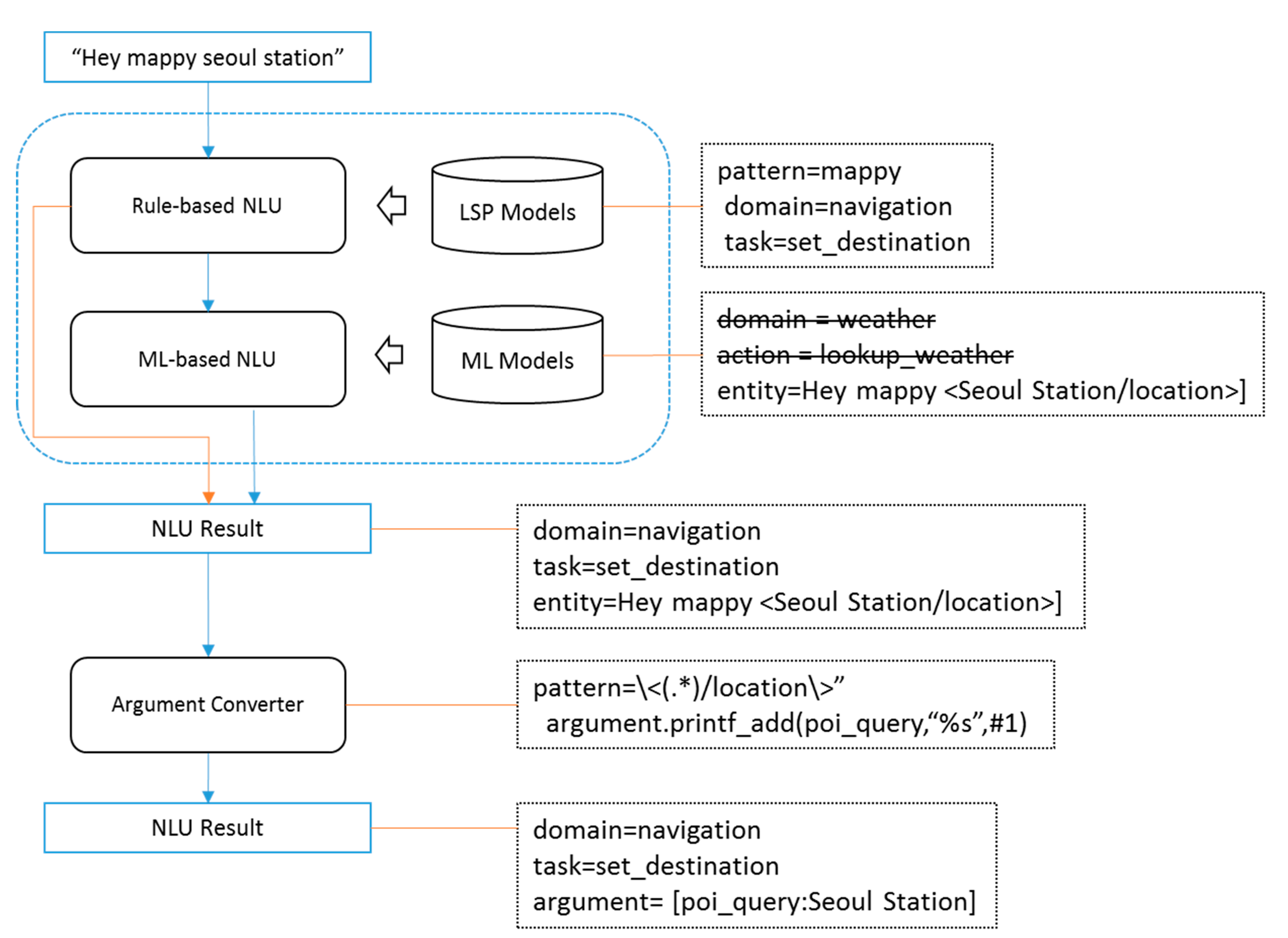

4.2. Argument Generator

An argument, which is the target of a task, is a piece of information needed to perform the task. The arguments of each task are obtained by the slot-value detection operation of the NLU component; in this case, the generated value is a word string present in a natural language sentence. However, this value cannot be used as-is in external applications. For example, if the result of the named-entity recognition (NER) analysis of the input sentence is “Wake me up at (four am)/time (tomorrow)/date”, the information required to perform an actual service should be a normalized value such as “time: 0400, date: 1108”. Therefore, a function is required to convert the NER result into a normalized argument value. The proposed framework provides an argument generation (AG) module that plays this role. To perform this function, AG uses a lexico-semantic pattern (LSP) [54], similar to the rule-based approach of NLU.
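The normalization step can be sketched as follows. Plain regular expressions and lookup tables stand in for the LSP rules here, which is a simplification and not the LSP formalism of [54]; the function names, the word tables, and the reference date passed as `today` are assumptions made for illustration.

```python
import re
from datetime import date, timedelta

# Hypothetical lookup tables standing in for LSP rules.
NUMBER_WORDS = {"one": 1, "two": 2, "three": 3, "four": 4, "five": 5, "six": 6,
                "seven": 7, "eight": 8, "nine": 9, "ten": 10, "eleven": 11,
                "twelve": 12}
RELATIVE_DAYS = {"today": 0, "tomorrow": 1}


def normalize_time(text):
    """Convert an expression such as 'four am' into 'HHMM'; None if unmatched."""
    match = re.fullmatch(r"(\w+)\s*(am|pm)", text.strip().lower())
    if not match or match.group(1) not in NUMBER_WORDS:
        return None
    hour = NUMBER_WORDS[match.group(1)] % 12  # 'twelve am' becomes hour 0
    if match.group(2) == "pm":
        hour += 12
    return f"{hour:02d}00"


def normalize_date(text, today):
    """Convert a relative expression such as 'tomorrow' into 'MMDD'."""
    offset = RELATIVE_DAYS.get(text.strip().lower())
    if offset is None:
        return None
    return (today + timedelta(days=offset)).strftime("%m%d")


def generate_arguments(ner_result, today):
    """Map NER output {label: surface string} to normalized argument values."""
    normalizers = {
        "time": normalize_time,
        "date": lambda text: normalize_date(text, today),
    }
    return {label: normalizers[label](text)
            for label, text in ner_result.items() if label in normalizers}
```

With a reference date of 7 November (the year is arbitrary), `generate_arguments({"time": "four am", "date": "tomorrow"}, date(2018, 11, 7))` yields the normalized values from the example above, `{"time": "0400", "date": "1108"}`.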

4.3. Hierarchical Argument Structure

In the natural language sentences that an actual dialog system receives, argument information is represented in various forms. In some cases, a sentence does not include all the necessary information but expresses only part of it. For example, in a sentence such as “please schedule for next week”, “next week” is date information, but it is only partial. Because the argument value cannot be generated from it in a general argument structure, the same response requesting the date value is repeated, which may degrade the quality of the conversation. A more accurate response in this situation is to request the remaining partial information, such as “Which day of next week do you want?” Therefore, in this study, we propose a HAS for the flexible handling of such argument expressions.

Figure 6 shows an example of the HAS for dates. Like the dialog knowledge, HAS also has an ontological structure. HAS uses two relations: an alternative relation and a partial relation. First, the alternative relation indicates that the parent argument can be represented by several alternative argument expressions. A date, for example, can be expressed as an absolute date, such as “December 28”, or as a relative date, such as “today” or “tomorrow”. Expressions such as “Monday of next week” are also possible. Thus, the date is connected to date.absDate (absolute date), date.relDate (relative date), and date.weekDate (day of the week) through the alternative relations. Second, the partial relation indicates that the parent argument can be represented by a combination of several partial arguments. For example, to express the date.weekDate “Monday of next week”, “next week” and “Monday” can be independently expressed, and date.weekDate can be completed when both partial expressions are present. Thus, it can be seen that the date.weekDate.weekUnit and date.weekDate.dayofWeek arguments are connected to each other through the partial relation to date.weekDate.

Using the proposed HAS, developers can design the task arguments more conveniently and provide more intelligent responses in such situations, that is, even if the user uses partial expressions. For example, when a user makes a request such as “please schedule for next week”, date.weekDate.weekUnit will have a value of “+1” after NLU and AG are performed. In this case, it can be seen that the request argument should be date.weekDate.dayofWeek based on the search result for the date argument. Therefore, the DM component will set the request argument of the response dialog action to date.weekDate.dayofWeek instead of date. A more intelligent conversation is possible by defining “Which day of (#date.weekDate.weekUnit) week do you want?” in the template of this dialog action.
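How the request argument can be narrowed down with the two relations is sketched below. The dictionary encoding of the HAS and the function `next_request` are assumptions made for illustration, not the framework's representation; the `target_light` entry mirrors the smart home example discussed in this section.

```python
# Hypothetical encoding of a HAS: each parent lists its relation and children.
HAS = {
    "date": {
        "relation": "alternative",
        "children": ["date.absDate", "date.relDate", "date.weekDate"],
    },
    "date.weekDate": {
        "relation": "partial",
        "children": ["date.weekDate.weekUnit", "date.weekDate.dayofWeek"],
    },
    "target_light": {
        "relation": "partial",
        "children": ["target_light.room_type", "target_light.light_type"],
    },
}


def next_request(argument, values):
    """Return the argument the system should request next, or None if resolved.

    `values` maps argument names to the values obtained so far by NLU and AG.
    """
    if argument in values:
        return None
    node = HAS.get(argument)
    if node is None:
        return argument  # a leaf argument without a value
    children = node["children"]
    results = [next_request(child, values) for child in children]
    if node["relation"] == "alternative" and None in results:
        return None  # one complete alternative is enough
    if node["relation"] == "partial" and all(r is None for r in results):
        return None  # every partial argument is present
    # A child whose answer differs from its own name has been partly filled.
    started = [r for child, r in zip(children, results) if r != child]
    if not started:
        return argument  # nothing is known yet: request the argument as a whole
    if node["relation"] == "partial":
        return next(r for r in results if r is not None)
    return started[0]
```

For the request “please schedule for next week”, `next_request("date", {"date.weekDate.weekUnit": "+1"})` returns `date.weekDate.dayofWeek`, whereas an empty value set yields a plain request for `date`.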

The HAS was used to obtain both date.weekDate.weekUnit and date.weekDate.dayofWeek values from the user, but the final value applied as the date argument must be an absolute date value, such as “20180226”. For this task, the HAS executes the merge module for the partial relation and the conversion module for the alternative relation. First, the merge module generates a parent argument by combining the values of all arguments connected through the partial relations of a specific argument. In the case of date.weekDate, for example, mergeWeekDate will be executed, and date.weekDate will have the value “Monday, +1” from the partial relation values. When any of the arguments connected through the alternative relation of a specific argument has a value, the conversion module converts that value to the parent argument. For example, if date.weekDate has a value of “Monday, +1”, then convertWeek2Date is executed, and the converted date value is “20180226”. Consequently, the date argument now has an absolute date expression. Because the modules used in this process can be defined and connected to the ontological structure by developers, they can also be used for arguments other than date.
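The merge and conversion steps for date.weekDate can be sketched as below. The Python function names are illustrative counterparts of mergeWeekDate and convertWeek2Date, not the framework's code; the sketch assumes that weeks start on Monday and that the caller supplies the current date.

```python
from datetime import date, timedelta

DAY_INDEX = {"Monday": 0, "Tuesday": 1, "Wednesday": 2, "Thursday": 3,
             "Friday": 4, "Saturday": 5, "Sunday": 6}


def merge_week_date(day_of_week, week_unit):
    """Merge module: combine the partial arguments into a date.weekDate value."""
    return f"{day_of_week}, {week_unit}"


def convert_week_to_date(week_date, today):
    """Conversion module: turn a value such as 'Monday, +1' into 'YYYYMMDD'."""
    day_name, week_offset = [part.strip() for part in week_date.split(",")]
    # Assumption: a week starts on Monday, matching date.weekday() == 0.
    monday_this_week = today - timedelta(days=today.weekday())
    target = monday_this_week + timedelta(weeks=int(week_offset),
                                          days=DAY_INDEX[day_name])
    return target.strftime("%Y%m%d")
```

Taking 20 February 2018 as a hypothetical current date, `convert_week_to_date(merge_week_date("Monday", "+1"), date(2018, 2, 20))` returns `"20180226"`, the absolute date used in the example above.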

HAS can be applied effectively to various arguments, for instance, in a smart home environment. For example, let us consider target_light in the turn_light task that controls the lights in the house. If there are several types of lights in the house, room_type and light_type can be configured as partially related arguments of target_light. If the user then requests “Turn on the mood light”, target_light.light_type is set to “mood light”, and the system replies with a question such as “In which room do you want me to turn on the mood light?”, requesting target_light.room_type. Thus, using the proposed HAS, we can build a more intelligent dialog system.

6. Experiments

This section describes the experimental procedures and results for verifying the usability of the proposed dialog framework. The experiments involved developing a multi-domain task-oriented dialog system for a Korean smartphone environment. First, three developers who had never developed a dialog system were trained in the structure and use of the dialog framework for a total of 30 hours over the course of a month. The training included building a dialog system for simple scenarios. After training, six conversation domains in a smartphone environment were selected: call, message, weather, navigation, TV_guide, and schedule. One to six tasks were defined for each domain. For ASR, we employed the Google Cloud Speech API (https://cloud.google.com/speech). A hybrid approach that combines the rule-based and machine learning approaches was applied to the NLU modules of the dialog system. For NLG, a template was created for each situation. The dialog system was developed over approximately six months. This period includes training on the framework, domain selection and dialog scenario design, data collection, external application implementation, external API integration, and performance testing. Six months may not seem short enough to demonstrate the usefulness of the framework. However, considering that the developers were not experts in dialog systems, and given the quality of the dialog system they developed, the six-month development period does not seem excessively long.

In [55], readers can find a video that shows the system functioning with actual smartphone applications. The number of rules and templates and the number of dialogs used in the machine learning approach are listed in Table 1.

The proposed framework was implemented in a Java 8 development environment. The framework provides a text-based editor for editing the dialog knowledge, i.e., domains, tasks, arguments, NLU rules, NLG templates, etc. The editor screen is shown in Figure 8.

6.1. Evaluation

Quantitative and qualitative evaluations of the developed dialog system were performed. For the quantitative evaluation, we used the accuracy of the NLU modules. For the qualitative evaluation, we used the conversation success rate and user satisfaction as metrics.

6.1.1. Accuracy of NLU Modules

For the quantitative evaluation, the accuracy of the NLU modules was measured. The models used in NLU were compared based on the approach used: the rule-based approach constructed by the developers, the machine learning approach [18] created using the training corpus, and the hybrid approach combining the two methods. The machine learning approach can use alternative classification models, which would change the NLU accuracy. Eighty percent of the corpus was used for machine learning and for creating LSP rules to build each model; the remaining 20% was used for performance evaluation. The evaluation metric for domain, intent, and speech act detection was accuracy, that is, the percentage of sentences for which the prediction was correct. The evaluation metric for argument detection was the F1 score, which is the harmonic mean of precision and recall. Precision, recall, and F1 score are defined in Equations (1)–(3), respectively.
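In their standard form, which Equations (1)–(3) are assumed to follow, these metrics are written as below. The symbols $TP$, $FP$, and $FN$ are notation introduced here: they denote the numbers of correctly detected arguments, spuriously detected arguments, and missed arguments, respectively.

$$
\begin{aligned}
\text{Precision} &= \frac{TP}{TP + FP} && (1)\\
\text{Recall} &= \frac{TP}{TP + FN} && (2)\\
F_1 &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} && (3)
\end{aligned}
$$

Because $F_1$ is a harmonic mean, a low recall pulls the score down even when precision is high.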

The results are shown in Table 2. The overall accuracy of the rule-based approach is lower because its analysis relies only on the LSP rules built by the developers. The low accuracy may be attributed to the fact that the rule-based approach does not provide analysis results for patterns that are not predefined. Therefore, the overall recall is low, which lowers the F1 score. However, because of the nature of the rule-based approach, it can provide higher performance and several advantages, especially in terms of maintenance, if the LSP rules are accurately constructed. The overall accuracy was the highest with the machine learning approach. The hybrid approach provided performance similar to, though slightly lower than, that of the machine learning approach. Even when a pattern is not predefined, the ML model can handle it, which compensates for the low recall of the rules. In addition, the precision is similar to that of the machine learning-based approach, resulting in similar overall performance. If the rules are constructed in a more sophisticated manner, the hybrid approach can outperform the machine learning-based approach. These findings indicate that the hybrid approach effectively combines the advantages of the two models.
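One way such a hybrid can compensate for the low recall of the rules is a rule-first scheme with a machine learning fallback, sketched below. This combination scheme is an assumption made for illustration, since the actual hybrid method is the subject of Section 5; the patterns, intent labels, and the `ml_classifier` callable are hypothetical.

```python
import re

# Hypothetical hand-written rules: (pattern, intent) pairs.
RULES = [
    (re.compile(r"\bweather\b"), "weather"),
    (re.compile(r"\bcall\b"), "call"),
]


def rule_based_intent(sentence):
    """Return the intent of the first matching rule, or None if no rule fires."""
    lowered = sentence.lower()
    for pattern, intent in RULES:
        if pattern.search(lowered):
            return intent
    return None


def hybrid_intent(sentence, ml_classifier):
    """Prefer the rule result; fall back to the ML model for unseen patterns."""
    intent = rule_based_intent(sentence)
    if intent is not None:
        return intent, "rule"
    return ml_classifier(sentence), "ml"
```

Here `ml_classifier` is any callable that maps a sentence to an intent label, so the trained model can be exchanged without touching the rules.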

6.1.2. Conversation Success Rate and Satisfaction

A qualitative evaluation was performed based on the conversation success rate and conversation satisfaction. In addition, to measure the effectiveness of the proposed HAS, models with and without HAS were compared. For the test, HAS arguments were designed for location (for the weather and navigation domains) and for date and time (for the schedule domain). The conversation success rate refers to the rate at which the objective of a conversation is achieved when the conversation is started with a specific purpose. In this study, a total of 100 conversations were attempted; 10 purposes were assigned to each of 10 different users. The maximum number of conversation turns for each conversation is set to the number of task arguments; a conversation that exceeds this maximum is regarded as a failure. The evaluation metric, success rate, is defined in Equation (4).
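Following the description above, the success rate can be written as below. The exact form of Equation (4) is assumed here, with $N_{\text{success}}$ denoting the number of conversations that achieved their purpose within the turn limit and $N_{\text{total}}$ the number of attempted conversations.

$$
\text{Success rate} = \frac{N_{\text{success}}}{N_{\text{total}}} \times 100\,\% \qquad (4)
$$

With $N_{\text{total}} = 100$ in this experiment, the success rate in percent equals the number of successful conversations.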

The results listed in Table 3 demonstrate a conversation success rate of approximately 90% without HAS and 91% with HAS. However, it is difficult to measure performance objectively, because the conversation success rate depends strongly on the performance of ASR and NLU and tends to increase as the user gains more experience.

Conversation satisfaction was measured based on scores given by users after the functions of the developed dialog system were described to them and they were allowed to use the system freely. Ten users participated in the evaluation and performed 10–30 tasks. To evaluate the usability, the users assigned a score from 1 to 5. Table 3 indicates that the score for each domain was approximately 3.4 and 3.6. These results suggest that even though it was built by developers with little experience in dialog models, the developed dialog system provided high-quality performance. In addition, the qualitative evaluation shows that the conversation success rate is not strongly related to the satisfaction level. Even where the conversation success rate was low, satisfaction was relatively high for the domains that are most likely to help people in real life.

Comparing the cases with and without HAS, the model with HAS shows some improvement in success rate, because testers succeeded in conversations using different argument styles. More specifically, if the model without HAS does not recognize a partial date representation (such as “next week”), the system responds with a date request. The user can then try the partial expression once more or try another expression (such as “Monday, next week” or “May fourteen”) that the model can recognize. The improvement in success rate was not significant because the latter case was more frequent than expected. On the other hand, the model with HAS shows a significant improvement in satisfaction and is especially effective in the schedule domain. Because the model with HAS was able to recognize arguments given in various expressions, the number of conversation turns was reduced. These results indicate that the model with HAS does not substantially increase the success rate but is very effective in improving user satisfaction.

6.2. Comparison with Other Frameworks

Unlike in other studies, it is difficult to directly compare the performance of dialog frameworks. Therefore, we compare the characteristics of each framework. Table 4 compares the characteristics of RavenClaw [46], DialogStudio [47], Owlspeak [50], Rasa [51], OpenDial [52], and PyDial [53], which are typical existing dialog frameworks, with those of the proposed model.

RavenClaw creates a dialog plan by representing the knowledge as a task tree. Then, the dialog engine loads the tasks to be executed onto the dialog stack and calls the routines in sequence. This structure focuses on separating the objective of a dialog into several tasks and managing it in a tree structure. On the other hand, DialogStudio focuses on developing a dialog system based on a corpus because it uses the example-based DM. DialogStudio searches for the most similar example for a given user input. Therefore, it is possible to develop a high-quality dialog system if the example DB built using the tagged corpus is sufficient. On the other hand, if the tagged corpus is insufficient, the quality of the dialog system deteriorates significantly. In addition, it is difficult to predict the results for a particular input because the search results for an input are determined by similarity. Moreover, it is difficult for the developer to precisely control the dialog system; despite the addition of examples that output the desired results, there is no guarantee for them to be selected. Owlspeak is a framework that provides IS-based DM, MVP design pattern, and ontology representation. Owlspeak also employs outstanding development scalability by using universal plug and play (UPNP). IS-based DM provides more generalized domain knowledge and strategy compared to FS-based DM. But FS-based DM with module router functions similarly to IS-based DM. Rasa is a framework focused on developing machine learning-based NLU and machine learning-DM. In particular, Rasa can improve performance at low costs as it requires fewer corpuses for performing initial learning through the application of a bootstrapping technique. In addition, Rasa supports a story graph, which visualizes the path that dialog management can work in the learning process, using a directed graph. This feature can help users identify the characteristics of dialog management. 
However, Rasa has a drawback similar to that of DialogStudio in that it supports only the machine learning approach, and it does not support NLG. OpenDial is a framework in which the dialog system is implemented using an “if ... then ... else ...” approach. In OpenDial, all operations of the dialog system are specified as rules consisting of conditional statements and actions with associated probabilities; these rules are expressed in XML format. OpenDial provides a function to automatically generate rules from a dialog corpus using supervised learning and reinforcement learning. Because the learned model takes the form of rules, it remains interpretable even though it is data-driven. On the other hand, OpenDial becomes difficult to manage when developing a multi-domain dialog system that supports various functions, because the number of rules grows large. PyDial is a framework that selectively supports rule-based models and machine learning-based models for each component. In particular, PyDial has the advantage of providing relatively recent DM, NLU, and NLG models. It is a high-quality framework that also supports simulation and evaluation functions.
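
The controllability issue noted above for example-based DM can be illustrated with a minimal sketch. This is not DialogStudio's actual implementation: the token-overlap (Jaccard) similarity, the function names, and the toy example DB are all assumptions made for illustration. The sketch shows that an example added by the developer is not necessarily the one retrieved for a related input.

```python
# Toy example-based dialog manager (hypothetical, not DialogStudio's code):
# the action is taken from the stored example most similar to the input.

def jaccard(a: str, b: str) -> float:
    """Token-overlap similarity between two utterances, in [0.0, 1.0]."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def select_action(user_input: str, example_db: list) -> str:
    """Return the action attached to the most similar stored example."""
    best = max(example_db, key=lambda ex: jaccard(user_input, ex["utterance"]))
    return best["action"]


# Existing example DB (assumed data).
example_db = [
    {"utterance": "what is the temperature in the room",
     "action": "report_temperature"},
]

# The developer adds an example hoping that "cold" inputs turn the heater on.
example_db.append({"utterance": "i feel cold", "action": "heater_on"})

# The older example shares more tokens with this input ("the", "room", "is"),
# so it is selected instead of the newly added one.
chosen = select_action("i think the room is cold", example_db)
exact = select_action("i feel cold", example_db)
```

Under these assumptions, `exact` resolves to the new example, whereas `chosen` still resolves to the older one; the developer can only influence the outcome indirectly, by adding further examples or changing the similarity measure.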

Because the proposed dialog framework is built on FS-based DM, a dialog system can be developed in a manner similar to, but more intuitive than, that of other frameworks. In addition, because the rule-based and machine learning-based approaches can be used simultaneously for NLU, new tasks can be added easily without a significant amount of additional data while maintaining high performance. Further, the proposed framework provides differentiated functions through hierarchical arguments and a module router. Hierarchical arguments can improve the quality of the dialog system; they help the system respond intelligently to various argument representations that are otherwise difficult to handle using existing dialog frameworks. When a dialog system must be developed directly, existing frameworks have to be modified at the source code level to add special functions or realize the desired dialog scenarios, which makes the development process more difficult. The module router allows the developer to control the processing of the dialog system indirectly, without modifying the source code of the dialog framework, thus making it easier to add or manage additional functions. Therefore, this feature considerably reduces the complexity of implementation in our dialog system, resulting in improved productivity.
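
One possible reading of how a module router can steer FS-based DM without changes to the framework source is sketched below. The class names (`ModuleRouter`, `FiniteStateDM`), the handler signature, and the state and intent labels are hypothetical and are not taken from the proposed framework; the sketch only illustrates the general idea of developer-registered modules overriding a fixed transition table.

```python
from typing import Callable, Dict, Optional, Tuple

# A handler inspects the intent and slots and may return the next state,
# or None to fall back to the default transition table. (Assumed interface.)
Handler = Callable[[str, dict], Optional[str]]


class ModuleRouter:
    """Dispatches to developer-registered modules, keyed by dialog state."""

    def __init__(self) -> None:
        self._modules: Dict[str, Handler] = {}

    def register(self, state: str, handler: Handler) -> None:
        self._modules[state] = handler

    def route(self, state: str, intent: str, slots: dict) -> Optional[str]:
        handler = self._modules.get(state)
        return handler(intent, slots) if handler else None


class FiniteStateDM:
    """Finite-state dialog manager; stands in for unmodified framework code."""

    def __init__(self, transitions: Dict[Tuple[str, str], str],
                 router: ModuleRouter) -> None:
        self.transitions = transitions
        self.router = router
        self.state = "start"

    def step(self, intent: str, slots: dict) -> str:
        # A registered module takes precedence over the transition table.
        override = self.router.route(self.state, intent, slots)
        if override is not None:
            self.state = override
        else:
            # Unknown (state, intent) pairs leave the state unchanged.
            self.state = self.transitions.get((self.state, intent), self.state)
        return self.state


transitions = {
    ("start", "set_heater"): "ask_time",
    ("ask_time", "inform_time"): "confirm",
}
router = ModuleRouter()


def skip_question_if_time_known(intent: str, slots: dict) -> Optional[str]:
    """Developer module: skip the time question when a time is already given."""
    if intent == "set_heater" and "time" in slots:
        return "confirm"
    return None


router.register("start", skip_question_if_time_known)

dm_with_time = FiniteStateDM(transitions, router)
state_a = dm_with_time.step("set_heater", {"time": "18:30"})

dm_without_time = FiniteStateDM(transitions, router)
state_b = dm_without_time.step("set_heater", {})
state_c = dm_without_time.step("inform_time", {"time": "19:00"})
```

In this sketch, the special behavior lives entirely in the registered function, and `FiniteStateDM` itself is never edited; a dialog that already carries the time moves straight to confirmation, while other dialogs follow the default transitions.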