An Energy-Efficient Compressive Image Coding for Green Internet of Things (IoT)

Abstract

1. Introduction

1.1. Motivation and Objective

1.2. Related Work

1.3. Main Contribution

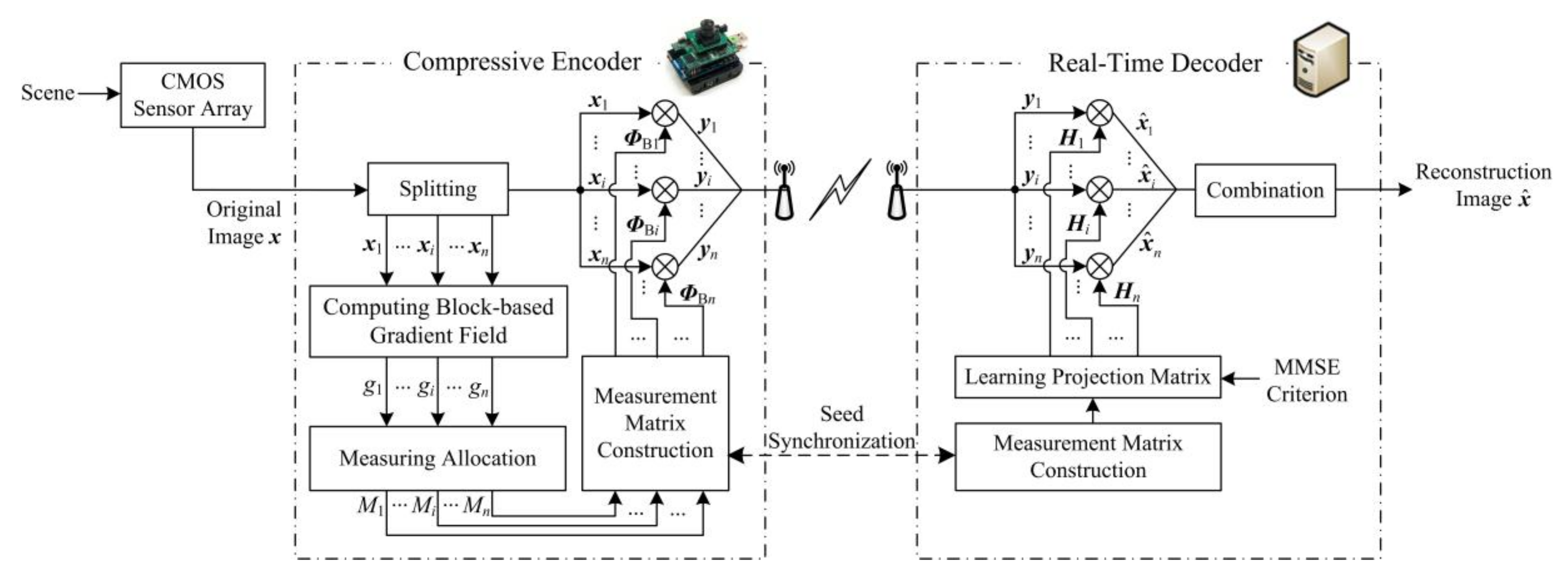

2. Proposed Compressive Image Coding

2.1. Framework Overview

2.2. Compressive Encoder

2.3. Real-Time Decoder

3. Experimental Results

3.1. Encoder Evaluation

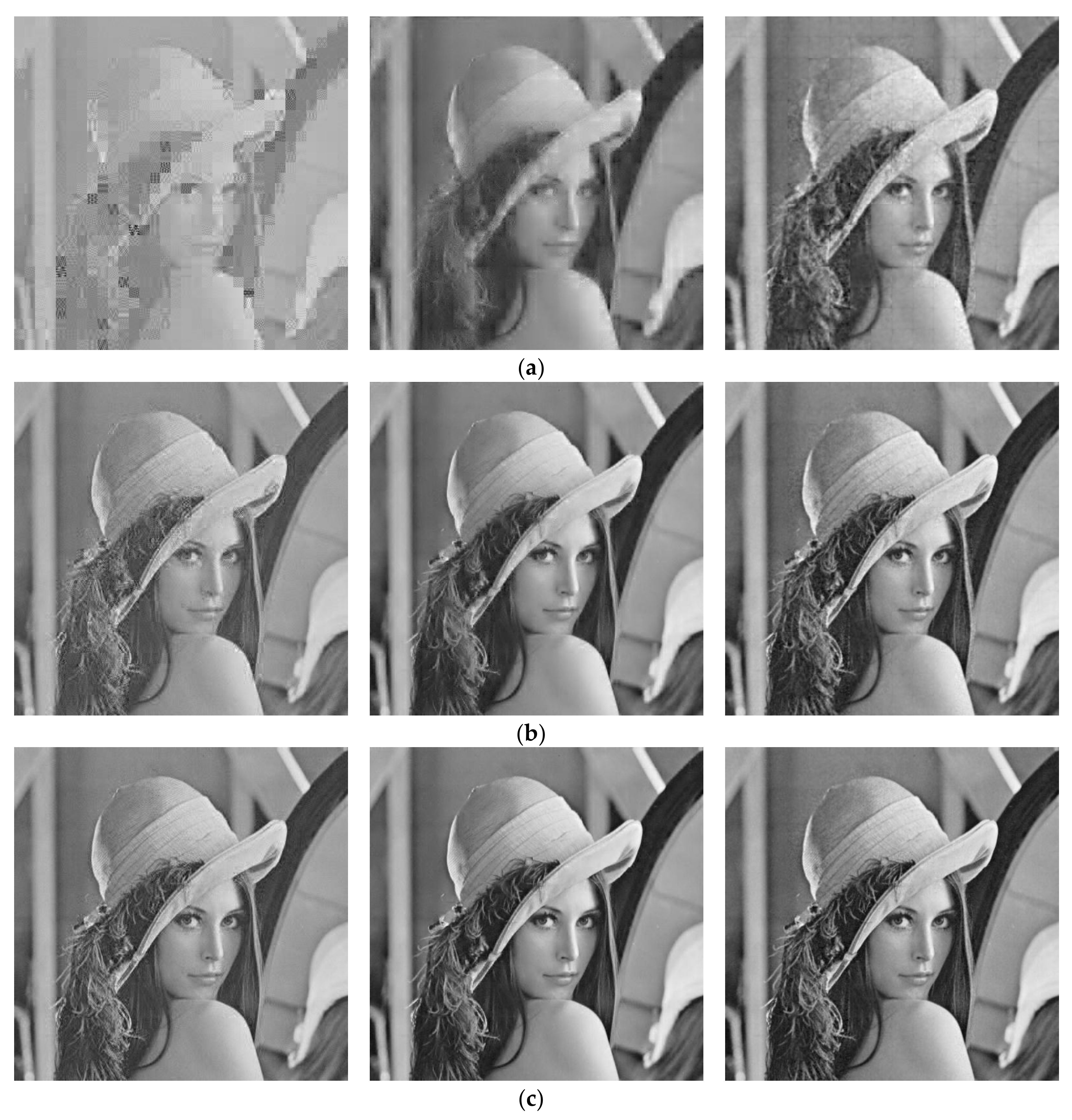

3.2. Decoder Evaluation

3.3. Overall Evaluation

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Sequence | Proposed, S = 0.1 (Time/s) | Proposed, S = 0.3 (Time/s) | Proposed, S = 0.5 (Time/s) | H.264/AVC (Time/s) | HEVC (Time/s) | DISCOVER (Time/s) |
|---|---|---|---|---|---|---|
| Foreman | 3.91 | 6.54 | 9.47 | 389.41 | 2306.60 | 36.40 |
| Mobile | 3.93 | 6.49 | 9.44 | 296.32 | 2880.12 | 60.08 |
| Highway | 3.94 | 6.48 | 9.84 | 372.60 | 1550.31 | 31.21 |
| Container | 3.97 | 6.51 | 9.79 | 292.83 | 1301.99 | 40.65 |

| Algorithm | S | Lenna PSNR/dB | Lenna Time/s | Barbara PSNR/dB | Barbara Time/s | Peppers PSNR/dB | Peppers Time/s | Goldhill PSNR/dB | Goldhill Time/s | Mandrill PSNR/dB | Mandrill Time/s |
|---|---|---|---|---|---|---|---|---|---|---|---|
| OMP | 0.1 | 18.89 | 2.80 | 16.64 | 2.79 | 17.28 | 2.80 | 20.49 | 2.81 | 15.71 | 2.81 |
| NESTA | 0.1 | 21.14 | 198.47 | 18.90 | 174.16 | 20.25 | 197.28 | 21.11 | 115.32 | 18.04 | 159.45 |
| Proposed | 0.1 | 27.41 | 0.88 | 21.78 | 0.88 | 26.79 | 0.88 | 26.30 | 0.89 | 19.76 | 0.88 |
| OMP | 0.3 | 27.35 | 4.29 | 24.02 | 4.22 | 24.35 | 5.40 | 23.86 | 4.34 | 17.64 | 4.34 |
| NESTA | 0.3 | 27.28 | 134.34 | 22.99 | 170.11 | 26.86 | 126.01 | 26.32 | 125.42 | 20.46 | 117.25 |
| Proposed | 0.3 | 32.67 | 1.77 | 24.68 | 1.66 | 31.36 | 1.70 | 30.40 | 1.68 | 22.91 | 1.65 |
| OMP | 0.5 | 31.64 | 5.97 | 28.35 | 5.97 | 31.11 | 6.08 | 29.19 | 6.07 | 21.04 | 5.97 |
| NESTA | 0.5 | 30.83 | 120.89 | 25.52 | 100.81 | 31.16 | 117.19 | 29.63 | 110.17 | 22.77 | 196.12 |
| Proposed | 0.5 | 36.04 | 2.93 | 27.24 | 2.82 | 34.11 | 2.93 | 33.40 | 2.88 | 25.62 | 2.75 |
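
The PSNR/dB columns above report peak signal-to-noise ratio between an original and a reconstructed image. As a reference point only, the standard definition can be sketched as follows; the function name `psnr`, the flat-list image representation, and the 8-bit peak value of 255 are illustrative assumptions and are not taken from the authors' implementation.

```python
import math


def psnr(reference, reconstructed, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equal-sized images.

    Images are flat sequences of pixel intensities. `peak` is the maximum
    possible intensity (255 for 8-bit images, which is an assumption here).
    """
    if len(reference) != len(reconstructed) or not reference:
        raise ValueError("images must be non-empty and the same size")
    # Mean squared error over all pixels.
    mse = sum((a - b) ** 2 for a, b in zip(reference, reconstructed)) / len(reference)
    if mse == 0:
        return math.inf  # identical images have unbounded PSNR
    return 10.0 * math.log10(peak ** 2 / mse)


# Hypothetical 4-pixel example, not data from the tables above.
original = [52, 55, 61, 59]
decoded = [54, 55, 60, 57]
print(f"{psnr(original, decoded):.2f} dB")
```

A higher value means the reconstruction is closer to the original, which is how the per-image PSNR entries in the table should be read.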

| Algorithm | S | Lenna | Barbara | Peppers | Goldhill | Mandrill | Avg. |
|---|---|---|---|---|---|---|---|
| OMP | 0.1 | 0.5953 | 0.4823 | 0.5589 | 0.5314 | 0.3312 | 0.4998 |
| NESTA | 0.1 | 0.7211 | 0.6104 | 0.7385 | 0.6149 | 0.4322 | 0.6234 |
| Proposed | 0.1 | 0.8249 | 0.7048 | 0.8300 | 0.7638 | 0.5876 | 0.7422 |
| OMP | 0.3 | 0.8850 | 0.8482 | 0.8826 | 0.8207 | 0.6334 | 0.8140 |
| NESTA | 0.3 | 0.9036 | 0.8256 | 0.9139 | 0.9429 | 0.9596 | 0.9091 |
| Proposed | 0.3 | 0.9409 | 0.8510 | 0.9318 | 0.9147 | 0.8250 | 0.8927 |
| OMP | 0.5 | 0.9515 | 0.9367 | 0.9412 | 0.9164 | 0.7946 | 0.9081 |
| NESTA | 0.5 | 0.9581 | 0.9147 | 0.9596 | 0.9352 | 0.8548 | 0.9245 |
| Proposed | 0.5 | 0.9712 | 0.9185 | 0.9610 | 0.9595 | 0.9148 | 0.9450 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, R.; Duan, X.; Li, X.; He, W.; Li, Y. An Energy-Efficient Compressive Image Coding for Green Internet of Things (IoT). Sensors 2018, 18, 1231. https://doi.org/10.3390/s18041231