A High Throughput Integrated Hyperspectral Imaging and 3D Measurement System

Abstract

1. Introduction

2. Background and Prototype

2.1. Hyperspectral Measurement

2.1.1. Principle of Concave Grating Spectrometer

2.1.2. Snapshot Imaging

2.2. 3D Measurement

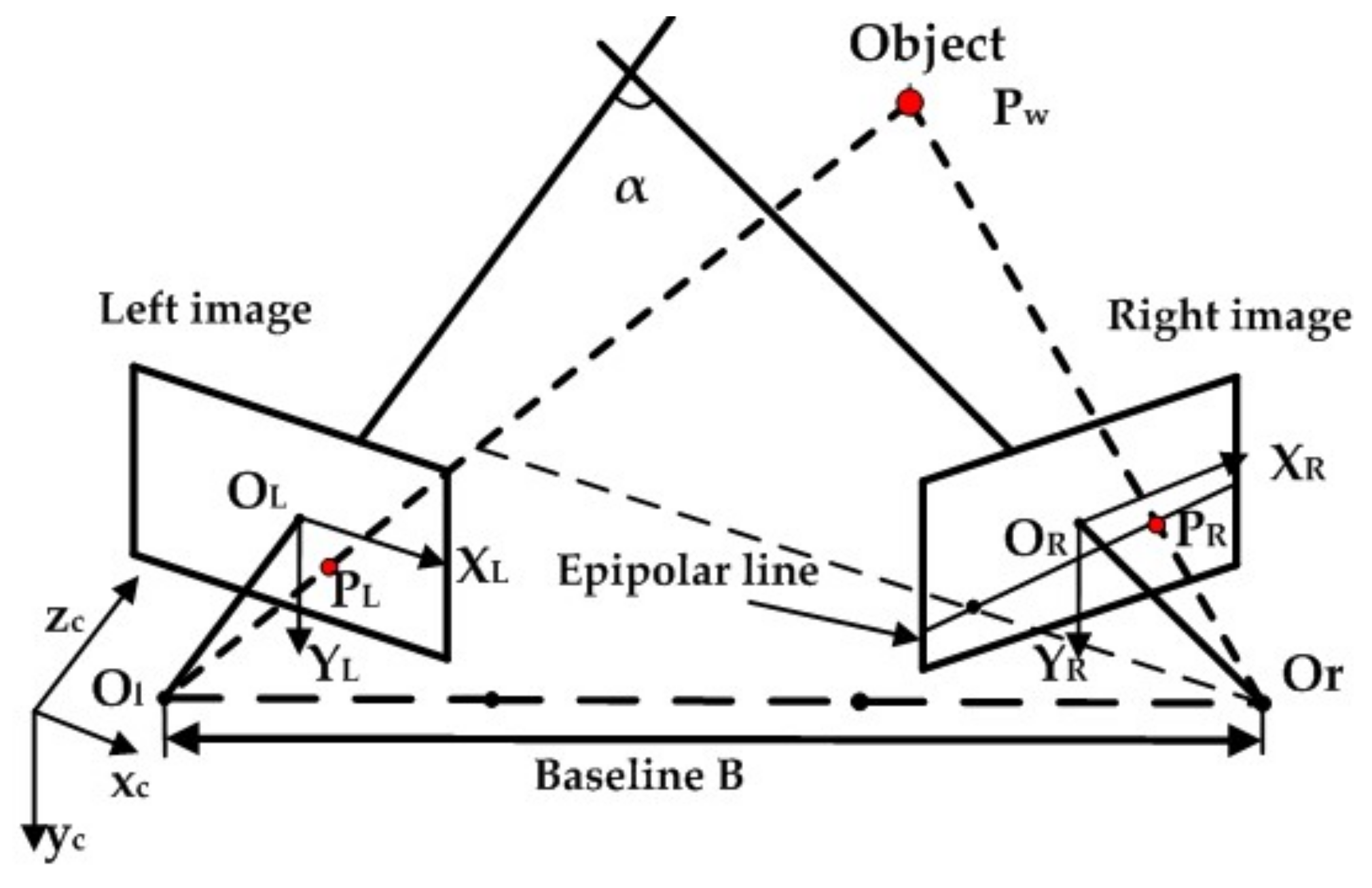

2.2.1. Principle of Binocular Stereo Vision

2.2.2. Stereo Matching

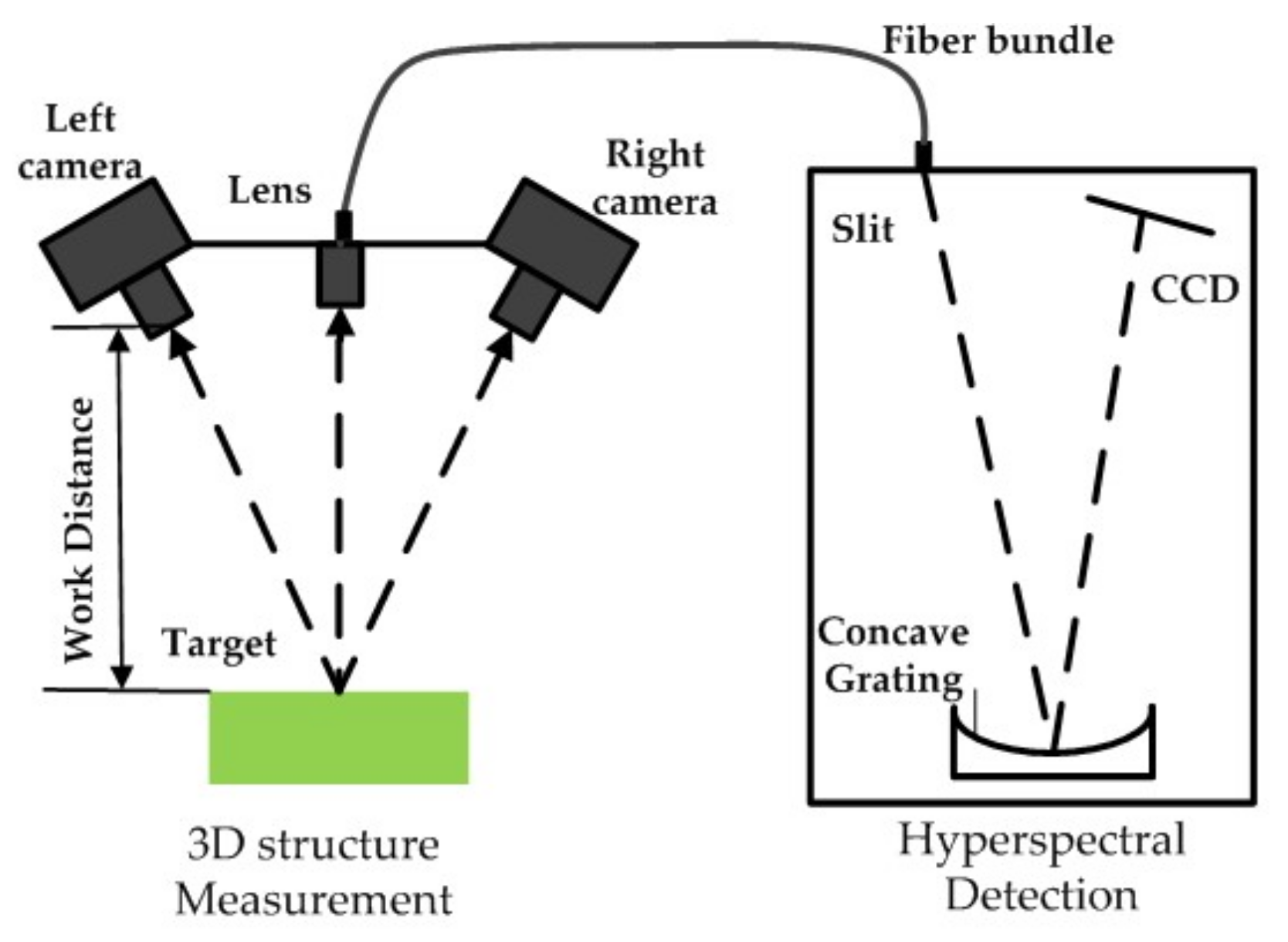

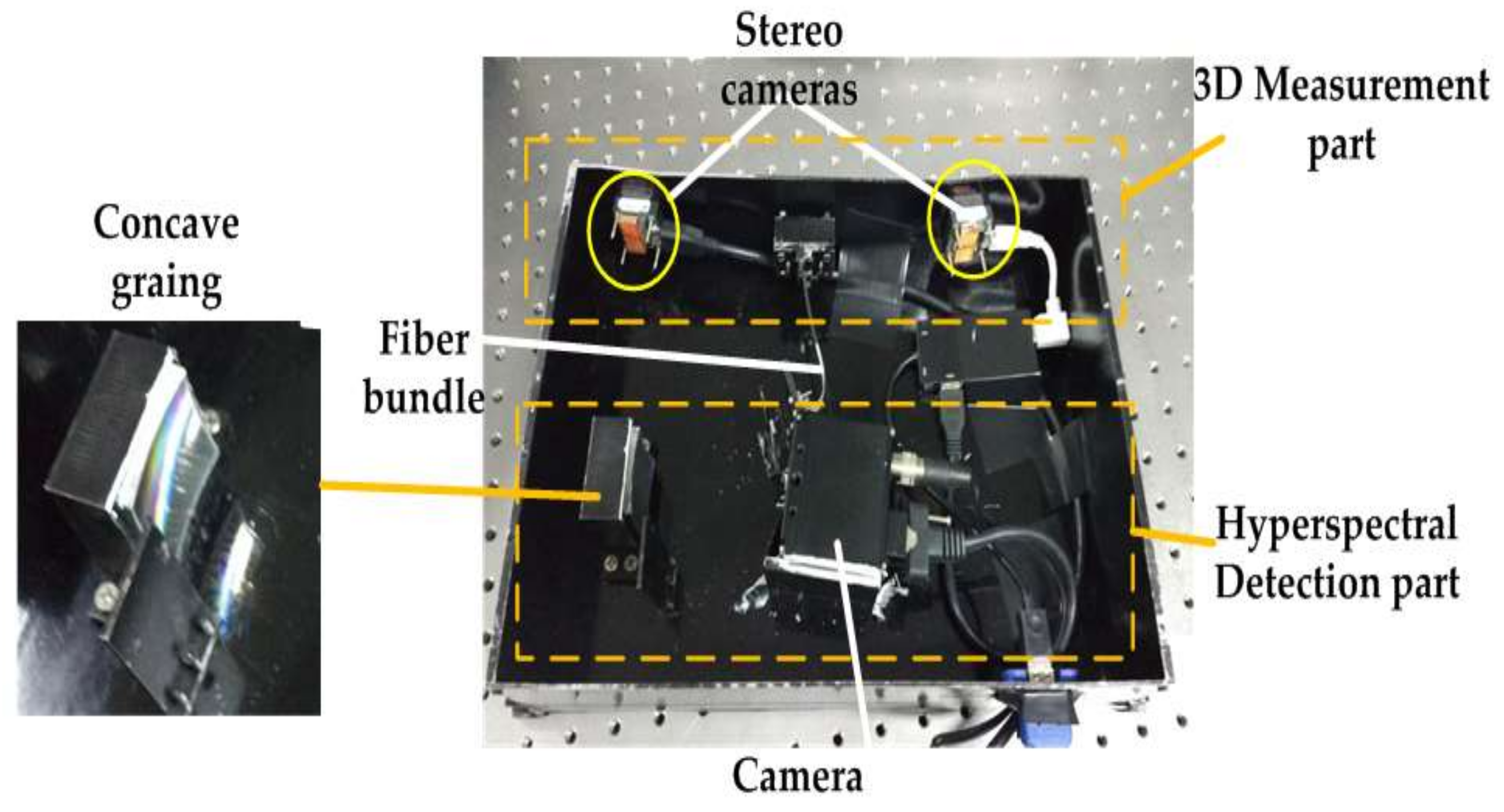

2.3. Prototype Design

2.4. Prototype Calibration

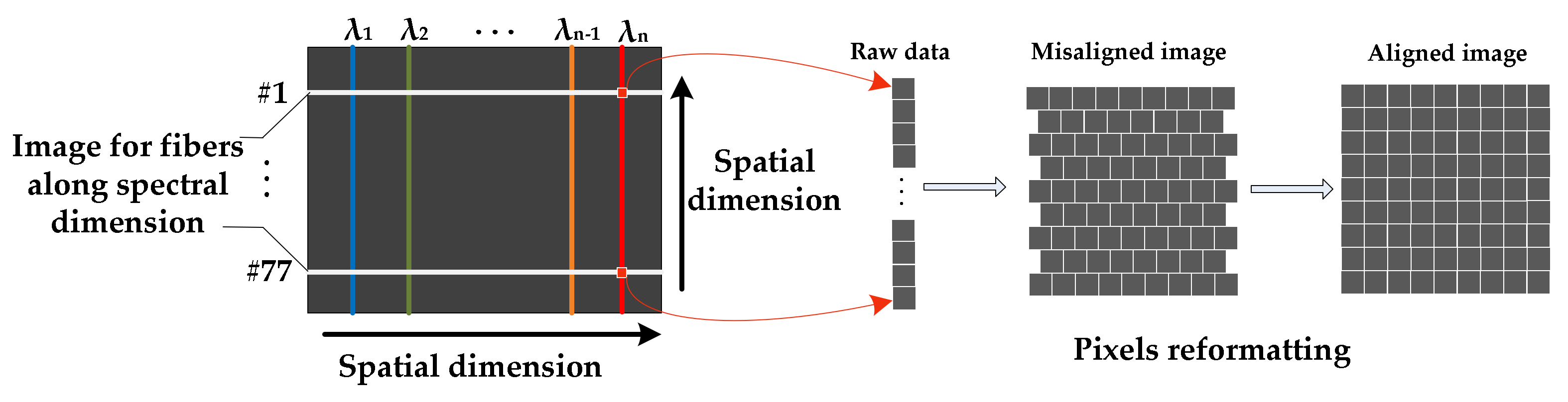

2.4.1. Fiber Calibration

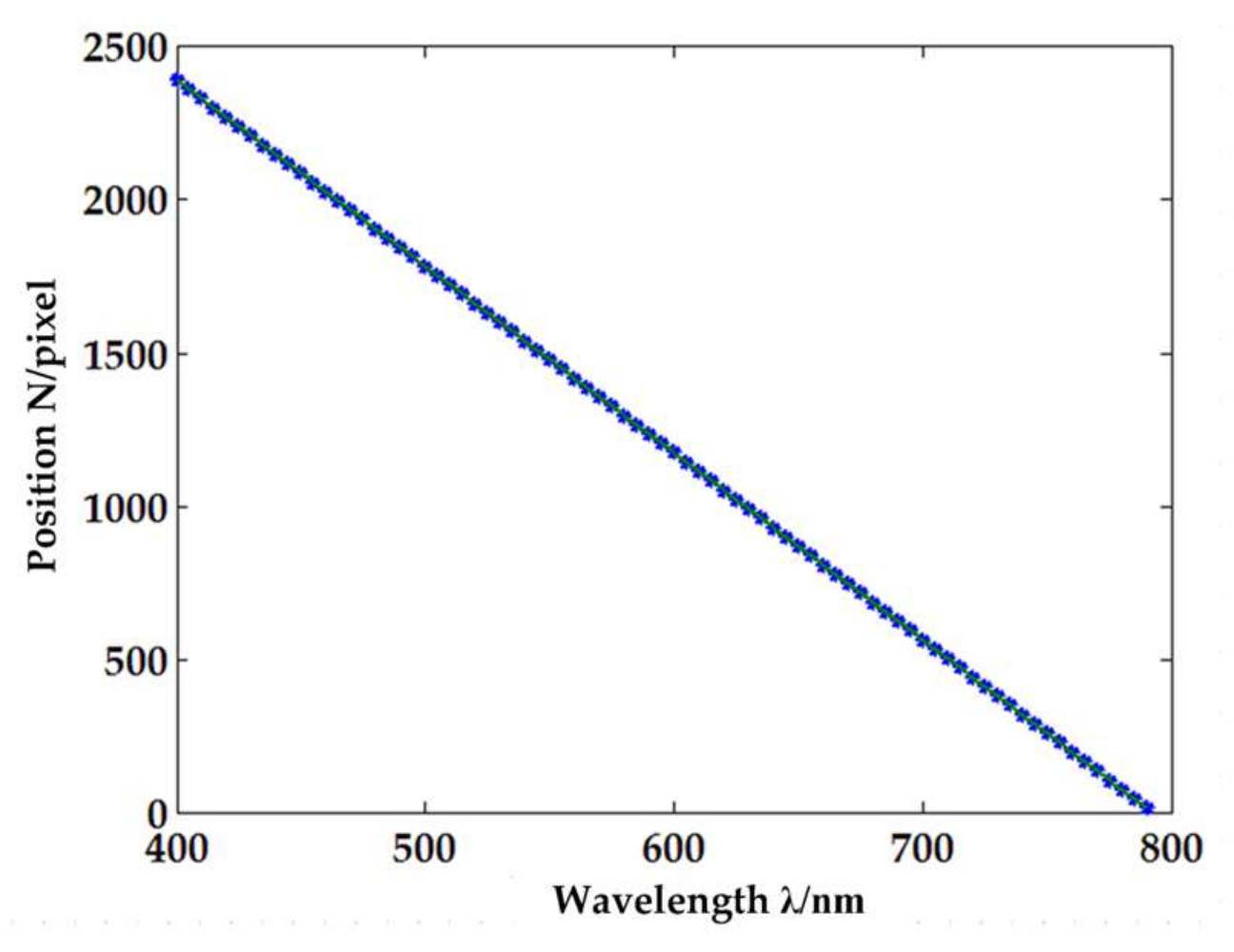

2.4.2. Spectral Calibration

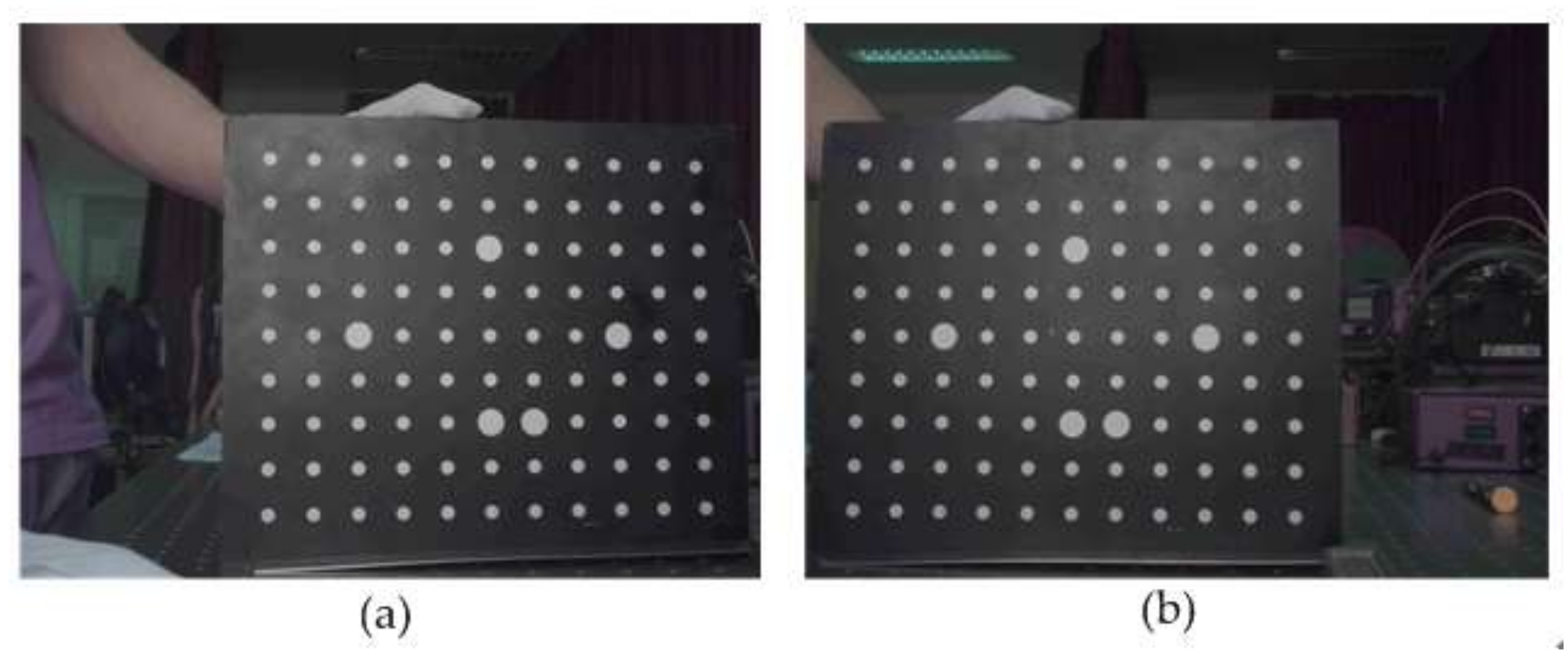

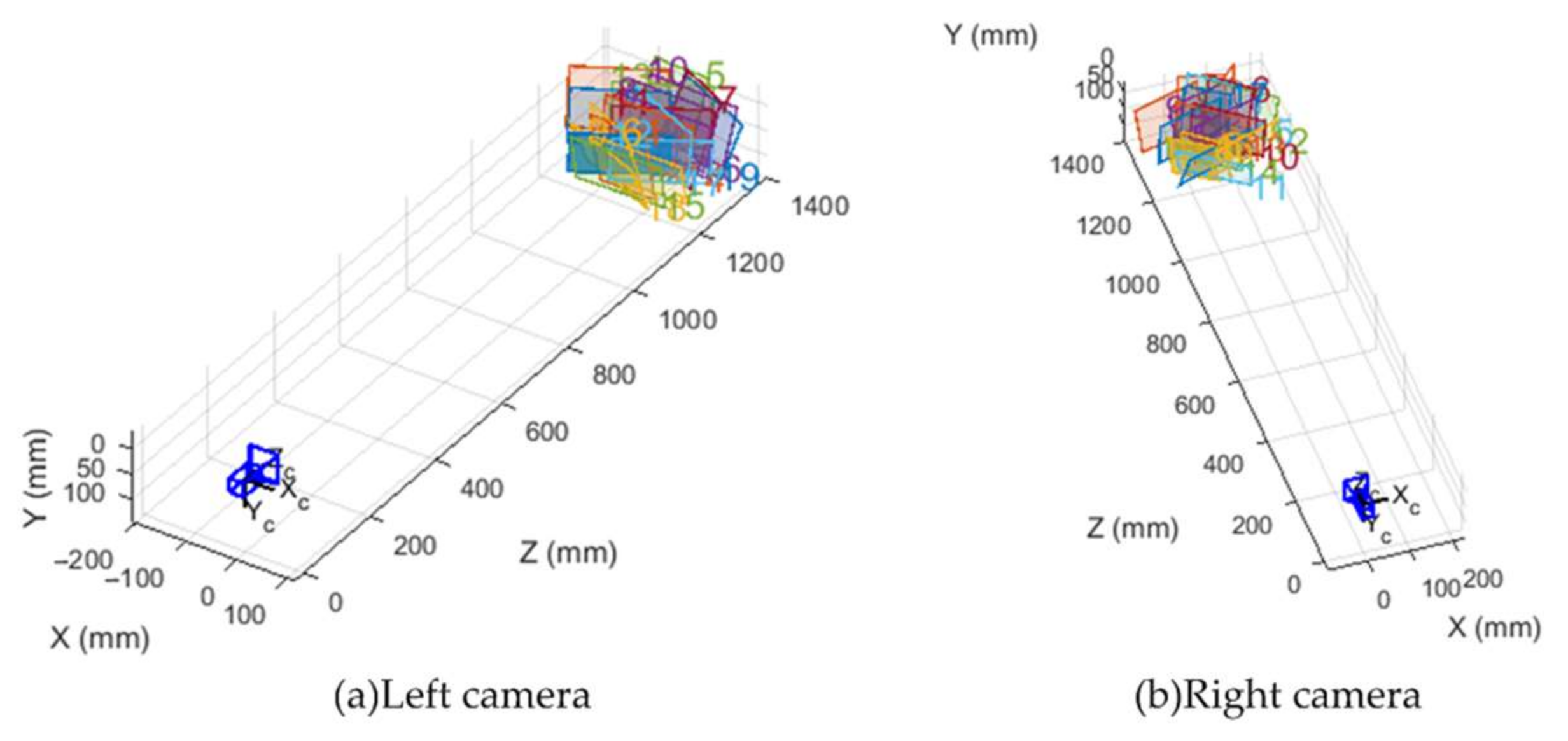

2.4.3. Stereo Camera Calibration

3. Experiment and Results

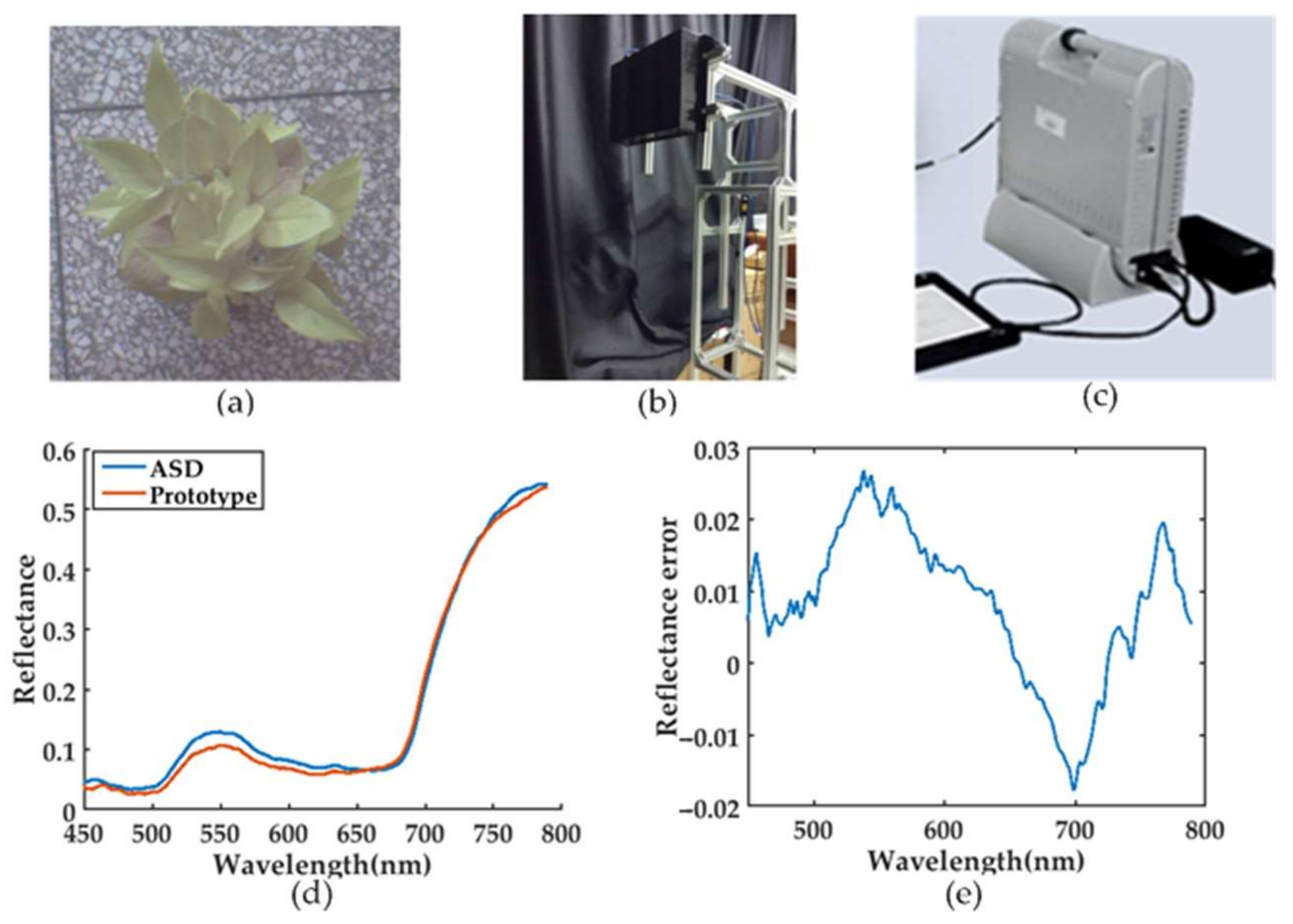

3.1. Accuracy Evaluation

3.1.1. Wavelength Accuracy

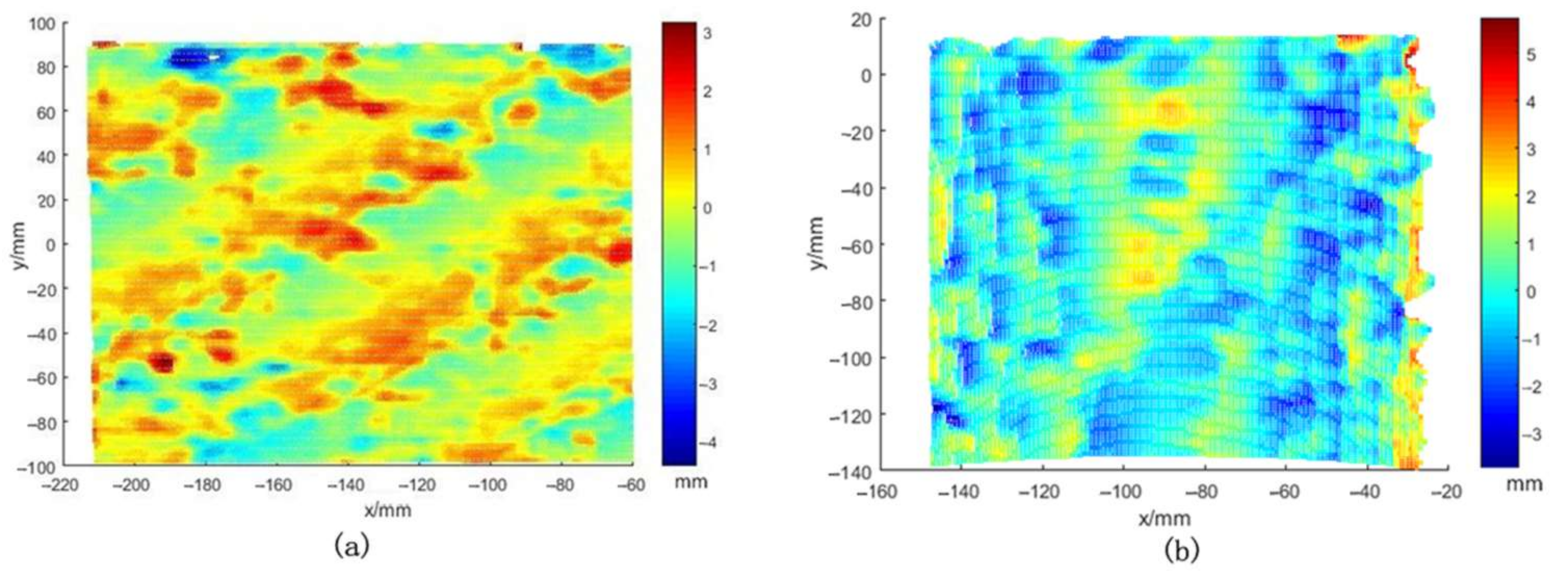

3.1.2. Depth Accuracy

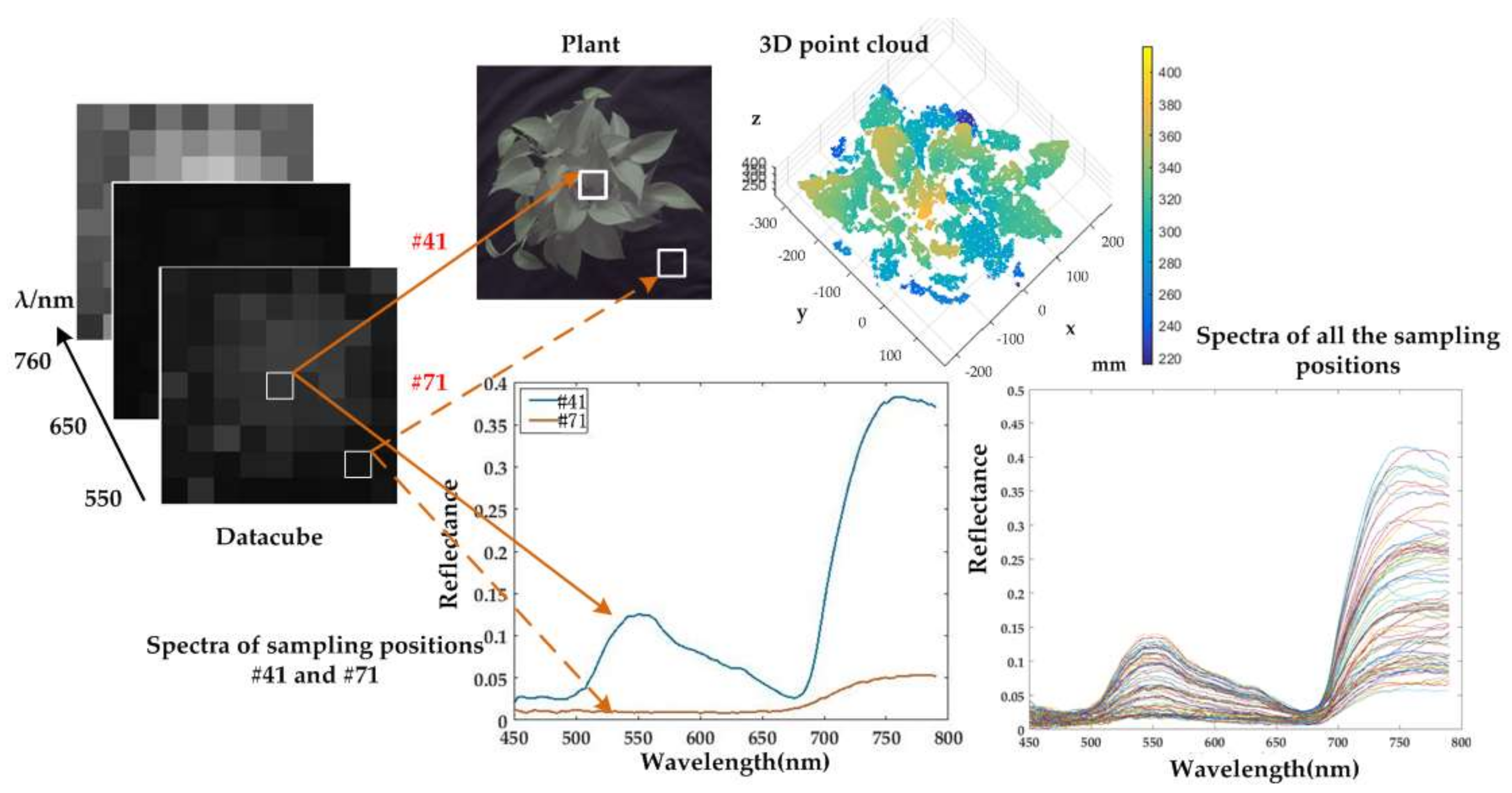

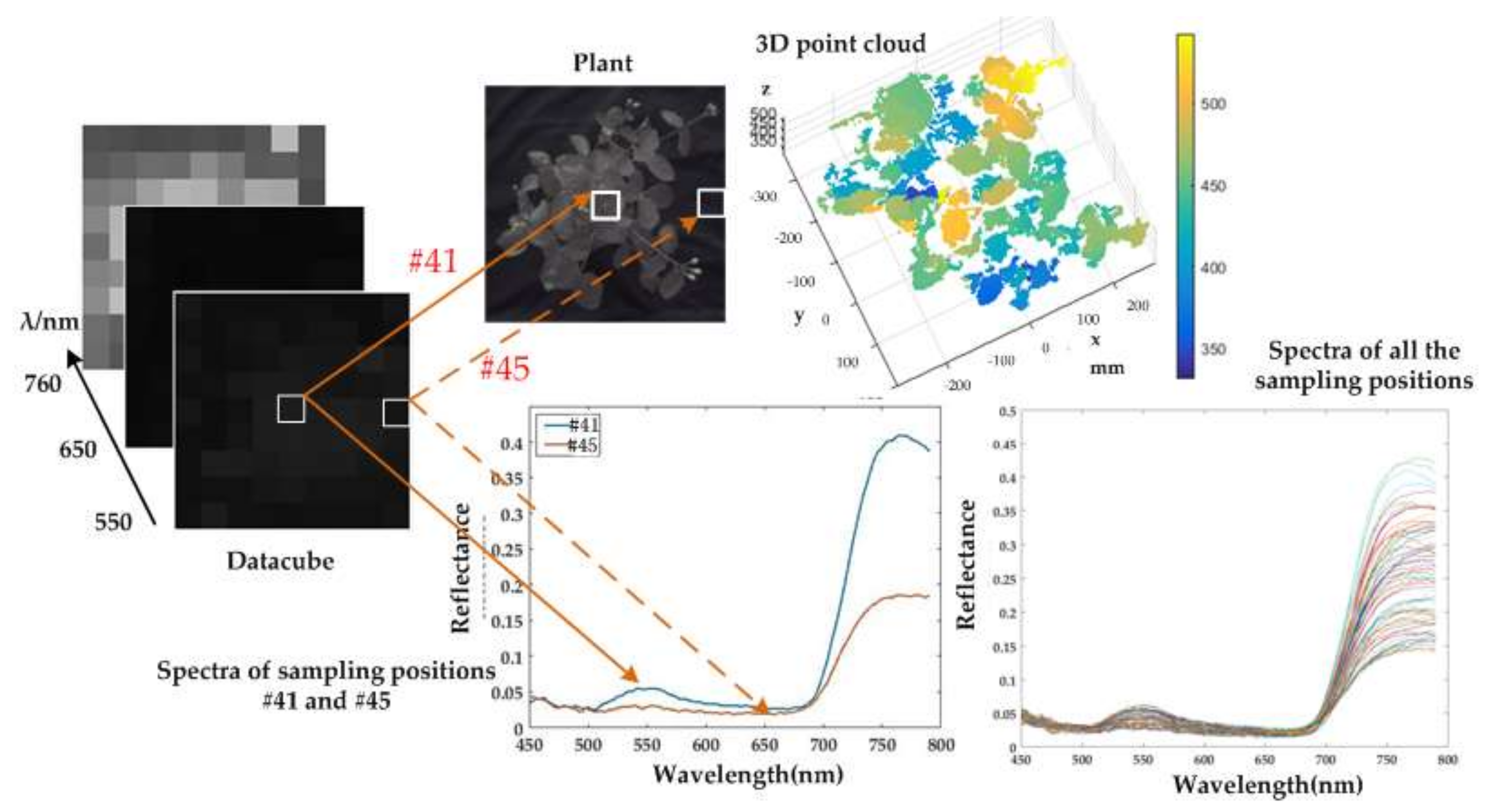

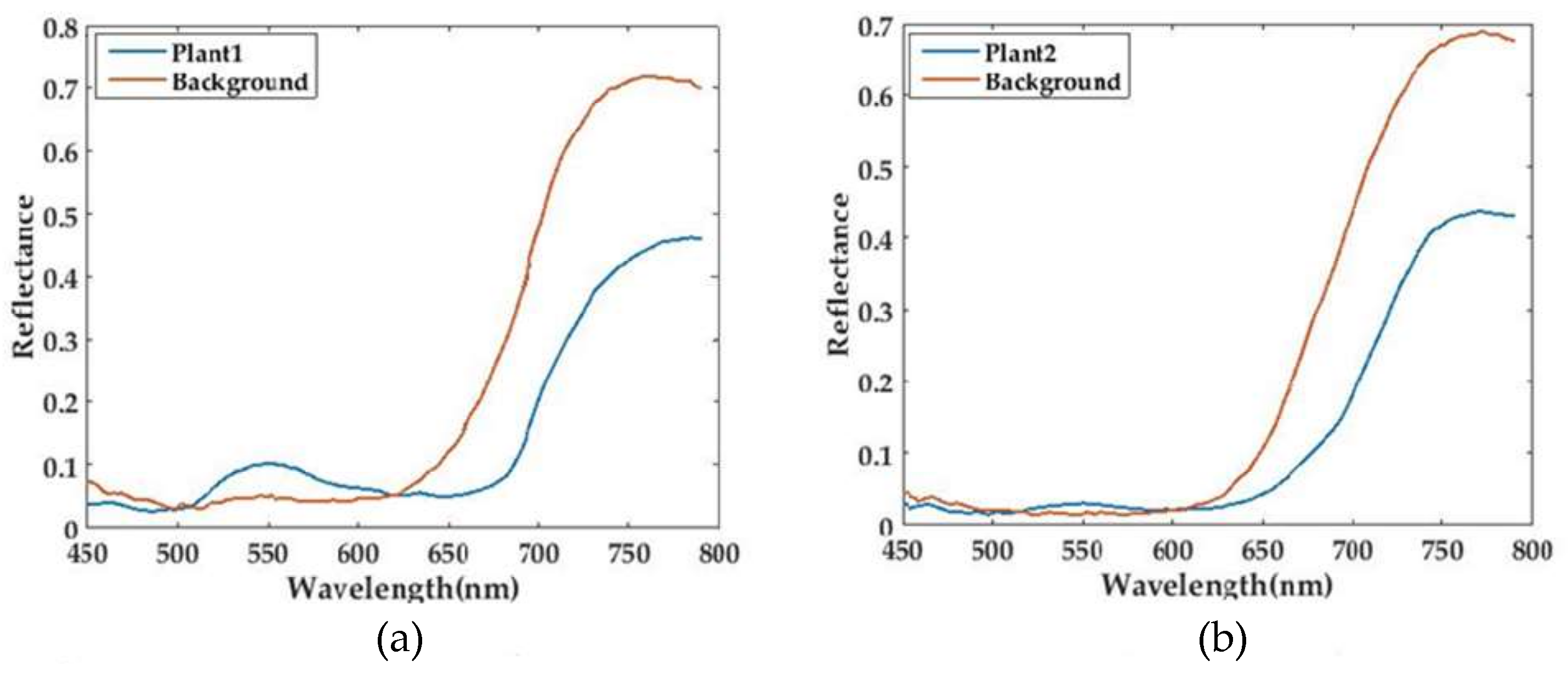

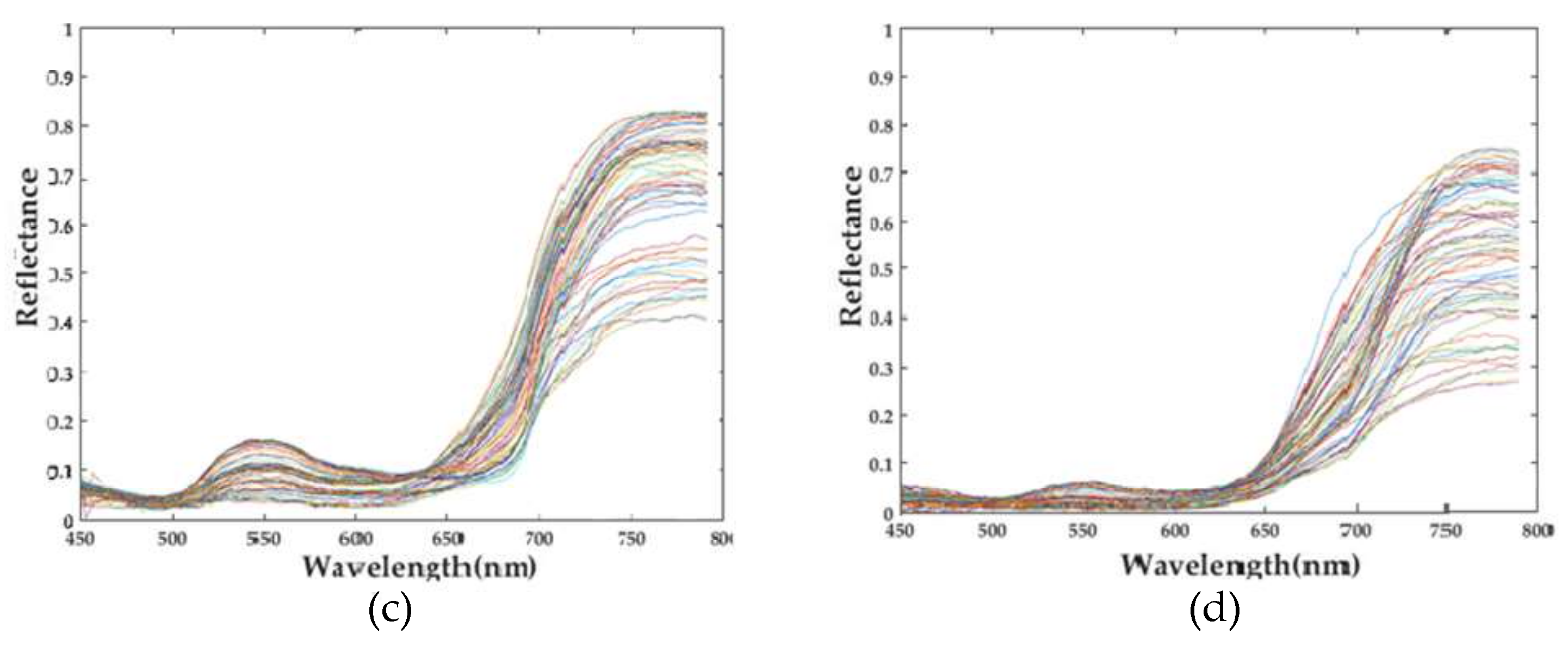

3.2. Vegetation Experiment

4. Discussion

4.1. Application Prospects

4.2. Limitations of This Study

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Wavelength (nm) | Resolution (nm) |
|---|---|
| 450 | 4.6 |
| 500 | 3.4 |
| 600 | 3.1 |
| 700 | 2.8 |
| 790 | 2.6 |
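
The table above gives the spectral resolution at only five wavelengths. As a rough illustration of how intermediate values might be estimated, the sketch below interpolates linearly between the tabulated points. Linear interpolation is an assumption made here for illustration, not a model stated in the source, and `resolution_at` is a hypothetical helper name.

```python
# (wavelength in nm, spectral resolution in nm), copied from the table above.
RESOLUTION_TABLE = [(450, 4.6), (500, 3.4), (600, 3.1), (700, 2.8), (790, 2.6)]


def resolution_at(wavelength_nm):
    """Estimate resolution by linear interpolation (an assumed model)."""
    first, last = RESOLUTION_TABLE[0][0], RESOLUTION_TABLE[-1][0]
    if not first <= wavelength_nm <= last:
        raise ValueError("wavelength outside the tabulated 450-790 nm range")
    # Walk through consecutive pairs of table rows to find the enclosing segment.
    for (w0, r0), (w1, r1) in zip(RESOLUTION_TABLE, RESOLUTION_TABLE[1:]):
        if w0 <= wavelength_nm <= w1:
            t = (wavelength_nm - w0) / (w1 - w0)
            return r0 + t * (r1 - r0)
```

For example, `resolution_at(550)` falls halfway between the 500 nm and 600 nm rows. The true resolution curve of a grating spectrometer need not be piecewise linear, so values obtained this way are only indicative.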

| Physical Meaning | Parameter | Camera | Values |
|---|---|---|---|
| Focal length along the two image axes | | l | (3683.05, 3682.42) |
| | | r | (3689.58, 3689.36) |
| Principal point coordinates | | l | (894.79, 934.69) |
| | | r | (917.71, 902.49) |
| Radial distortion parameters | | l | (0.0514, 0.1951) |
| | | r | (0.0734, 0.5032) |
| Tangential distortion parameters | | l | (−3.124 × 10⁻⁴, −3.105 × 10⁻³) |
| | | r | (−5.721 × 10⁻⁴, 2.883 × 10⁻⁴) |
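
To show how parameters of this kind are typically used, the following sketch projects a 3D point in the camera frame to pixel coordinates with a pinhole model plus two radial and two tangential distortion terms (the convention used by OpenCV, among others). Several things are assumed rather than taken from the source: that "l" denotes the left camera, that focal lengths and principal point are in pixels, that the distortion pairs are ordered (k1, k2) and (p1, p2), and that the first principal point value reads 894.79. The function name `project` is hypothetical.

```python
# Left-camera ("l") values copied from the calibration table above.
FX, FY = 3683.05, 3682.42      # focal lengths, assumed to be in pixels
CX, CY = 894.79, 934.69        # principal point, assumed to be in pixels
K1, K2 = 0.0514, 0.1951        # radial terms, assumed order (k1, k2)
P1, P2 = -3.124e-4, -3.105e-3  # tangential terms, assumed order (p1, p2)


def project(x_cam, y_cam, z_cam):
    """Project a camera-frame 3D point to distorted pixel coordinates (u, v)."""
    # Normalised image coordinates of the ideal pinhole projection.
    x = x_cam / z_cam
    y = y_cam / z_cam
    r2 = x * x + y * y
    # Radial distortion factor with two coefficients.
    radial = 1.0 + K1 * r2 + K2 * r2 * r2
    # Radial plus tangential distortion.
    x_d = x * radial + 2.0 * P1 * x * y + P2 * (r2 + 2.0 * x * x)
    y_d = y * radial + P1 * (r2 + 2.0 * y * y) + 2.0 * P2 * x * y
    # Map to pixels with the focal lengths and principal point.
    return FX * x_d + CX, FY * y_d + CY
```

A point on the optical axis, such as `project(0.0, 0.0, 1200.0)`, maps to the principal point because every distortion term vanishes at the image centre. Whether the source used exactly this distortion model is not stated in the table, so treat the sketch as one common interpretation.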

| Parameter | Value |
|---|---|
| Spectral range | 450–790 nm |
| Spectral resolution | 4.6–2.8 nm @ 450–570 nm; 2.8–2.6 nm @ 570–790 nm |
| Number of fibers | 77 |
| Number of spectral bands | 341 |
| FOV | 28° |
| Depth accuracy | ±3.15 mm @ 1200 mm |
| Measuring speed | 5 frames/s |
| Working distance | 1000–1400 mm |
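
A few derived quantities follow from the specifications above by simple arithmetic. The sketch below computes them under two assumptions that the table does not state: that the 341 bands are evenly spaced and include both ends of the 450–790 nm range, and that each of the 77 fibers yields one spectrum per frame.

```python
# Values copied from the specification table above.
LAMBDA_MIN_NM = 450.0
LAMBDA_MAX_NM = 790.0
NUM_BANDS = 341
NUM_FIBERS = 77
FRAMES_PER_SECOND = 5

# Assumption: evenly spaced band centres that include both end points.
sampling_interval_nm = (LAMBDA_MAX_NM - LAMBDA_MIN_NM) / (NUM_BANDS - 1)

# Assumption: one spectrum per fiber per frame.
samples_per_frame = NUM_FIBERS * NUM_BANDS
spectra_per_second = NUM_FIBERS * FRAMES_PER_SECOND

print(sampling_interval_nm)  # 1.0
print(samples_per_frame)     # 26257
print(spectra_per_second)    # 385
```

Under these assumptions the band spacing is 1 nm, finer than the 2.6–4.6 nm optical resolution listed in the table, so adjacent bands would not be spectrally independent.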

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhao, H.; Xu, L.; Shi, S.; Jiang, H.; Chen, D. A High Throughput Integrated Hyperspectral Imaging and 3D Measurement System. Sensors 2018, 18, 1068. https://doi.org/10.3390/s18041068
