Context Mining of Sedentary Behaviour for Promoting Self-Awareness Using a Smartphone †

Abstract

1. Introduction

2. Related Work

2.1. Wearable Devices

2.2. Smartphone Applications

3. Methodology

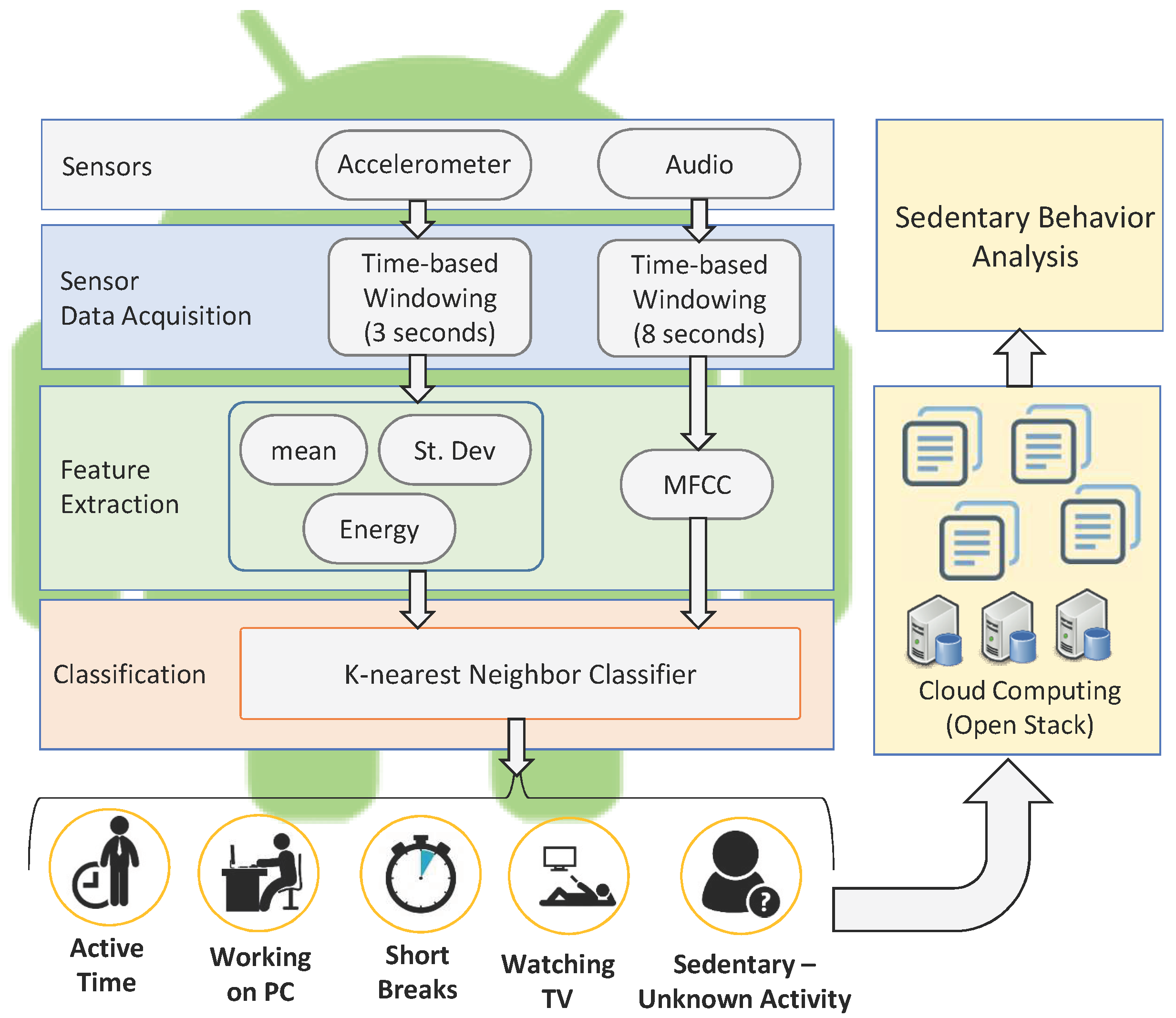

3.1. The Smartphone Environment

3.1.1. Sensor Data Acquisition

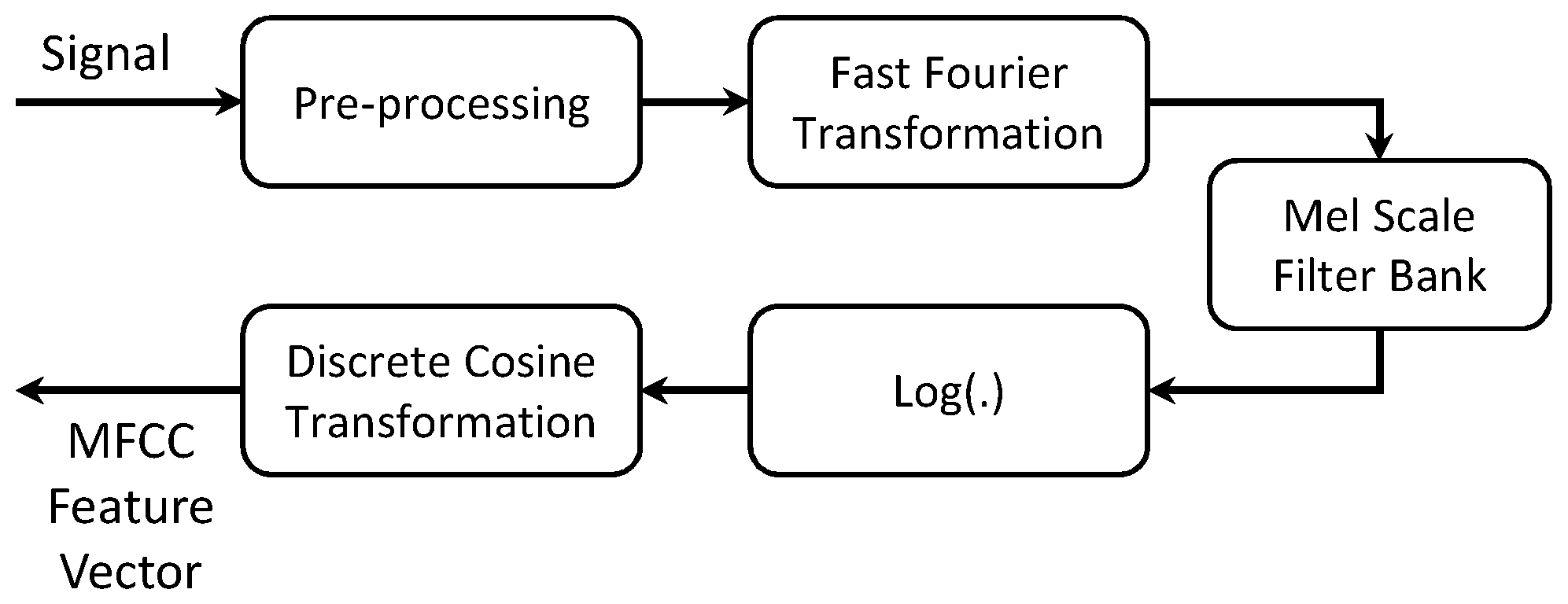

3.1.2. Feature Extraction

3.2. Classifier

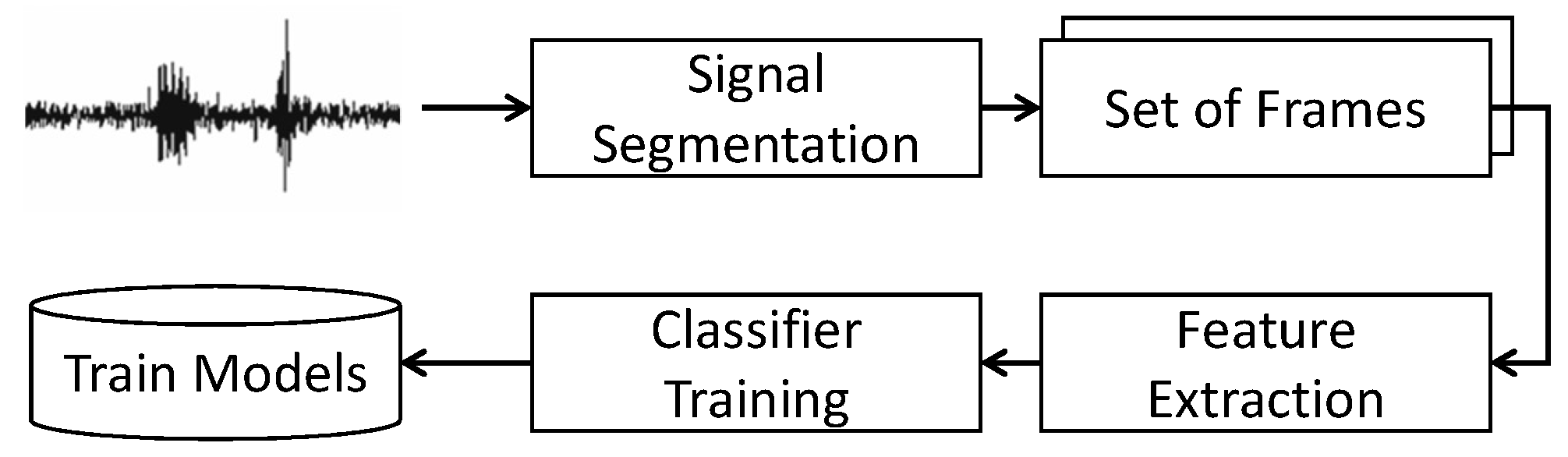

3.2.1. Training Phase

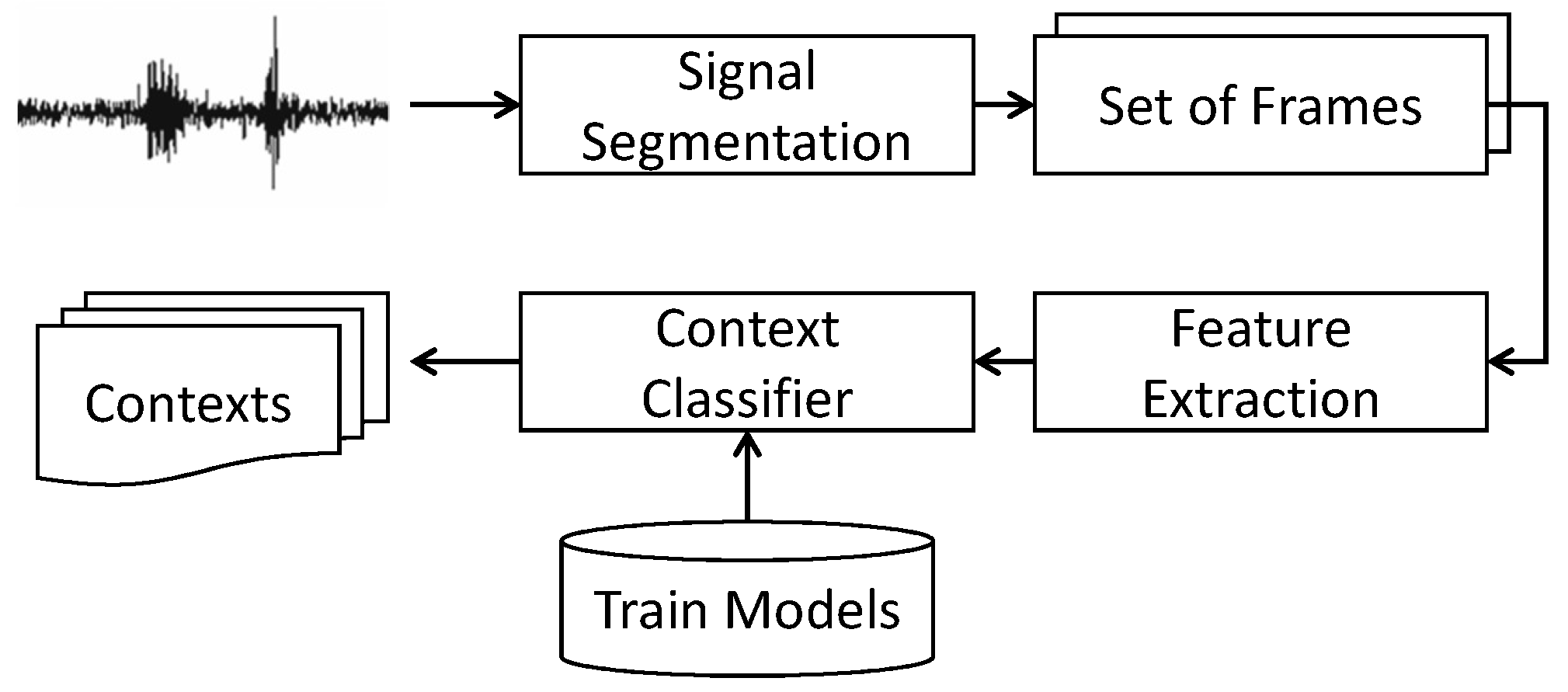

3.2.2. Recognition Phase

3.3. Cloud Computing

3.4. Sedentary Behaviour Analysis

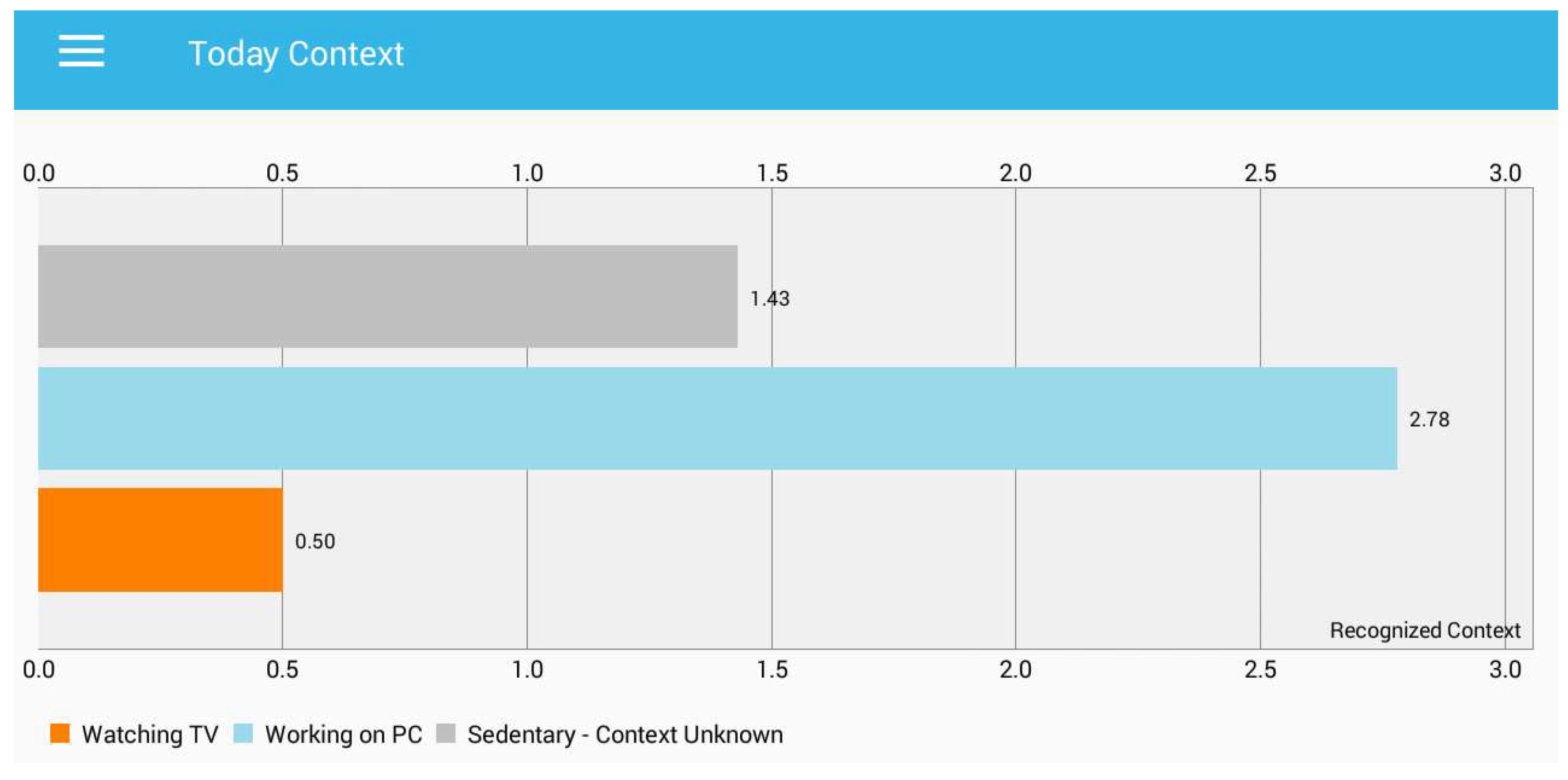

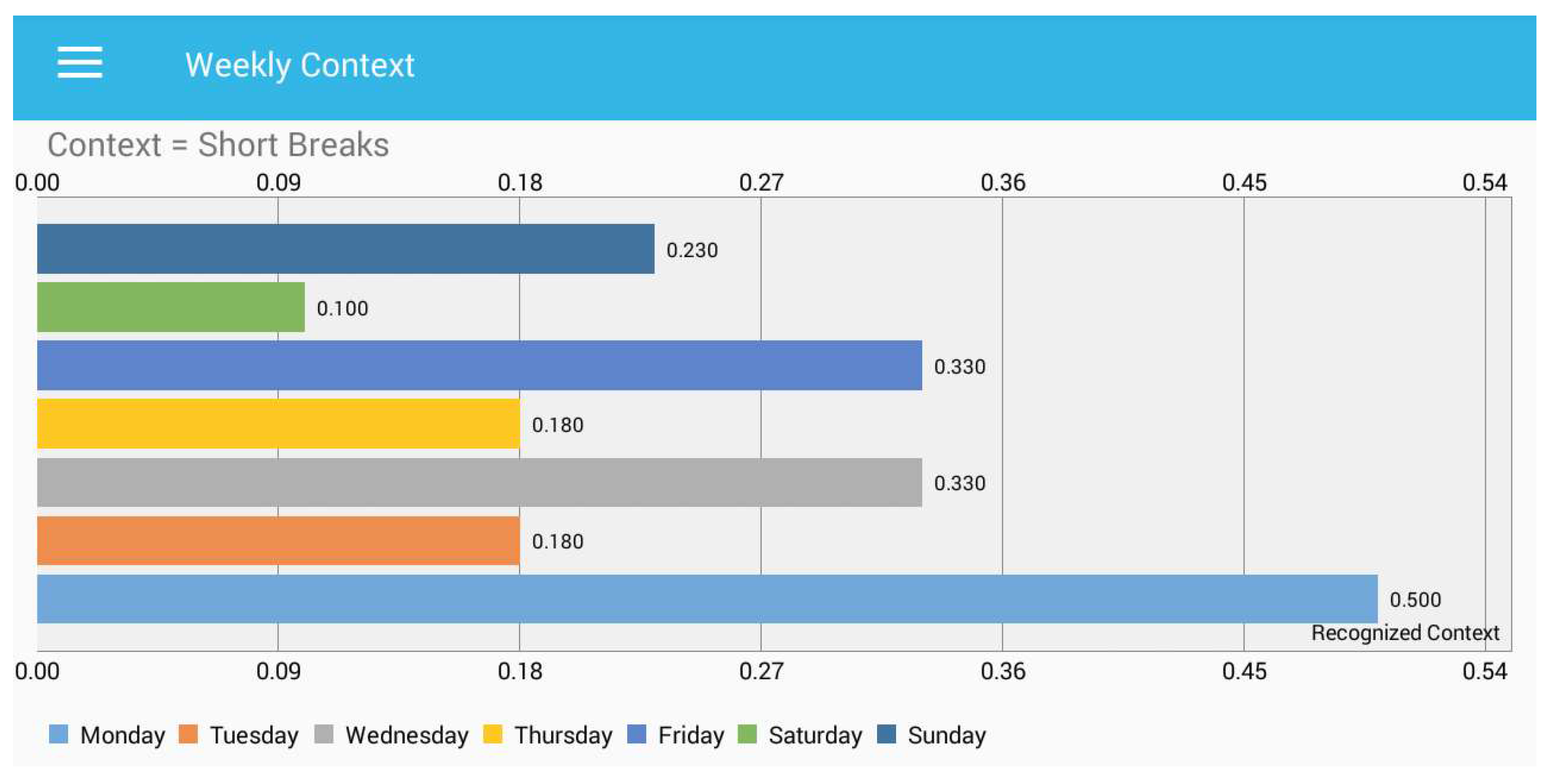

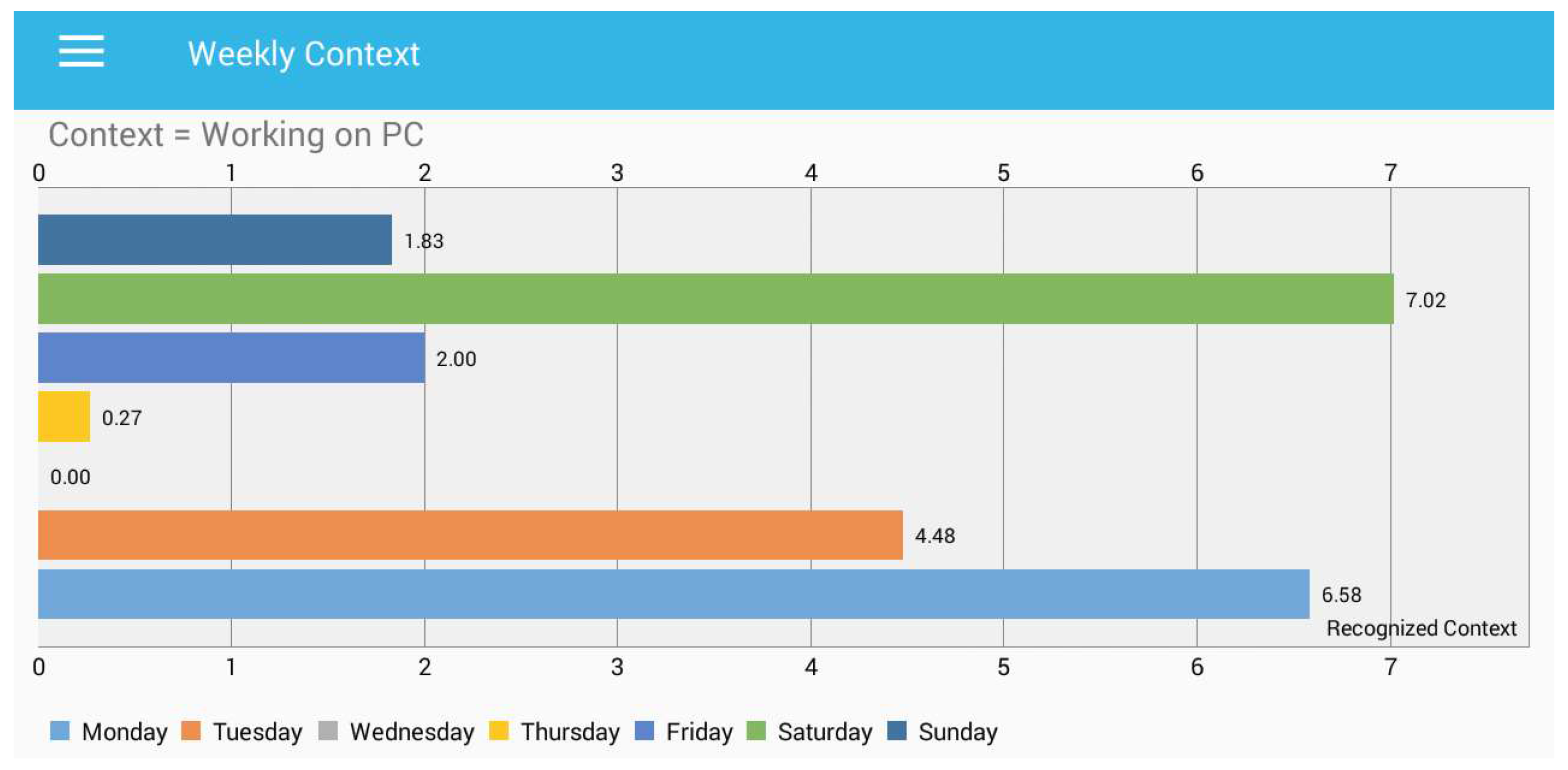

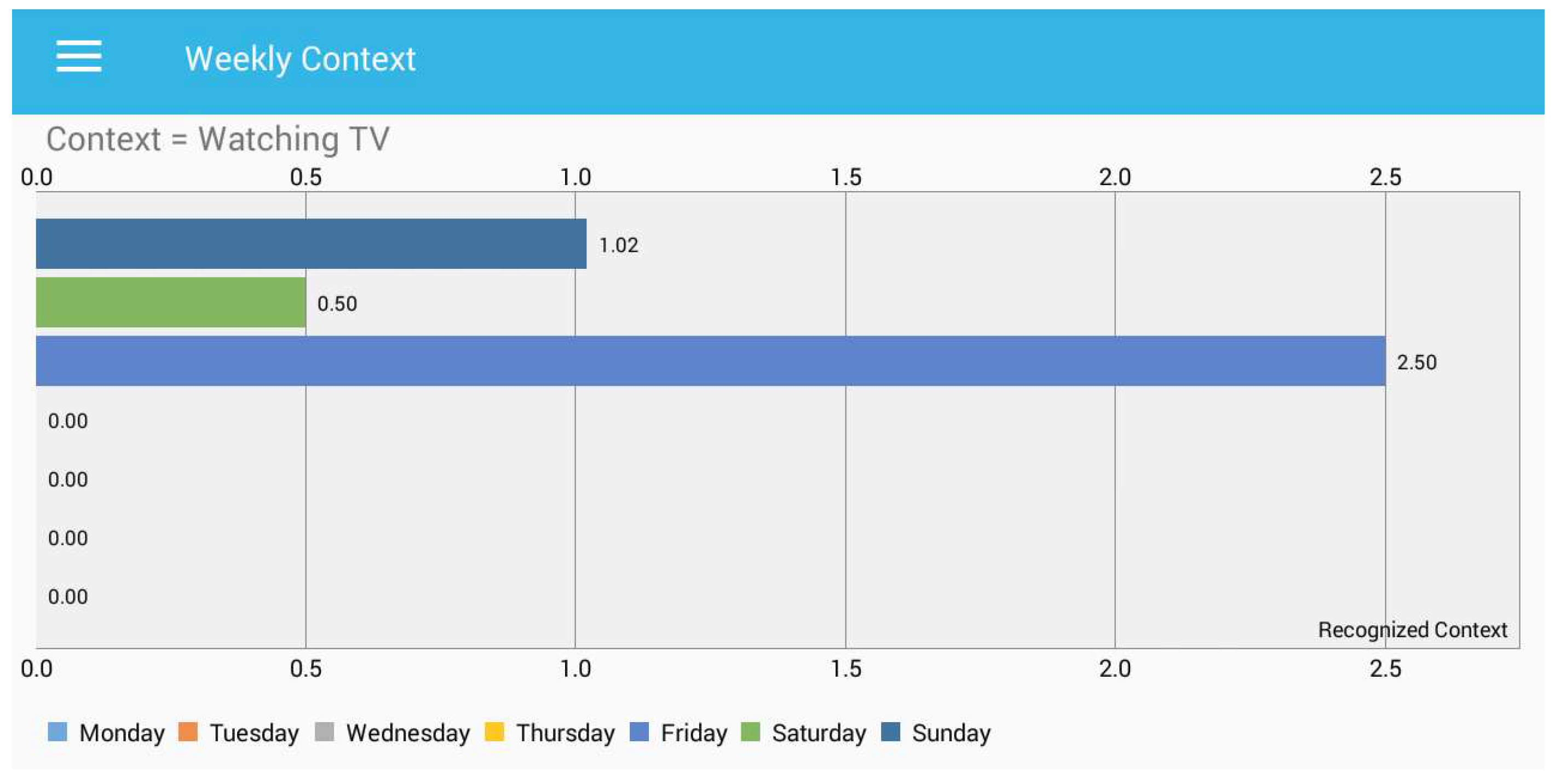

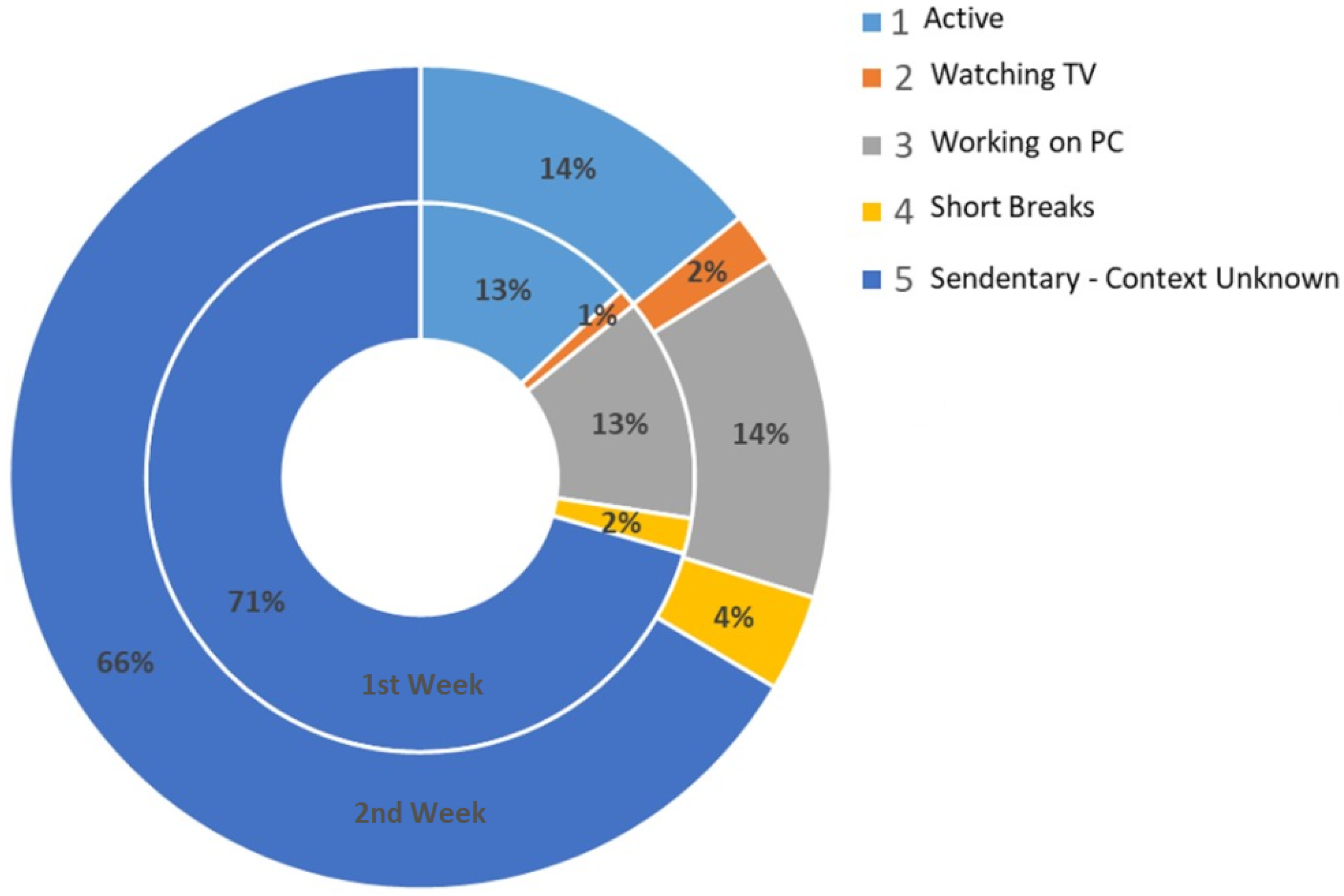

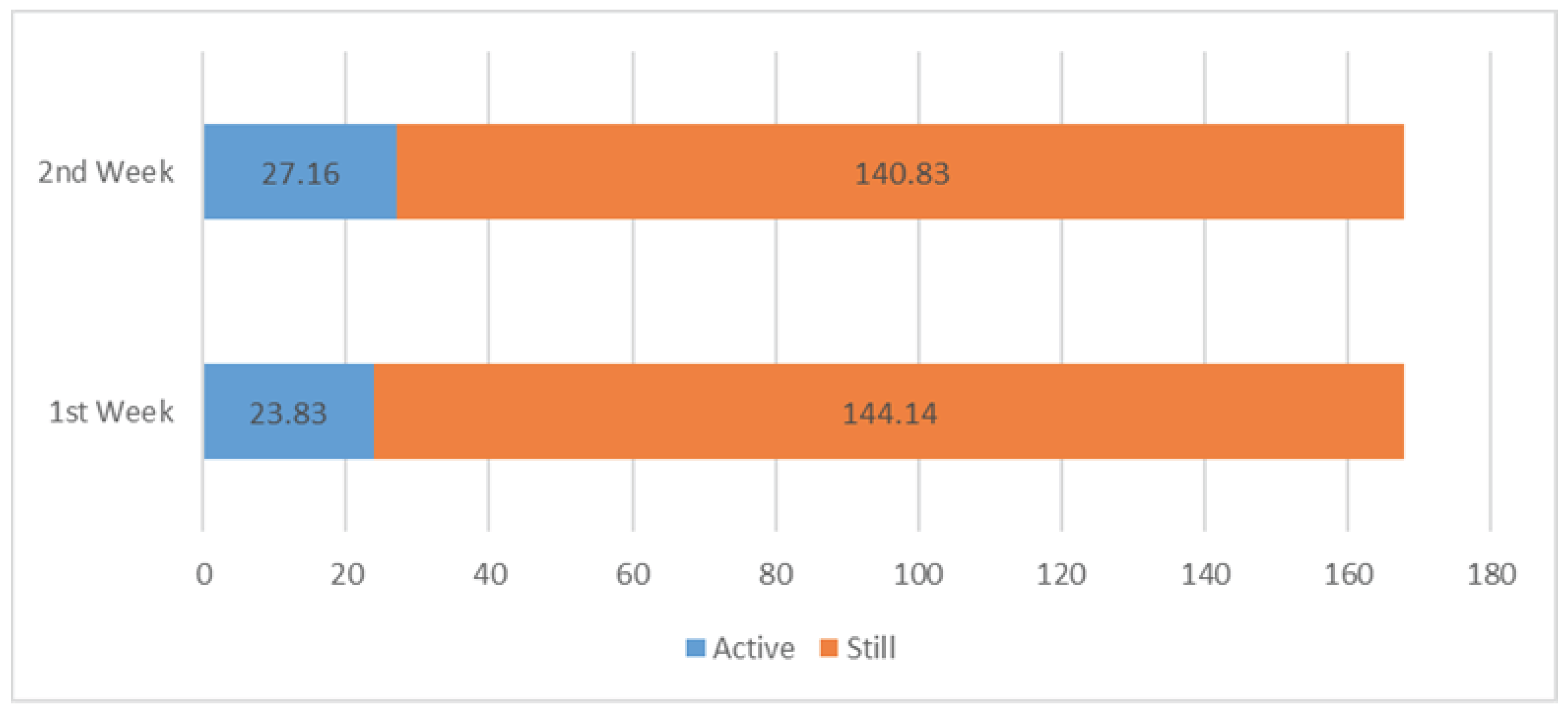

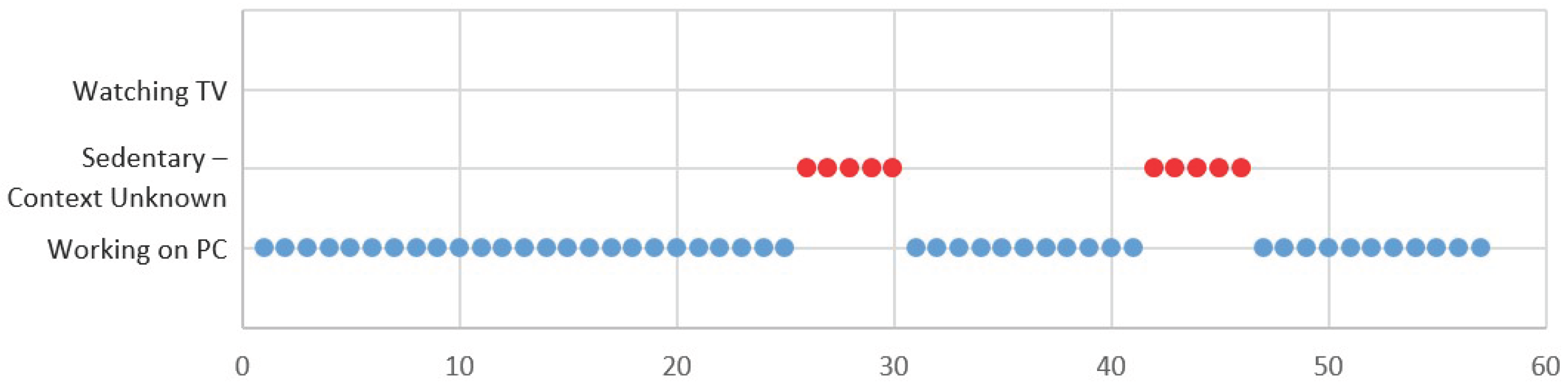

4. Results

5. Discussion

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fahim, M.; Baker, T.; Khattak, A.M.; Shah, B.; Aleem, S.; Chow, F. Context Mining of Sedentary Behaviour for Promoting Self-Awareness Using a Smartphone. Sensors 2018, 18, 874. https://doi.org/10.3390/s18030874
