Evaluation of a Sensor System for Detecting Humans Trapped under Rubble: A Pilot Study

Abstract

1. Introduction

- Gas sensors (O2 and CO2) for the detection of human breath and for monitoring air quality.

- Microphones for the detection of voices, other human-produced sounds, and environmental noise.

- A thermal vision camera for a direct view of the environment and of localized temperature patterns.

2. Materials and Methods

2.1. Gas Sensors (CO2 and O2 Sensors)

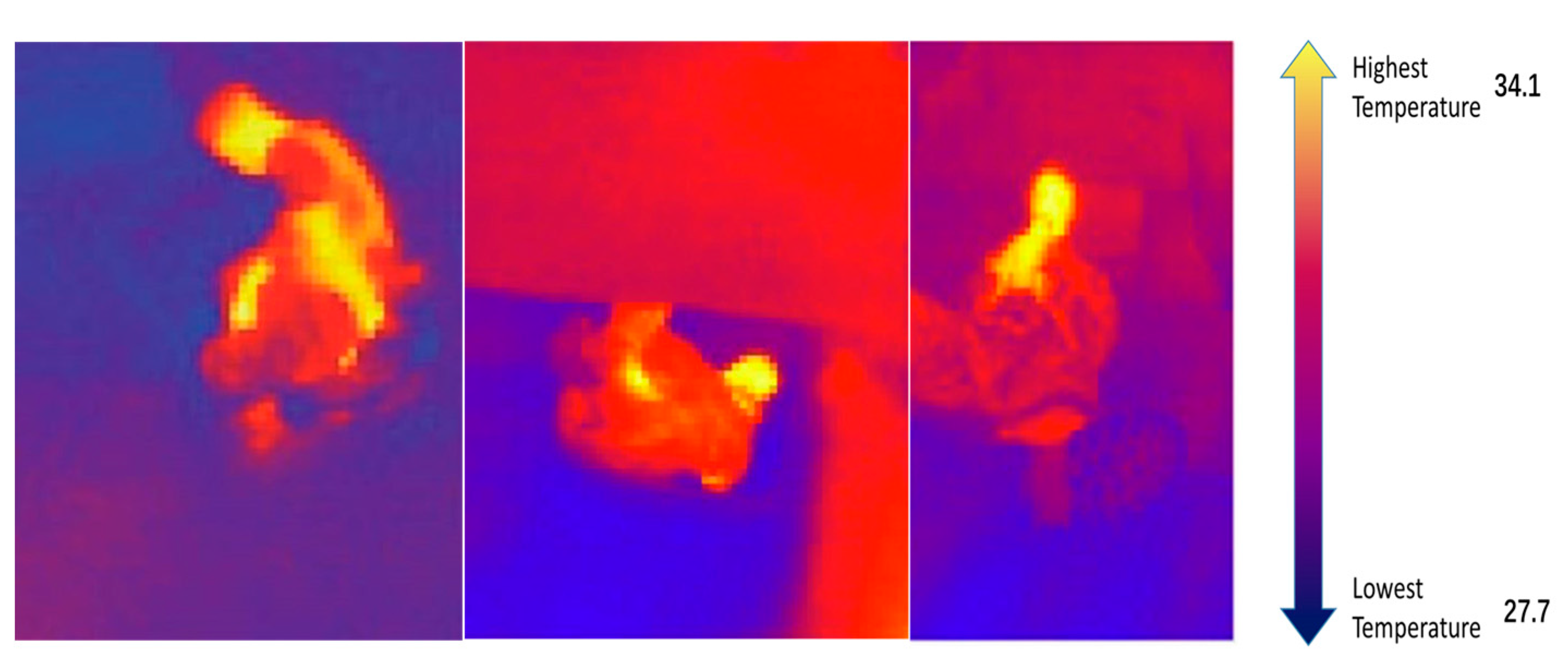

2.2. Thermal Vision Camera

2.3. Microphone

2.3.1. Hardware and Audio Signal Processing

2.3.2. Human Voice Detection Algorithm

- Voice recording phase: the system records audio in 5-s segments.

- The recorded data are band-pass filtered (50–3000 Hz).

- The data are then filtered with a Wiener filter, which minimizes the mean square error (MSE) between the estimated random process and the desired process. This filter is commonly used to remove noise from recorded speech.

- Short sounds and background noise are removed. First, an adaptive threshold is used to remove background noise, which requires a reference level for the environmental noise. Because the noise in a disaster area is loud and highly variable, an adaptive background noise reference is defined as:

  $$N_{\mathrm{ref}} = \alpha V_{\mathrm{cur}} + (1 - \alpha) V_{\mathrm{prev}}$$

  where $\alpha$ is the smoothing factor, $V_{\mathrm{cur}}$ is the average volume [dB] of the current 5-s recording, and $V_{\mathrm{prev}}$ is the volume of the previous 5-s recording. Empirically, $\alpha = 30\%$ yielded the best performance. If the volume of a sound sample is lower than $1.3\,N_{\mathrm{ref}}$, the algorithm identifies it as environmental noise and discards it; sounds at or above $1.3\,N_{\mathrm{ref}}$ are treated as suspect sounds. The algorithm then checks the duration of each suspect sound: because a human voice is assumed to last more than 300 ms, suspect sounds shorter than 300 ms are removed. The remaining suspect sounds are then processed by the SVM to identify possible human voices.

- Segmentation. After short sounds and background noise have been removed, the 5-s audio signal is split into 10-ms frames.

- Audio statistical features, as described in Section 2.3.1, are computed for each 10-ms frame.

- SVM classification. Each sound is classified as either human voice or noise.
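The first two filtering steps above (50–3000 Hz band-pass, then Wiener filtering) can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the 16 kHz sampling rate is assumed, the band-pass is a brick-wall FFT mask rather than the filter actually used, and the Wiener filter is a single-frame spectral approximation whose noise power comes from a hypothetical noise-only reference clip. Per frequency bin the Wiener gain is S/(S+N); estimating the clean power S as P_x − P_n gives the gain max(1 − P_n/P_x, 0).

```python
import numpy as np

FS = 16_000  # assumed sampling rate in Hz (not stated in the text)


def fft_bandpass(x, fs=FS, low=50.0, high=3000.0):
    """Brick-wall band-pass: zero every FFT bin outside [low, high] Hz."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spectrum[(freqs < low) | (freqs > high)] = 0.0
    return np.fft.irfft(spectrum, n=len(x))


def spectral_wiener(x, noise_ref, smooth_bins=31):
    """Single-frame frequency-domain Wiener filter.

    The clean power is estimated as P_x - P_n, so the Wiener gain
    S / (S + N) becomes max(1 - P_n / P_x, 0) in each frequency bin.
    `noise_ref` is a hypothetical noise-only clip; its periodogram,
    smoothed across neighbouring bins, is the noise power estimate P_n.
    """
    X = np.fft.rfft(x)
    p_x = np.abs(X) ** 2
    p_n = np.abs(np.fft.rfft(noise_ref, n=len(x))) ** 2
    p_n = np.convolve(p_n, np.ones(smooth_bins) / smooth_bins, mode="same")
    gain = np.maximum(1.0 - p_n / np.maximum(p_x, 1e-12), 0.0)
    return np.fft.irfft(gain * X, n=len(x))


# Usage: a one-second 440 Hz tone buried in white noise.
rng = np.random.default_rng(0)
t = np.arange(FS) / FS
clean = np.sin(2 * np.pi * 440 * t)
noisy = clean + 0.5 * rng.standard_normal(FS)
noise_only = 0.5 * rng.standard_normal(FS)
enhanced = spectral_wiener(fft_bandpass(noisy), fft_bandpass(noise_only))
```

Because every gain lies in [0, 1], the filter can only attenuate a bin. A field system would more likely apply the gain frame by frame with overlap-add and a running noise estimate rather than to one whole-clip transform.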
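The background-noise and short-sound removal step described above can be sketched in plain Python. Several details are assumptions: the noise reference is taken to be the exponential-smoothing form N_ref = α·V_cur + (1 − α)·V_prev with α = 0.3; volumes are supplied per 10-ms frame; and volumes are positive dB levels, since multiplying a negative dB value (e.g. dBFS) by 1.3 would lower rather than raise the threshold. The function names are hypothetical.

```python
import math


def noise_reference(v_cur, v_prev, alpha=0.3):
    """Adaptive noise reference in dB (assumed smoothing form):
    N_ref = alpha * V_cur + (1 - alpha) * V_prev."""
    return alpha * v_cur + (1.0 - alpha) * v_prev


def suspect_segments(frame_db, n_ref, frame_ms=10, ratio=1.3, min_ms=300):
    """Return (start, end) frame-index pairs, end exclusive, for runs of
    frames whose volume is at least ratio * n_ref and last at least min_ms."""
    threshold = ratio * n_ref
    runs, start = [], None
    for i, level in enumerate(frame_db):
        if level >= threshold and start is None:
            start = i                        # a suspect sound begins
        elif level < threshold and start is not None:
            runs.append((start, i))          # the suspect sound ends
            start = None
    if start is not None:                    # sound still active at clip end
        runs.append((start, len(frame_db)))
    min_frames = math.ceil(min_ms / frame_ms)
    # Drop suspect sounds shorter than min_ms (assumed too short for a voice).
    return [(s, e) for s, e in runs if e - s >= min_frames]


n_ref = noise_reference(v_cur=50.0, v_prev=40.0)  # 0.3*50 + 0.7*40, about 43 dB
frames = [40.0] * 20 + [70.0] * 35 + [40.0] * 20 + [70.0] * 10 + [40.0] * 5
print(suspect_segments(frames, n_ref))  # [(20, 55)]: the 100-ms burst is dropped
```

The 350-ms burst survives both gates, while the 100-ms burst exceeds the volume threshold but is discarded by the duration check.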
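The segmentation and feature-extraction steps above can be sketched as follows. The actual feature set is the one described in Section 2.3.1; the three descriptors used here (RMS energy, zero-crossing rate and spectral centroid) are common stand-ins chosen for illustration, and the non-overlapping framing and 16 kHz rate are assumptions.

```python
import numpy as np


def frame_signal(x, fs, frame_ms=10):
    """Split a 1-D signal into non-overlapping frames of frame_ms milliseconds;
    trailing samples that do not fill a whole frame are dropped."""
    n = int(round(fs * frame_ms / 1000))
    n_frames = len(x) // n
    return x[: n_frames * n].reshape(n_frames, n)


def frame_features(frames, fs):
    """Per-frame RMS energy, zero-crossing rate and spectral centroid (Hz)."""
    rms = np.sqrt(np.mean(frames ** 2, axis=1))
    signs = np.signbit(frames)
    zcr = np.mean(signs[:, 1:] != signs[:, :-1], axis=1)
    mag = np.abs(np.fft.rfft(frames, axis=1))
    freqs = np.fft.rfftfreq(frames.shape[1], d=1.0 / fs)
    centroid = (mag @ freqs) / np.maximum(mag.sum(axis=1), 1e-12)
    return np.column_stack([rms, zcr, centroid])


fs = 16_000  # assumed sampling rate
t = np.arange(fs) / fs
features = frame_features(frame_signal(np.sin(2 * np.pi * 1000 * t), fs), fs)
print(features.shape)  # (100, 3): one row per 10-ms frame
```

Each row is one feature vector for the classifier; at 10 ms per frame a 5-s recording yields 500 such rows.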
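The final SVM classification step above can be illustrated with a linear SVM. The kernel, hyperparameters and training data used by the authors are not given here, so this sketch swaps in a linear SVM trained with the Pegasos stochastic sub-gradient method, with hypothetical labels +1 for human voice and −1 for noise, and synthetic two-dimensional features.

```python
import numpy as np


def train_linear_svm(X, y, lam=0.01, epochs=50, seed=0):
    """Pegasos: stochastic sub-gradient descent on the L2-regularized hinge loss.

    X: (n_samples, n_features); y: labels in {-1, +1}.
    A constant column of ones is appended so a bias term is learned
    (it is regularized together with the weights, a simplification).
    """
    rng = np.random.default_rng(seed)
    Xb = np.hstack([X, np.ones((len(X), 1))])
    w = np.zeros(Xb.shape[1])
    t = 0
    for _ in range(epochs):
        for i in rng.permutation(len(Xb)):
            t += 1
            eta = 1.0 / (lam * t)                  # Pegasos step size
            violates = y[i] * (Xb[i] @ w) < 1.0    # is the hinge loss active?
            w *= 1.0 - eta * lam                   # shrink (regularization)
            if violates:
                w += eta * y[i] * Xb[i]            # hinge sub-gradient step
    return w


def predict(w, X):
    """Return +1 (human voice) or -1 (noise) for each row of X."""
    Xb = np.hstack([X, np.ones((len(X), 1))])
    return np.where(Xb @ w >= 0.0, 1, -1)


# Usage on synthetic, well-separated feature clusters.
rng = np.random.default_rng(1)
X_voice = rng.normal(loc=3.0, scale=0.5, size=(100, 2))
X_noise = rng.normal(loc=-3.0, scale=0.5, size=(100, 2))
X = np.vstack([X_voice, X_noise])
y = np.concatenate([np.ones(100), -np.ones(100)])
w = train_linear_svm(X, y)
accuracy = np.mean(predict(w, X) == y)
```

A linear kernel keeps the sketch short; a library SVM with an RBF kernel is a common alternative when voice and noise features are not linearly separable.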

2.4. Experiments

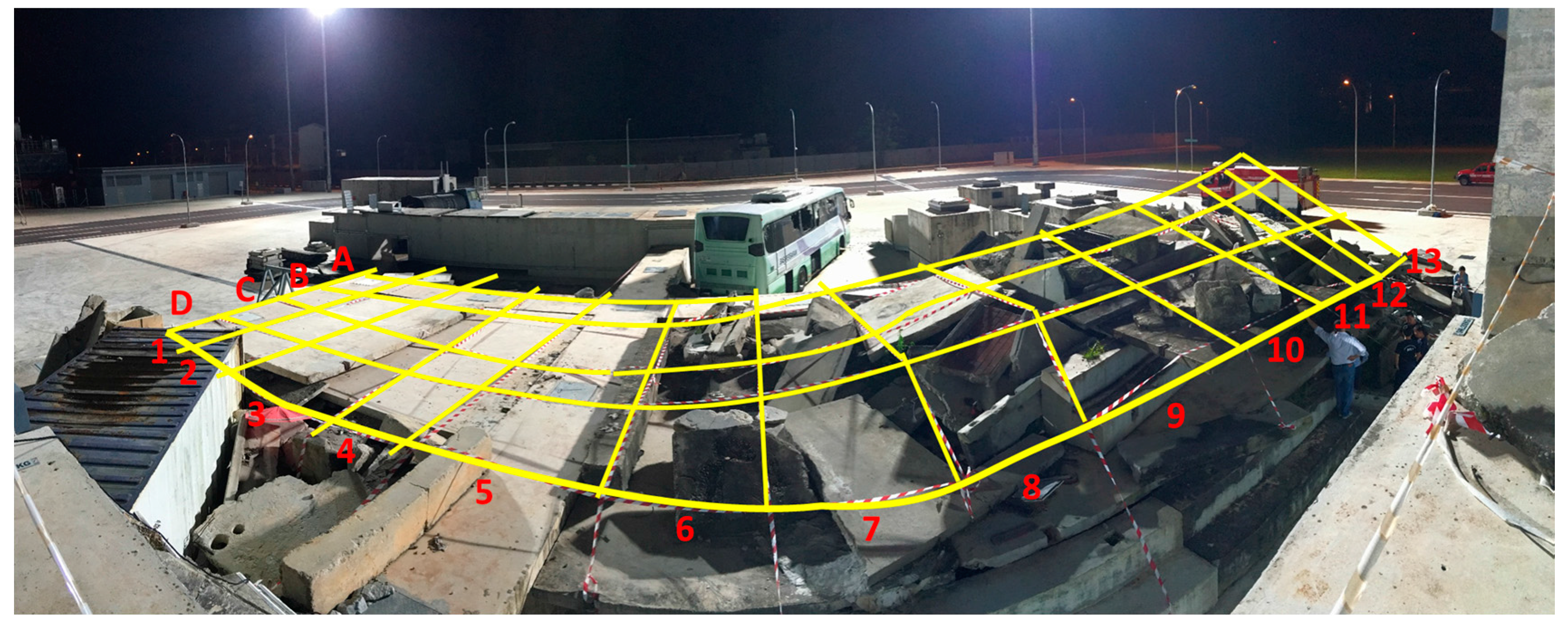

2.4.1. Experimental Environment

2.4.2. Experimental Protocol

3. Results and Discussion

3.1. Experimental Results

3.2. Evaluation of the Gas Sensor and Thermal Camera

3.3. Evaluation of Microphone and Audio Processing Algorithm

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Test | Execution Time | Result |
|---|---|---|
| Day 1 morning | 1 h 35 min | Success |
| Day 1 afternoon | 56 min | Success |
| Day 1 evening | 1 h 25 min | Success |
| Day 2 morning | 33 min | Success |
| Day 2 afternoon | 50 min | Success |
| Day 2 evening | 1 h 12 min | Failed |
| Day 3 morning | 2 h 13 min | Success |
| Day 3 afternoon | 20 min | Success |
| Day 3 evening | 31 min | Success |

| | Predicted Condition Positive | Predicted Condition Negative |
|---|---|---|
| Condition positive | 6 | 2 |
| Condition negative | 38 | 43 |
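Standard detection metrics follow directly from the counts in this confusion matrix. The sketch below reads the rows as the actual condition and the columns as the prediction (an interpretation of the table's labels); which detector and which definition of "positive" the matrix refers to is not stated in this excerpt.

```python
def confusion_metrics(tp, fn, fp, tn):
    """Standard binary metrics from a 2 x 2 confusion matrix."""
    return {
        "sensitivity": tp / (tp + fn),               # true-positive rate (recall)
        "specificity": tn / (tn + fp),               # true-negative rate
        "precision": tp / (tp + fp),                 # positive predictive value
        "accuracy": (tp + tn) / (tp + fn + fp + tn),
    }


# Counts from the table: rows = actual condition, columns = prediction.
metrics = confusion_metrics(tp=6, fn=2, fp=38, tn=43)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
# sensitivity: 0.750
# specificity: 0.531
# precision: 0.136
# accuracy: 0.551
```

Under this reading, 6 of 8 actual positives are detected, while 38 of 81 actual negatives are flagged as positive, which is why precision is low despite the high sensitivity.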

| Test | Human Voice | Suspect Noise | Noise |
|---|---|---|---|
| Test Day 1 afternoon | 87.5% | 89.36% | 100% |
| Test Day 2 afternoon | 89.4% | 91.21% | 100% |
| Test Day 3 afternoon | 90.6% | 98.18% | 100% |
| Average | 89.36% | 93.95% | 100% |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, D.; Sessa, S.; Kasai, R.; Cosentino, S.; Giacomo, C.; Mochida, Y.; Yamada, H.; Guarnieri, M.; Takanishi, A. Evaluation of a Sensor System for Detecting Humans Trapped under Rubble: A Pilot Study. Sensors 2018, 18, 852. https://doi.org/10.3390/s18030852
