A Novel Anti-Spoofing Solution for Iris Recognition Toward Cosmetic Contact Lens Attack Using Spectral ICA Analysis

Abstract

1. Introduction

2. Iridal Texture Characteristics and Anti-Spoofing Technique Overview

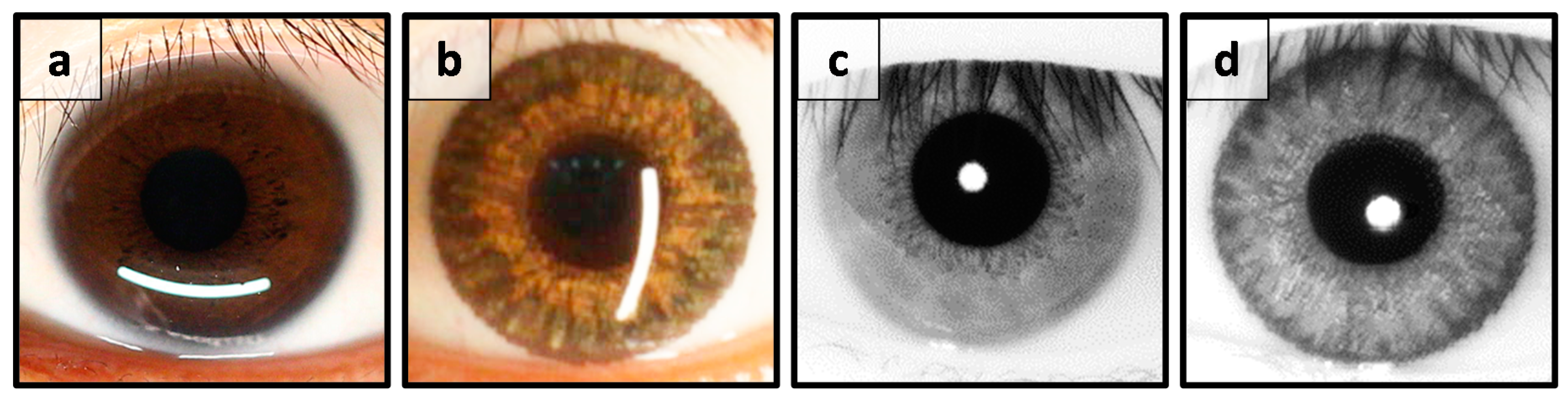

2.1. Iridal Texture Characteristics

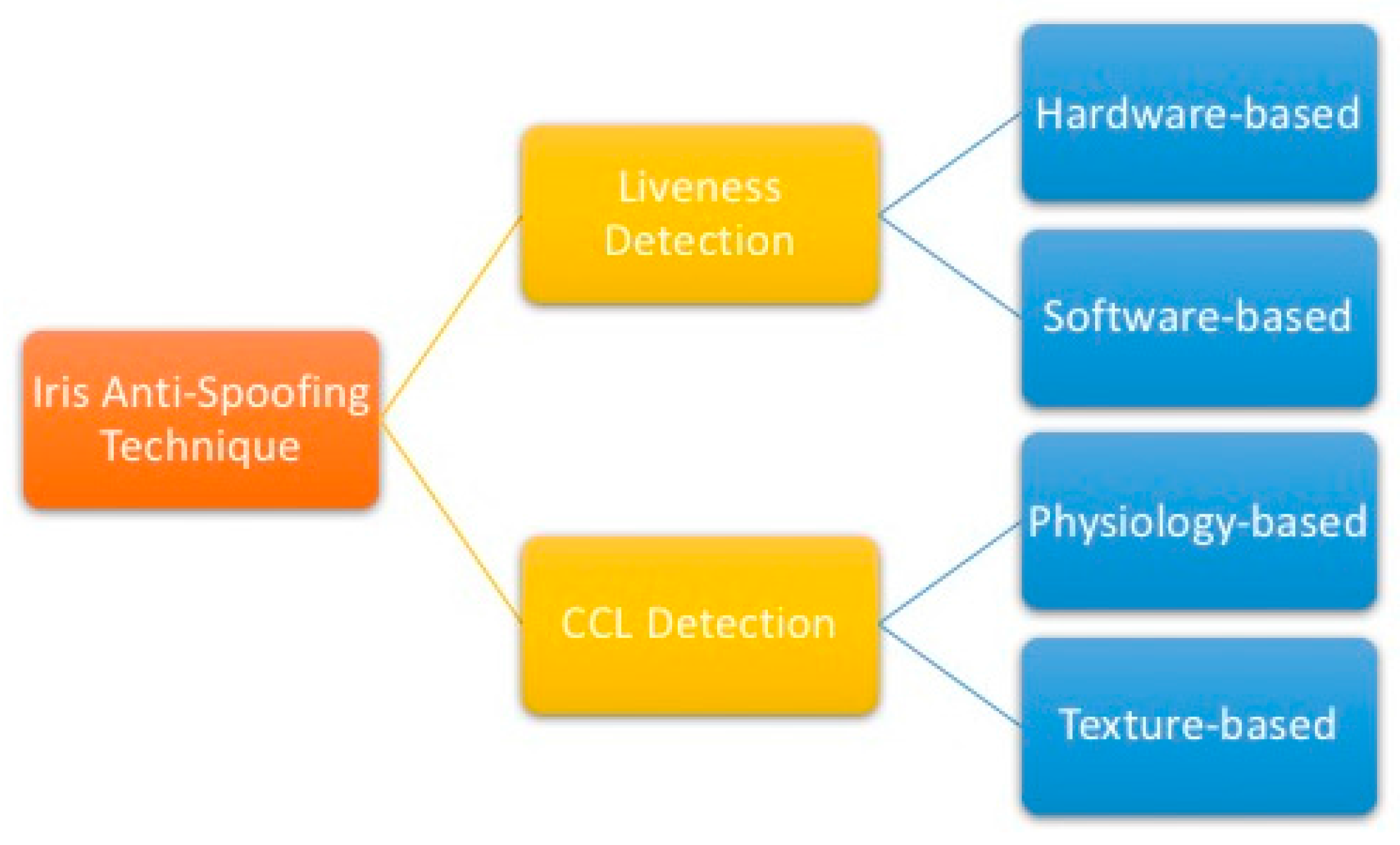

2.2. Iris Anti-Spoofing Technique Overview

2.2.1. Liveness Detection

Hardware-Based Systems

Software-Based Systems

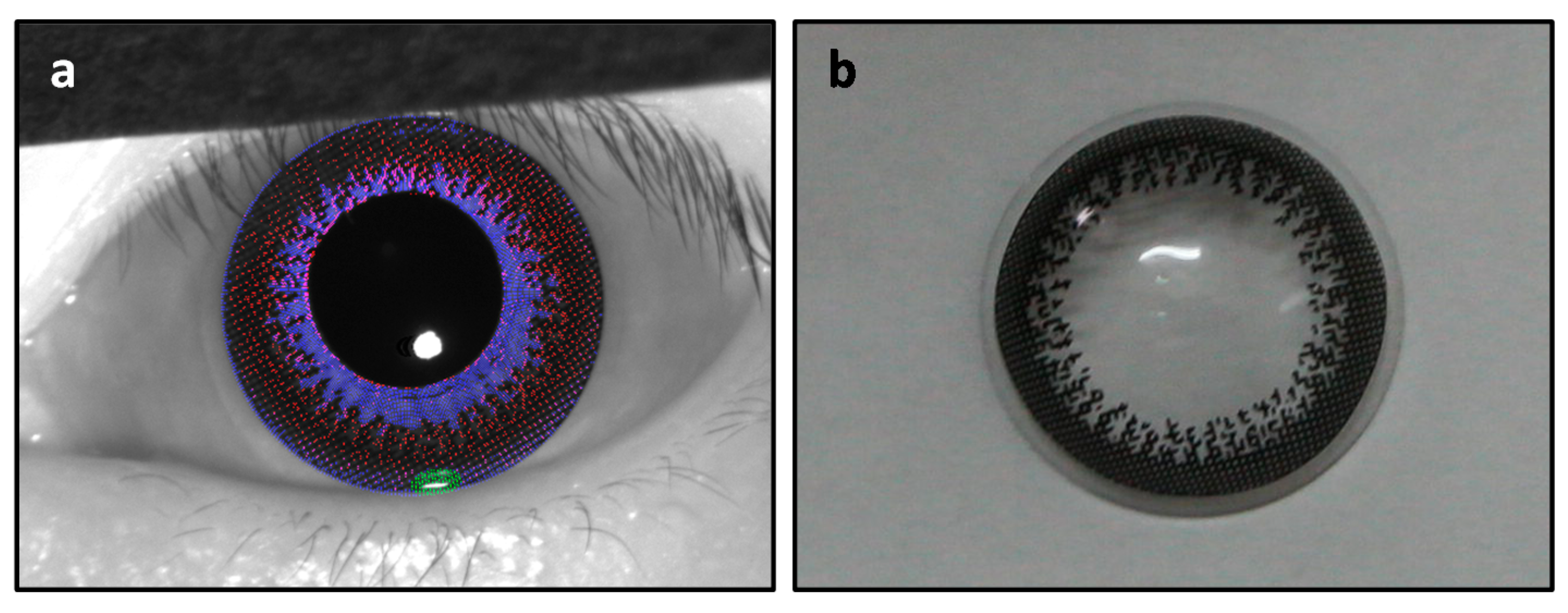

2.2.2. Cosmetic Contact Lenses (CCL) Detection

Physiology-Based Detection

Texture-Based Classifier

2.3. Summary of Iris Anti-Spoofing Technologies

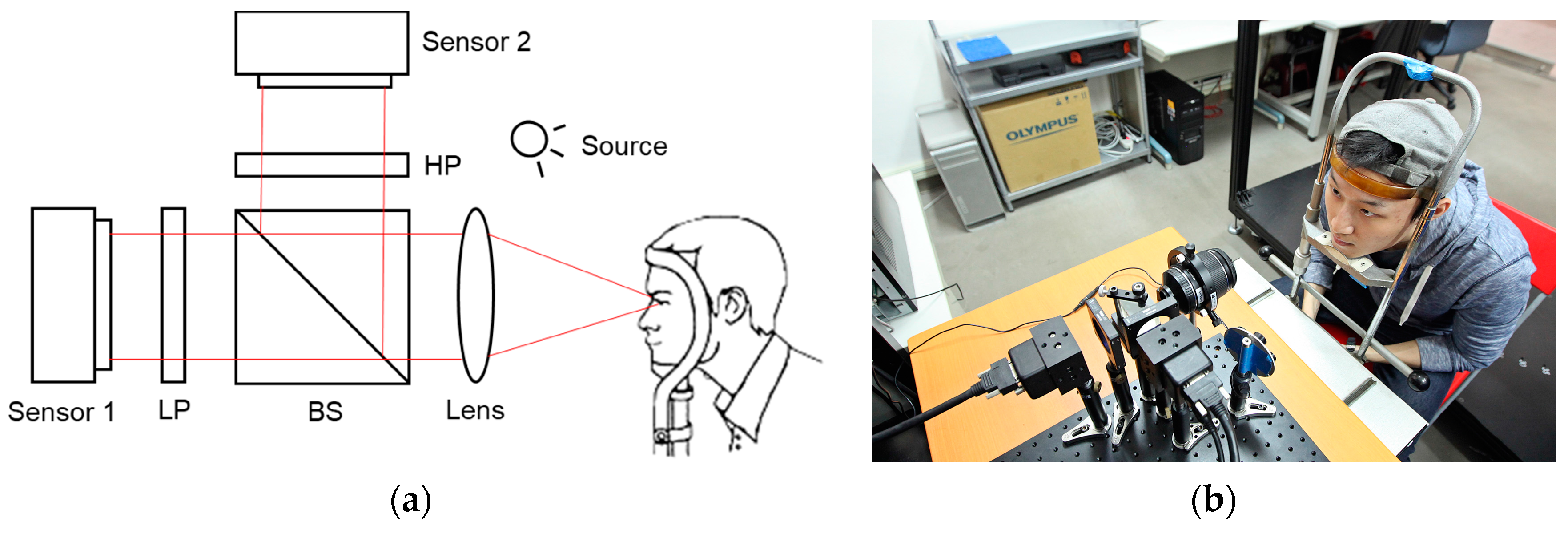

3. Framework of Dual Band Camera System

3.1. DBC Mixing Model

3.2. ICA Algorithm

3.3. Restrictions and Ambiguities in ICA

4. Design of Dual Band Camera System

4.1. Filter Design via Spectral Response

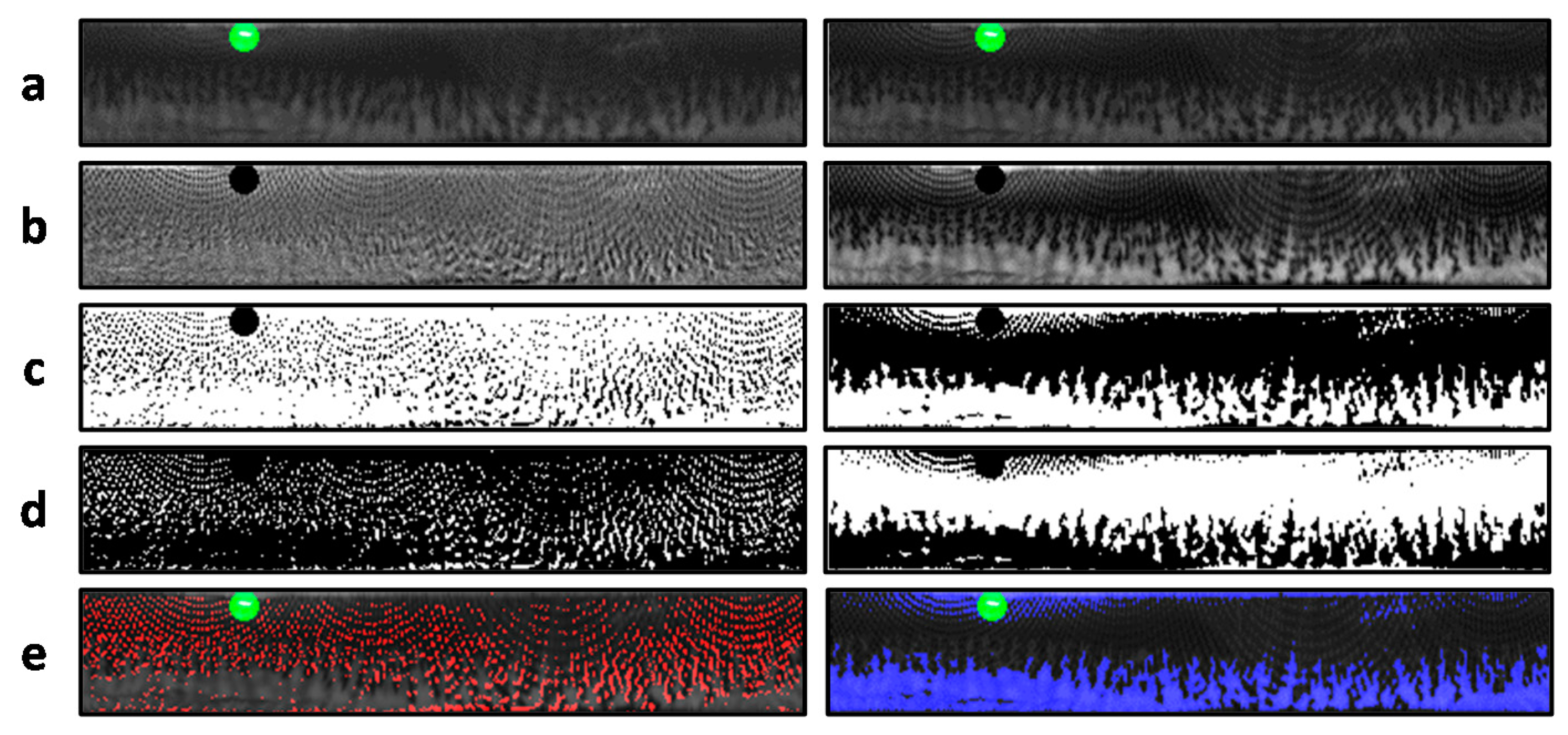

4.2. Mask Generation

4.3. Overcoming the Scaling Ambiguity

4.4. Overcoming the Order Ambiguity

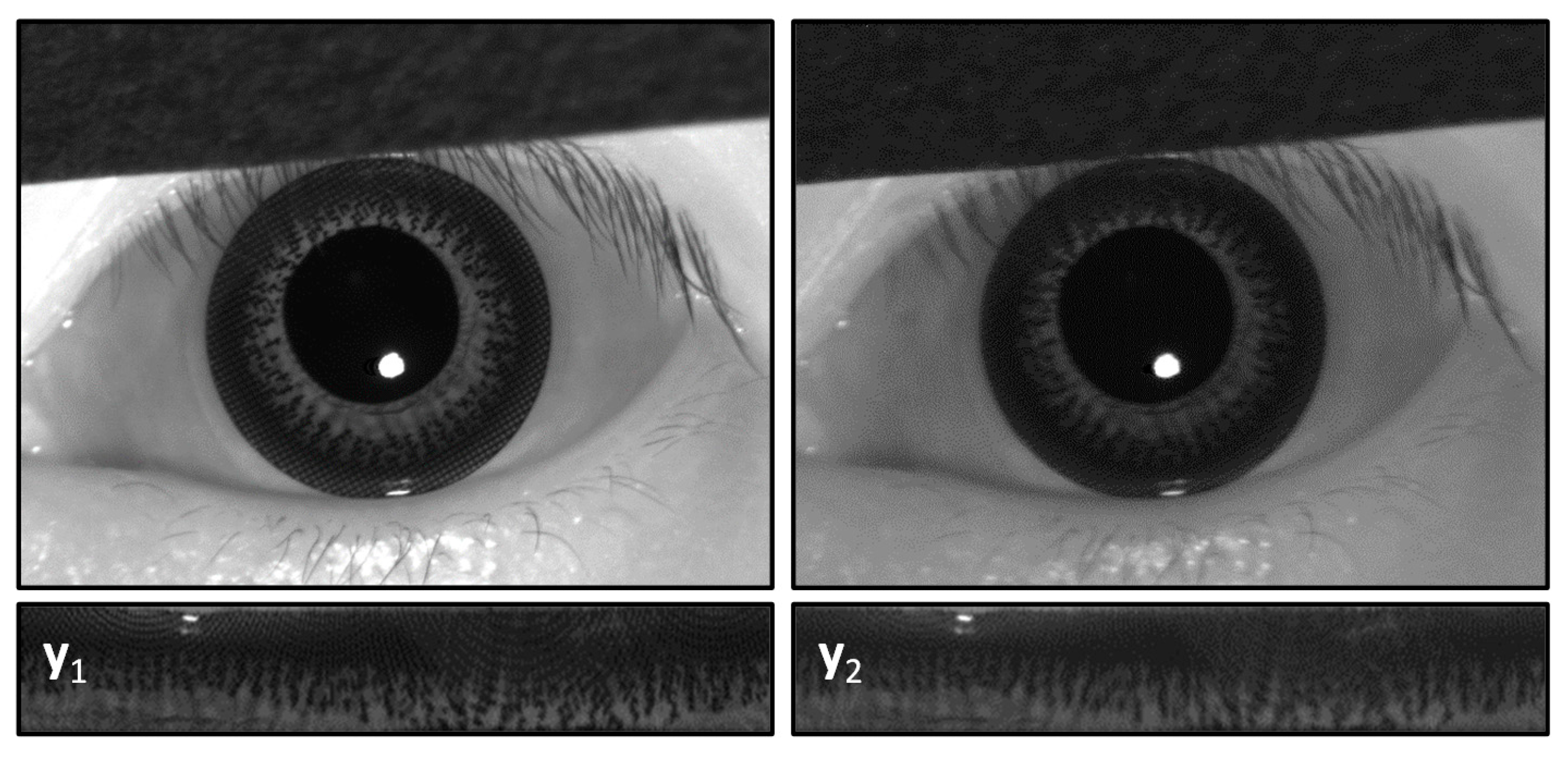

5. Proof-of-Concept Testing

5.1. Setup and Database

5.2. Iris Recognition Algorithm

5.3. Baseline Testing

5.4. Results of ICA-Based Recognition against CCL Spoofing

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Daugman, J.G. How iris recognition works. IEEE Trans. Circuits Syst. Video Technol. 2004, 14, 21–30.

- Bowyer, K.W.; Hollingsworth, K.; Flynn, P.J. Image Understanding for Iris Biometrics: A Survey. Comput. Vis. Image Underst. 2008, 110, 281–307.

- Nguyen, K.; Fookes, C.; Jillela, R.; Sridharan, S.; Ross, A. Long range iris recognition: A survey. Pattern Recognit. 2017, 72, 123–143.

- Al-Raisi, A.N.; Al-Khouri, A.M. Iris recognition and the challenge of homeland and border control security in UAE. Telemat. Inform. 2008, 25, 117–132.

- Romero, J. India’s big bet on identity. IEEE Spectr. 2012, 3, 48–56.

- Forrester, J.V.; Dick, A.D.; McMenamin, P.G.; Lee, W. The Eye: Basic Sciences in Practice, 2nd ed.; Saunders: London, UK, 2001.

- Prota, G.; Hu, D.-N.; Vincensi, M.R.; McCormick, S.A.; Napolitano, A. Characterization of melanins in human irides and cultured uveal melanocytes from eyes of different colors. Exp. Eye Res. 1998, 67, 293–299.

- Wakamatsu, K.; Hu, D.-N.; McCormick, S.A.; Ito, S. Characterization of melanin in human iridal and choroidal melanocytes from eyes with various colored irides. Pigment Cell Melanoma Res. 2008, 21, 97–105.

- Boyce, C.; Ross, A.; Monaco, M.; Hornak, L.; Li, X. Multispectral iris analysis: A preliminary study. In Proceedings of the Conference on Computer Vision and Pattern Recognition Workshop, CVPRW’06, New York, NY, USA, 17–22 June 2006.

- Sequeira, A.F.; Thavalengal, S.; Ferryman, J.; Corcoran, P.; Cardoso, J.S. A realistic evaluation of iris presentation attack detection. In Proceedings of the 39th International Conference on Telecommunications and Signal Processing (TSP), Vienna, Austria, 27–29 June 2016; pp. 660–664.

- Sousedik, C.; Busch, C. Presentation attack detection methods for fingerprint recognition systems: A survey. IET Biometr. 2014, 3, 219–233.

- Sequeira, A.F.; Murari, J.; Cardoso, J.S. Iris Liveness Detection Methods in the mobile biometrics scenario. In Proceedings of the 2014 International Joint Conference on Neural Networks (IJCNN), Beijing, China, 6–11 July 2014.

- Sequeira, A.F.; Oliveira, H.P.; Monteiro, J.C.; Monteiro, J.P.; Cardoso, J.S. MobILive 2014—Mobile Iris Liveness Detection Competition. In Proceedings of the IEEE International Joint Conference on Biometrics (IJCB), Clearwater, FL, USA, 29 September–2 October 2014.

- Raghavendra, R.; Busch, C. Robust scheme for iris presentation attack detection using multiscale binarized statistical image feature. IEEE Trans. Inf. Forensics Secur. 2015, 10, 703–715.

- Czajka, A. Pupil dynamics for iris liveness detection. IEEE Trans. Inf. Forensics Secur. 2015, 10, 726–735.

- Wildes, R.P. Iris recognition: An emerging biometric technology. Proc. IEEE 1997, 85, 1348–1363.

- Zhu, Y.; Tan, T.; Wang, Y. Biometric personal identification based on iris patterns. In Proceedings of the 15th International Conference on Pattern Recognition, Barcelona, Spain, 3–7 September 2000.

- Roberts, C. Biometric attack vectors and defences. Comput. Secur. 2007, 26, 14–25.

- Czajka, A. Development of liveness detection method for iris recognition. In Proceedings of the 18th International Conference on Methods and Models in Automation and Robotics (MMAR), Międzyzdroje, Poland, 26–29 August 2013.

- Clynes, M.; Kohn, M. Color dynamics of the pupil. Ann. N. Y. Acad. Sci. 1969, 156, 931–950.

- Daugman, J.G. Iris recognition and anti-spoofing countermeasures. In Proceedings of the International Biometrics Conference IBC, Cairns, Australia, 11–16 July 2004.

- Gragnaniello, D.; Poggi, G.; Sansone, C.; Verdoliva, L. An investigation of local descriptors for biometric spoofing detection. IEEE Trans. Inf. Forensics Secur. 2015, 10, 849–863.

- He, Z.; Sun, Z.; Tan, T.; Wei, Z. Efficient iris spoof detection via boosted local binary patterns. In International Conference on Biometrics; Springer: Berlin/Heidelberg, Germany, 2009; pp. 1080–1090.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Raja, K.B.; Raghavendra, R.; Busch, C. Presentation attack detection using Laplacian decomposed frequency response for visible spectrum and near-infra-red iris systems. In Proceedings of the IEEE 7th International Conference on Biometrics Theory, Applications and Systems (BTAS), Arlington, VA, USA, 8–11 September 2015; pp. 1–8.

- Sun, Z.; Zhang, H.; Tan, T.; Wang, J. Iris image classification based on hierarchical visual codebook. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1120–1133.

- Matsumoto, T. Artificial Fingers and Irises: Importance of Vulnerability Analysis. In Proceedings of the 7th Annual Biometrics Conference and Exhibition, London, UK, 13–15 October 2004.

- Pacut, A.; Czajka, A. Aliveness detection for iris biometrics. In Proceedings of the 40th Annual IEEE International Carnahan Conference on Security Technology, Lexington, KY, USA, 16–19 October 2006; pp. 122–129.

- Bowyer, K.W.; Doyle, J.S. Cosmetic contact lenses and iris recognition spoofing. Computer 2014, 47, 96–98.

- Lee, E.C.; Park, K.R.; Kim, J. Fake iris detection by using Purkinje image. In International Conference on Biometrics; Springer: Berlin/Heidelberg, Germany, 2006; pp. 397–403.

- Park, K.R. Robust fake iris detection. In Proceedings of the 4th International Conference on Articulated Motion and Deformable Objects, Port d’Andratx, Mallorca, Spain, 11–14 July 2006; pp. 10–18.

- Lee, S.J.; Park, K.R.; Kim, J. Robust fake iris detection based on variation of the reflectance ratio between the iris and the sclera. In Proceedings of the Biometric Symposium: Special Session on Research at the Biometric Consortium Conference, Baltimore, MD, USA, 19–21 September 2006; pp. 1–6.

- Hughes, K.; Bowyer, K.W. Detection of contact-lens-based iris biometric spoofs using stereo imaging. In Proceedings of the 2013 46th Hawaii International Conference on System Sciences, Wailea, Maui, HI, USA, 7–10 January 2013; pp. 1763–1772.

- Daugman, J.G. Demodulation by complex-valued wavelets for stochastic pattern recognition. Int. J. Wavelets Multiresolut. Inf. Process. 2003, 1, 1–17.

- He, X.; An, S.; Shi, P. Statistical texture analysis-based approach for fake iris detection using support vector machines. In International Conference on Biometrics; Springer: Berlin/Heidelberg, Germany, 2007; pp. 540–546.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, 3, 610–621.

- Wei, Z.; Qiu, X.; Sun, Z.; Tan, T. Counterfeit iris detection based on texture analysis. In Proceedings of the 19th International Conference on Pattern Recognition, Tampa, FL, USA, 8–11 December 2008; pp. 1–4.

- Ojala, T.; Pietikäinen, M.; Mäenpää, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

- Zhang, H.; Sun, Z.; Tan, T. Contact lens detection based on weighted LBP. In Proceedings of the 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 4279–4282.

- Doyle, J.S.; Flynn, P.J.; Bowyer, K.W. Automated classification of contact lens type in iris images. In International Conference on Biometrics; Springer: Berlin/Heidelberg, Germany, 2013; pp. 1–6.

- Raghavendra, R.; Raja, K.B.; Busch, C. ContlensNet: Robust Iris Contact Lens Detection Using Deep Convolutional Neural Networks. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, USA, 24–31 March 2017; pp. 1160–1167.

- Silva, P.; Luz, E.; Baeta, R.; Pedrini, H.; Falcao, A.X.; Menotti, D. An approach to iris contact lens detection based on deep image representations. In Proceedings of the 28th IEEE SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Salvador, Brazil, 26–29 August 2015; pp. 157–164.

- Doyle, J.S.; Bowyer, K.W. Robust detection of textured contact lenses in iris recognition using BSIF. IEEE Access 2015, 1672–1683.

- Baker, S.E.; Hentz, A.; Bowyer, K.W.; Flynn, P.J. Degradation of iris recognition performance due to non-cosmetic prescription contact lenses. Comput. Vis. Image Underst. 2010, 114, 1030–1044.

- Doyle, J.S.; Bowyer, K.W.; Flynn, P.J. Variation in accuracy of textured contact lens detection based on sensor and lens pattern. In Proceedings of the 2013 IEEE Sixth International Conference on Biometrics: Theory, Applications and Systems, Arlington, VA, USA, 29 September–2 October 2013; pp. 1–7.

- Yadav, D.; Kohli, N.; Doyle, J.S.; Singh, R.; Vatsa, M.; Bowyer, K.W. Unraveling the effect of textured contact lenses on iris recognition. IEEE Trans. Inf. Forensics Secur. 2014, 9, 851–862.

- Hyvärinen, A.; Karhunen, J.; Oja, E. Independent Component Analysis; John Wiley & Sons: New York, NY, USA, 2004.

- Phillips, P.J.; Scruggs, W.T.; O’Toole, A.J.; Flynn, P.J.; Bowyer, K.W.; Schott, C.L.; Sharpe, M. FRVT 2006 and ICE 2006 Large-Scale Experimental Results. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 831–846.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hsieh, S.-H.; Li, Y.-H.; Wang, W.; Tien, C.-H. A Novel Anti-Spoofing Solution for Iris Recognition Toward Cosmetic Contact Lens Attack Using Spectral ICA Analysis. Sensors 2018, 18, 795. https://doi.org/10.3390/s18030795