1. Introduction

Wildfire is a natural disaster, causing irreparable damage to local ecosystem. Sudden and uncontrollable wildfires can be a real threat to residents’ lives. Statistics from National Interagency Fire Center (NIFC) in the USA show that the burned area doubled from 1990 to 2015 in the USA. Recent wildfires in northern California (reported by CNN) have already resulted in more than 40 deaths and 50 missing. More than 200,000 local residents have been evacuated under emergency. The wildfires occur 220,000 times per year globally, the annual burned area is over 6 million hectares. Accurate and early detection of wildfire is therefore of great importance [

1].

Traditional wildfire detection is mostly based on human observation from watchtowers. Subject to the spatiotemporal limitation, it is inefficient. Unmanned aerial vehicles (UAVs or drones) have been increasingly used as surveillance tools [

2]. UAVs equipped with global positioning systems (GPS) and ultrahigh resolution digital camera can provide HD aerial images with precise location information. Our previous work demonstrated that well organized UAV swarm is rapid, efficient and low-cost in conducting complicated agricultural applications [

3]. Recent works also suggest that UAVs are quickly emerging implement in a variety of monitoring tasks [

4,

5,

6]. The authors of this paper (Jie Zhang, Shanghai Shengyao Intelligence Technology Co. Ltd., Shanghai, China) developed a specialized UAV system (

Figure 1) for forest and wildfire monitoring. The technique parameters of the forest monitoring UAV are presented in

Table 1 below.

The main issue of this UAV monitoring system is that the whole system is operated by observers in surveillance center. Limited by the wireless transmission distance or the blind zone of GPRS/GSM network, real-time video transmission from the UAV can hardly be guaranteed. The surveyors often wait for the return of the UAV and examine its stored video. It is time consuming, labor intensive, and inefficient. Thus, an autonomous fire localization and identification system for this UAV is right now the top demand.

Early reported works on fire detection are generally based on sensor techniques. For example, heat and smoke detectors are mostly employed. These methods work properly in indoor environments. However, when applied to large outdoor areas, environmental factors can significantly impact their performance. Later, sensor network-based methods are reported effective in prediction rather than detection of wildfire [

7], this is mainly because these methods depend on restricted data of relative temperature rise or wind speed in calculating the probability and intensity of fire. However, due to the limited measuring distance of each sensor, fine grained wildfire mapping over a large geographical area demands very dense deployment of sensors. It is difficult to implement in practice. Advanced sensor technology has been applied in fire detection: infrared sensors are used to capture the thermal radiation of fire, light detection and ranging system (LIDAR) [

8] is employed to detect smoke by examining the backscattered laser light. Yet, these optical systems are also found sensitive to the varying atmospheric and environmental conditions: clouds, light reflections, and dust particles may lead to false alarms.

Satellite imagery is a common method for wildfire detection [

9], but the long scan period and low flexibility make it difficult for early fire detection. Infrared (IR) thermographic cameras are used to generate thermal image of an area [

10]. It can obtain reliable heat distribution data for fire detection. Yet most aerial IR thermographic imaging systems work on the wave band from 0.75 to 100 μm. They detect much less environmental information on this band. This information can also be very important, especially when flammable and combustible materials are presented. Also, limited by Nyquist Theorem, the recorded thermal image has lower spatial resolution than visible spectrum cameras. Moreover, thermographic systems are quite expensive with high maintenance costs.

Admittedly, visible spectrum CCD/CMOS imaging sensors are less sensitive to heat flux. Still they are capable of recording high resolution image of fire, smoke along with the surroundings. They are much cheaper than IR cameras and other type of sophisticated sensor system. Environmental changes have less influence on their performance. Additionally, the visible video-image-based methods combine well with existing monitoring systems: most UAVs and watchtowers have the visible spectrum camera equipped. These are the main reasons that many works using visible spectrum video and image to provide solid fire detection results [

11,

12,

13].

Previous image processing-based fire detection research mostly relies on color and texture models. Chen proposed empirical models with experimental thresholds in detect flame pixels. Vipin proposed a fire pixel classification method using rule-based models in the RGB and YCbCr color space [

14]. Angayarkkani and Radhakrishnan developed fuzzy rules in the YCbCr color space for fire image segmentation and detections [

15]. Yuan developed a set of forest fire tracking algorithms including median filtering, color space conversion, and Otsu threshold [

16]. These works are based on image processing techniques with handcrafted feature extractors, the results highly depend on the accuracy of the manually selected parameters.

Statistical and machine learning methods have been reported. Gaussian mixture model (GMM) is used in flame detection [

17]. However, the use of empirical value of mixture number may not lead to the best results. SVM classifier is applied in [

18]. It is noted that when commonly used feature descriptors like scale-invariant feature transform (SIFT) histogram of oriented gradients (HOG) are employed with these classifiers, the false alarm rate is not low enough.

Indeed, previous machine vision-based fire detection research are divided into two categories: flame detection and smoke detection [

12]. Subject to the methods employed, these works are reliable on specific scene. Many are tested on short-range-shooting video or images with low infestation background. The volume of existing test benchmark is very small [

19]. Up to this point, the fundamental difficulty has not yet been well addressed: how to build an adaptive classifier to identify complex fire features of different color, form, and texture from a varying cluttered background. Therefore, the objective of this paper is to provide an optimal wildfire feature learning model.

Deep convolutional neural networks (DCNN) have been reported to achieve state-of-art performance on a wide range of image recognition tasks [

13], its architecture and learning scheme lead to an efficient extractor of complex, upper-level features which are highly robust to input transformations [

20]. However, from the survey on UAV-based wildfire applications, deep learning technique has rarely been used in wildfire detections [

21]. Until recently, deep learning has been reported in fire recognition in conference publications. Kim proposed an eight-layered CNN model in fire image classification [

22]. The training image dataset are manually cropped and resized. Furthermore, the effect of key parameters and coefficients like dropout ratio, batch size, and learning rate have not been discussed.

Problem Description

In this work, we try to present a complete fire localization and identification solution. To achieve this, there are some practical and technical problems must be solved:

Localization of fire area: First, unlike most contour prominent objects (humans, animals, cars, planes, buildings, etc.) wildfires have irregular form with very vague contours, their shape can vary dramatically with time. Yet, most machine vision-based detection methods are applied on objects with clear contour. Therefore, instead of find the whole fire boundary, localization of the core burring area can be more practical. Also, it is of great importance for further firefighting operation. As discussed in the previous section of this paper, most fire detection methods are tested on shoot-range shooting video or images. The effectiveness of these methods in localization of core fire area in high altitude aerial photographs remains uncertain.

Fixed training image size: Most deep learning models require input training image of fixed size (e.g., 128 × 128, 224 × 224). Yet, the most web-based image sources are of different sizes. In prevalent works, the reformatting of these images requires direct manual manipulation. Handcraft operations are slow, expensive, and inefficient. Worse still, wrapping, cropping, and direct resizing (ex., Gaussian pyramid and Laplacian pyramid method) may cause detail and feature loss or image blurring. In any case, this could lead to a poor DCNN training results [

23]. Although method like Spatial pyramid pooling (SPP) [

23] is used for DCNN to deal with varied size input images. Some disadvantages are notable: first, SPP is a high computational cost operation, according to the study in [

24], the processing speed of SPP-net is considered unsuitable for real-time recognition. Secondly, the convolution layers preceding SPP cannot be updated during its fine tuning. This can be a major limit for a deeper network. Furthermore, high resolution aerial photography is often taken with a wide field of vision, these images usually contain much more background clutter information, which leads to high data redundancy, long calculating times, and poorer classification performance.

Limited amount of aerial view wildfire images: Generally, a DCNN requires a substantial amount of data to fully optimize the network’s parameters and weight values during its training procedure [

25]. Insufficient training data will lead to overfitting and poor classification performance. The amount of wildfire aerial images available online is still very limited, thus a data augmentation necessary.

To address these problems, we proposed a new saliency detection-based segmentation method [

26]. The method is tested effective in localization and extraction of the core fire area. In this way, most of the fire features can be conserved without severe feature loss. In addition, the method can be used to crop multiple fire regions into different fire images so that data augmentation can be achieved.

The rest of this paper is organized as follows. In

Section 2, we present the new saliency detection method in localization and segmentation of core fire regions. In

Section 3, the DCNN architecture is introduced in detail. The results and discussions are presented in

Section 4 and

Section 5 concludes the paper.

2. Saliency Segmentation and Image Dataset Formation

In this work, the original wildfire images were obtained from image searching engine of Google, Baidu, and the database of AInML lab in Chang’An University, The data source contains over 1500 images. These images are of varied size from 300 × 200 to 4000 × 3000 pixels. As our proposed DCNN architecture is implemented on Caffe [

27]. The input images should be normalized into a standard LMDB database file. Thus, the image must be formatted to fixed size (e.g., 227 × 227 or 128 × 128). Therefore, a technical issue is raised: what measures should be taken to extract maximum fire features into the resized image dataset?

As discussed in introduction, most prevalent methods use direct resizing or handcraft warping and cropping. These methods have some known disadvantages like detail loss and geometric distortion [

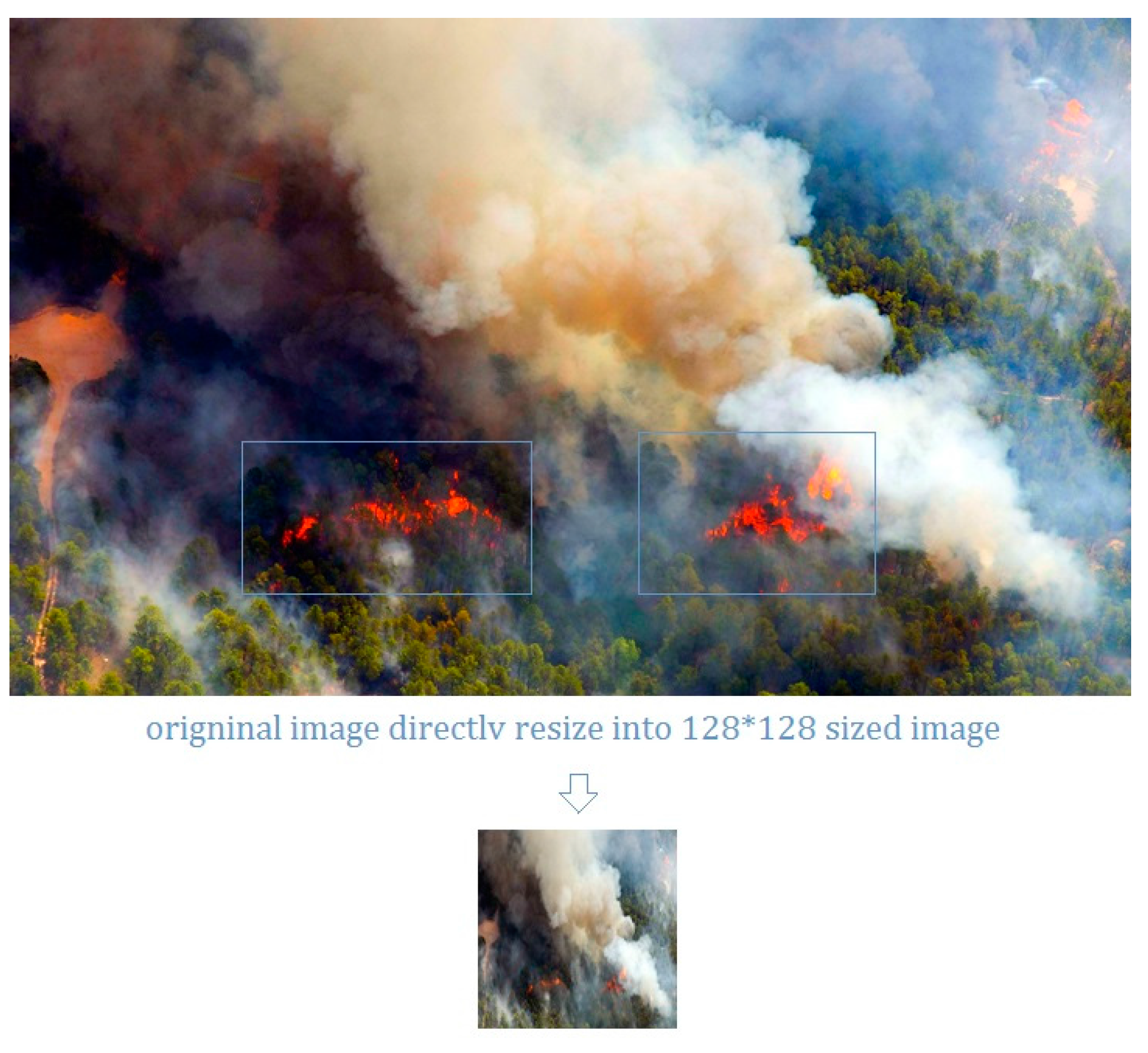

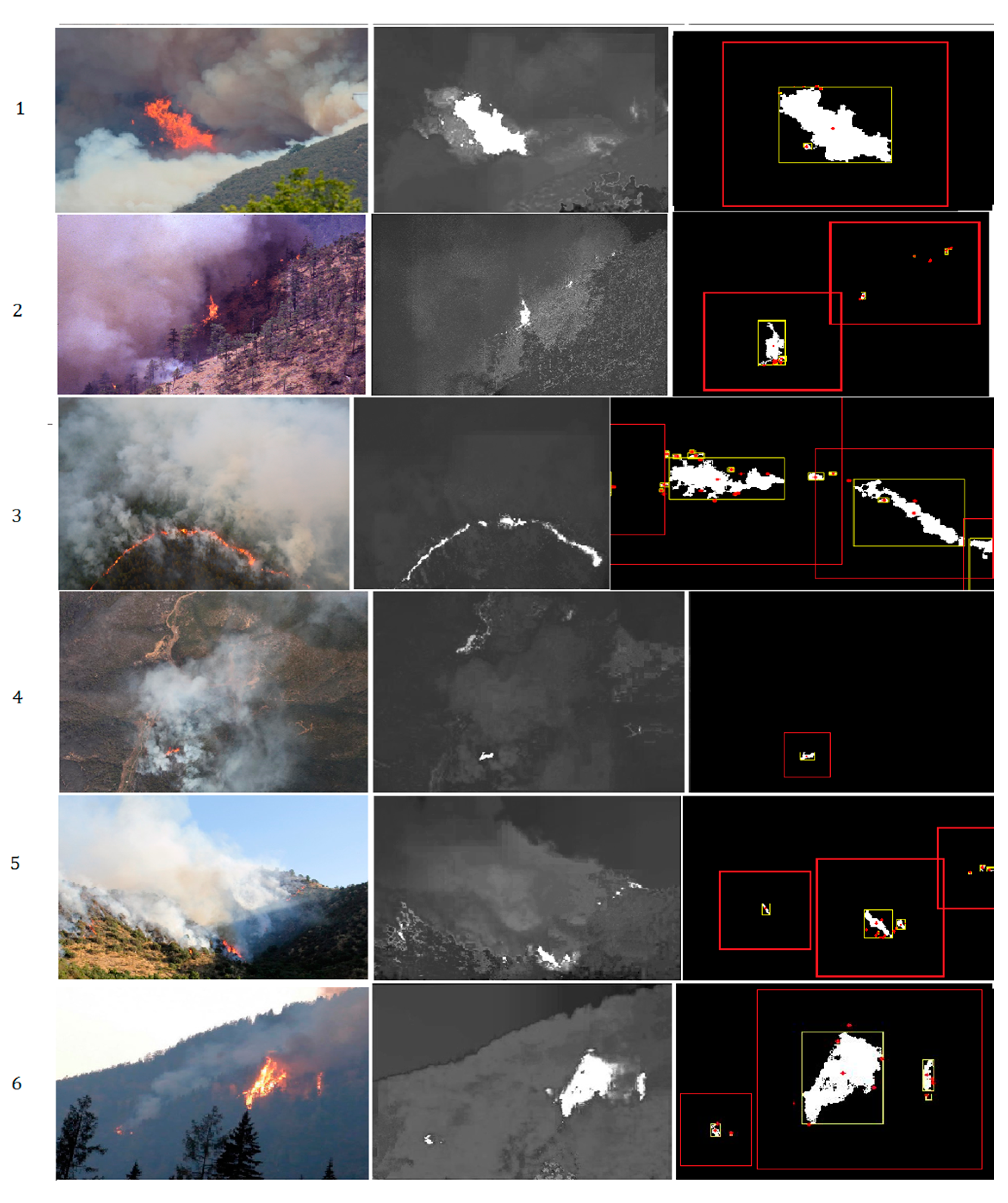

23]. UAV imagery is usually taken with a wide-angle digital camera, the captured image contains highly redundant clutter information. When direct resizing is applied, some of the important fire features may be submerged into the background. For example, In

Figure 2, the original image contains both flame and smoke features; however, after the resizing, the flame features have been submerged into the smoke.

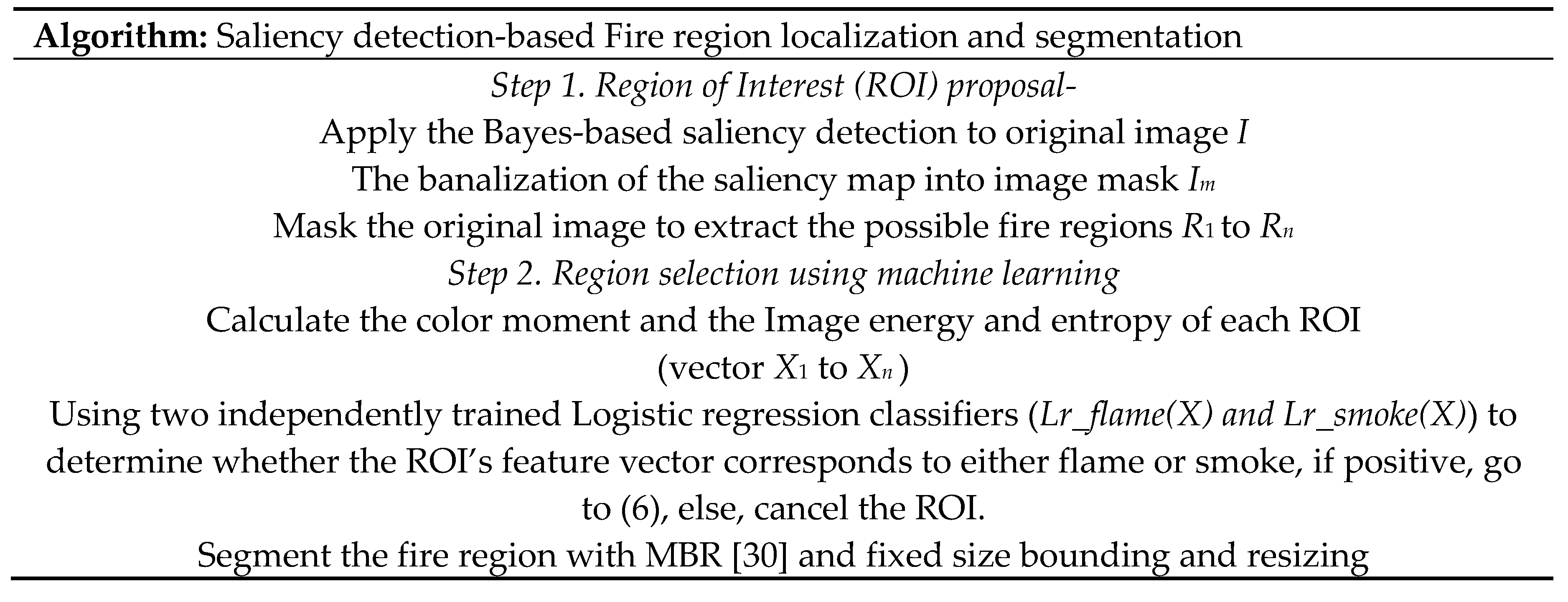

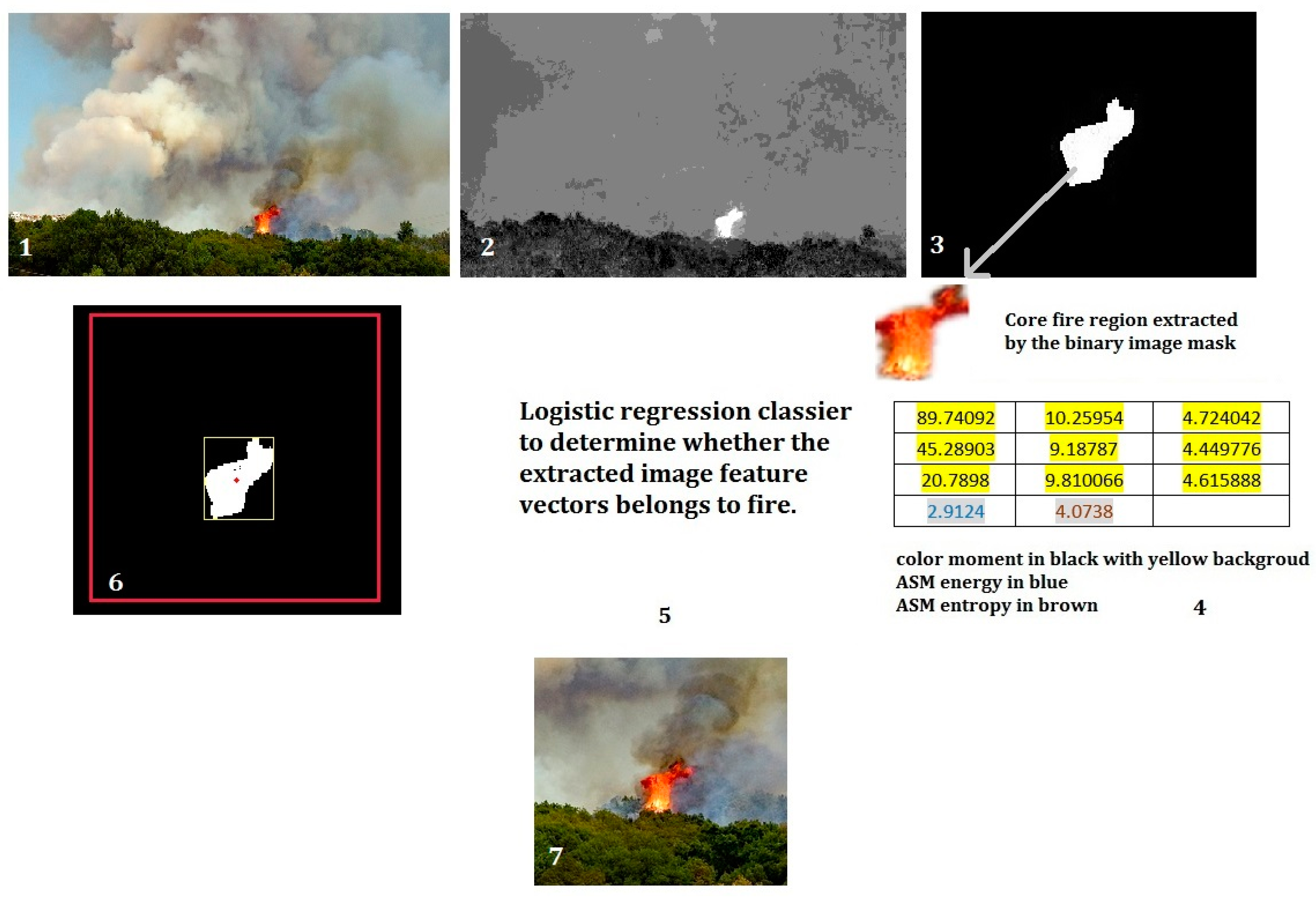

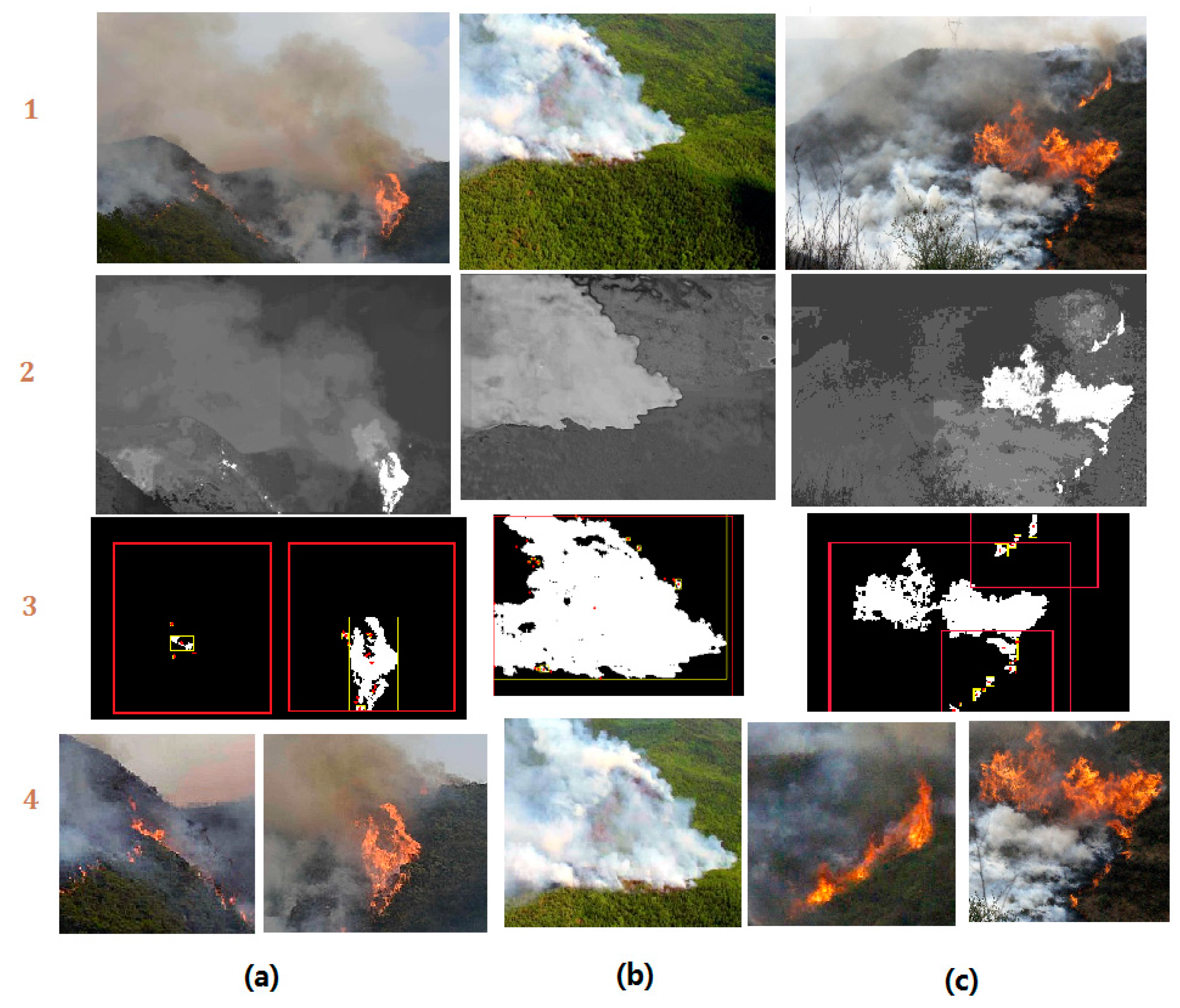

In this work, we developed a new algorithm of wildfire localization and segmentation algorithm by combining saliency detection and logistic regression classifier (

Figure 3). It can quickly locate the core fire region out of a complex background. An example of the method is illustrated in the

Figure 4.

The algorithm can be divided into two phases: region proposal and region selection. In the first phase, saliency detection method is used to extract the region of interest (ROI, possible fire region in our case), we calculate the color and texture features of the ROIs. In the second phase, two logistic regression classifiers are used to determine whether the feature vector of ROIs belong to flame or smoke, if positive, we segment these regions.

Previous works suggests that color and texture are two effective and efficient features in image-based fire detection, therefore, we employ the value of color moment [

28] as color feature descriptor while the image’s angular second moment (ASM) energy and entropy [

29] as texture descriptors. The two groups of values are combined into the feature vector. The use of this feature vector can largely reduce the computational complexity.

The implementation of the algorithm are as follows.

2.1. Saliency Detection

Inspired by the primate’s vision system, saliency detection is highly effective in focusing on the core object from a complicated scene [

31]. Early works on saliency detection have some common disadvantages like low resolution and blurred region boundary. Rahtu demonstrated in his work that the combination of saliency measure and conditional random field (CRF) model is valid in segmenting both still image and scenes from video sequences [

26].

Rahtu’s Bayesian method uses a slide window to calculate the conditional probability of each pixel as its estimated saliency value, if the object’s feature strongly differs from the background, it obtained a high saliency value. The mechanism of the method is presented as follows: a slide window W is composed of both inner kernel K and border B. A pixel x in W can be either salient H1 (in K) or non-salient H0 (in B). Accordingly, the probability for the each cases is P (H1) and P (H0), suppose the kernel K contains the salient object, its feature distribution is therefore differs from the distribution of border B. Based on this assumption, the conditional feature distribution p(F(x)|H1) and p(F(x)|H0) can be estimated with the feature value F(x) computed at x. By Bayes’ definition of P(A|B), we have the expression of written as

Based on Equation (1), for each pixel in K, we use this estimated probability as its saliency level S(x)

This value shows the contrast level of the feature values in

K and

B. If the contrast between

K and

B is stronger, the saliency level of pixels in

K is higher. In order to improve the robustness of the method, a Gaussian kernel is applied to smooth the histograms.

where

N is the normalization operation,

and

are the histogram respectively in

K and

B,

is the Gaussian smoothing function. The authors of this paper applied this method in segmenting pests from its background, and we enhanced the segmentation details by adding edge-aware weightings [

32].

2.2. Color Moment, ASM Energy, and Entropy

Color moment provides a measurement for color feature, it uses three central moments of an image’s color distribution. They are mean, standard deviation, and skewness. For example, an RGB image has three color channels, thus, three moments multiplied by three color channels makes nine moments in total. We define the

ith color channel at the

jth image pixel as

Pij. The three color moments can be defined as

where

E is the mean value, it is the average color value,

σ stands for the standard deviation, it is calculated by the square root of color-distribution’s variance,

S is the skewness, and it represents the asymmetry in the distribution.

The ASM energy and entropy are used to describe the texture feature of an image. They are expressed mathematically below

where

P is the element of the ASM matrix, it stands for the occurrence probability that a gray value

i and

j of a pair pixels separated by distance

d with an angle

θ. The ASM energy (Equation (8)) is the square sum of all element values in ASM matrix. It reflects the uniformity of the gray level distribution and the roughness of the texture. If all the values of the matrix are nearly equal, the energy value is small; otherwise, the energy value is high. Similarly, ASM entropy is a measurement of non-uniformity or complexity of the texture in an image. A more dispersed distribution of ASM elements value leads to high entropy.

2.3. Logistic Regression Classifier

Logistic regression is one of the most employed binary classification method. As introduced in the previous section, the input of the classifier is the extracted ROI’s feature vector, expressed as X = [x0, x1, x2, x3······x10]. It is a vector of eleven dimensions, in which, x0 to x8 are the color moment, x9 is the ASM energy and x10 the ASM entropy. Accordingly, the trained weight’s vector should also be 11 dimensions as W = [w0, w1, w2, w3······w10]. We chose sigmoid as classifier function, the form of the classifier is

Given an input feature vector X and the trained weight vector of W, the output value of this equation will situate between 0 and 1, if this value is above 0.5, we consider the feature vector belongs to positive class, otherwise, if the value is below 0.5, it is then negative. As the classification results are directly related to the weight vector, the aim of logistic regression is to find the optimal W in Equation (10). To achieve this, a gradient rise method is applied. First, based on Equation (10), the correspondent probability can be written as

Followed by a maximum likelihood estimation on W

where

i is the sample number of training feature vectors. For the convenience of calculation, Equation (12) is usually used in Logarithmic form. To gain the maximum value of

L, we use the gradient ascent algorithm to update the value of W

where α is the learning rate,

is the gradient of

, and it equals to

.

is the disturbance term we add to avoid the overfitting problem.

In this work, two regression classifiers for flame and smoke feature are trained with two sets of 150 sampled flame and smoke image blocks, respectively. Stochastic gradient ascent method is applied during the training, thus the weights get updated in every training epoch. The training rate α is 0.001, and the coefficient of disturbance is set to 0.00001.

The reason for using two binary classifiers instead of one multiple-labeled classifier (e.g., Softmax classifier) is that we consider the feature of flame and smoke are not mutually independent. Notably, the gray level texture features of flame and smoke are often mixed. In this case, two independent binary logistic regression classifiers can be more effective.

As shown in

Figure 4, the fire region shows the highest saliency value. After extraction by the saliency map, the core fire region’s image feature vector is calculated. The vector is then examined by the trained logistic regression classifier. With the positive results, minimum bounding rectangle (MBR) [

30] method is used to locate the core fire region’s geometric center and crop the fire region into a standard sized image. This method keeps the full feature of fire (both flame and smoke) without distortion. This method has been applied to form the standard image dataset ‘UAV_Fire’. Furthermore, as demonstrated in

Figure 4, this method can locate the core flame zone, which is considered highly practical in UAV wildfire inspection. More results can be found in the

Section 4 of this paper.