Temperature Compensation Method for Digital Cameras in 2D and 3D Measurement Applications

Abstract

1. Introduction

2. The Test Stand

3. Preliminary Studies

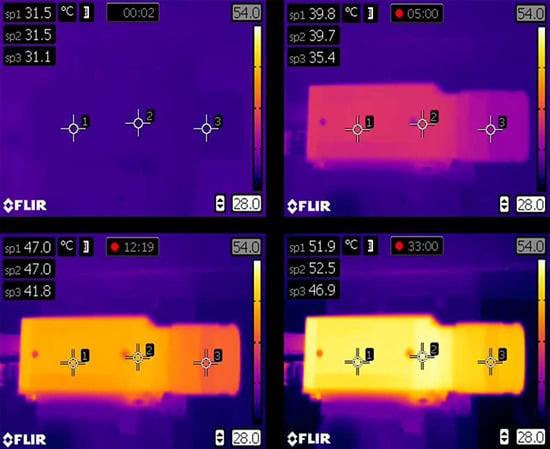

3.1. Warm-Up Effect

3.2. Tests in Thermal Chamber

4. Camera Modifications

5. Experiment with Modified Camera Unit

6. Compensation

6.1. The Compensation Model

6.2. Thermal Compensation

7. Results

- along the X axis: before compensation mm, after compensation mm,

- along the Y axis: before compensation mm, after compensation mm,

- along the Z axis: before compensation mm, after compensation mm.

8. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest

Translation deviation [mm] before and after compensation

| Temp. | Pos. | Before comp. X | Before comp. Y | Before comp. Z | After comp. X | After comp. Y | After comp. Z |
|---|---|---|---|---|---|---|---|
| 55.55 | V0 | 0.0171 | 0.0289 | 0.1174 | 0.0093 | −0.0034 | 0.0354 |
|  | V1 | −0.0093 | 0.0193 | 0.2016 | −0.0139 | −0.0081 | 0.0341 |
|  | V2 | 0.0000 | 0.0537 | 0.0174 | −0.0041 | 0.0312 | −0.0761 |
|  | V3 | 0.0157 | 0.0341 | 0.0481 | 0.0092 | 0.0150 | −0.0244 |
|  | Average (abs.) | 0.0105 | 0.0340 | 0.0961 | 0.0091 | 0.0144 | 0.0425 |
| 67.05 | V0 | −0.0366 | −0.1943 | 0.1911 | 0.0026 | −0.0058 | −0.0156 |
|  | V1 | −0.0129 | −0.2312 | 0.3267 | 0.0327 | −0.0121 | 0.1198 |
|  | V2 | −0.0352 | −0.2235 | 0.2352 | 0.0228 | 0.0592 | 0.0187 |
|  | V3 | −0.0344 | −0.2015 | 0.1775 | 0.0234 | 0.0873 | −0.0455 |
|  | Average (abs.) | 0.0298 | 0.2126 | 0.2326 | 0.0204 | 0.0411 | 0.0499 |
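
The "Average (abs.)" rows are consistent with the mean of the absolute deviations over positions V0 to V3: recomputing them from the per-position values reproduces the printed averages to within the last printed digit. The short sketch below performs that recomputation and adds an after/before ratio per axis as a quick measure of how much the compensation reduced the mean deviation. The dictionary names, the helpers `mean_abs` and `summarize`, and the ratio metric are illustrative assumptions, not part of the authors' method. The temperature keys are copied as printed, since the table does not state their unit.

```python
# Per-position translation deviations [mm], copied from the table above.
# Keys are the temperatures as printed; each tuple is (X, Y, Z) for V0..V3.
BEFORE = {
    55.55: [(0.0171, 0.0289, 0.1174), (-0.0093, 0.0193, 0.2016),
            (0.0000, 0.0537, 0.0174), (0.0157, 0.0341, 0.0481)],
    67.05: [(-0.0366, -0.1943, 0.1911), (-0.0129, -0.2312, 0.3267),
            (-0.0352, -0.2235, 0.2352), (-0.0344, -0.2015, 0.1775)],
}
AFTER = {
    55.55: [(0.0093, -0.0034, 0.0354), (-0.0139, -0.0081, 0.0341),
            (-0.0041, 0.0312, -0.0761), (0.0092, 0.0150, -0.0244)],
    67.05: [(0.0026, -0.0058, -0.0156), (0.0327, -0.0121, 0.1198),
            (0.0228, 0.0592, 0.0187), (0.0234, 0.0873, -0.0455)],
}


def mean_abs(rows):
    """Hypothetical helper: mean of absolute deviations per axis over all positions."""
    n = len(rows)
    return tuple(sum(abs(r[axis]) for r in rows) / n for axis in range(3))


def summarize(before, after):
    """Hypothetical helper: {temp: (mean_abs_before, mean_abs_after, after/before ratio)}."""
    out = {}
    for temp in before:
        b = mean_abs(before[temp])
        a = mean_abs(after[temp])
        # Ratio < 1 means the mean absolute deviation shrank after compensation.
        ratio = tuple(ai / bi for ai, bi in zip(a, b))
        out[temp] = (b, a, ratio)
    return out


if __name__ == "__main__":
    for temp, (b, a, ratio) in summarize(BEFORE, AFTER).items():
        print(f"Temp. {temp}")
        for name, bi, ai, ri in zip("XYZ", b, a, ratio):
            print(f"  {name}: before {bi:.4f} mm, after {ai:.4f} mm, ratio {ri:.2f}")
```

For both temperatures in the table, every after/before ratio computed this way is below 1, i.e., the mean absolute deviation decreased along X, Y and Z.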

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Adamczyk, M.; Liberadzki, P.; Sitnik, R. Temperature Compensation Method for Digital Cameras in 2D and 3D Measurement Applications. Sensors 2018, 18, 3685. https://doi.org/10.3390/s18113685