Adaptive Monocular Visual–Inertial SLAM for Real-Time Augmented Reality Applications in Mobile Devices

Abstract

1. Introduction

2. Related Work

- The VO module estimates an approximate 3D camera pose and scene structure from adjacent images, providing a good initial value for back-end optimization. Visual SLAM is divided into feature-based and appearance-based SLAM depending on whether feature points are extracted in the VO module.

- The mapping module builds the map maintained by SLAM, which can also be used for navigation, visualization, and interaction. Maps are divided into metric maps and topological maps according to the type of information they store. A metric map accurately represents the positional relationships between objects and is usually classified as either sparse or dense.

- The loop-closure detection module determines whether the camera has returned to a scene it has captured before. Loop closure corrects the drift that accumulates in the estimated positions over time.
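Loop-closure detection as described above can be sketched with a bag-of-visual-words comparison. This is a minimal sketch, not the system's detector. It assumes each keyframe has already been quantized into integer visual-word IDs, for example with a binary vocabulary of the kind used for place recognition. The helper names (`bow_vector`, `cosine_similarity`, `detect_loop`) and the `min_gap` and `threshold` values are hypothetical choices made for illustration.

```python
from collections import Counter
from math import sqrt


def bow_vector(word_ids):
    """Term-frequency histogram of the visual-word IDs observed in one keyframe."""
    return Counter(word_ids)


def cosine_similarity(a, b):
    """Cosine similarity of two sparse word histograms; 0.0 if either is empty."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm_a = sqrt(sum(c * c for c in a.values()))
    norm_b = sqrt(sum(c * c for c in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def detect_loop(database, query, min_gap=30, threshold=0.8):
    """Index of the most similar sufficiently old keyframe, or None.

    Only keyframes at least `min_gap` positions older than the query are
    compared, so recent neighbours (which trivially look alike) are not
    reported as loops. Both parameters are illustrative (hypothetical) values.
    """
    current = len(database)  # index the query keyframe would receive
    best_idx, best_score = None, threshold
    for idx, candidate in enumerate(database):
        if current - idx < min_gap:
            continue
        score = cosine_similarity(candidate, query)
        if score >= best_score:
            best_idx, best_score = idx, score
    return best_idx


# Usage: keyframe 0 is revisited after 41 unrelated keyframes.
database = [bow_vector([1, 2, 3, 3]), bow_vector([7, 8, 9])]
database += [bow_vector([100 + i]) for i in range(40)]
print(detect_loop(database, bow_vector([3, 1, 2, 3])))  # prints 0
```

A real system would also verify a candidate geometrically before accepting it, because two places can look alike. The similarity test only proposes loop candidates.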

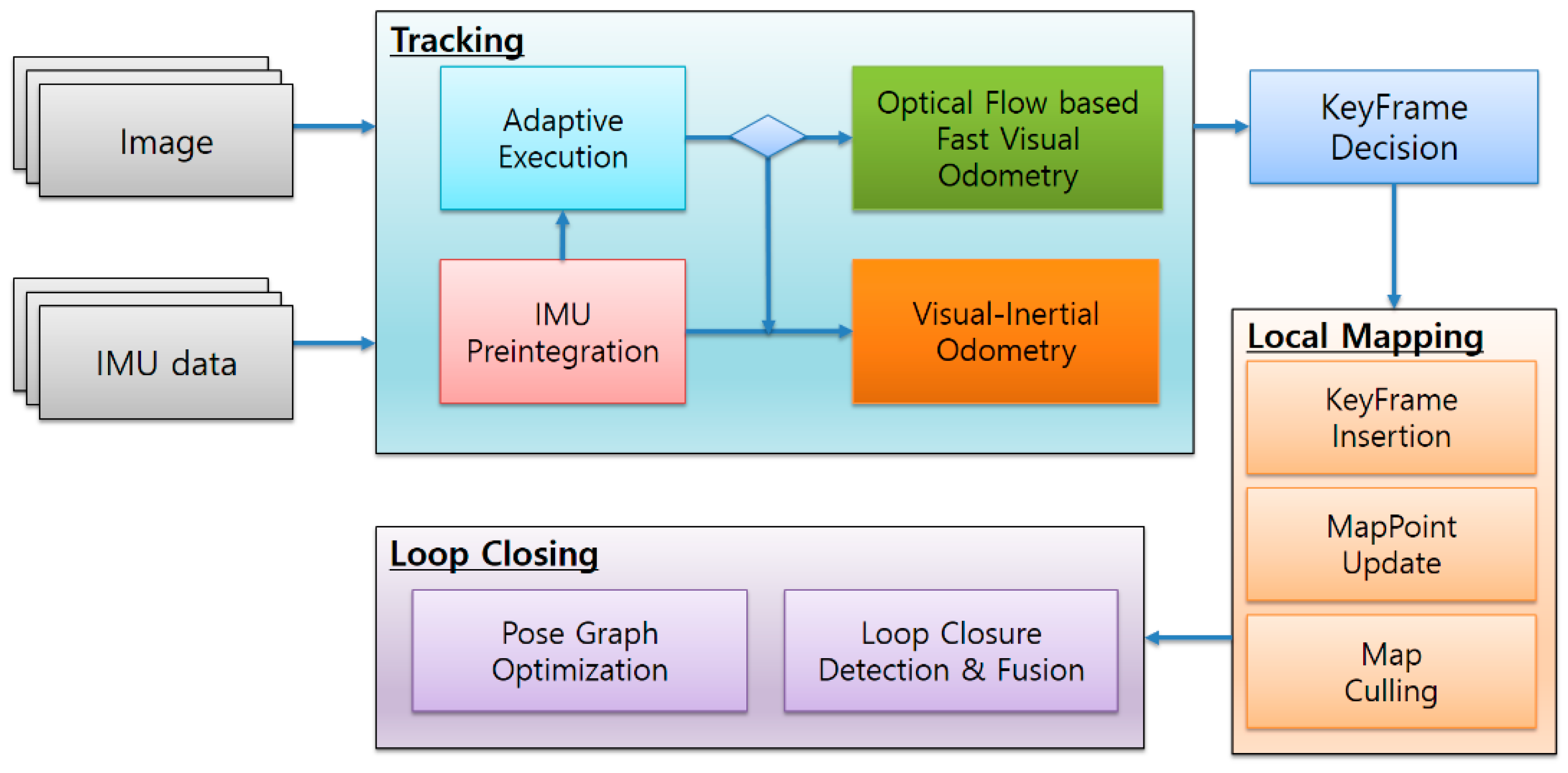

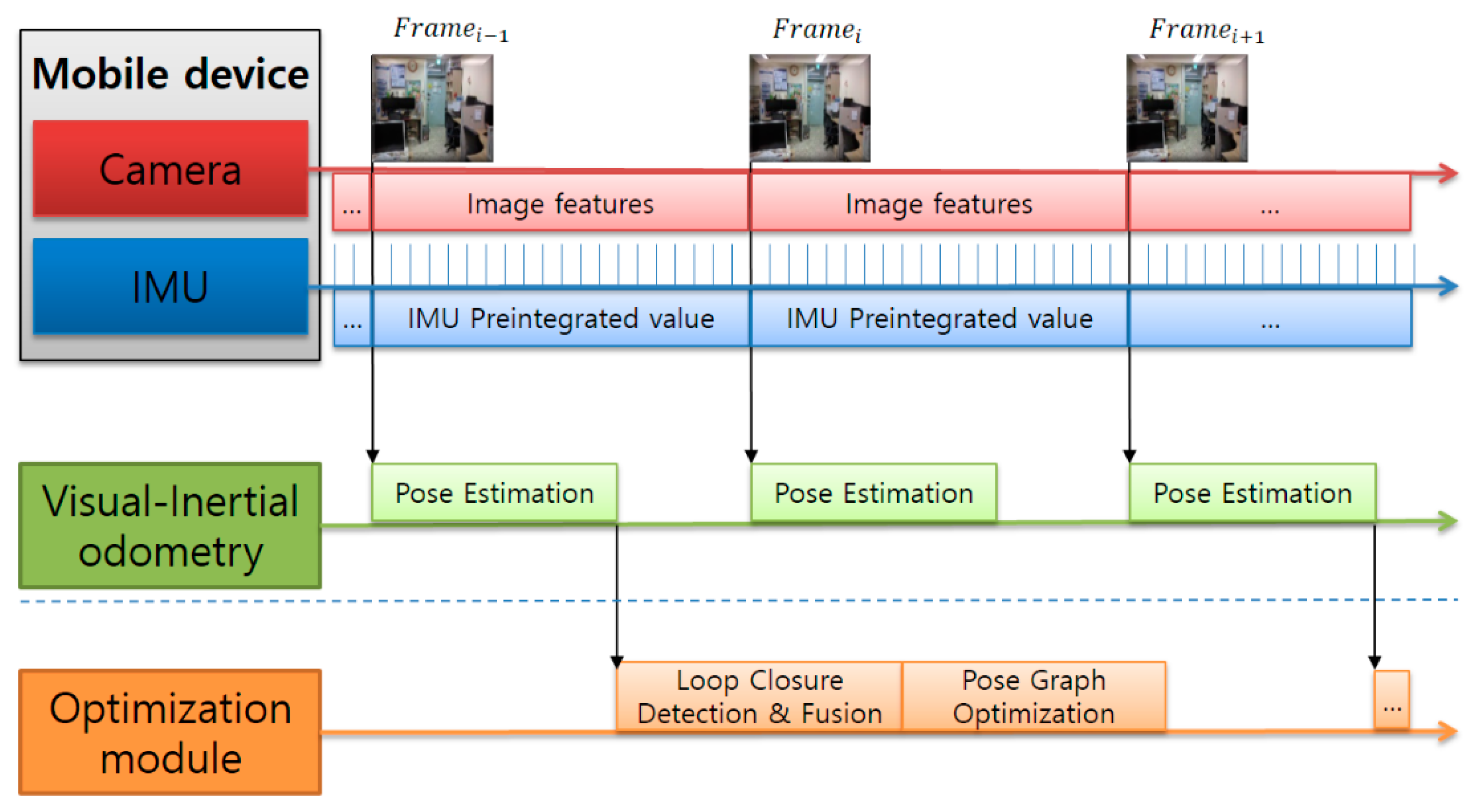

3. Methodologies

3.1. Visual–Inertial Odometry

3.1.1. IMU Preintegration

3.1.2. Initialization

3.1.3. Pose Estimation

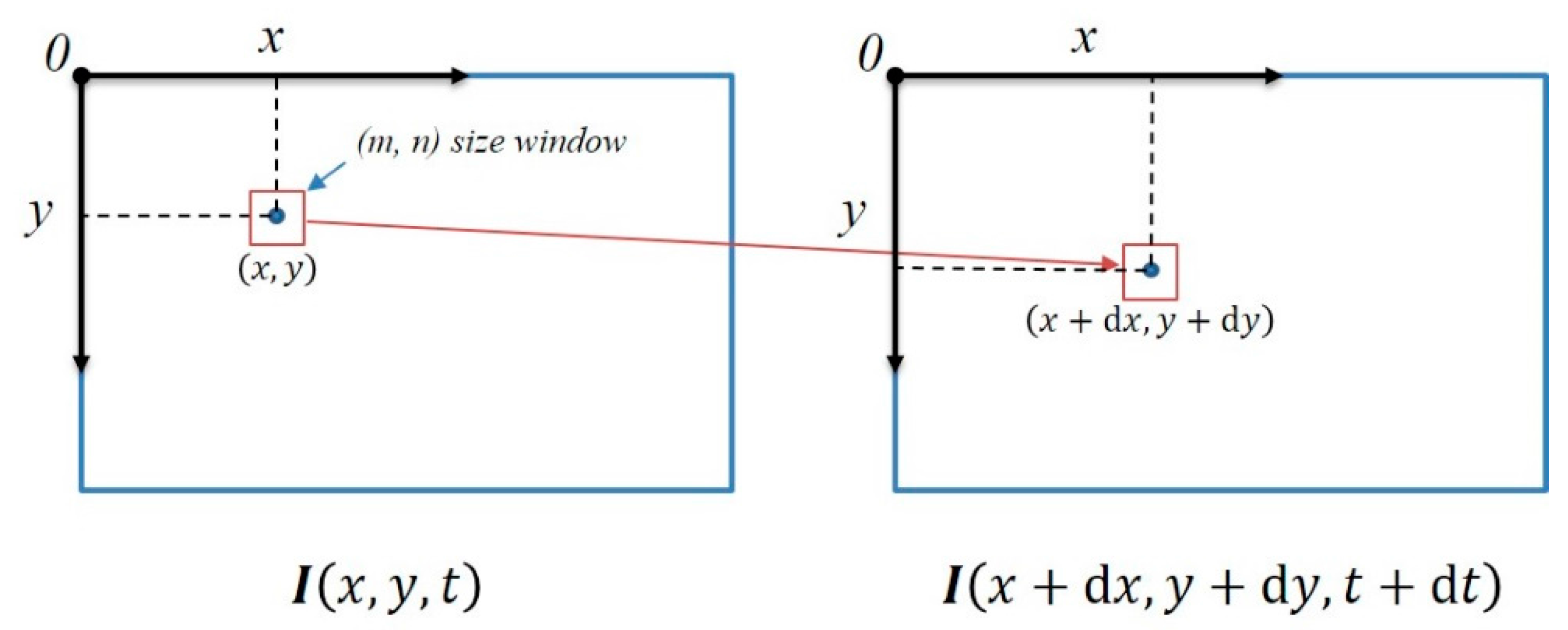

3.2. Optical-Flow-Based Fast Visual Odometry

3.2.1. Advanced KLT Feature Tracking Algorithm

3.2.2. Pose Estimation

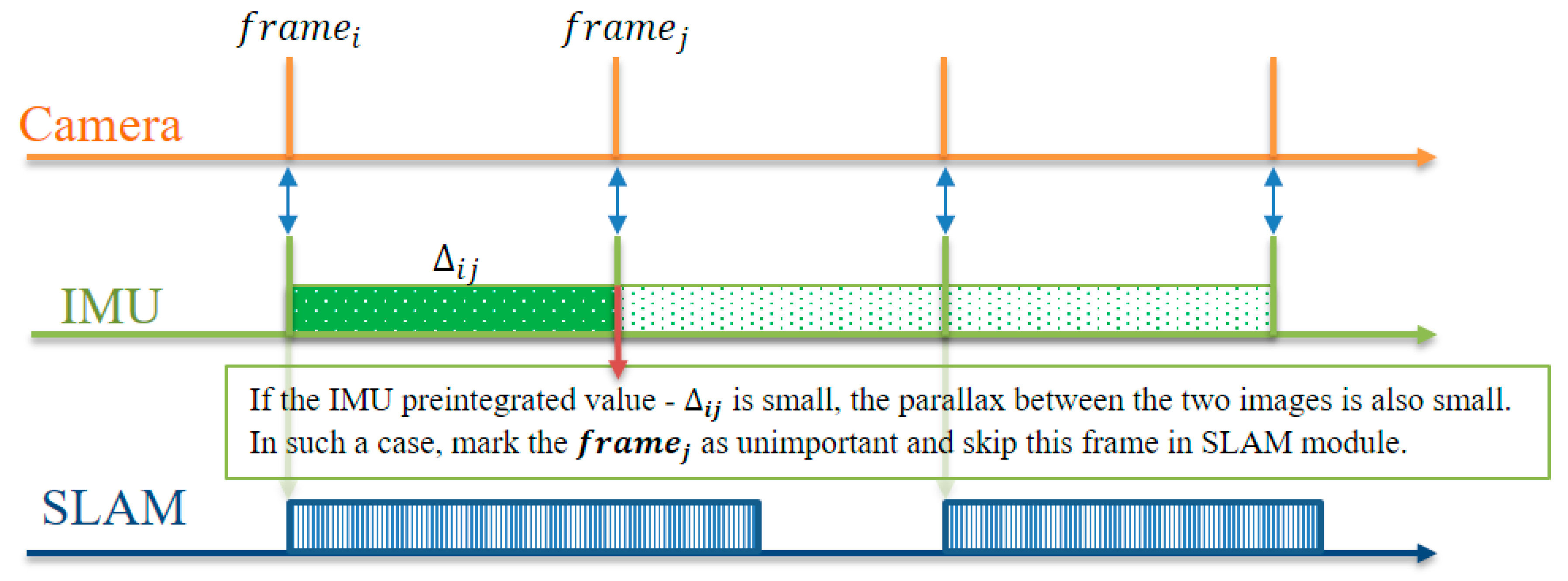

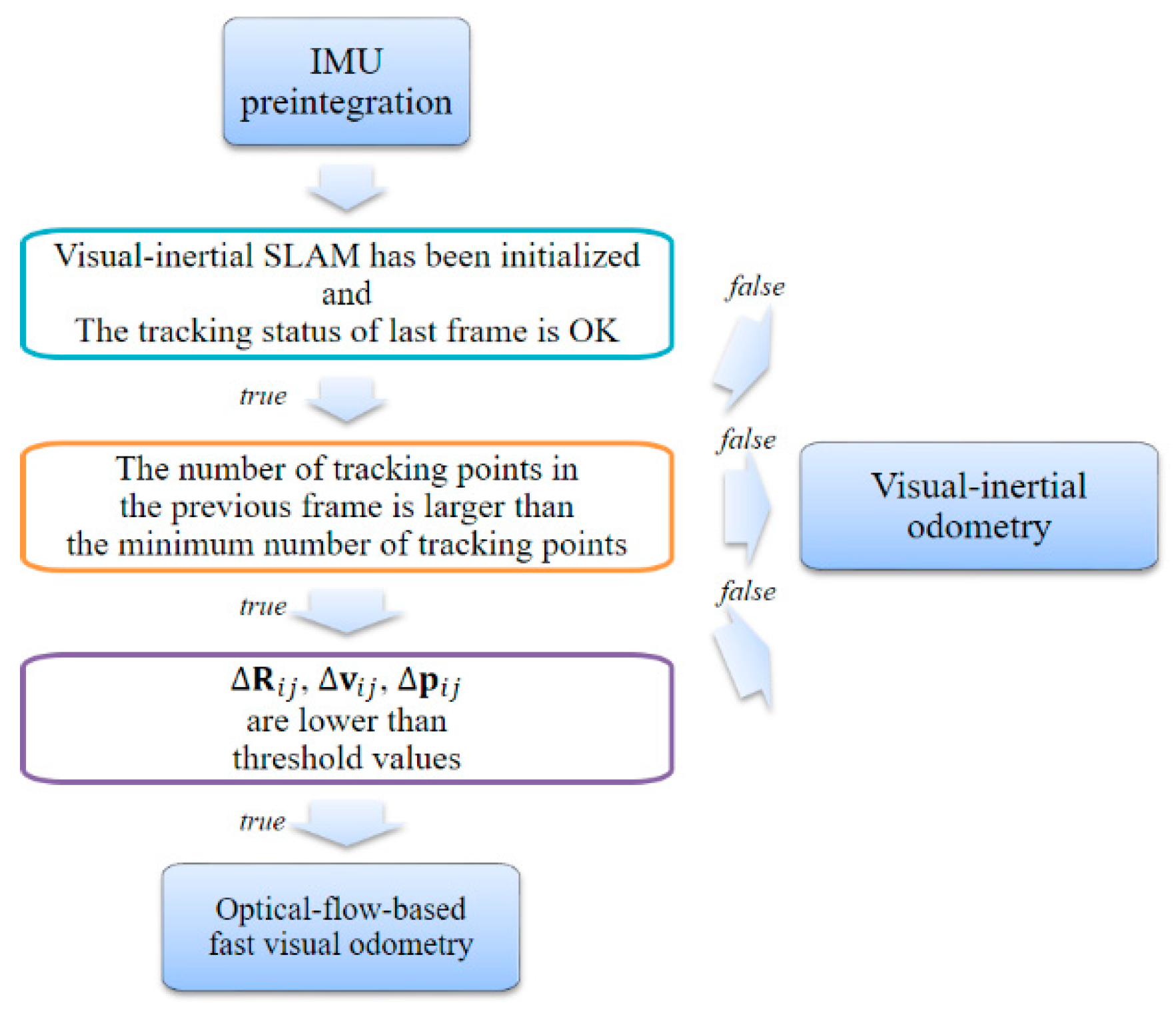

3.3. Adaptive Execution Module

3.3.1. Adaptive Selection Visual Odometry

3.3.2. Adaptive Execution Policies

4. Experiments and Results

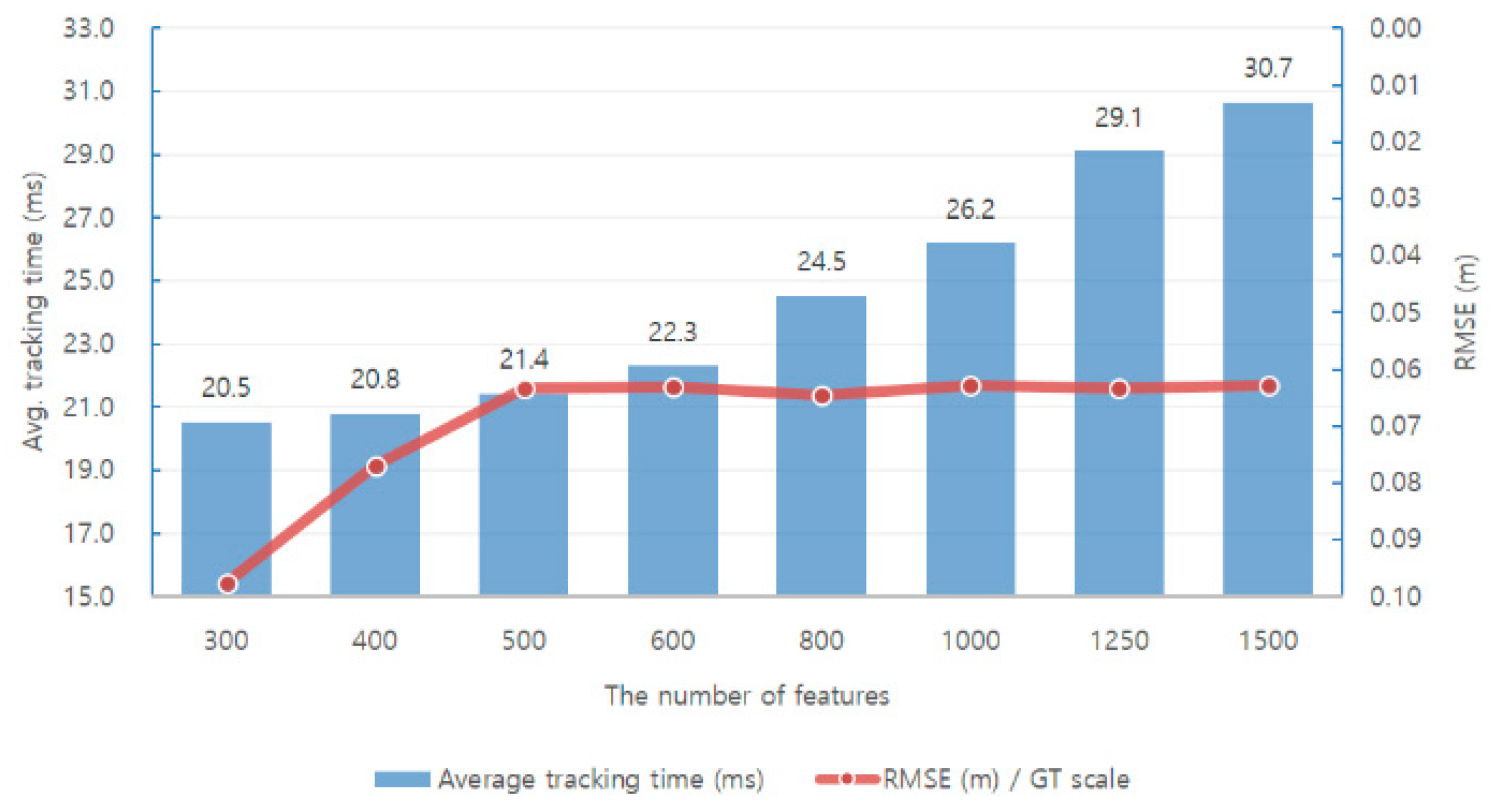

4.1. Monocular ORB-SLAM Evaluation

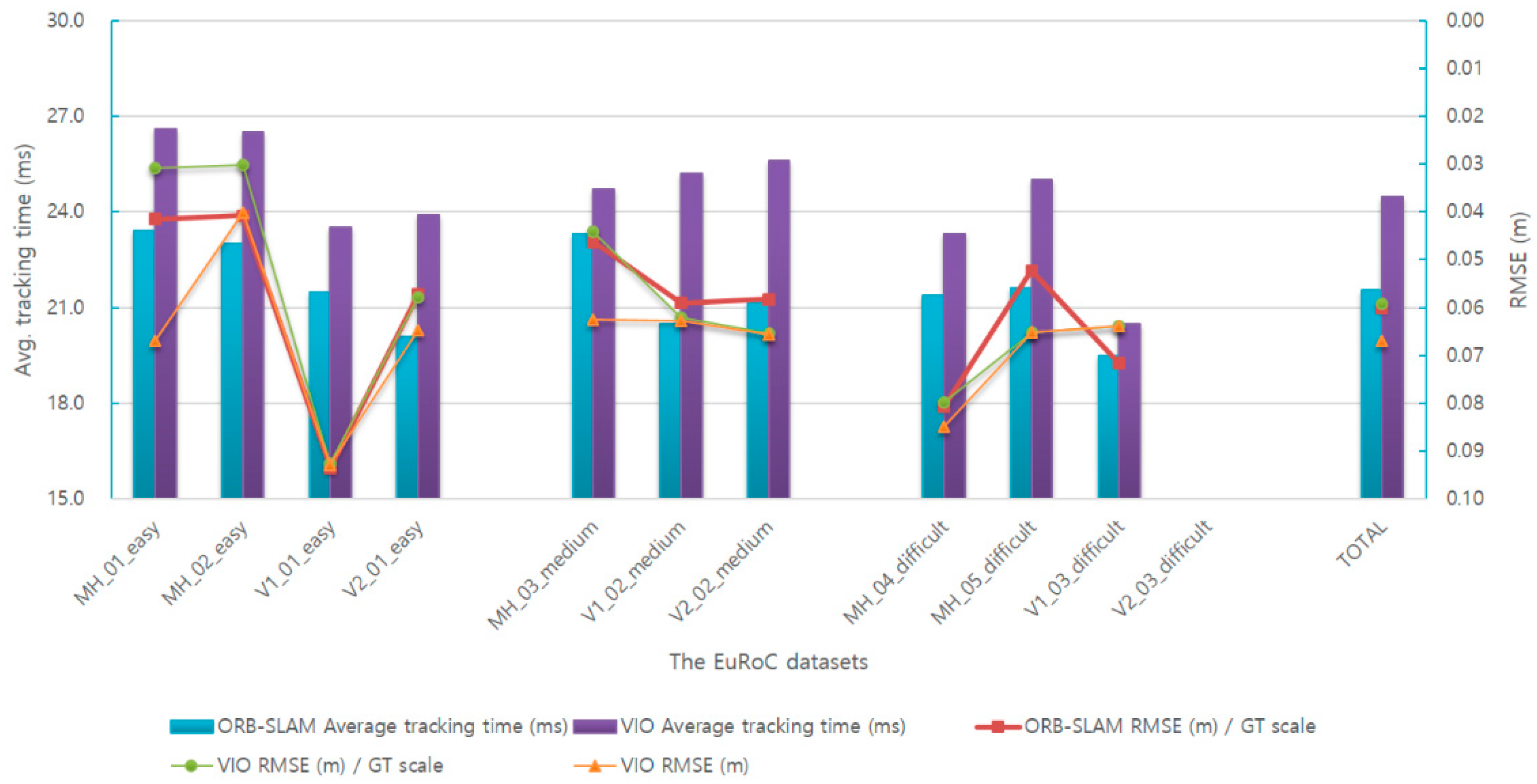

4.2. Visual–Inertial Odometry SLAM Evaluation

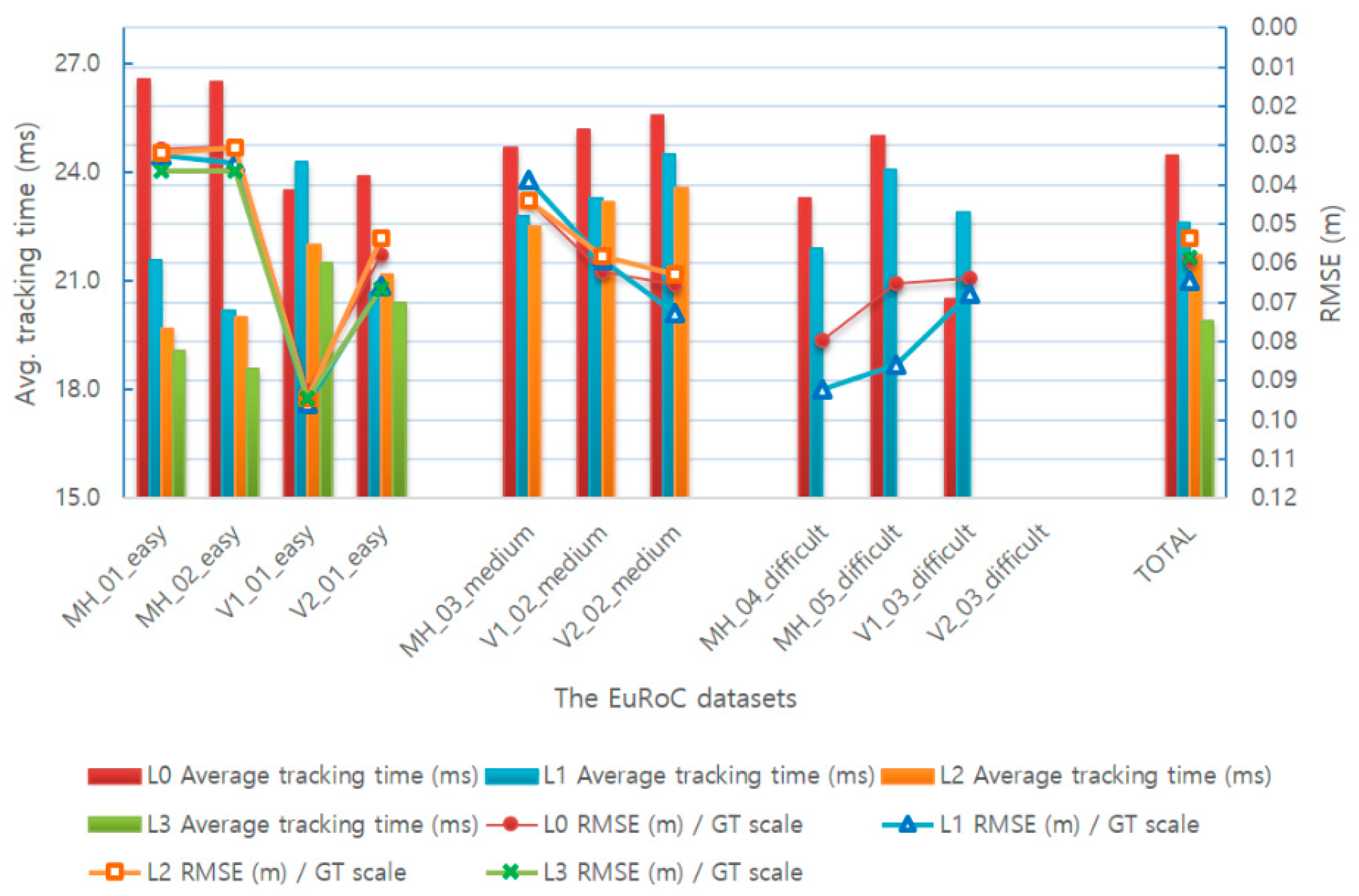

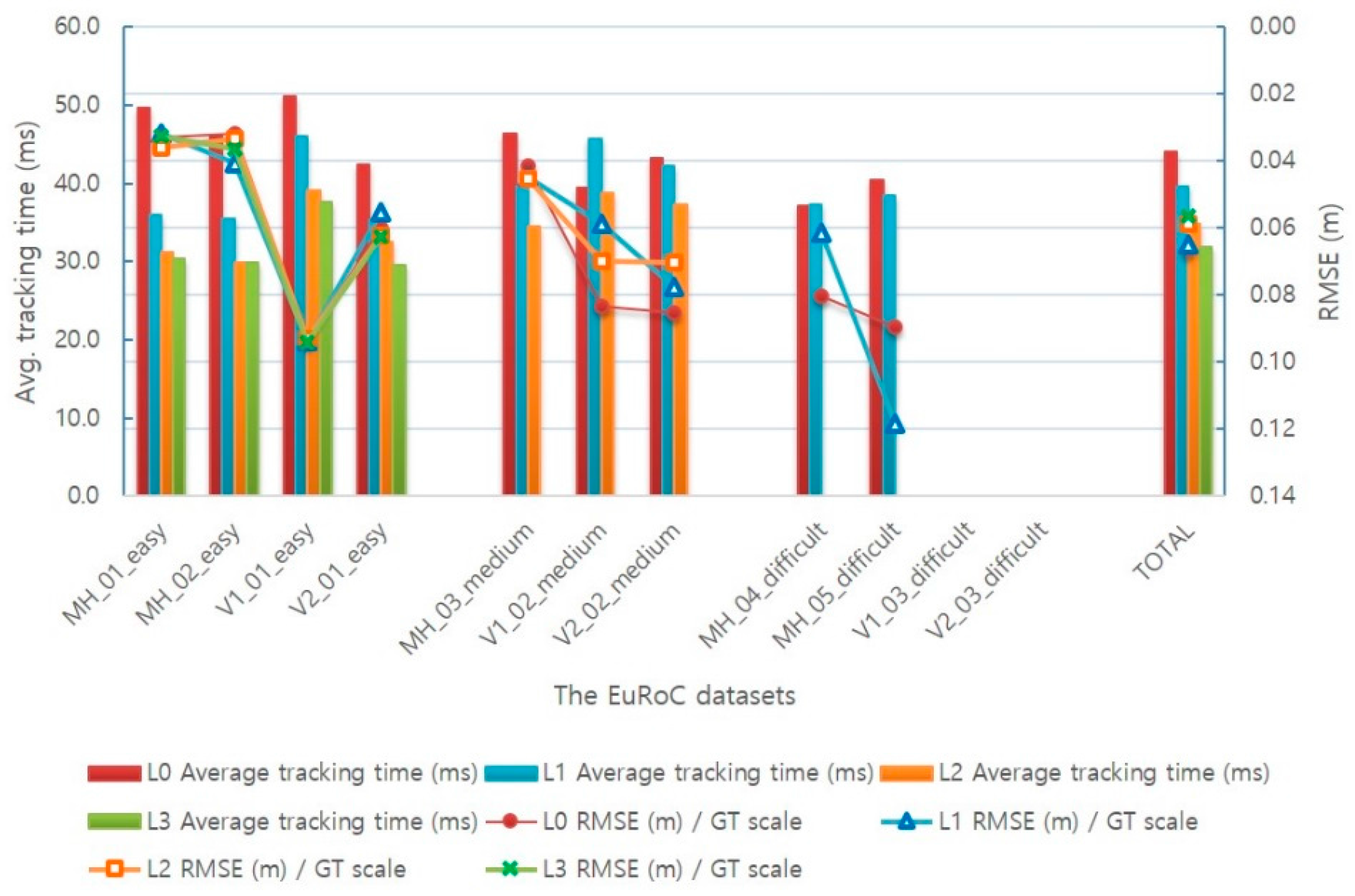

4.3. Adaptive Visual–Inertial Odometry SLAM Evaluation

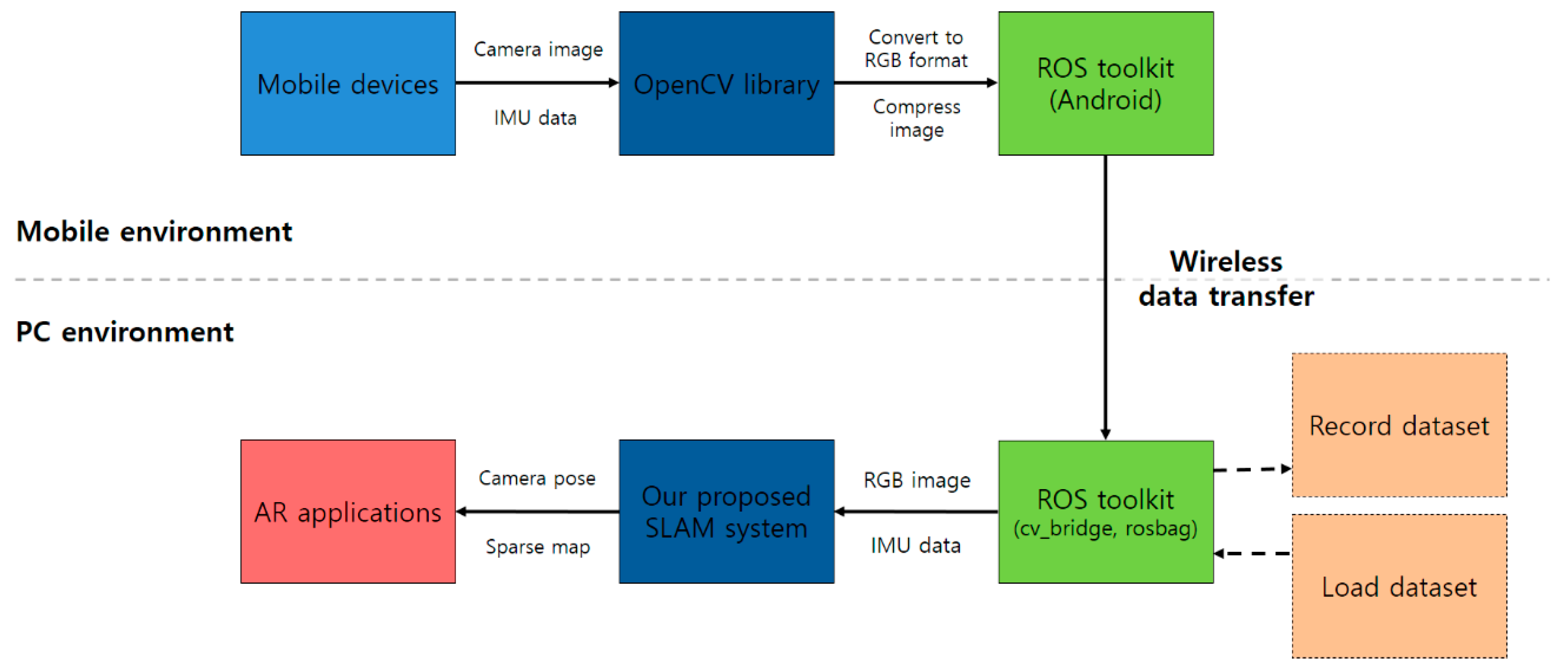

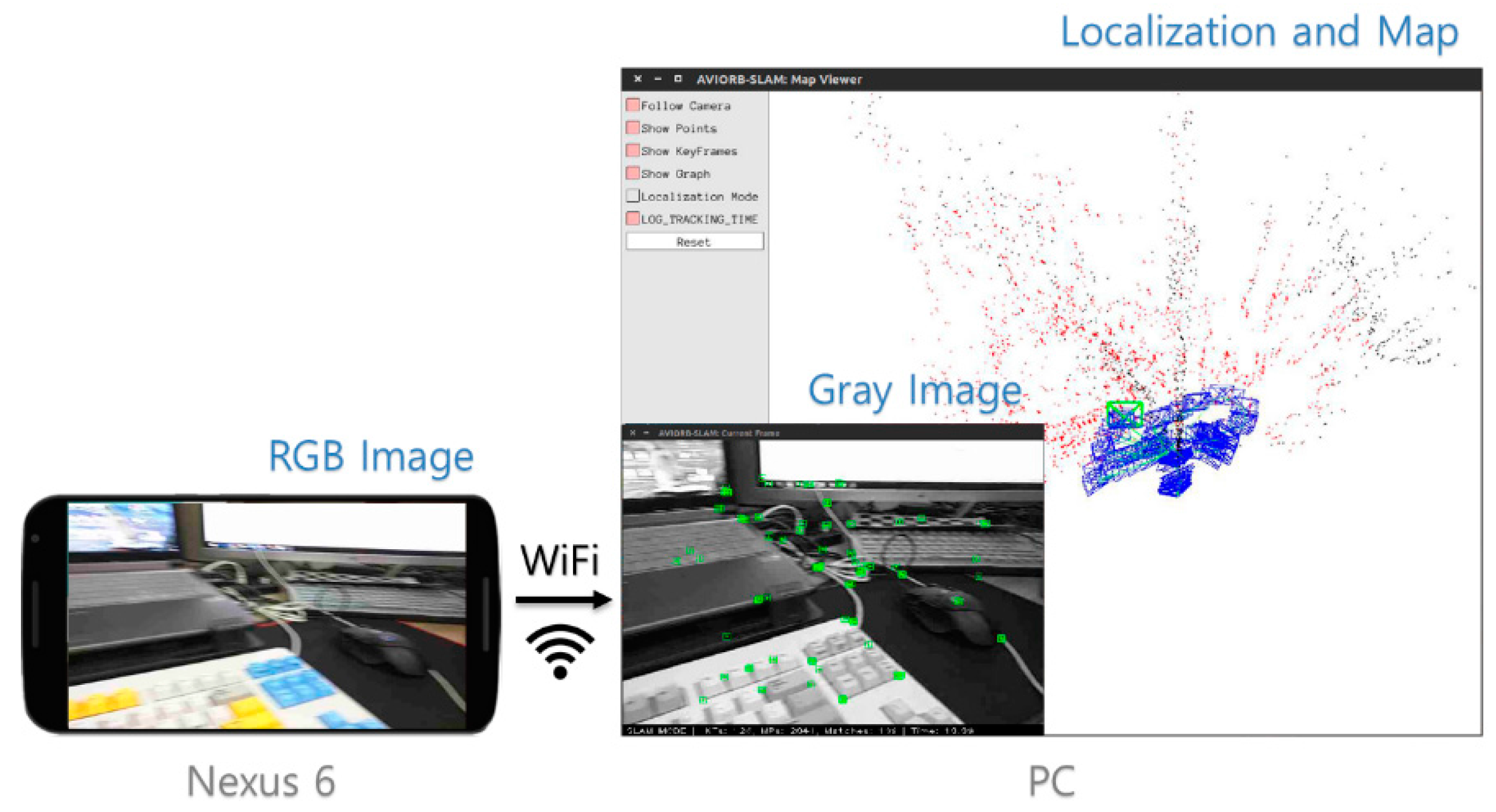

4.4. Experiments of the Adaptive Visual–Inertial Odometry SLAM with Mobile Device Sensors

5. Conclusions

Author Contributions

Conflicts of Interest


| Abbreviation | Definition |
|---|---|
| IMU | Inertial measurement unit |
| VO | Visual odometry |
| VIO | Visual–inertial odometry |
| AVIO | Adaptive visual–inertial odometry |
| RMSE | Root-mean-square error |
| GT scale | Scale matched to the ground truth |
| ROS | Robot Operating System |

| Mode | Modules | Characteristics |
|---|---|---|
| Level 0 | Visual–inertial odometry SLAM | Accuracy priority, no adaptive module |
| Level 1 | Visual–inertial odometry and optical-flow-based fast visual odometry | Balanced, suitable for all scenes |
| Level 2 | Visual–inertial odometry and optical-flow-based fast visual odometry | Speed priority, suitable for easy and medium scenes |
| Level 3 | Visual–inertial odometry and optical-flow-based fast visual odometry | Speed priority, suitable only for easy scenes |
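The mode table works as a lookup from scene difficulty to the execution levels listed as suitable for that difficulty. The sketch below encodes only that table. The `choose_level` helper and its rule ("use Level 0 when accuracy is preferred, otherwise the fastest suitable level") are hypothetical illustrations. They are not the adaptive execution policies of Section 3.3.2.

```python
from enum import IntEnum


class Difficulty(IntEnum):
    EASY = 0
    MEDIUM = 1
    DIFFICULT = 2


# Hardest scene difficulty each level is listed as suitable for in the mode table.
MAX_DIFFICULTY = {
    0: Difficulty.DIFFICULT,  # accuracy priority, no adaptive module
    1: Difficulty.DIFFICULT,  # balanced, suitable for all scenes
    2: Difficulty.MEDIUM,     # speed priority, easy and medium scenes
    3: Difficulty.EASY,       # speed priority, easy scenes only
}


def suitable_levels(difficulty):
    """Levels whose listed scene range covers `difficulty`, in ascending order."""
    return [lvl for lvl, limit in sorted(MAX_DIFFICULTY.items()) if difficulty <= limit]


def choose_level(difficulty, prefer_accuracy=False):
    """Hypothetical rule: Level 0 if accuracy is preferred, else the highest suitable level."""
    if prefer_accuracy:
        return 0
    return max(suitable_levels(difficulty))


for d in Difficulty:
    print(d.name, suitable_levels(d), "->", choose_level(d))
```

Higher levels trade accuracy for speed, so choosing the highest suitable level maximizes speed. This holds only while the scene stays within the difficulty range the table lists for that level.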

| Desktop Specification | |
|---|---|
| CPU | Intel Core i7-6700K |
| RAM | SDRAM 16 GB |
| OS | Ubuntu 14.04 |
| Phone Specification | |
| Model name | Nexus 6 |
| Android version | 7.0 Nougat |
| Development Software Environment | |
| OpenCV | 3.2.0 |
| ROS version | ROS Indigo |
| Benchmark dataset | EuRoC dataset |

| Features | Metric | MH_01_e | MH_02_e | V1_01_e | V2_01_e | MH_03_m | V1_02_m | V2_02_m | MH_04_d | MH_05_d | V1_03_d | V2_03_d |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 300 | Average tracking time (ms) | 22.8 | 22.3 | 20.5 | 19.0 | 22.0 | 19.2 | 19.5 | 21.5 | 20.9 | 17.7 | X |
| | RMSE (m)/GT scale | 0.057 | 0.052 | 0.093 | 0.057 | 0.064 | 0.069 | 0.087 | 0.216 | 0.150 | 0.131 | X |
| | Lost tracking frame count | 220 | 0 | 72 | 114 | 63 | 25 | 68 | 263 | 225 | 99 | X |
| 400 | Average tracking time (ms) | 23.2 | 22.1 | 20.8 | 19.9 | 22.5 | 19.9 | 19.7 | 20.9 | 21.1 | 18.0 | X |
| | RMSE (m)/GT scale | 0.043 | 0.047 | 0.094 | 0.060 | 0.045 | 0.065 | 0.071 | 0.147 | 0.054 | 0.146 | X |
| | Lost tracking frame count | 16 | 0 | 0 | 110 | 62 | 0 | 67 | 261 | 26 | 64 | X |
| 500 | Average tracking time (ms) | 23.4 | 23.0 | 21.5 | 20.1 | 23.3 | 20.5 | 21.2 | 21.4 | 21.6 | 19.5 | 20.0 |
| | RMSE (m)/GT scale | 0.042 | 0.041 | 0.094 | 0.057 | 0.046 | 0.059 | 0.058 | 0.081 | 0.052 | 0.072 | 0.095 |
| | Lost tracking frame count | 0 | 0 | 0 | 109 | 0 | 0 | 67 | 0 | 0 | 59 | 250 |
| 600 | Average tracking time (ms) | 25.1 | 24.6 | 23.1 | 21.0 | 23.6 | 21.3 | 22.7 | 21.9 | 22.1 | 20.6 | 19.2 |
| | RMSE (m)/GT scale | 0.046 | 0.037 | 0.095 | 0.059 | 0.039 | 0.064 | 0.056 | 0.076 | 0.052 | 0.064 | 0.104 |
| | Lost tracking frame count | 0 | 0 | 0 | 109 | 0 | 0 | 67 | 0 | 0 | 58 | 146 |
| 800 | Average tracking time (ms) | 27.8 | 26.0 | 26.2 | 22.4 | 25.5 | 23.3 | 26.0 | 23.2 | 23.6 | 22.3 | 23.3 |
| | RMSE (m)/GT scale | 0.043 | 0.036 | 0.097 | 0.057 | 0.041 | 0.064 | 0.056 | 0.087 | 0.068 | 0.064 | 0.097 |
| | Lost tracking frame count | 0 | 0 | 0 | 108 | 0 | 0 | 67 | 0 | 0 | 58 | 143 |
| 1000 | Average tracking time (ms) | 29.5 | 28.4 | 28.8 | 24.2 | 27.2 | 25.2 | 27.4 | 25.6 | 24.5 | 23.0 | 24.3 |
| | RMSE (m)/GT scale | 0.046 | 0.036 | 0.095 | 0.060 | 0.038 | 0.063 | 0.058 | 0.056 | 0.052 | 0.066 | 0.123 |
| | Lost tracking frame count | 0 | 0 | 0 | 108 | 0 | 0 | 0 | 0 | 0 | 117 | 121 |
| 1250 | Average tracking time (ms) | 32.3 | 30.9 | 32.2 | 27.9 | 29.5 | 27.7 | 31.1 | 27.8 | 27.5 | 26.5 | 26.7 |
| | RMSE (m)/GT scale | 0.044 | 0.035 | 0.095 | 0.058 | 0.039 | 0.064 | 0.058 | 0.062 | 0.050 | 0.071 | 0.119 |
| | Lost tracking frame count | 0 | 0 | 0 | 108 | 0 | 0 | 0 | 0 | 0 | 52 | 136 |
| 1500 | Average tracking time (ms) | 33.5 | 32.9 | 33.6 | 29.4 | 31.4 | 29.7 | 32.0 | 29.3 | 29.3 | 27.8 | 28.3 |
| | RMSE (m)/GT scale | 0.044 | 0.035 | 0.096 | 0.056 | 0.038 | 0.064 | 0.057 | 0.051 | 0.050 | 0.071 | 0.128 |
| | Lost tracking frame count | 0 | 0 | 0 | 108 | 0 | 0 | 0 | 0 | 0 | 55 | 127 |
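Here is a worked reading of the feature-count table. The sketch takes the rows for 400, 500, and 600 features and counts the sequences tracked without losing any frame. It then averages the tracking time over the sequences that ran. The numbers are copied from the table. Representing `X` as `None` and this particular summary are assumptions of the sketch.

```python
# Rows copied from the feature-count table (order: MH_01_e ... V2_03_d); None marks "X".
TIME_MS = {
    400: [23.2, 22.1, 20.8, 19.9, 22.5, 19.9, 19.7, 20.9, 21.1, 18.0, None],
    500: [23.4, 23.0, 21.5, 20.1, 23.3, 20.5, 21.2, 21.4, 21.6, 19.5, 20.0],
    600: [25.1, 24.6, 23.1, 21.0, 23.6, 21.3, 22.7, 21.9, 22.1, 20.6, 19.2],
}
LOST_FRAMES = {
    400: [16, 0, 0, 110, 62, 0, 67, 261, 26, 64, None],
    500: [0, 0, 0, 109, 0, 0, 67, 0, 0, 59, 250],
    600: [0, 0, 0, 109, 0, 0, 67, 0, 0, 58, 146],
}


def summarize(features):
    """Return (sequences with zero lost frames, mean tracking time in ms over sequences that ran)."""
    zero_loss = sum(1 for n in LOST_FRAMES[features] if n == 0)
    times = [t for t in TIME_MS[features] if t is not None]
    return zero_loss, sum(times) / len(times)


for n in sorted(TIME_MS):
    zero_loss, mean_ms = summarize(n)
    print(f"{n} features: {zero_loss}/11 sequences without lost frames, mean {mean_ms:.2f} ms")
```

The 400-feature mean covers only 10 sequences because V2_03_d failed at that setting. It is therefore not strictly comparable with the 500- and 600-feature means.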

| Dataset | ORB-SLAM (500) Average Tracking Time (ms) | ORB-SLAM (500) RMSE (m)/GT Scale | ORB-SLAM (500) Lost Tracking Frame Count | VIO-SLAM (500) Average Tracking Time (ms) | VIO-SLAM (500) RMSE (m)/GT Scale | VIO-SLAM (500) Scale Error | VIO-SLAM (500) RMSE (m) | VIO-SLAM (500) Lost Tracking Frame Count |
|---|---|---|---|---|---|---|---|---|
| MH_01_easy | 23.4 | 0.042 | 0 | 26.6 | 0.031 | 1.3% | 0.067 | 0 |
| MH_02_easy | 23.0 | 0.041 | 0 | 26.5 | 0.030 | 0.6% | 0.040 | 0 |
| V1_01_easy | 21.5 | 0.094 | 0 | 23.5 | 0.093 | 0.3% | 0.093 | 0 |
| V2_01_easy | 20.1 | 0.057 | 109 | 23.9 | 0.058 | 1.2% | 0.065 | 108 |
| Easy level | 22.0 | 0.058 | 109 | 25.1 | 0.053 | 0.86% | 0.066 | 108 |
| MH_03_medium | 23.3 | 0.046 | 0 | 24.7 | 0.044 | 1.1% | 0.063 | 0 |
| V1_02_medium | 20.5 | 0.059 | 0 | 25.2 | 0.062 | 0.5% | 0.063 | 0 |
| V2_02_medium | 21.2 | 0.058 | 67 | 25.6 | 0.066 | 0.2% | 0.066 | 0 |
| Medium level | 21.7 | 0.055 | 67 | 25.2 | 0.057 | 0.61% | 0.064 | 0 |
| MH_04_difficult | 21.4 | 0.081 | 0 | 23.3 | 0.080 | 0.4% | 0.085 | 0 |
| MH_05_difficult | 21.6 | 0.052 | 0 | 25.0 | 0.065 | 0% | 0.065 | 0 |
| V1_03_difficult | 19.5 | 0.072 | 59 | 20.5 | 0.064 | 0.1% | 0.064 | 0 |
| V2_03_difficult | X | X | X | X | X | X | X | X |
| Difficult level | 20.8 | 0.068 | 59 | 22.9 | 0.070 | 0.18% | 0.071 | 0 |
| Total | 21.6 | 0.060 | 235 | 24.5 | 0.059 | 0.58% | 0.067 | 108 |
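The "RMSE (m)/GT Scale" and "Scale Error" columns compare an estimated trajectory with the EuRoC ground truth once scale is accounted for. The sketch below shows one simple way to compute numbers of this kind, under assumptions that are not taken from the paper. First, the two trajectories are already time-associated and rotationally aligned, so only a translation offset and a single scale factor `s` are fitted by least squares. A full evaluation would also estimate the rotation, for example with Horn's closed-form absolute-orientation method. Second, "scale error" is taken to be |s − 1|.

```python
from math import sqrt


def centroid(points):
    """Mean of a list of 3D points."""
    n = len(points)
    return [sum(p[i] for p in points) / n for i in range(3)]


def align_scale(estimate, ground_truth):
    """Least-squares s, t minimizing sum ||gt - (s * est + t)||^2.

    Assumes time-associated, already rotationally aligned 3D positions
    (a simplifying assumption of this sketch). Setting the derivative with
    respect to t to zero gives t = mu_gt - s * mu_est; substituting back
    gives s = sum(de . dg) / sum(de . de) over centred points.
    """
    mu_e, mu_g = centroid(estimate), centroid(ground_truth)
    num = den = 0.0
    for e, g in zip(estimate, ground_truth):
        de = [e[i] - mu_e[i] for i in range(3)]
        dg = [g[i] - mu_g[i] for i in range(3)]
        num += sum(a * b for a, b in zip(de, dg))
        den += sum(a * a for a in de)
    s = num / den
    t = [mu_g[i] - s * mu_e[i] for i in range(3)]
    return s, t


def rmse(estimate, ground_truth, s=1.0, t=(0.0, 0.0, 0.0)):
    """Root-mean-square position error after mapping est -> s * est + t."""
    sq = 0.0
    for e, g in zip(estimate, ground_truth):
        sq += sum((g[i] - (s * e[i] + t[i])) ** 2 for i in range(3))
    return sqrt(sq / len(estimate))


# Synthetic example: the estimate is at half scale with a 0.1 m offset in x.
gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 1.0, 0.0), (3.0, 1.0, 0.5)]
est = [(x / 2 + 0.1, y / 2, z / 2) for x, y, z in gt]
s, t = align_scale(est, gt)
scale_error = abs(s - 1.0)
print(f"s = {s:.3f}, aligned RMSE = {rmse(est, gt, s, t):.3f} m, scale error = {100 * scale_error:.0f}%")
```

The example is constructed so that alignment recovers s = 2 exactly and the aligned RMSE is zero. On real data, a metric-scale estimate such as VIO would give s close to 1.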

| Optical-Flow-Based Fast Visual Odometry | Mean Time (ms) | Visual–Inertial Odometry | Mean Time (ms) |
|---|---|---|---|
| Obtain keypoints | 0.16 | ORB extraction | 20.85 |
| Advanced KLT tracking | 2.53 | Initial Pose Estimation with IMU | 5.09 |
| Pose Estimation | 6.13 | TrackLocalMap with IMU | 8.02 |
| Total | 8.82 | Total | 33.96 |
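The timing breakdown can be used to estimate how the average per-frame cost falls as more frames go through the optical-flow-based fast VO instead of the full VIO front end. This is a back-of-the-envelope sketch under a simplifying assumption: each path costs exactly its measured mean time, and no other work is added. The table does not give the fraction of fast-VO frames at each level, so the fractions used below are purely illustrative.

```python
# Mean per-frame stage costs (ms) from the timing breakdown table.
FAST_VO_STAGES = {"Obtain keypoints": 0.16, "Advanced KLT tracking": 2.53, "Pose Estimation": 6.13}
VIO_STAGES = {"ORB extraction": 20.85, "Initial Pose Estimation with IMU": 5.09, "TrackLocalMap with IMU": 8.02}

FAST_VO_MS = sum(FAST_VO_STAGES.values())  # about 8.82 ms
VIO_MS = sum(VIO_STAGES.values())          # about 33.96 ms


def mean_frame_cost(fast_fraction):
    """Expected cost per frame when `fast_fraction` of frames use fast VO (simplified model)."""
    if not 0.0 <= fast_fraction <= 1.0:
        raise ValueError("fast_fraction must lie in [0, 1]")
    return fast_fraction * FAST_VO_MS + (1.0 - fast_fraction) * VIO_MS


for f in (0.0, 0.25, 0.5):  # illustrative fractions only
    print(f"fast-VO fraction {f:.2f}: {mean_frame_cost(f):.2f} ms per frame")
```

Under this model, each frame moved from VIO to fast VO saves about 33.96 − 8.82 = 25.14 ms. In this breakdown, ORB extraction alone accounts for most of the VIO cost.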

| Dataset | VIO (500)-L0 Average Tracking Time (ms) | VIO (500)-L0 RMSE (m)/GT Scale | AVIO-L1 (Suitable for All) Average Tracking Time (ms) | AVIO-L1 (Suitable for All) RMSE (m)/GT Scale | AVIO-L2 (Suitable for Easy & Medium) Average Tracking Time (ms) | AVIO-L2 (Suitable for Easy & Medium) RMSE (m)/GT Scale | AVIO-L3 (Suitable for Easy) Average Tracking Time (ms) | AVIO-L3 (Suitable for Easy) RMSE (m)/GT Scale |
|---|---|---|---|---|---|---|---|---|
| MH_01_easy | 26.6 | 0.031 | 21.6 | 0.032 | 19.7 | 0.032 | 19.1 | 0.036 |
| MH_02_easy | 26.5 | 0.030 | 20.2 | 0.034 | 20.0 | 0.031 | 18.6 | 0.036 |
| V1_01_easy | 23.5 | 0.093 | 24.3 | 0.096 | 22.0 | 0.094 | 21.5 | 0.094 |
| V2_01_easy | 23.9 | 0.058 | 20.7 | 0.066 | 21.2 | 0.054 | 20.4 | 0.066 |
| Easy level | 25.1 | 0.053 | 21.7 | 0.057 | 20.7 | 0.053 | 19.9 | 0.058 |
| MH_03_medium | 24.7 | 0.044 | 22.8 | 0.039 | 22.5 | 0.044 | X | X |
| V1_02_medium | 25.2 | 0.062 | 23.3 | 0.059 | 23.2 | 0.058 | X | X |
| V2_02_medium | 25.6 | 0.066 | 24.5 | 0.073 | 23.6 | 0.063 | X | X |
| Medium level | 25.2 | 0.057 | 23.5 | 0.057 | 23.1 | 0.055 | X | X |
| MH_04_difficult | 23.3 | 0.080 | 21.9 | 0.092 | X | X | X | X |
| MH_05_difficult | 25.0 | 0.065 | 24.1 | 0.086 | X | X | X | X |
| V1_03_difficult | 20.5 | 0.064 | 22.9 | 0.068 | X | X | X | X |
| V2_03_difficult | X | X | X | X | X | X | X | X |
| Difficult level | 22.9 | 0.070 | 23.0 | 0.082 | X | X | X | X |
| Total | 24.5 | 0.059 | 22.6 | 0.064 | 21.9 | 0.054 | 19.9 | 0.058 |

| Dataset | VIO (500)-L0 Average Tracking Time (ms) | VIO (500)-L0 RMSE (m)/GT Scale | AVIO-L1 (Suitable for All) Average Tracking Time (ms) | AVIO-L1 (Suitable for All) RMSE (m)/GT Scale | AVIO-L2 (Suitable for Easy & Medium) Average Tracking Time (ms) | AVIO-L2 (Suitable for Easy & Medium) RMSE (m)/GT Scale | AVIO-L3 (Suitable for Easy) Average Tracking Time (ms) | AVIO-L3 (Suitable for Easy) RMSE (m)/GT Scale |
|---|---|---|---|---|---|---|---|---|
| MH_01_easy | 49.6 | 0.033 | 36.0 | 0.032 | 31.3 | 0.036 | 30.4 | 0.032 |
| MH_02_easy | 46.1 | 0.032 | 35.5 | 0.041 | 29.9 | 0.033 | 29.9 | 0.036 |
| V1_01_easy | 51.1 | 0.093 | 46.0 | 0.094 | 39.1 | 0.093 | 37.6 | 0.094 |
| V2_01_easy | 42.5 | 0.060 | 35.3 | 0.055 | 32.5 | 0.062 | 29.5 | 0.062 |
| Easy level | 47.3 | 0.054 | 38.2 | 0.055 | 33.2 | 0.056 | 31.9 | 0.056 |
| MH_03_medium | 46.4 | 0.041 | 39.7 | 0.045 | 34.5 | 0.045 | X | X |
| V1_02_medium | 39.4 | 0.083 | 45.8 | 0.059 | 38.8 | 0.070 | X | X |
| V2_02_medium | 43.3 | 0.085 | 42.3 | 0.077 | 37.3 | 0.070 | X | X |
| Medium level | 43.0 | 0.070 | 42.6 | 0.060 | 36.9 | 0.062 | X | X |
| MH_04_difficult | 37.2 | 0.080 | 37.4 | 0.061 | X | X | X | X |
| MH_05_difficult | 40.4 | 0.089 | 38.5 | 0.118 | X | X | X | X |
| V1_03_difficult | X | X | X | X | X | X | X | X |
| V2_03_difficult | X | X | X | X | X | X | X | X |
| Difficult level | 38.8 | 0.085 | 38.0 | 0.090 | X | X | X | X |
| Total | 44.0 | 0.066 | 39.6 | 0.065 | 34.8 | 0.059 | 31.9 | 0.056 |
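Level 3 is evaluated only on the easy sequences, so the "Total" rows of the two level tables average over different subsets of sequences. A fairer quick comparison uses the "Easy level" rows, where all four levels ran. The sketch below computes each level's reduction in average tracking time relative to Level 0 for both tables. The numbers are copied from the tables; the percentage metric is simply an illustration.

```python
# "Easy level" average tracking times (ms) for Levels 0-3, copied from the two level tables.
EASY_TIMES_MS = {
    "first level table": [25.1, 21.7, 20.7, 19.9],
    "second level table": [47.3, 38.2, 33.2, 31.9],
}


def reduction_vs_level0(times):
    """Percentage reduction of each level's tracking time relative to Level 0."""
    base = times[0]
    return [100.0 * (base - t) / base for t in times]


for name, times in EASY_TIMES_MS.items():
    parts = ", ".join(f"L{lvl}: {p:.1f}%" for lvl, p in enumerate(reduction_vs_level0(times)))
    print(f"{name}: {parts}")
```

The same comparison could be repeated on the "Medium level" rows for Levels 0 to 2. Levels are compared only on rows where each of them ran.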

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Piao, J.-C.; Kim, S.-D. Adaptive Monocular Visual–Inertial SLAM for Real-Time Augmented Reality Applications in Mobile Devices. Sensors 2017, 17, 2567. https://doi.org/10.3390/s17112567
