Recognition and Matching of Clustered Mature Litchi Fruits Using Binocular Charge-Coupled Device (CCD) Color Cameras

Abstract

1. Introduction

2. Materials and Methods

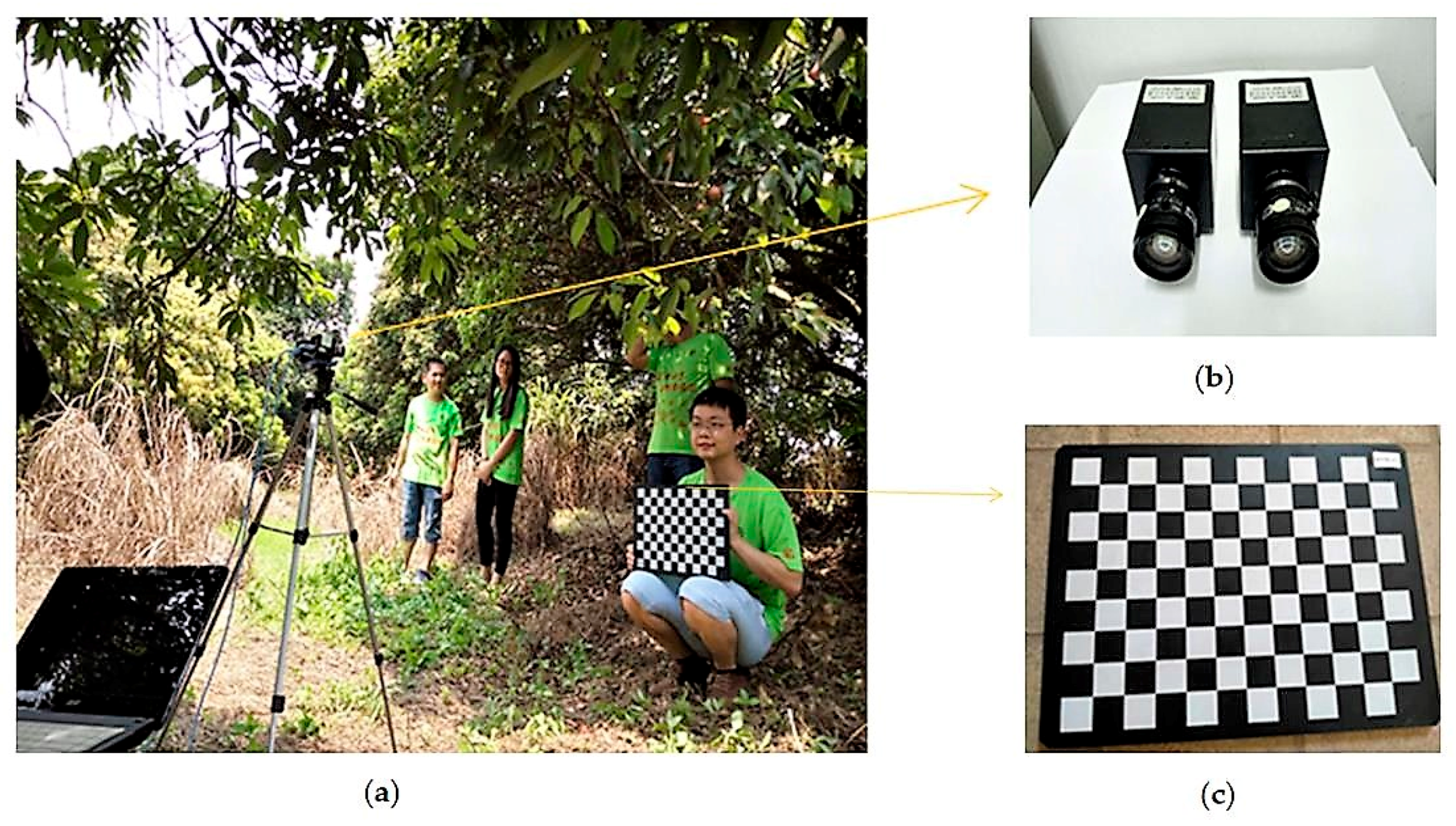

2.1. Calibration of Cameras and Image Acquisition

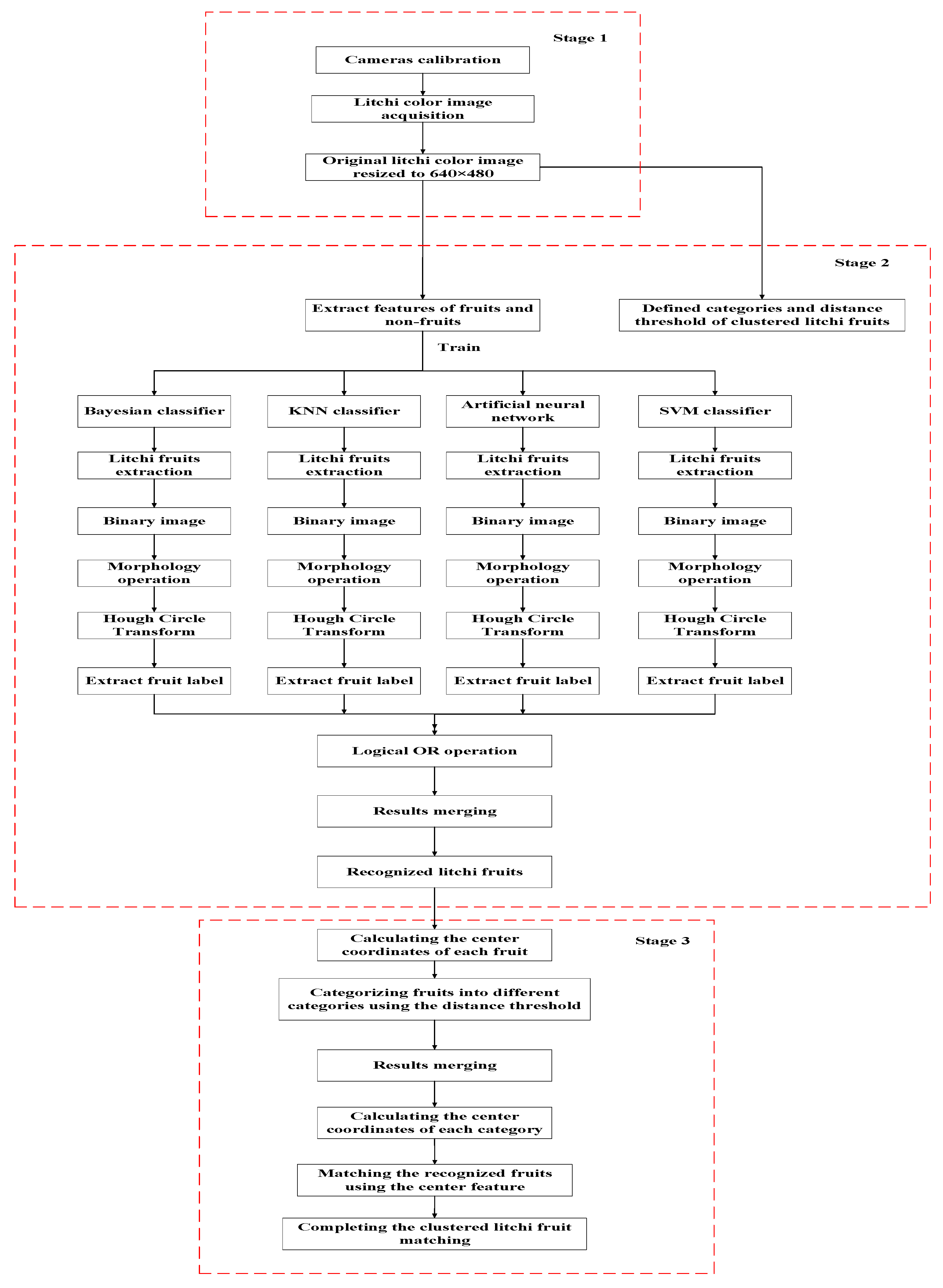

2.2. Algorithm Description

2.3. Category Definition of Clustered Litchi Fruit

- Single litchi fruit (category A): If the Euclidean distance between the geometric center of a litchi fruit and the geometric center of every other litchi fruit is greater than the average diameter of a single litchi fruit (i.e., 40 pixels), the fruit is defined as a single litchi fruit, as in region A;

- Two clustered litchi fruits (category B): If the Euclidean distance between the geometric centers of exactly two litchi fruits is smaller than the average diameter of a single litchi fruit (i.e., 40 pixels), the two fruits are defined as two clustered litchi fruits, as in region B;

- Multiple clustered litchi fruits (category C): If the Euclidean distances between the geometric centers of more than two litchi fruits are smaller than the average diameter of a single litchi fruit (i.e., 40 pixels), the fruits are defined as multiple clustered litchi fruits, as in region C.
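The three category definitions can be illustrated with a minimal Python sketch. It assumes that fruits are grouped transitively (two fruits belong to the same cluster if a chain of center distances below the threshold links them) and that a distance exactly equal to the threshold does not link two fruits; neither detail is stated in the definitions. The function name `categorize_fruits` and the input format are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def categorize_fruits(centers: List[Point], threshold: float = 40.0) -> List[str]:
    """Return 'A' (single), 'B' (two clustered) or 'C' (multiple clustered)
    for every fruit center, using the 40-pixel average diameter as threshold."""
    n = len(centers)
    parent = list(range(n))  # union-find forest over fruit indices

    def find(i: int) -> int:
        # Follow parent links to the group representative (with path halving).
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # Link every pair of fruits whose centers are closer than the threshold.
    # Assumption: a distance equal to the threshold does not link two fruits.
    for i in range(n):
        for j in range(i + 1, n):
            if math.dist(centers[i], centers[j]) < threshold:
                parent[find(i)] = find(j)

    # Count how many fruits fall into each linked group.
    sizes: Dict[int, int] = {}
    for i in range(n):
        root = find(i)
        sizes[root] = sizes.get(root, 0) + 1

    def label(size: int) -> str:
        if size == 1:
            return "A"
        return "B" if size == 2 else "C"

    return [label(sizes[find(i)]) for i in range(n)]
```

Calling `categorize_fruits` with one isolated center, one pair of nearby centers and one group of three nearby centers yields one label per fruit, in input order.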

2.4. Recognition Algorithm of Category of Clustered Litchi Fruit

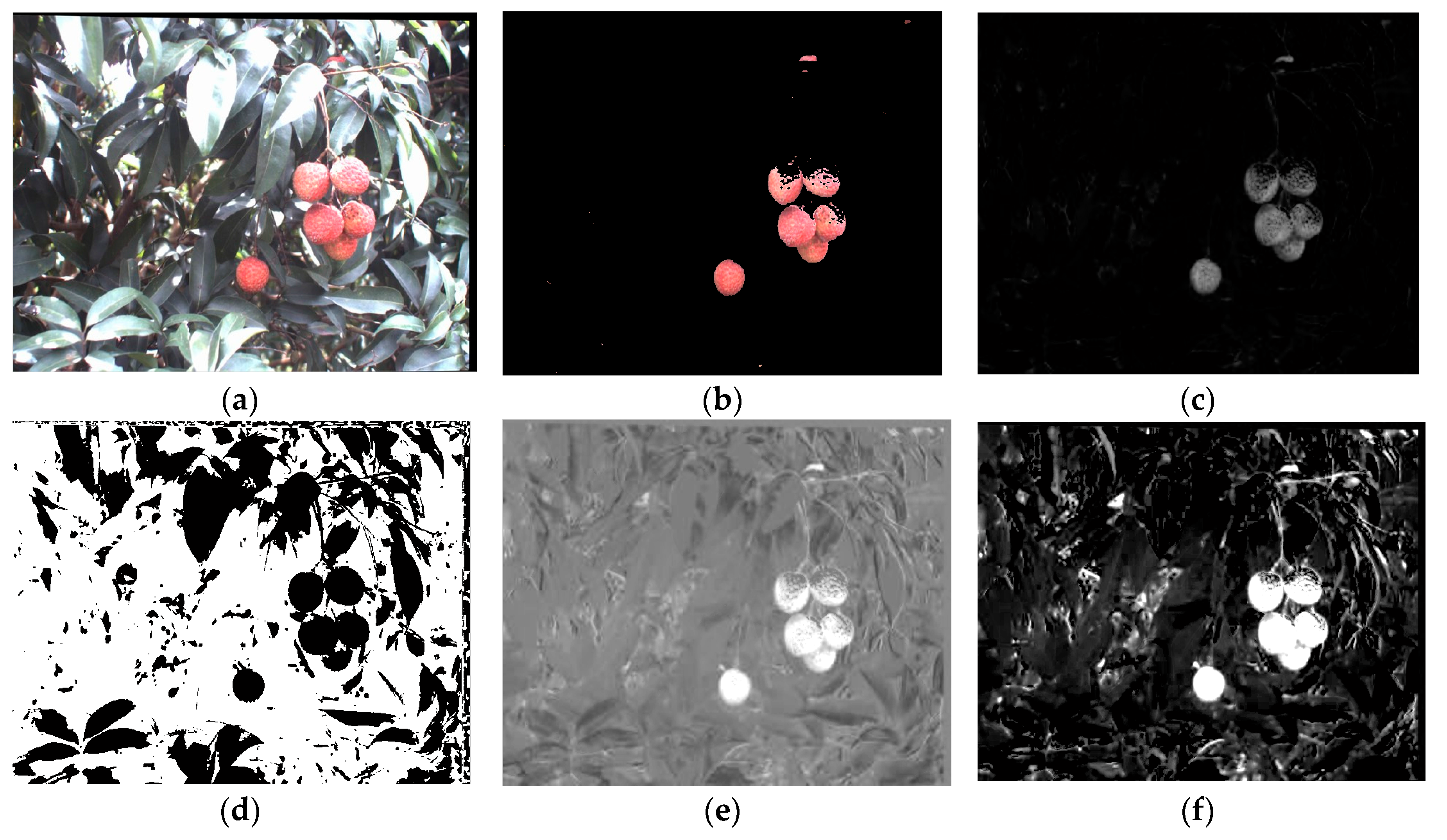

2.4.1. Analysis and Extraction of Features of Mature Litchi Fruit and Non-Fruit

2.4.2. Construction and Training of Four Kinds of Classifiers

- The naive Bayes classifier is a simple probabilistic classifier that applies Bayes' theorem under strong independence assumptions and predicts the probability that a given sample belongs to a certain category. The training and classification processes using the naive Bayes classifier for mature litchi fruit recognition are as follows. A 40 × 40 sub-window was slid over the image to search for fruit, and the posterior probability given by Equation (3) [32] was used to judge whether the sub-window was a fruit region. The prior probability of each class was obtained from the statistics of the training samples, and the class-conditional probability was obtained by calculating the probability of the sub-window features in the training samples. The evidence term was obtained from the total probability. If the decision condition on the posterior probability was satisfied, the sub-window was classified as a fruit region; otherwise, it was classified as a non-fruit region.

- KNN measures the degree of similarity between a test document and k training data and stores a certain amount of classification data, thereby determining the category of the test document. It is an instance-based learning algorithm that categorizes objects according to the closest samples in the feature space of the training set. The training and classification processes using the KNN classifier for mature litchi fruit recognition are as follows. The testing sample was described by a feature vector whose components represented the values of the four effective color components and the six primary visual features of the testing sample, respectively. The cosine similarity given by Equation (4) [33] was used to measure the similarity between the testing sample and each training sample. All of the distances between the testing sample and the training samples were calculated using Equation (4) and sorted in ascending order. Then, the first k samples were taken, and the percentages of the two classes (fruit and non-fruit) among them were calculated. Lastly, the testing sample was assigned to the class with the larger percentage.

- An artificial neural network is a first-order description of the characteristics of the human brain system: a mathematical model consisting of many simple parallel processing units. In this study, a BP neural network was selected as the artificial neural network classifier. The training and classification processes using the BP neural network for mature litchi fruit recognition are as follows. A three-layer BP neural network was applied, and the 3 × 3 neighboring pixels with their features were selected as the input neurons. A transfer function was defined for the input and output of the neurons. The number of neurons in the intermediate layer was determined using Equation (5) [34], which depends on the number of input neurons, the number of output neurons and an integer constant between 1 and 10. In this study, the number of output neurons was set to 1; its value was 2, 1 or 0, indicating whether the tested point belonged to mature litchi fruit or not. Therefore, the number of intermediate-layer neurons could be any integer between 5 and 14; a value of 7 was chosen to segment mature litchi fruit in this study.

- SVM is a discriminative classification method that is commonly recognized as being among the more accurate. It is based on the structural risk minimization principle from computational learning theory. The training and classification processes using the SVM for mature litchi fruit recognition are as follows. Each training sample consisted of a feature vector, which represented the values of the effective color and texture components of fruit and non-fruit, and a class label satisfying Equation (6) [35], in which the two label values indicated the fruit region and the non-fruit region, respectively. The linear optimal discriminant function is given by Equation (7), whose parameters were the global optimal solutions obtained by using the linear discriminant function of Equation (8) to minimize Equation (9). The testing feature vectors were substituted into Equation (7); according to the resulting value, each testing vector was assigned either to the fruit region or to the non-fruit region.
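The KNN procedure described above (cosine similarity, ascending sort, first k samples, larger class percentage) can be sketched in Python as follows. This is only an illustration under stated assumptions: the distance is taken as one minus the cosine similarity so that an ascending sort puts the most similar samples first, and the function names, the label strings and the default `k = 3` are hypothetical rather than taken from the study.

```python
import math
from collections import Counter
from typing import List, Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # convention chosen here for all-zero vectors
    return dot / (norm_u * norm_v)


def knn_classify(test_vec: Sequence[float],
                 train_vecs: List[Sequence[float]],
                 train_labels: List[str],
                 k: int = 3) -> str:
    """Assign the test vector to the class holding the larger share
    of its k nearest training samples."""
    # Assumption: distance = 1 - cosine similarity, so smaller means more similar.
    distances = [
        (1.0 - cosine_similarity(test_vec, vec), label)
        for vec, label in zip(train_vecs, train_labels)
    ]
    distances.sort(key=lambda pair: pair[0])  # ascending order
    nearest_labels = [label for _, label in distances[:k]]
    # The class with the larger percentage among the first k samples wins.
    return Counter(nearest_labels).most_common(1)[0][0]
```

In the setting of this section, each vector would hold the four color components and six visual features of a sample; an odd k avoids ties between the two classes.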

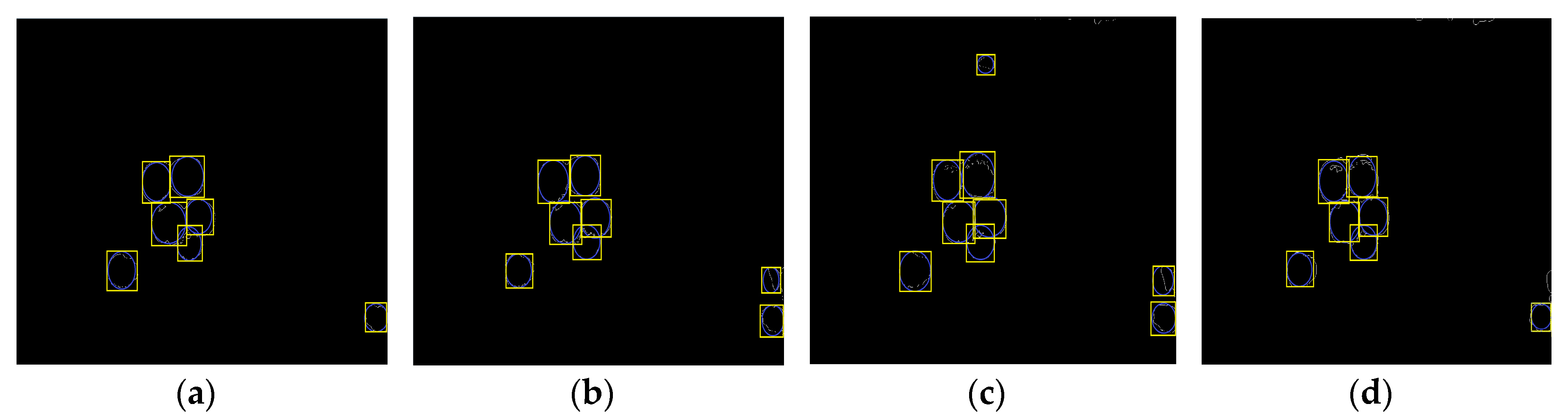

2.4.3. Litchi Fruit Recognition Based on the Combination of Four Kinds of Classifiers

2.4.4. Categories Recognition of Clustered Litchi Fruit Based on the Pixel Threshold Method

- If the Euclidean distance between the center of one circle and the center of every other circle was greater than the threshold, the litchi fruit represented by the circle was categorized as a single litchi fruit. The coordinates of its circle center and the four vertices of its label were left unchanged.

- If the Euclidean distance between the centers of exactly two circles was smaller than the threshold, the litchi fruits represented by the two circles were categorized as two clustered litchi fruits. The coordinates of their circle centers were deleted, and the labels were combined into one large label whose four vertices were given by the minimum and maximum abscissas and the minimum and maximum ordinates of the two labels.

- If the Euclidean distances between the centers of more than two circles were smaller than the threshold (40 pixels), the litchi fruits represented by the circles were categorized as multiple clustered litchi fruits. The coordinates of their circle centers were deleted, and the labels were combined into one large label whose four vertices were given by the minimum and maximum abscissas and the minimum and maximum ordinates of all the labels.
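The label-merging rules above can be sketched in Python as follows. The sketch assumes that each label is an axis-aligned box stored as `(x_min, y_min, x_max, y_max)` and that circles are grouped transitively whenever their centers are closer than the threshold; the function name `merge_labels` and the dictionary layout of the result are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def merge_labels(centers: List[Point], boxes: List[Box],
                 threshold: float = 40.0) -> List[Dict]:
    """Group detected circles by center distance and merge their labels."""
    n = len(centers)
    visited = [False] * n
    merged: List[Dict] = []
    for start in range(n):
        if visited[start]:
            continue
        # Collect every circle reachable through below-threshold distances.
        visited[start] = True
        group = [start]
        stack = [start]
        while stack:
            i = stack.pop()
            for j in range(n):
                if not visited[j] and math.dist(centers[i], centers[j]) < threshold:
                    visited[j] = True
                    group.append(j)
                    stack.append(j)
        if len(group) == 1:
            # Single fruit: center and label stay unchanged.
            merged.append({"category": "single",
                           "center": centers[start],
                           "box": boxes[start]})
        else:
            # Clustered fruits: centers are dropped, labels become one large label
            # spanning the extreme abscissas and ordinates of the group.
            box = (min(boxes[i][0] for i in group),
                   min(boxes[i][1] for i in group),
                   max(boxes[i][2] for i in group),
                   max(boxes[i][3] for i in group))
            category = "two clustered" if len(group) == 2 else "multiple clustered"
            merged.append({"category": category, "center": None, "box": box})
    return merged
```

Storing `None` for the center of a merged group mirrors the deletion of the circle centers; the combined box is the only geometry kept for a cluster.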

2.5. Matching Method for the Recognized Categories of Clustered Litchi Fruit

2.5.1. Geometric Center-Based Clustered Litchi Fruit Matching

2.5.2. Implementation of Clustered Litchi Fruit Matching Algorithm

3. Results

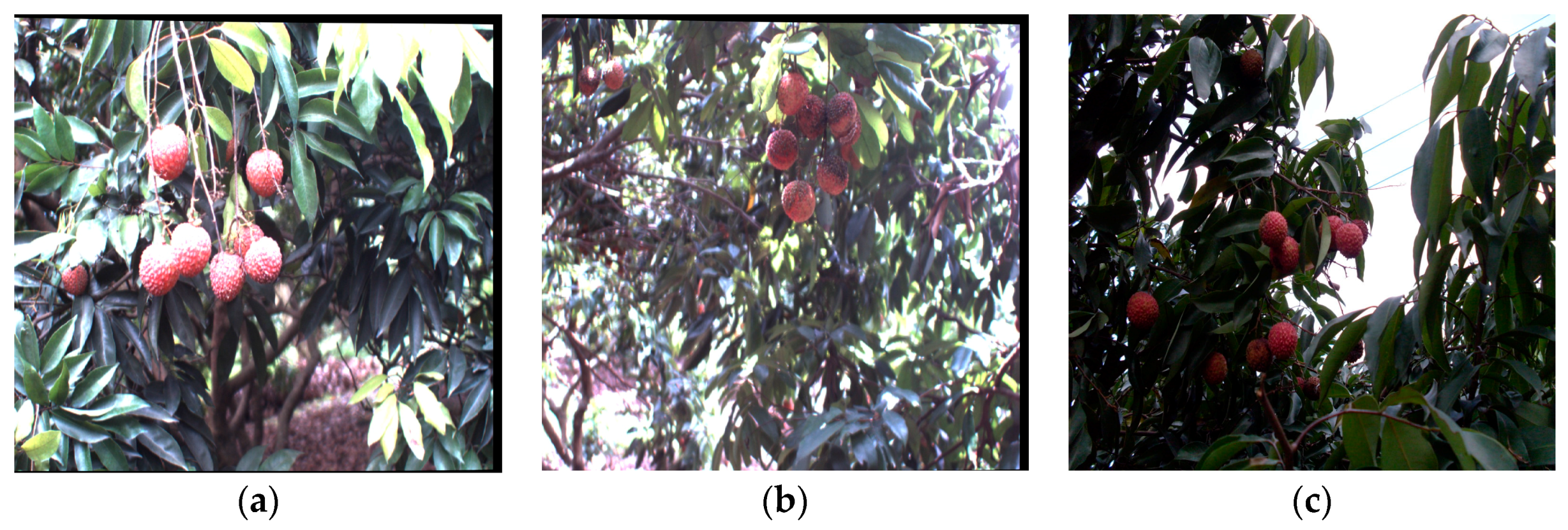

3.1. Recognition of Clustered Litchi Fruit under Natural Environment Conditions

- Missed single litchi fruit: If the recognized fruit amounted to less than 25% of the actual fruit in the single litchi fruit category, the fruit was counted as a missed single litchi fruit;

- Missed two clustered litchi fruits: If one or two recognized fruits each amounted to less than 25% of the actual fruits in the two clustered litchi fruits category, the cluster was counted as missed two clustered litchi fruits;

- Missed multiple clustered litchi fruits: If two or more recognized fruits each amounted to less than 25% of the actual fruits in the multiple clustered litchi fruits category, the cluster was counted as missed multiple clustered litchi fruits.

3.2. Performance of Clustered Litchi Fruit Matching Algorithm

3.3. Real Time Performance of the Proposed Algorithm

4. Discussion

5. Conclusions and Future Work

- (1) The proposed litchi recognition method, which combines the results of four different classifiers, achieved better recognition results than a single classifier.

- (2) The proposed method recognized and matched clustered litchi fruits instead of single litchi fruits, which weakened the effects of varying illumination and occlusion on fruit recognition and improved the recognition and matching accuracy.

- (3) The recognition method automatically separated clustered litchi fruits from the background, and the classification accuracy reached 94.17% under sunny back-lighting and partial occlusion conditions, 91.07% under sunny front-lighting and partial occlusion conditions, and 92.82% under natural environment conditions.

- (4) The highest and lowest matching success rates of the proposed method for clustered litchi fruits were 97.37% under sunny back-lighting and non-occlusion conditions and 91.96% under sunny front-lighting and partial occlusion conditions, respectively, both of which were superior to those of single litchi matching using CHT.

- (5) The interactive performance of the proposed algorithm was investigated, and the average time consumed from the extraction of clustered litchi fruit to fruit localization was 2536 ms, which can meet the requirements of litchi harvesting robots.

Acknowledgments

Author Contributions

Conflicts of Interest

Nomenclature

| Abbreviation | Meaning |
|---|---|
| NIR | near-infrared |
| RGB | red, green and blue |
| CCD | charge-coupled device |
| HSI | hue, saturation and intensity |
| YIQ | luminance, in-phase and quadrature-phase |
| YCbCr | luminance, blue chrominance and red chrominance |
| HSV | hue, saturation and value |
| BP | Back Propagation |
| KNN | k-Nearest Neighbor |
| La*b* | luminosity, the range from magenta to green and the range from yellow to blue |
| SVM | Support Vector Machine |
| RBF | Radial Basis Function |
| PC | personal computer |
| CHT | Circle Hough Transform |
| NCC | normalized cross-correlation |

References

1. Overview of Litchi Industry in the World. Available online: http://ir.tari.gov.tw:8080/bitstream/345210000/5854/2/no171-2.pdf (accessed on 14 July 2017).

2. Baeten, J.; Donné, K.; Boedrij, S.; Beckers, W.; Claesen, E. Autonomous fruit picking machine: A robotic apple harvester. In Field and Service Robotics; Springer: Berlin, Germany, 2008; pp. 531–539.

3. Ceres, R.; Pons, J.; Jimenez, A.; Martin, J.; Calderon, L. Design and implementation of an aided fruit-harvesting robot (Agribot). Ind. Robot 1998, 25, 337–346.

4. Yichich, C.; Suming, C.; Jiafeng, L. Study of an autonomous fruit picking robot system in greenhouses. Eng. Agric. Environ. Food 2013, 6, 92–98.

5. Kondo, N.; Monta, M.; Fujiura, T. Fruit harvesting robots in Japan. Adv. Space Res. 1996, 18, 181–184.

6. Yamamoto, S.; Hayashi, S.; Saito, S.; Ochiai, Y.; Yamashita, T.; Sugano, S. Development of robotic strawberry harvester to approach target fruit from hanging bench side. IFAC Proc. Vol. 2010, 43, 95–100.

7. Yasukawa, S.; Li, B.; Sonoda, T.; Ishii, K. Development of a Tomato Harvesting Robot. In Proceedings of the 2017 International Conference on Artificial Life and Robotics, Miyazaki, Japan, 19–22 January 2017; pp. 408–411.

8. Bulanon, D.M.; Burks, T.F.; Alchanatis, V. Study on temporal variation in citrus canopy using thermal imaging for citrus fruit detection. Biosyst. Eng. 2008, 101, 161–171.

9. Wachs, J.; Stern, H.I.; Burks, T.; Alchanatis, V.; Betdagan, I. Apple detection in natural tree canopies from multimodal images. In Proceedings of the 7th European Conference on Precision Agriculture, Wageningen, The Netherlands, 6–8 July 2009; pp. 293–302.

10. Kane, K.E.; Lee, W.S. Multispectral imaging for in-field green citrus identification. In Proceedings of the ASABE Annual International Meeting, Minneapolis, Minnesota, 17–20 June 2007; pp. 1–11.

11. Safren, O.; Alchanatis, V.; Ostrovsky, V.; Levi, O. Detection of green apples in hyperspectral images of apple-tree foliage using machine vision. Trans. ASABE 2007, 50, 2303–2313.

12. Rajendra, P.; Kondo, N.; Ninomiya, K.; Kamata, J.; Kurita, M.; Shiigi, T.; Hayashi, S.; Yoshida, H.; Kohno, Y. Machine Vision Algorithm for Robots to Harvest Strawberries in Tabletop Culture Greenhouses. Eng. Agric. Environ. Food 2009, 2, 24–30.

13. Bulanon, D.M.; Kataoka, T.; Ota, Y.; Hiroma, T. AE—Automation and emerging technologies: A segmentation algorithm for the automatic recognition of Fuji apples at harvest. Biosyst. Eng. 2002, 83, 405–412.

14. Arefi, A.; Motlagh, A.M.; Mollazade, K.; Teimourlou, R.F. Recognition and localization of ripen tomato based on machine vision. Aust. J. Crop Sci. 2011, 5, 1144–1150.

15. Akin, C.; Kirci, M.; Gunes, E.O.; Cakir, Y. Detection of the pomegranate fruits on tree using image processing. In Proceedings of the 2012 First International Conference on Agro-Geoinformatics, Shanghai, China, 2–4 August 2012; pp. 1–4.

16. Payne, A.B.; Walsh, K.B.; Subedi, P.; Jarvis, D. Estimation of mango crop yield using image analysis segmentation method. Comput. Electron. Agric. 2013, 91, 57–64.

17. Fu, L.S.; Wang, B.; Cui, Y.J.; Su, S.; Gejima, Y.; Kobayashi, T. Kiwifruit recognition at nighttime using artificial lighting based on machine vision. Int. J. Agric. Biol. Eng. 2015, 8, 52–59.

18. Luo, L.; Zou, X.; Yang, Z.; Li, G.; Song, X.; Zhang, C. Grape image fast segmentation based on improved artificial bee colony and fuzzy clustering. Trans. CSAM 2015, 46, 23–28.

19. Xiong, J.; Zou, X.; Chen, L.; Guo, A. Recognition of mature litchi in natural environment based on machine vision. Trans. CSAM 2011, 42, 162–166.

20. Guo, A.; Zou, X.; Zhu, M.; Chen, Y.; Xiong, J.; Chen, L. Color feature analysis and recognition for litchi fruits and their main fruit bearing branch based on exploratory analysis. Trans. CSAM 2013, 29, 191–198.

21. Wang, C.; Zou, X.; Tang, Y.; Luo, L.; Feng, W. Localisation of litchi in an unstructured environment using binocular stereo vision. Biosyst. Eng. 2016, 145, 39–51.

22. Luo, L.; Tang, Y.; Zou, X.; Wang, C.; Zhang, P.; Feng, W. Robust Grape Cluster Detection in a Vineyard by Combining the AdaBoost Framework and Multiple Color Components. Sensors 2016, 16, 2098.

23. Chinchuluun, R.; Lee, W.S.; Burks, T.F. Machine vision-based citrus yield mapping system. In Proceedings of the 119th Annual Meeting of the Florida State Horticultural Society, Tampa, FL, USA, 4–6 June 2006; pp. 142–147.

24. Seng, W.C.; Mirisaee, S.H. A new method for fruits recognition system. In Proceedings of the 2009 International Conference on Electrical Engineering and Informatics, Selangor, Malaysia, 5–7 August 2009; pp. 130–134.

25. Wachs, J.P.; Stern, H.I.; Burks, T.; Alchanatis, V. Low and high-level visual feature-based apple detection from multi-modal images. Precis. Agric. 2010, 11, 717–735.

26. Qiang, L.; Jianrong, C.; Bin, L.; Lie, D.; Yajing, Z. Identification of fruit and branch in natural scenes for citrus harvesting robot using machine vision and support vector machine. Int. J. Agric. Biol. Eng. 2014, 7, 115–121.

27. Jian, L.; Shujuan, C.; Chengyan, Z.; Haifeng, C. Research on localization of apples based on binocular stereo vision marked by cancroids matching. In Proceedings of the 2012 Third International Conference on Digital Manufacturing and Automation, Guilin, China, 31 July–2 August 2012; pp. 683–686.

28. Si, Y.; Liu, G.; Feng, J. Location of apples in trees using stereoscopic vision. Comput. Electron. Agric. 2015, 112, 68–74.

29. Zou, X.; Zou, H.; Lu, J. Virtual manipulator-based binocular stereo vision positioning system and errors modeling. Mach. Vis. Appl. 2012, 23, 43–63.

30. Tamura, H.; Mori, S.; Yamawaki, T. Texture features corresponding to visual perception. IEEE Trans. Syst. Man Cybern. 1978, 8, 460–473.

31. Khan, A.; Baharudin, B.; Lee, L.H.; Khan, K. A review of machine learning algorithms for text-documents classification. J. Adv. Inf. Technol. 2010, 1, 4–20.

32. Domingos, P.; Pazzani, M. On the optimality of the simple Bayesian classifier under zero-one loss. Mach. Learn. 1997, 29, 103–130.

33. Wang, L.; Zhao, X. Improved knn classification algorithm research in text categorization. In Proceedings of the 2nd International Conference on Communications and Networks, Yichang, China, 21–23 April 2012; pp. 1848–1852.

34. Yu, X.L.; Qian, G.H.; Zhou, J.L.; Jia, X.G. Learning sample selection in multi-spectral remote sensing image classification using BP neural network. J. Infrared Millim. Waves 1999, 6, 449–454.

35. Reyna, R.A.; Hernandez, N.; Esteve, D.; Cattoen, M. Segmenting images with support vector machines. In Proceedings of the IEEE International Conference on Image Processing (ICIP 2000), Vancouver, BC, Canada, 10–13 September 2000; pp. 120–126.

36. Van Henten, E.J.; Van Tuijl, B.A.J.; Hemming, J.; Kornet, J.G.; Bontsema, J.; Van Os, E.A. Field test of an autonomous cucumber picking robot. Biosyst. Eng. 2003, 86, 305–313.

| Illumination Conditions | Litchi Clusters | True Positives (Amount) | True Positives Rate (%) | False Positives (Amount) | False Positives Rate (%) | False Negatives (Amount) | False Negatives Rate (%) | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| SFP | 112 | 102 | 91.07 | 12 | 10.53 | 10 | 8.93 | 89.47 | 91.07 | 90.26 |
| SFN | 25 | 23 | 92.00 | 9 | 28.13 | 2 | 8.00 | 71.86 | 92.00 | 80.69 |
| SBP | 103 | 97 | 94.17 | 9 | 8.49 | 6 | 5.83 | 91.51 | 94.17 | 92.82 |
| SBN | 38 | 35 | 92.11 | 5 | 12.50 | 3 | 7.89 | 87.50 | 92.11 | 89.75 |
| CP | 109 | 102 | 93.58 | 11 | 9.73 | 7 | 6.42 | 90.27 | 93.58 | 91.90 |
| CN | 45 | 42 | 93.33 | 3 | 6.67 | 3 | 6.67 | 93.33 | 93.33 | 93.33 |
| Total | 432 | 401 | 92.82 | 49 | 10.89 | 31 | 7.18 | 89.11 | 92.82 | 90.93 |
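The rates in the recognition table follow from the raw counts. The Python sketch below shows the arithmetic under the assumption, consistent with the tabulated values, that the false positives rate is computed relative to all recognized clusters (true positives plus false positives) and that the true positives and false negatives rates are computed relative to the actual clusters; the function name `recognition_metrics` is hypothetical.

```python
from typing import Dict


def recognition_metrics(true_pos: int, false_pos: int, false_neg: int) -> Dict[str, float]:
    """Compute the percentage metrics of the recognition table from raw counts."""
    actual = true_pos + false_neg        # clusters actually present
    recognized = true_pos + false_pos    # clusters reported by the method
    precision = true_pos / recognized
    recall = true_pos / actual
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "true_positives_rate": 100 * true_pos / actual,
        "false_positives_rate": 100 * false_pos / recognized,
        "false_negatives_rate": 100 * false_neg / actual,
        "precision": 100 * precision,
        "recall": 100 * recall,
        "f1": 100 * f1,
    }


# Counts from the "Total" row: 401 true positives, 49 false positives, 31 false negatives.
total = recognition_metrics(401, 49, 31)
```

Rounded to two decimals, the values in `total` match the Total row of the table. Individual rows can differ in the last digit, because F1 may have been computed there from precision and recall values that were already rounded.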

| Illumination Conditions | Pairs of Litchis | CHT Correct Matches (Amount) | CHT Correct Matching Rate (%) | Pairs of Litchi Clusters | Proposed Method Correct Matches (Amount) | Proposed Method Correct Matching Rate (%) |
|---|---|---|---|---|---|---|
| SFP | 392 | 310 | 79.08 | 112 | 103 | 91.96 |
| SFN | 88 | 71 | 80.68 | 25 | 24 | 96.00 |
| SBP | 360 | 293 | 81.39 | 103 | 98 | 95.15 |
| SBN | 135 | 112 | 82.96 | 38 | 37 | 97.37 |
| CP | 381 | 312 | 81.89 | 109 | 101 | 92.66 |
| CN | 157 | 113 | 71.97 | 45 | 42 | 93.33 |
| Total | 1513 | 1211 | 80.04 | 432 | 405 | 93.75 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wang, C.; Tang, Y.; Zou, X.; Luo, L.; Chen, X. Recognition and Matching of Clustered Mature Litchi Fruits Using Binocular Charge-Coupled Device (CCD) Color Cameras. Sensors 2017, 17, 2564. https://doi.org/10.3390/s17112564