3.1.1. Sub-Pixel Centroid Extraction Based on Compression Processing

To meet the high accuracy and speed required for measuring aviation parts in the industrial field, the laser stripe center must be extracted accurately and in as little time as possible. An extraction method for laser stripes that combines high accuracy with low computational cost is therefore essential for measuring aviation parts.

Image compression technology can remove the interference information in the original image and retain the structural feature of the measured part [

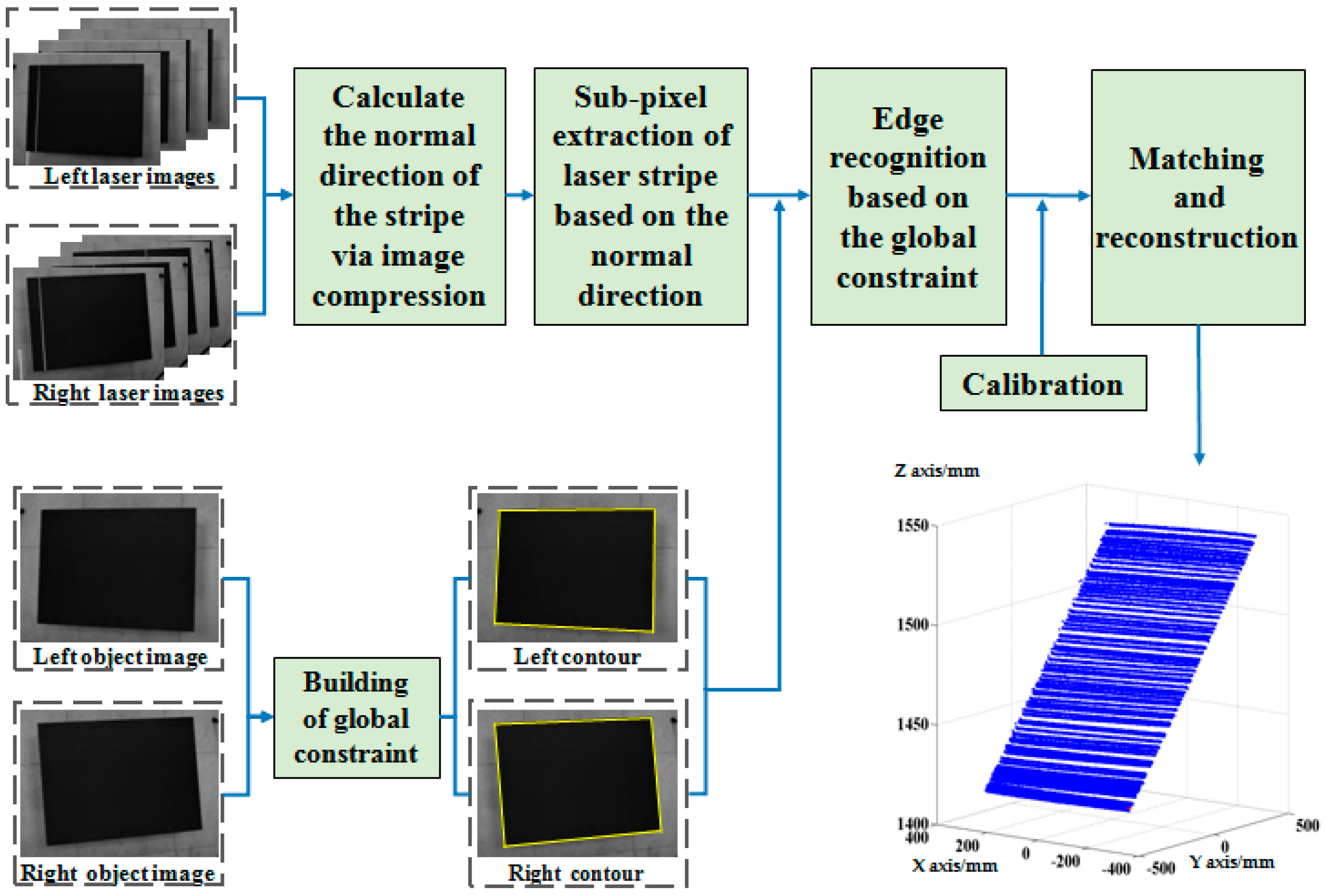

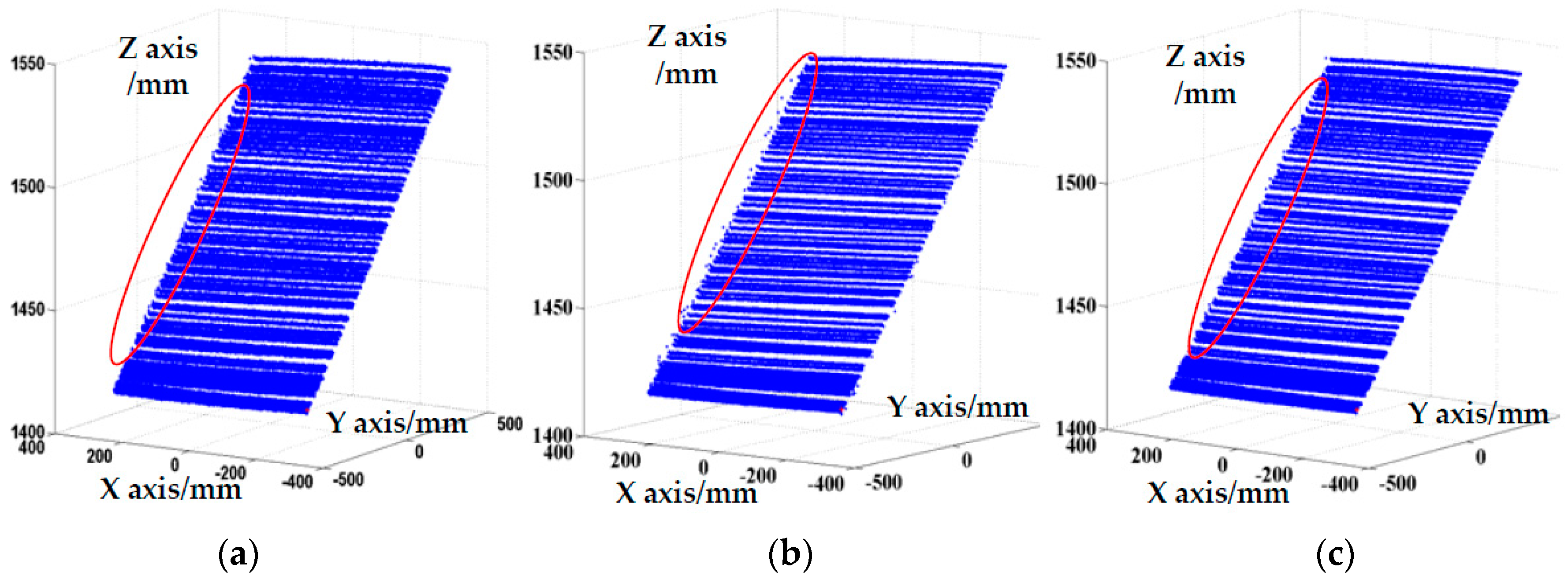

19]. Based on the compression processing technology, a sub-pixel centroid extraction method based on compress processing is proposed in this paper. The proposed method improves extraction accuracy in the industrial field and reduces the time taken to compute the normal direction. In the proposed method, the normal direction of the stripes is calculated using the low-resolution image compressed from the original image based on image compression technology to minimize the computation time. Combined with the stability of the normal direction and the presented judgment criterion of the normal center, the sub-pixel center of the laser stripe is extracted rapidly and precisely. The principle is illustrated in

Figure 3.

The images captured by the binocular camera are compressed into low-resolution images according to a compression factor. Each local block of pixels in the original image is compressed to a single pixel whose value is the average grayscale of that block. Because the image resolution is even, the compression factor is also chosen to be an even number to simplify the calculation.

Given the grayscale matrix of the original image, indexed by row and column, the grayscale matrix of the image compressed with this factor is obtained by multiplying the original grayscale matrix by two compression matrices of appropriate scale, one acting on the rows and one acting on the columns. For clarity, symbols carrying the compressed-image superscript in this paper denote information from the compressed image.
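The block-averaging compression can be written as a product of the grayscale matrix with two averaging matrices. The Python sketch below shows one way to build them; it assumes NumPy, image dimensions divisible by the compression factor `k`, and hypothetical function names (`compression_matrices`, `compress`). It illustrates the idea rather than reproducing the paper's exact equations.

```python
import numpy as np

def compression_matrices(n_rows: int, n_cols: int, k: int):
    """Left (n_rows/k x n_rows) and right (n_cols x n_cols/k) block-averaging matrices."""
    if n_rows % k or n_cols % k:
        raise ValueError("image size must be divisible by the compression factor k")
    left = np.kron(np.eye(n_rows // k), np.full((1, k), 1.0 / k))    # each output row averages k input rows
    right = np.kron(np.eye(n_cols // k), np.full((k, 1), 1.0 / k))   # each output column averages k input columns
    return left, right

def compress(gray: np.ndarray, k: int) -> np.ndarray:
    """Replace every k x k block of the grayscale matrix by its mean value."""
    left, right = compression_matrices(gray.shape[0], gray.shape[1], k)
    return left @ gray.astype(float) @ right
```

For `np.arange(16, dtype=float).reshape(4, 4)` and `k = 2`, `compress` returns `[[2.5, 4.5], [10.5, 12.5]]`, the means of the four 2 × 2 blocks. The same result can be obtained with `gray.reshape(R, k, C, k).mean(axis=(1, 3))` (with `R`, `C` the compressed sizes); the matrix form simply mirrors the description above.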

The compressed image is shown in Figure 3. Because the structural features of the laser stripes are largely preserved after compression, the normal vector of the stripe can be computed from the compressed image to reduce the calculation time. First, the stripe centers of the low-resolution image are preliminarily extracted using the gray centroid method; these initial center values, given by their row and column values in the compressed image, are used to compute the normal vector of the laser stripe.

The normal vectors of the laser stripe are calculated by fitting the extracted initial center points. Because the curvature varies little within a small region, polynomial fitting is used to fit the curve of the initial centers. To calculate the normal vector of the stripe center on a given row, the center on that row and the centers on a fixed number of rows before and after it are selected; the number of selected points should be greater than four to ensure fitting accuracy. From this set of selected points, the curve of stripe centers is fitted as a polynomial in the row and column variables, with its order bounded by a chosen maximal polynomial order. The normal vector of the stripe at the center point is then given by the partial derivatives of the fitted curve function with respect to the column and row variables, respectively.
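A minimal sketch of the normal-vector step on the compressed image follows. It assumes NumPy, a stripe whose center column is a function of the row (column = polynomial in row), and hypothetical names and parameters (`row_centroids`, `normal_at_row`, window half-width `n`, polynomial order `order`). The normal is taken as the gradient of F(row, col) = col - p(row), matching the description of the normal as partial derivatives of the fitted curve; the paper's exact parameterization may differ.

```python
import numpy as np

def row_centroids(gray: np.ndarray, threshold: float = 0.0) -> np.ndarray:
    """Gray-centroid column of the stripe on every row; NaN where a row has no stripe."""
    weights = np.where(gray > threshold, gray, 0.0).astype(float)
    cols = np.arange(gray.shape[1], dtype=float)
    total = weights.sum(axis=1)
    with np.errstate(invalid="ignore", divide="ignore"):
        centers = (weights * cols).sum(axis=1) / total
    centers[total == 0] = np.nan
    return centers

def normal_at_row(centers: np.ndarray, i: int, n: int = 3, order: int = 2) -> np.ndarray:
    """Unit normal (d_row, d_col) of the center curve at row i.

    Fits col = p(row) to rows i-n .. i+n and returns the normalized gradient of
    F(row, col) = col - p(row), i.e. (-p'(i), 1).
    """
    rows = np.arange(max(i - n, 0), min(i + n + 1, len(centers)))
    rows = rows[~np.isnan(centers[rows])]
    if rows.size <= 4:
        raise ValueError("more than four valid centers are needed for the fit")
    coeffs = np.polyfit(rows, centers[rows], order)
    slope = np.polyval(np.polyder(coeffs), i)   # d col / d row at row i
    normal = np.array([-slope, 1.0])            # (dF/d row, dF/d col)
    return normal / np.linalg.norm(normal)
```

With the default `n = 3`, seven rows enter each fit, which satisfies the "more than four points" requirement away from the image border.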

Subsequently, a hierarchical calculation method is used to restore the normal vectors obtained in the compressed image to the original image. By the principle of compression, one row of pixels in the compressed image represents a group of rows in the original image, as many as the compression factor. Thus, the normal vector at each row of the laser stripe in the compressed image is assigned to a corresponding row of the laser stripe in the original image. The normal vectors of the intermediate rows in the original image are then obtained by linear interpolation between the normal vectors of the neighboring assigned rows. Finally, the stripe slope (the slope of the normal line at the stripe center) on each row of the original image is calculated from the restored normal vector.
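The restoration step can be sketched with NumPy's `np.interp`. Mapping compressed row `r` to original row `r*k + k//2` is a hypothetical choice for this illustration, as is defining the slope as d_row / d_col (the convention under which stepping along integer columns gives the row `row0 + slope * (col - col0)`). Note that `np.interp` holds the end values constant outside the anchor range.

```python
import numpy as np

def restore_normals(normals_c: np.ndarray, k: int, n_rows: int) -> np.ndarray:
    """Interpolate compressed-image normals (rows of (d_row, d_col)) onto every original row."""
    # Hypothetical anchor: compressed row r stands for original row r*k + k//2.
    anchors = np.arange(len(normals_c)) * k + k // 2
    rows = np.arange(n_rows)
    d_row = np.interp(rows, anchors, normals_c[:, 0])   # linear between anchors, clamped outside
    d_col = np.interp(rows, anchors, normals_c[:, 1])
    normals = np.stack([d_row, d_col], axis=1)
    return normals / np.linalg.norm(normals, axis=1, keepdims=True)

def normal_slopes(normals: np.ndarray) -> np.ndarray:
    """Slope of each normal line as d_row / d_col (+/-inf when d_col == 0)."""
    with np.errstate(divide="ignore"):
        return normals[:, 0] / normals[:, 1]
```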

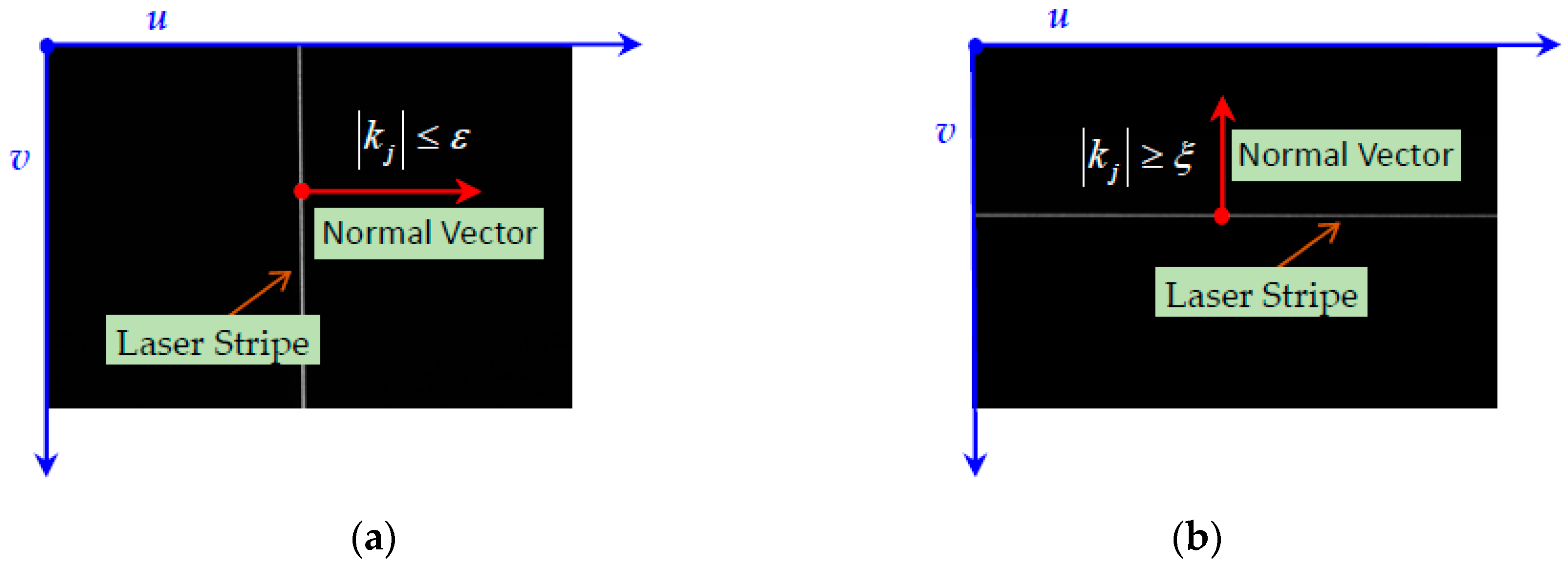

The stripes slopes have two special limiting values: infinity and infinitely close to zero. When the slope is infinitely close to zero, specifically,

, the normal vector of laser stripe is approximately parallel to the column vector of the image. In this case, the grayscale values of the stripe are ascertained along with the column direction of the image. When the slope is close to infinity, namely

, the normal direction is approximately parallel to the row vector of the image. In this case, the grayscale values of the stripe are ascertained along with the row direction of the image. With the exception of these two special situations, the grayscale values of the stripe are ascertained along with the normal direction. The two special situations are as shown in

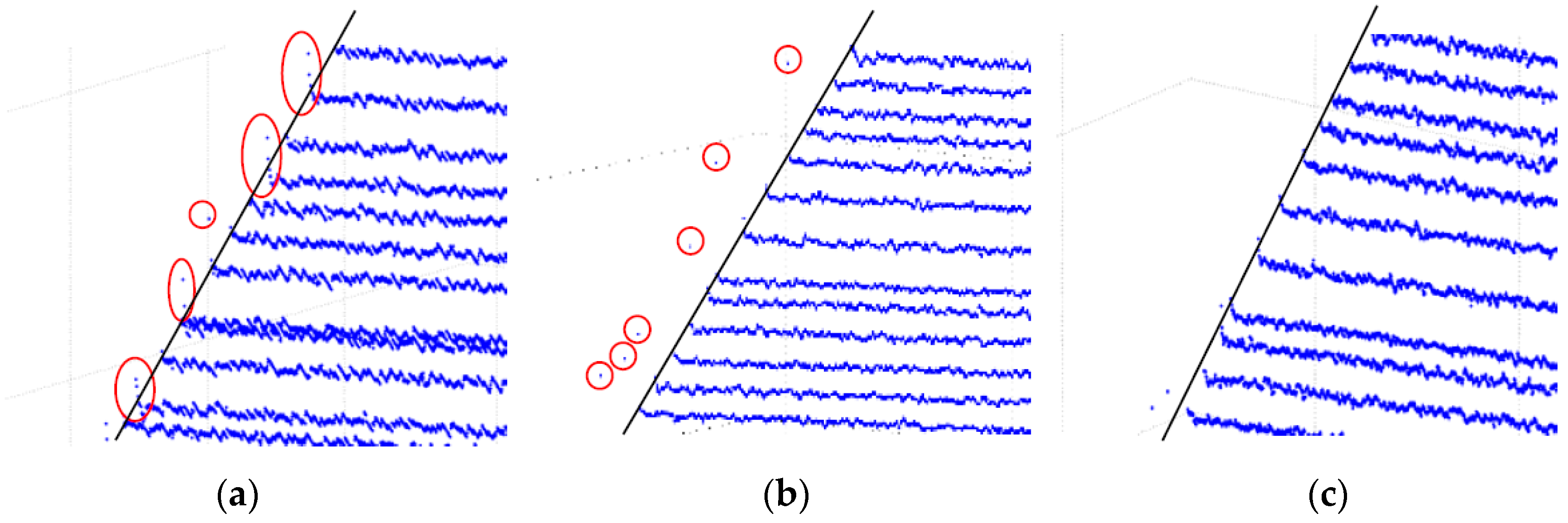

Figure 4.
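The three sampling cases can be expressed as a small decision function. The thresholds `low` and `high` are hypothetical stand-ins for "close to zero" and "close to infinity", and the slope is assumed to be d_row / d_col.

```python
def sampling_mode(slope: float, low: float = 0.05, high: float = 20.0) -> str:
    """Return the direction along which the stripe grayscale is sampled."""
    magnitude = abs(slope)
    if magnitude <= low:
        return "column"   # slope ~ 0: sample along the column direction
    if magnitude >= high:
        return "row"      # slope ~ infinity: sample along the row direction
    return "normal"       # otherwise: sample along the normal line itself
```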

After calculating the normal slopes, the centers of the laser stripes on the original image are extracted. First, the centers are preliminarily extracted. In the process of image acquisition, the quality of the images is affected by noise caused by various factors such as the quality of the sensor and ambient light. Therefore, a Gaussian filter is used to preprocess the image before stripe center extraction to remove some of the image noise. Then, the original image is binarized and the edge of the laser stripe is extracted [

20]. The sequence of the points on the edge is denoted as

. Next, the centroid of gray method is used to calculate the pixel coordinates of the stripe centers on the original image. To facilitate subsequent analysis and calculation, the pixel coordinates of the centers are rounded off. The rounded values are defined as the initial values of the stripe centers of the original image, denoted as

. Combined with the initial values of the stripe centers and their corresponding normal slopes, the grayscale information in the normal direction of the original image is determined, and the gray centroid along the normal direction of the stripe is obtained. Traditionally, the stripe center is acquired by using the algorithm of COG in normal direction at one row of the laser stripe. However, because the quality of the captured image is poor in an industrial setting, the extraction accuracy of the stripe center is easily affected by error points. Consequently, in this paper, interpolation and edge judgment are adopted to improve the judgment criterion of the normal pixels and to precisely calculate the gray centroid. The following procedure is utilized. The process of Gray centroid judgment is shown in

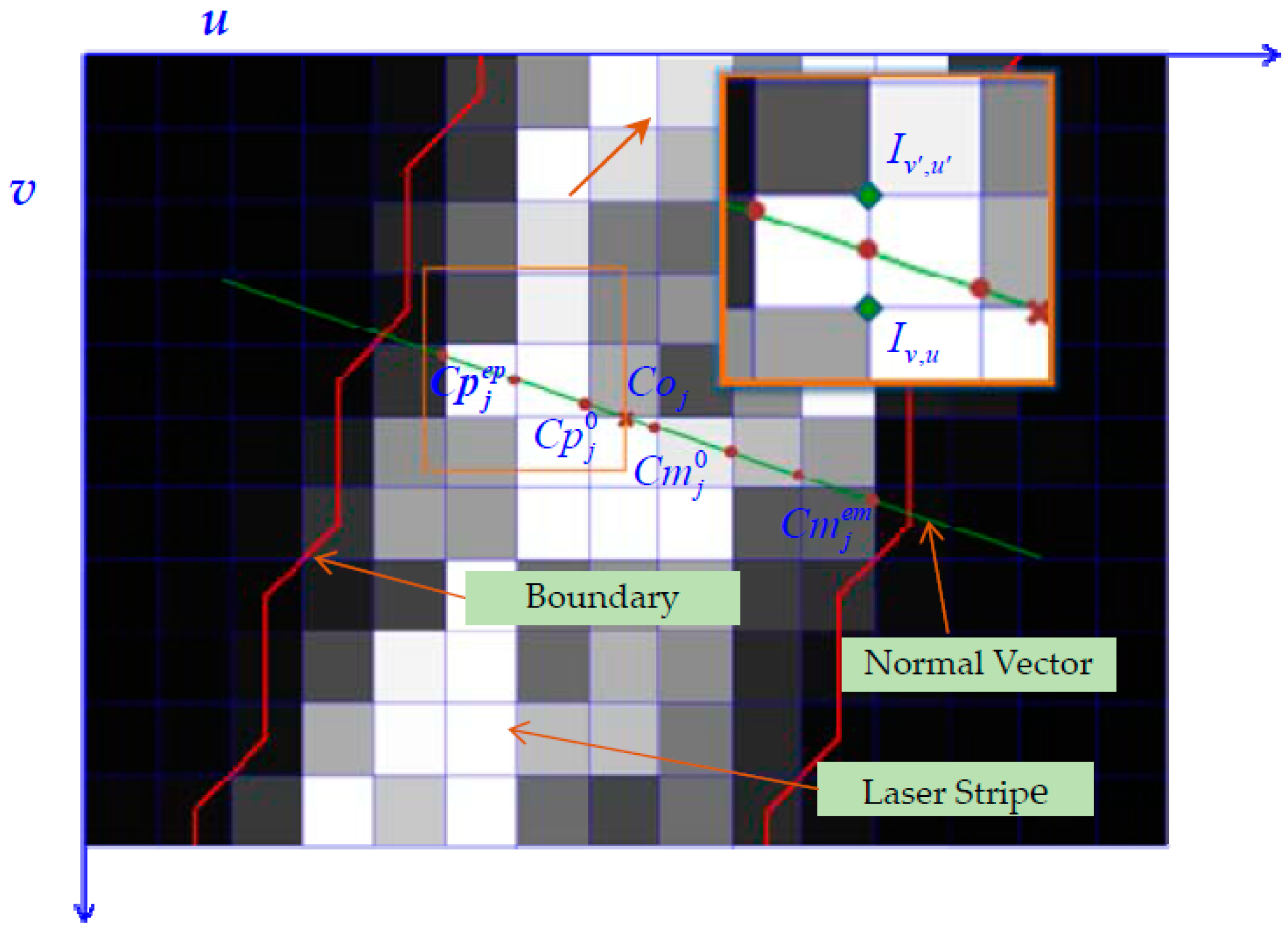

Figure 5.
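A NumPy-only sketch of the preliminary extraction on the original image: separable Gaussian smoothing, global-threshold binarization, per-row edge extremes, and a rounded per-row gray centroid. The threshold, `sigma`, and all function names are hypothetical, and the simple per-row search stands in for the edge-extraction method cited as [20].

```python
import numpy as np

def gaussian_kernel(sigma: float) -> np.ndarray:
    """Normalized 1-D Gaussian kernel truncated at 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    kernel = np.exp(-0.5 * (x / sigma) ** 2)
    return kernel / kernel.sum()

def gaussian_blur(img: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Separable Gaussian filter with zero padding; image sides must exceed the kernel length."""
    kernel = gaussian_kernel(sigma)
    blurred_rows = np.apply_along_axis(np.convolve, 1, img.astype(float), kernel, mode="same")
    return np.apply_along_axis(np.convolve, 0, blurred_rows, kernel, mode="same")

def preliminary_centres(img: np.ndarray, sigma: float = 1.0, threshold: float = 50.0):
    """Rounded per-row centroids (row, col) plus min/max stripe edge columns per row.

    Rows without stripe pixels keep the value -1 in all outputs.
    """
    smooth = gaussian_blur(img, sigma)
    mask = smooth > threshold                       # simple global binarization
    n_rows = img.shape[0]
    centres = np.full((n_rows, 2), -1, dtype=int)
    left = np.full(n_rows, -1, dtype=int)
    right = np.full(n_rows, -1, dtype=int)
    for r in range(n_rows):
        cols = np.flatnonzero(mask[r])
        if cols.size == 0:
            continue
        left[r], right[r] = cols.min(), cols.max()  # edge extremes on this row
        weights = smooth[r, cols]
        centre_col = float((weights * cols).sum() / weights.sum())
        centres[r] = (r, int(np.floor(centre_col + 0.5)))  # round half up
    return centres, left, right
```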

Determine the Points on the Normal Direction of the Laser Stripes

The normal line of the stripe intersects both the rows and the columns of the image. Selecting all of these intersections would increase the computational burden because much of the resulting data is redundant. Given the characteristics of the laser image, the search is instead conducted along the intersections with either the rows or the columns, which provides sufficient intersections without redundant data. The sequence of stripe slopes is the set of normal slopes of the initial center points on each row of the original image. The numbers of slopes falling into two ranges in this sequence are counted. When the first count dominates, the stripe has more normal vectors at a small angle to the column direction, and it is defined as a column stripe. The length of such a stripe extends along the rows and its width along the columns, so its pixel points are sought along the columns. Conversely, when the second count dominates, the stripe is defined as a row stripe and its pixel points are sought along the rows.
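The row/column classification can be sketched as a majority vote over the slope sequence. Splitting the slopes at |slope| = 1 (45 degrees) is an assumption made for this illustration, and the slope is again assumed to be d_row / d_col, so a small |slope| means the normal lies close to the direction in which the column index changes.

```python
import numpy as np

def stripe_orientation(slopes) -> str:
    """Classify a stripe as a 'column' or 'row' stripe from its normal slopes."""
    slopes = np.asarray(slopes, dtype=float)
    n_col = int(np.count_nonzero(np.abs(slopes) <= 1.0))  # normals within 45 deg of the column direction
    n_row = int(np.count_nonzero(np.abs(slopes) > 1.0))   # normals within 45 deg of the row direction
    return "column" if n_col >= n_row else "row"
```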

Select Pixel Points

Take a column stripe, which is searched along the columns, as an example. The pixel points are selected as follows. The preliminary stripe centers of the original image are taken as the initial points, and the column value of each initial point is rounded toward positive infinity and toward zero, respectively. The pixel points are then sought along the normal line, whose slope is the stripe slope of that row, with the column increasing from the first rounded value and decreasing from the second. This determines the points where the stripe normal intersects the columns of the original image, giving a sequence of column values in the increasing direction and one in the decreasing direction; the row value of each intersection follows from the normal slope. The sets of selected points in the increasing and decreasing directions are formed from these intersections, each point being a selected pixel center.

Two critical points are then obtained: the intersections between the edge of the laser stripe and its normal line. Because the stripe edge consists of discrete points, the critical points are extracted as follows. First, the extreme points of the stripe edge on each row are recorded as the maximum and minimum column values of the stripe edge on that row, and the critical points are determined from these extreme points. Taking the critical point in the increasing direction as an example, the initial point is examined first: its row value is rounded, and if its column value lies between the minimum and maximum edge columns of that rounded row, the selected point lies on the laser stripe. The next point is then calculated using Equations (11) and (12), and its edge condition is checked in the same manner as for the initial point. The iteration terminates when the selected point reaches the stripe edge, which gives the critical point in the increasing direction. The critical point in the decreasing direction is obtained in the same manner.

Finally, the set of selected pixel points on each row is assembled from the two directions. When the column value of the initial center is not an integer, the two directions start from different columns, and their union is taken as the set of pixel points. When it is an integer, both directions start from the same point, so the set of selected pixel points without repeated points is chosen.
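The walk along the normal for a column stripe can be sketched as follows. It assumes a finite slope defined as d_row / d_col, per-row edge extremes `left` and `right` (minimum and maximum edge columns, -1 for rows without stripe pixels), and round-half-up for the row value. The function name `select_normal_points` is hypothetical, and the stepping rule is an illustration rather than the paper's Equations (11) and (12).

```python
import math

def select_normal_points(r0, c0, slope, left, right, n_cols):
    """Points (row, col) where the normal crosses integer columns inside the stripe.

    Walks outwards from the centre (r0, c0) in both column directions and stops
    at the first column whose intersection falls outside the stripe edge.
    """
    n_rows = len(left)

    def on_stripe(row, col):
        r = math.floor(row + 0.5)              # round half up to the nearest image row
        return 0 <= r < n_rows and left[r] >= 0 and left[r] <= col <= right[r]

    points = []
    for start, step in ((math.ceil(c0), 1), (math.floor(c0), -1)):
        col = start
        while 0 <= col < n_cols:
            row = r0 + slope * (col - c0)      # intersection of the normal with this column
            if not on_stripe(row, col):
                break                          # edge reached; the last kept point is the critical point
            points.append((row, col))
            col += step
    # ceil(c0) == floor(c0) for an integer c0, so the start point may appear twice.
    return sorted(set(points), key=lambda p: p[1])
```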

Calculate the Grayscale of the Selected Point

Because the selected points are obtained with sub-pixel precision, their grayscale values must be calculated by interpolation. The row value of each selected pixel point is rounded toward positive infinity and toward zero, respectively. If the two rounded values are equal, the row value is an integer, and the grayscale of the selected point is simply the grayscale of the original image at that row and column. Otherwise, the grayscale is interpolated from the grayscales of the original image at the two rounded rows in the same column. An exception occurs when the row value of the selected point is at the extreme row of the image; in this case, the gray value is defined by the grayscale at that boundary row.
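The grayscale rule above, followed by a standard gray-weighted centroid of the sampled points, can be sketched as below. Linear interpolation between the two rounded rows and falling back to the last valid row at the image border are assumptions for this illustration, as are the names `gray_at` and `normal_centroid`.

```python
import math
import numpy as np

def gray_at(gray: np.ndarray, row: float, col: int) -> float:
    """Grayscale at a point with a fractional row and an integer column."""
    r_up = math.ceil(row)       # rounded toward +infinity
    r_down = math.floor(row)    # rounded toward zero (rows are non-negative)
    if r_up == r_down or r_up >= gray.shape[0]:
        # Integer row, or the next row lies outside the image: use the nearest valid row.
        return float(gray[r_down, col])
    # Linear interpolation between the two neighbouring rows in the same column.
    return float((r_up - row) * gray[r_down, col] + (row - r_down) * gray[r_up, col])

def normal_centroid(gray: np.ndarray, points) -> tuple:
    """Gray-weighted centroid (row, col) of the points sampled along the normal."""
    weights = np.array([gray_at(gray, r, c) for r, c in points])
    coords = np.array(points, dtype=float)            # (row, col) pairs
    centre = (weights[:, None] * coords).sum(axis=0) / weights.sum()
    return float(centre[0]), float(centre[1])
```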

3.1.2. Edge-Point Extraction Based on Directed Arc-Length Criterion

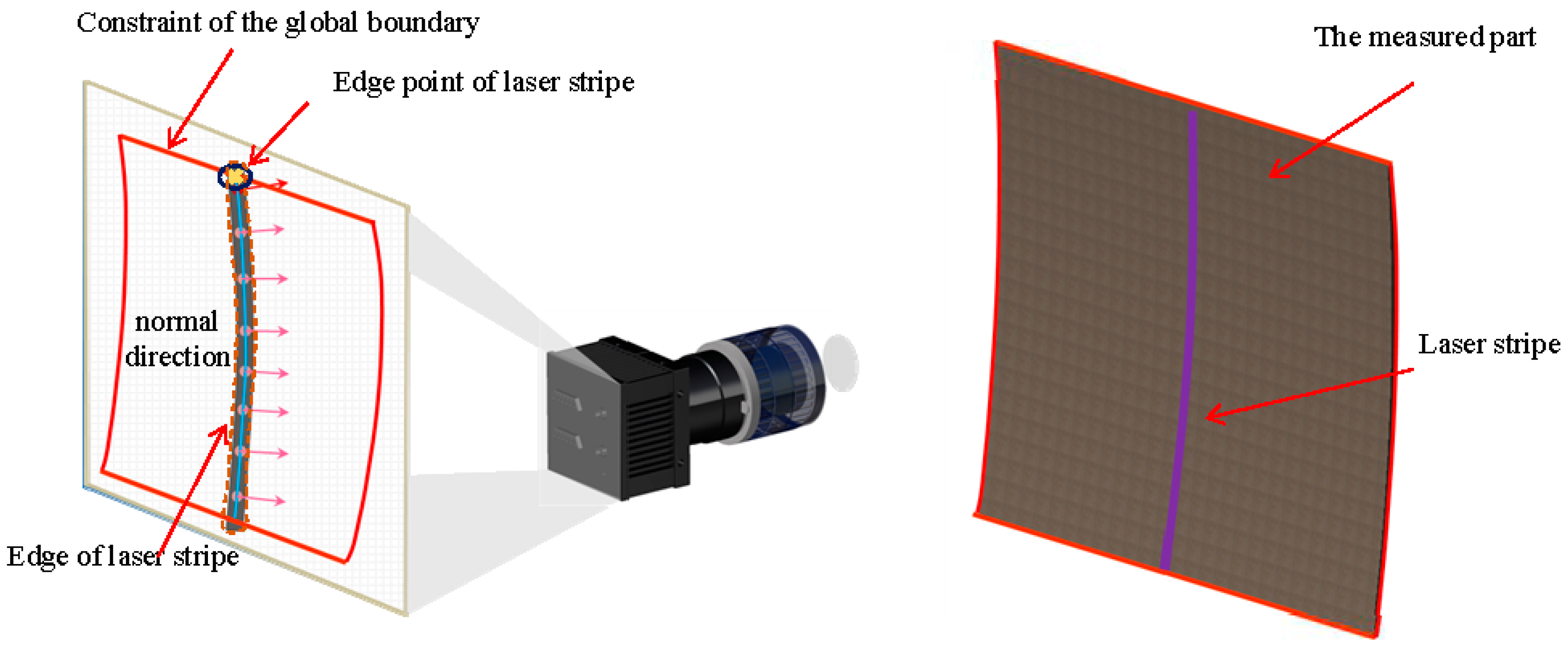

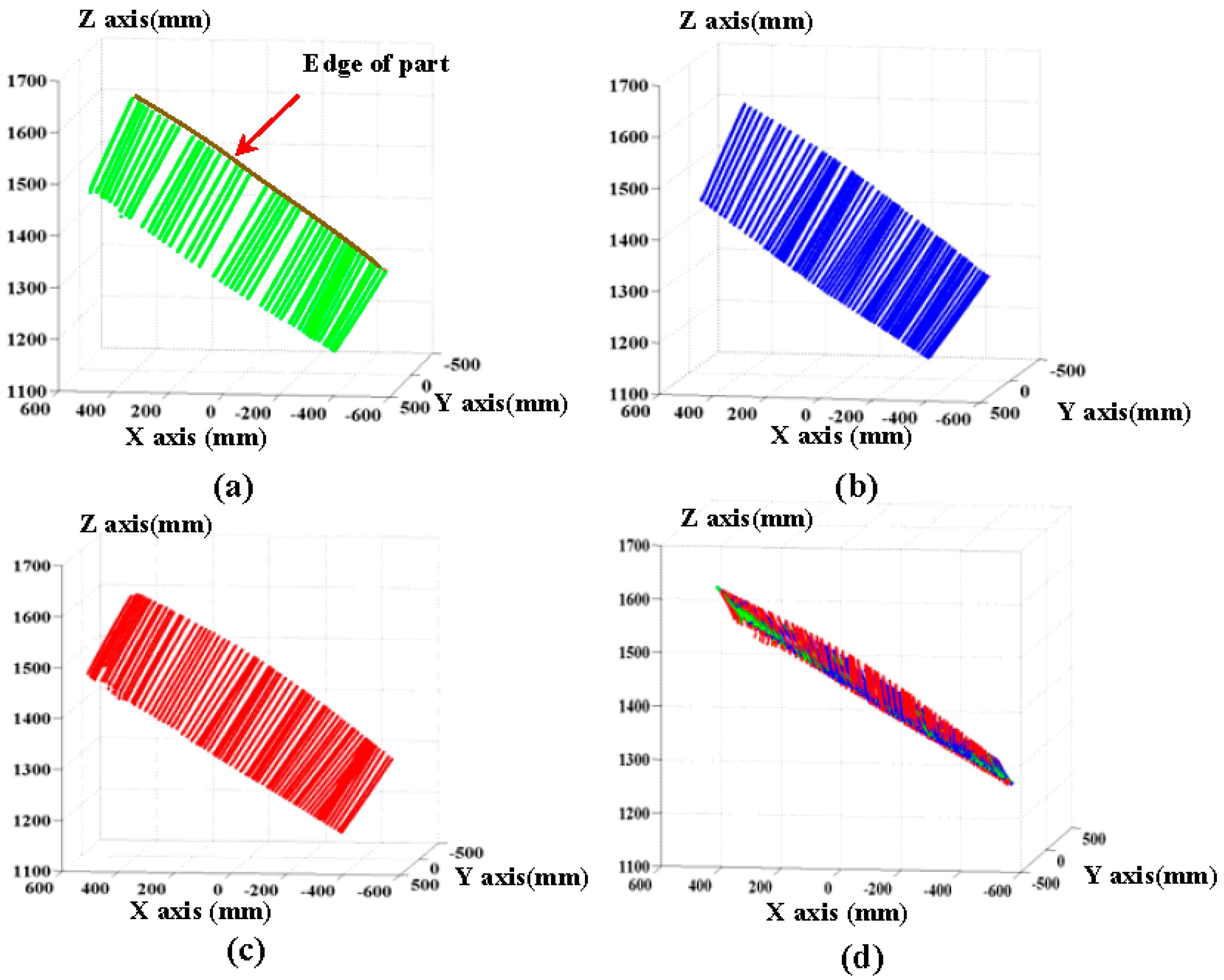

In the manufacturing process, the boundary of the aerodynamic shape is usually used as the geodetic datum owing to the deformation of large aviation parts. Accurate extraction of the boundary is therefore critical to the inspection of aviation parts. Because measuring environments in the industrial field are complex, considerable noise is introduced during image acquisition, so the quality of images captured in industrial environments is poor, especially in the boundary region. Conventionally, the noise is reduced using data analysis software; however, some boundary information of the measured object is lost because points beyond a certain distance from the measuring plane are deleted. To reconstruct the aerodynamic shape more accurately and retain the geometric features of the parts more completely, the boundary of the parts must be extracted precisely. This paper therefore proposes a method based on a global boundary constraint and analysis of local stripe features to obtain more accurate information about the laser stripes at the edges of the parts.

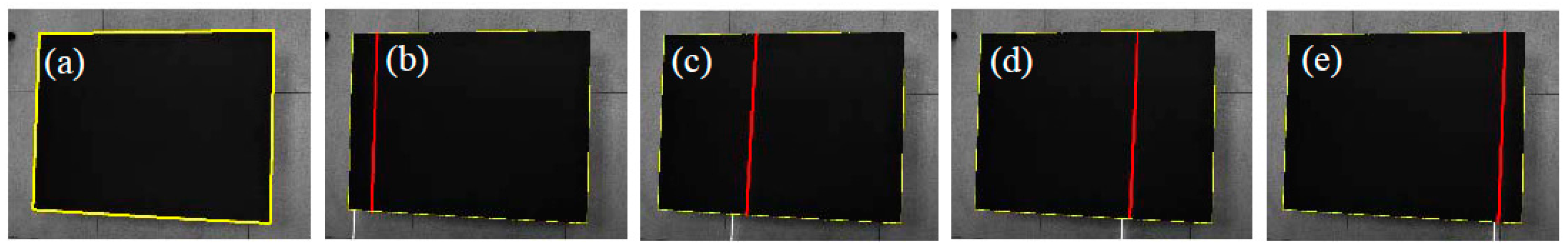

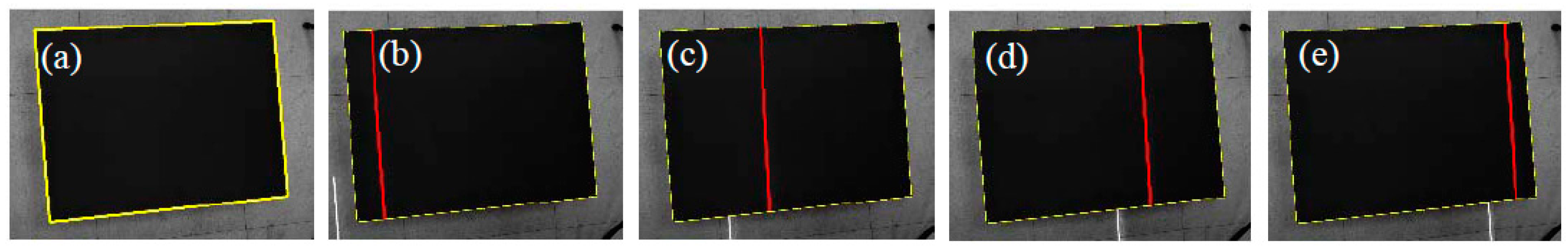

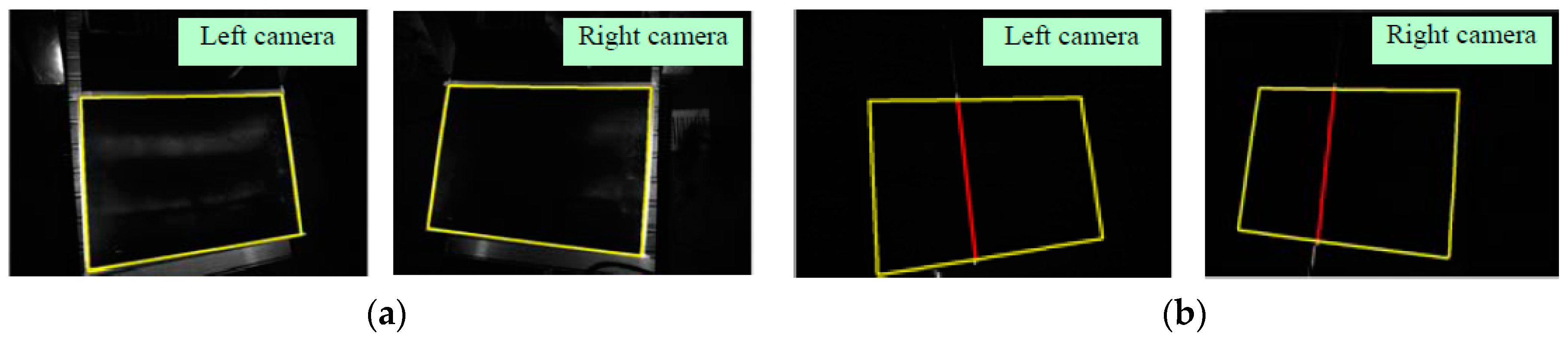

Carbon fiber composite material is widely used in the manufacture of aviation parts. Because the surface of the composite material is black, its absorbance is high. When more light enters the camera, the camera receives more light reflected from the background than from the surface of the part, producing a strong contrast between the part and the background. This high contrast is used to first extract a global boundary that serves as a constraint on the edges of the laser stripes.

The global boundary image is acquired as follows. Before the laser stripes are scanned, the exposure time of the camera is increased. With more light entering the camera, a non-stripe image with high contrast between the part boundary and the background is captured, owing to the surface characteristics of the carbon fiber. If the reflectance of the background is low, reflective tape can be attached to the border area on the back of the part so that the part boundary contrasts strongly with the image background. The boundary constraint of the laser stripe is then established by extracting the entire boundary of the part. First, the region containing the part is selected as the region of interest to reduce the amount of computation. The image is then denoised with a median filter. After the image is binarized and regions that are too small or too large (caused by noise) are removed, the global boundaries of the part are preliminarily extracted with an edge-detection algorithm. Finally, the boundaries of the part are confirmed by the intersection seek method and recorded as a set of boundary points given by their pixel coordinates.
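The first steps of the global-boundary extraction (region of interest, median filtering, binarization, boundary pixels) can be sketched with NumPy alone. Assumptions: the carbon-fiber part appears darker than the background, `roi` and `threshold` are hypothetical parameters, and both the removal of noise regions by area and the intersection seek method are omitted. The result is an unordered set of boundary pixels, which would still need to be ordered into a closed, directed curve before the directed arc-length test.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def median3(img: np.ndarray) -> np.ndarray:
    """3 x 3 median filter with edge replication at the borders."""
    padded = np.pad(img, 1, mode="edge")
    return np.median(sliding_window_view(padded, (3, 3)), axis=(-2, -1))

def boundary_pixels(img: np.ndarray, roi, threshold: float) -> np.ndarray:
    """(row, col) pixels of the dark part region that touch the background.

    roi = (r0, r1, c0, c1) is a rectangular region of interest; the returned
    coordinates are in full-image coordinates.
    """
    r0, r1, c0, c1 = roi
    part = median3(img[r0:r1, c0:c1].astype(float)) < threshold   # dark part, bright background
    padded = np.pad(part, 1, mode="constant", constant_values=False)
    # A part pixel is interior when all four 4-neighbours also belong to the part.
    interior = padded[:-2, 1:-1] & padded[2:, 1:-1] & padded[1:-1, :-2] & padded[1:-1, 2:]
    return np.argwhere(part & ~interior) + np.array([r0, c0])
```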

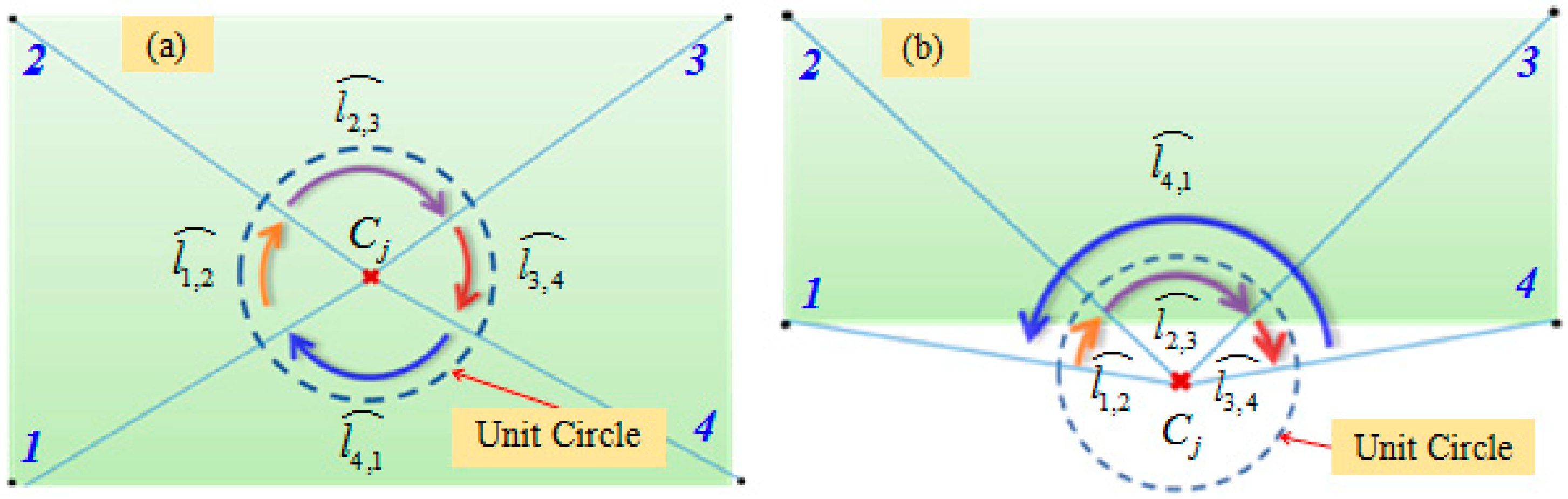

Based on the global boundary constraint, the points on the local edge are determined from the extracted centers of the scanning laser stripes. This paper adopts an edge-point extraction method based on a directed arc-length criterion to determine whether the laser stripe centers lie within the global boundary. The arc-length method sums the directed arc lengths obtained by projecting the edge points onto a unit circle centered at the judged point. The principle is shown in Figure 6. The boundary forms a closed, directed curve. A unit circle is constructed around the judged point, and the edge points are connected to its center in a fixed order. The intersections of these connecting lines with the unit circle are defined as projection points. Following this order, the directed arc lengths between consecutive projection points are summed, each taken so that its angle does not exceed 180 degrees. If the judged point is on or within the boundary, the sum of the directed arc lengths is 2π and the point is called an interior point; if it is outside the global boundary, the sum is zero.
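The directed arc-length test corresponds to the classic winding-angle point-in-polygon test. The minimal sketch below assumes the boundary is an ordered list of (x, y) points closed back to its first point. Because the sum is either about 2π or about 0, comparing its magnitude with π avoids a tight tolerance; points lying exactly on the boundary are not treated specially here.

```python
import math

def directed_arc_length(point, boundary):
    """Sum of signed angles between consecutive boundary points seen from `point`.

    On a unit circle around `point`, each angle equals the directed arc length
    between two projection points; atan2 keeps each term within [-pi, pi].
    """
    px, py = point
    total = 0.0
    n = len(boundary)
    for i in range(n):
        ax, ay = boundary[i][0] - px, boundary[i][1] - py
        bx, by = boundary[(i + 1) % n][0] - px, boundary[(i + 1) % n][1] - py
        total += math.atan2(ax * by - ay * bx, ax * bx + ay * by)
    return total

def is_interior(point, boundary):
    """Interior points give a total of about +/-2*pi, exterior points about 0."""
    return abs(directed_arc_length(point, boundary)) > math.pi
```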

Because the number of laser stripe centers and boundary points is large, the arc-length method is improved here to determine the local edge points. For convenience, a column laser stripe is taken as an example. The extracted centers of the laser stripe are arranged in extraction order, with the column value of the image increasing. The edge points are therefore located at the start and end of the sequence, so the extracted stripe centers are judged from both ends of the center sequence. The center sequence of a laser stripe is defined according to Equation (16). First, the initial point of the center sequence is checked to see whether it satisfies the interior-point condition. Because the boundary consists of thousands of points, the minimum bounding rectangle of the global boundary is taken as an initial boundary to simplify the calculation, and the directed arc length of the point is summed over the four vertexes of this rectangle. If the sum is zero, the point is not an interior point and the next point is judged. If the sum is 2π, the directed arc length is summed over the points of the global boundary; if the point still does not satisfy the interior-point condition, the next point is judged. The calculation continues until an interior point appears, and this interior point is taken as a boundary point of the laser stripe. The other edge point is judged in the same way from the other end of the center sequence. The laser stripe centers between these two edge points, indexed from the first number to the last number, are retained as the center points within the boundary.
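The two-ended search with a bounding-rectangle pre-check can be sketched as below. The axis-aligned rectangle, the ordered list-of-tuples boundary, and the names `edge_indices` and `inside` are assumptions for this illustration; the rectangle test is run first because it needs only four vertices.

```python
import math

def directed_arc_length(point, polygon):
    """Sum of signed angles subtended by consecutive vertices of a closed polygon."""
    px, py = point
    closed = list(polygon)
    total = 0.0
    for (x1, y1), (x2, y2) in zip(closed, closed[1:] + closed[:1]):
        ax, ay, bx, by = x1 - px, y1 - py, x2 - px, y2 - py
        total += math.atan2(ax * by - ay * bx, ax * bx + ay * by)
    return total

def inside(point, polygon):
    return abs(directed_arc_length(point, polygon)) > math.pi   # ~2*pi inside, ~0 outside

def edge_indices(centres, boundary):
    """First and last indices of the ordered stripe centres that lie inside `boundary`.

    Returns None when no centre is inside.
    """
    xs = [p[0] for p in boundary]
    ys = [p[1] for p in boundary]
    rect = [(min(xs), min(ys)), (max(xs), min(ys)), (max(xs), max(ys)), (min(xs), max(ys))]

    def interior(p):
        # Cheap 4-vertex rectangle test first; the full boundary only if it passes.
        return inside(p, rect) and inside(p, boundary)

    first = next((i for i, p in enumerate(centres) if interior(p)), None)
    if first is None:
        return None
    # Scan inwards from the other end; `first` itself is known to be interior.
    last = next(i for i in range(len(centres) - 1, first - 1, -1) if interior(centres[i]))
    return first, last
```

For a triangular boundary, a centre such as (8, 8) passes the rectangle test but fails the full test, which is the case the two-stage check is meant to handle.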