Tracking and Classification of In-Air Hand Gesture Based on Thermal Guided Joint Filter

Abstract

1. Introduction

2. Background

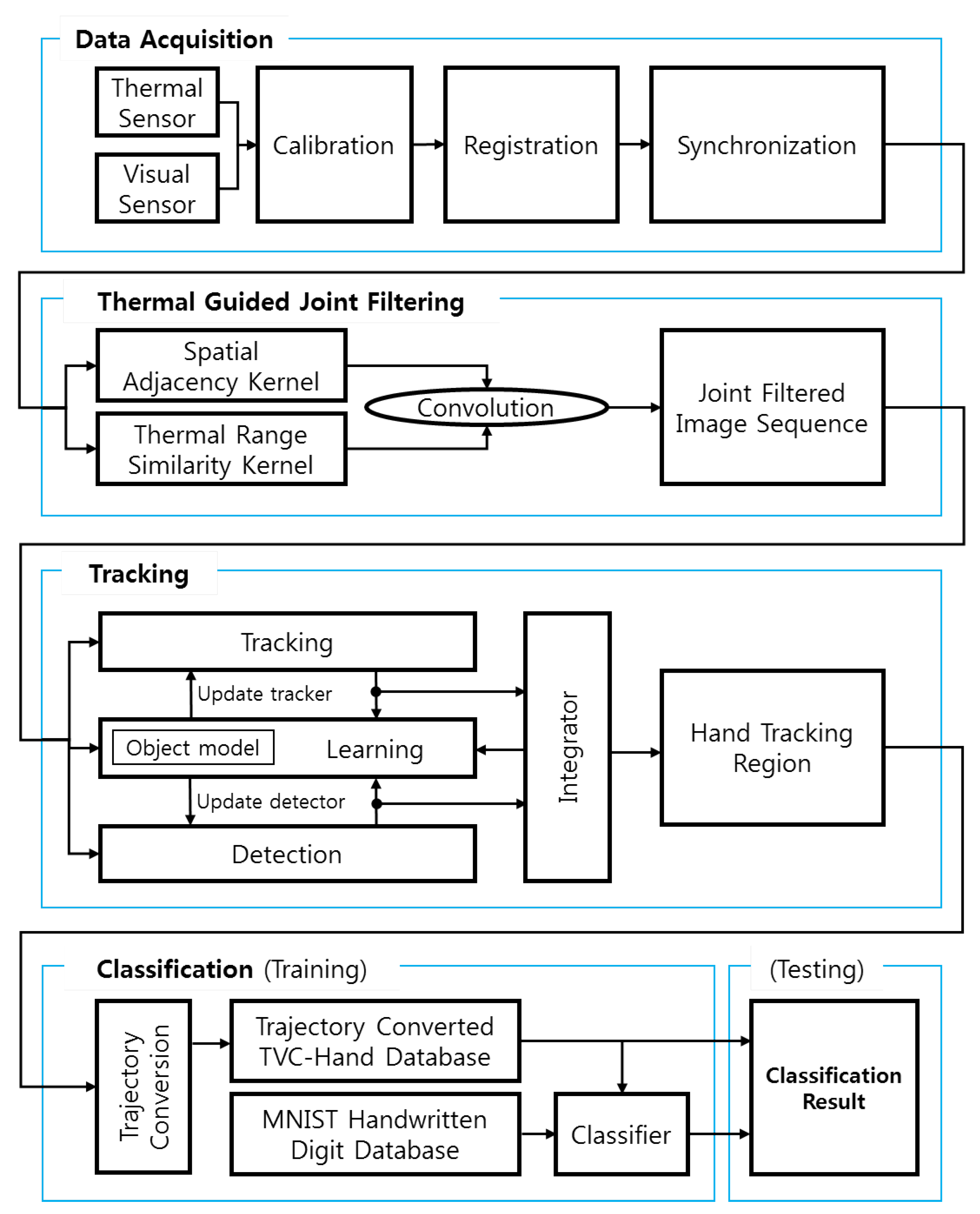

3. Proposed Method

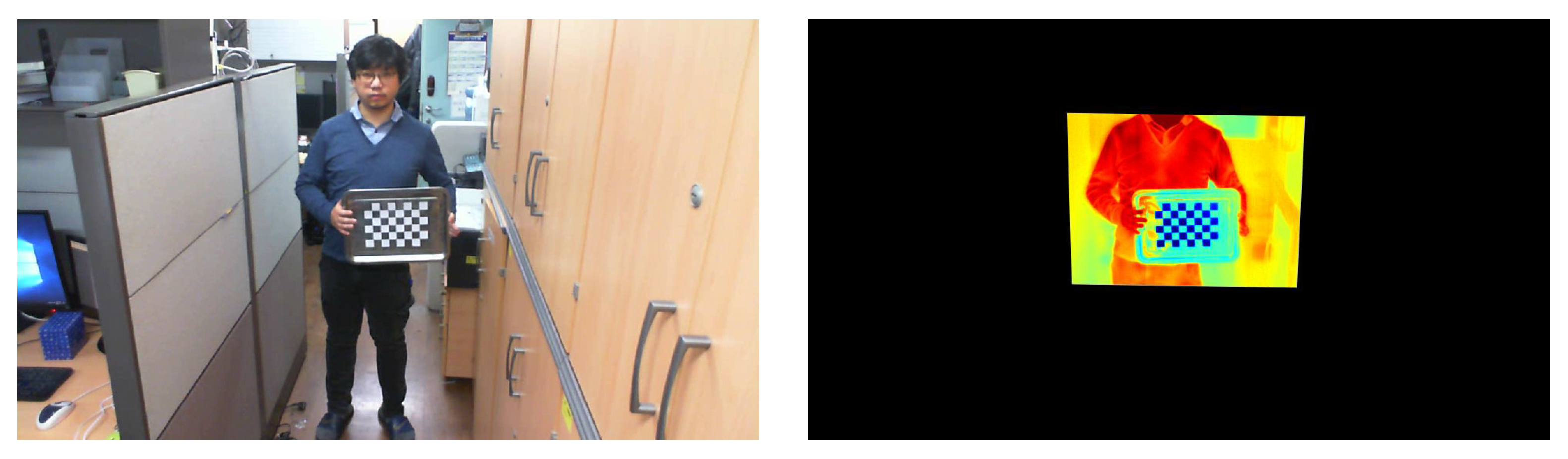

3.1. Calibration, Registration, and Synchronization

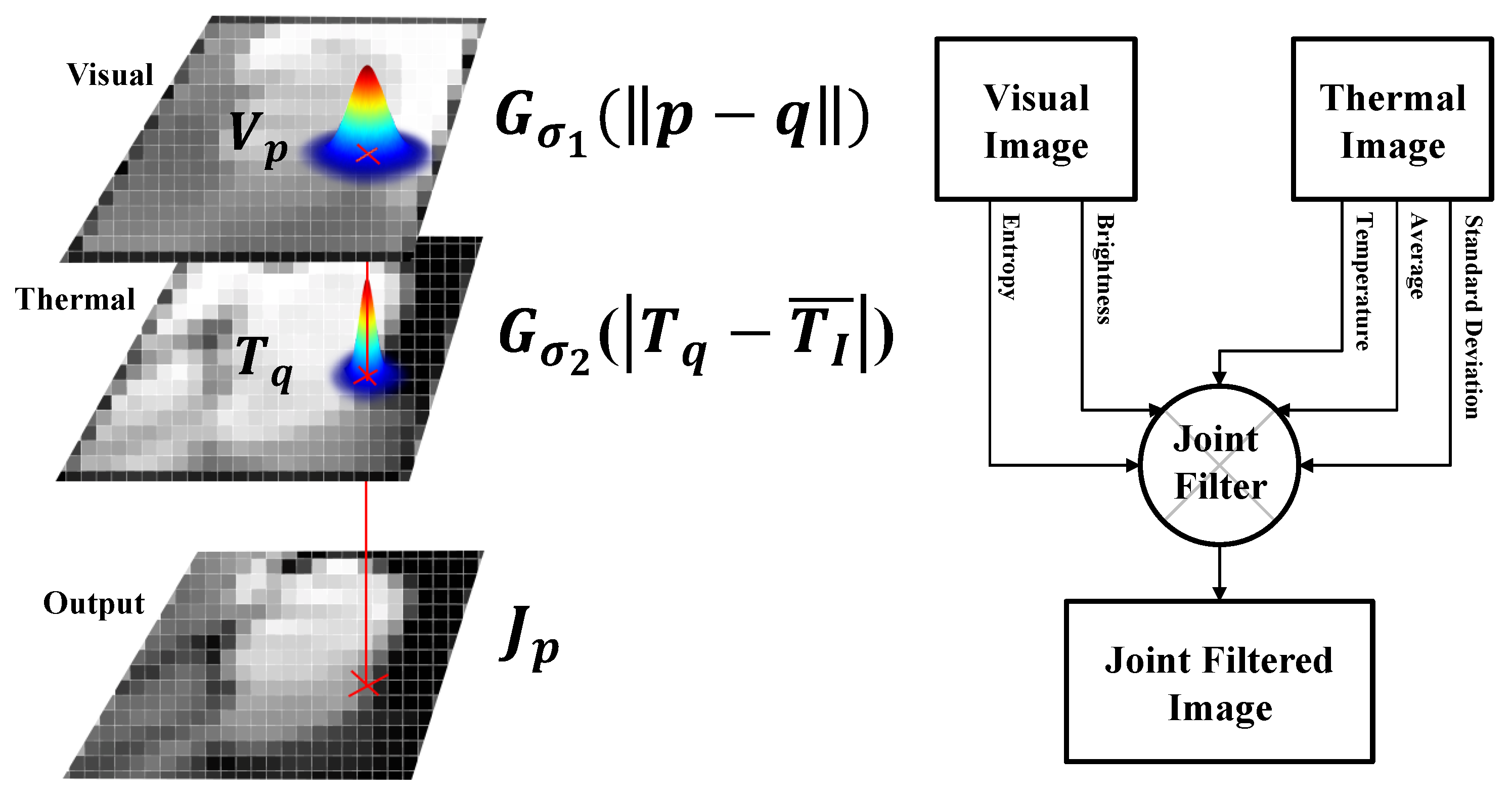

3.2. Thermal-Guided Joint Filter

3.3. Deep Learning-Based Hand Gesture Recognition

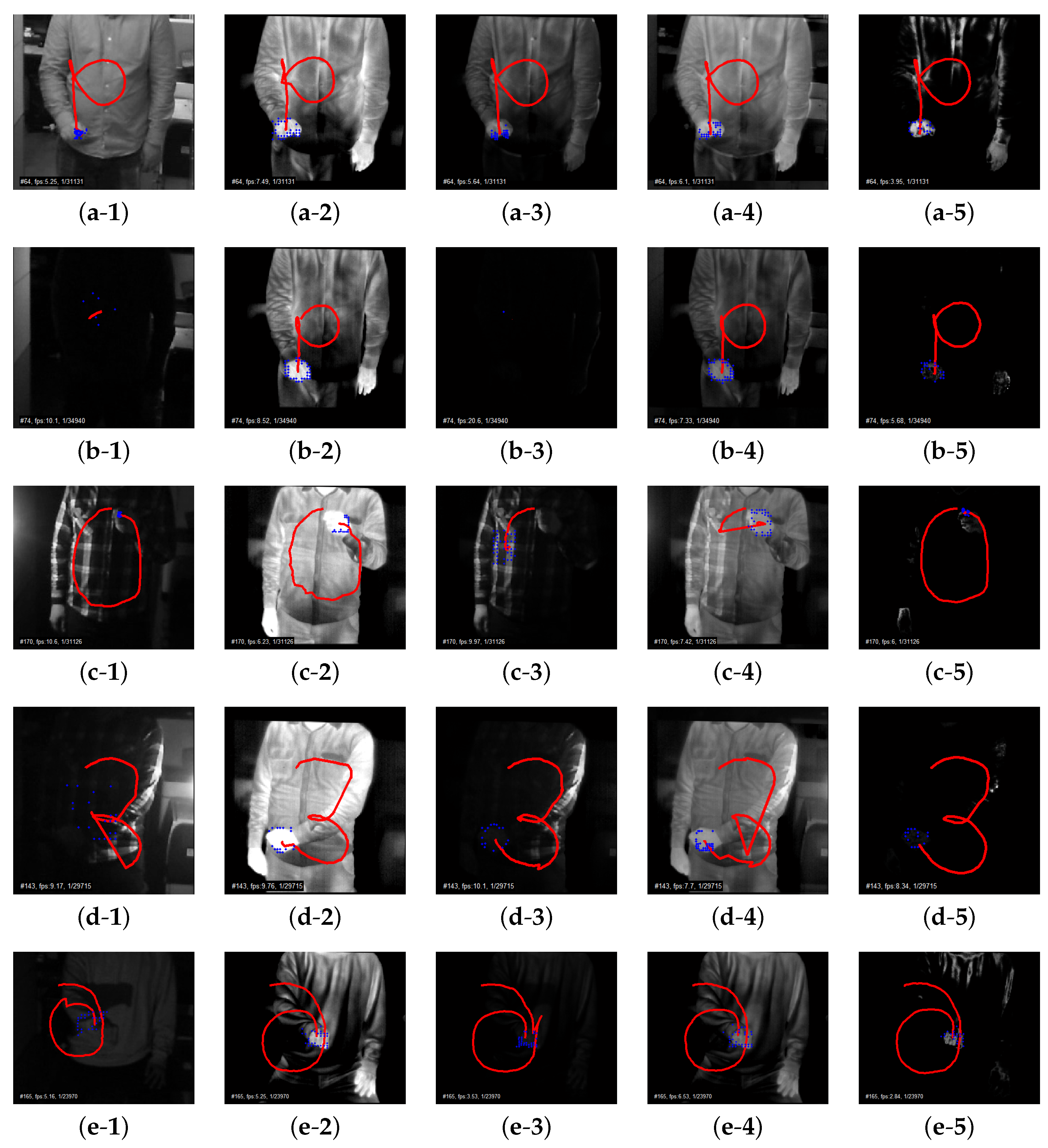

3.3.1. Hand Gesture Tracking

3.3.2. Hand Gesture Recognition

3.4. Overview

- Step 1: Build the database for tracking and classifying a hand gesture, as stated in Section 3.1.
  - Step 1-1: Calibration between the thermal and visual sensors
  - Step 1-2: Registration between the thermal and visual sensors
  - Step 1-3: Synchronization between the thermal and visual sensors
- Step 2: Compute the output image with the thermal-guided joint filter, as stated in Section 3.2.
  - Step 2-1: Computation of the spatial adjacency kernel
  - Step 2-2: Computation of the thermal range similarity kernel
  - Step 2-3: Joint filtering based on the convolution using the two kernels
- Step 3: Track the hand gesture, as stated in Section 3.3.1.
  - Step 3-1: Tracking phase
  - Step 3-2: Detection phase
  - Step 3-3: Learning phase
- Step 4: Classify the hand gesture, as stated in Section 3.3.2.
  - Step 4-1: Training phase
  - Step 4-2: Testing phase
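Steps 2-1 to 2-3 can be illustrated with a minimal sketch in the style of a joint bilateral filter. This is an illustration under stated assumptions, not the authors' implementation: both kernels are assumed to be Gaussian, the visual and thermal frames are assumed to be already registered, single-channel, and of equal size, and the names `spatial_kernel`, `thermal_guided_joint_filter`, `radius`, `sigma_s`, and `sigma_t` are hypothetical.

```python
import numpy as np


def spatial_kernel(radius, sigma_s):
    """Step 2-1: weights that decay with pixel distance from the window center.

    A Gaussian form is an assumption made for this sketch.
    """
    ax = np.arange(-radius, radius + 1)
    yy, xx = np.meshgrid(ax, ax, indexing="ij")
    return np.exp(-(xx ** 2 + yy ** 2) / (2.0 * sigma_s ** 2))


def thermal_guided_joint_filter(visual, thermal, radius=2, sigma_s=2.0, sigma_t=0.1):
    """Filter `visual` with range weights taken from `thermal` (the guide image)."""
    visual = np.asarray(visual, dtype=np.float64)
    thermal = np.asarray(thermal, dtype=np.float64)
    if visual.ndim != 2 or visual.shape != thermal.shape:
        raise ValueError("expected two registered 2-D images of equal shape")

    height, width = visual.shape
    size = 2 * radius + 1
    w_s = spatial_kernel(radius, sigma_s)

    # Replicate border pixels so every window is fully defined.
    v_pad = np.pad(visual, radius, mode="edge")
    t_pad = np.pad(thermal, radius, mode="edge")

    out = np.empty_like(visual)
    for i in range(height):
        for j in range(width):
            v_win = v_pad[i:i + size, j:j + size]
            t_win = t_pad[i:i + size, j:j + size]
            # Step 2-2: similarity of each neighbor's thermal value to the center's.
            w_t = np.exp(-((t_win - thermal[i, j]) ** 2) / (2.0 * sigma_t ** 2))
            # Step 2-3: combine both kernels and take the normalized weighted sum.
            weights = w_s * w_t
            out[i, j] = np.sum(weights * v_win) / np.sum(weights)
    return out
```

Because the center pixel always receives weight 1, the normalizing sum never vanishes. With a small `sigma_t`, neighbors whose thermal value differs from the center contribute almost nothing, so smoothing stays within regions of similar temperature.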

4. Experiments

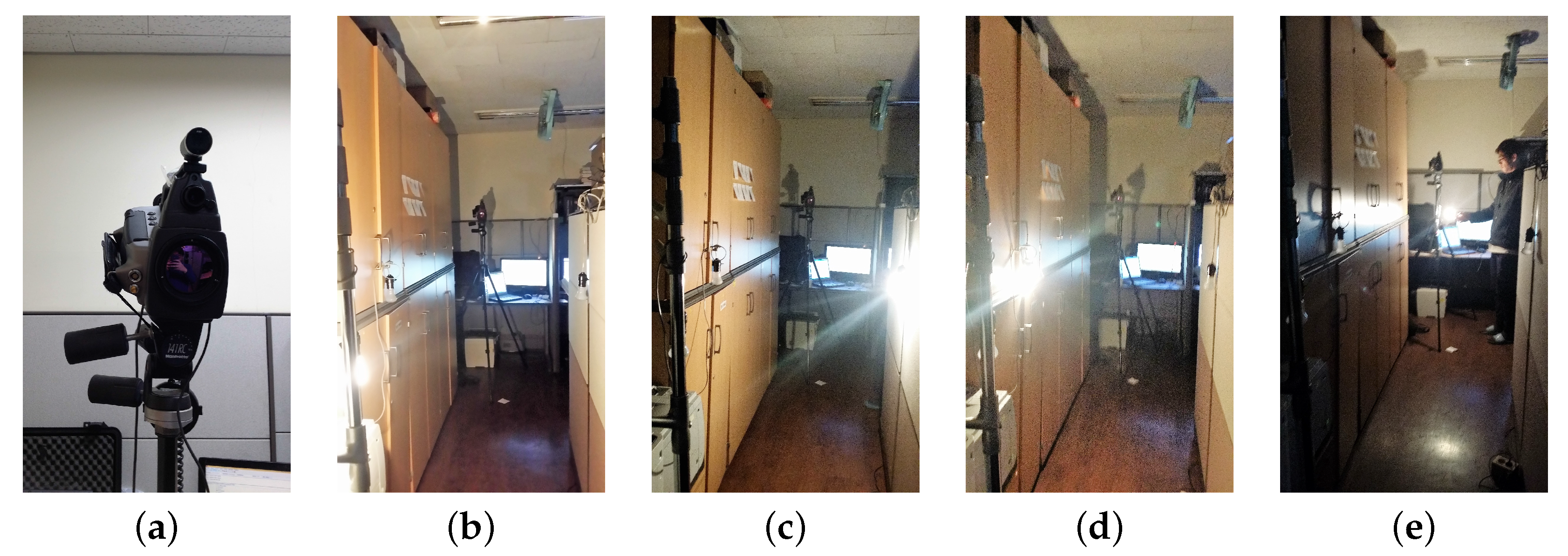

4.1. Experimental Environment

4.1.1. Hardware and Software

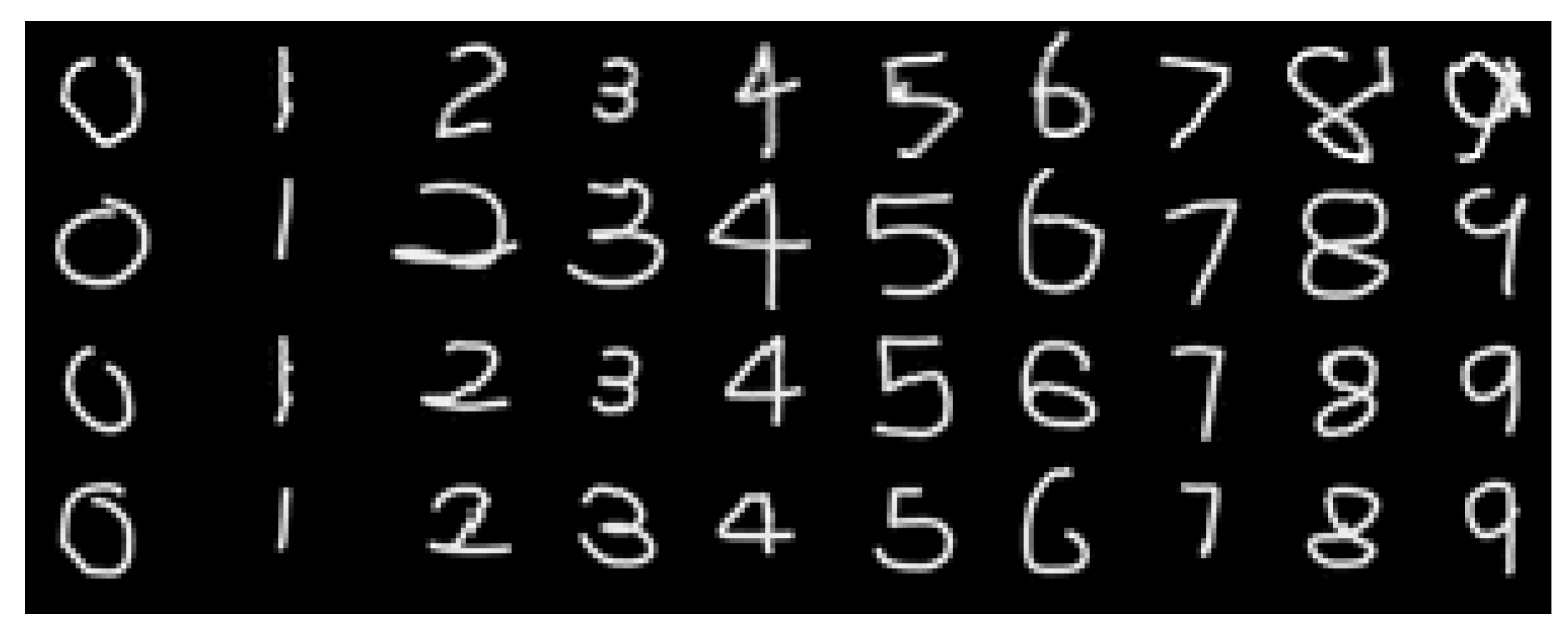

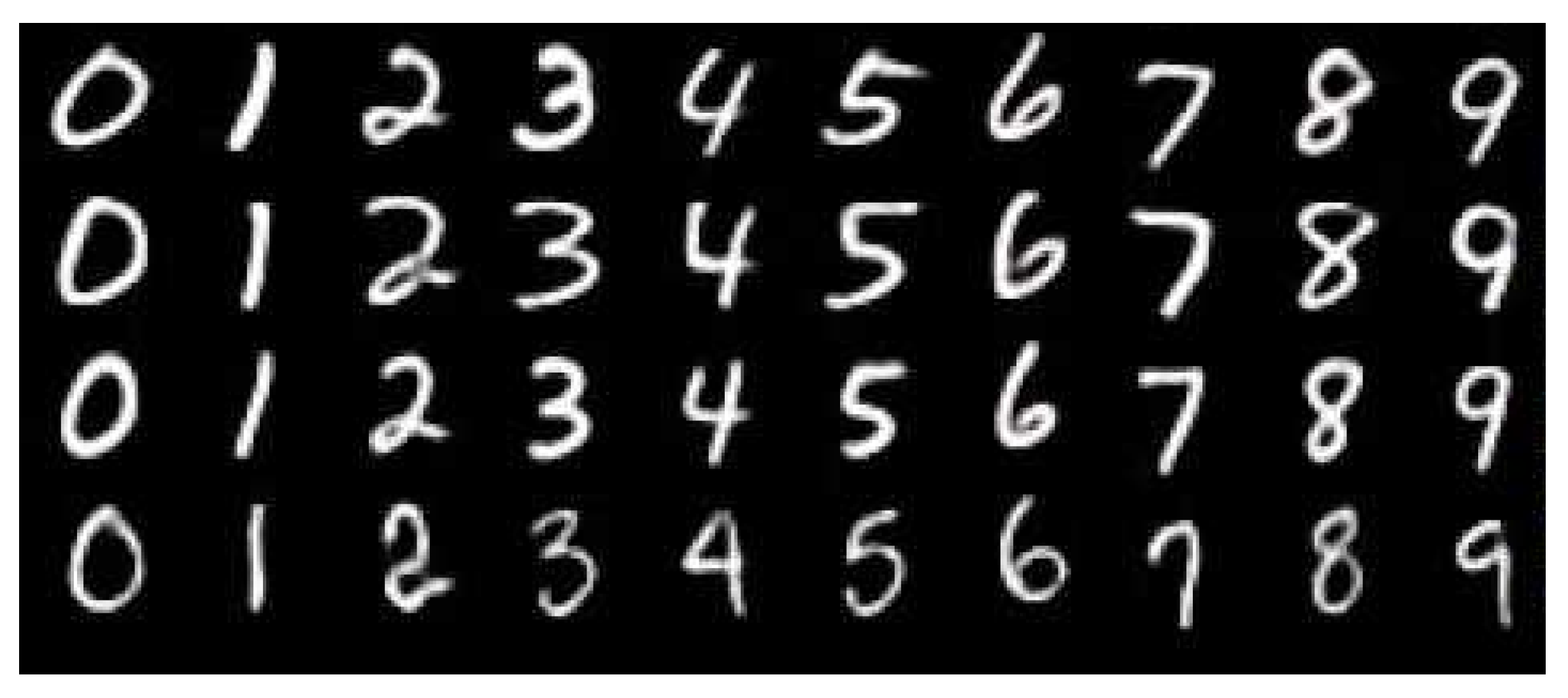

4.1.2. Database

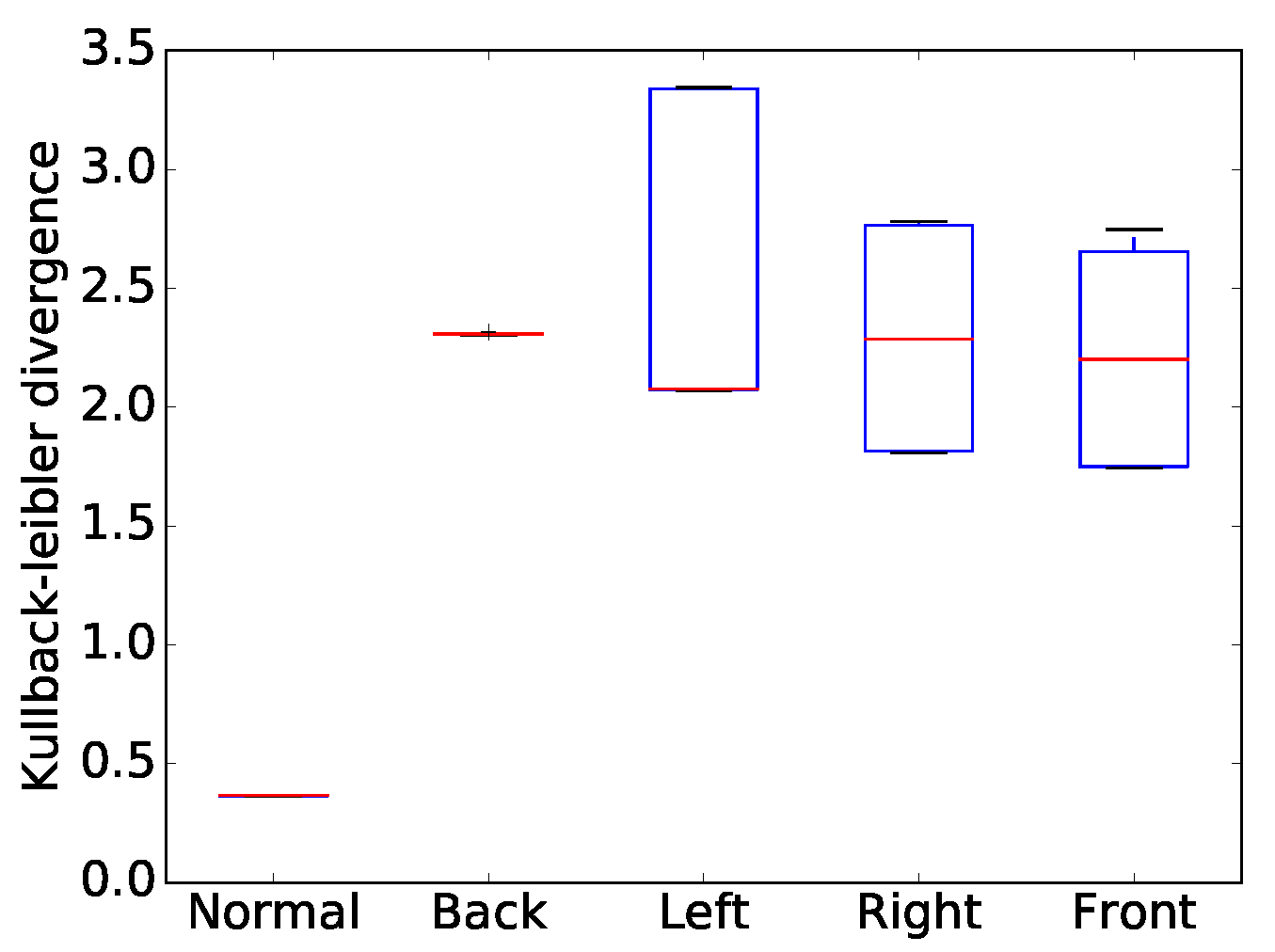

4.1.3. Evaluation Metrics

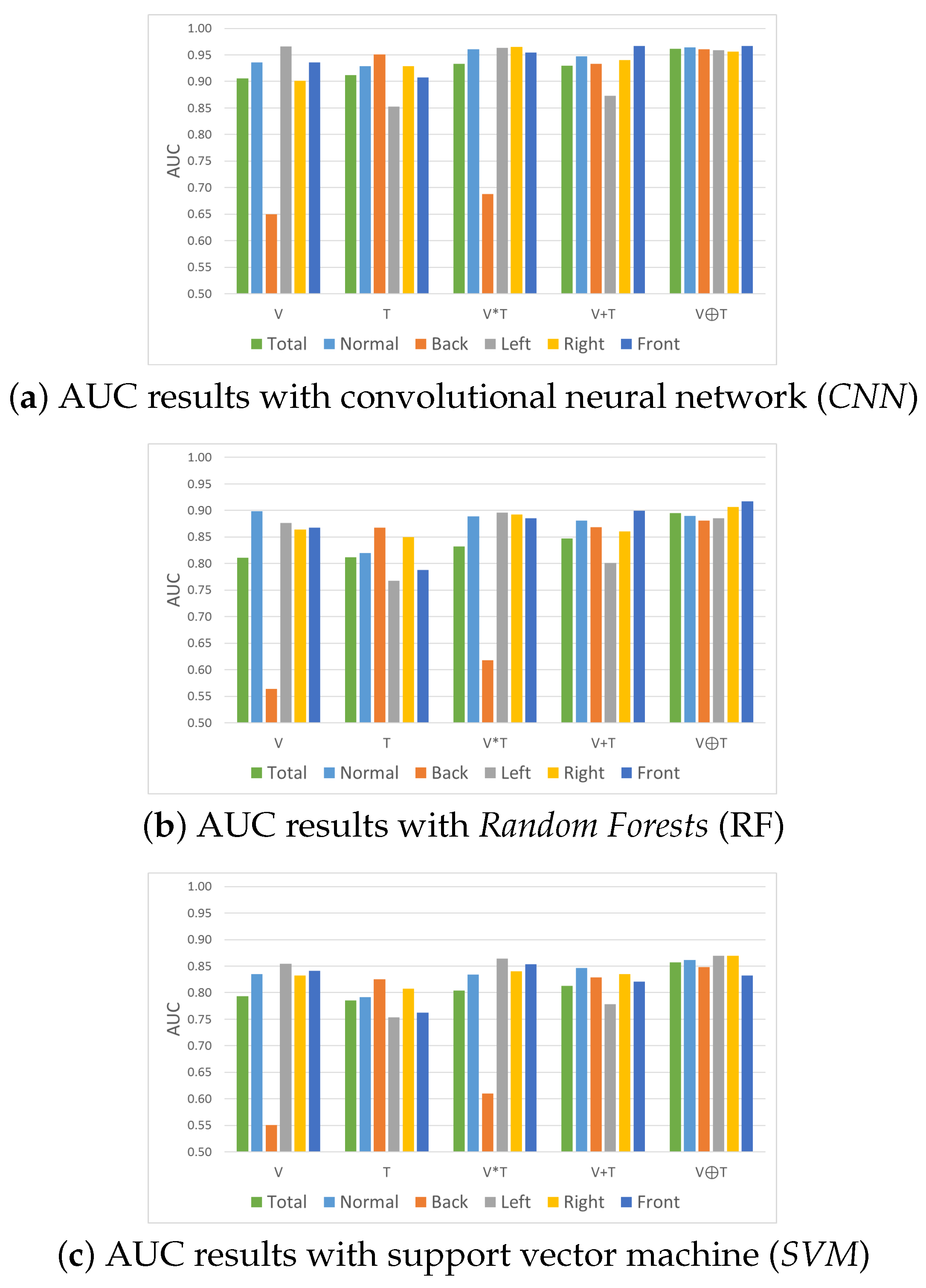

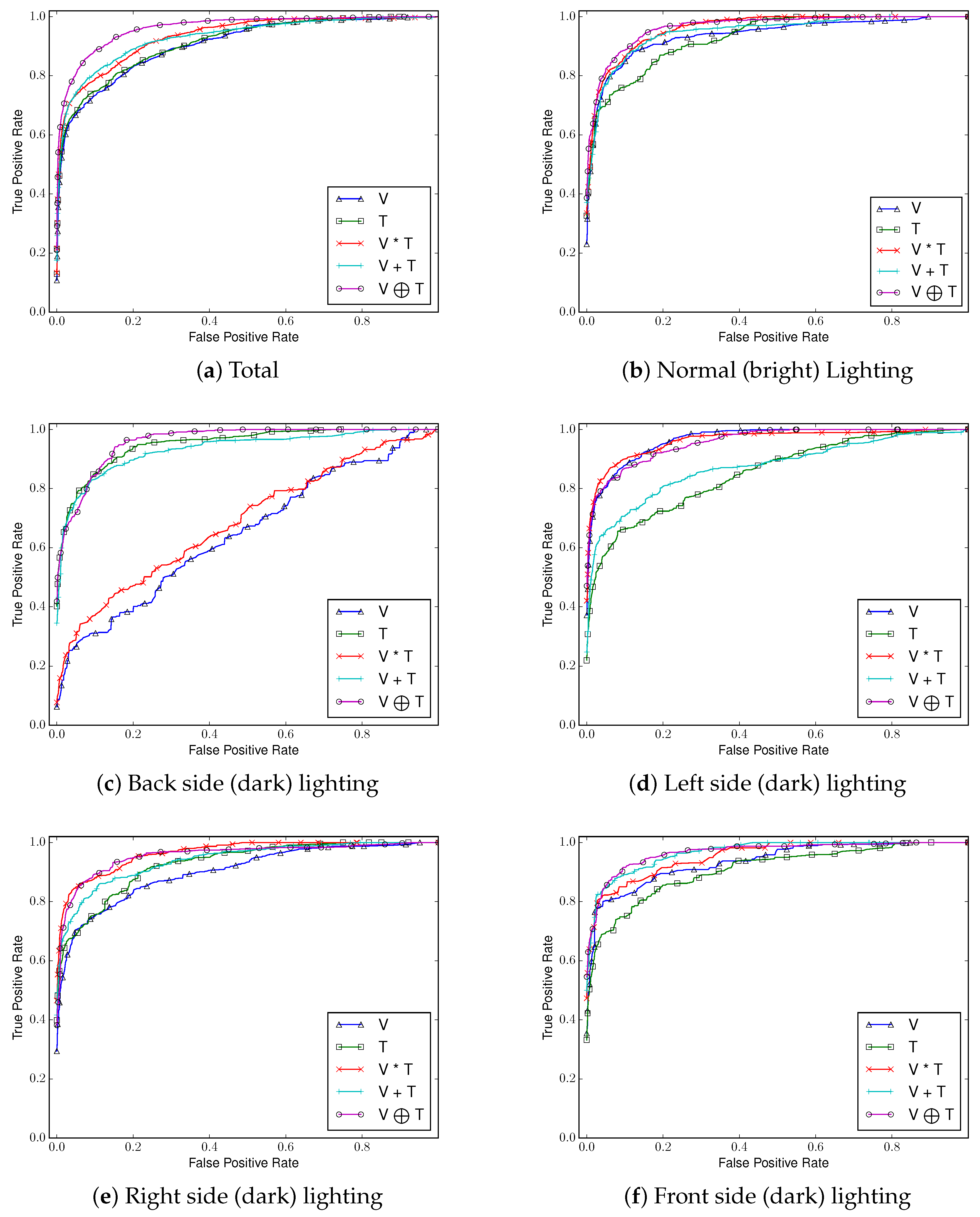

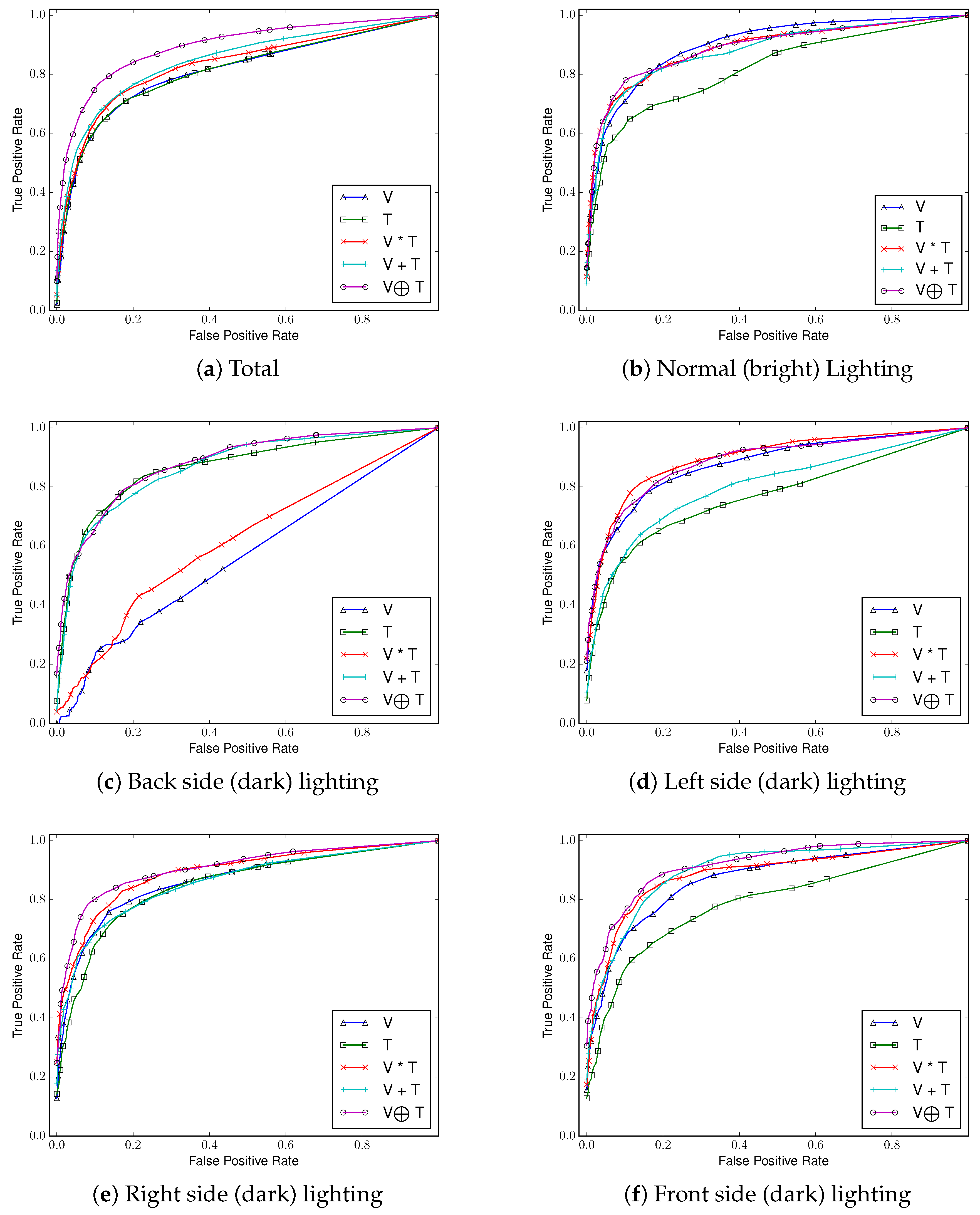

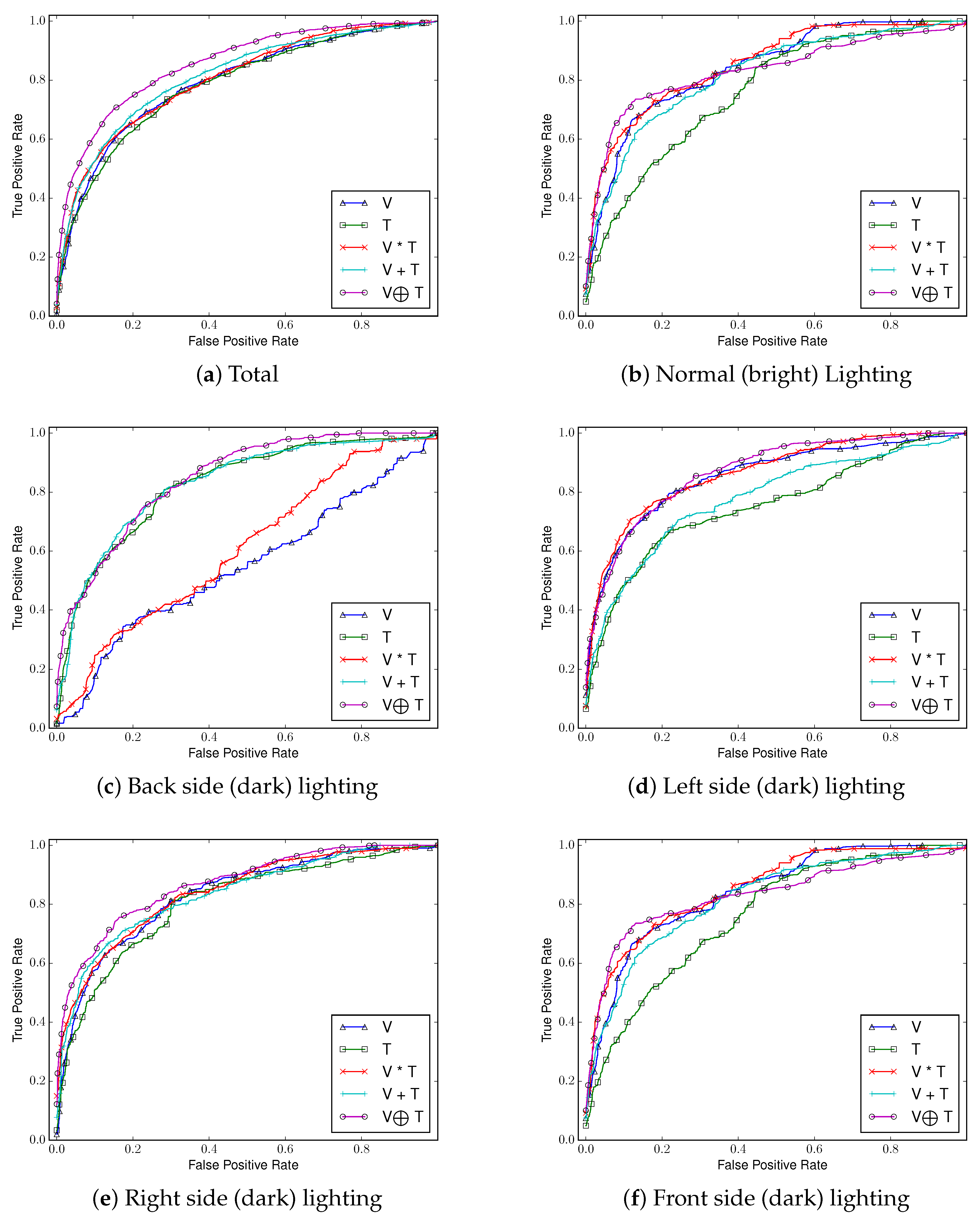

4.2. Experimental Results

4.2.1. Bright Lighting Condition

4.2.2. Dark Lighting Condition

Back Side Lighting

Left or Right Side Lighting

Front Side Lighting

Comparing ROC Curves and AUCs

Computational Complexity

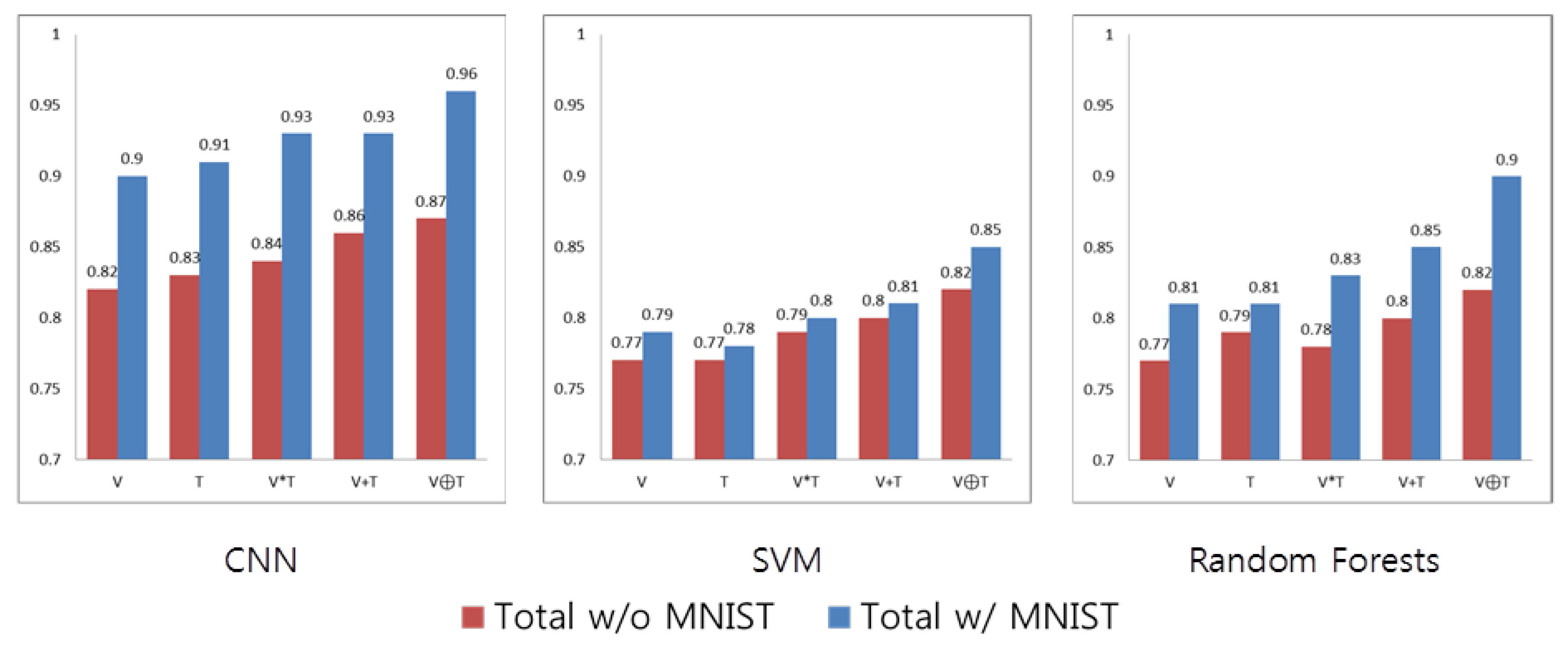

Learning Data

5. Discussion

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Stage | Operation | Feature |
|---|---|---|
| 1st stage | convolution with ReLU | |
| 2nd stage | convolution with ReLU | |
| Densely connected stage | ReLU | 1024 |
| Decision stage | Softmax | 10 |
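The last two rows of the stage table can be sketched as a forward pass: a densely connected ReLU stage with 1024 units followed by a softmax decision stage with 10 outputs. This is only a sketch under assumptions. The input feature length `n_features`, the random weights, and the function names are hypothetical, and the two convolution stages are omitted because their sizes are not given in the table.

```python
import numpy as np


def relu(x):
    """Element-wise rectified linear unit."""
    return np.maximum(x, 0.0)


def softmax(z):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def classify(features, w_dense, b_dense, w_out, b_out):
    """Densely connected stage (ReLU, 1024 units) then decision stage (softmax, 10 classes)."""
    hidden = relu(features @ w_dense + b_dense)  # shape (n, 1024)
    return softmax(hidden @ w_out + b_out)       # shape (n, 10)


rng = np.random.default_rng(0)
n_features = 256  # hypothetical length of the flattened convolutional features
w_dense = rng.normal(0.0, 0.01, size=(n_features, 1024))
b_dense = np.zeros(1024)
w_out = rng.normal(0.0, 0.01, size=(1024, 10))
b_out = np.zeros(10)

batch = rng.normal(size=(4, n_features))  # four placeholder feature vectors
probs = classify(batch, w_dense, b_dense, w_out, b_out)
predicted = probs.argmax(axis=1)  # index of the most probable class per sample
```

Each row of `probs` is a probability distribution over the 10 classes. In a trained network the weights would come from the training phase rather than a random generator.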

| Method | | Phase | V | T | V⨂T | | |
|---|---|---|---|---|---|---|---|
| Tracking | | | 150.1 | 159.3 | 155.4 | 172.9 | 222.7 |
| Recognition | CNN | Training | 11,316,850 | | | | |
| | | Testing | 9.39 | 9.38 | 9.35 | 9.58 | 9.43 |
| | Random Forests | Training | 205,833 | | | | |
| | | Testing | 186.55 | 186.77 | 185.97 | 185.91 | 186.77 |
| | SVM | Training | 3,085,456 | | | | |
| | | Testing | 127.99 | 128.37 | 128.60 | 128.36 | 128.47 |

© 2017 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Kim, S.; Ban, Y.; Lee, S. Tracking and Classification of In-Air Hand Gesture Based on Thermal Guided Joint Filter. Sensors 2017, 17, 166. https://doi.org/10.3390/s17010166
