Road Lane Detection by Discriminating Dashed and Solid Road Lanes Using a Visible Light Camera Sensor

Abstract

1. Introduction

2. Related Works


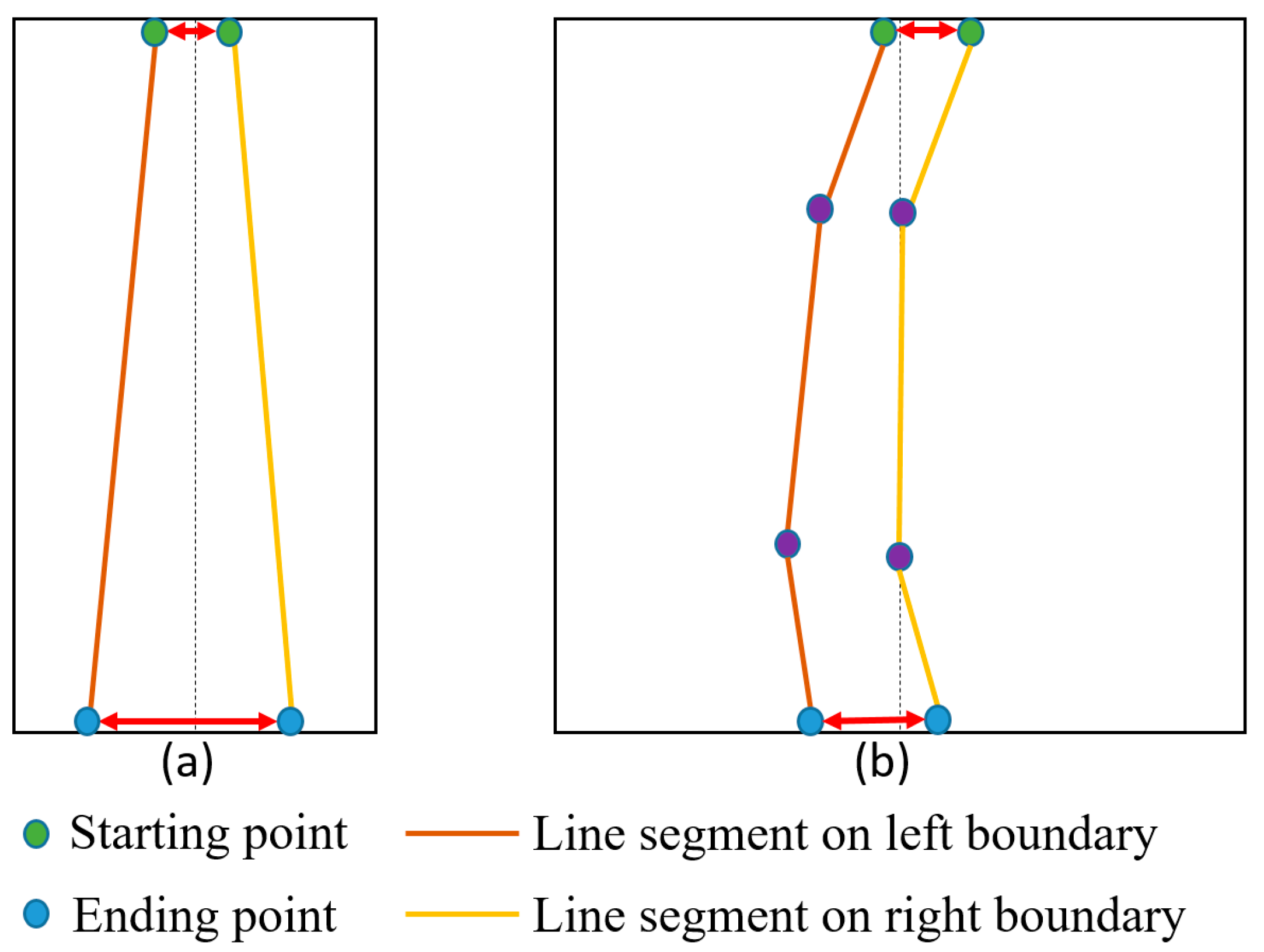

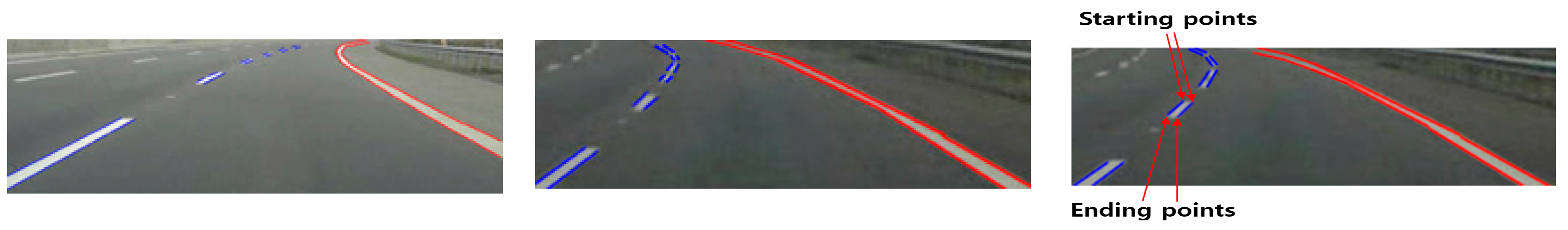

- Most previous studies detect only the centerline between the left and right boundaries of a road lane. In contrast, our method accurately detects both the left and right boundaries of a road lane.


- Most previous studies detect the starting and ending positions of a road lane without discriminating between dashed and solid lanes. Other studies only classify the type of road lane (dashed, solid, dashed-solid, solid-dashed, or double solid) without detecting its starting and ending positions. In contrast, our method detects the starting and ending positions of a lane while also discriminating between dashed and solid lanes.

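The bullet above combines two tasks: discriminating dashed from solid lanes, and recovering where each lane starts and ends. The sketch below illustrates both. It labels one lane as dashed or solid and returns the starting and ending rows of each painted run.

It assumes that the segments of a single lane have already been grouped and reduced to their vertical extents. `Segment`, `classify_lane`, and the gap threshold `gap_thr` are hypothetical names. The gap rule is an illustrative stand-in, not the criterion used in this paper.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Segment:
    """Vertical extent of one lane-marking segment (image rows, y grows downward)."""
    y_top: float     # row of the upper end point
    y_bottom: float  # row of the lower end point


def classify_lane(segments: List[Segment], gap_thr: float) -> Tuple[str, List[Tuple[float, float]]]:
    """Label one lane 'dashed' or 'solid' and return its (start_row, end_row) runs.

    Hypothetical rule: segments separated by a vertical gap of at most gap_thr
    are merged into one continuous run; a larger gap starts a new dash.
    """
    if not segments:
        return "none", []
    ordered = sorted(segments, key=lambda s: s.y_top)
    runs = [[ordered[0].y_top, ordered[0].y_bottom]]
    for seg in ordered[1:]:
        if seg.y_top - runs[-1][1] > gap_thr:
            runs.append([seg.y_top, seg.y_bottom])        # real gap: a new dash starts
        else:
            runs[-1][1] = max(runs[-1][1], seg.y_bottom)  # small gap: extend the current run
    label = "dashed" if len(runs) > 1 else "solid"
    return label, [(start, end) for start, end in runs]


if __name__ == "__main__":
    dashes = [Segment(100, 140), Segment(180, 220), Segment(260, 300)]
    print(classify_lane(dashes, gap_thr=20))
    print(classify_lane([Segment(100, 200), Segment(205, 300)], gap_thr=20))
```

Under this rule, a lane with only one visible segment is labeled "solid". A practical version might therefore also weigh segment length or information from previous frames.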

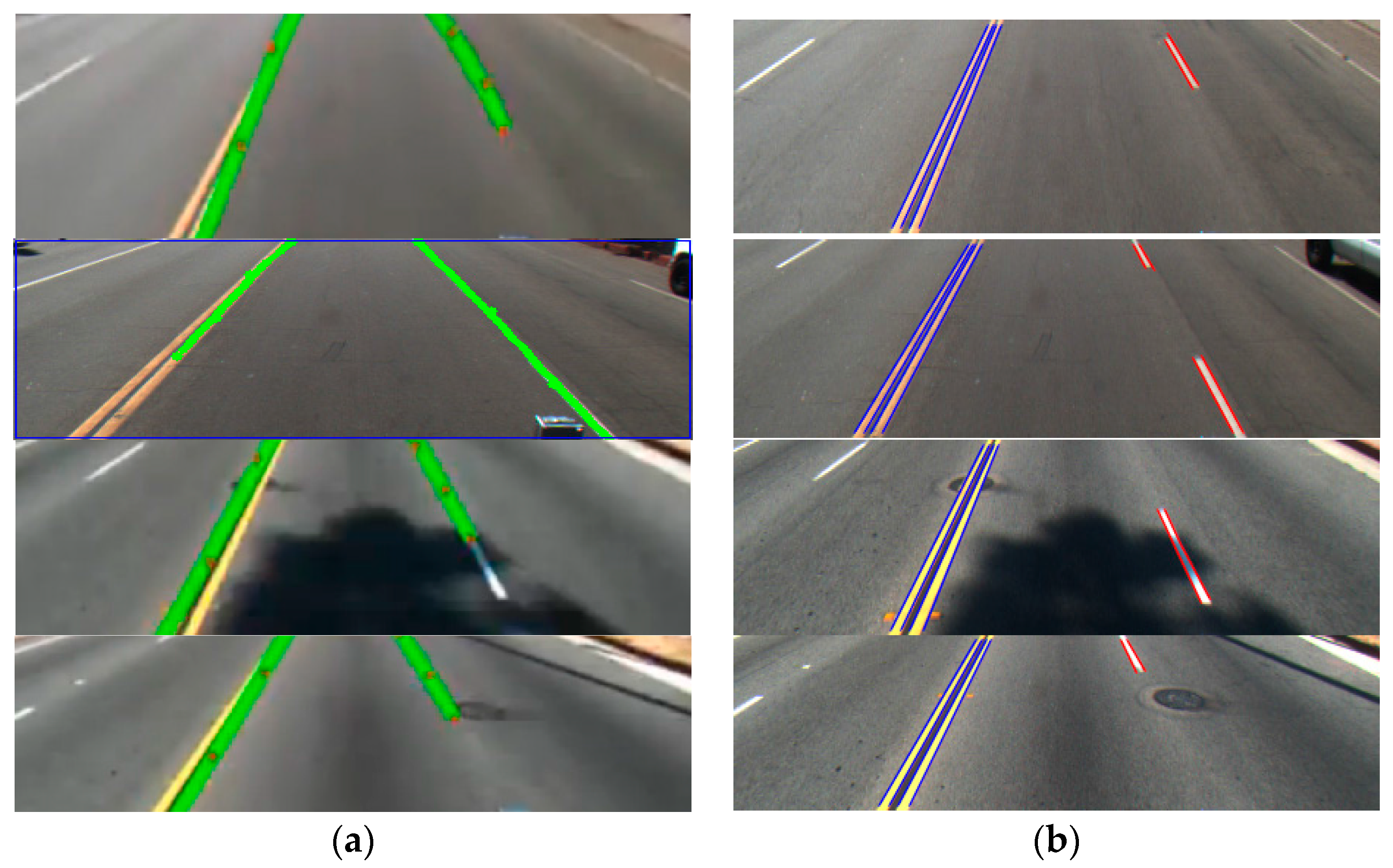

- We remove incorrect line segments based on their angles, and we merge line segments according to the distance between them. To detect curved lanes, the angular condition is adaptively changed within the upper area of the ROI, based on the tracked angular changes of the line segments.

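The angle test described above can be sketched as follows. This is an illustration under stated assumptions, not the paper's procedure.

- The ROI is split at a hypothetical row `y_split`.
- Segments in the lower part must fall inside a fixed orientation range [`lo`, `hi`].
- Segments in the upper part must stay within `tol` degrees of the mean orientation of the surviving lower segments. This lets a gradually bending lane pass.

Call the function once per lane side. Averaging the orientations of the left and right lanes together would give a meaningless reference angle. Merging by inter-distance is not covered here.

```python
import math
from typing import List, Tuple

Seg = Tuple[float, float, float, float]  # (x1, y1, x2, y2), image coordinates, y grows downward


def angle_deg(seg: Seg) -> float:
    """Orientation of a segment in degrees, folded into [0, 180)."""
    x1, y1, x2, y2 = seg
    return math.degrees(math.atan2(y2 - y1, x2 - x1)) % 180.0


def angle_gap(a: float, b: float) -> float:
    """Smallest difference between two orientations given in [0, 180)."""
    d = abs(a - b) % 180.0
    return min(d, 180.0 - d)


def filter_by_angle(segs: List[Seg], lo: float, hi: float,
                    y_split: float, tol: float) -> List[Seg]:
    """Keep plausible lane segments for ONE lane side (hypothetical parameters).

    Lower ROI (lowest end point at or below y_split): fixed range lo..hi.
    Upper ROI (segment entirely above y_split): within tol of the mean
    orientation of the segments kept in the lower ROI.
    """
    lower = [s for s in segs if max(s[1], s[3]) >= y_split]
    upper = [s for s in segs if max(s[1], s[3]) < y_split]
    kept_lower = [s for s in lower if lo <= angle_deg(s) <= hi]
    if kept_lower:
        tracked = sum(angle_deg(s) for s in kept_lower) / len(kept_lower)
        kept_upper = [s for s in upper if angle_gap(angle_deg(s), tracked) <= tol]
    else:
        # Nothing to track from: fall back to the fixed range in the upper ROI too.
        kept_upper = [s for s in upper if lo <= angle_deg(s) <= hi]
    return kept_lower + kept_upper


if __name__ == "__main__":
    A = (100, 400, 150, 300)  # lower ROI, about 116.6 deg: inside the fixed range
    B = (0, 350, 200, 355)    # lower ROI, nearly horizontal: rejected
    C = (150, 240, 190, 180)  # upper ROI, about 123.7 deg: close to the tracked angle
    D = (300, 200, 310, 100)  # upper ROI, about 95.7 deg: too far from the tracked angle
    print(filter_by_angle([A, B, C, D], lo=20, hi=160, y_split=250, tol=15))
```

Segment D would pass the fixed range [20, 160]. It is rejected in the upper ROI because it deviates by about 21° from the tracked orientation. This shows how the adaptive condition tightens the test where curves begin.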

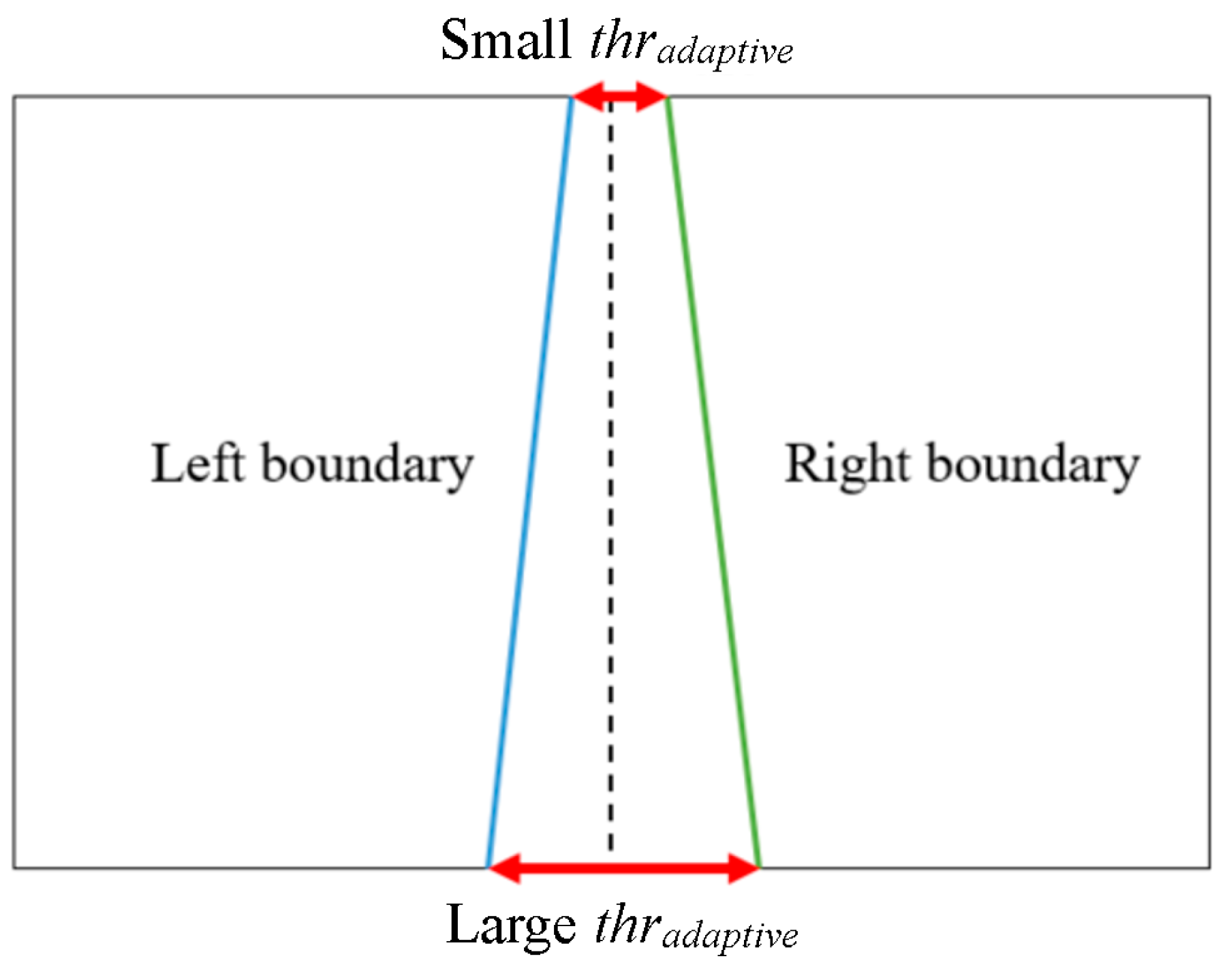

- Using a perspective camera model, we determine an adaptive distance threshold and use it to detect the final line segments of the left and right boundaries of a road lane.
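A perspective camera model explains why such a distance threshold has to vary with the image row. Assume a flat road and a pinhole camera at height h whose optical axis is parallel to the road. Let y_h be the horizon row and f the focal length in pixels.

- A road point at depth Z projects to row y = y_h + f·h/Z.
- A road-plane width W at that depth therefore spans f·W/Z = W·(y − y_h)/h pixels.
- The focal length cancels, and the threshold grows linearly below the horizon.

The sketch below applies this relation to check whether two edge points on the same row could be the left and right boundaries of one marking. The function names, the `margin` factor, and the example values (0.15 m marking width, 1.5 m camera height) are illustrative assumptions, not parameters from the paper.

```python
def adaptive_threshold(y: float, y_horizon: float, width_m: float,
                       cam_height_m: float, margin: float = 1.5) -> float:
    """Pixel threshold at image row y for a road-plane width of width_m metres.

    Flat road, pinhole camera with its optical axis parallel to the road:
    a width W at depth Z spans f*W/Z = W*(y - y_horizon)/h pixels, so the
    focal length f is not needed. margin is a hypothetical tolerance factor.
    """
    if y <= y_horizon:
        return 0.0  # at or above the horizon no road surface is visible
    return margin * width_m * (y - y_horizon) / cam_height_m


def is_boundary_pair(x_left: float, x_right: float, y: float, y_horizon: float,
                     width_m: float, cam_height_m: float) -> bool:
    """True if two edge points on row y are close enough to bound one marking."""
    gap = x_right - x_left
    return 0 < gap <= adaptive_threshold(y, y_horizon, width_m, cam_height_m)


if __name__ == "__main__":
    # Illustrative values: horizon at row 200, 0.15 m marking, camera 1.5 m above the road.
    for row in (250, 300, 400):
        print(row, round(adaptive_threshold(row, 200, 0.15, 1.5), 2))
    print(is_boundary_pair(100, 125, 400, 200, 0.15, 1.5))
```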

3. Proposed Method

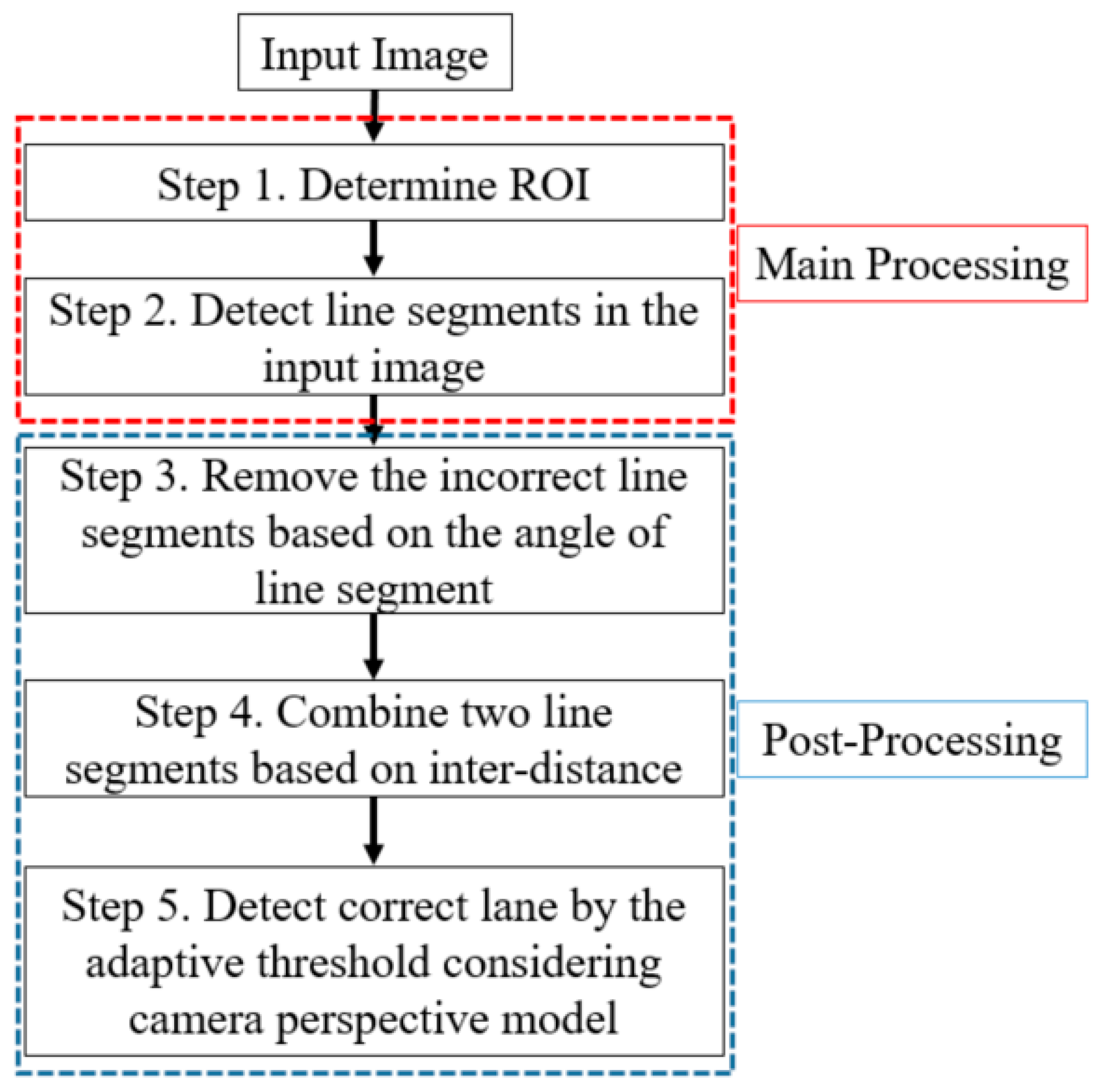

3.1. Proposed Method

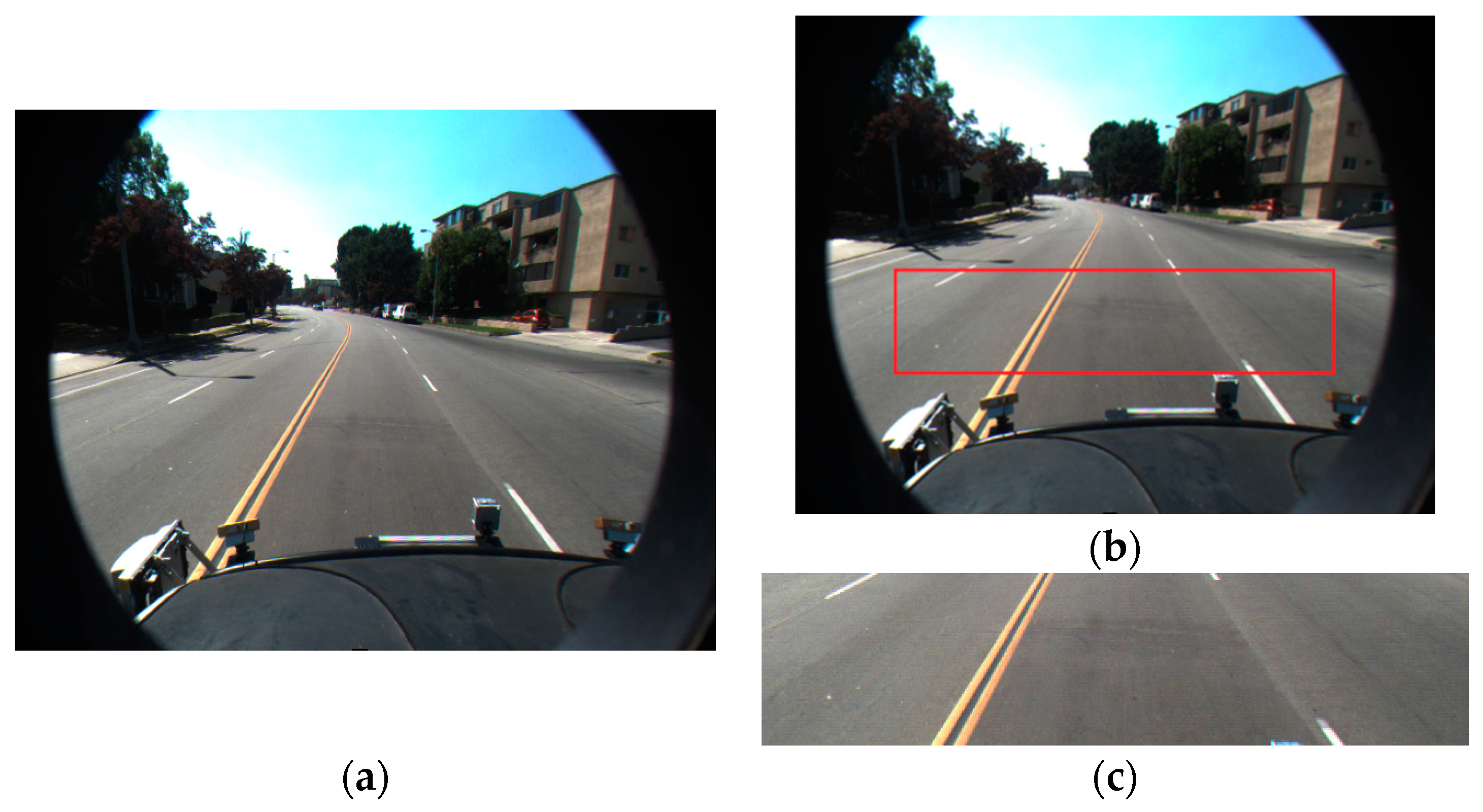

3.2. Determination of ROI

3.3. Lane Detection by Locating Line Segments

3.4. Correct Lane Detection Based on Post-Processing

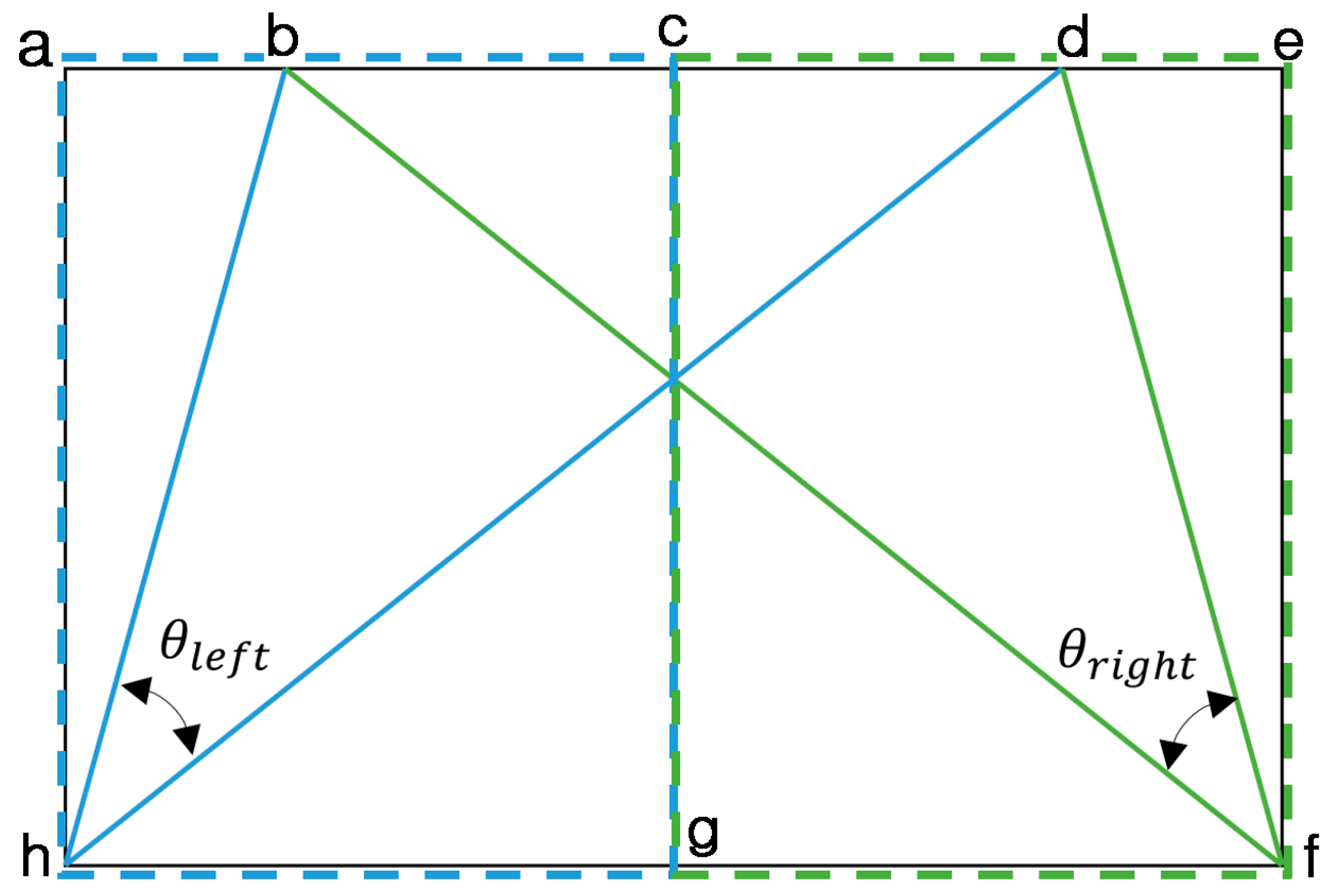

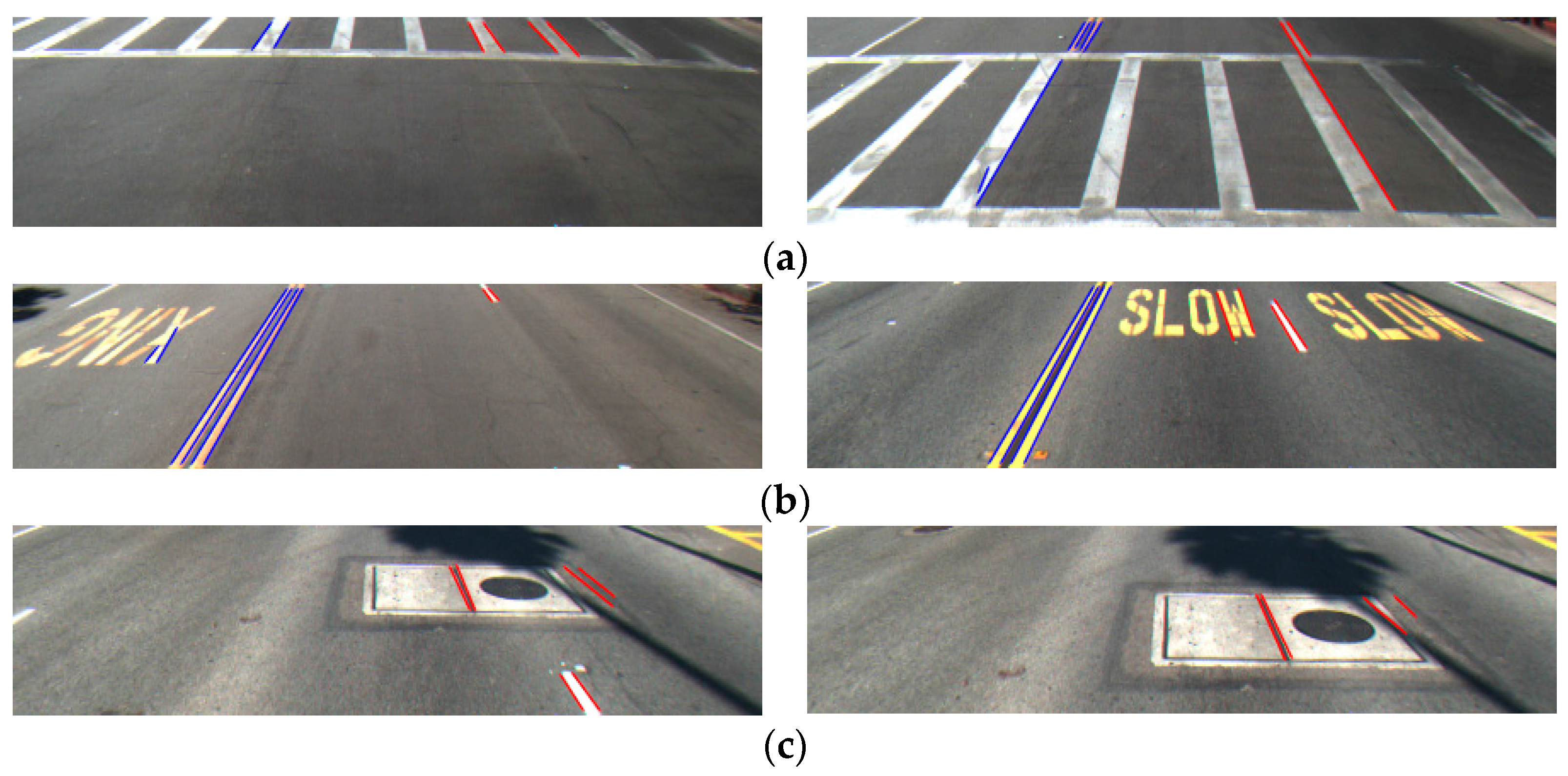

3.4.1. Eliminating Incorrect Line Segments Based on Angle

3.4.2. Combining the Pieces of Line Segments

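| Algorithm 1. Line Combination Algorithm. |
|---|
| Input: Set of line segments $S$ |
| Output: Set of combined lines |
| While (line $I \in S$) |
| { |
| Get starting point $(x_s^I, y_s^I)$, ending point $(x_e^I, y_e^I)$, and angle $\theta_I$ |
| While ((line $J \neq I$) and ($J \in S$)) |
| { |
| Get starting point $(x_s^J, y_s^J)$, ending point $(x_e^J, y_e^J)$, and angle $\theta_J$ |
| If ($y_s^I < y_s^J$) |
| { |
| $d_1 = d\big((x_e^I, y_e^I), (x_s^J, y_s^J)\big)$ |
| If ($d_1 < thr_{dst}$) |
| { |
| $\Delta\theta = \lvert \theta_I - \theta_J \rvert$ |
| If ($\Delta\theta \le thr_{ang}$) |
| { |
| Define a new straight line $K$ having $(x_s^I, y_s^I)$ and $(x_e^J, y_e^J)$ // Case 1 of Figure 8 |
| Remove lines $I$ and $J$ |
| } |
| Else if ($\Delta\theta > thr_{ang}$) |
| { |
| Define a new curve line having $(x_s^I, y_s^I)$, $(x_e^I, y_e^I)$, and $(x_e^J, y_e^J)$ // Case 3 of Figure 8 |
| } |
| } |
| } |
| Else if ($y_s^I \ge y_s^J$) |
| { |
| $d_2 = d\big((x_e^J, y_e^J), (x_s^I, y_s^I)\big)$ |
| If ($d_2 < thr_{dst}$) |
| { |
| $\Delta\theta = \lvert \theta_I - \theta_J \rvert$ |
| If ($\Delta\theta \le thr_{ang}$) |
| { |
| Define a new straight line $K$ having $(x_s^J, y_s^J)$ and $(x_e^I, y_e^I)$ // Case 2 of Figure 8 |
| Remove lines $I$ and $J$ |
| } |
| Else if ($\Delta\theta > thr_{ang}$) |
| { |
| Define a new curve line having $(x_s^J, y_s^J)$, $(x_e^J, y_e^J)$, and $(x_e^I, y_e^I)$ // Case 3 of Figure 8 |
| } |
| } |
| } |
| } |
| } |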
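Algorithm 1 can be sketched in Python as follows. This is a simplified reading under stated assumptions, not the authors' code.

- Each segment is oriented so that its starting point is its upper end (smaller image row).
- Two segments are ordered by the rows of their starting points.
- A hypothetical angle threshold `thr_ang` is used alongside `thr_dst`.
- All straight merges (Cases 1 and 2) run until none remain. Only then are three-point curve candidates (Case 3) recorded among the surviving lines, whereas Algorithm 1 handles both inside the same loop.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class Line:
    start: Point  # upper end point (smaller row; image y grows downward), an assumption
    end: Point    # lower end point

    @property
    def angle(self) -> float:
        """Orientation in degrees, folded into [0, 180)."""
        (x1, y1), (x2, y2) = self.start, self.end
        return math.degrees(math.atan2(y2 - y1, x2 - x1)) % 180.0


def _order(a: Line, b: Line) -> Tuple[Line, Line]:
    """Return (upper, lower): a first if it starts higher (Case 1), else b first (Case 2)."""
    return (a, b) if a.start[1] < b.start[1] else (b, a)


def _angle_diff(a: Line, b: Line) -> float:
    """Smallest orientation difference between two lines, in degrees."""
    d = abs(a.angle - b.angle) % 180.0
    return min(d, 180.0 - d)


def combine_lines(S: List[Line], thr_dst: float, thr_ang: float):
    """Return (straight_lines, curve_candidates) after merging segment pieces."""
    lines = list(S)
    merged = True
    while merged:  # every merge removes one line, so this loop terminates
        merged = False
        for i in range(len(lines)):
            for j in range(i + 1, len(lines)):
                upper, lower = _order(lines[i], lines[j])
                close = math.dist(upper.end, lower.start) < thr_dst
                if close and _angle_diff(upper, lower) <= thr_ang:
                    K = Line(upper.start, lower.end)  # Cases 1 and 2: new straight line K
                    lines = [L for k, L in enumerate(lines) if k not in (i, j)] + [K]
                    merged = True
                    break
            if merged:
                break

    curves: List[Tuple[Point, Point, Point]] = []
    for i in range(len(lines)):
        for j in range(i + 1, len(lines)):
            upper, lower = _order(lines[i], lines[j])
            close = math.dist(upper.end, lower.start) < thr_dst
            if close and _angle_diff(upper, lower) > thr_ang:
                # Case 3: curve candidate through three points
                curves.append((upper.start, upper.end, lower.end))
    return lines, curves


if __name__ == "__main__":
    pieces = [Line((100, 100), (110, 200)), Line((112, 210), (122, 310))]
    print(combine_lines(pieces, thr_dst=15, thr_ang=10))
```

Finishing the straight merges before looking for curves avoids recording curve candidates between pieces that are later fused into a single straight line.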

3.4.3. Detecting the Left and Right Boundaries Based on Adaptive Threshold

4. Experimental Results

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Xu, H.; Wang, X.; Huang, H.; Wu, K.; Fang, Q. A Fast and Stable Lane Detection Method Based on B-Spline Curve. In Proceedings of the IEEE 10th International Conference on Computer-Aided Industrial Design & Conceptual Design, Wenzhou, China, 26–29 November 2009; pp. 1036–1040.
2. Li, W.; Gong, X.; Wang, Y.; Liu, P. A Lane Marking Detection and Tracking Algorithm Based on Sub-Regions. In Proceedings of the International Conference on Informative and Cybernetics for Computational Social Systems, Qingdao, China, 9–10 October 2014; pp. 68–73.
3. Wang, Y.; Teoh, E.K.; Shen, D. Lane detection and tracking using B-Snake. Image Vis. Comput. 2004, 22, 269–280.
4. Özcan, B.; Boyraz, P.; Yiğit, C.B. A MonoSLAM Approach to Lane Departure Warning System. In Proceedings of the IEEE/ASME International Conference on Advanced Intelligent Mechatronics, Besançon, France, 8–11 July 2014; pp. 640–645.
5. Tan, H.; Zhou, Y.; Zhu, Y.; Yao, D.; Li, K. A Novel Curve Lane Detection Based on Improved River Flow and RANSA. In Proceedings of the International Conference on Intelligent Transportation Systems, Qingdao, China, 8–11 October 2014; pp. 133–138.
6. Zhou, S.; Jiang, Y.; Xi, J.; Gong, J.; Xiong, G.; Chen, H. A Novel Lane Detection Based on Geometrical Model and Gabor Filter. In Proceedings of the IEEE Intelligent Vehicles Symposium, San Diego, CA, USA, 21–24 June 2010; pp. 59–64.
7. Chen, Q.; Wang, H. A Real-Time Lane Detection Algorithm Based on a Hyperbola-Pair Model. In Proceedings of the Intelligent Vehicles Symposium, Tokyo, Japan, 13–15 June 2006; pp. 510–515.
8. Jung, C.R.; Kelber, C.R. A Robust Linear-Parabolic Model for Lane Following. In Proceedings of the 17th Brazilian Symposium on Computer Graphics and Image Processing, Curitiba, Brazil, 17–20 October 2004; pp. 72–79.
9. Litkouhi, B.B.; Lee, A.Y.; Craig, D.B. Estimator and Controller Design for Lanetrak, a Vision-Based Automatic Vehicle Steering System. In Proceedings of the 32nd Conference on Decision and Control, San Antonio, TX, USA, 15–17 December 1993; pp. 1868–1873.
10. Shin, J.; Lee, E.; Kwon, K.; Lee, S. Lane Detection Algorithm Based on Top-View Image Using Random Sample Consensus Algorithm and Curve Road Model. In Proceedings of the 6th International Conference on Ubiquitous and Future Networks, Shanghai, China, 8–11 July 2014; pp. 1–2.
11. Lu, W.; Rodriguez, F.S.A.; Seignez, E.; Reynaud, R. Monocular Multi-Kernel Based Lane Marking Detection. In Proceedings of the 4th Annual International Conference on Cyber Technology in Automation, Control, and Intelligent Systems, Hong Kong, China, 4–7 June 2014; pp. 123–128.
12. Aly, M. Real Time Detection of Lane Markers in Urban Streets. In Proceedings of the IEEE Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008; pp. 7–12.
13. Yoo, H.; Yang, U.; Sohn, K. Gradient-enhancing conversion for illumination-robust lane detection. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1083–1094.
14. Li, H.; Feng, M.; Wang, X. Inverse Perspective Mapping Based Urban Road Markings Detection. In Proceedings of the International Conference on Cloud Computing and Intelligent Systems, Hangzhou, China, 30 October–1 November 2013; pp. 1178–1182.
15. Chiu, K.-Y.; Lin, S.-F. Lane Detection Using Color-Based Segmentation. In Proceedings of the Intelligent Vehicles Symposium, Las Vegas, NV, USA, 6–8 June 2005; pp. 706–711.
16. Deng, J.; Kim, J.; Sin, H.; Han, Y. Fast lane detection based on the B-spline fitting. Int. J. Res. Eng. Tech. 2013, 2, 134–137.
17. Mu, C.; Ma, X. Lane detection based on object segmentation and piecewise fitting. TELKOMNIKA Indones. J. Electr. Eng. 2014, 12, 3491–3500.
18. Chang, C.-Y.; Lin, C.-H. An Efficient Method for Lane-Mark Extraction in Complex Conditions. In Proceedings of the International Conference on Ubiquitous Intelligence and Computing and International Conference on Autonomic and Trusted Computing, Fukuoka, Japan, 4–7 September 2012; pp. 330–336.
19. Ding, D.; Lee, C.; Lee, K.-Y. An Adaptive Road ROI Determination Algorithm for Lane Detection. In Proceedings of the TENCON 2013–2013 IEEE Region 10 Conference, Xi’an, China, 22–25 October 2013; pp. 1–4.
20. Chen, M.; Jochem, T.; Pomerleau, D. AURORA: A Vision-Based Roadway Departure Warning System. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems 95 Human Robot Interaction and Cooperative Robots, Pittsburgh, PA, USA, 5–9 August 1995; pp. 243–248.
21. Benligiray, B.; Topal, C.; Akinlar, C. Video-Based Lane Detection Using a Fast Vanishing Point Estimation Method. In Proceedings of the IEEE International Symposium on Multimedia, Irvine, CA, USA, 10–12 December 2012; pp. 348–351.
22. Jingyu, W.; Jianmin, D. Lane Detection Algorithm Using Vanishing Point. In Proceedings of the International Conference on Machine Learning and Cybernetics, Tianjin, China, 14–17 July 2013; pp. 735–740.
23. Son, J.; Yoo, H.; Kim, S.; Sohn, K. Real-time illumination invariant lane detection for lane departure warning system. Expert Syst. Appl. 2015, 42, 1816–1824.
24. Paula, M.B.D.; Jung, C.R. Automatic detection and classification of road lane markings using onboard vehicular cameras. IEEE Trans. Intell. Transp. Syst. 2015, 16, 3160–3169.
25. Li, Z.; Cai, Z.-X.; Xie, J.; Ren, X.-P. Road Markings Extraction Based on Threshold Segmentation. In Proceedings of the International Conference on Fuzzy Systems and Knowledge Discovery, Chongqing, China, 29–31 May 2012; pp. 1924–1928.
26. Von Gioi, R.G.; Jakubowicz, J.; Morel, J.-M.; Randall, G. LSD: A line segment detector. Image Process. Line 2012, 2, 35–55.
27. Von Gioi, R.G.; Jakubowicz, J.; Morel, J.-M.; Randall, G. LSD: A fast line segment detector with a false detection control. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 722–732.
28. Burns, J.B.; Hanson, A.R.; Riseman, E.M. Extracting straight lines. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 425–455.
29. Desolneux, A.; Moisan, L.; Morel, J.-M. Meaningful alignments. Int. J. Comput. Vis. 2000, 40, 7–23.
30. Desolneux, A.; Moisan, L.; Morel, J.-M. From Gestalt Theory to Image Analysis—A Probabilistic Approach; Springer: New York, NY, USA, 2007.
31. Curved Lane Detection. Available online: https://www.youtube.com/watch?v=VlH3OEhZnow (accessed on 22 June 2016).
32. Real-Time Lane Detection and Tracking System. Available online: https://www.youtube.com/watch?v=0v8sdPViB1c (accessed on 22 June 2016).
33. Sensitivity and Specificity. Available online: http://en.wikipedia.org/wiki/Sensitivity_and_specificity (accessed on 18 March 2016).
34. F1 Score. Available online: https://en.wikipedia.org/wiki/F1_score (accessed on 20 June 2016).
35. Truong, Q.-B.; Lee, B.-R. New Lane Detection Algorithm for Autonomous Vehicles Using Computer Vision. In Proceedings of the International Conference on Control, Automation and Systems, Seoul, Korea, 14–17 October 2008; pp. 1208–1213.
36. Santiago Lanes Dataset. Available online: http://ral.ing.puc.cl/datasets.htm (accessed on 10 June 2016).

| Category | Model-Based Method | Feature-Based Method: Not Discriminating Dashed and Solid Lanes | Feature-Based Method: Discriminating Dashed and Solid Lanes (Proposed Method) |
|---|---|---|---|
| Methods | | | |
| Advantages | | | |
| Disadvantages | | | |

| Database | #Images | #TP | #FP | #FN | Precision | Recall | F-Measure |
|---|---|---|---|---|---|---|---|
| Cordova 1 | 233 | 1252 | 53 | 92 | 0.96 | 0.93 | 0.94 |
| Cordova 2 | 253 | 734 | 112 | 15 | 0.87 | 0.98 | 0.92 |
| Washington 1 | 175 | 875 | 94 | 64 | 0.90 | 0.93 | 0.91 |
| Washington 2 | 205 | 1180 | 196 | 79 | 0.86 | 0.94 | 0.90 |
| Total | 866 | 4041 | 455 | 250 | 0.90 | 0.94 | 0.92 |
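The precision, recall, and F-measure columns follow the standard definitions:

- precision = TP/(TP + FP)
- recall = TP/(TP + FN)
- F-measure = 2·precision·recall/(precision + recall)

The short script below recomputes these metrics from the table's counts. Its only inputs are the TP, FP, and FN values above. The names `COUNTS`, `prf`, and `total_counts` are illustrative.

```python
from typing import Dict, Tuple

# (TP, FP, FN) per database, copied from the results table
COUNTS: Dict[str, Tuple[int, int, int]] = {
    "Cordova 1": (1252, 53, 92),
    "Cordova 2": (734, 112, 15),
    "Washington 1": (875, 94, 64),
    "Washington 2": (1180, 196, 79),
}


def prf(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall, and F-measure (harmonic mean of precision and recall)."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f_measure = 2 * precision * recall / (precision + recall)
    return precision, recall, f_measure


def total_counts(counts: Dict[str, Tuple[int, int, int]]) -> Tuple[int, ...]:
    """Pool the counts of all databases before computing the overall metrics."""
    return tuple(sum(c[k] for c in counts.values()) for k in range(3))


if __name__ == "__main__":
    for name, c in COUNTS.items():
        p, r, f = prf(*c)
        print(f"{name:13s} P={p:.3f} R={r:.3f} F={f:.3f}")
    p, r, f = prf(*total_counts(COUNTS))
    print(f"{'Total':13s} P={p:.3f} R={r:.3f} F={f:.3f}")
```

Computed from the unrounded ratios, the per-database F-measures are about 0.945, 0.920, 0.917, and 0.896. The table's 0.94 (Cordova 1) and 0.91 (Washington 1) match F-measures computed from the already-rounded precision and recall. The pooled counts give an overall F-measure of about 0.92.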

| Metric | Method | Cordova 1 | Cordova 2 | Washington 1 | Washington 2 |
|---|---|---|---|---|---|
| Precision | Ours | 0.96 | 0.87 | 0.90 | 0.86 |
| | [12] | 0.012 | 0.112 | 0.037 | 0.028 |
| | [35] | 0.553 | 0.389 | 0.423 | 0.440 |
| Recall | Ours | 0.93 | 0.98 | 0.93 | 0.94 |
| | [12] | 0.006 | 0.143 | 0.037 | 0.026 |
| | [35] | 0.512 | 0.402 | 0.407 | 0.430 |
| F-measure | Ours | 0.94 | 0.92 | 0.91 | 0.90 |
| | [12] | 0.008 | 0.126 | 0.037 | 0.027 |
| | [35] | 0.532 | 0.395 | 0.415 | 0.435 |

| Database | Processing Time |
|---|---|
| Cordova 1 | 26.56 |
| Cordova 2 | 32.89 |
| Washington 1 | 37.80 |
| Washington 2 | 35.12 |
| Average | 33.09 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Hoang, T.M.; Hong, H.G.; Vokhidov, H.; Park, K.R. Road Lane Detection by Discriminating Dashed and Solid Road Lanes Using a Visible Light Camera Sensor. Sensors 2016, 16, 1313. https://doi.org/10.3390/s16081313
