Haptic, Virtual Interaction and Motor Imagery: Entertainment Tools and Psychophysiological Testing

Abstract

1. Entertainment, Virtual Environment and Affordance

1.1. Gestural Technologies

1.2. EEG, Virtual Interaction and Motor Imagery

2. Method

2.1. Participants

2.2. Measurements and Stimuli

EEG

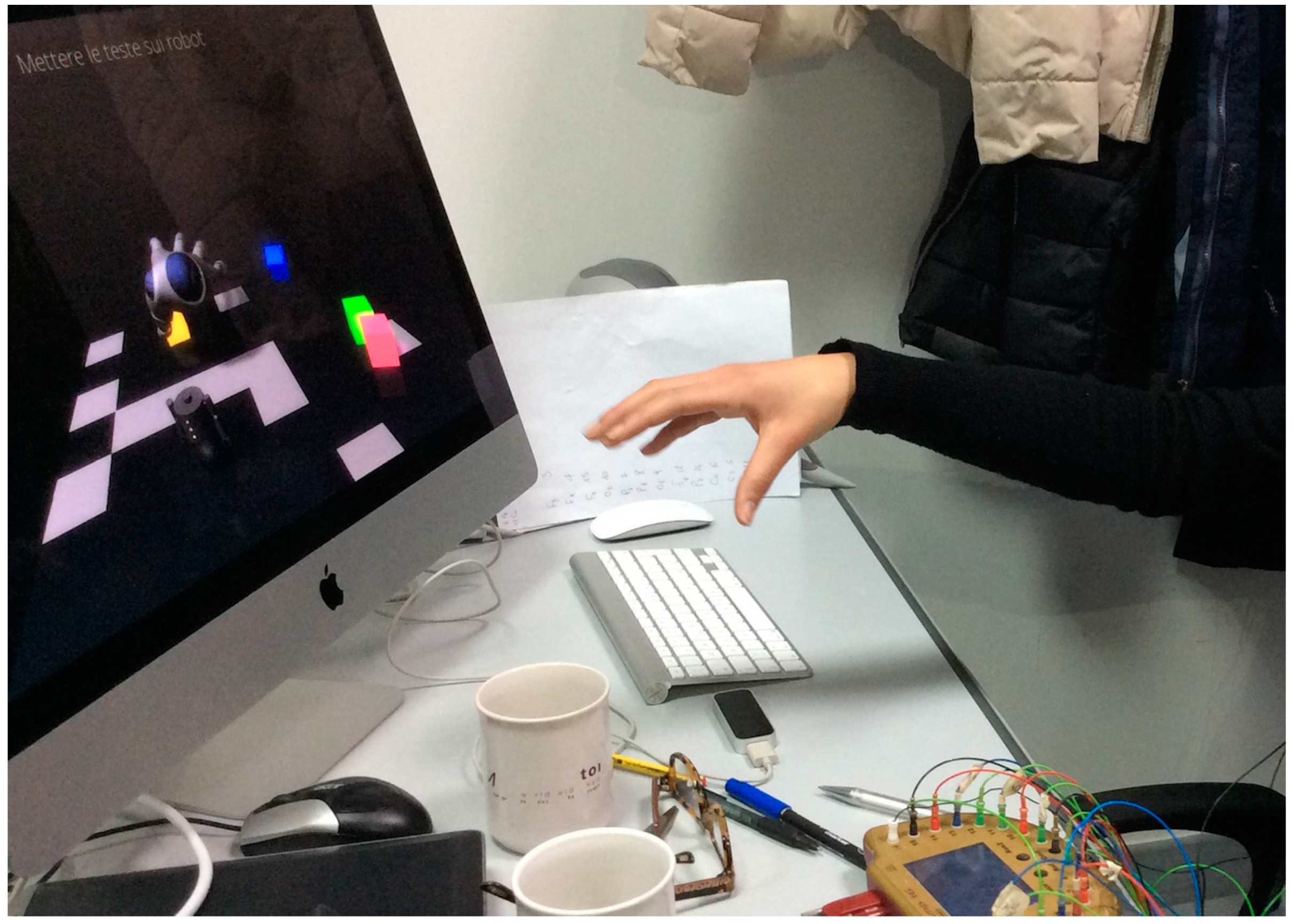

2.3. Procedure and Task

- Task Training A, in which they were asked to imagine using objects with a grasping affordance (the objects were positioned on the table, in front of the subject);

- Task Training B, in which they were asked to imagine using the objects while interacting with the Leap Motion Playground app (they received visual feedback of their hand motion on the screen);

- Task Training C, in which they physically used the graspable objects.

3. Statistical Analysis and Results

3.1. Behavioral Task Analysis

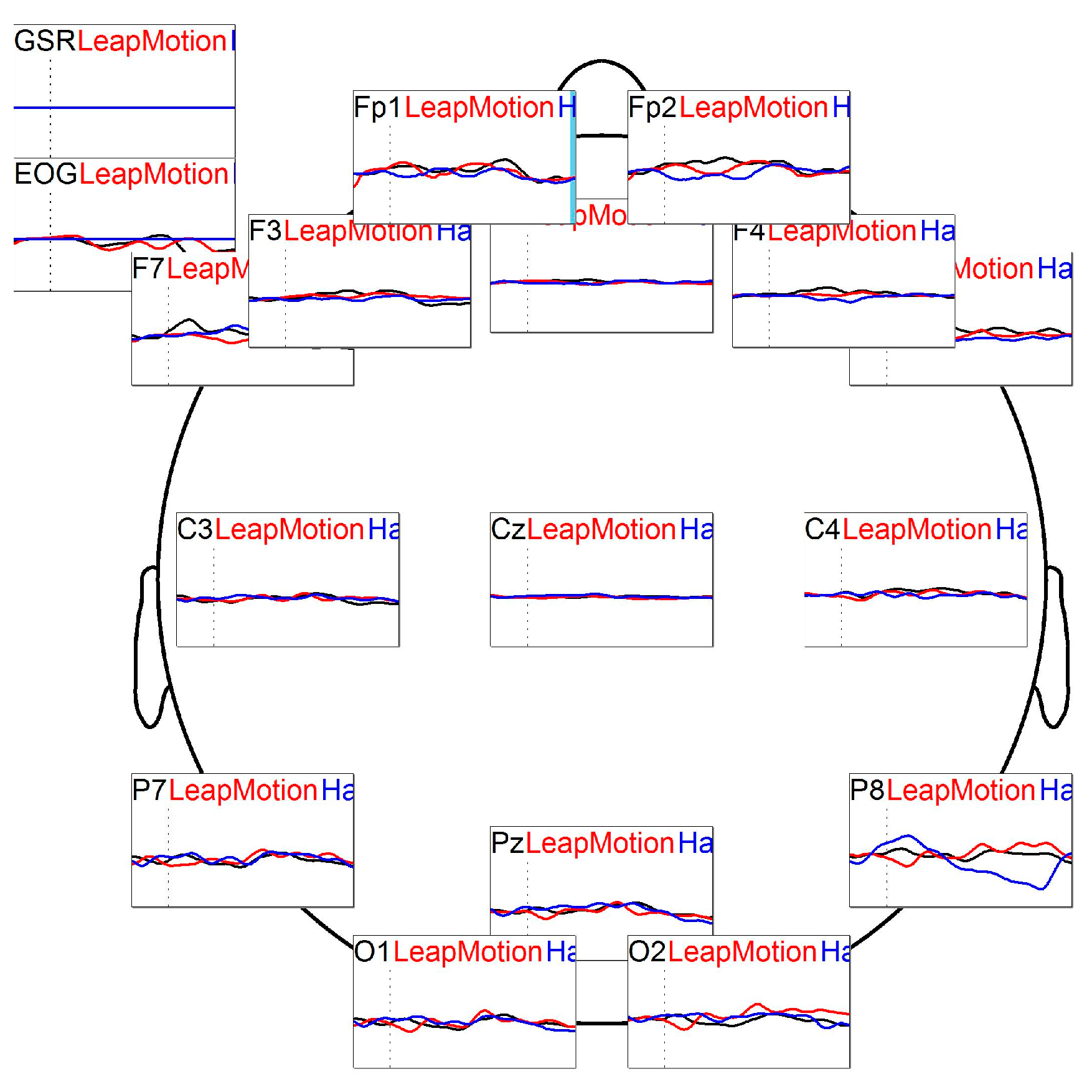

3.2. ERP Analysis

- P1 latency at F4 differed significantly across conditions (F = 4.334; p = 0.025);

- post hoc analysis (Bonferroni test) showed a significant difference between condition 1 and condition 3 (p = 0.031);

- no significant difference was found in P3.
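As a minimal sketch of the Bonferroni step mentioned in the list above: with three conditions there are three pairwise comparisons, and each raw p-value is multiplied by that number and capped at 1. The raw p-values, the function name `bonferroni_adjust`, and the 0.05 threshold are assumptions chosen for illustration; they are not data from this study, and the text does not state whether the reported p = 0.031 is already an adjusted value.

```python
from itertools import combinations


def bonferroni_adjust(p_values):
    """Multiply each raw p-value by the number of tests and cap the result at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Three experimental conditions give three pairwise comparisons.
conditions = ["condition 1", "condition 2", "condition 3"]
pairs = list(combinations(conditions, 2))

# Hypothetical raw p-values, one per pair (illustrative only, not study data).
raw_p = [0.20, 0.01, 0.30]
adjusted_p = bonferroni_adjust(raw_p)

ALPHA = 0.05  # assumed significance threshold
for (a, b), p_raw, p_adj in zip(pairs, raw_p, adjusted_p):
    verdict = "significant" if p_adj < ALPHA else "not significant"
    print(f"{a} vs {b}: raw p = {p_raw:.3f}, adjusted p = {p_adj:.3f} ({verdict})")
```

The adjustment is deliberately conservative: a pairwise difference is flagged only if it remains below the threshold after being scaled by the number of comparisons.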

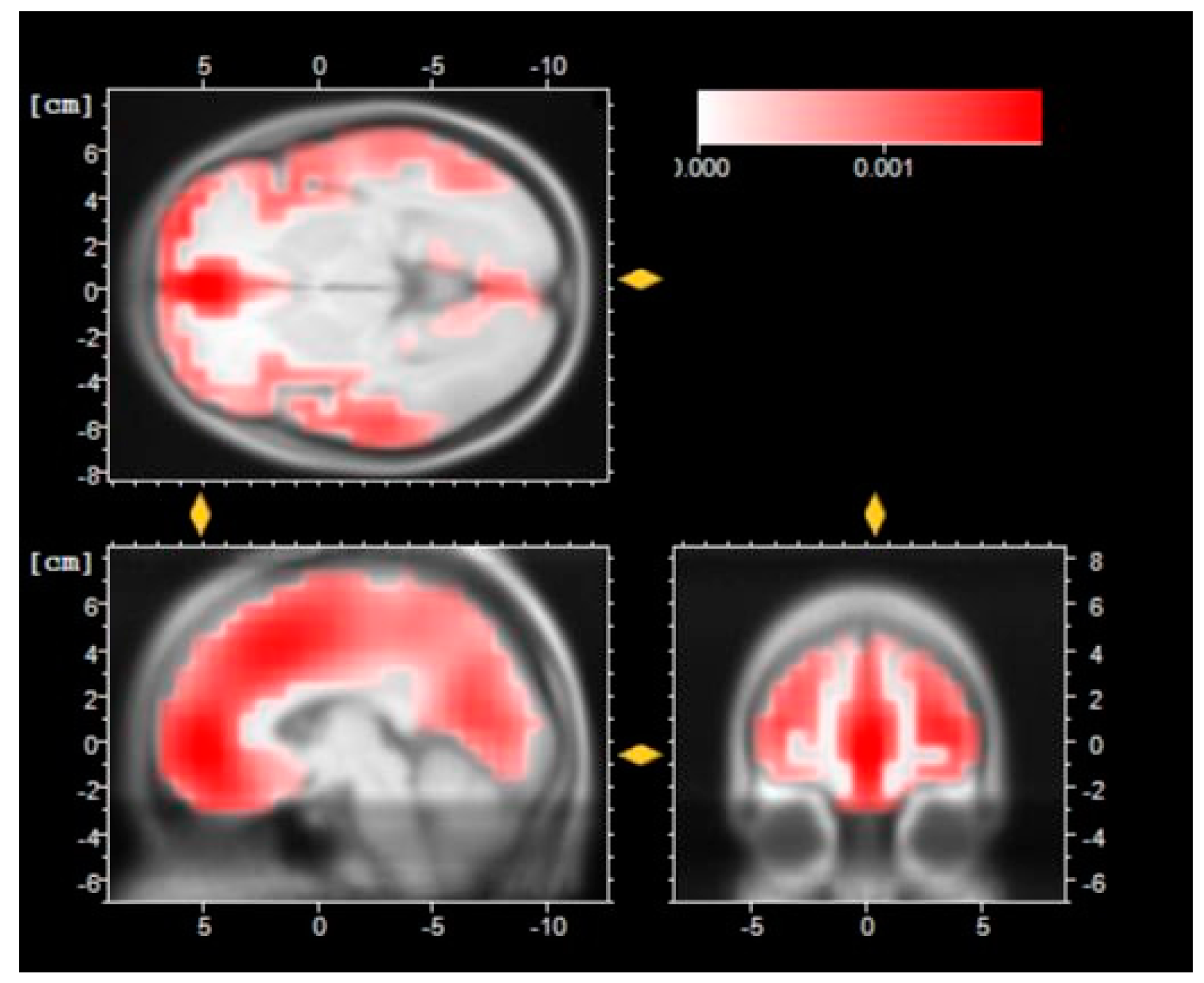

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Bryan, J.; Vorderer, P.E. Psychology of Entertainment; Routledge, Taylor & Francis group: New York, NY, USA, 2006; p. 457. [Google Scholar]

- Ricciardi, F.; De Paolis, L.T. A Comprehensive Review of Serious Games in Health Professions. Int. J. Comput. Games Technol. 2014, 2014. [Google Scholar] [CrossRef]

- Invitto, S.; Faggiano, C.; Sammarco, S.; De Luca, V.; De Paolis, L.T. Interactive entertainment, virtual motion training and brain ergonomy. In Proceedings of the 7th International Conference on Intelligent Technologies for Interactive Entertainment (INTETAIN), Turin, Italy, 10–12 June 2015; pp. 88–94.

- Mandryk, R.L.; Inkpen, K.M.; Calvert, T.W. Using psychophysiological techniques to measure user experience with entertainment technologies. Behav. Inf. Technol. 2006, 25, 141–158. [Google Scholar] [CrossRef]

- Bau, O.; Poupyrev, I. REVEL: Tactile Feedback Technology for Augmented Reality. ACM Trans. Graph. 2012, 31, 1–11. [Google Scholar] [CrossRef]

- Gibson, J.J. The Theory of Affordances. In Perceiving, Acting, and Knowing. Towards an Ecological Psychology; John Wiley & Sons Inc.: Hoboken, NJ, USA, 1977; pp. 127–143. [Google Scholar]

- Thill, S.; Caligiore, D.; Borghi, A.M.; Ziemke, T.; Baldassarre, G. Theories and computational models of affordance and mirror systems: An integrative review. Neurosci. Biobehav. Rev. 2013, 37, 491–521. [Google Scholar] [CrossRef] [PubMed]

- Jones, K.S. What Is an Affordance? Ecol. Psychol. 2003, 15, 107–114. [Google Scholar] [CrossRef]

- Chemero, A. An Outline of a Theory of Affordances. Ecol. Psychol. 2003, 15, 181–195. [Google Scholar] [CrossRef]

- Handy, T.C.; Grafton, S.T.; Shroff, N.M.; Ketay, S.; Gazzaniga, M.S. Graspable objects grab attention when the potential for action is recognized. Nat. Neurosci. 2003, 6, 421–427. [Google Scholar] [CrossRef] [PubMed]

- Gibson, J.J. The Ecological Approach to Visual Perception; Houghton Mifflin: Boston, MA, USA, 1979. [Google Scholar]

- Apel, J.; Cangelosi, A.; Ellis, R.; Goslin, J.; Fischer, M. Object affordance influences instruction span. Exp. Brain Res. 2012, 223, 199–206. [Google Scholar] [CrossRef] [PubMed]

- Caligiore, D.; Borghi, A.M.; Parisi, D.; Ellis, R.; Cangelosi, A.; Baldassarre, G. How affordances associated with a distractor object affect compatibility effects: A study with the computational model TRoPICALS. Psychol. Res. 2013, 77, 7–19. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Gross, D.C.; Stanney, K.M.; Cohn, L.J. Evoking affordances in virtual environments via sensory-stimuli substitution. Presence Teleoper. Virtual Environ. 2005, 14, 482–491. [Google Scholar] [CrossRef]

- Lepecq, J.-C.; Bringoux, L.; Pergandi, J.-M.; Coyle, T.; Mestre, D. Afforded Actions As a Behavioral Assessment of Physical Presence in Virtual Environments. Virtual Real. 2009, 13, 141–151. [Google Scholar] [CrossRef]

- Warren, W.H., Jr.; Whang, S. Visual Guidance of Walking Through Apertures: Body-Scaled Information for Affordances. J. Exp. Psychol. Hum. Percept. Perform. 1987, 13, 371–383. [Google Scholar] [CrossRef] [PubMed]

- Luck, S.J. An Introduction to the Event-Related Potential Technique, 2nd ed.; MIT Press: Cambridge, MA, USA, 2014; pp. 1–50. [Google Scholar]

- Cutmore, T.R.H.; Hine, T.J.; Maberly, K.J.; Langford, N.M.; Hawgood, G. Cognitive and gender factors influencing navigation in a virtual environment. Int. J. Hum. Comput. Stud. 2000, 53, 223–249. [Google Scholar] [CrossRef]

- Liu, B.; Wang, Z.; Song, G.; Wu, G. Cognitive processing of traffic signs in immersive virtual reality environment: An ERP study. Neurosci. Lett. 2010, 485, 43–48. [Google Scholar] [CrossRef] [PubMed]

- Neumann, U.; Majoros, A. Cognitive, performance, and systems issues for augmented reality applications in manufacturing and maintenance. In Proceedings of the IEEE 1998 Virtual Reality Annual International Symposium, Atlanta, GA, USA, 14–18 March 1998; pp. 4–11.

- The Leap Motion Controller. Available online: https://www.leapmotion.com (accessed on 26 December 2015).

- Garber, L. Gestural Technology: Moving Interfaces in a New Direction [Technology News]. Computer 2013, 46, 22–25. [Google Scholar] [CrossRef]

- Giuroiu, M.-C.; Marita, T. Gesture recognition toolkit using a Kinect sensor. In Proceedings of the 2015 IEEE International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj-Napoca, Romania, 3–5 September 2015; pp. 317–324.

- Microsoft Developer Network. Face Tracking. Available online: https://msdn.microsoft.com/en-us/library/dn782034.aspx (accessed on 26 December 2015).

- Takahashi, T.; Kishino, F. Hand Gesture Coding Based on Experiments Using a Hand Gesture Interface Device. SIGCHI Bull. 1991, 23, 67–74. [Google Scholar] [CrossRef]

- Rehg, J.M.; Kanade, T. Visual Tracking of High DOF Articulated Structures: An Application to Human Hand Tracking. In Computer Vision—ECCV ’94, Proceedings of Third European Conference on Computer Vision Stockholm, Sweden, 2–6 May 1994; Springer-Verlag: London, UK, 1994; pp. 35–46. [Google Scholar]

- Ionescu, B.; Coquin, D.; Lambert, P.; Buzuloiu, V. Dynamic Hand Gesture Recognition Using the Skeleton of the Hand. EURASIP J. Appl. Signal Process. 2005, 2005, 2101–2109. [Google Scholar] [CrossRef]

- Joslin, C.; El-Sawah, A.; Chen, Q.; Georganas, N. Dynamic Gesture Recognition. In Proceedings of the IEEE Instrumentation and Measurement Technology Conference, Ottawa, ON, Canada, 16–19 May 2005; Volume 3, pp. 1706–1711.

- Xu, D.; Yao, W.; Zhang, Y. Hand Gesture Interaction for Virtual Training of SPG. In Proceedings of the 16th International Conference on Artificial Reality and Telexistence–Workshops, Hangzhou, China, 29 November–1 December 2006; pp. 672–676.

- Wang, R.Y.; Popovic, J. Real-time Hand-tracking with a Color Glove. ACM Trans. Graphics 2009, 28. [Google Scholar] [CrossRef]

- Bellarbi, A.; Benbelkacem, S.; Zenati-Henda, N.; Belhocine, M. Hand Gesture Interaction Using Color-Based Method For Tabletop Interfaces. In Proceedings of the 7th International Symposium on Intelligent Signal Processing (WISP), Floriana, Malta, 19–21 September 2011; pp. 1–6.

- Fiala, M. ARTag, a fiducial marker system using digital techniques. In IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2005, San Diego, CA, USA, 20–25 June 2005; Volume 2, pp. 590–596.

- Seichter, H. Augmented Reality and Tangible User Interfaces in Collaborative Urban Design. In CAAD Futures; Springer: Sydney, Australia, 2007; pp. 3–16. [Google Scholar]

- Irawati, S.; Green, S.; Billinghurst, M.; Duenser, A.; Ko, H. Move the Couch Where: Developing an Augmented Reality Multimodal Interface. In Proceedings of the 5th IEEE and ACM International Symposium on Mixed and Augmented Reality, ISMAR ’06, Santa Barbara, CA, USA, 22–25 October 2006; pp. 183–186.

- Berlia, R.; Kandoi, S.; Dubey, S.; Pingali, T.R. Gesture based universal controller using EMG signals. In Proceedings of the 2014 International Conference on Circuits, Communication, Control and Computing (I4C), Bangalore, India, 21–22 November 2014; pp. 165–168.

- Bellarbi, A.; Belghit, H.; Benbelkacem, S.; Zenati, N.; Belhocine, M. Hand gesture recognition using contour based method for tabletop surfaces. In Proceedings of the 10th IEEE International Conference on Networking, Sensing and Control (ICNSC), Evry, France, 10–12 April 2013; pp. 832–836.

- Sandor, C.; Klinker, G. A Rapid Prototyping Software Infrastructure for User Interfaces in Ubiquitous Augmented Reality. Pers. Ubiq. Comput. 2005, 9, 169–185. [Google Scholar] [CrossRef]

- Bhame, V.; Sreemathy, R.; Dhumal, H. Vision based hand gesture recognition using eccentric approach for human computer interaction. In Proceedings of the 2014 International Conference on Advances in Computing, Communications and Informatics, New Delhi, India, 24–27 September 2014; pp. 949–953.

- Microchip Technology’s GestIC. Available online: http://www.microchip.com/pagehandler/en_us/technology/gestic (accessed on 26 December 2015).

- Pu, Q.; Gupta, S.; Gollakota, S.; Patel, S. Whole-home Gesture Recognition Using Wireless Signals. In Proceedings of the 19th Annual International Conference on Mobile Computing & Networking, Miami, FL, USA, 30 September 2013; pp. 27–38.

- Pu, Q.; Gupta, S.; Gollakota, S.; Patel, S. Gesture Recognition Using Wireless Signals. GetMob. Mob. Comput. Commun. 2015, 18, 15–18. [Google Scholar] [CrossRef]

- WiSee Homepage. Available online: http://wisee.cs.washington.edu/ (accessed on 26 December 2015).

- Sony PlayStation. Available online: http://www.playstation.com (accessed on 26 December 2015).

- Nintendo Wii System. Available online: http://wii.com (accessed on 26 December 2015).

- Li, K.F.; Sevcenco, A.-M.; Cheng, L. Impact of Sensor Sensitivity in Assistive Environment. In Proceedings of the 2014 Ninth International Conference on Broadband and Wireless Computing, Communication and Applications (BWCCA), Guangdong, China, 8–10 November 2014; pp. 161–168.

- Microsoft Kinect. Available online: http://support.xbox.com/en-US/browse/xbox-one/kinect (accessed on 26 December 2015).

- Sarbolandi, H.; Lefloch, D.; Kolb, A. Kinect range sensing: Structured-light versus Time-of-Flight Kinect. Comput. Vis. Image Underst. 2015, 139, 1–20. [Google Scholar] [CrossRef]

- Marin, G.; Dominio, F.; Zanuttigh, P. Hand gesture recognition with Leap Motion and Kinect devices. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 1565–1569.

- Cook, H.; Nguyen, Q.V.; Simoff, S.; Trescak, T.; Preston, D. A Close-Range Gesture Interaction with Kinect. In Proceedings of the 2015 Big Data Visual Analytics (BDVA), Hobart, Australia, 22–25 September 2015; pp. 1–8.

- Coles, M.G.H.; Rugg, M.D. Event-related brain potentials: An introduction. Electrophysiol. Mind Event-relat. Brain Potentials Cognit. 1996, 1, 1–26. [Google Scholar]

- Invitto, S.; Scalinci, G.; Mignozzi, A.; Faggiano, C. Advanced Distributed Learning and ERP: Interaction in Augmented Reality, haptic manipulation with 3D models and learning styles. In Proceedings of the XXIII National Congress of the Italian Society of Psychophysiology, Lucca, Italy, 19–21 November 2015.

- Delorme, A.; Miyakoshi, M.; Jung, T.P.; Makeig, S. Grand average ERP-image plotting and statistics: A method for comparing variability in event-related single-trial EEG activities across subjects and conditions. J. Neurosci. Methods 2015, 250, 3–6. [Google Scholar] [CrossRef] [PubMed]

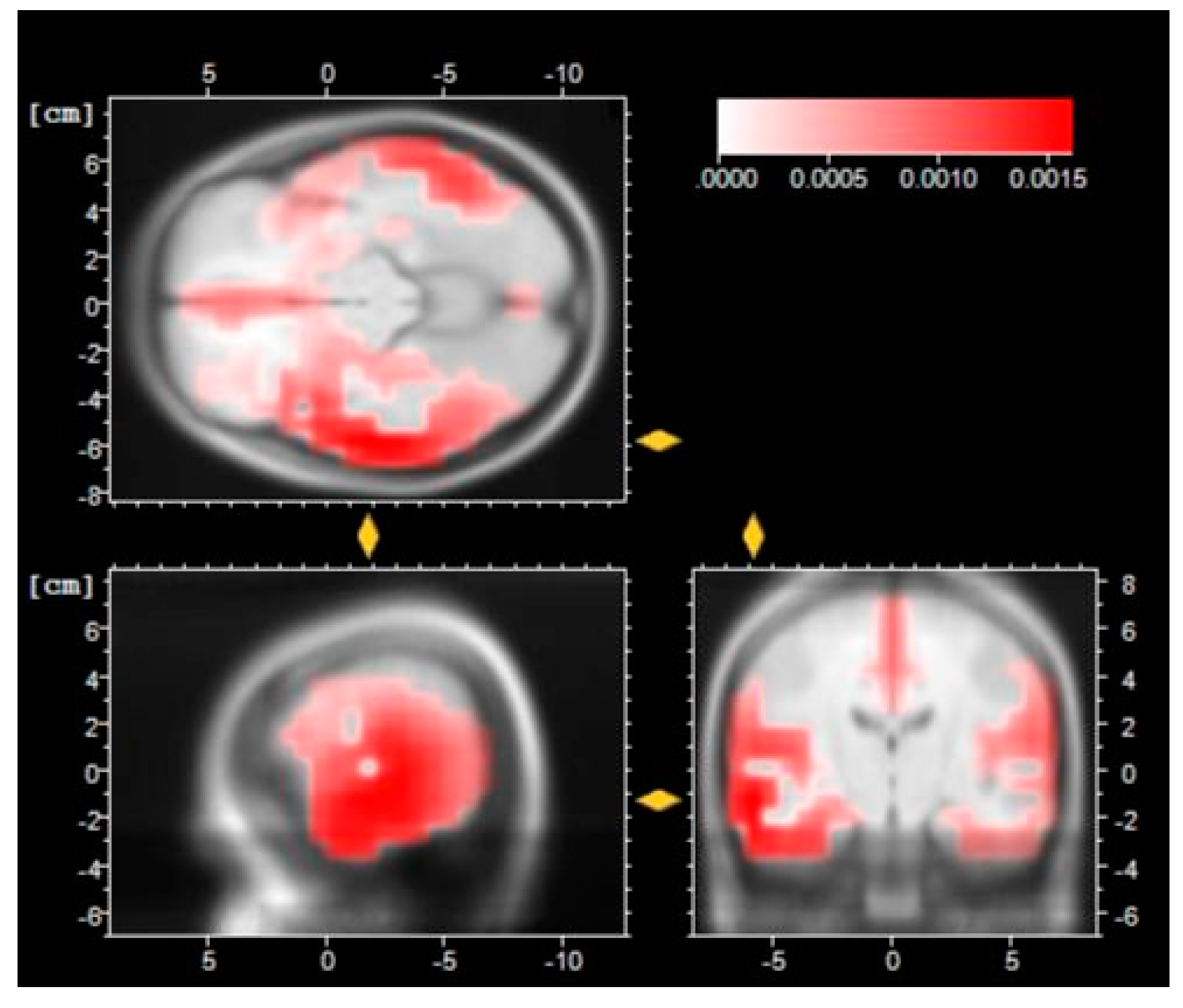

- Pascual-Marqui, R.D.; Esslen, M.; Kochi, K.; Lehmann, D. Functional imaging with low-resolution brain electromagnetic tomography (LORETA): A review. Methods Find. Exp. Clin. Pharm. 2002, 24 (Suppl. C), 91–95. [Google Scholar]

- Burgess, P.W.; Dumontheil, I.; Gilbert, S.J. The gateway hypothesis of rostral prefrontal cortex (area 10) function. Trends Cognit. Sci. 2007, 11, 290–298. [Google Scholar] [CrossRef] [PubMed]

- Kassuba, T.; Menz, M.M.; Röder, B.; Siebner, H.R. Multisensory interactions between auditory and haptic object recognition. Cereb. Cortex 2013, 23, 1097–1107. [Google Scholar] [CrossRef] [PubMed]

- Amedi, A.; Malach, R.; Hendler, T.; Peled, S.; Zohary, E. Visuo-haptic object-related activation in the ventral visual pathway. Nat. Neurosci. 2001, 4, 324–330. [Google Scholar] [CrossRef] [PubMed]

- Ellis, R.; Tucker, M. Micro-affordance: The potentiation of components of action by seen objects. Br. J. Psychol. 2000, 91, 451–471. [Google Scholar] [CrossRef] [PubMed]

- Foxe, J.J.; Simpson, G.V. Flow of activation from V1 to frontal cortex in humans. Exp. Brain Res. 2002, 142, 139–150. [Google Scholar] [CrossRef] [PubMed]

- Coull, J.T. Neural correlates of attention and arousal: Insights from electrophysiology, functional neuroimaging and psychopharmacology. Prog. Neurobiol. 1998, 55, 343–361. [Google Scholar] [CrossRef]

- Kudo, N.; Nakagome, K.; Kasai, K.; Araki, T.; Fukuda, M.; Kato, N.; Iwanami, A. Effects of corollary discharge on event-related potentials during selective attention task in healthy men and women. Neurosci. Res. 2004, 48, 59–64. [Google Scholar] [CrossRef] [PubMed]

- Gratton, G.; Coles, M.G.; Sirevaag, E.J.; Eriksen, C.W.; Donchin, E. Pre- and poststimulus activation of response channels: A psychophysiological analysis. J. Exp. Psychol. Hum. Percept. Perform. 1988, 14, 331–344. [Google Scholar] [CrossRef] [PubMed]

- Vainio, L.; Ala-Salomäki, H.; Huovilainen, T.; Nikkinen, H.; Salo, M.; Väliaho, J.; Paavilainen, P. Mug handle affordance and automatic response inhibition: Behavioural and electrophysiological evidence. Q. J. Exp. Psychol. 2014, 67, 1697–1719. [Google Scholar] [CrossRef] [PubMed]

| ERP N1 | Motor Imagery Latency | Leap Motion Latency | Haptic Latency | F | p |
| --- | --- | --- | --- | --- | --- |
| Fz | 113.30 * | 58 * | 107.75 * | 5.206 | 0.013 |
| O1 | 81.40 * | 128 * | 80.25 * | 5.373 | 0.012 |
| O2 | 89.40 | 137.56 * | 77.75 * | 5.570 | 0.010 |

| ERP P1 | Motor Imagery Latency | Leap Motion Latency | Haptic Latency | F | p |
| --- | --- | --- | --- | --- | --- |
| F4 | 100.20 * | 122.50 | 148.41 * | 4.334 | 0.025 |
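The latency values in the tables above can be compared condition by condition with simple arithmetic. The sketch below copies the reported values and computes every pairwise difference per electrode; the names `LATENCIES`, `CONDITIONS` and `pairwise_differences` are hypothetical, and the values are used exactly as printed, with no unit assumed beyond what the tables give.

```python
from itertools import combinations

# Condition order used for every tuple of values below.
CONDITIONS = ("MotorImagery", "LeapMotion", "Haptic")

# Latencies copied from the tables above, keyed by (component, electrode).
LATENCIES = {
    ("N1", "Fz"): (113.30, 58.0, 107.75),
    ("N1", "O1"): (81.40, 128.0, 80.25),
    ("N1", "O2"): (89.40, 137.56, 77.75),
    ("P1", "F4"): (100.20, 122.50, 148.41),
}


def pairwise_differences(values):
    """Return {(cond_a, cond_b): latency_b - latency_a} for every pair of conditions."""
    labelled = list(zip(CONDITIONS, values))
    return {
        (a, b): round(vb - va, 2)
        for (a, va), (b, vb) in combinations(labelled, 2)
    }


for (component, electrode), values in LATENCIES.items():
    for (a, b), diff in pairwise_differences(values).items():
        print(f"{component} {electrode}: {b} - {a} = {diff:+.2f}")
```

These differences are descriptive only; which of them are statistically reliable is determined by the F tests and post hoc comparisons reported in the tables and in Section 3.2.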

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Invitto, S.; Faggiano, C.; Sammarco, S.; De Luca, V.; De Paolis, L.T. Haptic, Virtual Interaction and Motor Imagery: Entertainment Tools and Psychophysiological Testing. Sensors 2016, 16, 394. https://doi.org/10.3390/s16030394