A TSR Visual Servoing System Based on a Novel Dynamic Template Matching Method

Abstract

1. Introduction

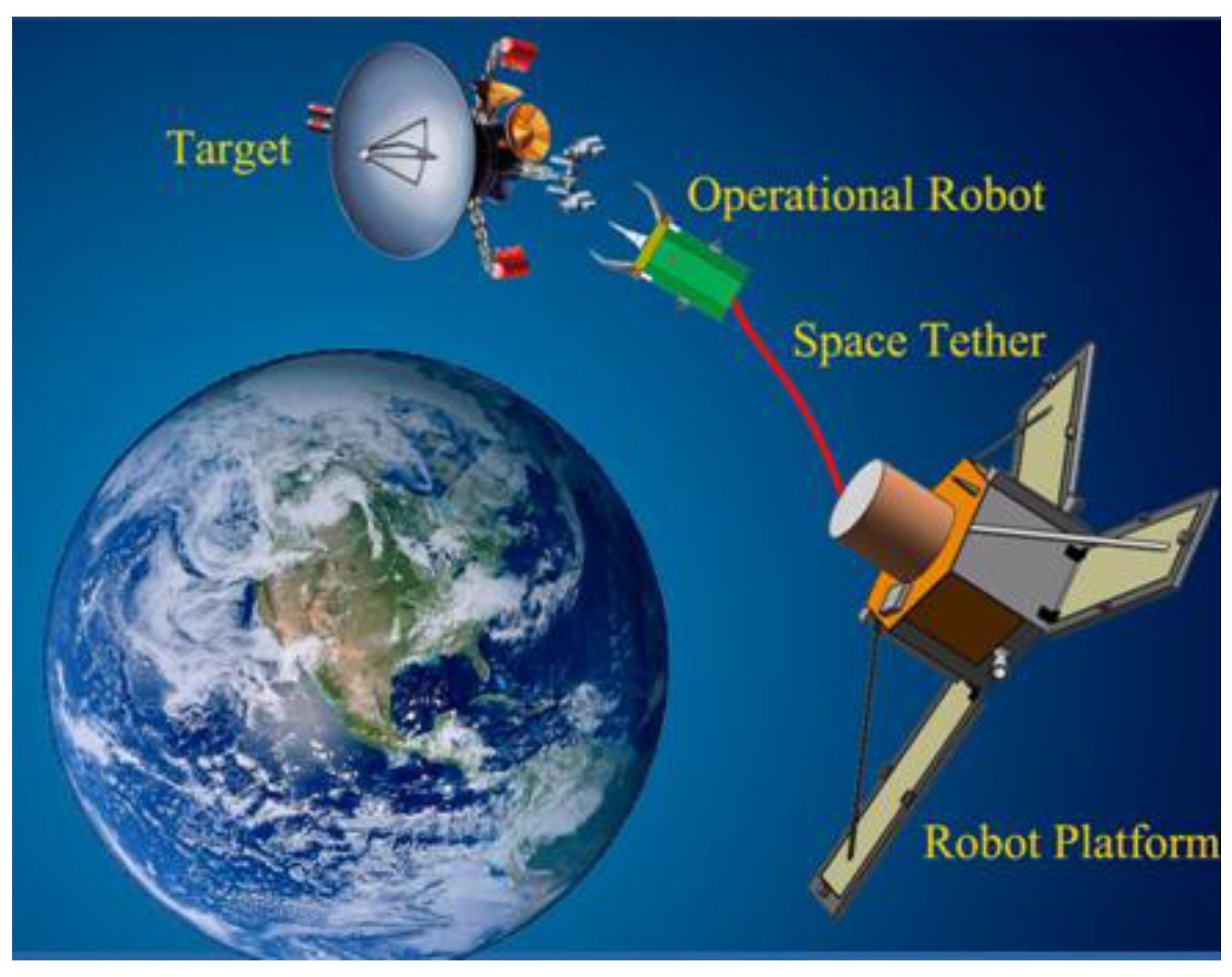

1.1. Motivation

1.2. Challenge Descriptions

1.3. Related Works

2. Methodology

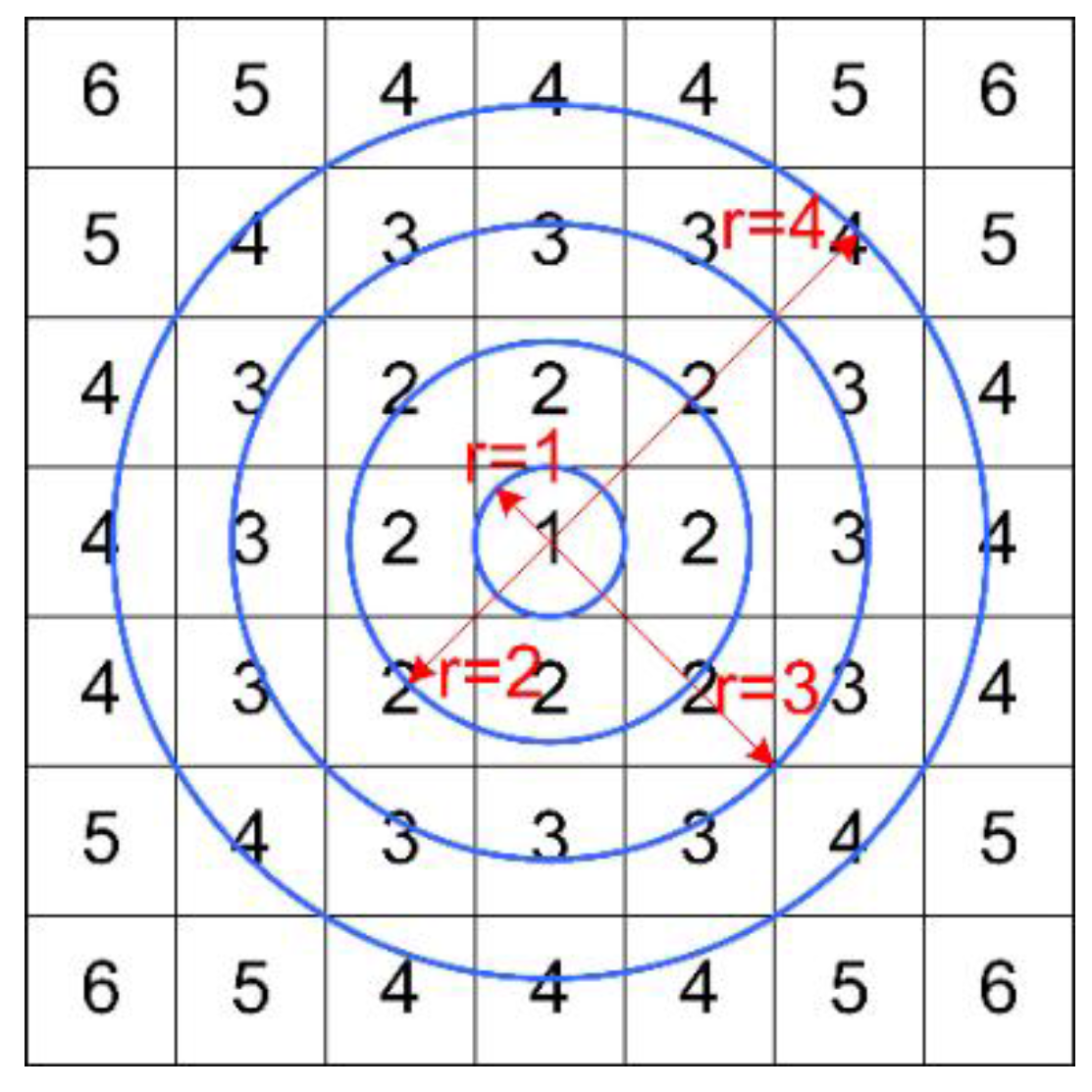

2.1. Improved Template Matching

2.2. Coarse to Fine Matching Strategy

2.3. Least Square Integrated Predictor

2.4. Dynamic Template Updating Strategy

2.4.1. Updating Strategy

2.4.2. Anti-Occlusion Strategy

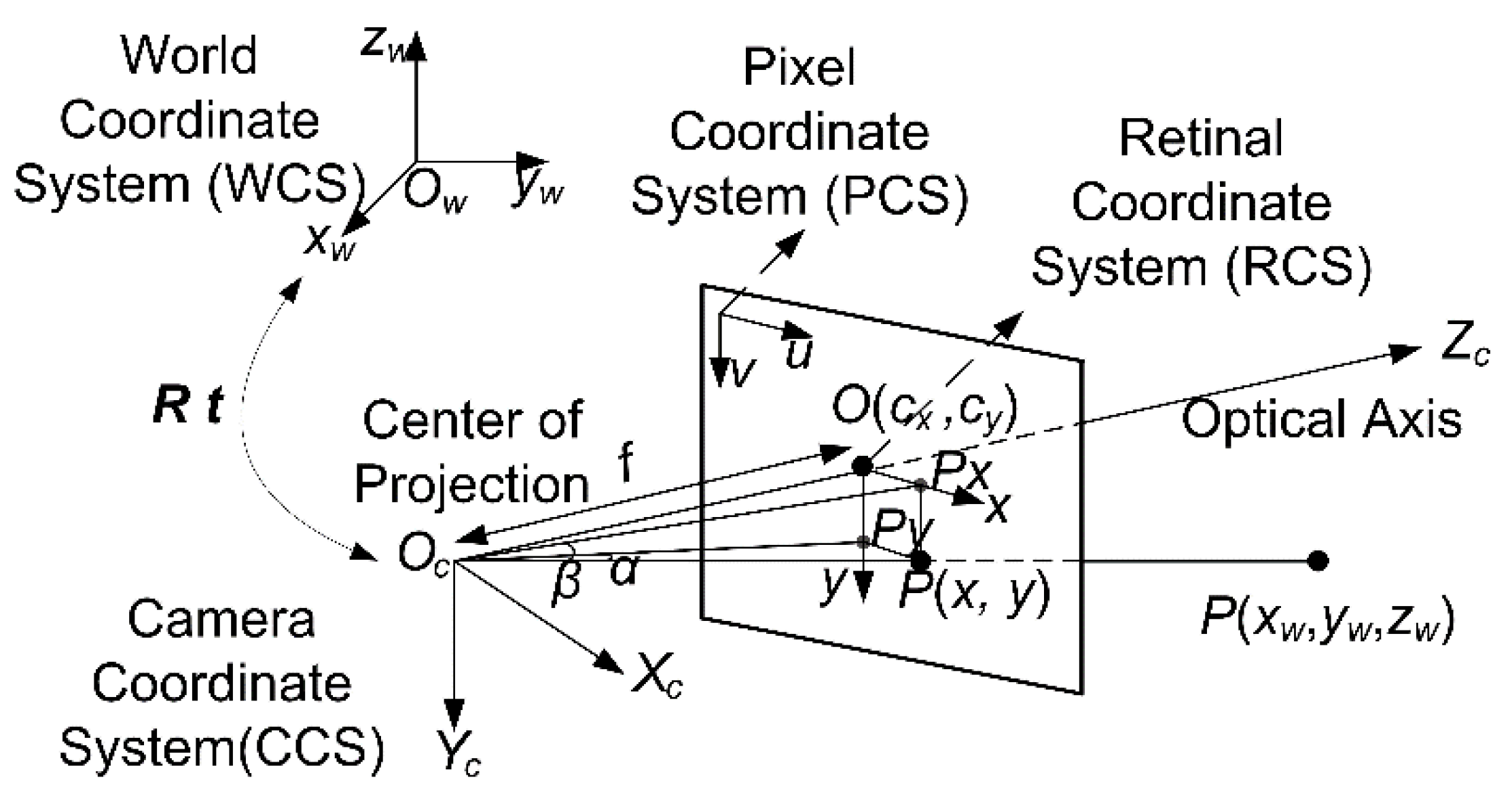

2.5. Theory of Calculating Azimuth Angles

3. Visual Servoing Controller

4. Experimental Validation

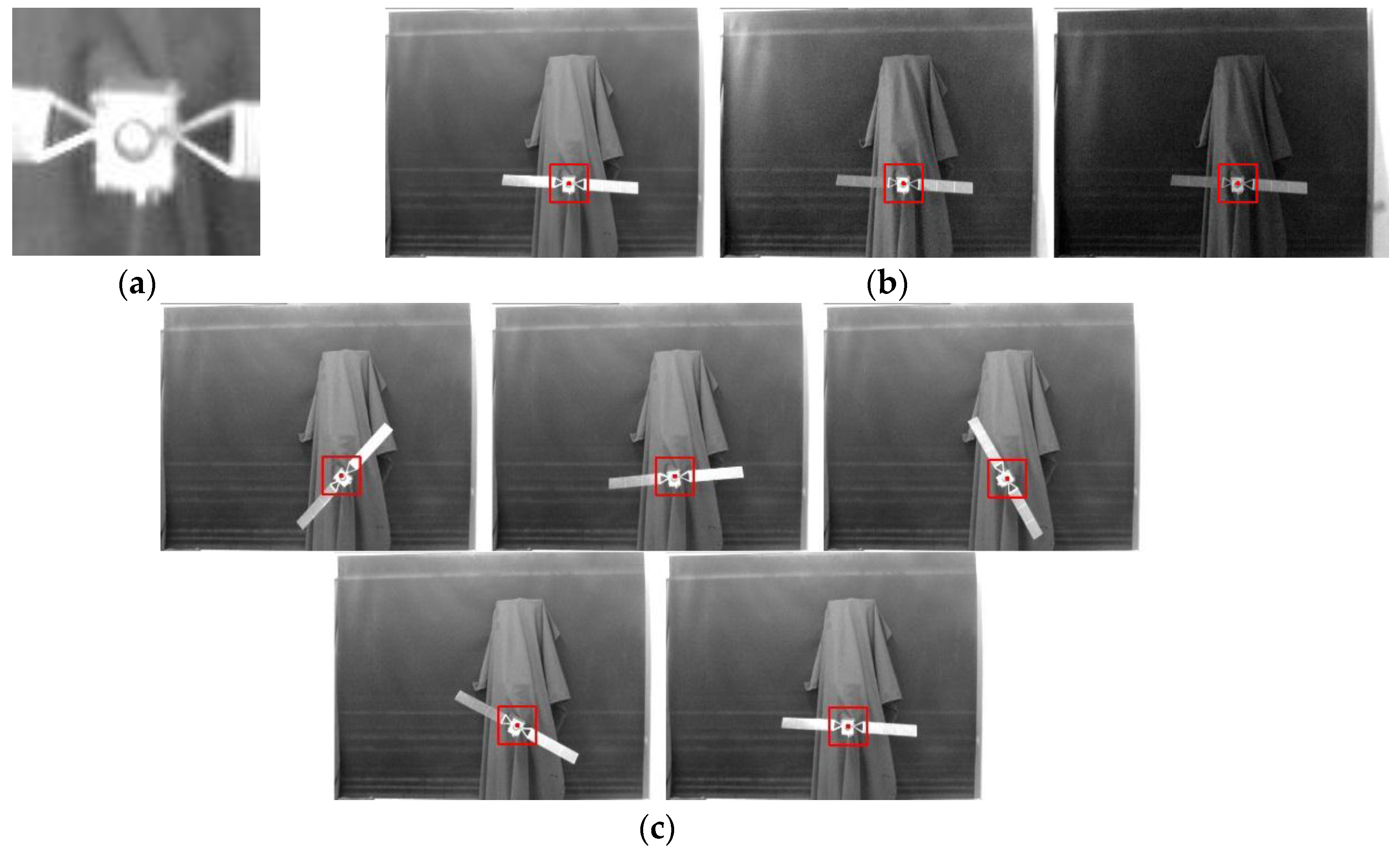

4.1. Experimental Set-up

4.2. Design of Experiments

| Axis | Route (mm) | Velocity (m/s) | Acceleration (m/s²) |
|---|---|---|---|
| X axis | 0–1000 | ±0.01–1 | ±0.01–1 |
| Y axis | 0–1500 | ±0.01–1 | ±0.01–1 |
| Z axis | 0–1200 | ±0.01–1 | ±0.01–1 |

| Axis | Amplitude (°) | Angular Velocity (°/s) | Angular Acceleration (°/s²) |
|---|---|---|---|
| Pitch axis | ±45 | ±0.01–10 | ±0.01–10 |
| Yaw axis | ±90 | ±0.01–10 | ±0.01–10 |
| Roll axis | ±90 | ±0.01–10 | ±0.01–10 |

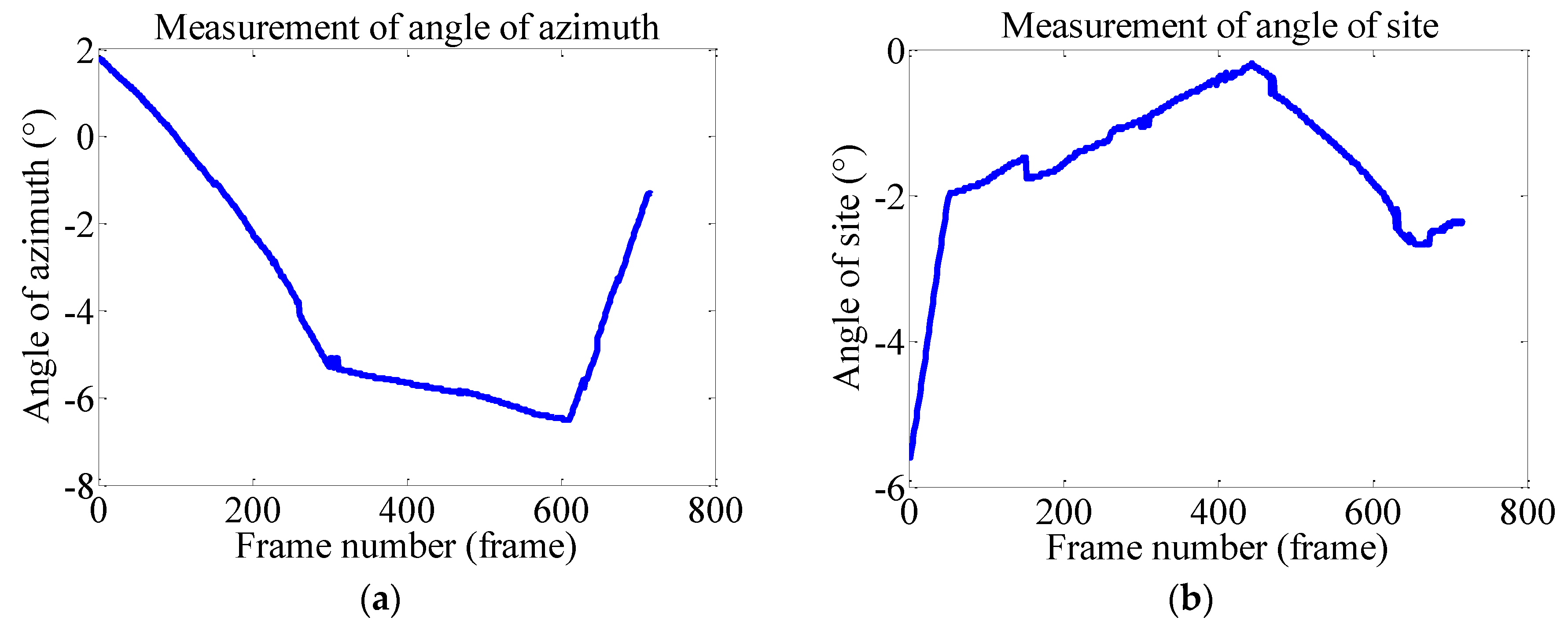

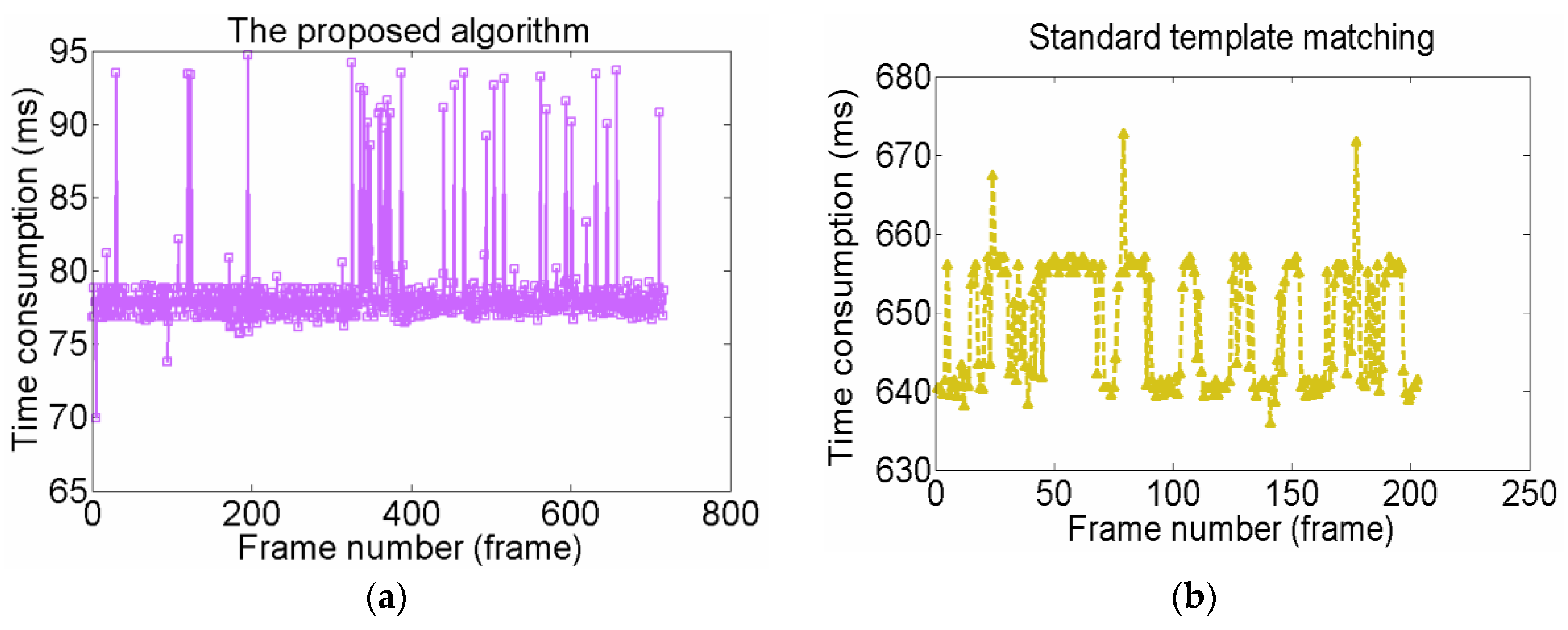

4.3. Results and Discussion

4.3.1. Qualitative Analysis

4.3.2. Quantitative Comparisons

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Cai, J.; Huang, P.; Zhang, B.; Wang, D. A TSR Visual Servoing System Based on a Novel Dynamic Template Matching Method. Sensors 2015, 15, 32152-32167. https://doi.org/10.3390/s151229884