Estimation of Human Arm Joints Using Two Wireless Sensors in Robotic Rehabilitation Tasks

Abstract

1. Introduction

2. Algorithm Description

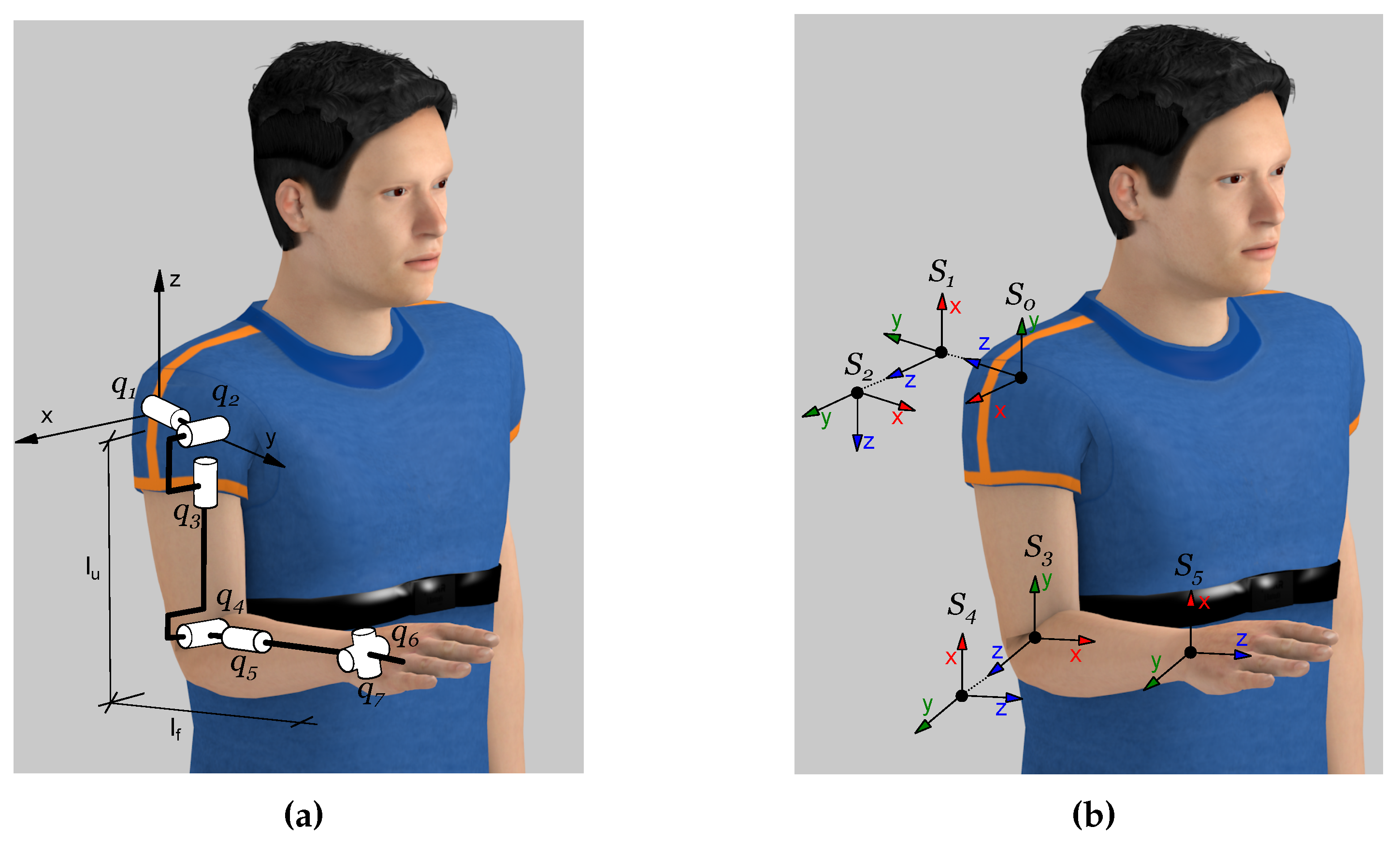

2.1. Human Arm Kinematic Chain

| i | | | | |
|---|---|---|---|---|
| 1 | 0 | 0 | | |
| 2 | 0 | 0 | | |
| 3 | 0 | | | |
| 4 | 0 | 0 | | |
| 5 | 0 | 0 | | |

2.2. Integration Method

2.3. Accelerometer Orientation

2.4. MARG Orientation

2.5. Elbow and Shoulder Location

2.6. Initial Conditions

3. Results and Discussion

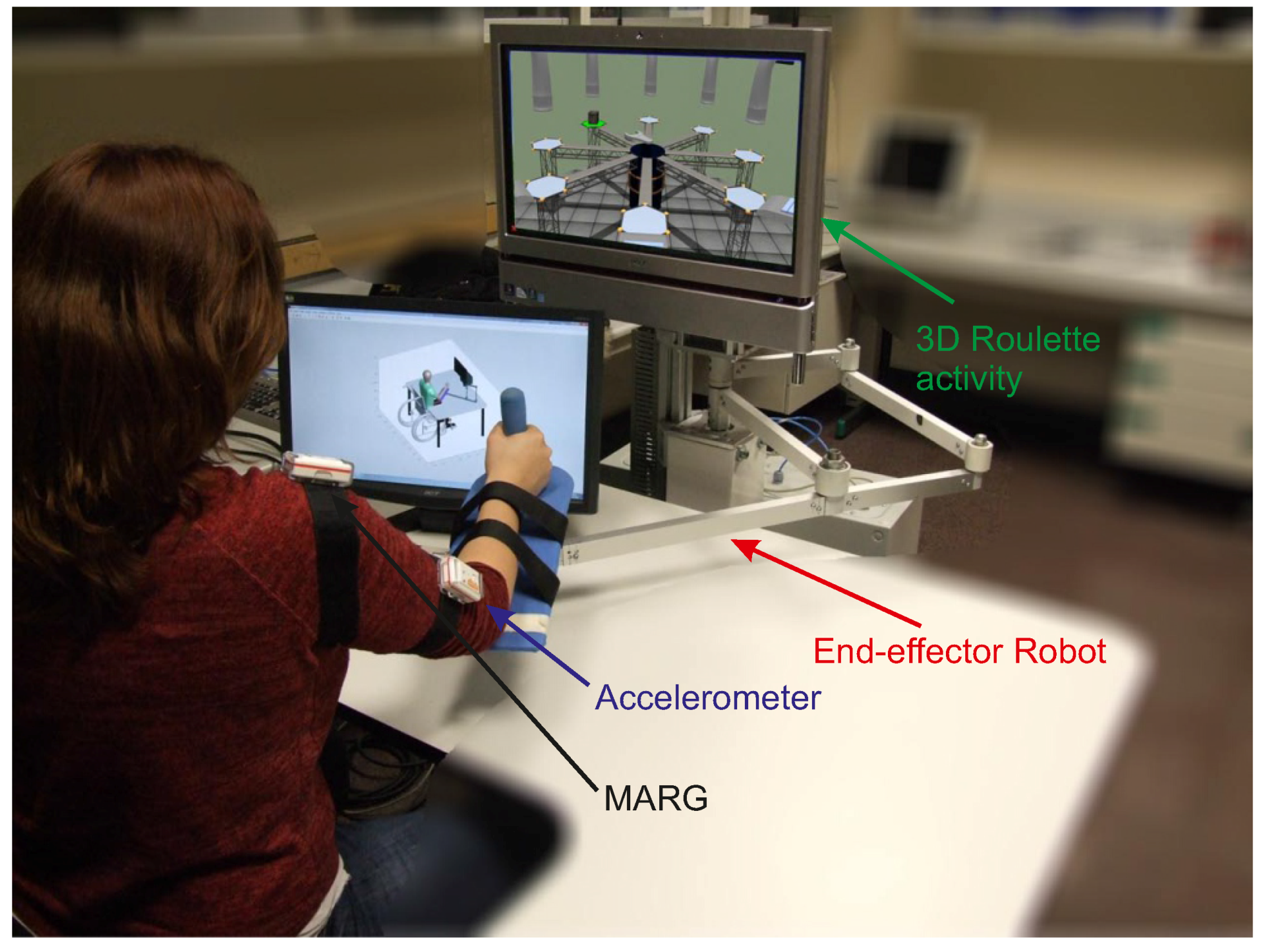

3.1. Experimental Exercises

| ID | Age | Gender | Forearm Length (m) | Upper Arm Length (m) |
|---|---|---|---|---|
| 1 | 21 | Male | 0.23 | 0.32 |
| 2 | 51 | Female | 0.21 | 0.33 |
| 3 | 32 | Male | 0.25 | 0.31 |
| 4 | 31 | Male | 0.21 | 0.33 |

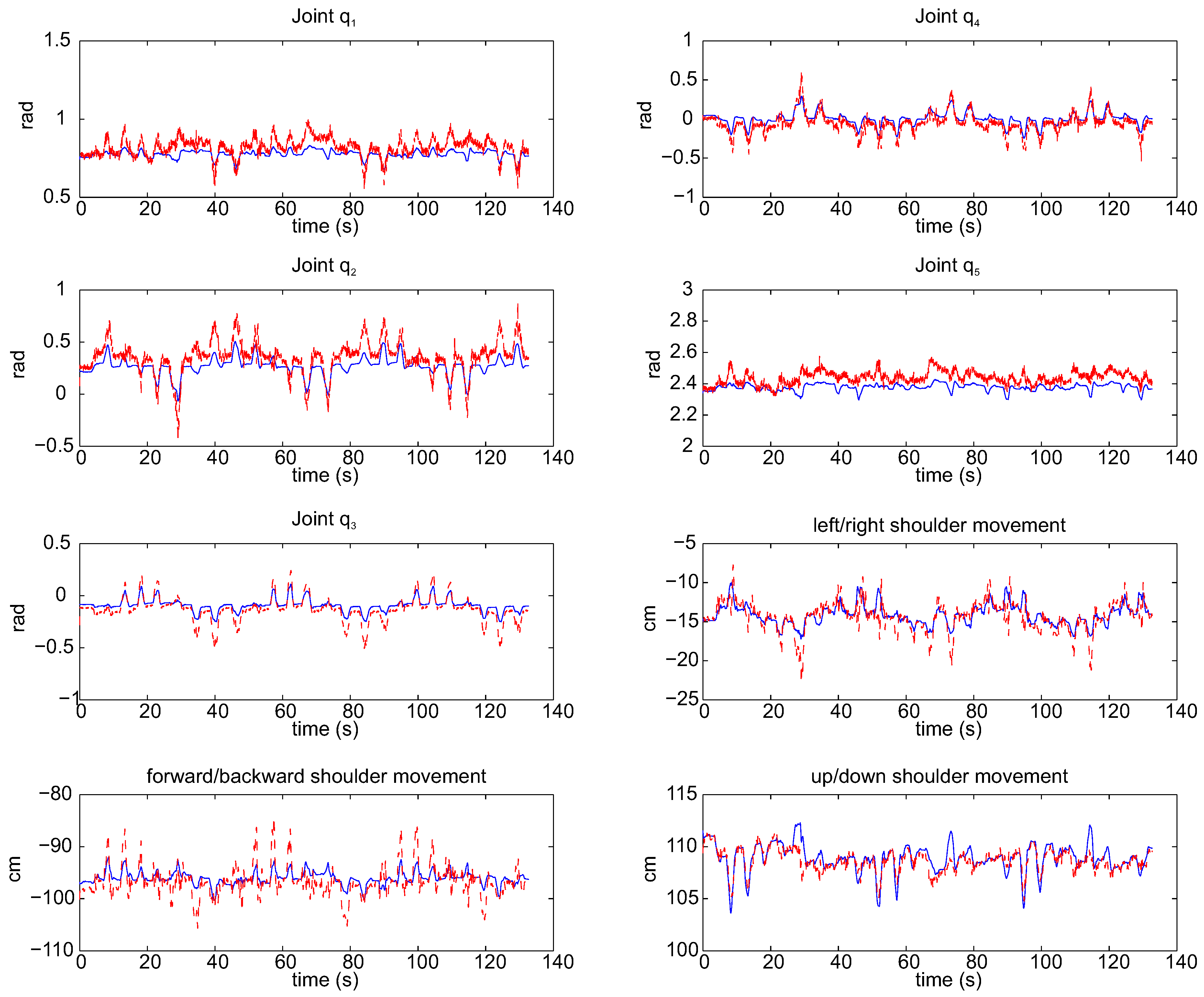

3.2. Algorithm Validation

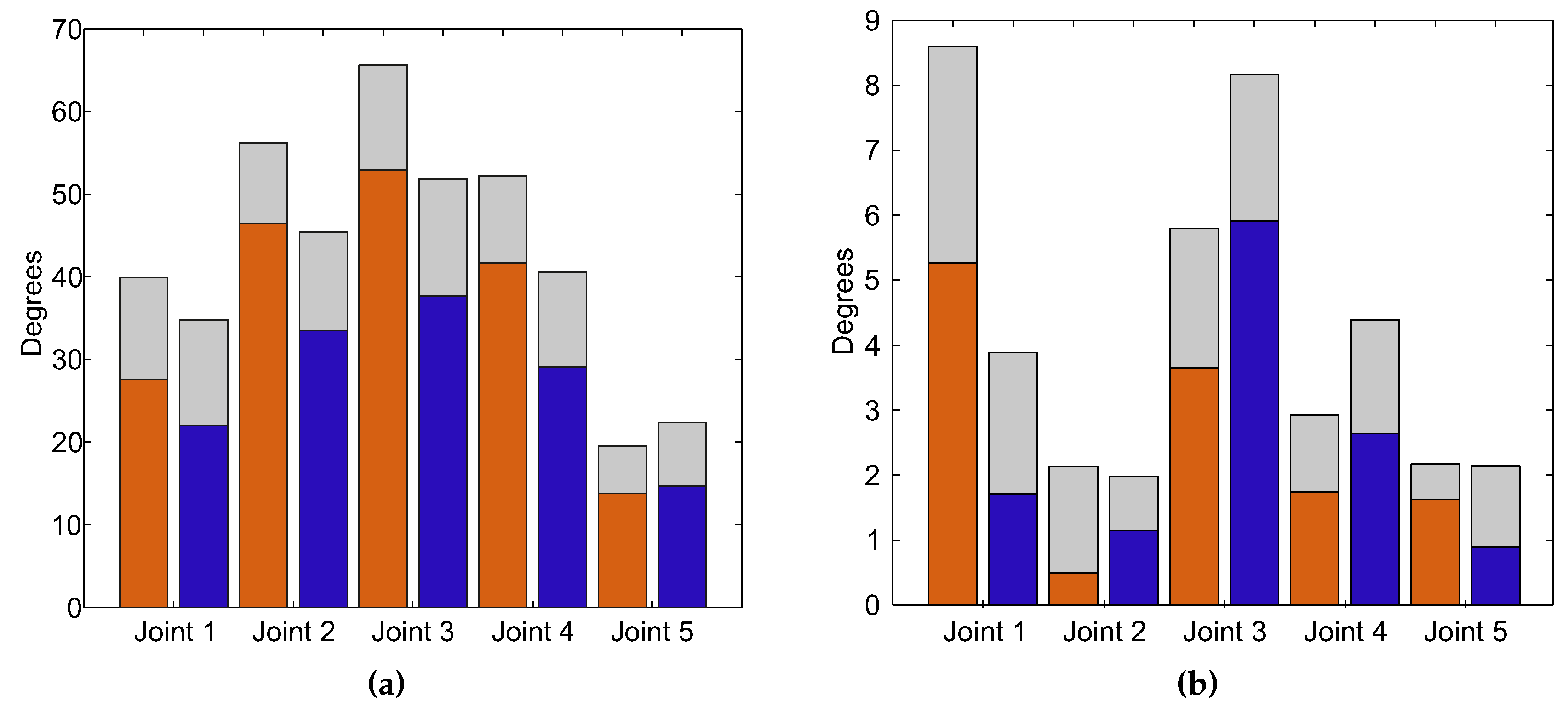

3.3. Arm Joint Range

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Bertomeu-Motos, A.; Lledó, L.D.; Díez, J.A.; Catalan, J.M.; Ezquerro, S.; Badesa, F.J.; Garcia-Aracil, N. Estimation of Human Arm Joints Using Two Wireless Sensors in Robotic Rehabilitation Tasks. Sensors 2015, 15, 30571-30583. https://doi.org/10.3390/s151229818
