An Indoor Navigation System for the Visually Impaired

Abstract

Navigation in indoor environments is highly challenging for the severely visually impaired, particularly in spaces visited for the first time. Several solutions have been proposed to deal with this challenge. Although some of them have been shown to be useful in real scenarios, they involve a considerable deployment effort or use artifacts that are not natural for blind users. This paper presents an indoor navigation system that was designed with usability as the quality requirement to be maximized. The solution identifies the position of a person and calculates the velocity and direction of their movements. Using this information, the system determines the user's trajectory, locates possible obstacles along that route, and offers navigation information to the user. The solution has been evaluated using two experimental scenarios. Although the results are not yet sufficient to support strong conclusions, they indicate that the system is suitable to guide visually impaired people through an unknown built environment.

1. Introduction

People with visual disabilities, i.e., partially or totally blind, are often challenged by places that are not designed for their special condition. Examples of these places are bus and train terminals, public offices, hospitals, educational buildings, and shopping malls. Several “everyday” objects that are present in most built environments become real obstacles for blind people, even putting at risk their physical integrity. Simple objects such as chairs, tables and stairs, hinder their movements and can often cause serious accidents.

Several proposals have tried to address this challenge in indoor and outdoor environments [1]. However, most of them have limitations, since this challenge involves many issues (e.g., accuracy, coverage, usability and interoperability) that are not easy to address with current technology. Therefore, this can still be considered an open problem.

This paper presents a prototype of a navigation system that helps the visually impaired to move within indoor environments. The design of the system focused on the usability of the solution, and also on its suitability for deployment in several built areas.

The main objective of the system is to provide, in real-time, useful navigation information that enables a user to make appropriate and timely decisions on which route to follow in an indoor space. In order to provide such information, the system must take into account all the objects in the immediate physical environment which may become potential “obstacles” for blind people. This kind of solution is known as a micro-navigation system [1]. Two main aspects should be addressed by this system to provide navigation support: (1) detection of the position and movement intentions of a user, and (2) positioning of all the objects or possible obstacles in the environment.

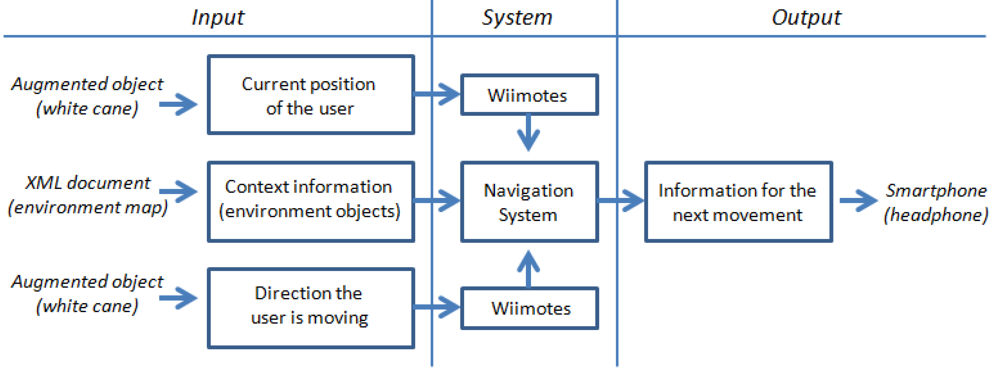

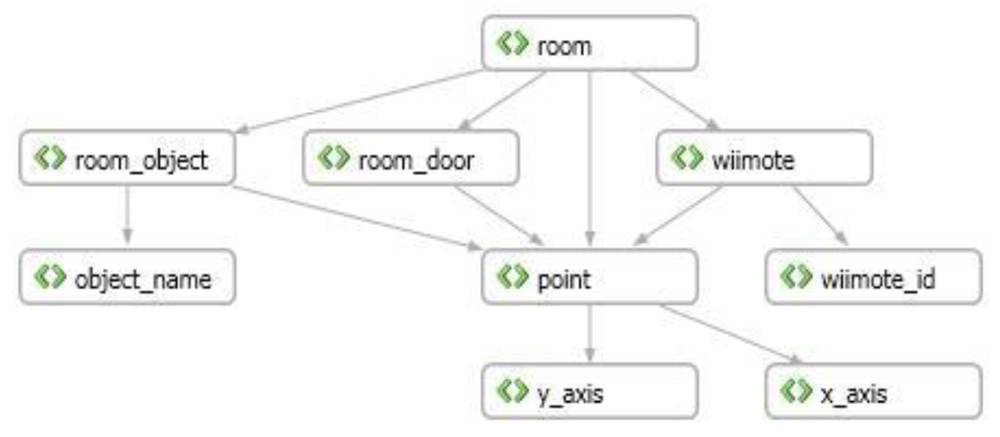

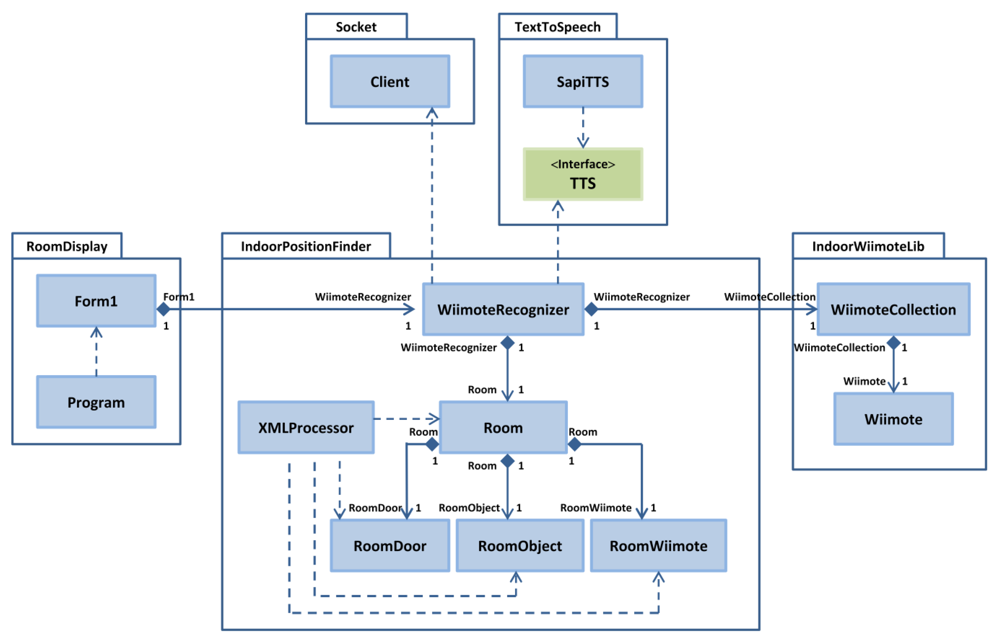

To address these issues, the solution relies on the interaction among several components to capture and process user and environment information, and to generate and deliver navigation messages to users while they move through an indoor area. The system's main components are the following: an augmented white cane with several embedded infrared lights, two infrared cameras (each embedded in a Wiimote unit), a computer running a software application that coordinates the whole system, and a smartphone that delivers the navigation information to the user through voice messages (Figure 1).

To request navigation information, the user pushes a button on the cane, which activates the infrared LEDs embedded in it. The software application then tries to determine the user's position and the presence of obstacles in the surrounding area. The user's position and movement are detected through the infrared cameras embedded in the Wiimotes, which transmit that information via Bluetooth to the software application running on the computer. The application combines this information with the data it holds about the obstacles in the area to generate single navigation voice messages, which are delivered through the user's smartphone. The system follows a common-sense approach [2] for the message delivery.
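The step that combines the user's position and direction with the known obstacle positions can be illustrated with a minimal sketch. It is not the authors' implementation: the circular obstacle model, the `Obstacle` type, the `nearest_obstacle_ahead` function and the `half_width` and `max_range` parameters are all assumptions introduced here for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Obstacle:
    """Hypothetical obstacle record: a named circle on the room map (metres)."""
    name: str
    x: float
    y: float
    radius: float


def nearest_obstacle_ahead(pos, heading_deg, obstacles, half_width=0.4, max_range=1.0):
    """Return (obstacle, distance_m) for the closest obstacle lying in the
    user's walking corridor, or None if nothing is within max_range.

    pos         -- (x, y) position of the user in metres
    heading_deg -- direction of movement, degrees counter-clockwise from the x axis
    half_width  -- assumed half-width of the walking corridor in metres
    max_range   -- assumed look-ahead distance in metres
    """
    hx = math.cos(math.radians(heading_deg))
    hy = math.sin(math.radians(heading_deg))
    best = None
    for ob in obstacles:
        dx, dy = ob.x - pos[0], ob.y - pos[1]
        along = dx * hx + dy * hy        # distance to the obstacle centre along the heading
        across = abs(dx * hy - dy * hx)  # lateral offset from the heading line
        gap = along - ob.radius          # distance to the obstacle's near edge
        if along > 0 and across <= half_width + ob.radius and gap <= max_range:
            if best is None or gap < best[1]:
                best = (ob, max(gap, 0.0))
    return best
```

For a user at the origin walking along the x axis, a table centred at (1.2, 0.1) with a 0.3 m radius would be reported at roughly 0.9 m, while an obstacle directly to the side would be ignored.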

The system prototype has been tested in two different scenarios. Although these results are still preliminary, they indicate the proposal is usable and useful to guide the visually impaired in indoor environments. The next section presents and discusses the related work. Section 3 establishes the requirements of the solution. Section 4 describes the navigation process and its main components. Section 5 explains the design and implementation of the software application that is in charge of coordinating all peripherals and processing the system information in order to deliver useful navigation messages to end-users. Section 6 presents and discusses the preliminary results. Section 7 presents the conclusions and future work.

2. Related Work

Many interesting proposals have been made in the area of location and mobility assistance for people with visual disabilities. These works are mainly focused on three types of environments [1]: outdoor [3–5], indoor [6–9], and some mix of the previous ones [10–12].

The research on outdoor environments mainly addresses the problem of user positioning during micro-navigation and macro-navigation [1]. Micro-navigation studies the delivery of information from the immediate physical environment, and macro-navigation explores the challenges of dealing with the distant environment. In both cases the use of global positioning systems (GPS) has proven to be quite useful in recognizing the user's position.

The studies focused on indoor environments have proposed several ad hoc technologies and strategies to deliver useful information to the user [1]. However, just some of them are suitable to be used by visually impaired people. For example, Sonnenblick [9] implemented a navigation system for indoor environments based on the use of infrared LEDs. Such LEDs must be strategically located in places used by the blind person to perform their activities (e.g., rooms and corridors), thus, acting as guides for them. The signal of these guiding LEDs is captured and interpreted by a special device which transforms it into useful information to support the user's movements. The main limitation of such a solution is the use of an infrared receptor instead of a device with large coverage such as an infrared camera. Because the infrared signal must be captured to identify the user's position, the receptor device must point directly at the light source (e.g., the LEDs), thus losing the possibility of smooth integration between the device and the environment.

Hub, Diepstraten and Ertl [6] developed a system to determine the position of objects and individuals in an indoor environment. That solution involved the use of cameras to detect objects and direction sensors to recognize the direction in which the user is moving. The main limitation of that proposal is the accessibility of the technology used to implement it, since the system requires a specialized device to enable the user to interact with the environment. This system also pre-establishes possible locations for the cameras, which generates further limitations; for example, the detection process requires the person to point the white cane at potential obstacles.

In a later work, Hub, Hartter and Ertl [7] went beyond their previous proposal and added to the system the capability of tracking various types of mobile objects, e.g., people and pets. Then, using an algorithm similar to human perception, they attempted to identify such tracked objects by comparing their color and shape with a set of known objects.

Treuillet and Royer [11] proposed an interesting vision-based navigation system to guide visually impaired people in indoor and outdoor environments. The positioning system uses a body-mounted camera that periodically takes pictures of the environment, and an algorithm to match (in real-time) particular references extracted from the images with 3D landmarks already stored in the system. This solution has shown very good results in locating people on memorized paths, but it is not suitable for environments that are visited for the first time. The same occurs with the proposal of Gilliéron et al. [13].

There are also several research works in the robotics and artificial intelligence fields that have studied the recognition of indoor scenes in real-time [14–17]. Some of these solutions allow for creating the reference map dynamically, e.g., the vision-based systems proposed by Davison et al. [18] and Strasdat et al. [19], or the ultrasound positioning system developed by Ran et al. [12]. Although they have been shown to be accurate and useful in several domains such as robotics, wearable computing and the automotive sector, they require that the vehicle (in our case the blind person) carry a computing device (e.g., a nettop) to sense the environment and to process such information in real-time. Since blind people must carry the white cane with them all of the time, the use of extra gadgets that are not particularly wearable typically jeopardizes the suitability of such solutions [20]. In that sense the solution proposed by Hesch and Roumeliotis [21] is particularly interesting because they instrumented a white cane, which is a basic tool for blind people. However, such a solution has two important usability limitations: (1) the sensors mounted on the cane (a laser scanner and a 3-axis gyroscope) are too large and heavy, which limits the user's movements, and (2) the laser scanner on the cane is directional, and therefore it has the same limitations as the previously discussed infrared-based solutions.

Radio Frequency Identification (RFID) is a technology commonly used to guide the visually impaired in indoor environments. For example, in [22,23] the authors propose a system based on smartphones that allows a blind person to follow a route drawn on the floor. This solution combines a cane with a portable RFID reader attached to it. Using this cane, a user can follow a specific lane, composed of RFID labels, on the floor of a supermarket. Kulyukin et al. [24] propose a similar solution, replacing the white cane with a robot that is in charge of guiding the visually impaired person.

Another RFID-based solution that supports the navigation of visually impaired people was proposed by Na [25]. The system, named BIGS (for Blind Interactive Guide System), consists of a PDA, an RFID reader, and several tags deployed on the floor. Using these elements the system can recognize the current location of the PDA's user, calculate the direction of the user, recognize voice commands and deliver voice messages. RFID-based solutions have two main limitations: (1) the reconfiguration of the positioning area (e.g., because it now has a new setting) involves more time and effort than other solutions, such as vision-based systems, and (2) the users are typically guided only through predefined paths.

A considerable number of works have also been conducted to determine users' position and perform object tracking in indoor environments in support of ubiquitous computing systems [26–29]. Although they have been shown to be useful for estimating the users' position, they do not address the problem of obstacle recognition in real-time systems. Therefore, they are only partially useful in addressing the stated problem.

Hervás et al. [30] introduced the concept of “tagging-context” for distributing awareness information about the environment and for providing automatic services to the augmented objects used in these environments. Finally, an interesting work regarding a network of software agents with visual sensors is presented in [31]. This system uses several cameras for recognition of users and tracking of their positions in an indoor space. However, these last two works do not address the particularities involved in supporting navigation for the visually impaired.

3. Requirements of the Solution

A list of functional and non-functional requirements was defined for the proposed navigation system. These requirements were based on the results of the study conducted by Wu et al. [32] and also on the experience of the authors as developers of solutions for blind people. The requirements were then validated with real end-users. Some non-functional requirements, such as privacy, security and interoperability, were not considered in the system at this stage, as a way to ease its evaluation process.

The general requirements that were included in the design of the proposed micro-navigation system were classified in the following categories:

Navigation model. These requirements are related to the model and not to its implementation. The main requirements related to this component are the following:

- Generality. The navigation model must be usable (with or without self-adaptations) in several indoor environments. This ensures that the user can count on navigation support in a large number of built areas.

- Usefulness. The model must allow the detection and positioning of mobile users and obstacles, and based on that, it has to provide useful navigation information.

- Accuracy. The model must rely on sufficiently accurate information about the user's movement and location, so that the system can support the navigation of blind people safely. Based on the authors' experience, the navigation system is considered suitable if the positioning error does not exceed 0.4 m in the worst case, and if the user's movement is detected within 500 ms in the worst case.

- Feasibility. The model implementation should be feasible using technologies that are accessible (in terms of cost, usability and availability) to the end-users.

- Multi-user. The model must consider the presence of more than one user in the same environment.

Navigation system. The requirements related to the implementation of the navigation model, i.e., the navigation system, are the following:

- User-centric. The services provided by the system must consider the particular disabilities of the users, e.g., their level of blindness.

- Availability. The system must maximize the availability of its services independently of the environment where the user is located. Thus, the solution becomes usable in several built areas.

- Identification. The system must be able to unequivocally identify the users.

- Multi-user. The navigation services provided by the system must consider the possible participation of more than one user in the same environment.

- Performance. The system performance must be good enough to provide navigation services on time, considering the users' movements, the walking speed and the obstacles in the environment. Based on the authors' experience, the response time to end-users was set to 300 ms.

- Usability. The use of the system should be as natural as possible for the end-users.

- Usefulness. The information delivered by the system must be useful and allow the users to navigate indoor environments properly, even if they are visiting those spaces for the first time.

- Economical feasibility. The cost of the system must be affordable to the end-users.
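The quantitative targets stated in the Accuracy and Performance requirements (0.4 m positioning error, 500 ms movement detection, 300 ms response time) could be checked against measurements with a small helper. The sketch below only illustrates that idea; the `Limits` class, the `violations` function and the measurement names are hypothetical and do not come from the system described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limits:
    """Worst-case targets taken from the requirements list."""
    max_position_error_m: float = 0.4  # Accuracy: positioning error
    max_detection_time_s: float = 0.5  # Accuracy: movement detection time
    max_response_time_s: float = 0.3   # Performance: response time to end-users


def violations(position_errors_m, detection_times_s, response_times_s, limits=Limits()):
    """Return the names of the targets whose worst measured value exceeds its limit."""
    worst = {
        "position_error_m": (max(position_errors_m), limits.max_position_error_m),
        "detection_time_s": (max(detection_times_s), limits.max_detection_time_s),
        "response_time_s": (max(response_times_s), limits.max_response_time_s),
    }
    return [name for name, (value, limit) in worst.items() if value > limit]
```

Because the requirements are phrased in terms of the worst case, the helper compares the maximum of each series against its limit rather than an average.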

6. Preliminary Results

In Sections 6.1 and 6.2 we report on the experiments performed using the proposed system. Moreover, in Section 6.3 we present some metrics on non-functional requirements of this solution. Section 6.4 discusses the preliminary results.

6.1. First Evaluation Process

During the first experimentation process, it was not possible to count on visually impaired people to perform the test. Therefore, we decided to begin a pre-experimentation process with blindfolded people in order to run a first analysis of the proposed solution (Figure 10). Five volunteers participated in this experiment.

In order to make the participation interesting for these volunteers, we set up the following challenge: they should all be able to cross a room just by using the information delivered through the application. The information delivered to them was not intended to indicate which movements the user must perform. It only provided contextual information that helped them make their own decisions about which route to follow. For example, if the person is moving towards a table, the system does not assume that the user must dodge it, since the user could be intentionally going to the table to pick up something. Therefore, the system informs the user when they are moving towards an object or a possible obstacle, and what type of object it is, for example “An object is one meter ahead”. These messages are configurable; therefore they can be changed according to the user's preferences.

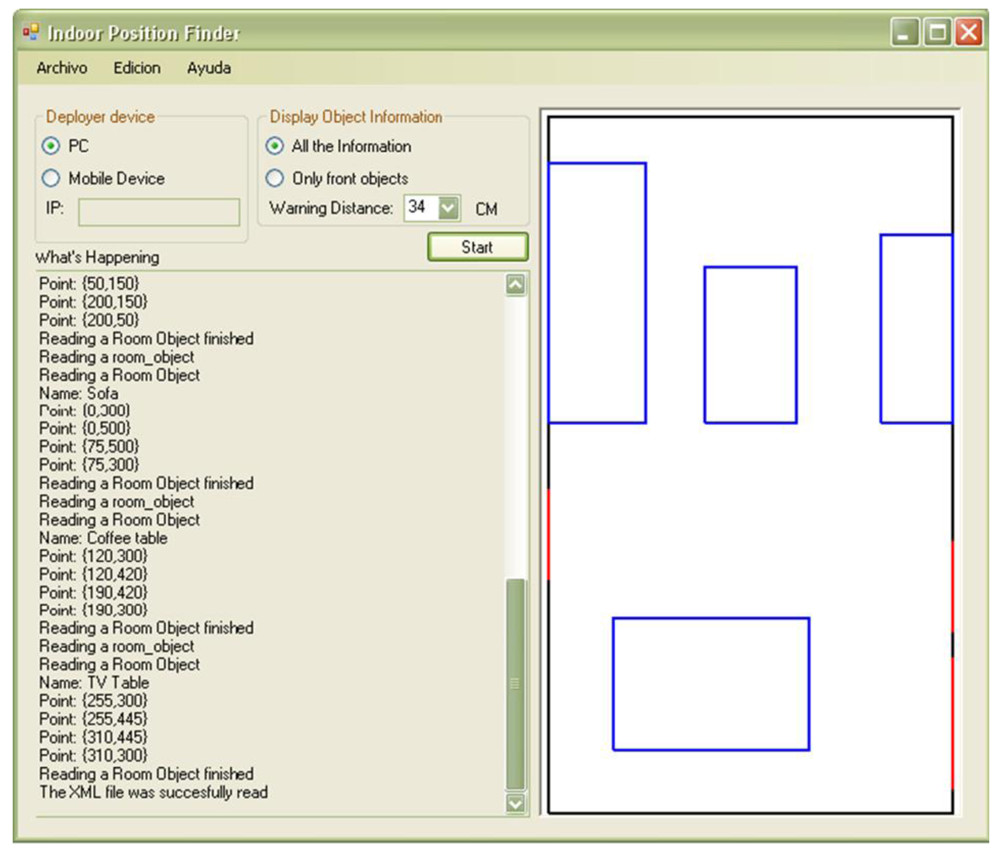

As shown in Figure 5, the warning distance is a configurable parameter. In this test the warning distance was set to 1 m. Another parameter that can be set according to the user's preferences is the waiting time between messages, i.e., the minimum time the system waits before delivering the next message to the user. In this experiment that time was set to 2 s.

The room used in this experiment was approximately 20 m² and contained several obstacles, which made the test more interesting (Figure 11). Two versions of the same system were used by each participant and a score was obtained in each test. One version of the system delivered the audio messages through speakers installed in the room, and the second one used the speakers of the smartphone.
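The interplay between the two parameters (warning distance and waiting time between messages) can be sketched as follows. This is an illustrative sketch under assumptions, not the code of the evaluated prototype: the `MessageThrottle` class, its method name and the message wording format are hypothetical.

```python
class MessageThrottle:
    """Decides whether a warning should be spoken, given the two
    user-configurable parameters described in the text."""

    def __init__(self, warning_distance_m=1.0, min_gap_s=2.0):
        self.warning_distance_m = warning_distance_m  # 1 m in the first test
        self.min_gap_s = min_gap_s                    # 2 s in the first test
        self._last_sent_s = None                      # time of the last spoken message

    def maybe_message(self, distance_m, now_s):
        """Return the message text, or None if nothing should be said."""
        if distance_m > self.warning_distance_m:
            return None  # obstacle is still beyond the warning distance
        if self._last_sent_s is not None and now_s - self._last_sent_s < self.min_gap_s:
            return None  # too soon after the previous message
        self._last_sent_s = now_s
        return f"An object is {distance_m:.1f} meters ahead"
```

With the default values, an obstacle at 0.9 m triggers a message, a second reading half a second later stays silent, and the next message can only be spoken once 2 s have elapsed since the previous one.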

The distribution of obstacles in the room was changed after each test so that the users would not memorize the room setting. Two equivalent object distributions were used in this experiment. The users randomly selected the room distribution and the version of the system to be used first.

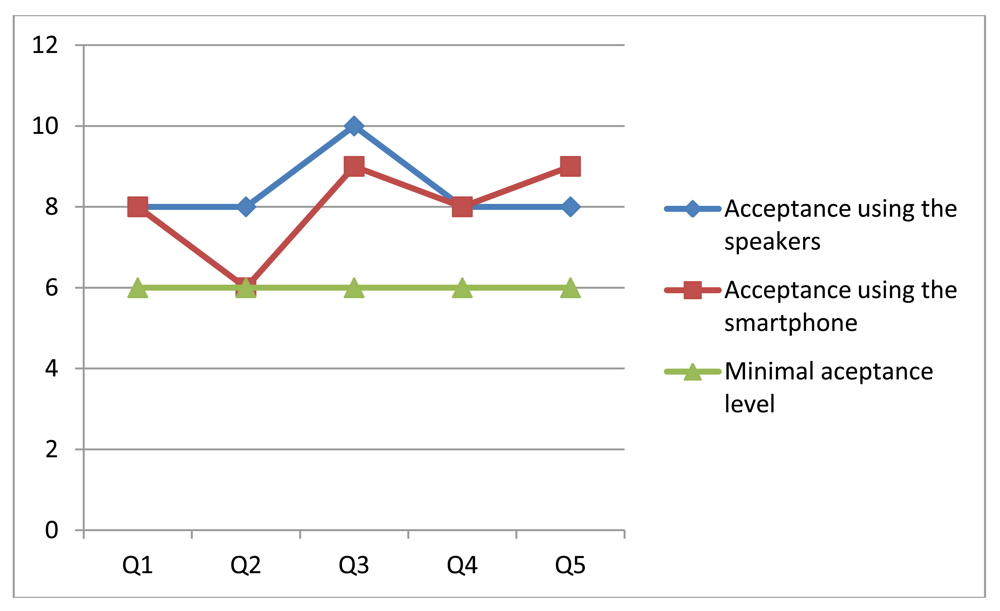

The obtained results were divided into two groups: with and without a smartphone. In both cases, the volunteers were asked five questions to capture their impressions regarding the system. The responses were obtained as soon as the participants finished the test to get their perception of the experience. Moreover, each participant performed the tests using both versions of the system in a consecutive way. We then tried to capture comparative scores between these two alternatives of the system.

Participants had to use a scale of 1 to 10 to represent their responses. For this scale it was considered that the distance between any pair of adjacent numbers is exactly the same (for example, the distance between 1 and 2 is exactly the same as from 2 to 3, and so on). The questions posed to the participants were the following:

(1) On a scale of 1 to 10, where 1 is “hard to use” and 10 corresponds to “easy to use”, how do you rate the system usability?

(2) On a scale of 1 to 10, where 1 is “incomprehensible” and 10 is “clear”, how understandable was the information delivered by the system?

(3) On a scale of 1 to 10, where 1 corresponds to “useless” and 10 corresponds to “very useful”, how useful is the information provided by the system?

(4) On a scale of 1 to 10, where 1 corresponds to “very delayed” and 10 corresponds to “immediate”, how fast is the delivery of information from the system?

(5) On a scale of 1 to 10, where 1 is “very imprecise” and 10 corresponds to “exact”, how precise was the contextual information provided by the system?

Given the questions and weights indicated by the users, a general criterion of acceptability was defined for each evaluated item. In order to do that, the tests and the obtained results were analyzed carefully. Based on this analysis it was established that the minimal acceptable value corresponds to 6 on a scale of 1 to 10. Figure 12 shows the median of the scores assigned by the users to each version of the system.
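The acceptance check described above (median score per question compared with the minimal acceptable value of 6) amounts to a very small computation. The sketch below shows it; the function name is hypothetical and the scores in `example_scores` are made-up illustrative values, not the data collected in this study.

```python
from statistics import median

ACCEPTANCE_THRESHOLD = 6  # minimal acceptable value on the 1-10 scale


def evaluate(scores_by_question, threshold=ACCEPTANCE_THRESHOLD):
    """Map each question to (median score, whether it meets the threshold)."""
    result = {}
    for question, scores in scores_by_question.items():
        m = median(scores)
        result[question] = (m, m >= threshold)
    return result


# Illustrative values only; NOT the participants' actual answers.
example_scores = {
    "usability": [7, 8, 6, 9, 7],
    "understandability": [5, 6, 4, 7, 5],
}
```

Using the median rather than the mean keeps a single extreme answer from shifting the result, which matters with as few as five participants.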

The results indicate a favorable evaluation, with scores over the minimal acceptance level. However, analyzing the test results obtained when messages were delivered through the smartphone, we see that in question 2 (related to the understandability of the information delivered by the system) the solution did not meet the minimal acceptance criterion. This was because the messages were not delivered as fluently as expected, since there was a poor communication link between the laptop and the smartphone. In particular, a single peer-to-peer link was used for communication between these devices. That situation was then addressed through the use of the HLMP platform [35].

All participants completed the challenge. Their walking speed was, on average, 0.2 m per second with either version of the system. The scores obtained in the rest of the items were similar for both versions of the system. This could mean that the users did not perceive an advantage in the use of a smartphone, although operationally it represented an important contribution.

6.2. Second Evaluation Process

The second evaluation process involved a user population and physical scenario different from the previous one. The system prototype used in these tests was improved, mainly in terms of performance, compared to the one used in the previous experiment. The only change made to the system was the use of HLMP as the communication platform that manages the interactions between the notebook and the smartphone. This communication infrastructure is able to keep a fairly stable communication throughput among the devices participating in the MANET, and it also provides advanced services to deal with the packet loss and micro-disconnections that usually affect this type of communication. The inclusion of HLMP represented an important improvement to the system performance. Although this improvement was not measured formally, it was clearly identified in the video records of the experimentation process and also in the users' comments.

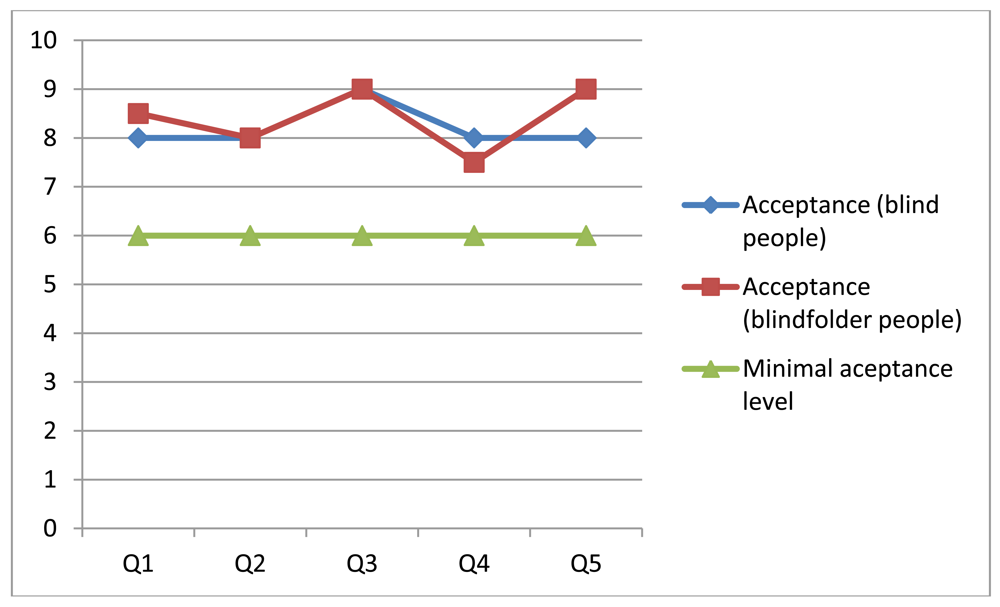

Nine people participated in this experimentation process: two blind people and seven blindfolded engineering students. The physical scenario involved two rooms of 20 m² each (Figure 13). The challenge for the participants was similar to the previous one, but now the experimentation area involved two rooms. In addition, the participants used two settings during the tests: (1) the navigation system with the smartphone, or (2) just their intuition and walking capabilities.

After the test, the participants answered the same questions as in the previous experiment. The results are shown in Figure 14. Although the number of participants is still low, their opinion of the usability and usefulness of the navigation system is positive, and their perception is better than in the previous experiment. A possible reason is the performance improvement made to this version of the navigation system. These results also show almost no difference between the perceptions of the blind and the blindfolded participants regarding the navigation system.

As in the previous case, all participants were able to complete the challenge when they used the navigation system. The blind participants and just one blindfolded person also completed the challenge without navigation support. Table 1 summarizes the average walking speeds of each test, considering only the completed challenges. Each test was recorded on video, which allowed us to determine times, distances and walking speeds. These results show that blind and blindfolded people improved their walking speed when the navigation system was used, which indicates the system was useful.

6.3. Evaluation of Transversal Issues

During the second evaluation process, some additional metrics were captured to evaluate transversal aspects of the solution. In order to understand the system performance, we measured the time period between the instant in which the user pushes the button (asking for navigation support) and the instant in which the first voice message is delivered to the user. This period was below 2 s in 90% of the cases. It made no difference whether the user was moving or stationary when asking for supporting information. These preliminary numbers and also the responses to question 4 (shown in the two previous experimentation processes) lead us to believe that the system has an acceptable performance level.

Considering all cases when the white cane was in an area visible by both Wiimotes (see Figure 7), the system was able to deliver accurate and useful information to the end-user. Considering these numbers and the responses to questions 3 and 5 we can say that the system seems to be useful and accurate enough to support the navigation for blind people in indoor environments.

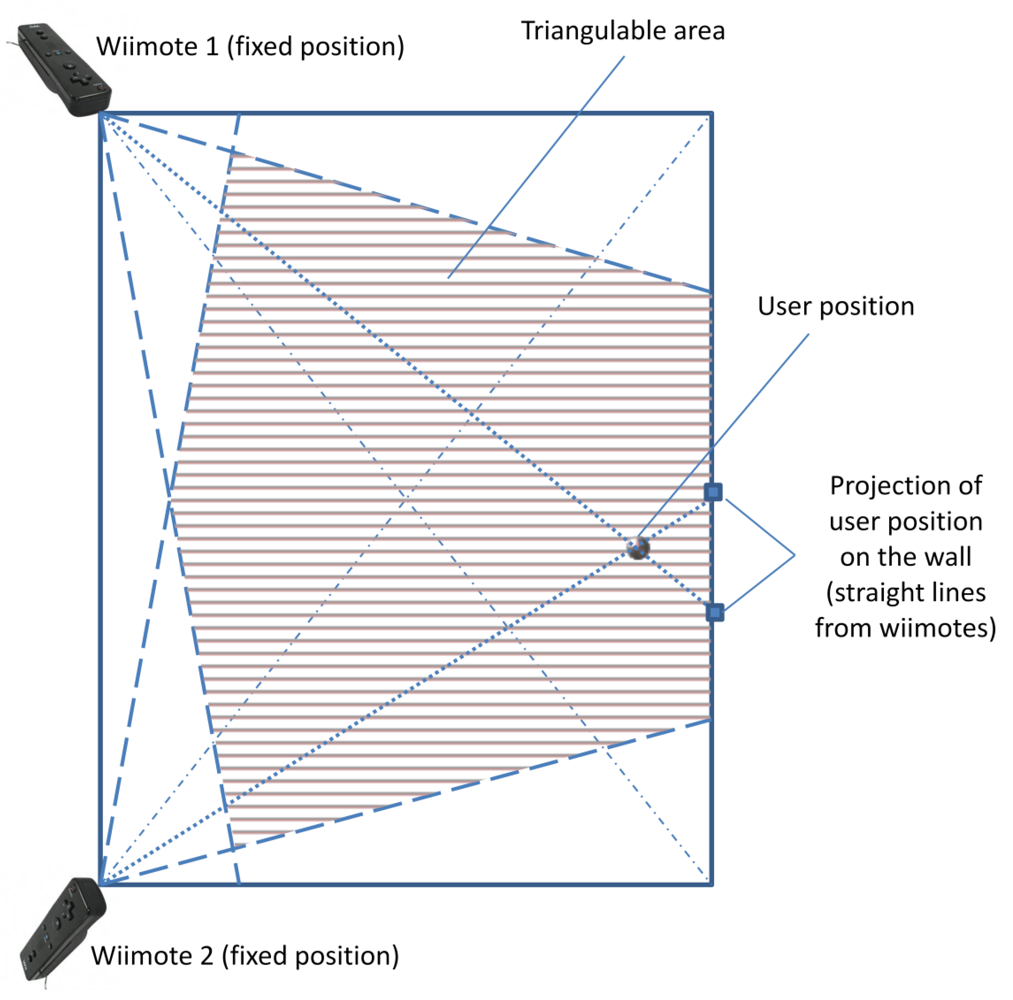

The system availability was also deemed acceptable. The navigation services were available at all times; however, the positioning strategy could accurately determine the user's position only when the white cane was in the area visible to both Wiimotes, which represents approximately 65% of the room area (see Figure 7). In areas visible to just one Wiimote (i.e., approximately 25% of the room area), the position was estimated from the user's last known position and the velocity and direction of the user's movement. Ten information requests were made in those partially visible areas. In 9 of the 10 cases the system was able to provide useful (but not accurate) information to the user.
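An estimate built from the last known position plus the velocity and direction of movement can be illustrated with a constant-velocity extrapolation. The text does not specify the exact estimation method, so the sketch below is an assumption, and the function and parameter names are hypothetical.

```python
import math


def estimate_position(last_pos, speed_m_s, heading_deg, elapsed_s):
    """Extrapolate the user's position assuming constant speed and heading.

    last_pos    -- (x, y) of the last accurately measured position, in metres
    speed_m_s   -- last known walking speed in metres per second
    heading_deg -- last known direction, degrees counter-clockwise from the x axis
    elapsed_s   -- time since the last accurate measurement, in seconds
    """
    theta = math.radians(heading_deg)
    distance = speed_m_s * elapsed_s
    return (last_pos[0] + distance * math.cos(theta),
            last_pos[1] + distance * math.sin(theta))
```

For example, a user last seen at (1.0, 2.0) walking at 0.2 m/s along the y axis would be estimated near (1.0, 3.0) after 5 s. The error of such an estimate grows with the elapsed time and with any change of direction, which is consistent with the information in partially visible areas being useful but not accurate.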

6.4. Discussion

Considering the requirements defined in Section 3 (user-centric, performance, usability, usefulness and economical feasibility), we can say that the system addresses most of them. Concerning this last requirement, it is clear that the cost of this solution can be reduced considerably if specialized hardware is used, e.g., an infrared camera instead of a Wiimote.

The availability of the solution was partially addressed in the current version of the system. Although the system is potentially able to manage large areas, its main limitation is the use of Bluetooth, which has a short communication range. However, this limitation can be overcome by using WiFi communication.

User individualization and support for multiple users were not formally considered in the current version of the system; however, they were considered in the navigation model. Because every room and user has an individual identification, the system can manage collections of these elements by extending the XML file. The only concern could be the system performance when a large number of these components are managed simultaneously by a single computer. In particular, the network throughput could become a bottleneck that negatively affects the performance, and therefore the usability and usefulness of the system. This issue can be addressed by distributing the coordination process over more than one computer.

7. Conclusions and Further Work

This article presented the prototype of a micro-navigation system that helps the visually impaired to move within indoor environments. The system uses few components and accessible technology. The results of the preliminary tests indicate that the solution is useful and usable to guide the user in indoor environments. However, it is important to continue testing the solution in real environments, involving visually impaired people, to obtain feedback that allows us to improve the proposal in the right direction.

This solution not only allows a user with visual disabilities to move within an indoor environment while avoiding obstacles, but it could also help them interact with the environment, given that the system has mapped all the objects found therein. For example, if the user wants to sit down to rest, the system can tell them where a chair or a sofa is situated. The system can also be used to promote face-to-face meetings of blind people in built areas.

Regarding the initial data capture (i.e., initial settings), the article presents a software application that lets the developer create room maps in a graphic way, by drawing the walls and objects. This tool translates this vector map to the XML file required by the system, which simplifies the rooms' specification and the system deployment.

The most important limitation of the system is that it will fail if a piece of furniture, for instance a chair, is moved. When an object in the environment is moved, the map needs to be refreshed. Nevertheless, the system could be deployed in places that do not change frequently, such as museums, theaters, hospitals, public administration offices and building halls.

Although the developed prototype and the pre-experimentation phase met all our expectations, more rigorous experiments must be designed and conducted to identify the real strengths and weaknesses of this proposal. Particularly, various non-functional requirements such as privacy, security and interoperability must be formally addressed by this proposal.

The algorithm used for detecting the objects and the movement of the users in the environment works satisfactorily. However, different algorithms could be tested in order to improve the system accuracy.

The limits of this proposal need to be established in order to identify the scenarios where the system can be a contribution for the visually impaired. That study will also help determine improvement areas of this solution.

Acknowledgments

This paper has been partially supported by CITIC-UCR (Centro de Investigación en Tecnologías de la Información y Comunicación de la Universidad de Costa Rica), and by FONDECYT (Chile), grant 1120207 and by LACCIR, grant R1210LAC002.

References

- Bradley, N.A.; Dunlop, M.D. An experimental investigation into wayfinding directions for visually impaired people. Pers. Ubiquitous Comput. 2005, 9, 395–403.

- Santofimia, M.J.; Fahlman, S.E.; Moya, F.; López, J.C. A Common-Sense Planning Strategy for Ambient Intelligence. Proceedings of the 14th International Conference on Knowledge-Based and Intelligent Information and Engineering Systems, Cardiff, UK, 8–10 September 2010; LNCS 6277. pp. 193–202.

- Holland, S.; Morse, D.R.; Gedenryd, H. Audio GPS: Spatial Audio in a Minimal Attention Interface. Proceedings of the 3rd International Workshop on Human Computer Interaction with Mobile Devices, Lille, France, 10 September 2001; pp. 28–33.

- Sanchez, J.; Oyarzun, C. Mobile Assistance Based on Audio for Blind People Using Bus Services. In New Ideas in Computer Science Education (In Spanish); Lom Ediciones: Santiago, Chile, 2007; pp. 377–396.

- Sanchez, J.; Saenz, M. Orientation and mobility in external spaces for blind apprentices using mobile devices. Mag. Ann. Metrop. Univ. 2008, 8, 47–66.

- Hub, A.; Diepstraten, J.; Ertl, T. Design and Development of an Indoor Navigation and Object Identification System for the Blind. Proceedings of the ACM SIGACCESS Conference on Computers and Accessibility, Atlanta, GA, USA, 18–20 October 2004; pp. 147–152.

- Hub, A.; Hartter, T.; Ertl, T. Interactive Localization and Recognition of Objects for the Blind. Proceedings of the 21st Annual Conference on Technology and Persons with Disabilities, Los Angeles, CA, USA, 22–25 March 2006; pp. 1–4.

- Pinedo, M.A.; Villanueva, F.J.; Santofimia, M.J.; López, J.C. Multimodal Positioning Support for Ambient Intelligence. Proceedings of the 5th International Symposium on Ubiquitous Computing and Ambient Intelligence, Riviera Maya, Mexico, 5–9 December 2011; pp. 1–8.

- Sonnenblick, Y. An Indoor Navigation System for Blind Individuals. Proceedings of the 13th Annual Conference on Technology and Persons with Disabilities, Los Angeles, CA, USA, 17–21 March 1998; pp. 215–224.

- Coroama, V. The Chatty Environment—A World Explorer for the Visually Impaired. Proceedings of the 5th International Conference on Ubiquitous Computing, Seattle, WA, USA, 12–15 October 2003; pp. 221–222.

- Treuillet, S.; Royer, E. Outdoor/indoor vision-based localization for blind pedestrian navigation assistance. Int. J. Image Graph. 2010, 10, 481–496.

- Ran, L.; Helal, S.; Moore, S. Drishti: An Integrated Indoor/Outdoor Blind Navigation System and Service. Proceedings of the 2nd Annual Conference on Pervasive Computing and Communications, Orlando, FL, USA, 14–17 March 2004; pp. 23–32.

- Gilliéron, P.; Büchel, D.; Spassov, I.; Merminod, B. Indoor Navigation Performance Analysis. Proceedings of the European Navigation Conference GNSS, Rotterdam, The Netherlands, 17–19 May 2004.

- Bosch, A.; Muñoz, X.; Martí, R. A review: Which is the best way to organize/classify images by content? Image Vis. Comput. 2007, 25, 778–791.

- Espinace, P.; Kollar, T.; Roy, N.; Soto, A. Indoor Scene Recognition through Object Detection. Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–8 May 2010; pp. 1406–1413.

- Quattoni, A.; Torralba, A. Recognizing Indoor Scenes. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 413–420.

- Santofimia, M.J.; Fahlman, S.E.; del Toro, X.; Moya, F.; López, J.C. A semantic model for actions and events in ambient intelligence. Eng. Appl. Artif. Intell. 2011, 24, 1432–1445.

- Davison, A.J.; Reid, I.D.; Molton, N.D.; Stasse, O. MonoSLAM: Real-time single camera SLAM. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1052–1067.

- Strasdat, H.; Montiel, J.M.; Davison, A.J. Real-Time Monocular SLAM: Why Filter? Proceedings of the 2010 IEEE International Conference on Robotics and Automation (ICRA), Anchorage, AK, USA, 3–8 May 2010; pp. 2657–2664.

- Velázquez, R. Wearable Assistive Devices for the Blind. In Wearable and Autonomous Biomedical Devices and Systems for Smart Environment: Issues and Characterization; LNEE 75; Lay-Ekuakille, A., Mukhopadhyay, S.C., Eds.; Springer: Berlin, Germany, 2010; Chapter 17; pp. 331–349.

- Hesch, J.A.; Roumeliotis, S.I. Design and analysis of a portable indoor localization aid for the visually impaired. Int. J. Robot. Res. 2010, 29, 1400–1415.

- López-de-Ipiña, D.; Lorido, T.; López, U. BlindShopping: Enabling Accessible Shopping for Visually Impaired People through Mobile Technologies. Proceedings of the 9th International Conference on Smart Homes and Health Telematics, Montreal, Canada, 20–22 June 2011; LNCS 6719. pp. 266–270.

- López-de-Ipiña, D.; Lorido, T.; López, U. Indoor Navigation and Product Recognition for Blind People Assisted Shopping. Proceedings of the 3rd International Workshop on Ambient Assisted Living, Malaga, Spain, 8–10 June 2011; LNCS 6693. pp. 33–40.

- Kulyukin, V.; Gharpure, C.; Nicholson, J.; Pavithran, S. RFID in Robot Assisted Indoor Navigation for the Visually Impaired. Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Sendai, Japan, 28 September–2 October 2004; pp. 1979–1984.

- Na, J. The Blind Interactive Guide System Using RFID-Based Indoor Positioning System. Proceedings of the 10th International Conference on Computers Helping People with Special Needs, Linz, Austria, 11–13 July 2006; LNCS 4061. pp. 1298–1305.

- Bravo, J.; Hervás, R.; Sanchez, I.; Chavira, G.; Nava, S. Visualization services in a conference context: An approach by RFID technology. J. Univ. Comput. Sci. 2006, 12, 270–283.

- Castro, L.A.; Favela, J. Reducing the uncertainty on location estimation of mobile users to support hospital work. IEEE Trans. Syst. Man Cybern. Part C: Appl. Rev. 2008, 38, 861–866.

- López-de-Ipiña, D.; Díaz-de-Sarralde, I.; García-Zubia, J. An ambient assisted living platform integrating RFID data-on-tag care annotations and twitter. J. Univ. Comput. Sci. 2010, 16, 1521–1538.

- Vera, R.; Ochoa, S.F.; Aldunate, R. EDIPS: An easy to deploy indoor positioning system to support loosely coupled mobile work. Pers. Ubiquitous Comput. 2011, 15, 365–376.

- Hervás, R.; Bravo, J.; Fontecha, J. Awareness marks: Adaptive services through user interactions with augmented objects. Pers. Ubiquitous Comput. 2011, 15, 409–418.

- Castanedo, F.; Garcia, J.; Patricio, M.A.; Molina, J.M. A Multi-Agent Architecture to Support Active Fusion in a Visual Sensor Network. Proceedings of the ACM/IEEE International Conference on Distributed Smart Cameras, Stanford, CA, USA, 7–11 September 2008; pp. 1–8.

- Wu, H.; Marshall, A.; Yu, W.; Cheng, Y.-M. Applying HTA Method to the Design of Context-Aware Indoor Navigation for the Visually-Impaired. Proceedings of the 4th International Conference on Mobile Technology, Applications, and Systems, Singapore, 12–14 September 2007; pp. 632–635.

- Ishii, H.; Ullmer, B. Tangible Bits: Towards Seamless Interfaces between People, Bits and Atoms. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Atlanta, GA, USA, 22–27 March 1997; pp. 234–241.

- Guerrero, L.A.; Ochoa, S.F.; Horta, H. Developing augmented objects: A process perspective. J. Univ. Comput. Sci. 2010, 16, 1612–1632.

- Rodríguez-Covili, J.F.; Ochoa, S.F.; Pino, J.A.; Messeguer, R.; Medina, E.; Royo, D. A Communication Infrastructure to Ease the Development of Mobile Collaborative Applications. J. Netw. Comput. Appl. 2011, 34, 1883–1893.

- Williams, A.; Rosner, D.K. Wiimote Hackery Studio Proposal. Proceedings of the 4th ACM International Conference on Tangible and Embedded Interaction, Cambridge, MA, USA, 25–27 January 2010; pp. 365–368.

| | Blind People | Blindfolded People |
|---|---|---|
| With Navigation Support | 0.7 m/s | 0.2 m/s |
| Without Navigation Support | 0.4 m/s | 0.05 m/s |

© 2012 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Guerrero, L.A.; Vasquez, F.; Ochoa, S.F. An Indoor Navigation System for the Visually Impaired. Sensors 2012, 12, 8236-8258. https://doi.org/10.3390/s120608236