1. Introduction

Throughout the recent years, triggered by an increasingly more profound understanding of disease mechanisms, clinical researchers have come to the conclusion that the assumption of a homogenous treatment effect throughout the patient population of interest does not always hold true. Instead, it has become known that there are subgroups of patients which may have a larger benefit as compared to the total population. Usually, these subgroups are identified with an (often binary) biomarker, and patients are tested for their biomarker status with a suitable bioassay. We here can distinguish between two types of biomarkers: in case that a biomarker can be used to predict the most likely prognosis of an individual patient, the biomarker is called “prognostic”. If, however, a biomarker is likely to predict the response to a specific therapy, it is called “predictive”, see, e.g., [

1]. There are also many cases where a biomarker shows both predictive and prognostic properties.

There exist several examples of therapies which have only been approved for a specific subset of patients identified by a predictive biomarker, such as human epidermal growth factor receptor 2 (HER2) overexpression for the treatment of breast cancer patients, Kirsten rat sarcoma viral oncogene homolog (KRAS) mutations in patients with colorectal cancer, and epidermal growth factor receptor (EGFR) mutations for patients with non-small cell lung cancer [

1].

Until recently, the most common approach to evaluate potentially targeted therapies was to identify the most promising subgroup within an exploratory phase II trial and then to investigate this subgroup within a subsequent confirmatory phase III trial. However, this approach has the disadvantage that the data obtained in the exploratory phase II trial cannot be incorporated into the proof of efficacy which can only be claimed based on the data from the confirmatory trial. So-called adaptive enrichment designs, which have recently been proposed as an alternative (see, e.g., [

2–

13]), do not show this disadvantage. They allow the possibility to select the target population mid-trial at an interim analysis to subsequently investigate the most promising target population, and finally to combine the data from both stages of the trial for the proof of efficacy. There exists a broad range of designs for biomarker-based trials. For an overview we refer to [

1]. The investigation of one or more target populations within a single trial yields the problem of multiple testing. In order to control the nominal significance level, adaptive enrichment designs address this issue by incorporating adjustment methods for multiple testing.

Since it crucially influences the properties and outcome of the trial, the role of the applied interim decision rule should not be undervalued. However, there exists only very sparse literature on the impact of the applied interim decision rule on design properties such as power, type I error rate or probability for a correct selection. As has been shown recently for the case of a normally distributed outcome [

14,

15], the role of the applied decision rule is vital for a clinical trial with subgroup selection. Hence, this article aims to further investigate the role of the applied decision rule in an adaptive enrichment design in case of a binary outcome variable, which has not yet been covered in the literature. Another important issue concerning the determination of a decision rule with desirable properties is the generally common uncertainty about treatment effects. When prior knowledge about treatment effects can be modeled by prior distributions and the sustained loss for a false decision can be quantified by means of a loss function, it is possible to determine optimal decision rules which minimize the expected loss. In this article, we derive such optimal rules, discuss their properties and investigate their performance in an adaptive enrichment trial by means of a simulation study.

The article is structured as follows: Section 2 introduces the test problem and some notation. Section 3 presents the adaptive enrichment design and the related testing procedure. Optimal decision rules are derived in Section 4 for a wide range of scenarios. Section 5 investigates the impact of these rules in terms of power and probability for a correct selection within the proposed trial design by means of a simulation study. The practical utility of our proposed rules is illustrated in Section 6 by a clinical trial example. Finally, we conclude with a discussion in Section 7.

2. Notation and General Considerations

Throughout this article, a parallel group randomized controlled trial is considered, where an experimental treatment

T is compared to a control treatment

C. The participants of the trial stem from a total patient population

G0 which contains a subset

G1 and a complementary subset

G2 :=

G0 \ G1. Patients from

G1 are expected to have a greater benefit from the investigated therapy and are identified by a biomarker. Biomarker status is assessed by a bioassay and patients with a positive bioassay outcome are identified as biomarker-positive. Let us assume that that treatment allocation is balanced and both treatment groups are assumed to contain an identical proportion of biomarker-postive patients π. The primary outcome is a binary variable taking values 0 and 1, where 1 stands for a favorable und 0 for an unfavorable event. Let

pT1 and

pC1 be the rates of success for subgroup

G1 in the treatment and control group, respectively, and

pT2 and

pC2 be the respective treatment success rates in the complementary subset

G2. The efficacy measures of our trial are the differences in event rates ∆

1 :=

pT1 −

pC1, ∆

2 :=

pT2 −

pC2 and the difference in event rates in the total population ∆

0 = π∆

1 + (1 − π)∆

2. The one-sided global null and alternative hypothesis for our trial are then:

This global test problem consists of two local test problems for the respective populations of interest:

In order to properly control the family wise error rate in this situation of multiple testing, a closed testing procedure will be applied. This means that a local null hypothesis, e.g.,

, can only be rejected at level a, if the global hypothesis H0 which implies

is also rejected at level a. Further details on the testing procedure will be described in the following section.

The outcome variables are assumed to be independent with:

where

Ber(

p) denotes the Bernoulli distribution which is the probability distribution of a random variable taking the value 1 with a success probability of

p and value 0 with a failure probability of

q = 1−

p.

3. Trial Design and Adaptive Testing Procedure

In this article, we consider a two-stage adaptive enrichment trial which was proposed for a time-to-event outcome by Jenkins

et al. [

7] which can be transferred to the setting of a binary outcome variable by appropriate modifications. The proposed design consists of two trial stages

I and

II with an

a priori fixed sample size for both stages of

n patients per treatment group, which may, however, change after an interim analysis after stage

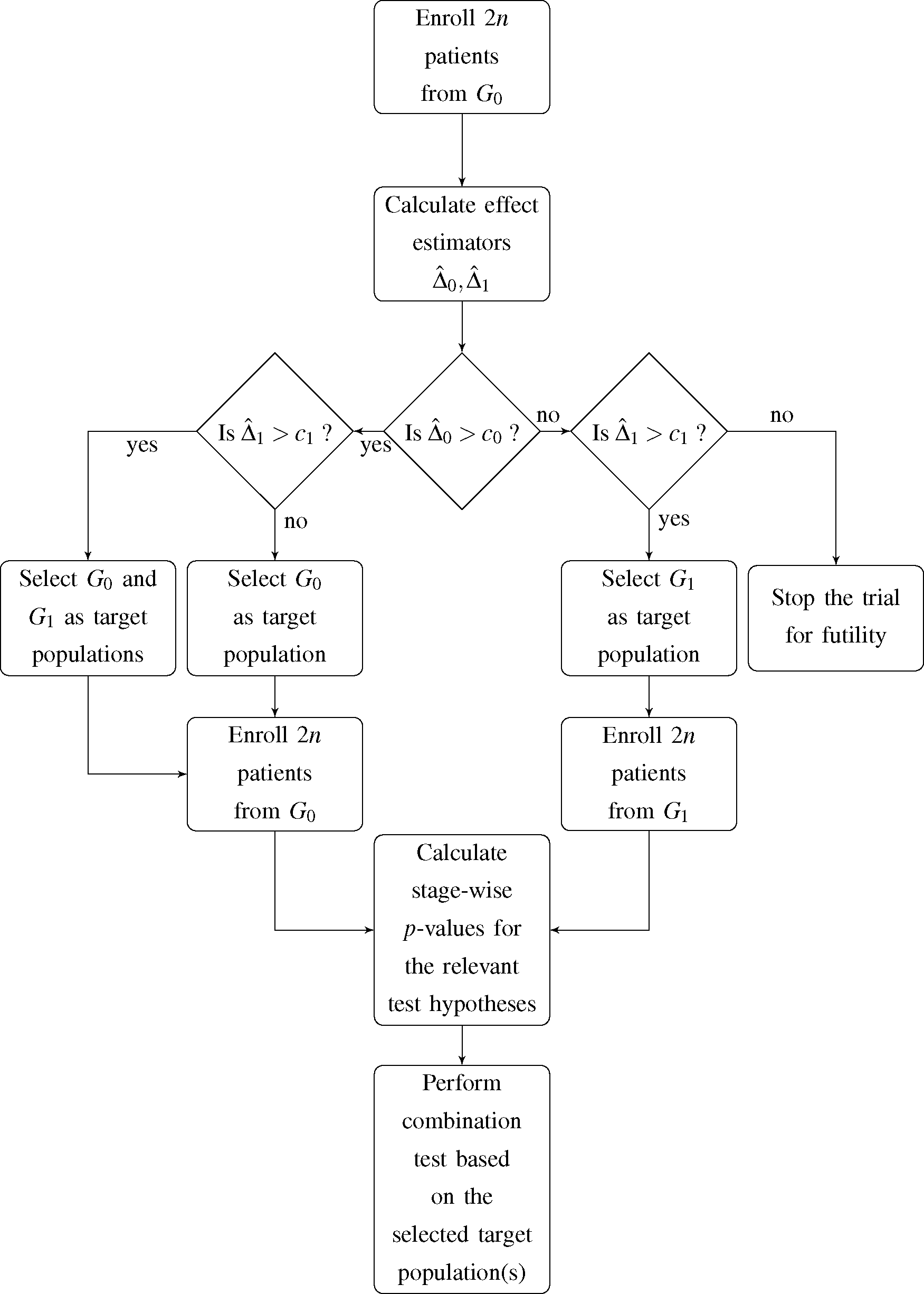

I. At this point, an interim decision concerning the target population for the subsequent stage of the trial has to be made. The decision is based on the observed event rates in the first stage of the trial and incorporates four possible options for the second stage and, accordingly, the final analysis:

- (1)

G0 and G1 are selected as co-primary target populations

- (2)

G0 is selected as the only target population

- (3)

G1 is selected as the only target population

- (4)

The trial is stopped for futility

Jenkins

et al. [

7] expressed the decision rule in terms of the estimated hazard ratio in the total population and subgroup. Since we deal with a binary outcome variable, we choose the observed difference in event rates in both

G0 and

G1 as decision criterion. Hence, if the observed difference in event rates within population

Gi,

, does not exceed the pre-specified decision threshold

ci,

i = 0,1, the respective population is dropped as a target population for the second stage of the trial. A flowchart of the proposed trial design is shown in

Figure 1.

3.1. First Trial Stage

Let

be the event rate estimators of the first stage of the trial. The treatment effect estimators are then:

Test statistics

and stage-wise

p-values for the first stage of the trial,

,

are defined as:

where Φ denotes the distribution function of the standard normal distribution. The

p-value

corresponds to the global null hypothesis

H0 and is a multiplicity-corrected

p-value according to the method of Hochberg [

16]. We chose the

z-test for difference in proportions to test the trial hypotheses due to their one-sided nature. It should be noted that the same method is also used in one of the most frequently used software for planning and analysis of adaptive enrichment designs, ADDPLAN™ [

17].

3.2. Second Trial Stage

In case both total population and subgroup are selected as co-primary target population or only the total population is chosen for the second stage of the trial, this will yield event rate estimators:

and test statistics

and

p-values

will be calculated analogously as for the first trial stage.

In case that the subgroup is chosen as target population at interim, 2

n bioassay-positive patients will be enrolled for the second stage of the trial. Hence, the event rate estimators now are:

This yields the following test statistics and

p-value:

In analogy to [

7], for the co-primary case,

i.e., when both populations are considered as target populations, the hypotheses

H0,

will be assessed via the following combination test statistics:

Here, we chose the reasonable approach to weigh the stage-wise

p-values equally due to the identical sample sizes across stages. The combined test statistics in case of selecting the total population as the only target population are only slightly different:

Since the hypothesis

was dropped at interim,

is chosen as the

p-value for the global hypothesis

H0 at the second trial stage, see [

7].

If enrollment is restricted to the subgroup only after the interim analysis, we have:

Note that

is chosen as the p-value for the global null hypothesis at the second stage and that the weights for

are adjusted in order to properly reflect the different sample sizes of patients from G1 enrolled in stages I and II.

Finally, efficacy of the treatment will be analyzed by first testing H0. If the null hypothesis can be rejected at one-sided significance level α,

and

will be tested at the same a-level if the respective hypothesis was not dropped at interim. Note that a claim of efficacy in either of the target populations is only valid if one of the local null hypotheses can be rejected but cannot be justified when only H0 can be rejected.

4. Optimization of Decision Rules

When planning a clinical trial with the design described above, the decision thresholds (

c0,

c1) have to be chosen carefully. Usually, they should reflect considerations in which situation a treatment effect is meaningful enough to pursue investigation of the therapy in the respective study population. The determination of decision boundaries, however, may be difficult when the magnitude of the treatment effects is uncertain, which is usually the case. If the uncertainty about treatment effects can be modeled in terms of prior distributions, it is possible to determine an optimal decision rule. The optimality criterion comes from a so-called loss function, which has to be pre-specified and yields a penalization in case of a false decision. Then, the optimal decision rule is the one that minimizes the expected loss which sometimes is also called Bayes risk (see, e.g., [

18]). In order to determine whether a decision is correct or not, it is necessary to specify two relevance thresholds τ

0 and τ

1. If the actual treatment effect ∆

i exceeds

ti, it would be correct to further investigate population

Gi and, vice versa, if ∆

i ≤ τ

i, it would be correct to stop enrolling patients from

Gi for the second stage of the trial,

i = 0,1.

4.1. Derivation of Optimal Decision Thresholds

In order to properly model the Bayes risk, we first need to derive some distributional properties of our effect measures, the rate difference estimators from the first stage of the trial:

with expectations, variances and covariance (for derivation, see

Appendix A.1):

Now let

be the two-dimensional space of realizations of the bivariate estimator

. Let

denote a set of decision rules with

, such that:

In our case, the space of possible actions

contains the following four elements: (1, 1) denotes the co-primary case, (1, 0) leads us to drop the subgroup as a target population, (0, 1) means that solely patients from the subgroup are enrolled for the second stage of the trial and (0, 0) stands for stopping the trial for futility. We assume that continuation of the total population as a target population is desirable if the actual treatment effect exceeds the pre-specified relevance threshold τ0 and, accordingly, selection of the subgroup as target population is desired if the true effect in the subgroup exceeds τ1. Otherwise, discontinuation of enrollment from the respective population is desired.

We now have to quantify the sustained loss given a false decision has occurred in order to find a decision rule minimizing the expected loss. Hence, we have to define both a loss function and to model our knowledge about event rates in the respective sub- and treatment groups by prior distributions. A frequently employed loss function is the so-called quadratic loss function, which is, mainly due to its simplicity, the most popular loss function for decision theoretic approaches and which is commonly used for sequential trial designs (see, e.g., [

19]). In our case, it yields a loss which is the squared difference between the treatment effects ∆

i and the relevance thresholds

τi,

i = 0,1, in case of a false decision (for the mathematical definition of the loss function, see

Appendix A.2).

Throughout this article, π

T1, π

C1, π

T2, π

C2 are defined as the prior random variables for the respective event rates

pT1,

pC1,

pT2,

pC2 in the treatment groups

T,

C and subgroups

G1,

G2, which are not to be mixed up with the subgroup prevalence π. We assume that these priors follow independently distributed continuous uniform distributions with:

However, it is also possible to apply other meaningful prior distributions, such as a triangular or a (truncated) normal distribution.

The Bayes risk

r can now be modeled by incorporating the distributional properties of the employed priors and effect estimators

conditional on π

ij,

i =

T,

C,

j = 1, 2. The optimal decision rule

is the one which yields the minimal Bayes risk,

i.e.,

After calculating the derivative of the Bayes risk (for details, see

Appendix A.2), we used

Mathematica 9.0 [

20] for numerical integration and root solving to determine the optimal decision thresholds (program code is provided as

Supplementary Information). In order to obtain the results presented in this manuscript, the precision for numerical integration by local adaptive method was set to 8 digits, and 3 digits were chosen for the precision for the numerical root solving procedure. In case the root was found to be out of the bounds of reasonable decision thresholds [−1, 1], the sign of the root,

i.e., either −1 or 1, was chosen as optimal decision threshold.

4.2. Examples for Optimal Decision Thresholds

In this subsection we provide some optimal decision rules which were derived for some specific parameter situations. In the following, we consider three different prior situations:

- (1)

The biomarker is assumed to be predictive for treatment effect with prior knowledge about treatment effect modeled as:

- (2)

The biomarker is assumed to be predictive and prognostic with prior knowledge about treatment effect modeled as:

- (3)

There is no prior knowledge about the event rates at all,

i.e., the prior is non-informative:

Optimal decision thresholds were determined for relevance thresholds τ

0 = 0

:05 and τ

1 = 0

:1. We investigated sample sizes per group and stage starting from

n = 20 and increasing up to

n = 200 in steps of 20, for

n = 300 and

n = 400. The results are shown in

Tables 1–

3.

In

Tables 1 and

2, it can be observed that given subgroup prevalence π or sample size

n is small, the optimal decision threshold

tends to be relatively low thus favoring a selection of the subgroup at interim.

then gradually increases with increasing subgroup prevalence and sample size, and it approaches the relevance threshold τ

1 = 0.1. In case of a predictive prior, the optimal decision threshold

for the total population also approaches the respective relevance threshold

τ0. However, it can be observed in

Table 1 that it approaches this value from above in case of π = 0

:1 and π = 0

:25 and, in contrast, from below in case of π = 0

:5. This holds also true for the case of a predictive and prognostic prior, with the exception of the prevalence situation π = 0

:25, where the optimal decision threshold now approaches

t0 from below. This may be explained by the fact that in case of an increasing prevalence of the subgroup, it may be more desirable to select the total population, since with an increasing subgroup prevalence, the treatment effect ∆

0 gradually increases too.

Table 3 displays optimal decision thresholds in case of a non-informative prior. One can observe that as compared to the previously discussed cases of prior knowledge, the optimal decision thresholds stick relatively close to the relevance thresholds. All of these thresholds exceed the respective relevance thresholds, but not to a great extent. It can also be observed that the optimal thresholds approach the relevance thresholds with increasing sample size. Interestingly, for an increasing subgroup prevalence,

however will depart from

τ0, while on the other hand

will approach

τ1.

5. Simulation Study

In this section, we compare the performance of the previously derived optimal decision rules to the performance of an ad hoc decision rule in terms of statistical power within a simulation study. The decision thresholds are employed in the adaptive enrichment design presented in Section 3. A sample size per group and stage of n = 200 was chosen, and the one-sided significance level was set to a = 0:025. It is assumed that it is desirable to continue with the total population if the actual treatment effect ∆0 exceeds τ0 = 0:05, and selection of the subgroup is desired if ∆1 > τ1 = 0:1. We chose the following situation for the respective event rates: pT1 = 0:45; pC1 = 0.3, pT2 = 0.43, and pC2 = 0.4. Three situations were considered for the subgroup prevalence, namely π = 0.1, 0.25, 0.5. The treatment effect in the subgroup is ∆1 = 0.45 − 0.3 = 0.15, and thus, selection of the subgroup is desirable in all considered situations. If π = 0.1, the treatment effect in the total population is ∆0 = 0.1 (0.45 – 0.3) + 0.9 (0.43 − 0.4) = 0.042 < τ0 and, hence, it would be desirable to continue only with the subgroup here. For the situations π = 0.25 and π = 0.5, we have ∆0 = 0.06 and ∆0 = 0.09, respectively. In these latter two situations, the treatment effect in the total population exceeds the relevance threshold τ0 = 0.05. Hence, it would be desirable to investigate both target populations during the second stage of the trial.

In the following, we will consider four distinct decision rules:

- (a)

an ad hoc rule based on the relevance thresholds, i.e., (c0, c1) = (τ0, τ1) = (0.05, 0.1),

- (b)

an optimal decision rule based on a prior which assumes that the biomarker is predictive,

i.e.,

which yields the decision thresholds

for π = 0.1,

for π = 0.25,

for π = 0.5,

- (c)

an optimal decision rule based on a prior which assumes that the biomarker is predictive and prognostic,

i.e.,

which yields the decision thresholds

for π = 0.1,

for π = 0.25,

for π = 0.5,

- (d)

an optimal decision rule based on a noninformative prior,

i.e.,

which yields the decision thresholds

for π = 0.1,

for π = 0.25,

for π = 0.5.

We used Monte Carlo simulations to obtain our results (program code is provided as

Supplementary Information) and simulated 1,000,000 data sets per scenario (standard error equals 5·10

−4 for a rate of 0.5).

Figure 2 shows the respective probabilities to

reject the global null hypothesis H0,

reject the global null hypothesis

,

reject the global null hypothesis

,

reject either

,

, or both.

It can be observed in

Figure 2 that the

ad hoc decision rule (a) and the “noninformative” optimal rule (d) yield the same results. Obviously, the decision thresholds in (d) exceed the respective relevance thresholds only by a slight margin which apparently did not yield to a difference in statistical power. In general, decision rule (c) based on a prognostic and predictive prior assumption shows the best performance in terms of probability for a rejection of the global null hypothesis

H0. Decision rule (b) based on a predictive prior assumption only performs slightly worse. This pattern prevails for the probability of rejecting the local null hypothesis

as well as the rejection of either one or both of the two local null hypotheses. All four decision rules show a comparable performance concerning the rejection of the local null hypothesis for the total population,

. For the situation π = 0.5, however, it can be observed that, again, decision rules (b) and (c) slightly outperform the other rules. In all other cases the figure shows that the difference in power between the four rules slightly decreases with increasing subgroup prevalence.

The advantage of decision rules (b) and (c) over rules (a) and (d) may be explained by the fact that they favor the selection of the subgroup for all three prevalence scenarios due to their rather generous decision threshold c1. Furthermore, the decision thresholds for the total population c0 are also slightly more generous as compared to rules (a) and (d) for an increasing subgroup prevalence which, accordingly, yields to an advantage in power.

6. Application to a Clinical Trial Example

One of the most prominent examples of a targeted therapy is the monoclonal antibody trastuzumab. Treatment with trastuzumab as an add-on combined with chemotherapy has shown to be effective as a breast cancer treatment in patients which overexpress HER2, occurring in about 20% to 30% of all invasive breast cancer carcinoma (see, e.g., [

21]). Up to today, trastuzumab has only been approved as a treatment for HER2-positive patients. However, recent evidence has led medical researchers to believe that trastuzumab might also be effective for HER2-negative patients. Currently, there is an ongoing large randomized controlled trial, which investigates the efficacy of trastuzumab in HER2-negative patients (ClinicalTrials.gov Identifier. NCT01548677).

For illustrative purposes, let us assume that trastuzumab has not yet been demonstrated to be effective in neither the total population of breast cancer patients nor in the subgroup, and that a research team plans to investigate its efficacy in terms of 5 year event-free survival. An event is defined as disease recurrence, progression or death from any cause and an adaptive enrichment design is chosen. When planning the trial, the researchers used the results from the NOAH trial, a randomized controlled trial investigating the efficacy of trastuzumab combined with chemotherapy (

n = 117) versus chemotherapy alone (

n = 118) within HER2-positive patients [

22]. This trial, however, additionally featured a parallel HER2-negative cohort which received neoadjuvant chemotherapy alone (

n = 99). The 5-year event-free survival rates in this trial were 0.58 (95%-CI = [0.48–0.66]) in the experimental group and 0.43 (95%-CI = [0.43–0.52]) in the control group of HER2-positive patients. The survival rates within the parallel HER2-negative patients receiving chemotherapy only amounted to 0.61 (95%-CI = [0.50–0.70]).

Let us further assume that the researchers select the design proposed in this article with a sample size per group and stage of n = 400 and a one-sided significance level of α = 0.025 and that the prevalence of HER2-positive patients is π = 0.2. It is furthermore assumed that a selection of the total population would be desired if the treatment effect ∆0 exceeds τ0 = 0.08 and, respectively, it is desirable to select the subgroup as a target population if ∆1 > τ1 = 0.1.

With regard to the interim decision rule, let us consider the following four plausible scenarios.

- (a)

The trial team decides not to incorporate the knowledge from the NOAH trial but to choose the relevance thresholds τ0, τ1 as ad hoc decision thresholds, i.e., c0 = 0.08; c1 = 0.1.

- (b)

The trial team decides to incorporate the information from the NOAH trial. They choose uniformly distributed priors for the event rates and are basing them on the 95%-confidence intervals from the trial, i.e.,

,

and

. Since no data on HER2-negative patients treated with trastuzumab was available from the NOAH trial, they choose

. An optimal decision rule is then determined by incorporating the information obtained from the priors, which is

.

- (c)

As in scenario (b), the trial team decides to incorporate the prior knowledge from the NOAH trial. However, they are more optimistic in regard of the treatment effect in the HER2-negative population and choose

. In this scenario, the optimal decision rule is

.

- (d)

The trial team is unaware of the results of the NOAH trial and has no further information at hand. Hence, they decide to conduct the trial with an optimal decision threshold based on a non-informative uniform prior, i.e.,

, i = T;C, j = 1,2. The optimal decision rule is in this case

.

We investigated the impact of the choice of the applied decision rule on the power of our design by a simulation study. Here, we chose

pT1 = 0.6,

pC1 = 0.45 and

pC2 = 0.6. In order to investigate the sensitivity of the design with respect to parameter assumptions, we investigated two scenarios for the event rate of HER2-negative patients in the experimental group

pT2, namely 0.65 and 0.7. In case

pT2 = 0.65, the treatment effect in the total population would be ∆

0 = 0.2·0.15 + 0.8·0.05 = 0.07 and hence below the relevance threshold

τ0 making the selection of the total population unfavorable and thus less likely. For the scenario of a larger treatment benefit for HER2-negative patients,

i.e.,

pT2 = 0.7, ∆

0 = 0.2·0.15+0.8·0.1 = 0.11 exceeds

τ0 and thus a selection of the total population would be favorable here. For each scenario, 1,000,000 data sets were simulated (standard error for a rate of 0.5 equals 5·10

−4). The results are displayed in

Table 4.

First of all, we observe that decision rules (a) and (d) yielded the same results. This can be explained by the fact that, as in the simulation study in the previous section, the decision thresholds only slightly deviate from each other and this small difference did not have any influence on the interim decision. One can observe that decision rule (b) yields the largest overall power both in terms of rejecting the global null hypothesis H0 and rejecting either of the two local null hypothesis in both scenarios. In both scenarios, however, decision rule (c) achieves an only slightly worse performance as compared to decision rule (c). Both rules generally favor the selection of G1 at interim. This yields to a large advantage in power in scenario (A), where decision rule (c) performs slightly better than decision rule (b) when it comes to the detection of a treatment effect in G1. In scenario (A), the relatively high probability of 0.2361 for a futility stop may be a disadvantage of decision rules (a) and (d).

In scenario (B), decision rules (a) and (d) show the best performance when an effect in G0 is identified. However, these rules only slightly outperform decision rule (b). Here, decision rule (c) shows some minor disadvantage. since it yields a relatively high probability to select G1 only at interim, there is some lack in performance regarding the detection of the effect in G0. Again, decision rules (a) and (d) show the highest probability for a futility stop. However, the advantage is no longer that pronounced as in scenario (A).

Overall, we conclude that decision rule (b) is the one with the best overall performance in terms of power. Decision rule (c) comes close in case of scenario (A), but drops in performance in scenario (B). Decision rules (a) and (d) perform acceptably in scenario (B), but are outperformed by rules (b) and (c) in scenario (A).

7. Discussion

Adaptive enrichment designs, which include the option of selecting the most promising target population at interim, are a useful and powerful tool for the evaluation of targeted therapies. It can be assumed that alongside the rise of personalized medicine, adaptive enrichment designs will also experience an increasing importance in the near future. The decision which population to investigate during the second stage of such a design is a crucial one and, hence, it is important that the decision rule applied shows desirable properties in terms of successfully demonstrating a treatment effect.

In this article, we introduced an adaption of the design proposed by Jenkins

et al. [

7] in order to fit the setting of a binary outcome variable. For the situation of uncertainty about treatment effects, we derived and investigated optimal decision rules which take prior knowledge about event rates and trial characteristics, such as sample size and subgroup prevalence, into account. In our case, the derivation of these optimal decision rules was achieved by the use of standard computational software.

Within a simulation study and a clinical trial example, it was shown that the applied decision rule had substantial impact on the power of the trial. For the investigated parameter situations, optimal decision thresholds generally performed at least equally or better in terms of power as compared to a decision rule based on ad hoc assumptions.

In this article, we consider the specific setting of a single biomarker, one interim analysis and a binary outcome. In phase III trials in oncology, time-to-event variables are frequently used as primary endpoint. The presented optimal decision rules may then be applied if the interim decision is based on a short-term binary outcome, such as treatment response, and confirmatory analysis is performed for the time-to-event variable. In this case, even though the applied statistical tests are different, the decision framework and, accordingly, the applied decision rule, could adopt the approach developed in this work. Furthermore, the presented methodology can be easily transferred to a setting where the interim analysis occurs at an arbitrary time point. Consider the situation that not 4n but tn patients, with t > 2 being an arbitrary positive number, are enrolled during the trial with the interim analysis occurring after 2n patients. Then, solely the test statistics for the second stage have to be adapted and the weights for the combined test statistics have to be adjusted accordingly. The optimal decision thresholds for this situation would not change since they only depend on the data obtained from 2n patients during the first stage of the trial.

Throughout this article, we considered the situation of a bioassay which evaluates the biomarker with perfect sensitivity and specificity. However, in many cases this assumption may not hold true leading to patients being assigned to the wrong subgroup. It has been shown for a normally distributed outcome that the situation of imperfect classification leads to a potential downward bias in treatment effect estimates for the subgroup [

14]. This has an immediate harmful impact on both the probability of correct interim decisions [

14] and the power within an adaptive enrichment trial [

15]. This worsening in performance in case of an imperfect bioassay can also be expected for the case of a binary outcome. Hence, it remains of paramount importance to carefully choose a bioassay with a sufficient accuracy when conducting an adaptive enrichment trial.

In summary, our investigations strongly highlight the importance to thoroughly evaluate the impact of the applied decision rule when planning a clinical trial with an adaptive enrichment procedure and yields to the recommendation to consider optimal decision thresholds as a possibility to increase the probability of successful trials and drug development programs.