A Utility-Based Approach to Some Information Measures

Abstract

1 Introduction

- Entropy can be defined as essentially the only quantity that is consistent with a plausible set of information axioms (the approach taken by Shannon (1948) for a communication system; see also Csiszár and Körner (1997) for additional axiomatic approaches).

- The definition of entropy is related to the definition of entropy from thermodynamics (see, for example, Brillouin (1962) and Jaynes (1957)).

2 (U, O)-Entropy

[…] the generalized entropy ((U, O)-entropy) and the generalized relative entropy (relative (U, O)-entropy) from Friedman and Sandow (2003b).

2.1 Probabilistic Model and Horse Race

[…]¹ Let q(x) denote prob{X = x} under the probability measure q. We adopt the viewpoint of an investor who uses the model to place bets on a horse race, which we define as follows:²

[…], where an investor can place a bet that X = x, which pays the odds ratio for each dollar wagered if X = x, and 0 otherwise.
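The horse race just defined can be illustrated with a short numerical sketch. The function names, the example odds, and the idea of betting in proportion to 1/odds (which pays the same amount whichever horse wins) are assumptions made for this illustration; they are not notation taken from the text above.

```python
def payoff(bets, odds, winner):
    """Wealth after the race: the fraction bet on the winner times its odds ratio."""
    return bets[winner] * odds[winner]


def riskless_allocation(odds):
    """Bets proportional to 1/odds, normalized to sum to one.

    Such an allocation pays the same amount, 1 / sum(1/odds), in every state.
    """
    inverse = [1.0 / o for o in odds]
    total = sum(inverse)
    return [v / total for v in inverse], 1.0 / total


odds = [2.0, 4.0, 8.0]  # hypothetical odds ratios, one per horse
bets, constant_payoff = riskless_allocation(odds)
payoffs = [payoff(bets, odds, x) for x in range(len(odds))]
```

Every entry of `payoffs` equals `constant_payoff`, so this allocation behaves like a bank account inside the horse-race market.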

[…] denotes the winning horse.

[…] to each state x, where […]

2.2 Utility and Optimal Betting Weights

- (i) U is strictly concave,
- (ii) U is twice differentiable,
- (iii) U is strictly monotone increasing,
- (iv) U has the property (0, ∞) ⊆ range(U′), i.e., there exists a 'blow-up point', Wb, with $\lim_{W \to W_b^+} U'(W) = \infty$, and a 'saturation point', Ws, with $\lim_{W \to W_s^-} U'(W) = 0$, and
- (v) U is compatible with the market in the sense that Wb < B < Ws.

[…] $(U')^{-1}$ denotes the inverse of the function U′, and λ is the solution of the following equation:
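A minimal numerical sketch of this step follows. It assumes the standard first-order condition for maximizing expected utility subject to the bets summing to one, namely q(x)·O(x)·U′(b(x)·O(x)) = λ, so that b(x) = (U′)⁻¹(λ / (q(x)·O(x))) / O(x) and λ is fixed by the budget constraint. The function name `optimal_bets`, the example probabilities and odds, and the choice of bisection are illustrative assumptions.

```python
import math


def optimal_bets(q, odds, inv_marginal_utility):
    """Return (bets, lam) where lam makes the bets sum to one.

    bets[x] = inv_marginal_utility(lam / (q[x] * odds[x])) / odds[x].
    Assumes every q[x] > 0 and a strictly decreasing marginal utility.
    """
    def budget_gap(lam):
        return sum(inv_marginal_utility(lam / (qx * ox)) / ox
                   for qx, ox in zip(q, odds)) - 1.0

    # budget_gap is decreasing in lam, so first bracket its root.
    lo = hi = 1.0
    while budget_gap(lo) < 0.0:
        lo /= 10.0
    while budget_gap(hi) > 0.0:
        hi *= 10.0
    # Bisection on a logarithmic scale, since lam is positive.
    for _ in range(200):
        mid = math.sqrt(lo * hi)
        if budget_gap(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    lam = math.sqrt(lo * hi)
    bets = [inv_marginal_utility(lam / (qx * ox)) / ox for qx, ox in zip(q, odds)]
    return bets, lam


q = [0.5, 0.3, 0.2]       # hypothetical model probabilities
odds = [2.0, 4.0, 8.0]    # hypothetical odds ratios

# Logarithmic utility: U'(W) = 1/W, so the inverse of U' is y -> 1/y.
log_bets, log_lam = optimal_bets(q, odds, lambda y: 1.0 / y)

# Power utility with relative risk aversion kappa: U'(W) = W**(-kappa).
kappa = 2.0
power_bets, power_lam = optimal_bets(q, odds, lambda y: y ** (-1.0 / kappa))
```

For logarithmic utility the solver returns bets equal to q whatever the odds are, while the power-utility bets depend on the odds.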

2.3 Generalization of Entropy and Relative Entropy

[…] relative (U, O)-entropy from the probability measure p to the probability measure q is given by:

[…] relative (U, O)-entropy is the difference between

- (i) the expected (under the measure p) utility of the payoffs if we allocate optimally according to p, and
- (ii) the expected (under the measure p) utility of the payoffs if we allocate optimally according to the misspecified model, q.

[…] (U, O)-entropy of the probability measure p is given by:

[…] (U, O)-entropy is the difference between the expected utility of the payoffs for a clairvoyant who wins every race and the expected utility under the optimal allocation.

The relative (U, O)-entropy and the (U, O)-entropy have important properties, summarized in the following theorem.

The relative (U, O)-entropy and the (U, O)-entropy have the following properties:

- (i) the relative (U, O)-entropy is ≥ 0, with equality if and only if p = q,
- (ii) the relative (U, O)-entropy is a strictly convex function of p,
- (iii) the (U, O)-entropy is ≥ 0, and
- (iv) the (U, O)-entropy is a strictly concave function of p.
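The verbal definitions and the properties above can be checked numerically on a small example. The sketch below is an illustration under stated assumptions: it uses a power utility with relative risk aversion `kappa`, for which the optimal bets have the closed form b(x) ∝ (q(x)·O(x))^(1/κ) / O(x), and all function names, probabilities, and odds are hypothetical.

```python
def power_utility(w, kappa):
    """Power utility (w**(1-kappa) - 1) / (1 - kappa), for kappa > 0, kappa != 1."""
    return (w ** (1.0 - kappa) - 1.0) / (1.0 - kappa)


def optimal_bets(belief, odds, kappa):
    """Expected-utility-optimal bets for power utility under a given belief."""
    raw = [(bx * ox) ** (1.0 / kappa) / ox for bx, ox in zip(belief, odds)]
    total = sum(raw)
    return [r / total for r in raw]


def expected_utility(p, bets, odds, kappa):
    return sum(px * power_utility(bx * ox, kappa) for px, bx, ox in zip(p, bets, odds))


def relative_entropy(p, q, odds, kappa):
    """Expected utility under p of betting optimally for p, minus that of betting optimally for q."""
    return (expected_utility(p, optimal_bets(p, odds, kappa), odds, kappa)
            - expected_utility(p, optimal_bets(q, odds, kappa), odds, kappa))


def entropy(p, odds, kappa):
    """Clairvoyant's expected utility (payoff odds[x] in state x) minus the optimal expected utility."""
    clairvoyant = sum(px * power_utility(ox, kappa) for px, ox in zip(p, odds))
    return clairvoyant - expected_utility(p, optimal_bets(p, odds, kappa), odds, kappa)


kappa = 2.0
odds = [2.0, 4.0, 8.0]
p1 = [0.5, 0.3, 0.2]
p2 = [0.1, 0.6, 0.3]
q = [0.2, 0.3, 0.5]
mid = [(a + b) / 2.0 for a, b in zip(p1, p2)]

d_p1_q = relative_entropy(p1, q, odds, kappa)     # positive, since p1 != q
d_p1_p1 = relative_entropy(p1, p1, odds, kappa)   # zero
h_p1 = entropy(p1, odds, kappa)                   # nonnegative

# Midpoint checks of convexity (relative entropy) and concavity (entropy) in p.
convex_ok = relative_entropy(mid, q, odds, kappa) <= (
    d_p1_q + relative_entropy(p2, q, odds, kappa)) / 2.0
concave_ok = entropy(mid, odds, kappa) >= (h_p1 + entropy(p2, odds, kappa)) / 2.0
```

A midpoint check on one pair of measures illustrates convexity and concavity; it does not prove them.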

[…] and substituting from (13), one can obtain the following decomposition for the expected utility, under the measure p, for an investor who allocates under the (misspecified) measure q:

2.4 Connection with Kullback-Leibler Relative Entropy

The relative (U, O)-entropy essentially reduces to Kullback-Leibler relative entropy for a logarithmic family of utilities:

The relative (U, O)-entropy is independent of the odds ratios, for any candidate model p and prior measure q, if and only if the utility function, U, is a member of the logarithmic family […]

The relative (U, O)-entropy reduces, up to a multiplicative constant, to Kullback-Leibler relative entropy if and only if the utility is a member of the logarithmic family (16).
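The "if" direction of this statement is easy to see numerically for U = log, where the optimal bets equal the believed probabilities. The sketch below compares that case with a power utility, which lies outside the logarithmic family; the function names, measures, and odds are hypothetical choices for illustration.

```python
import math


def kl_divergence(p, q):
    return sum(px * math.log(px / qx) for px, qx in zip(p, q))


def log_relative_entropy(p, q, odds):
    """Utility-based relative entropy for U = log, where the optimal bets equal the belief."""
    return sum(px * (math.log(px * ox) - math.log(qx * ox))
               for px, qx, ox in zip(p, q, odds))


def power_relative_entropy(p, q, odds, kappa):
    """Same quantity for power utility with relative risk aversion kappa != 1."""
    def utility(w):
        return (w ** (1.0 - kappa) - 1.0) / (1.0 - kappa)

    def bets(belief):
        raw = [(bx * ox) ** (1.0 / kappa) / ox for bx, ox in zip(belief, odds)]
        total = sum(raw)
        return [r / total for r in raw]

    def expected_utility(b):
        return sum(px * utility(bx * ox) for px, bx, ox in zip(p, b, odds))

    return expected_utility(bets(p)) - expected_utility(bets(q))


p = [0.5, 0.3, 0.2]
q = [0.2, 0.3, 0.5]
odds_a = [2.0, 4.0, 8.0]
odds_b = [3.0, 3.0, 3.0]

kl = kl_divergence(p, q)
log_a = log_relative_entropy(p, q, odds_a)
log_b = log_relative_entropy(p, q, odds_b)
pow_a = power_relative_entropy(p, q, odds_a, kappa=2.0)
pow_b = power_relative_entropy(p, q, odds_b, kappa=2.0)
```

Here `log_a` and `log_b` both agree with `kl`, while `pow_a` and `pow_b` differ from each other, showing the dependence on the odds once the utility leaves the logarithmic family.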

3 U-Entropy

The relative (U, O)-entropy represents the expected gain in utility from allocating under the true measure p, rather than allocating according to the misspecified measure q under market odds […] for an investor with utility U. One of the main goals of this paper is to explore what happens when we set q(x) equal to the homogeneous expected return measure,

[…] can be interpreted as the excess performance from allocating according to the real-world measure p over the (risk-neutral) measure q derived from the odds ratios.⁶

[…] which we denote by […], we obtain

3.1 Definitions

[…] U-entropy and relative U-entropy inherit all of the properties stated in Section 2. It is easy to show that for logarithmic utilities they reduce to entropy and Kullback-Leibler relative entropy, respectively, so these quantities generalize entropy and Kullback-Leibler relative entropy. Next, we generalize the above definitions to conditional probability measures. To keep our notation simple, we use p(x) and p(y) to represent the probability distributions for the random variables X and Y, and we use both $H_U(p)$ and $H_U(X)$ to represent the U-entropy for the random variable X, which has probability measure p.

[…]-entropy and conditional relative […]-entropy as in Friedman and Sandow (2003b). The latter quantities can be interpreted in the context of a conditional horse race.

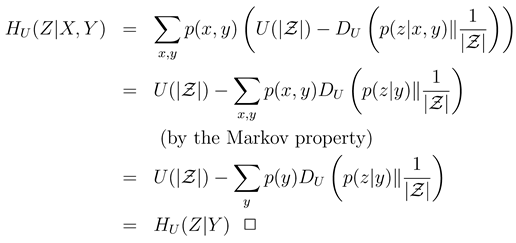

3.2 Properties of U-Entropy and Relative U-Entropy

- (i) the relative U-entropy is ≥ 0, with equality if and only if p = q,
- (ii) the relative U-entropy is a strictly convex function of p,
- (iii) the U-entropy is ≥ 0, and
- (iv) the U-entropy is a strictly concave function of p.

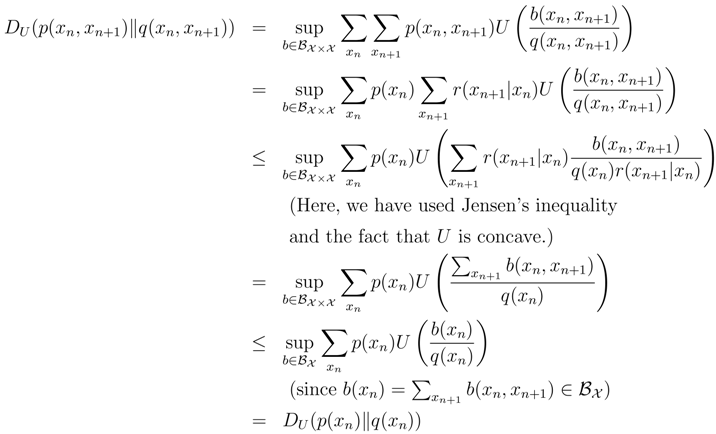

- (i) […], and
- (ii) […]

[…] be any onto function and […] be the induced probabilities on […], i.e.,

Then […] and […]
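The effect of an onto map on the induced probabilities can be explored numerically. The sketch below rests on several assumptions that are not taken from the surviving text: relative U-entropy is computed as the utility-based relative entropy with odds 1/q(x), the utility is a power utility with relative risk aversion `kappa`, and the property checked is the usual data-processing direction (merging states does not increase the relative U-entropy). All names and numbers are hypothetical.

```python
def power_utility(w, kappa):
    """Power utility (w**(1-kappa) - 1) / (1 - kappa), for kappa > 0, kappa != 1."""
    return (w ** (1.0 - kappa) - 1.0) / (1.0 - kappa)


def relative_u_entropy(p, q, kappa):
    """Gain in expected utility (under p) from betting optimally for p at odds 1/q(x).

    Betting according to q at these odds pays 1 in every state, which gives
    the baseline utility power_utility(1, kappa). Assumes p[x], q[x] > 0.
    """
    raw = [qx * (px / qx) ** (1.0 / kappa) for px, qx in zip(p, q)]
    total = sum(raw)
    payoffs = [r / (total * qx) for r, qx in zip(raw, q)]  # bet times odds
    best = sum(px * power_utility(w, kappa) for px, w in zip(p, payoffs))
    return best - power_utility(1.0, kappa)


def induced(p, f, n_out):
    """Probabilities induced by the map x -> f[x]: sum p over each preimage."""
    out = [0.0] * n_out
    for x, px in enumerate(p):
        out[f[x]] += px
    return out


p = [0.4, 0.1, 0.3, 0.2]
q = [0.1, 0.4, 0.2, 0.3]
f = [0, 1, 1, 2]  # an onto map from four states to three

fine = relative_u_entropy(p, q, kappa=2.0)
coarse = relative_u_entropy(induced(p, f, 3), induced(q, f, 3), kappa=2.0)
```

In this example `coarse` is smaller than `fine`: an investor who can only bet on the merged states gains less from knowing p.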

Let […] and […] be the probability distributions (at time n) of two different Markov chains arising from the same transition mechanism but from different starting distributions. Then […] decreases with n.
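This monotonicity can be observed on a small chain. The sketch below is an illustration under assumptions: the decreasing quantity is taken to be a relative U-entropy between the two time-n distributions, computed for a power utility as the utility-based relative entropy with odds 1/q(x); the transition matrix, starting distributions, and function names are hypothetical.

```python
def power_utility(w, kappa):
    """Power utility (w**(1-kappa) - 1) / (1 - kappa), for kappa > 0, kappa != 1."""
    return (w ** (1.0 - kappa) - 1.0) / (1.0 - kappa)


def relative_u_entropy(p, q, kappa):
    """Gain in expected utility (under p) from betting optimally for p at odds 1/q(x)."""
    raw = [qx * (px / qx) ** (1.0 / kappa) for px, qx in zip(p, q)]
    total = sum(raw)
    payoffs = [r / (total * qx) for r, qx in zip(raw, q)]
    best = sum(px * power_utility(w, kappa) for px, w in zip(p, payoffs))
    return best - power_utility(1.0, kappa)


def step(mu, transition):
    """One step of the chain: new_mu[j] = sum_i mu[i] * transition[i][j]."""
    n = len(transition)
    return [sum(mu[i] * transition[i][j] for i in range(n)) for j in range(n)]


transition = [[0.9, 0.1, 0.0],
              [0.2, 0.7, 0.1],
              [0.1, 0.3, 0.6]]
mu = [0.8, 0.1, 0.1]   # first starting distribution
nu = [0.1, 0.2, 0.7]   # second starting distribution

values = []
for _ in range(10):
    values.append(relative_u_entropy(mu, nu, kappa=2.0))
    mu, nu = step(mu, transition), step(nu, transition)
```

The list `values` is non-increasing: as both chains mix, an investor who knows one distribution gains less and less over one who bets according to the other.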

☐

[…] between a distribution µn on the states at time n and a stationary distribution µ decreases with n.

[…] for any n. ☐

[…] is a stationary distribution, then entropy […] increases with n.

- (i) […], and
- (ii) […]. ☐

3.3 Power Utility

[…], we have

3.3.1 U-Entropy for Large Relative Risk Aversion

[…] to Kullback-Leibler relative entropy.

3.3.2 Relation with Tsallis Entropy

4 Application: Probability Estimation via Relative U-Entropy Minimization

4.1 MRE and Dual Formulation

[…] to […]), p0 is a probability measure, […] represents the empirical measure, and Q is the set of all probability measures.

[…] denotes the empirical probability that X = x.
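A toy version of the MRE problem and its dual can be sketched as follows. The setup is an assumption made for illustration: a single feature f with the constraint that its expectation under p equals its expectation under the empirical measure, so that the minimizer of Kullback-Leibler relative entropy to p0 is an exponential tilt of p0 and the dual is a one-parameter maximum-likelihood problem. The function names, the feature, and the measures are hypothetical.

```python
import math


def exponential_tilt(p0, f, beta):
    """Measure proportional to p0(x) * exp(beta * f(x))."""
    weights = [px * math.exp(beta * fx) for px, fx in zip(p0, f)]
    z = sum(weights)
    return [w / z for w in weights]


def mean(p, f):
    return sum(px * fx for px, fx in zip(p, f))


def kl_divergence(p, q):
    return sum(px * math.log(px / qx) for px, qx in zip(p, q) if px > 0.0)


def log_likelihood(p_emp, p0, f, beta):
    """Dual objective: expected log-probability of the tilted model under the empirical measure."""
    model = exponential_tilt(p0, f, beta)
    return sum(pe * math.log(m) for pe, m in zip(p_emp, model))


def solve_mre(p0, f, target, lo=-50.0, hi=50.0):
    """Bisection on beta: the tilted feature mean is increasing in beta."""
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if mean(exponential_tilt(p0, f, mid), f) < target:
            lo = mid
        else:
            hi = mid
    beta = 0.5 * (lo + hi)
    return exponential_tilt(p0, f, beta), beta


p0 = [0.25, 0.25, 0.25, 0.25]   # prior measure
p_emp = [0.1, 0.2, 0.3, 0.4]    # empirical measure
f = [0.0, 1.0, 2.0, 3.0]        # single hypothetical feature
target = mean(p_emp, f)

p_star, beta_star = solve_mre(p0, f, target)
```

The resulting `p_star` matches the empirical feature mean, is at least as close to `p0` in relative entropy as the empirical measure itself, and `beta_star` maximizes the dual log-likelihood.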

4.2 Robustness of MRE when the Prior Measure is the Odds Ratio Pricing Measure

[…], then

[…] with […] in (84) does not change the finiteness or the convexity properties of the function under the infsup or the supinf. Since the proof of Known Result 3 relies only on those properties of this function, we have

4.3 General Risk Preferences and Robust Relative Performance

[…] entropy problem. The solution to this generalized problem is robust in the following sense:

[…] with respect to p ∈ K is equivalent to searching for the measure p* ∈ Q that maximizes the worst-case (with respect to the potential true measures, p′ ∈ K) relative model performance (over the benchmark model, p0), in the sense of expected utility. The optimal model, p*, is robust in the sense that for any other model, p, the worst (over potential true measures p′ ∈ K) relative performance is even worse than the worst-case relative performance under p*. We do not know the true measure; an investor who makes allocation decisions based on p* is prepared for the worst that "nature" can offer.

4.4 Robust Absolute Performance and MRUE

[…] to […]), p0 represents the prior measure, […] represents the empirical measure, and Q is the set of all probability measures.

[…] relative (U, O)-entropy to relative U-entropy by setting the "prior" measure to the risk-neutral pricing measure generated by the odds ratios, […]. We obtain

5 Conclusions

[…] and […] presented in Friedman and Sandow (2003a).

- I. General Risk Preferences (not expressible by (15)), real or assumed odds ratios available
  - (i) MRUE, Problem 3: If p0 is the pricing measure generated by the odds ratios, we get robust absolute performance (in the market described by the odds ratios) in the sense of Corollary 10.
  - (ii) Minimum Relative (U, O)-entropy Problem from Friedman and Sandow (2003a): If p0 represents a benchmark model (possibly prior beliefs), we get robust relative outperformance (relative to the benchmark model, in the market described by the odds ratios) in the sense of Known Result 4.
- II. Special Case: Logarithmic Family Risk Preferences (15), odds ratios need not be available
  - MRE, Problem 1: If p0 represents a benchmark model (possibly prior beliefs), we get robust relative outperformance with respect to the benchmark model, under any odds ratios.

6 Appendix

[…] relative (U, O)-entropy to relative U-entropy, substituting […]

References

- Boyd, S.; Vandenberghe, L. Convex Optimization; Cambridge University Press: Cambridge, 2004.
- Brillouin, L. Science and Information Theory; Academic Press: New York, 1962.
- Cover, T.; Thomas, J. Elements of Information Theory; Wiley: New York, 1991.
- Cowell, F. Additivity and the entropy concept: An axiomatic approach to inequality measurement. Journal of Economic Theory 1981, 25, 131–143.
- Cressie, N.; Read, T. Multinomial goodness of fit tests. Journal of the Royal Statistical Society B 1984, 46(3), 440–464.
- Cressie, N.; Read, T. Pearson's X² and the loglikelihood ratio statistic G²: a comparative review. International Statistical Review 1989, 57(1), 19–43.
- Csiszár, I.; Körner, J. Information Theory: Coding Theorems for Discrete Memoryless Systems; Academic Press: New York, 1997.
- Duffie, D. Dynamic Asset Pricing Theory; Princeton University Press: Princeton, 1996.
- Friedman, C.; Sandow, S. Learning probabilistic models: An expected utility maximization approach. Journal of Machine Learning Research 2003a, 4, 291.
- Friedman, C.; Sandow, S. Model performance measures for expected utility maximizing investors. International Journal of Theoretical and Applied Finance 2003b, 6(4), 355.
- Friedman, C.; Sandow, S. Model performance measures for leveraged investors. International Journal of Theoretical and Applied Finance 2004, 7(5), 541.
- Golan, A.; Judge, G.; Miller, D. Maximum Entropy Econometrics; Wiley: New York, 1996.
- Group of Statistical Physics. Nonextensive statistical mechanics and thermodynamics: Bibliography. Working Paper. http://tsallis.cat.cbpf.br/TEMUCO.pdf, 2006.
- Grünwald, P.; Dawid, A. Game theory, maximum generalized entropy, minimum discrepancy, robust Bayes and Pythagoras. Proceedings ITW 2002.
- Grünwald, P.; Dawid, A. Game theory, maximum generalized entropy, minimum discrepancy, and robust Bayesian decision theory. Annals of Statistics 2004, 32(4), 1367–1433.
- Ingersoll, J. Theory of Financial Decision Making; Rowman and Littlefield: New York, 1987.
- Jaynes, E. T. Information theory and statistical mechanics. Physical Review 1957, 106, 620.
- Kitamura, Y.; Stutzer, M. An information-theoretic alternative to generalized method of moments. Econometrica 1997, 65(4), 861–874.
- Lebanon, G.; Lafferty, J. Boosting and maximum likelihood for exponential models. Technical Report CMU-CS-01-144, School of Computer Science, Carnegie Mellon University, 2001.
- Liese, F.; Vajda, I. Convex Statistical Distances; Teubner: Leipzig, 1987.
- Luenberger, D. Investment Science; Oxford University Press: New York, 1998.
- Morningstar. The new Morningstar Rating™ methodology. 2002. http://www.morningstar.dk/downloads/MRARdefined.pdf.
- Österreicher, F.; Vajda, I. Statistical information and discrimination. IEEE Transactions on Information Theory 1993, 39(3), 1036–1039.
- Plastino, A.; Plastino, A. R. Tsallis entropy and Jaynes' information theory formalism. Brazilian Journal of Physics 1999, 29(1), 50.
- Shannon, C. E. A mathematical theory of communication. Bell System Technical Journal 1948, 27, 379–423 and 623–656.
- Słomczyński, W.; Zastawniak, T. Utility maximizing entropy and the second law of thermodynamics. Annals of Probability 2004, 32(3A), 2261.
- Stummer, W. On a statistical information measure of diffusion processes. Statistics and Decisions 1999, 17, 359–376.
- Stummer, W. On a statistical information measure for a Samuelson-Black-Scholes model. Statistics and Decisions 2001, 19, 289–313.
- Stummer, W. Exponentials, Diffusions, Finance, Entropy and Information; Shaker, 2004.
- Stutzer, M. A Bayesian approach to diagnosis of asset pricing models. Journal of Econometrics 1995, 68, 367–397.
- Stutzer, M. Portfolio choice with endogenous utility: A large deviations approach. Journal of Econometrics 2003, 116, 365–386.
- Topsøe, F. Information theoretical optimization techniques. Kybernetika 1979, 15(1), 8.
- Tsallis, C. Possible generalization of Boltzmann-Gibbs statistics. Journal of Statistical Physics 1988, 52, 479.
- Tsallis, C.; Brigatti, E. Nonextensive statistical mechanics: A brief introduction. Continuum Mechanics and Thermodynamics 2004, 16, 223–235.

- 1. We have chosen this setting for the sake of simplicity. One can generalize the ideas in this paper to continuous random variables and to conditional probability models; for details, see Friedman and Sandow (2003b).
- 2. See, for example, Cover and Thomas (1991), Chapter 6.
- 3. See, for example, Cover and Thomas (1991).
- 4. Such an investor maximizes his expected utility with respect to the probability measure that he believes (see, for example, Luenberger (1998)).
- 5. See, for example, Duffie (1996). We note that the risk-neutral pricing measure generated by the odds ratios need not coincide with any "real world" measure.
- 6. Stutzer (1995) provides a similar interpretation in a slightly different setting.
- 7. It is straightforward to develop the material below under more general assumptions.
- 8. We note that this definition of U-entropy is quite similar to, but not the same as, the definition of u-entropy in Słomczyński and Zastawniak (2004).
- 9. It is possible to consider more general settings, such as incomplete markets, but a number of results depend in a fundamental way on the horse race setting.
- 10. MRE problems can be stated for conditional probability estimation and with regularization. We keep the context and notation as simple as possible by confining our discussion to unconditional estimation without regularization. Extensions are straightforward.
- 11. See, for example, Lebanon and Lafferty (2001).
- 12. See, for example, Cover and Thomas (1991), Theorem 6.1.2.
- 13. For ease of exposition, we have proved this result for U(·) = log(·). The same result holds for utilities in the generalized logarithmic family (15).
- 14. As noted above, we keep the context and notation as simple as possible by confining our discussion to unconditional estimation without regularization. Extensions are straightforward.
- 15. A version that is more easily implemented is given in the Appendix.

©2007 by MDPI (http://www.mdpi.org). Reproduction for noncommercial purposes permitted.


Friedman, C.; Huang, J.; Sandow, S. A Utility-Based Approach to Some Information Measures. Entropy 2007, 9, 1-26. https://doi.org/10.3390/e9010001
