1. Introduction

Intelligent data recommendation has been one of the most fundamental techniques in wireless mobile Internet, and becomes more and more crucial in the era of big data. Note that, with the explosive growth of data, it is difficult to send all the data to the users within a tolerable time with traditional data processing technology [

1,

2]. By sending data in advance when the system is idle other than wasting time to wait for a clear request from users, the delay for users to acquire their desired data could be reduced significantly [

3]. It is also arduous for users to find desired data among the mass of data available in the Internet [

4]. In general, users can access their interested data faster and easier with the help of data recommendation systems, since they are usually well-designed based on the preferences of users [

5,

6]. Compared to search engines, recommendation systems are more convenient since they are less action/skill/knowledge demanding [

4]. For example, many applications in mobile phones prefer to recommend some data based on the user’s interests to improve user experience. In addition, the Internet enterprises can make a profit through mobile networks by using push-based advertisement technology [

7].

Most previous works mainly discussed data push based on the content to solve the problem of data delivery [

3,

4,

5,

6,

7,

8,

9,

10]. Data push is usually regarded as a strategy of data delivery in distributed information systems [

3]. The architecture for a mobile push system was proposed in [

4,

8]. In addition, Ref. [

9] put forward an effective wireless push system for high-speed data broadcasting. The push and pull techniques for time-varying data network was discussed in [

10]. Furthermore, on this basis, various content-based recommendation systems were provided as information-filtering systems which push data to users based on the knowledge about their preferences [

11,

12]. Ref. [

11] discussed the joint content recommendation, and the privacy-enhancing technology in recommendation systems was investigated in [

12]. In addition, Ref. [

13] put forward a personalized social image recommendation method. Furthermore, the recommendation technology was also used to solve the problem in multimedia big data [

14]. Recently, user customization becomes crucial. The personalized concern of users usually can be characterized by many data properties, such as data format and keywords [

4,

15,

16]. Instead of discussing data delivery based on content, as an alternative, we shall discuss the distribution of recommendation sequence based on users’ preference when the revenue of the recommendation system is required. In particular, we choose the frequency of data used to describe the preference of users since it has nothing to do with the concrete content.

Furthermore, we take the revenue of the recommendation system into account. That is, a recommendation process consumes resources while getting benefits. Different recommendations may bring different results. For example, the cost of omitting data that should be pushed may be much smaller than that of pushing invalid data to a user erroneously. On one hand, the users would rate the recommendation system higher if the desired data is correctly pushed. On the other hand, pushing the information that users do not ask, such as advertisements, may seriously degrade the user experience. Nevertheless, pushing advertisements does bring higher revenue. To balance the loss of different types of push errors, we shall weigh different types of push errors differently, which is similar to cost-sensitive learning [

17,

18,

19,

20].

For different application scenarios, the system focuses on different events according to the need. For example, the small-probability events captures our attention in the minority subsets detection [

21,

22], while someone prefers the event with high probability in support vector machine (SVM) [

23]. For applications in the wireless mobile Internet, in the stage of user expansion, recommending the desired data accurately to attract more users is more important. However, in the mature stage of applications, more advertisements would be pushed to earn more, although it may degrade the user experience at some extent. This paper will mainly discuss both of these two cases.

We also present some new findings by employing the message importance [

24,

25,

26,

27,

28], which characterizes the concern degree of events. Message importance measure (MIM) was proposed to characterize the message importance of events which can be described by discrete random variables where people pay most attention to the small-probability ones, and it highlights the importance of minority subsets [

29]. In fact, it is an extension of Shannon entropy [

30,

31] and Rényi entropy [

32] from the perspective of Fadeev’s postulates [

32,

33]. That is, the first three postulates are satisfied for all of them, and MIM weakens the fourth postulates on the foundation of Rényi entropy. Moreover, the logarithmic form and polynomial form are adopted in Shannon entropy and Rényi entropy, respectively, while MIM uses the exponential form. Ref. [

34] showed that MIM focuses on a specific event by choosing a corresponding importance coefficient. In fact, MIM has a wide range of applications in big data, such as compressed storage and communication [

35] and mobile edge computing [

36].

Note that a superior recommendation sequence should resemble that generated by the user himself, which means that the data recommendation mechanism agrees with user behavior. To this end, the probability distribution of the recommendation sequence and the utilization frequency of the user data should be as close as possible in the statistical sense. According to [

37], this means that the relative entropy between the distribution of recommendation sequence and that of user data should be minimized. We assume that the recommendation model pursues best user performance with a certain revenue guarantee in this paper.

In this paper, we first find a particular recommendation distribution on the best effort in maximizing the probability of observing the recommendation sequence with the utilization frequency of the user data when the expected revenue is provided. Then, its main properties, such as monotonicity and geometrical characteristic, are fully discussed. This optimal recommendation system can be regarded as an information-filtering system, and the importance coefficient determines what events the system prefers to recommend. The results also show that excessively low expectation of revenue can not constrain recommendation distribution and exorbitant expectation of revenue makes the recommendation system impossible to design. The constraints on the recommendation distribution are true if the minimum average revenue is neither too small nor too large, and there is a trade-off between the recommendation accuracy and the expected revenue.

It is also noted that the form of this optimal recommendation distribution is the same as that of MIM when the minimum average revenue is neither too small nor too large. The optimal recommendation distribution is determined by the proportion of recommendation value of the corresponding event in total recommendation value, where the recommendation value is a special weight factor. The recommendation value can be seen as a measure of message importance, since it satisfies the postulates of the message importance. Due to the same form with MIM, the optimal recommendation probability can be given by the normalized message importance measure when MIM is used to characterize the concern of system. Furthermore, when importance coefficient is positive, the small-probability events will be given more attention, which is magnifying the importance index of small-probability events and lessening that of high-probability events. Therefore, we confirm the rationality of MIM from another perspective in this paper for characterizing the physical meaning of MIM by using data recommendation system rather than based on information theory. In addition, we expand MIM to the general case whatever the probability of systems interested events is. Since the importance coefficient determines what events set systems are interested in, we can switch to different application scenarios by means of it. That is, advertising systems are discussed if the importance coefficient is positive, while noncommercial systems are adopted if importance coefficient is negative. Compared with previous works about MIM [

29,

34,

35], most properties of optimal recommendation distribution is the same, but a clear definition of the desired event set can be given in this paper. The relationship between utility distribution and MIM was preliminarily discussed in [

38].

The main contributions of this paper can be summarized as follows. (1) We put forward an optimal recommendation distribution that makes the recommendation mechanism agree with user behavior with a certain revenue guarantee, which can improve the design of recommendation strategy. (2) We illuminate that this optimal recommendation distribution is normalized message importance, when we use MIM to characterize the concern of systems, which presents a new physical explanation of MIM from data recommendation perspective. (3) We expand MIM to the general case, and we also discuss the importance coefficient selection as well as its relationship with what events systems focus on.

The rest of this paper is organized as follows. The setup of optimal recommendation is introduced in

Section 2, including the system model and the discussion of constraints. In

Section 3, we solve the problem of optimal recommendation in our system model, and give complete solutions.

Section 4 investigates the properties of this optimal recommendation distribution. The geometric interpretation is also discussed in this part. Then, we discuss the relationship between this optimal recommendation distribution and MIM in

Section 5. It is noted that recommendation distribution can be seen as normalized message importance in this case. The numerical results is shown and discussed to certificate our theoretical results in

Section 6.

Section 7 concludes the paper. In addition, the main notations in this paper are listed in

Table 1.

2. System Model

We consider a recommendation system with

N classes of data, as shown in

Figure 1. In fact, data is often stored based on its categories for the convenience of indexing. For example, the news website usually classifies news into the following categories: politics, entertainment, business, scientific, sports, and so on. At each time instant, the information source generates a piece of data, which belongs to a certain class with probability distribution

Q. In general, the generated data sequence does not match the preference of the user. To optimize the information transmission process, therefore, a recommendation unit is used to determine whether the generated data should be pushed to the user with some deliberately designed probability distribution

P. One the one hand, the recommendation unit can make predictions of the user’s needs and push some data to the user before he actually starts the retrieval process. In doing so, the transmission delay can be largely reduced, especially when the data amount is large. On the other hand, the recommending unit enables non-expert users to search and to access their desired data much easier. Furthermore, we can profit more by pushing some advertisements to the user.

2.1. Data Model

We refer to the empirical probability mass function of the class indexes over the whole data set as the raw distribution and denote it as . We refer to the probability mass function of users’ preferences over the classes as the utility distribution and denote it as . That is, for each piece of data, it belongs to class i with probability and would be preferred by the user with probability . To fit the preference of the user under some target revenue constraint, the system will make random recommendations according to a recommendation distribution .

We assume that each piece of data belongs to one and only one of the N sets. That is, for , where is the set of data belonging to the i-th class. Thus, the whole data set would be . The raw distribution can be expressed as . In addition, the utility distribution U can be obtained by studying the data-using behavior of a specific group of users and thus, in this paper, is assumed to be known prior.

For traditional data push, we usually expect to make

smaller than a given value [

10]. Different from them, we do not consider this problem based on content. As an alternative, our goal is to find the optimal recommendation distribution

P so that the recommended data would fit the preference of the user as much as possible. To be specific, each recommended sequence of data should resemble the desired data sequence of the user in the statistical sense. For a sequence of user’s favorite data, let

be the corresponding class indexes. As

n goes to infinity, it is clear that

with probability one, where

is the typical set under distribution

U. That is,

, where

is the occurring probability of

and

is the joint entropy of

U [

37]. Since the class-index sequence

of recommended data is actually generated with distribution

P, the probability that

falls in the typical set

of distribution

U would be

, where

is the relative entropy between

P and

U [

37]. It is clear that the optimal

P would maximizes probability

, which is equivalent to minimizing the relative entropy

In particular, our desired recommendation distribution P is not exactly the same as the utility distribution of the user because we would also like to intentionally push some advertisements to the users to increase our profit.

2.2. Revenue Model

We assume that the user divides the whole data set into two parts, i.e., the desired ones and the unwanted ones (e.g., advertisements). At the recommendation unit, the data can also be classified into two types according to whether it is recommended to the user. Different push types may strikingly lead to different results. For example, the cost of omitting data that should be pushed may be much smaller than that of erroneously pushing invalid data to a user. The user experience will be enhanced if the data needed by users is correctly pushed. Pushing some not needed content to users, such as some advertisements, may seriously degrade the user experience, but it still can bring in advertising revenue for the content delivery enterprise. Using a similar revenue model as that in cost-sensitive learning [

17,

18,

19,

20], we shall evaluate the revenue of the recommendation system as follows:

The cost of making a recommendation is ;

The revenue of a recommendation when the pushed data is liked by the user is ;

The cost of a recommendation when the pushed data is not liked is ;

The revenue of a recommendation when the pushed data is not liked (but can serve as an advertisement) is ;

The cost of missing to recommend a piece of desired data of the user is ;

Therefore, the revenue of recommending a piece of data belonging to class

i can be summarized in

Table 2.

Moreover, the corresponding matrix of occurring probability is given by

Table 3.

In this paper, we assume

are constraints for a given recommendation system for simplicity. The expected system revenue can then be expressed as

2.3. Problem Formulation

In this paper, we consider the following three kinds of recommendation systems: (1) the

adverting system where recommending unwanted advertisements yields higher revenue; (2) the

noncommercial system where recommending user’s desired data brings higher revenue; (3) the

neutral system where the system revenue is independent from recommendation probability

P. For each given target revenue constraint

and each kind of system, we shall optimize the recommendation distribution

P by minimizing

. In particular, the following auxiliary variable is used:

2.3.1. Advertising Systems

In an

advertising system, recommending a piece of user’s unwanted data (an advertisement) yields higher revenue. Since the revenue of recommending an advertisement is the main source of income in this case, which is larger than other revenue and cost, the advertising system would satisfy the following condition:

By combining Labels (

4)–(

6), it is clear that the constraint

is equivalent to

For advertising systems, therefore, the feasible set of recommendation distribution

P can be expressed as

For a given target revenue

, we shall solve the optimal recommendation distribution

P of advertising systems from

2.3.2. Noncommercial Systems

A

noncommercial system is defined as a recommendation system where the revenue

of recommending a piece of desired data is larger than the sum of revenue

of recommending an advertisement and the cost of not recommending a piece of desired data

. That is,

Accordingly, the constraint

is equivalent to

Therefore, the feasible set of recommendation distribution

P for noncommercial systems can be expressed as

Afterwards, we can solve the optimal recommendation distribution

P through the following optimization problem:

2.3.3. Neutral Systems

For the case

, the corresponding expected system revenue degrades to

and is independent from the recommendation distribution

P. As long as the target revenue satisfies

, the constraint

can be met by any recommendation distribution. Therefore, the recommendation distribution can be chosen as

.

2.3.4. Discussion of Systems

We note that in the scenario of the wireless mobile Internet, each new application needs to attract more users (i.e., grab larger market share) with excellent user experience in its early stage. After the market share and the user groups being stable, the application can earn money by pushing some advertisements at the cost of some degradations in user experience.

Noncommercial systems usually appear in the stage of user expanding. In order to enlarge market share, the main tasks of this stage are to attract more users and to convince them of the recommendation systems with excellent user experience. Therefore, the revenue by recommending desired data of users should be larger than that of recommending advertisements. To be specific, the desired data of users would be recommended with higher probability to increase the target revenue . Since is decreasing with , those high-probability events are more important in this case.

Advertising systems are usually adopted in the mature stage of applications where users have been accustomed to the recommendation system and some advertisements are acceptable. Therefore, the applications would increase the revenue of pushing advertisements and the actual number of advertisement recommendations. To be specific, the desired data of users should be recommended with relatively small-probability while advertisements should be recommended with higher probability. In this sense, the small-probability events are more important here.

Remark 1. Since , these three kinds of recommendation systems cover all the cases of this problem.

Different from the other recommendation systems based on content [

11,

13,

14], the recommendation systems in this paper discuss the distribution of recommendations based on the preference of users and the target revenue of the recommendation system. Thus, we have focused on the integrated planning of a sequence of recommendations other than the recommendation of a specific piece of data.

3. Optimal Recommendation Distribution

In this part, we shall present the optimal recommendation distribution for both advertising systems and noncommercial systems explicitly. We define an auxiliary variable and an auxiliary function as follows:

where

is a constant and

is a general probability mass function. Actually, we have

where

is the Rényi entropy

when

[

39].

In particular, we have the following lemma on .

Lemma 1. Function is monotonically decreasing with ϖ.

Lemma 2. , , and , where and .

It is clear that we have and .

3.1. Optimal Advertising System

Theorem 1. For an advertising system with , the optimal recommendation distribution is the solution of Problem and is given byfor , where α is defined in Label (

5)

, is defined in Label (

15)

, NaN means no solution exists, and is the solution to . Proof of Theorem 1. First, if , no solution exists since is always smaller than or equal to one and the constraint (9a) can never be satisfied.

Second, if , we have . That is, the constraint (9a) could by satisfied with any distribution P. Thus, the solution to Problem would be , which minimizes .

Third, if

, we shall solve Problem

based on the following Karush–Kuhn–Tucher (KKT) conditions:

Differentiating

with respect to

and set it to zero, we have

and thus

. Together with constraint (18b), we further have

and

where

is the solution to

, i.e.,

. By substituting

with

, the desired result in Label (

17) would be obtained.

The condition implies . Since has been shown to be monotonically decreasing with in Lemma 1, we then have . This completes the proof of Theorem 1. □

Remark 2. is equivalent to , which means that the target revenue is low and can be achieved by pushing data according to the preference of the user exactly.

is equivalent to , which means that the target revenue can only be achieved when some advertisements are pushed according to probability .

is equivalent to , which means that the target is too high to achieve, even when advertisements are pushed with probability one.

3.2. Optimal Noncommercial System

Theorem 2. For a noncommercial system with , the optimal recommendation distribution is the solution of Problem and is given byfor , where α is defined in Label (

5)

, is defined in Label (

15)

, NaN means no solution exists, and is the solution to . Proof of Theorem 2. First, if , no solution exists since is positive and cannot be smaller a negative number, and thus the constraint (13b) can never be satisfied.

Second, if and we set , we would have , i.e., constraint (13a) is satisfied. Since setting minimizes , should be the solution of Problem 2.

Third, if

, we shall solve Problem

using the following KKT conditions:

Differentiating

with respect to

and set it to zero, we have

and

. Together with constraint (24b), we then have

and

where

is the solution to

.

By denoting

, (

26) turns to be

which is the desired result in Label (

23).

Moreover, the condition implies . Since is monotonically decreasing with (cf. Lemma 1), we see that . Thus, Theorem 2 is proved. □

Remark 3. We denoteand have the following observations. is equivalent to , which means that the target revenue is high and cannot be achieved by any recommendation distribution.

is equivalent to , which means that the target revenue is not too high and the information is pushed according to probability . The user experience is limited by the target revenue in this case.

is equivalent to , which can be achieved by pushing data according to the preference of the user exactly.

3.3. Short Summary

We further denote

and the optimal recommendation distributions for various systems and various target revenues can then be summarized in

Table 4.

Cases ③, ⑤, and ⑧ are extreme cases where the target revenue is beyond the reach of the system. For cases ①, ④, and ⑦, the target revenue is low ( or ), and thus is easy to achieve. In particular, the constraints (9a) and (13a) are actually inactive. Thus, the optimal recommendation distribution would be exactly the same with the utility distribution.

Cases ② and ⑥ are more practical and meaningful due to the appropriate target revenues used. To further study the property of the optimal recommendation distribution of these two cases, the following function is introduced:

where

.

In doing so, the optimal recommendation distribution of cases ② and ⑥ can be jointly expressed as

where

is the solution to

.

In particular,

presents the optimal solution of case ② if

and presents the solution of case ⑥ if

. Moreover, when

, we have

which can be considered as the solution to cases ①, ④, and ⑦.

4. Property of Optimal Recommendation Distributions

In this section, we shall investigate how the optimal recommendation distribution diverges with respect to the utility distribution, in various systems and under various target revenue constraints. To do so, we first study the property of function

, where

and

are, respectively, the solution to the following equations:

4.1. Monotonicity of

Theorem 3. has the following properties:

- (1)

is monotonically increasing with ϖ in ;

- (2)

is monotonically decreasing with ϖ in ;

- (3)

is decreasing with , i.e, if ;

- (4)

if ;

if ;

if .

Proof of Theorem 3. (1) and (2) The derivative of

with respect to

can be expressed as

Since we have and , the sign of the derivative only depends on the term .

Lemma 1 and Lemma 2 show that is monotonically decreasing with and is the unique solution to equation . For , therefore, we then have , which is equivalent to . Thus, we have and is increasing with if . Likewise, it can be readily proved that is decreasing with if .

(3) Suppose , and , we would have, . Since is decreasing with (cf. Lemma 1), we then have , i.e., is decreasing with .

(4) First, we have

by the definition of

(cf. (

16)). Using the monotonicity of

with respect to

(cf. Lemma 1), we have

if

and

if

.

Thus, the proof of Theorem 3 is completed. □

In particular, according to Lemma 2, if = , will approach positive infinity, while will approach negative infinity if = .

Remark 4. - (1)

is monotonously decreasing with ϖ if ;

- (2)

is monotonously increasing with ϖ if .

Remark 5. We denoteand the relationships between and β in different systems are shown as follows. 4.2. Discussion of Parameters

Assume that there is unique minimum and unique maximum in utility distribution U. Without loss of generality, let , and and . is used to denote the optimal recommendation distribution. In addition, we only discuss the relationship between the optimal recommendation distribution and the parameters ( and ) in Cases ② and ⑥ in this part.

In fact, we have the following proposition on .

Proposition 1. has the following properties:

- (1)

exists when , and ;

- (2)

if ;

if ;

- (3)

is decreasing with , i.e, if .

For convenience, we denote and .

For advertising systems, the optimal recommendation distribution is given by (

17) and

(cf. Theorem 1). As

, we have

Obviously, when . Therefore, the utility distribution is here.

Based on Proposition 1, if (), we have for and for . The amount of optimal recommendation probability which is larger than corresponding utility probability is . Let . decreases with increasing of (cf. Proposition 1). In particular, if , only the recommendation probability of the event with the smallest utility probability is enlarged and that of other events is reduced, compared to the corresponding utility probability. As the parameter approaches positive infinity, the recommendation distribution will be . In conclusion, this recommendation system is a special information-filtering system which prefers to push the small-probability events.

For noncommercial systems, we can get the optimal recommendation distribution by (

23) and

(cf. Theorem 2). As

, it is noted that

and

when

. Hence,

in this case.

If (), for and for . The number of optimal recommendation probability which is larger than corresponding utility probability is . Let and . It is noted that decreases with decreasing of (cf. Proposition 1). In particular, if , only the recommendation probability of the event with largest utility probability is enlarged and that of other events is reduced, compared to the corresponding utility probability. As the parameter approaches negative infinity, the push distribution will be . In this case, the high-probability events are preferred to be pushed by this recommendation system.

Let the optimal recommendation distribution be equal to the utility distribution, i.e.,

, and we have

It is noted that

is the solution of (

38) according to (

31). Since

is monotonously increasing with

(cf. Remark 4),

when

. Thus, there exists one and only one root for (

38), which is

, and

in this case. Here, all the data types are fairly treated.

For convenience, the relationship between parameters, the optimal recommendation distribution and the utility distribution is summarized in

Table 5.

In fact, the recommendation system can be regarded as an information-filtering system which push data based on the preferences of users [

12]. The input and output of this information-filtering system can be seen as utility distribution and optimal recommendation distribution, respectively. For advertising systems, compared to the input, on the output port, the recommendation probability of data belonging to the set

is amplified, and that belonging to the set

is minified, where

and

. Since

, the advertising system is a special information-filtering system which prefers to push the small-probability events. For noncommercial systems, the data with higher utility probability, i.e.,

, is more likely to be pushed, and the data with smaller utility probability, i.e.,

, tends to be overlooked, where

and

. Since

, the high-probability events are preferred to be pushed by the noncommercial system.

Remark 6. The recommendation system can be regarded as an information-filtering system, and parameter ϖ is like an indicator which can reflect the system preference. If , the system is a advertising system, which prefers to recommend the small-probability events, while the system is a noncommercial system, which is more likely to recommend the high-probability events if . In particular, the system pushes data according to the preference of the user exactly if .

4.3. Geometric Interpretation

In this part, we will give a geometric interpretation of optimal recommendation distribution by means of probability simplex. Let

denote all the probability distributions from an alphabet

, and point

U denote the utility distribution. In addition,

P is the notation to denote recommendation distribution and

denotes the optimal recommendation distribution. With the help of the method of types [

37], we have

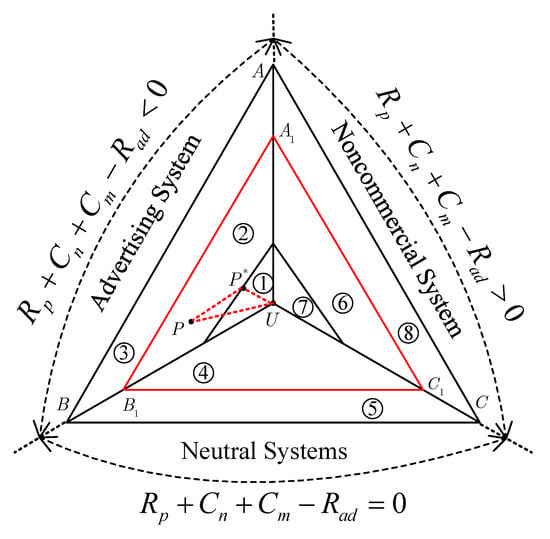

Figure 2 to characterize the generation of optimal recommendation distribution.

In

Figure 2, all cases can be grouped into three major categories, i.e.,

,

and

. In fact, these three categories are advertising systems, neutral systems and noncommercial systems, which are denoted by triangles

,

and

, respectively. Triangles

denotes the region where the average revenue is equal to or larger than a given value. In this paper, our goal is to find the optimal recommendation distribution so that the recommended data would fit the preference of the user as much as possible when the average revenue satisfies the predefined requirement. Since the matching degree between the recommended data and the user’s preference is characterized by

(cf.

Section 2.1),

should be a recommendation distribution on the border of or in the triangle

, which is closest to utility distribution

U in relative entropy. Therefore, there is no solution if recommendation distribution

P falls within regions ③, ⑤ and ⑧.

For advertising systems, can be divided into regions ①②③. Obviously, for region ① since U falls within region ①. in ② is the recommendation distribution closest to U in relative entropy. It is noted that if and . There is no solution in region ③ since . There is a similar situation for noncommercial systems, which are composed of regions ⑥, ⑦ and ⑧. There are only two regions for neutral systems. In this case, there is no solution when , and when .

Furthermore, triangle characterizes the set and triangle characterizes the set .

6. Numerical Results

In this section, some numerical results are presented to to validate the theoretical founds in this paper.

6.1. Property of

Figure 4 depicts the function

versus parameter

. The probability distributions are

,

,

,

and

. The scaling factor of

is varying from

to 100.

Some observations are obtained in

Figure 4. The functions in all the cases are monotonically decreasing with

. When

is small enough, i.e.,

,

is close to

for

, while

approaches

for

when

. In fact, we obtain

. These results validate Lemma 1 and Lemma 2. Furthermore, the velocity of change of

in region

is bigger than that in regions

and

. The KL distances between these probability distributions and uniform distribution are

, respectively. Thus, the amplitude of variation of

decreases with decreasing of KL distance with uniform distribution.

6.2. Optimal Recommendation Distribution

Then, we focus on proposed optimal recommendation distribution in this paper. The parameters of recommendation system are D1 =

and D2 =

. The minimum average revenue

is varying from 0 to 3. The utility distributions are U1 =

and U2

. Let

. Some results are listed in

Table 6.

Figure 5 shows the relationship between optimal recommendation distribution and the minimum average revenue. Some observations can be obtained. In fact, the optimal recommendation distribution can be divided into three phases. In phase one, in which the minimum average revenue is small (

), the optimal recommendation distribution is exactly the same as utility distribution. In phase two, the minimum average revenue is neither too small nor too large (

or

). In this case, the optimal recommendation distribution changes with the increasing of minimum average revenue. In the phase three, in which the minimum average revenue is too large (

or

), there is no appropriate recommendation distribution.

The optimal recommendation probability

versus the average revenue is depicted in subgraph one of

Figure 5. If D1 is adopted, which means advertising systems, we obtain

increases with the increasing of minimum average revenue when

.

is larger than

in this case.

approaches one when the average revenue is close to

. If D2 is adopted,

decreases with the increasing of minimum average revenue in the region

, and

is smaller than

.

approaches zero as the average revenue increasing to

. In addition,

when

.

Subgraph two of

Figure 5 depicts the optimal recommendation probability

versus the minimum average revenue. If

, the optimal recommendation probability is equal to the corresponding utility probability. If

or

,

in the four cases will decrease from

to zero. In this case,

increases at the early stage and then decreases for D1, U1 and D1, U2, while

is monotonously increasing for D2, U1 and D2, U2. Since

,

for D1 and

for D2, these numerical results agree with the discussion in

Section 4.1. Subgraph three is similar to the Subgraph two.

Subgraph four is contrary to the Subgraph one, which shows the optimal recommendation probability versus the minimum average revenue. If , is equal to . For D1, i.e., advertising systems, if the minimum average revenue is larger than and smaller than , is smaller than and it decreases to zero as . However, is larger than and it increases to one as for D2, i.e., noncommercial systems.

Figure 6 illustrates minimum KL distance between recommendation distribution and utility distribution versus the minimum average revenue when

or

. It is noted that the constraints on the minimum average revenue are true. The minimum KL distance increases with the increasing of the minimum average revenue. This figure also shows that the minimum average revenue can be obtained for a given minimum KL distance, when the utility distribution and recommendation system parameters are invariant. Since this KL distance represents the accuracy of recommendation strategy, there is a trade-off between the recommendation accuracy and the minimum average revenue.

6.3. Property of

Figure 3 shows that the importance coefficient

versus the function

. The utility distribution is

and the importance coefficient is varying from

to 40. It is easy to check that

. We denote

.

is monotonically increasing with increasing of the importance coefficient. is close to zero when . () increases with the increasing of when , and then it decreases with the increasing of when . They achieve the maximum value when . It is also noted that . If , () is very close to zero, and () if , which means it changes faster when . On the contrary, () changes faster with in than that in since that it is still bigger than when and it approaches zero when . In addition, decreases monotonically with the increasing of the importance coefficient, and is close to zero when .

Some other observations are also obtained. When , we obtain (). Without loss of generality, we take as an example. There is a non-zero importance coefficient which makes . If , we obtain for and for . Compared with the utility distribution, the results of the elements in set are enlarged, and those in set are minified. The difference between these two sets is that the utility probability of or is larger than that in . In addition. it is also noted that for and for , when . Here, only the function output of the element in high-probability set is larger than the corresponding utility probability.

Furthermore, when for , for .

7. Conclusions

In this paper, we discussed the optimal data recommendation problem when the recommendation model pursues best user performance with a certain revenue guarantee. Firstly, we illuminated the system model and formulated this problem as an optimization. Then, we gave the explicit solution of this problem in different cases, such as advertising systems and noncommercial systems, which can improve the design of data recommendation strategy. In fact, the optimal recommendation distribution is the one that is the closest to the utility distribution in the relative entropy when it satisfies expected revenue. There is a trade-off between the recommendation accuracy and the expected revenue. In addition, the properties of this optimal recommendation distribution, such as monotonicity and geometric interpretation, were also discussed in this paper. Furthermore, the optimal recommendation system can be regarded as an information-filtering system, and the importance coefficient determines what events the system prefers to recommend.

We also obtained that the optimal recommendation probability is the proportion of corresponding recommendation value in total recommendation value if the minimum average revenue is neither too small nor too large. In fact, the recommendation value is a special weight factor in determining the optimal recommendation distribution, and it can be regarded as a measure of importance. Since its form and properties are the same as those of MIM, the optimal recommendation distribution is exactly the normalized MIM, where MIM is used to characterize the concern of the system. The parameter in MIM, i.e., importance coefficient, plays a switch role on the event sets of systems’ attention. That is, the importance index of high-probability events is enlarged for the negative importance coefficient (i.e., noncommercial system), while the importance index of small-probability events is magnified by systems for the positive importance coefficient (i.e., advertising systems). In particular, only the maximum-probability event or the minimum-probability event are focused on as the importance coefficient approaches to negative infinity or positive infinity, respectively. These results give a new physical explanation of MIM from the data recommendation perspective, which can validate the rationality of MIM in one aspect. MIM is also extended to the general case for whatever the probability of systems interested events is. One can adjust the importance coefficient to focus on desired data type. Compared with previous MIM, the set of desired events can be defined precisely. These results can help us formulate appropriate data recommendation strategy in different scenarios.

In the future, we may consider its applications in next generation cellular systems [

41,

42], wireless sensor networks [

43] and very high speed railway communication systems [

44] by taking the signal transmission mode into accounts.