M-SAC-VLADNet: A Multi-Path Deep Feature Coding Model for Visual Classification

Abstract

1. Introduction

2. Related Work

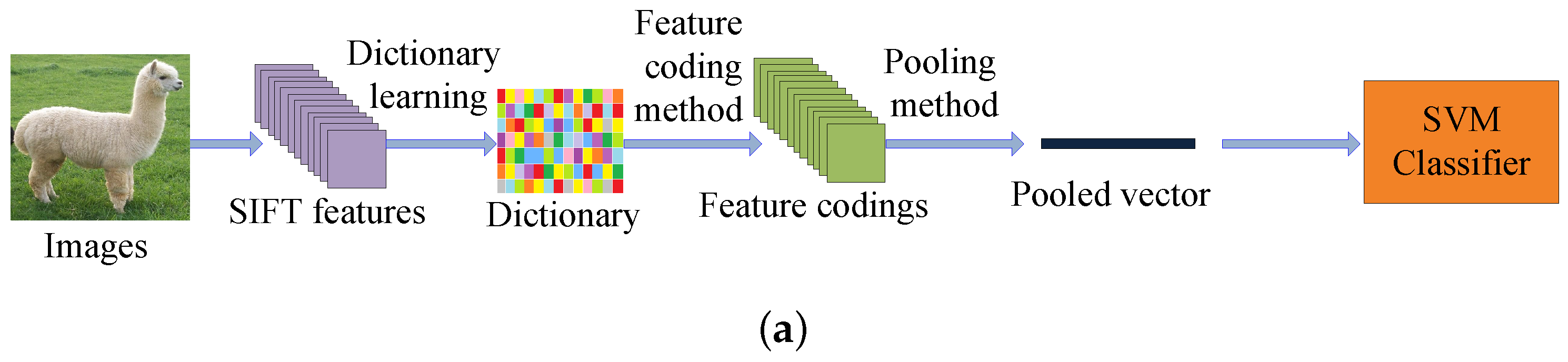

2.1. The Conventional Feature Coding Framework for Image Recognition

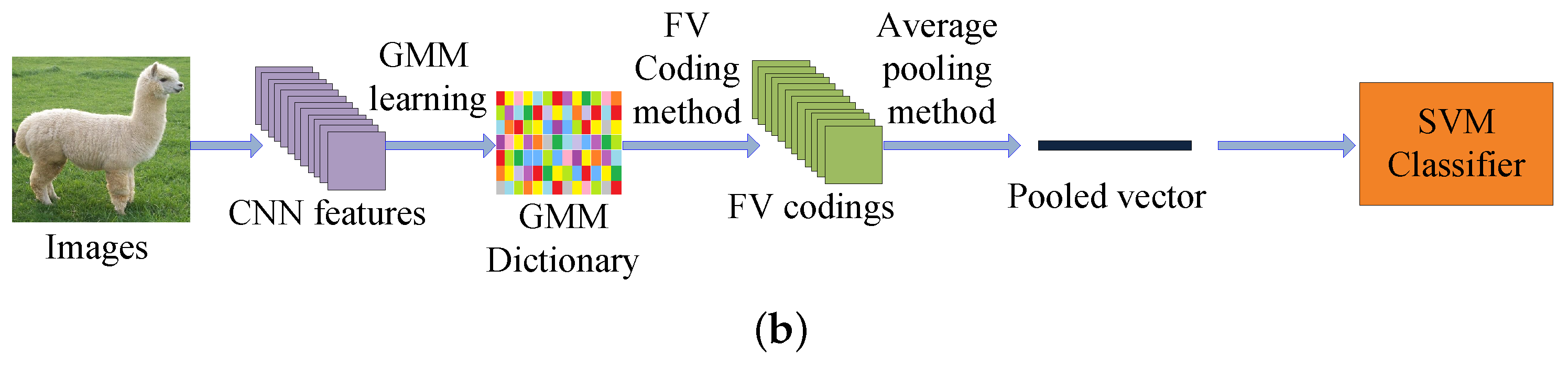

2.2. The CNN Feature for Feature Coding Network

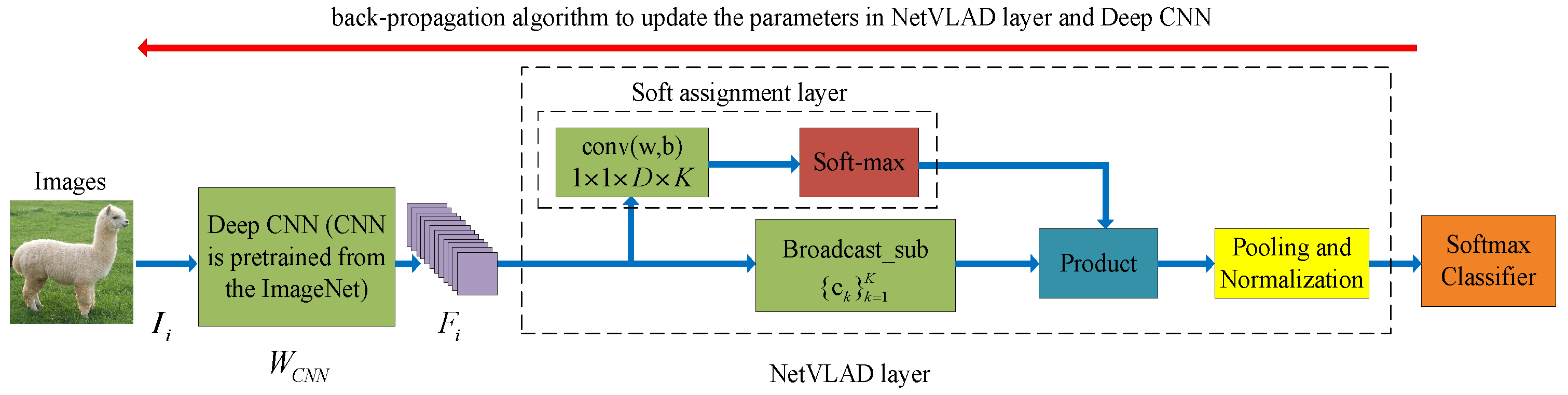

2.3. The End-to-End NetVLAD Model

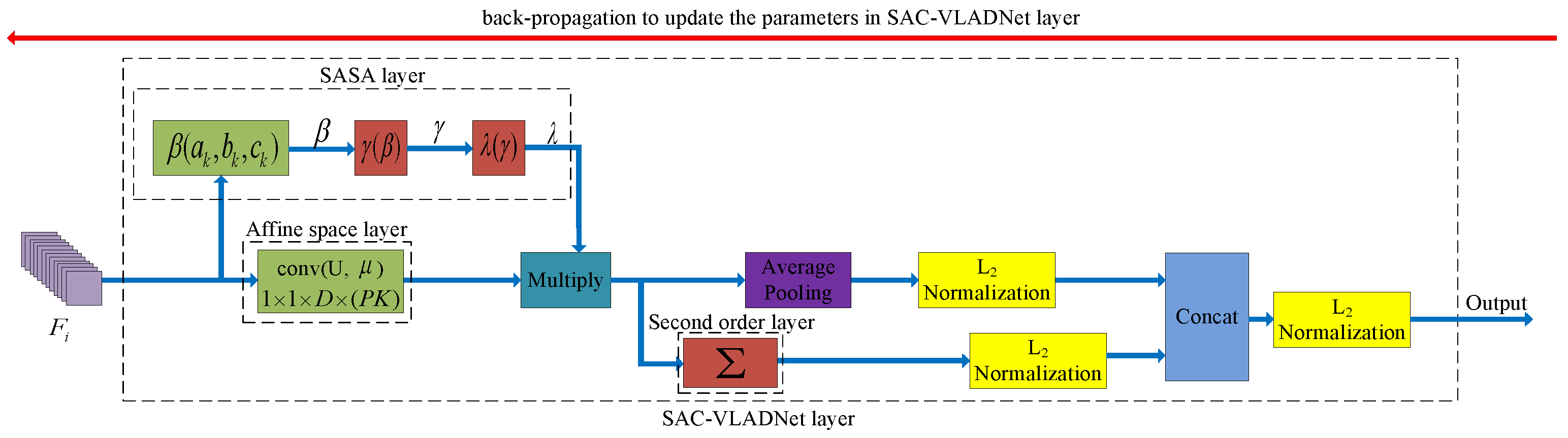

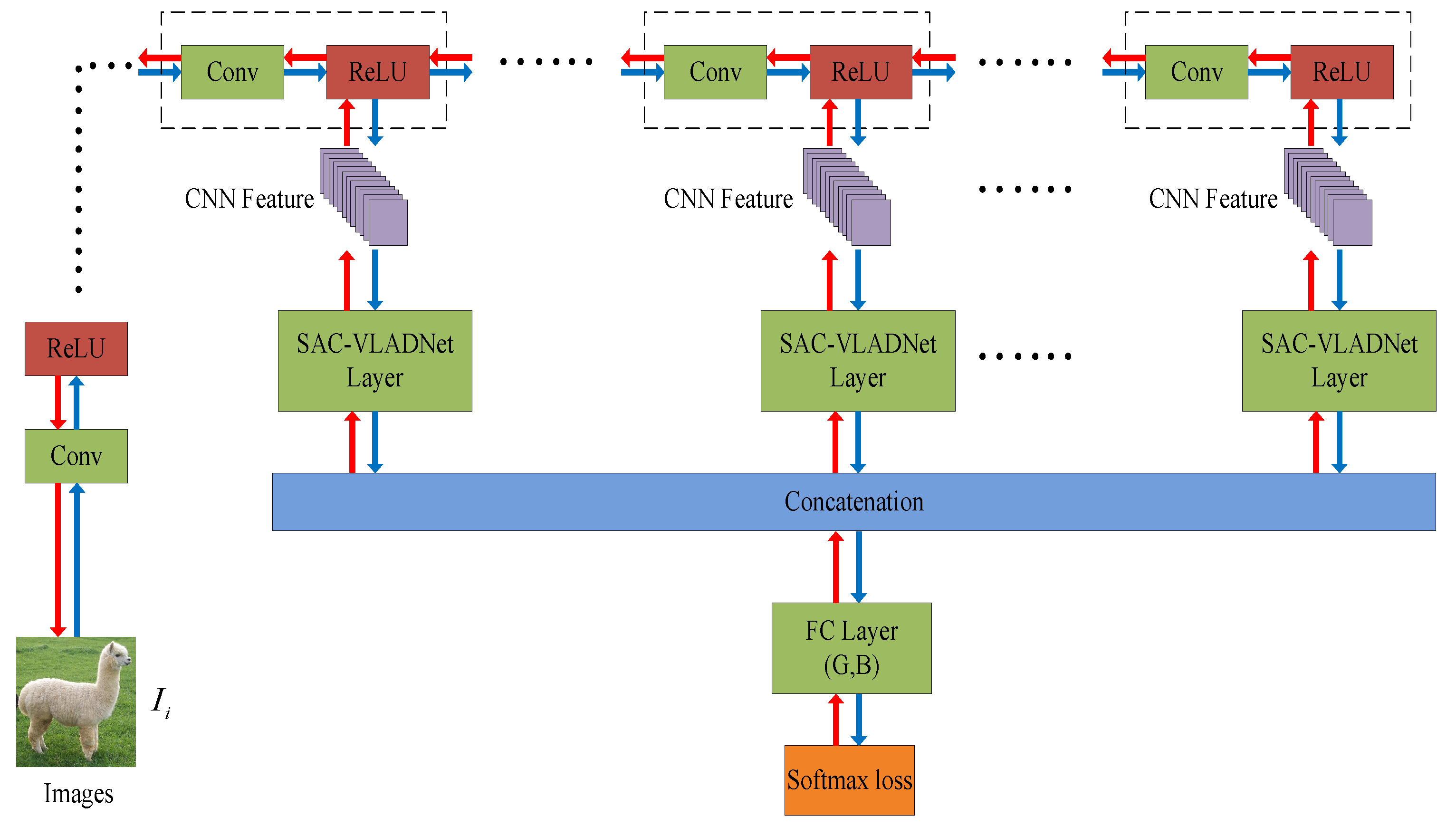

3. The Proposed SAC-VLADNet

3.1. The Sparsely-Adaptive Soft Assignment Coding (SASAC) Layer

3.2. The End-to-End Affine Subspace Layer

3.3. The Covariance Layer

3.4. The Complete SAC-VLADNet

3.5. The Proposed M-SAC-VLADNet

4. Experimental Results

4.1. Experimental Setting

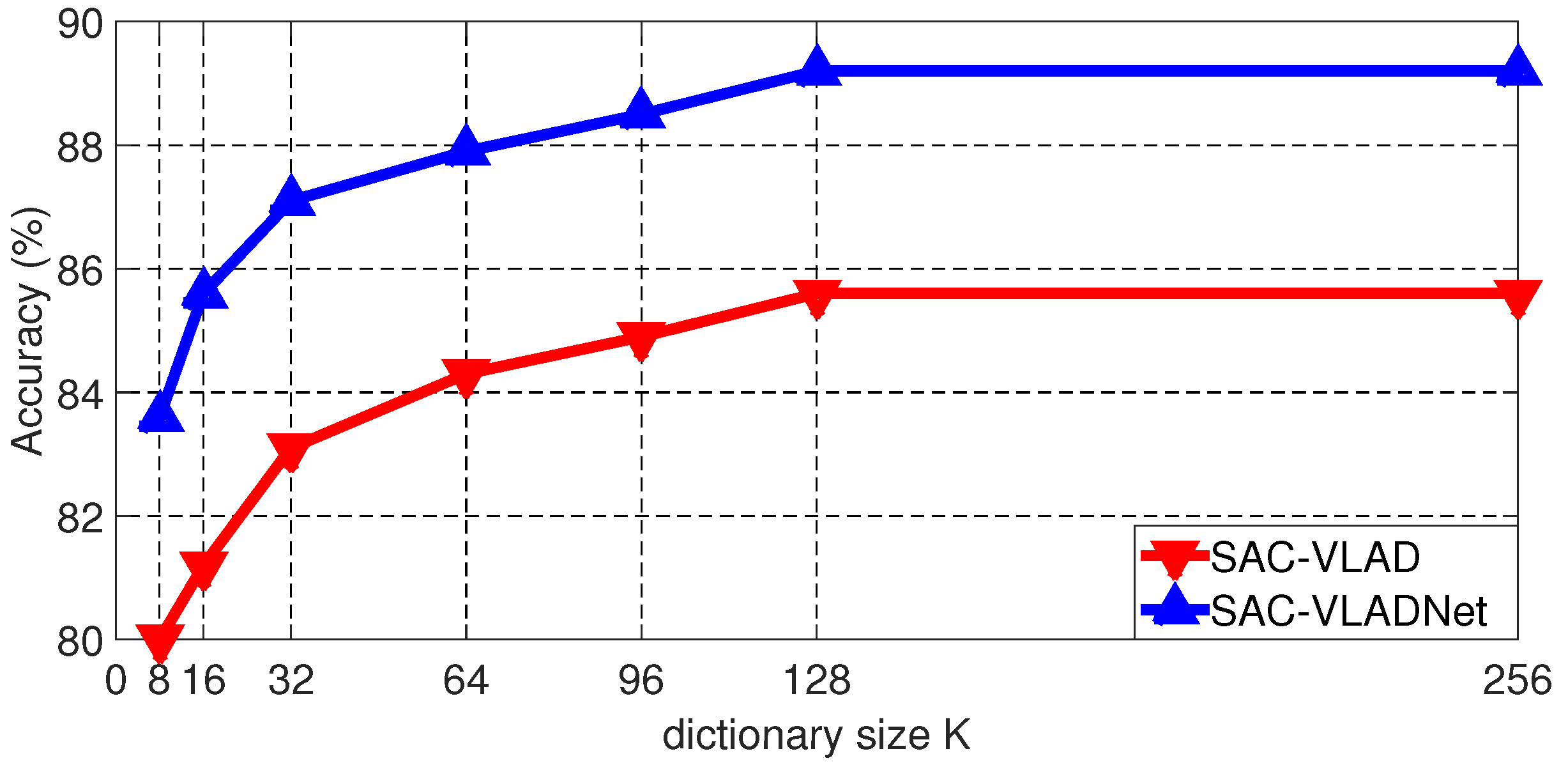

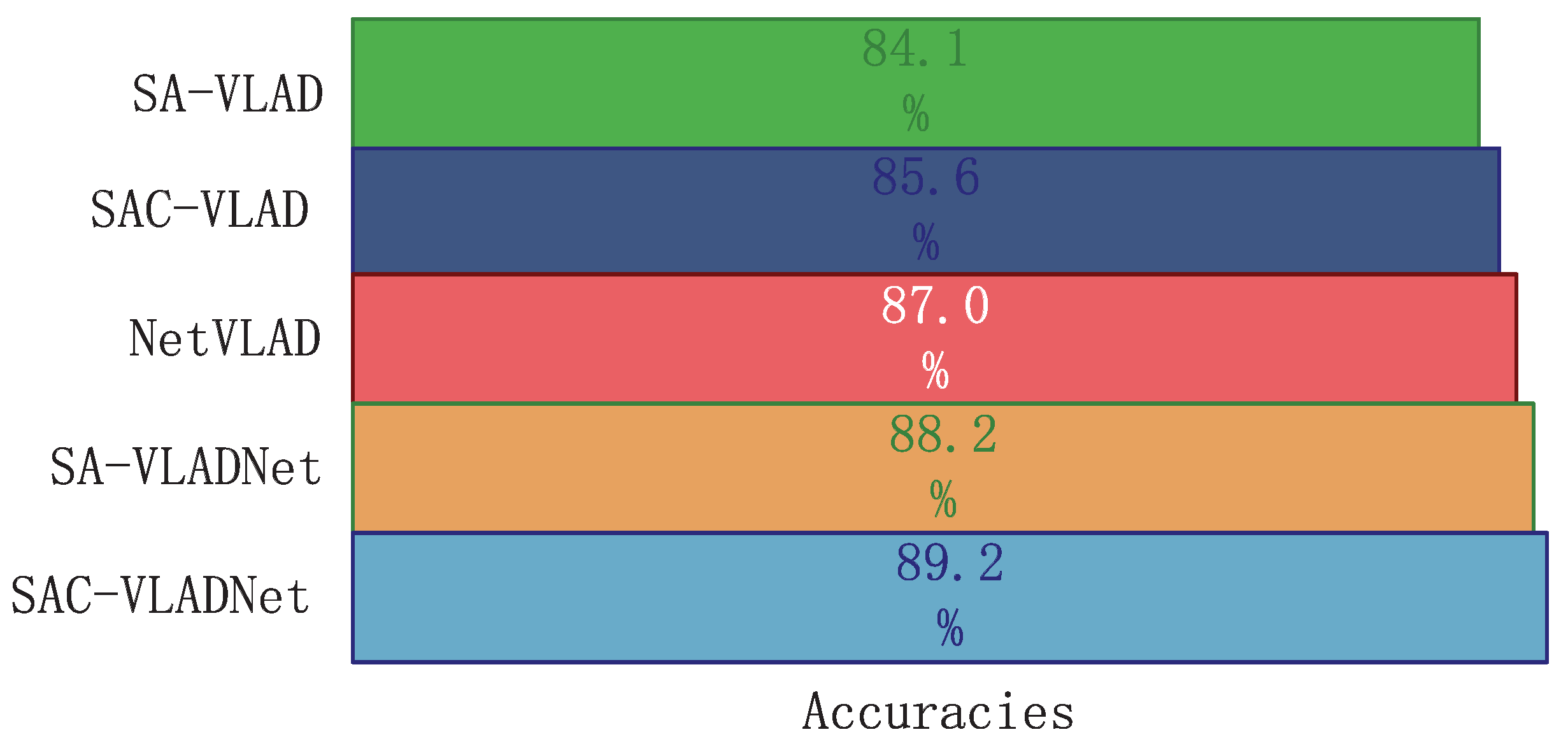

4.2. Analyses of Some Important Factors

4.3. Statistical Test of SAC-VLADNet and NetVLAD

4.4. Analysis of Coding Results

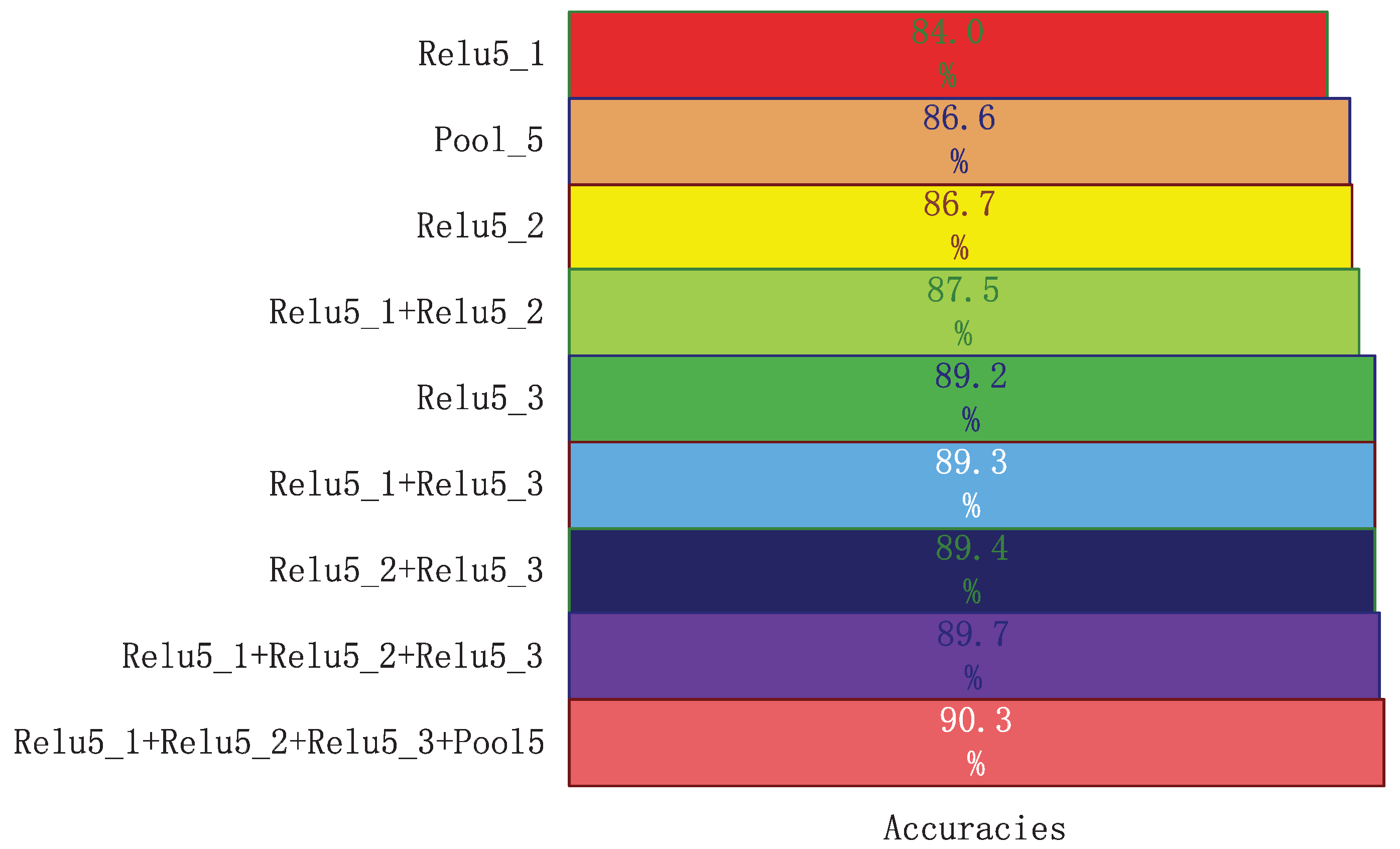

4.5. Analysis of Multi-Path Features

4.6. Comparisons with Other State-of-the-Art Classification Models

4.6.1. MIT Indoor Recognition

4.6.2. CUB200 Classification

4.6.3. Car Categorization

4.6.4. Caltech256 Classification

4.7. Running Speed Comparison

5. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

Appendix A. The Back Propagation Function of SASAC Layer

References

1. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems 25 (NIPS), Lake Tahoe, NV, USA, 3–8 December 2012.
2. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.
3. Hu, J.; Li, S.; Yao, Y.; Yu, L.; Yang, G.; Hu, J. Patent Keyword Extraction Algorithm Based on Distributed Representation for Patent Classification. Entropy 2018, 20, 104.
4. Lu, X.; Yang, Y.; Zhang, W.; Wang, Q.; Wang, Y. Face Verification with Multi-Task and Multi-Scale Feature Fusion. Entropy 2017, 19, 228.
5. Albelwi, S.; Mahmood, A. A Framework for Designing the Architectures of Deep Convolutional Neural Networks. Entropy 2017, 19, 242.
6. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.
7. Jiang, X.; Pang, Y.; Sun, M.; Li, X. Cascaded Subpatch Networks for Effective CNNs. IEEE Trans. Neural Netw. Learn. Syst. 2017, 1–11.
8. Pang, Y.; Sun, M.; Jiang, X.; Li, X. Convolution in Convolution for Network in Network. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 1587–1597.
9. Dong, C.; Loy, C.C.; He, K.; Tang, X. Image Super-Resolution Using Deep Convolutional Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307.
10. Wang, Z.; Liu, D.; Yang, J.; Han, W.; Huang, T. Deep Networks for Image Super-Resolution with Sparse Prior. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 11–18 December 2015.
11. Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.
12. Zheng, S.; Jayasumana, S.; Romera-Paredes, B.; Vineet, V.; Su, Z.; Du, D.; Huang, C.; Torr, P.H.S. Conditional Random Fields as Recurrent Neural Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 11–18 December 2015.
13. Ouyang, W.; Zeng, X.; Wang, X.; Qiu, S.; Luo, P.; Tian, Y.; Li, H.; Yang, S.; Wang, Z.; Li, H.; et al. DeepID-Net: Object Detection with Deformable Part Based Convolutional Neural Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1320–1334.
14. Li, J.; Mei, X.; Prokhorov, D.; Tao, D. Deep Neural Network for Structural Prediction and Lane Detection in Traffic Scene. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 690–703.
15. Wang, X.; Shrivastava, A.; Gupta, A. A-Fast-RCNN: Hard Positive Generation via Adversary for Object Detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.
16. Ma, C.; Huang, J.-B.; Yang, X.; Yang, M.-H. Hierarchical Convolutional Features for Visual Tracking. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 11–18 December 2015.
17. Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.
18. Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), San Diego, CA, USA, 20–25 June 2005.
19. Zuo, W.; Ren, D.; Zhang, D.; Gu, S.; Zhang, L. Learning Iteration-wise Generalized Shrinkage-Thresholding Operators for Blind Deconvolution. IEEE Trans. Image Process. 2016, 25, 1751–1764.
20. Peng, X.; Xiao, S.; Feng, J.; Yau, W.-Y.; Yi, Z. Deep Subspace Clustering with Sparsity Prior. In Proceedings of the 25th International Joint Conference on Artificial Intelligence (IJCAI-16), New York, NY, USA, 9–15 July 2016.
21. Wang, Z.; Yang, Y.; Chang, S.; Ling, Q.; Huang, T.S. Learning A Deep l∞ Encoder for Hashing. In Proceedings of the 25th International Joint Conference on Artificial Intelligence (IJCAI-16), New York, NY, USA, 9–15 July 2016.
22. Wang, K.; Lin, L.; Zuo, W.; Gu, S.; Zhang, L. Dictionary Pair Classifier Driven Convolutional Neural Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.
23. Gu, S.; Zhang, L.; Zuo, W.; Feng, X. Projective dictionary pair learning for pattern classification. In Proceedings of the Advances in Neural Information Processing Systems 27 (NIPS), Montréal, QC, Canada, 8–13 December 2014.
24. Huang, Y.; Wu, Z.; Wang, L.; Tan, T. Feature Coding in Image Classification: A Comprehensive Study. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 493–506.
25. Goh, H.; Thome, N.; Cord, M.; Lim, J.H. Learning Deep Hierarchical Visual Feature Coding. IEEE Trans. Neural Netw. Learn. Syst. 2014, 25, 2212–2225.
26. Yang, J.; Yu, K.; Gong, Y.; Huang, T. Linear Spatial Pyramid Matching Using Sparse Coding for Image Classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009.
27. Chen, B.; Li, J.; Ma, B.; Wei, G. Convolutional Sparse Coding Classification Model for Image Classification. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016.
28. Zhou, Y.; Chang, H.; Barner, K.; Spellman, P.; Parvin, B. Classification of Histology Sections via Multispectral Convolutional Sparse Coding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 24–27 June 2014.
29. Zeiler, M.D.; Taylor, G.W.; Fergus, R. Adaptive Deconvolutional Networks for Mid and High Level Feature Learning. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011.
30. Wang, J.; Yang, J.; Yu, K.; Lv, F.; Huang, T.; Gong, Y. Locality-Constrained Linear Coding for Image Classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010.
31. van Gemert, J.C.; Geusebroek, J.M.; Veenman, C.J.; Smeulders, A.W. Kernel Codebooks for Scene Categorization. In Proceedings of the European Conference on Computer Vision (ECCV), Marseille, France, 12–18 October 2008.
32. Lazebnik, S.; Schmid, C.; Ponce, J. Beyond Bags of Features: Spatial Pyramid Matching for Recognizing Natural Scene Categories. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), New York, NY, USA, 17–22 June 2006.
33. Huang, Y.; Huang, K.; Yu, Y.; Tan, T. Salient Coding for Image Classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Colorado Springs, CO, USA, 20–25 June 2011.
34. Sánchez, J.; Perronnin, F.; Mensink, T.; Verbeek, J. Image Classification with the Fisher Vector: Theory and Practice. Int. J. Comput. Vis. 2013, 105, 222–245.
35. Jégou, H.; Douze, M.; Schmid, C.; Pérez, P. Aggregating Local Descriptors Into a Compact Image Representation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010.
36. Arandjelovic, R.; Gronat, P.; Torii, A.; Pajdla, T.; Sivic, J. NetVLAD: CNN Architecture for Weakly Supervised Place Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.
37. Girdhar, R.; Ramanan, D.; Gupta, A.; Sivic, J.; Russell, B. ActionVLAD: Learning Spatio-Temporal Aggregation for Action Classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.
38. Li, P.; Lu, X.; Wang, Q. From Dictionary of Visual Words to Subspaces: Locality-Constrained Affine Subspace Coding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.
39. Cimpoi, M.; Maji, S.; Vedaldi, A. Deep Filter Banks for Texture Recognition and Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.
40. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.-F. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009.
41. Arandjelovic, R.; Zisserman, A. All about VLAD. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, 23–28 June 2013.
42. Gao, B.B.; Wei, X.S.; Wu, J.; Lin, W. Deep spatial pyramid: The devil is once again in the details. arXiv, 2015; arXiv:1504.05277.
43. Quattoni, A.; Torralba, A. Recognizing Indoor Scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009.
44. Krause, J.; Stark, M.; Deng, J.; Li, F.-F. 3D Object Representations for Fine-Grained Categorization. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Sydney, Australia, 1–8 December 2013.
45. Wah, C.; Branson, S.; Welinder, P.; Perona, P.; Belongie, S. The Caltech-UCSD Birds-200-2011 Dataset; California Institute of Technology: Pasadena, CA, USA, 2011.
46. Griffin, G.; Holub, A.; Perona, P. Caltech-256 Object Category Dataset; California Institute of Technology: Pasadena, CA, USA, 2007.
47. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.
48. Zhang, Z.; Chen, T.; Li, M.; Li, Y.; Lin, M.; Wang, N.; Wang, M.; Xiao, T.; Xu, B.; Zhang, C. MXNet: A Flexible and Efficient Machine Learning Library for Heterogeneous Distributed Systems. arXiv, 2015; arXiv:1512.01274.
49. Vedaldi, A.; Fulkerson, B. VLFeat: An Open and Portable Library of Computer Vision Algorithms. In Proceedings of the International Conference on Multimedia, Firenze, Italy, 25–29 October 2010.
50. Lin, T.Y.; RoyChowdhury, A.; Maji, S. Bilinear CNN Models for Fine-Grained Visual Recognition. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 11–18 December 2015.
51. Xie, G.S.; Zhang, X.Y.; Shu, X.; Yan, S.; Liu, C.L. Task-Driven Feature Pooling for Image Classification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 11–18 December 2015.
52. Yang, S.; Ramanan, D. Multi-Scale Recognition with DAG-CNNs. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 11–18 December 2015.
53. Zhang, N.; Donahue, J.; Girshick, R.; Darrell, T. Part-Based RCNNs for Fine-Grained Category Detection. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014.
54. Huang, S.; Xu, Z.; Tao, D.; Zhang, Y. Part-Stacked CNN for Fine-Grained Visual Categorization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.
55. Lin, D.; Shen, X.; Lu, C.; Jia, J. Deep LAC: Deep Localization, Alignment and Classification for Fine-Grained Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.
56. Cai, S.; Zhang, L.; Zuo, W.; Feng, X. A Probabilistic Collaborative Representation Based Approach for Pattern Classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.
57. Simon, M.; Rodner, E. Neural Activation Constellations: Unsupervised Part Model Discovery with Convolutional Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 11–18 December 2015.
58. Krause, J.; Jin, H.; Yang, J.; Li, F.-F. Fine-Grained Recognition Without Part Annotations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.
59. Wang, D.; Shen, Z.; Shao, J.; Zhang, W.; Xue, X.; Zhang, Z. Multiple Granularity Descriptors for Fine-Grained Categorization. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 11–18 December 2015.
60. Gao, Y.; Beijbom, O.; Zhang, N.; Darrell, T. Compact Bilinear Pooling. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.
61. Kong, S.; Fowlkes, C. Low-Rank Bilinear Pooling for Fine-Grained Classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.
62. Zhang, H.; Xu, T.; Elhoseiny, M.; Huang, X.; Zhang, S.; Elgammal, A.; Metaxas, D. SPDA-CNN: Unifying Semantic Part Detection and Abstraction for Fine-Grained Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.
63. Gosselin, P.-H.; Murray, N.; Jégou, H.; Perronnin, F. Revisiting the Fisher Vector for Fine-Grained Classification. Pattern Recognit. Lett. 2014, 49, 92–98.
64. Moghimi, M.; Belongie, S.; Saberian, M.; Yang, J.; Vasconcelos, N.; Li, L.J. Boosted Convolutional Neural Networks. In Proceedings of the British Machine Vision Conference (BMVC), York, UK, 19–22 September 2016.

| Dataset | Sample Number | Classes | T | K | P |
|---|---|---|---|---|---|
| MIT indoor | 15620 | 67 | 7 | 128 | 128 |
| CUB200 | 11788 | 200 | 5 | | |
| Stanford Car | 16185 | 196 | 3 | | |
| Caltech256 | 30680 | 256 | 7 | | |

| Methods | Features | Accuracies (%) |
|---|---|---|
| CaffeNet [1] | AlexNet | 59.5 |
| Caffe-DAG [52] | AlexNet | 64.6 |
| FC-CNN [39] | VGG-VD | 68.1 |
| FV-CNN [39] | VGG-VD | 76.0 |
| TDP [51] | VGG-VD | 75.6 |
| DAG-CNN [52] | VGG-VD | 77.5 |
| NetVLAD [36] | VGG-VD | 79.2 |
| B-CNN [50] | VGG-VD | 79.6 |
| SAC-VLAD | VGG-VD | 78.6 |
| SAC-VLADNet | VGG-VD | 82.0 |
| M-SAC-VLADNet | VGG-VD | 82.9 |

| Methods | Features | Train | Test | Acc (%) |
|---|---|---|---|---|
| FV coding [34] | SIFT | n/a | n/a | 18.8 |
| Part R-CNN [53] | AlexNet | Box+Part | n/a | 73.9 |
| PS-CNN [54] | AlexNet | Box+Part | Box | 76.6 |
| Deep LAC [55] | AlexNet | Box+Part | Box | 80.2 |
| FV-CNN [39] | VGG-VD | n/a | n/a | 71.3 |
| ProCRC [56] | VGG-VD | n/a | n/a | 78.3 |
| NetVLAD [36] | VGG-VD | n/a | n/a | 80.5 |
| NAC [57] | VGG-VD | n/a | n/a | 81.0 |
| Multi-grained [59] | VGG-VD | n/a | n/a | 81.7 |
| WPA [58] | VGG-VD | Box | n/a | 82.0 |
| CBP-RM [60] | VGG-VD | n/a | n/a | 83.9 |
| B-CNN [50] | VGG-VD | n/a | n/a | 84.0 |
| CBP-TS [60] | VGG-VD | n/a | n/a | 84.0 |
| LRBP [61] | VGG-VD | n/a | n/a | 84.2 |
| SPDA-CNN [62] | VGG-VD | Box+Part | Box | 84.6 |
| SAC-VLAD | VGG-VD | n/a | n/a | 77.0 |
| SAC-VLADNet | VGG-VD | n/a | n/a | 84.6 |
| M-SAC-VLADNet | VGG-VD | n/a | n/a | 85.5 |

| Methods | Features | Accuracies (%) |
|---|---|---|
| FV coding [34] | SIFT | 59.2 |
| RFV [63] | SIFT | 82.7 |
| FV-CNN [39] | VGG-VD | 85.7 |
| NetVLAD [36] | VGG-VD | 88.5 |
| CBP-RM [60] | VGG-VD | 89.5 |
| CBP-TS [60] | VGG-VD | 90.2 |
| B-CNN [50] | VGG-VD | 90.6 |
| LRBP [61] | VGG-VD | 90.9 |
| BoostCNN [64] | VGG-VD | 92.1 |
| SAC-VLAD | VGG-VD | 84.1 |
| SAC-VLADNet | VGG-VD | 91.3 |
| M-SAC-VLADNet | VGG-VD | 92.5 |

| Methods | Features | Accuracies (%) |
|---|---|---|
| ScSPM [26] | SIFT | 40.1 |
| LLC [30] | SIFT | 47.7 |
| FV-CNN [39] | VGG-VD | 81.2 |
| NAC [57] | VGG-VD | 84.1 |
| FC-CNN [39] | VGG-VD | 85.1 |
| DSP [42] | VGG-VD | 85.5 |
| ProCRC [56] | VGG-VD | 86.1 |
| NetVLAD [36] | VGG-VD | 87.0 |
| SAC-VLAD | VGG-VD | 85.6 |
| SAC-VLADNet | VGG-VD | 89.2 |
| M-SAC-VLADNet | VGG-VD | 90.3 |
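
The four accuracy tables above can be condensed into absolute gains over the NetVLAD baseline. The following is a minimal sketch of that arithmetic, not part of the original evaluation code. The accuracies are copied from the tables; the dataset labels are an assumption that the tables follow the order of Sections 4.6.1 to 4.6.4, and the names `ACCURACY`, `gain_over_baseline`, and `summarize` are hypothetical.

```python
# Accuracies (%) copied from the four comparison tables above.
# ASSUMPTION: dataset labels follow the order of Sections 4.6.1-4.6.4.
ACCURACY = {
    "MIT Indoor": {"NetVLAD": 79.2, "SAC-VLADNet": 82.0, "M-SAC-VLADNet": 82.9},
    "CUB200": {"NetVLAD": 80.5, "SAC-VLADNet": 84.6, "M-SAC-VLADNet": 85.5},
    "Stanford Car": {"NetVLAD": 88.5, "SAC-VLADNet": 91.3, "M-SAC-VLADNet": 92.5},
    "Caltech256": {"NetVLAD": 87.0, "SAC-VLADNet": 89.2, "M-SAC-VLADNet": 90.3},
}


def gain_over_baseline(scores, method, baseline="NetVLAD"):
    """Absolute accuracy gain of `method` over `baseline`, in percentage points."""
    return round(scores[method] - scores[baseline], 1)


def summarize(table=ACCURACY, baseline="NetVLAD"):
    """Map each dataset to the gains of every non-baseline method."""
    return {
        dataset: {
            method: gain_over_baseline(scores, method, baseline)
            for method in scores
            if method != baseline
        }
        for dataset, scores in table.items()
    }


if __name__ == "__main__":
    for dataset, gains in summarize().items():
        print(dataset, gains)
```

Under these assumptions, the multi-path model gains between 3.3 and 5.0 percentage points over NetVLAD, and between 0.9 and 1.2 points over the single-path SAC-VLADNet.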

| Methods | Train | Test |
|---|---|---|
| VGG-VD | 13.95 | 103.8 |
| NetVLAD | 24.7 | 114.8 |
| SAC-VLADNet | 22.0 | 105.3 |
| M-SAC-VLADNet | 14.3 | 98.8 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chen, B.; Li, J.; Wei, G.; Ma, B. M-SAC-VLADNet: A Multi-Path Deep Feature Coding Model for Visual Classification. Entropy 2018, 20, 341. https://doi.org/10.3390/e20050341
