1. Introduction

In an attempt to generalize classical thermodynamics to irreversible nonequilibrium thermodynamics, a relatively new framework has been developed that combines stochasticity and nonequilibrium dynamics. This framework is known as stochastic thermodynamics [1,2,3,4,5] and goes beyond linear irreversible thermodynamics, which addresses transport properties and entropy production in terms of forces and fluxes via linear system response theory [6,7,8,9]. Stochastic thermodynamics is applicable to nonequilibrium systems, extending the validity of the laws of thermodynamics beyond the linear response regime by providing a system thermodynamic paradigm formulated on the level of individual system state realizations that are arbitrarily far from equilibrium. The thermodynamic variables of heat, work, and entropy, along with the concomitant first and second laws of thermodynamics, are formulated on the level of individual dynamical system trajectories using stochastic differential equations.

The nonequilibrium conditions in stochastic thermodynamics are imposed by an exogenous stochastic disturbance or an initial system state that is far from the system equilibrium, resulting in an open (i.e., driven) or relaxation dynamical process. More specifically, the exogenous disturbance is modeled as an independent standard Wiener process (i.e., Brownian motion) defined on a complete filtered probability space wherein the current state is only dependent on the most recent event. The stochastic system dynamics are described by an overdamped Langevin equation [2,3] in which fluctuation and dissipation forces obey the Einstein relation expressing that diffusion is a result of both thermal fluctuations and frictional dissipation [10].
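As a minimal sketch of the kind of dynamics described above, the following simulates a one-dimensional overdamped Langevin equation with the Euler-Maruyama method. The harmonic restoring force, the parameter names (`k`, `gamma`, `kT`), and the numerical values are illustrative assumptions and are not taken from this paper. The diffusion coefficient is set from the friction coefficient through the Einstein relation, so the long-run variance of the position should be close to `kT / k`.

```python
import math
import random


def simulate_overdamped_langevin(k=1.0, gamma=1.0, kT=1.0, dt=0.01,
                                 n_steps=200000, x0=0.0, seed=0):
    """Euler-Maruyama for dx = -(k/gamma) x dt + sqrt(2 kT/gamma) dw.

    Hypothetical model: a particle in a harmonic potential (assumption),
    with friction gamma and thermal energy kT.
    """
    rng = random.Random(seed)
    diffusion = kT / gamma                      # Einstein relation
    noise_scale = math.sqrt(2.0 * diffusion * dt)
    x = x0
    path = [x]
    for _ in range(n_steps):
        drift = -(k / gamma) * x                # frictional relaxation
        x += drift * dt + noise_scale * rng.gauss(0.0, 1.0)
        path.append(x)
    return path


def sample_variance(path, burn_in=1000):
    """Variance of the path after discarding an initial transient."""
    samples = path[burn_in:]
    mean = sum(samples) / len(samples)
    return sum((s - mean) ** 2 for s in samples) / len(samples)
```

With the default values, `sample_variance(simulate_overdamped_langevin())` should be near 1, reflecting the balance between dissipation and fluctuation in this toy model.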

Brownian motion refers to the irregular movement of microscopic particles suspended in a liquid and was discovered [11,12] by the botanist Robert Brown [13]. This random motion is explained as the result of collisions between the suspended particles (i.e., Brownian particles) and the molecules of the liquid. Einstein was the first to formulate the theory of Brownian motion by assuming that the particles suspended in the liquid contribute to the thermal fluctuations of the medium and, in accordance with the principle of equipartition of energy [14], the average translational kinetic energy of each particle [10]. Thus, Brownian motion results from collisions by molecules of the fluid, wherein the suspended particles acquire the same average kinetic energy as the molecules of the fluid. This theory suggested that all matter consists of atoms (or molecules) and heat is the energy of motion (i.e., kinetic energy) of the atoms.

The use of statistical methods in developing a general molecular theory of heat predicated on random motions of Newtonian atoms led to the connection between the dynamics of heat flow and the behavior of electromagnetic radiation. A year after Einstein published his theory on Brownian motion, Smoluchowski [15] confirmed the relation between friction and diffusion. In an attempt to simplify Einstein’s theory of Brownian motion, Langevin [16] was the first to model the effect of Brownian motion using a stochastic differential equation (now known as a Langevin equation) wherein spherical particles are suspended in a medium and acted upon by external forces.

In stochastic thermodynamics, the Langevin equation captures the coupling between the system particle damping and the energy input to the particles via thermal effects. Namely, the frictional forces extract the particle kinetic energy, which in turn is injected back to the particles in the form of thermal fluctuations. This captures the phenomenological behavior of a Brownian particle suspended in a fluid medium which can be modeled as a continuous Markov process [17]. Specifically, since collisions between the fluid molecules and a Brownian particle are more inelastic at higher viscosities, and temperature decreases with increasing viscosity in a fluid, additional heat is transferred to the fluid to maintain its temperature in accordance with the equipartition theorem. This heat is transferred to the Brownian particle through an increased disturbance intensity by the fluid molecules. These collisions between the Brownian particle and fluid molecules result in the observed persistent irregular and random motion of the particles.

The balance between damping (i.e., deceleration) of the particles due to frictional effects resulting in local heating of the fluid, and consequently entropy production, and the energy injection of the particles due to thermal fluctuations resulting in local cooling of the fluid, and consequently entropy consumption, is quantified by fluctuation theorems [18,19,20,21,22,23,24,25,26,27,28]. Thus, even though, on average, the entropy change is positive (i.e., entropy production), there exist sample paths wherein the entropy decreases, albeit with an exponentially lower probability than that of entropy production. In other words, a stochastic thermodynamic system exhibits a symmetry in the probability distribution of the entropy production in the asymptotic nonequilibrium process.
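For orientation, the symmetry described above is often written in the following generic form. This is a sketch in notation assumed here, not a statement taken from this paper: $\Sigma$ denotes the total entropy production along a sample path measured in units of Boltzmann's constant, $p$ its probability density over sample paths, and $A$ a real number. The first relation is typically stated for stationary or long-time regimes; the second follows from the first by integration and yields the nonnegative mean through Jensen's inequality.

```latex
\frac{p(\Sigma = -A)}{p(\Sigma = A)} = e^{-A},
\qquad
\mathbb{E}\!\left[ e^{-\Sigma} \right] = 1,
\qquad
\mathbb{E}[\Sigma] \;\ge\; -\ln \mathbb{E}\!\left[ e^{-\Sigma} \right] = 0 .
```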

Fluctuation theorems give a precise prediction for the cases in which entropy decreases in stochastic thermodynamic systems and provide a key relation between entropy production and irreversibility. Specifically, the entropy production of individual sample path trajectories of a stochastic thermodynamic system described by a Markov process is not restricted by the second law, but rather the average entropy production is determined to be positive. Furthermore, the notions of heat and work in stochastic thermodynamic systems allow for a formulation of the first law of thermodynamics on the level of individual sample path trajectories with microscopic states (i.e., positions and velocities) governed by a stochastic Langevin equation and macroscopic states governed by a Fokker–Planck equation [29] (or a Kolmogorov forward equation, depending on context) describing the evolution of the probability density function of the microscopic (stochastic) states.

In this paper, we combine our large-scale thermodynamic system model developed in [30] with stochastic thermodynamics to develop a stochastic dynamical systems framework of thermodynamics. Specifically, we develop a large-scale dynamical system model driven by Markov diffusion processes to present a unified framework for statistical thermodynamics predicated on a stochastic dynamical systems formalism. In particular, using a stochastic state space formulation, we develop a nonlinear stochastic compartmental dynamical system model characterized by energy conservation laws that is consistent with statistical thermodynamic principles. Moreover, we show that the difference between the average supplied system energy and the average stored system energy for our stochastic thermodynamic model is a martingale with respect to the system filtration. In addition, we show that the average stored system energy is equal to the mean energy that can be extracted from the system and the mean energy that can be delivered to the system in order to transfer it from a zero energy level to an arbitrary nonempty subset in the state space over a finite stopping time.

Finally, using the system ectropy [30] as a Lyapunov function candidate, we show that in the absence of energy exchange with the environment the proposed stochastic thermodynamic model is stochastically semistable in the sense that all sample path trajectories converge almost surely to a set of equilibrium solutions, wherein every equilibrium solution in the set is almost surely Lyapunov stable. In addition, we show that the steady-state distribution of the large-scale sample path system energies is uniform, leading to system energy equipartitioning corresponding to a maximum entropy equilibrium state.

2. Stochastic Dynamical Systems

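To extend the dynamical thermodynamic formulation of [30] to stochastic thermodynamics, we need to establish some notation, definitions, and mathematical preliminaries. A review of some basic results on nonlinear stochastic dynamical systems is given in [31,32,33,34,35]. Recall that given a sample space $\Omega$, a $\sigma$-algebra $\mathcal{F}$ on $\Omega$ is a collection of subsets of $\Omega$ such that $\emptyset \in \mathcal{F}$, if $F \in \mathcal{F}$, then $\Omega \setminus F \in \mathcal{F}$, and if $F_1, F_2, \ldots \in \mathcal{F}$, then $\bigcup_{i=1}^{\infty} F_i \in \mathcal{F}$ and $\bigcap_{i=1}^{\infty} F_i \in \mathcal{F}$. The pair ($\Omega, \mathcal{F}$) is called a measurable space and the probability measure $\mathbb{P}$ defined on ($\Omega, \mathcal{F}$) is a function $\mathbb{P}: \mathcal{F} \to [0,1]$ such that $\mathbb{P}(\emptyset) = 0$, $\mathbb{P}(\Omega) = 1$, and if $F_1, F_2, \ldots \in \mathcal{F}$ and $F_i \cap F_j = \emptyset$, $i \neq j$, then $\mathbb{P}\left(\bigcup_{i=1}^{\infty} F_i\right) = \sum_{i=1}^{\infty} \mathbb{P}(F_i)$. The triple ($\Omega, \mathcal{F}, \mathbb{P}$) is called a complete probability space if $\mathcal{F}$ contains all subsets of $\Omega$ with $\mathbb{P}$-outer measure [36] zero [33].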
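The axioms recalled above can be checked mechanically on a finite sample space. The following is a small illustrative sketch, not part of the original development; the function name `is_sigma_algebra` is hypothetical. On a finite set, closure under pairwise unions is equivalent to closure under countable unions, and closure under intersections then follows from complements.

```python
from itertools import combinations


def is_sigma_algebra(omega, collection):
    """Check the sigma-algebra axioms for subsets of a finite set omega."""
    omega = frozenset(omega)
    sets = {frozenset(s) for s in collection}
    # Every member must be a subset of the sample space.
    if any(not s <= omega for s in sets):
        return False
    # The empty set must belong to the collection.
    if frozenset() not in sets:
        return False
    # Closed under complementation.
    if any(omega - s not in sets for s in sets):
        return False
    # Closed under (pairwise, hence finite) unions.
    return all(a | b in sets for a, b in combinations(sets, 2))
```

For example, on the sample space {1, 2, 3} the collection consisting of the empty set, {1}, {2, 3}, and {1, 2, 3} passes the check, whereas dropping {2, 3} breaks closure under complementation.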

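The subsets $F$ of $\Omega$ belonging to $\mathcal{F}$ are called $\mathcal{F}$-measurable sets. If $\Omega = \mathbb{R}^n$ and $\mathfrak{B}^n$ is the $\sigma$-algebra generated by the family of all open sets in $\mathbb{R}^n$, then $\mathfrak{B}^n$ is called the Borel σ-algebra and the elements $\mathcal{B}$ of $\mathfrak{B}^n$ are called Borel sets. If ($\Omega, \mathcal{F}, \mathbb{P}$) is a given probability space, then the real valued function (random variable) $x: \Omega \to \mathbb{R}$ is $\mathcal{F}$-measurable if $\{\omega \in \Omega : x(\omega) \in \mathcal{B}\} \in \mathcal{F}$ for all Borel sets $\mathcal{B} \subseteq \mathbb{R}$. Given the probability space ($\Omega, \mathcal{F}, \mathbb{P}$), a filtration is a family $\{\mathcal{F}_t\}_{t \ge 0}$ of $\sigma$-algebras $\mathcal{F}_t \subset \mathcal{F}$ such that $\mathcal{F}_t \subset \mathcal{F}_s$ for all $0 \le t < s < \infty$.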

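In this paper, we use the notation and terminology as established in [37]. Specifically, define a complete probability space as $(\Omega, \mathcal{F}, \mathbb{P})$, where $\Omega$ denotes the sample space, $\mathcal{F}$ denotes a $\sigma$-algebra, and $\mathbb{P}$ defines a probability measure on the $\sigma$-algebra $\mathcal{F}$; that is, $\mathbb{P}$ is a nonnegative countably additive set function on $\mathcal{F}$ such that $\mathbb{P}(\Omega) = 1$ [32]. Furthermore, we assume that $w(\cdot)$ is a standard d-dimensional Wiener process defined by $(w(\cdot), \Omega, \mathcal{F}, \mathbb{P}^{w_0})$, where $\mathbb{P}^{w_0}$ is the classical Wiener measure ([33], p. 10), with a continuous-time filtration $\{\mathcal{F}_t\}_{t \ge 0}$ generated by the Wiener process $w(t)$ up to time t.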

We denote a stochastic dynamical system by generating a filtration adapted to the stochastic process on satisfying , , such that , , for all Borel sets contained in the Borel -algebra . We say that the stochastic process is -adapted if is -measurable for every . Furthermore, we say that satisfies the Markov property if the conditional probability distribution of the future states of the stochastic process generated by only depends on the present state. In this case, generates a Markov process which results in a decoupling of the past from the future in the sense that the present state of contains sufficient information so as to encapsulate the effects of the past system inputs. Here we use the notation to represent the stochastic process omitting its dependence on . Furthermore, denotes the -algebra of Borel sets in and denotes a -algebra generated on a set .

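We denote the set of equivalence classes of measurable, integrable, and square-integrable $\mathbb{R}^n$ or $\mathbb{R}^{n \times m}$ (depending on context) valued random processes on $(\Omega, \mathcal{F}, \mathbb{P})$ over the semi-infinite parameter space $[0, \infty)$ by $\mathcal{L}^0(\Omega, \mathcal{F}, \mathbb{P})$, $\mathcal{L}^1(\Omega, \mathcal{F}, \mathbb{P})$, and $\mathcal{L}^2(\Omega, \mathcal{F}, \mathbb{P})$, respectively, where the equivalence relation is the one induced by $\mathbb{P}$-almost-sure equality. In particular, elements of $\mathcal{L}^0(\Omega, \mathcal{F}, \mathbb{P})$ take finite values $\mathbb{P}$-almost surely (a.s.) or with probability one. Hence, depending on the context, $\mathbb{R}^n$ will denote either the set of $n \times 1$ real variables or the subspace of $\mathcal{L}^0(\Omega, \mathcal{F}, \mathbb{P})$ comprising random processes that are constant almost surely. All inequalities and equalities involving random processes on $(\Omega, \mathcal{F}, \mathbb{P})$ are to be understood to hold $\mathbb{P}$-almost surely. Furthermore, $\mathbb{E}[\,\cdot\,]$ and $\mathbb{E}^{x_0}[\,\cdot\,]$ denote, respectively, the expectation with respect to the probability measure $\mathbb{P}$ and with respect to the classical Wiener measure $\mathbb{P}^{x_0}$.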

Given , denotes the set , and so on. Given and , we say x is nonzero on if . Furthermore, given and a -algebra , and denote, respectively, the expectation of the random variable x and the conditional expectation of x given , with all moments taken under the measure . In formulations wherein it is clear from context which measure is used, we omit the symbol in denoting expectation, and similarly for conditional expectation. Specifically, in such cases we denote the expectation with respect to the probability space by , and similarly for conditional expectation.

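A stochastic process $x: [0, \infty) \times \Omega \to \mathbb{R}^n$ on $(\Omega, \mathcal{F}, \mathbb{P})$ is called a martingale with respect to the filtration $\{\mathcal{F}_t\}_{t \ge 0}$ if and only if $x(t)$ is a $\mathcal{F}_t$-measurable random vector for all $t \ge 0$, $\mathbb{E}[\|x(t)\|] < \infty$, and $\mathbb{E}[x(t) \,|\, \mathcal{F}_\tau] = x(\tau)$ for all $t \ge \tau \ge 0$. Thus, a martingale has the property that the expectation of the next value of the martingale is equal to its current value given all previous values of the dynamical process. If we replace the equality in $\mathbb{E}[x(t) \,|\, \mathcal{F}_\tau] = x(\tau)$ with “≤” (respectively, “≥”), then $x(\cdot)$ is a supermartingale (respectively, submartingale). Note that every martingale is both a submartingale and supermartingale.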
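A quick Monte Carlo sanity check can make the martingale property concrete. The sketch below is illustrative only and uses names introduced here (`wiener_pair`, `mean_increments`): it samples a scalar standard Wiener process at two times and averages the increments of two processes known to be martingales, the Wiener process itself and its square minus elapsed time. Vanishing mean increments are a necessary consequence of the martingale property, not a proof of it.

```python
import math
import random


def wiener_pair(s, t, rng):
    """Sample (w(s), w(t)), 0 <= s <= t, from independent Gaussian increments."""
    w_s = math.sqrt(s) * rng.gauss(0.0, 1.0)
    w_t = w_s + math.sqrt(t - s) * rng.gauss(0.0, 1.0)
    return w_s, w_t


def mean_increments(s=1.0, t=2.0, n_paths=100000, seed=1):
    """Average increments of w(t) and of w(t)^2 - t between times s and t."""
    rng = random.Random(seed)
    inc_w = 0.0
    inc_m = 0.0
    for _ in range(n_paths):
        w_s, w_t = wiener_pair(s, t, rng)
        inc_w += w_t - w_s
        inc_m += (w_t ** 2 - t) - (w_s ** 2 - s)
    return inc_w / n_paths, inc_m / n_paths
```

Both returned averages should be close to zero, up to Monte Carlo error of order one over the square root of `n_paths`.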

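A random variable $\tau: \Omega \to [0, \infty]$ is called a stopping time with respect to $\mathcal{F}_t$ if and only if $\{\omega \in \Omega : \tau(\omega) \le t\} \in \mathcal{F}_t$, $t \ge 0$. Thus, the set of all $\omega \in \Omega$ such that $\tau(\omega) \le t$ is a $\mathcal{F}_t$-measurable set. Note that $\tau(\omega)$ can take on finite as well as infinite values and characterizes whether at each time t an event at time $\tau(\omega) \le t$ has occurred using only the information in $\mathcal{F}_t$.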

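Finally, we write $\|\cdot\|$ for the Euclidean vector norm, $\mathrm{row}_i(A)$ and $\mathrm{col}_j(A)$ for the i-th row and j-th column of a matrix $A$, tr(·) for the trace operator, $(\cdot)^{-1}$ for the inverse operator, $V'(x) \triangleq \frac{\partial V(x)}{\partial x}$ for the Fréchet derivative of V at x, $V''(x) \triangleq \frac{\partial^2 V(x)}{\partial x^2}$ for the Hessian of V at x, and $\mathcal{H}_n$ for the Hilbert space of random vectors $x \in \mathbb{R}^n$ with finite average power, that is, $\mathcal{H}_n \triangleq \{x: \Omega \to \mathbb{R}^n : \mathbb{E}[x^{\mathrm{T}} x] < \infty\}$. For an open set $\mathcal{D} \subseteq \mathbb{R}^n$, $\mathcal{H}_n^{\mathcal{D}}$ ≜ $\{x \in \mathcal{H}_n : x: \Omega \to \mathcal{D}\}$ denotes the set of all the random vectors in $\mathcal{H}_n$ induced by $\mathcal{D}$. Similarly, for every $x_0 \in \mathbb{R}^n$, $\mathcal{H}_n^{x_0}$ ≜ $\{x \in \mathcal{H}_n : x = x_0 \text{ a.s.}\}$. Moreover, $\overline{\mathbb{R}}_+^n$ and $\mathbb{R}_+^n$ denote the nonnegative and positive orthants of $\mathbb{R}^n$, that is, if $x \in \mathbb{R}^n$, then $x \in \overline{\mathbb{R}}_+^n$ and $x \in \mathbb{R}_+^n$ are equivalent, respectively, to $x \ge\ge 0$ and $x >> 0$, where $x \ge\ge 0$ (respectively, $x >> 0$) indicates that every component of x is nonnegative (respectively, positive). Furthermore, $\mathrm{C}^2$ denotes the space of real-valued functions $V: \mathcal{D} \to \mathbb{R}$ that are two-times continuously differentiable with respect to $x \in \mathcal{D} \subseteq \mathbb{R}^n$. Finally, we write $x(t) \to \mathcal{M}$ as $t \to \infty$ to denote that $x(t)$ approaches the set $\mathcal{M}$, that is, for every $\varepsilon > 0$ there exists $T > 0$ such that $\mathrm{dist}(x(t), \mathcal{M}) < \varepsilon$ for all $t > T$, where $\mathrm{dist}(p, \mathcal{M}) \triangleq \inf_{x \in \mathcal{M}} \|p - x\|$.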

Definition 1. Let and be measurable spaces, and let . If the function is -measurable in for a fixed and is a probability measure in for a fixed , then μ is called a (probability) kernel

from S to T. Furthermore, for , the function is called a regular conditional probability measure

if is measurable, is a probability measure, and satisfieswhere , , and , , is the transition probability

of the point at time instant s into all Borel subsets at time instant t. Any family of regular conditional probability measures

satisfying the Chapman–Kolmogorov equation ([32])

or, equivalently,

where

,

, and

, is called a

semigroup of Markov kernels. The Markov kernels are called

time homogeneous if and only if

holds for all

.
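A finite-state, discrete-time analogue may help fix ideas about the Chapman–Kolmogorov equation and time homogeneity. In the sketch below, which is an illustration under assumptions made here and not the continuous-state setting of Definition 1, a time-homogeneous kernel is a row-stochastic matrix `p`, the kernel over `n` steps is its n-th power, and the Chapman–Kolmogorov equation reduces to the identity that the (m+n)-step kernel equals the product of the m-step and n-step kernels. All function names are hypothetical.

```python
def mat_mul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def mat_pow(p, n):
    """n-step transition matrix of a time-homogeneous chain."""
    size = len(p)
    result = [[1.0 if i == j else 0.0 for j in range(size)]
              for i in range(size)]
    for _ in range(n):
        result = mat_mul(result, p)
    return result


def chapman_kolmogorov_gap(p, m, n):
    """Largest entrywise gap between P^(m+n) and P^m P^n."""
    lhs = mat_pow(p, m + n)
    rhs = mat_mul(mat_pow(p, m), mat_pow(p, n))
    return max(abs(lhs[i][j] - rhs[i][j])
               for i in range(len(p)) for j in range(len(p)))
```

For any row-stochastic `p` the returned gap should be at the level of floating-point rounding.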

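Consider the nonlinear stochastic dynamical system $\mathcal{G}$ given by

$$dx(t) = f(x(t))\,dt + D(x(t))\,dw(t), \qquad x(t_0) = x_0 \ \text{a.s.}, \qquad t \in \mathcal{I}_{x(t_0)}, \tag{4}$$

where, for every $t \in \mathcal{I}_{x(t_0)}$, $x(t) \in \mathcal{H}_n^{\mathcal{D}}$ is a $\mathcal{F}_t$-measurable random state vector, $x(t_0) \in \mathcal{H}_n^{x_0}$, $\mathcal{D} \subseteq \mathbb{R}^n$ is a relatively open set with $0 \in \mathcal{D}$, $w(t)$ is a d-dimensional independent standard Wiener process (i.e., Brownian motion) defined on a complete filtered probability space $(\Omega, \{\mathcal{F}_t\}_{t \ge t_0}, \mathbb{P})$, $x(t_0)$ is independent of $(w(t) - w(t_0))$, $t \ge t_0$, $f: \mathcal{D} \to \mathbb{R}^n$ and $D: \mathcal{D} \to \mathbb{R}^{n \times d}$ are continuous, $\mathcal{E} \triangleq \{x \in \mathcal{D} : f(x) = 0 \text{ and } D(x) = 0\}$ is nonempty, and $\mathcal{I}_{x(t_0)} = [t_0, \tau_{x(t_0)})$, $t_0 < \tau_{x(t_0)} \le \infty$, is the maximal interval of existence for the solution $x(\cdot)$ of (4).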

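An equilibrium point of (4) is a point $x_{\mathrm{e}} \in \mathcal{D}$ such that $f(x_{\mathrm{e}}) = 0$ and $D(x_{\mathrm{e}}) = 0$. It is easy to see that $x_{\mathrm{e}}$ is an equilibrium point of (4) if and only if the constant stochastic process $x(\cdot) = x_{\mathrm{e}}$ (almost surely) is a solution of (4). We denote the set of equilibrium points of (4) by $\mathcal{E} = \{x_{\mathrm{e}} \in \mathcal{D} : f(x_{\mathrm{e}}) = 0 \text{ and } D(x_{\mathrm{e}}) = 0\}$.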

The filtered probability space

is clearly a real vector space with addition and scalar multiplication defined componentwise and pointwise. A

-valued stochastic process

is said to be a

solution of (

4) on the time interval

with initial condition

if

is

progressively measurable (i.e.,

is nonanticipating and measurable in

t and

) with respect to the filtration

,

,

, and

where the integrals in (5) are Itô integrals [38]. If the map $t \mapsto w(t, \omega)$, $\omega \in \Omega$, had a bounded variation, then the natural definition for the integrals in (5) would be the Lebesgue-Stieltjes integral where $\omega$ is viewed as a parameter. However, since sample Wiener paths are nowhere differentiable and not of bounded variation for almost all $\omega \in \Omega$, the integrals in (5) need to be defined as Itô integrals [39,40].
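Numerically, Itô integrals of this kind are commonly approximated by evaluating the integrand at the left endpoint of each time step, which gives the Euler-Maruyama scheme. The following is a minimal sketch for a system with a drift function `f` and a diffusion function `D` returning an n-by-d matrix; the function name `euler_maruyama` and its signature are assumptions made for this illustration and are not part of the paper.

```python
import math
import random


def euler_maruyama(f, D, x0, t_final, n_steps, seed=0):
    """Approximate x(t_final) for dx = f(x) dt + D(x) dw, x(0) = x0.

    f(x) returns a list of length n; D(x) returns n rows of d entries.
    The integrand is evaluated at the left endpoint (Ito convention).
    """
    rng = random.Random(seed)
    dt = t_final / n_steps
    x = list(x0)
    for _ in range(n_steps):
        drift = f(x)
        diff = D(x)
        d = len(diff[0])
        # Independent Wiener increments, each with variance dt.
        dw = [math.sqrt(dt) * rng.gauss(0.0, 1.0) for _ in range(d)]
        x = [x[i] + drift[i] * dt
             + sum(diff[i][j] * dw[j] for j in range(d))
             for i in range(len(x))]
    return x
```

If the drift and the diffusion both vanish at a point, a path started there stays there, which matches the notion of an equilibrium point as a constant solution; with zero diffusion the scheme reduces to the forward Euler method for an ordinary differential equation.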

Note that for each fixed

, the random variable

assigns a vector

to every outcome

of an experiment, and for each fixed

, the mapping

is the

sample path of the stochastic process

,

. A path-wise solution

of (

4) in

is said to be

right maximally defined if

x cannot be extended (either uniquely or non-uniquely) forward in time. We assume that all right maximal path-wise solutions to (

4) in

exist on

, and hence, we assume that (

4) is

forward complete. Sufficient conditions for forward completeness or

global solutions of (

4) are given in [

34,

38].

Furthermore, we assume that

and

satisfy the uniform Lipschitz continuity condition

and the growth restriction condition

for some Lipschitz constant

, and hence, since

and

is independent of

it follows that there exists a unique solution

of (

4) forward in time for all initial conditions in the following sense. For every

there exists

such that if

and

are two solutions of (

4); that is, if

with continuous sample paths almost surely solve (

4), then

and

.

The uniform Lipschitz continuity condition (6) guarantees uniqueness of solutions, whereas the linear growth condition (7) rules out finite escape times. A weaker sufficient condition for the existence of a unique solution to (4) using a notion of (finite or infinite) escape time under a local version of the Lipschitz continuity condition (6) without the growth condition (7) is given in [41]. Alternatively, existence and uniqueness of solutions even when the uniform Lipschitz continuity condition (6) does not hold are given in ([38], p. 152).

The unique solution to (

4) determines a

-valued, time homogeneous Feller continuous Markov process

, and hence, its stationary Feller transition probability function is given by (([

31], Theorem 3.4), ([

32], Theorem 9.2.8))

for all

and all Borel subsets

of

, where

denotes the probability of transition of the point

at time instant

s into the set

at time instant

t. Recall that every continuous process with Feller transition probability function is also a strong Markov process ([

31] p. 101). Finally, we say that the dynamical system (

4) is

convergent in probability with respect to the closed set

if and only if the pointwise

exists for every

and

.

Here, the measurable map

is the

dynamic or

flow of the stochastic dynamical system (

2) and, for all

, satisfies the

cocycle property

and the

identity (on

) property

for all

and

. The measurable map

is continuously differentiable for all

outside a

-null set and the sample path trajectory

is continuous in

for all

. Thus, for every

, there exists a trajectory of measures defined for all

satisfying the dynamical processes (

4) with initial condition

. For simplicity of exposition we write

for

omitting its dependence on

.

Definition 2. A point is a limit point

of the trajectory of (

4)

if there exists a monotonic sequence of positive numbers, with as , such that as . The set of all limit points of , is the limit set

of of (

4).

It is important to note that the

-limit set of a stochastic dynamical system is a

-limit set of a trajectory of measures, that is,

is a weak limit of a sequence of measures taken along every sample continuous bounded trajectory of (

4). It can be shown that the

-limit set of a stationary stochastic dynamical system attracts bounded sets and is measurable with respect to the

-algebra of invariant sets. Thus, the measures of the stochastic process

tend to an invariant set of measures and

asymptotically tends to the closure of the support set (i.e., kernel) of this set of measures almost surely.

However, unlike deterministic dynamical systems, wherein $\omega$-limit sets serve as global attractors, in stochastic dynamical systems stochastic invariance (see Definition 4) leads to $\omega$-limit sets being defined for each fixed sample $\omega \in \Omega$ of the underlying probability space $(\Omega, \mathcal{F}, \mathbb{P})$, and hence, are path-wise attractors. This is due to the fact that a cocycle property rather than a semigroup property holds for stochastic dynamical systems. For details see [42,43,44].

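Definition 3 ([33], Def. 7.7). Let $x(\cdot)$ be a time-homogeneous Markov process in $\mathcal{H}_n^{\mathcal{D}}$ and let $V: \mathcal{D} \to \mathbb{R}$. Then the infinitesimal generator $\mathcal{L}$ of $x(t)$, $t \ge 0$, with $x(0) = x_0$ almost surely, is defined by

$$\mathcal{L}V(x_0) \triangleq \lim_{t \to 0^+} \frac{\mathbb{E}^{x_0}[V(x(t))] - V(x_0)}{t}, \qquad x_0 \in \mathcal{D}, \tag{9}$$

where $\mathbb{E}^{x_0}$ denotes the expectation with respect to the transition probability measure $\mathbb{P}^{x_0}$. If $V \in \mathrm{C}^2$ and has a compact support [45], and $x(t)$, $t \ge 0$, satisfies (4), then the limit in (9) exists for all $x \in \mathcal{D}$ and the infinitesimal generator $\mathcal{L}$ of $x(t)$, $t \ge 0$, can be characterized by the system drift and diffusion functions $f(x)$ and $D(x)$ defining the stochastic dynamical system (4) and is given by ([33], Theorem 7.9)

$$\mathcal{L}V(x) \triangleq \frac{\partial V(x)}{\partial x} f(x) + \frac{1}{2} \operatorname{tr}\, D^{\mathrm{T}}(x) \frac{\partial^2 V(x)}{\partial x^2} D(x), \qquad x \in \mathcal{D}. \tag{10}$$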
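For a diffusion with drift f and diffusion matrix D, the generator applied to a twice differentiable function V takes the standard form of the gradient of V times f plus one half of the trace of D-transpose times the Hessian of V times D. The sketch below evaluates this expression pointwise from user-supplied gradient and Hessian callables. The function name `generator` and the argument names are hypothetical and chosen only for this illustration.

```python
def generator(grad_V, hess_V, f, D, x):
    """Evaluate V'(x) f(x) + 0.5 * tr(D(x)^T V''(x) D(x)) at the point x.

    grad_V(x): list of length n; hess_V(x): n x n list of rows;
    f(x): list of length n; D(x): n rows of d entries.
    """
    g = grad_V(x)
    H = hess_V(x)
    fx = f(x)
    Dx = D(x)
    n = len(x)
    d = len(Dx[0])
    drift_term = sum(g[i] * fx[i] for i in range(n))
    # tr(D^T H D) = sum over i, j, k of D[i][k] * H[i][j] * D[j][k]
    trace_term = sum(Dx[i][k] * H[i][j] * Dx[j][k]
                     for i in range(n) for j in range(n) for k in range(d))
    return drift_term + 0.5 * trace_term
```

As a usage example, take V equal to one half of the squared Euclidean norm, f(x) = -x, and D(x) equal to 0.5 times the 2-by-2 identity. At x = (1, 2) the drift term is -5 and the trace term is 0.5, so the generator evaluates to -4.75; the positive second-order term shows how noise can offset part of the decrease produced by the drift.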

Next, we extend Proposition 2.1 of [

30] to stochastic dynamical systems. First, however, the following definitions on stochastic invariance and essentially nonnegative vector fields are needed.

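Definition 4. A relatively open set $\mathcal{D} \subset \mathbb{R}^n$ is invariant with respect to (4) if $\mathcal{D}$ is Borel and, for all $x_0 \in \mathcal{D}$, $\mathbb{P}^{x_0}(x(t) \in \mathcal{D}) = 1$, $t \ge 0$.

Definition 5. Let $f = [f_1, \ldots, f_n]^{\mathrm{T}}: \mathcal{D} \subseteq \overline{\mathbb{R}}_+^n \to \mathbb{R}^n$. Then f is essentially nonnegative if $f_i(x) \ge 0$ for all $i = 1, \ldots, n$ and $x \in \overline{\mathbb{R}}_+^n$ such that $x_i = 0$, where $x_i$ denotes the i-th component of x.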
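The essential nonnegativity condition says that the i-th component of the vector field must be nonnegative at every nonnegative point whose i-th component is zero, so that the drift never pushes the state out of the nonnegative orthant through a boundary face. The sketch below tests this condition on a user-supplied finite list of boundary points. It is a sampling-based check under names assumed here (`is_essentially_nonnegative`, `boundary_points`), so passing it is necessary but not sufficient for the property to hold on the whole orthant.

```python
def is_essentially_nonnegative(f, boundary_points, tol=1e-12):
    """Check f_i(x) >= 0 whenever x_i == 0, over the supplied sample points.

    f(x) returns a list with the same length as x. Each point in
    boundary_points is assumed to lie in the nonnegative orthant.
    """
    for x in boundary_points:
        fx = f(x)
        for i, xi in enumerate(x):
            if xi == 0 and fx[i] < -tol:
                return False
    return True
```

For instance, the two-compartment exchange field f(x) = (x2 - x1, x1 - x2) passes the check at any sampled boundary point, whereas a field with a constant outflow such as f(x) = (-1 - x1, x1) fails at points with x1 = 0.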

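Proposition 1. Suppose $\overline{\mathbb{R}}_+^n \subset \mathcal{D}$. Then $\overline{\mathbb{R}}_+^n$ is an invariant set with respect to (4) if and only if $f: \mathcal{D} \to \mathbb{R}^n$ is essentially nonnegative and $D_{(i,j)}(x) = 0$, $j = 1, \ldots, d$, whenever $x_i = 0$, $i = 1, \ldots, n$. Proof. Define dist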

,

. Now, suppose

is essentially nonnegative and let

. For every

, if

, then

for all

and all

, whereas, if

, then it follows from the continuity of

and the sample continuity of

that

for all

sufficiently small and all

. Thus,

for all sufficiently small

and all

, and hence,

. It now follows from Lemma 2.1 of [

46], with

, that

for all

.

Conversely, suppose that

is invariant with respect to (

4), let

, and suppose, ad absurdum,

x is such that there exists

such that

and

for all

. Then, since

f and

D are continuous and a Wiener process

can be positive or negative with equal probability, there exists sufficiently small

such that

for all

, where

is the solution to (

4). Hence,

is strictly decreasing on

with nonzero probability, and thus,

for all

, which leads to a contradiction. ☐

It follows from Proposition 1 that if $x_0 \ge\ge 0$, then $x(t) \ge\ge 0$, $t \ge 0$, if and only if f is essentially nonnegative and $D_{(i,j)}(x) = 0$, $j = 1, \ldots, d$, whenever $x_i = 0$, $i = 1, \ldots, n$. In this case, we say that (4) is a stochastic nonnegative dynamical system. Henceforth, we assume that f and D are such that the nonlinear stochastic dynamical system (4) is a stochastic nonnegative dynamical system.

4. Semistability of Stochastic Nonnegative Dynamical Systems

As shown in [30], thermodynamic systems give rise to systems that possess a continuum of equilibria. In this section, we develop a stability analysis framework for stochastic systems having a continuum of equilibria. Since, as noted in [37,52], every neighborhood of a non-isolated equilibrium contains another equilibrium, a non-isolated equilibrium cannot be asymptotically stable. Hence, asymptotic stability is not the appropriate notion of stability for systems having a continuum of equilibria. Two notions that are of particular relevance to such systems are convergence and semistability. Convergence is the property whereby every system solution converges to a limit point that may depend on the system initial condition. Semistability is the additional requirement that all solutions converge to limit points that are Lyapunov stable. Semistability for an equilibrium thus implies Lyapunov stability, and is implied by asymptotic stability.

In this section, we present necessary and sufficient conditions for stochastic semistability. It is important to note that stochastic semistability theory was also developed in [37] for a stronger set of stability in probability definitions. The results in this section, though they parallel the results in [37], are predicated on a weaker set of stability in probability definitions, and hence, provide a stronger set of semistability results. First, we present several key propositions. The following proposition gives a sufficient condition for a trajectory of (4) to converge to a limit point. For this result,

denotes a positively invariant set with respect to (

4) and

denotes the image of

under the flow

, that is,

.

Proposition 2. Consider the nonlinear stochastic nonnegative dynamical system (4) and let . If the limit set of (4) contains a Lyapunov stable in probability (with respect to ) equilibrium point y, then , that is, as . Proof. Suppose is Lyapunov stable in probability with respect to and let be a relatively open neighborhood of y. Since y is Lyapunov stable in probability with respect to , there exists a relatively open neighborhood of y such that as for every . Now, since , it follows that there exists such that . Hence, for every . Since is arbitrary, it follows that . Thus, as for every sequence , and hence, as . ☐

The following definition introduces the notion of stochastic semistability.

Definition 8. An equilibrium solution of (

4)

is stochastically semistable with respect to

if the following statements hold. - (i)

For every , Equivalently, for every and , there exist such that, for all , - (ii)

. Equivalently, for every , there exist such that if , then

The dynamical system (

4)

is stochastically semistable with respect to

if every equilibrium solution of (

4)

is stochastically semistable with respect to . Finally, the dynamical system (

4)

is globally stochastically semistable with respect to

if (

i)

holds and for all . Note that if

only satisfies (i) in Definition 8, then the equilibrium solution

of (

4) is Lyapunov stable in probability with respect to

.

Definition 9. For a given , the -domain of semistability with respect to

is the set of points such that if , , is a solution to (4) with , then converges to a Lyapunov stable (with respect to ) in probability equilibrium point in with probability greater than or equal to . Note that if (

4) is stochastically semistable, then its

-domain of semistability contains the set of equilibria in its interior.

Next, we present alternative equivalent characterizations for stochastic semistability of (

4). This result is an extension of Proposition 2.2 of [

37] to the more general semistability definition presented in this paper.

Proposition 3. Consider the nonlinear stochastic nonnegative dynamical system given by (4). Then the following statements are equivalent: (i) is stochastically semistable with respect to .

(ii) For every and , there exist class and functions and , respectively, and such that, if , then and , . (iii) For every and , there exist class functions and , a class function , and such that, if , then Proof. To show that (i) implies (ii), suppose (

4) is stochastically semistable with respect to

and let

. It follows from Lemma 1 that for every

there exists

and a class

function

such that if

, then

,

. Without loss of generality, we can assume that

is such that

is contained in the

-domain of semistability of (

4). Hence, for every

,

and, consequently,

.

For every

,

, and

, define

to be the infimum of

T with the property that

, that is,

For each

and

, the function

is nonnegative and nonincreasing in

, and

for sufficiently large

.

Next, let . We claim that T is well defined. To show this, consider , , and . Since , it follows from the sample continuity of s that, for every and , there exists an open neighborhood of such that for every . Hence, implying that the function is upper semicontinuous at the arbitrarily chosen point , and hence on . Since an upper semicontinuous function defined on a compact set achieves its supremum, it follows that is well defined. The function is the pointwise supremum of a collection of nonnegative and nonincreasing functions, and hence is nonnegative and nonincreasing. Moreover, for every .

Let . The function is positive, continuous, strictly decreasing, and as . Choose . Then is positive, continuous, strictly decreasing, and . Furthermore, . Hence, , .

Next, to show that (ii) implies (iii), suppose (ii) holds and let

. Then it follows from (i) of Lemma 1 that

is Lyapunov stable in probability with respect to

. For every

, choosing

sufficiently close to

, it follows from the inequality

,

, that trajectories of (

4) starting sufficiently close to

are bounded, and hence, the positive limit set of (

4) is nonempty. Since

as

, it follows that the positive limit set is contained in

as

.

Now, since every point in

is Lyapunov stable in probability with respect to

, it follows from Proposition 2 that

as

, where

is Lyapunov stable in probability with respect to

. If

, then it follows using similar arguments as above that there exists a class

function

such that

for every

satisfying

and

. Hence,

Next, consider the case where

and let

be a class

function. In this case, note that

and hence, it follows using similar arguments as above that there exists a class

function

such that

Now, note that

is of class

(by [48], Lemma 4.2), and hence, (iii) follows immediately.

Finally, to show that (iii) implies (i), suppose (iii) holds and let

. Then it follows that for every

,

that is,

, where

and

is of class

(by [

48], Lemma 4.2). It now follows from (i) of Lemma 1 that

is Lyapunov stable in probability with respect to

. Since

was chosen arbitrarily, it follows that every equilibrium point is Lyapunov stable in probability with respect to

. Furthermore,

.

Choosing

sufficiently close to

, it follows from the inequality

that trajectories of (

4) are almost surely bounded as

, and hence, the positive limit set of (

4) is nonempty as

. Since every point in

is Lyapunov stable in probability with respect to

, it follows from Proposition 2 that

as

, where

is Lyapunov stable in probability with respect to

. Hence, by Definition 8, (

4) is stochastically semistable with respect to

. ☐

Next, we develop necessary and sufficient conditions for stochastic semistability. First, we present sufficient conditions for stochastic semistability. The following theorems generalize Theorems 3.1 and 3.2 of [37].

Theorem 3. Consider the nonlinear stochastic nonnegative dynamical system (4). Let be a relatively open neighborhood of and assume that there exists a two-times continuously differentiable function such thatIf every equilibrium point of (4) is Lyapunov stable in probability with respect to , then (4) is stochastically semistable with respect to . Moreover, if and as , then (4) is globally stochastically semistable with respect to . Proof. Since every equilibrium point of (

4) is Lyapunov stable in probability with respect to

by assumption, for every

, there exists a relatively open neighborhood

of

z such that

,

, is bounded and contained in

as

. The set

,

, is a relatively open neighborhood of

contained in

. Consider

so that there exists

such that

and

,

, as

. Since

is bounded and invariant with respect to the solution of (

4) as

, it follows that

is invariant with respect to the solution of (

4) as

. Furthermore, it follows from (

52) that

,

, and hence, since

is bounded it follows from Theorem 2 that

as

.

It is easy to see that

by assumption and

,

. Therefore,

as

and

, which implies that

. Finally, since every point in

is Lyapunov stable in probability with respect to

, it follows from Proposition 2 that

as

, where

is Lyapunov stable in probability with respect to

. Hence, by Definition 8, (

4) is semistable. For

global stochastic semistability with respect to

follows from identical arguments using the radially unbounded condition on

. ☐

Next, we present a slightly more general theorem for stochastic semistability wherein we do not assume that all points in are Lyapunov stable in probability with respect to but rather we assume that all points in are Lyapunov stable in probability with respect to for some continuous function .

Theorem 4. Consider the nonlinear stochastic nonnegative dynamical system (4) and let be a relatively open neighborhood of . Assume that there exist a two-times continuously differentiable function and a continuous function such thatIf every point in the set ≜

is Lyapunov stable in probability with respect to , then (4) is stochastically semistable with respect to . Moreover, if and as , then (4) is globally stochastically semistable with respect to . Proof. Since, by assumption, (

4) is Lyapunov stable in probability with respect to

for all

, there exists a relatively open neighborhood

of

z such that

,

, is bounded and contained in

as

. The set

is a relatively open neighborhood of

contained in

. Consider

so that there exists

such that

and

,

, as

. Since

is bounded it follows that

is invariant with respect to the solution of (

4) as

. Furthermore, it follows from (

53) that

,

, and hence, since

is bounded and invariant with respect to the solution of (

4) as

, it follows from Theorem 2 that

as

. Therefore,

as

and

, which implies that

.

Finally, since every point in

is Lyapunov stable in probability with respect to

, it follows from Proposition 2 that

as

, where

is Lyapunov stable in probability with respect to

. Hence, by definition, (

4) is semistable. For

global stochastic semistability with respect to

follows from identical arguments using the radially unbounded condition on

. ☐

Example 1. Consider the nonlinear stochastic nonnegative dynamical system on given by ([37]) where , , , are Lipschitz continuous and . Equations (54) and (55) represent the collective dynamics of two subsystems which interact by exchanging energy. The energy states of the subsystems are described by the scalar random variables and . The unity coefficients scaling , , , appearing in (54) and (55) represent the topology of the energy exchange between the subsystems. More specifically, given , , a coefficient of 1 denotes that subsystem j receives energy from subsystem i, and a coefficient of zero denotes that subsystems i and j are disconnected, and hence, cannot exchange energy. The connectivity between the subsystems can be represented by a graph having two nodes such that has a directed edge from node i to node j if and only if subsystem j can receive energy from subsystem i. Since the coefficients scaling , , , are constants, the graph topology is fixed. Furthermore, note that the directed graph is weakly connected since the underlying undirected graph is connected; that is, every subsystem receives energy from, or delivers energy to, at least one other subsystem.

Note that (54) and (55) can be cast in the form of (4) with where the stochastic term represents probabilistic variations in the energy transfer between the two subsystems. Furthermore, note that since where , it follows that , which implies that the total system energy is conserved. In this example, we use Theorem 3 to analyze the collective behavior of (54) and (55).

Specifically, we are interested in the energy equipartitioning behavior of the subsystems. For this purpose, we make the assumptions if and only if , , and for . The first assumption implies that if the energies in the connected subsystems i and j are equal, then energy exchange between the subsystems is not possible. This statement is reminiscent of the zeroth law of thermodynamics, which postulates that temperature equality is a necessary and sufficient condition for thermal equilibrium. The second assumption implies that energy flows from more energetic subsystems to less energetic subsystems and is reminiscent of the second law of thermodynamics, which states that heat (energy) must flow in the direction of lower temperatures. It is important to note here that due to the stochastic term capturing probabilistic variations in the energy transfer between the subsystems, the second assumption requires that the scaled net energy flow is bounded by the negative intensity of the diffusion coefficient given by .

To show that (54) and (55) is stochastically semistable with respect to , note that and consider the Lyapunov function candidate , where . Now, it follows that which implies that is Lyapunov stable in probability with respect to . Next, it is easy to see that when , and hence, , . Therefore, it follows from Theorem 3 that is stochastically semistable with respect to for all . Furthermore, note that , , implies Note that an identical assertion holds for the collective dynamics of n subsystems with a connected undirected energy graph topology. △
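The qualitative behavior described in Example 1 (conservation of total energy together with energy equipartition) can be illustrated numerically. The following is a minimal Euler-Maruyama sketch under stated assumptions, not the model of the example itself: the linear exchange law, the rate `k`, the noise intensity `gamma`, and the function name `simulate_two_subsystems` are all hypothetical choices made for illustration.

```python
import math
import random


def simulate_two_subsystems(x1, x2, k=1.0, gamma=0.2, dt=1e-3, steps=10_000, seed=0):
    """Euler-Maruyama sketch of two subsystems exchanging energy.

    Assumed (hypothetical) model, chosen only for illustration:
        dx1 = k (x2 - x1) dt + gamma (x2 - x1) dw
        dx2 = k (x1 - x2) dt + gamma (x1 - x2) dw
    Whatever one subsystem gains in a step, the other loses.
    """
    rng = random.Random(seed)
    sqrt_dt = math.sqrt(dt)
    for _ in range(steps):
        dw = rng.gauss(0.0, 1.0) * sqrt_dt  # Wiener increment over one step
        transfer = (k * dt + gamma * dw) * (x2 - x1)  # energy moved from 2 to 1
        x1 += transfer
        x2 -= transfer
    return x1, x2


x1_final, x2_final = simulate_two_subsystems(3.0, 1.0)
print(x1_final, x2_final, x1_final + x2_final)
```

Because the same increment is added to one state and subtracted from the other, the sum of the two energies is preserved up to rounding, while the energy difference contracts toward zero along the simulated sample path.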

Finally, we extend Theorem 3.3 of [

37] to provide a converse Lyapunov theorem for stochastic semistability. For this result, recall that

for every

. Also note that it follows from (

9) that

.

Theorem 5. Consider the nonlinear stochastic nonnegative dynamical system (4). Suppose (4) is stochastically semistable with a ρ-domain of semistability . Then there exist a continuous nonnegative function and a class function such that (i) , , (ii) , , and (iii) , .

Proof. Let

denote the set of all sample trajectories of (

4) for which

and

,

, and let

,

, denote the indicator function defined on the set

, that is,

Note that by definition

for all

. Define the function

by

and note that

is well defined since (

4) is stochastically semistable with respect to

. Clearly, (i) holds. Furthermore, since

,

, it follows that (ii) holds with

.

To show that

is continuous on

, define

by

, and denote

Note that

is open and contains an open neighborhood of

. Consider

and define

. Then it follows from stochastic semistability of (

4) that there exists

such that

. Consequently,

for all

, and hence, it follows that

is well defined. Since

is open, there exists a neighborhood

such that

. Hence,

is a neighborhood of

z such that

.

Next, choose

such that

and

. Then, for every

and

,

Therefore, for every

,

Hence,

Now, since

and

satisfy (

6) and (

7), it follows from continuous dependence of solutions

on system initial conditions ([

32], Theorem 7.3.1) and (

60) that

is continuous on

.

To show that

is continuous on

, consider

. Let

be a sequence in

that converges to

. Since

is Lyapunov stable in probability with respect to

, it follows that

is the unique solution to (

4) with

. By continuous dependence of solutions

on system initial conditions ([

32], Theorem 7.3.1),

as

,

.

Let

and note that it follows from (ii) of Proposition 3 that there exists

such that for every solution of (

4) in

there exists

such that

for all

. Next, note that there exists a positive integer

such that

for all

. Now, it follows from (

57) that

Next, it follows from ([

32], Theorem 7.3.1) that

converges to

uniformly on

. Hence,

which implies that there exists a positive integer

such that

for all

. Combining (

62) with the above result yields

for all

, which implies that

.

Finally, we show that

is negative along the solution of (

4) on

. Note that for every

and

such that

, it follows from the definition of

that

is reached at some time

such that

. Hence, it follows from the law of iterated expectation that

which implies that

and hence, (iii) holds. ☐

5. Conservation of Energy and the First Law of Thermodynamics: A Stochastic Perspective

In this section, we extend the thermodynamic model proposed in [

30] to include probabilistic variations in the instantaneous rate of energy dissipation as well as probabilistic variations in the energy transfer between the subsystems. Even though the treatment in this and the next two sections closely parallels that of [

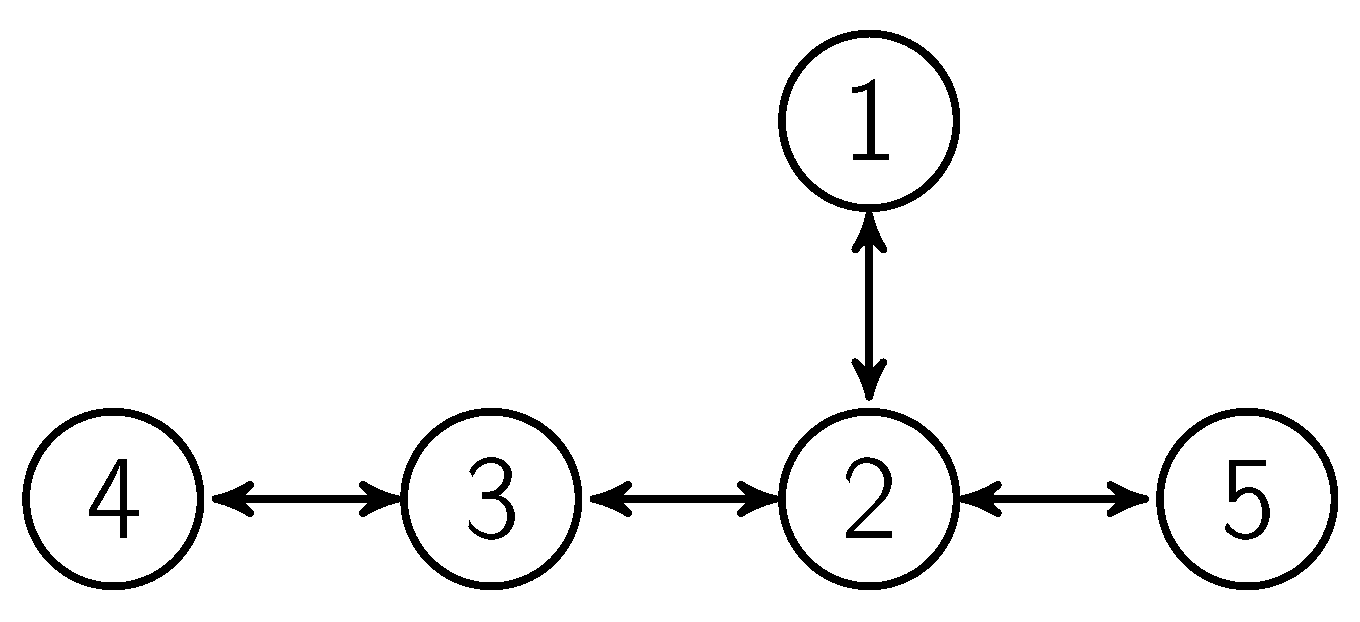

30] for deterministic thermodynamics, the thermodynamic models and proofs of our results are rendered more difficult due to the inclusion of stochastic disturbances. To formulate our state space stochastic thermodynamic model, we consider the large-scale stochastic dynamical system

shown in

Figure 1 involving energy exchange between

q interconnected subsystems and use the notation developed in [

30].

Specifically, denotes the energy (and hence a nonnegative quantity) of the i-th subsystem, denotes the external power (heat flux) supplied to (or extracted from) the i-th subsystem, , , denotes the instantaneous rate of energy (heat) flow from the j-th subsystem to the i-th subsystem, , , , denotes the instantaneous rate of energy (heat) received or delivered to the i-th subsystem from all other subsystems due to the stochastic disturbance , , denotes the instantaneous rate of energy (heat) dissipation from the i-th subsystem to the environment, and , denotes the instantaneous rate of energy (heat) dissipation from the i-th subsystem to the environment due to the stochastic disturbance . Here we assume that , , , , and , are locally Lipschitz continuous on and satisfy a linear growth condition, and , are bounded piecewise continuous functions of time.

An energy balance for the i-th subsystem yields

or, equivalently, in vector form,

where

,

and

are, respectively, a

-dimensional and

-dimensional independent standard Wiener process (i.e., Brownian motion) defined on a complete filtered probability space

,

is independent of

, and

,

Here, the stochastic disturbance

in (

66) captures probabilistic variations in the energy transfer rates between compartments and the stochastic disturbance

captures probabilistic variations in the instantaneous rate of energy dissipation.

Equivalently, (

65) can be rewritten as

or, in vector form,

where

, yielding a

differential energy balance equation that characterizes energy flow between subsystems of the large-scale stochastic dynamical system

. Here we assume that

satisfies sufficient regularity conditions such that (

68) has a unique solution forward in time. Specifically, we assume that the external power (heat flux)

supplied to the large-scale stochastic dynamical system

consists of measurable functions

adapted to the filtration

such that

,

, for all

,

is independent of

,

,

, and

, where

, and hence,

is non-anticipative. Furthermore, we assume that

takes values in a compact metrizable set. In this case, it follows from Theorem 2.2.4 of [

53] that there exists a path-wise unique solution to (

68) in

.

Equation (

66) or, equivalently, (

68) is a statement of the

first law for stochastic thermodynamics as applied to

isochoric transformations (i.e., constant subsystem volume transformations) for each of the subsystems

. To see this, let the total energy in the large-scale stochastic dynamical system

be given by

, where

and

, and let the net energy received by the large-scale dynamical system

over the time interval

be given by

where

, is the solution to (

68). Then, premultiplying (

66) by

and using the fact that

and

, it follows that

where

denotes the variation in the total energy of the large-scale stochastic dynamical system

over the time interval

.
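The cancellation of the subsystem-to-subsystem exchange terms in the total energy can be checked numerically. The sketch below is an illustration under stated assumptions rather than the model (66): the linear exchange law, the linear deterministic dissipation, the constant supply, and the names `simulate_energy_balance`, `k`, `diss`, and `gamma` are hypothetical. It tracks the total energy variation against the net energy supplied minus the energy dissipated.

```python
import math
import random


def simulate_energy_balance(E0, supply, k=0.5, diss=0.1, gamma=0.1,
                            dt=1e-3, steps=5_000, seed=1):
    """Euler-Maruyama sketch of a q-subsystem energy balance.

    Hypothetical coefficients: linear exchange k*(E_j - E_i) with pairwise
    exchange noise, linear dissipation diss*E_i, and constant supply.
    Returns final energies, total energy supplied, and total energy dissipated.
    """
    rng = random.Random(seed)
    E = list(E0)
    q = len(E)
    sqrt_dt = math.sqrt(dt)
    supplied = 0.0
    dissipated = 0.0
    for _ in range(steps):
        dE = [0.0] * q
        # Pairwise exchange: what subsystem i receives, subsystem j loses.
        for i in range(q):
            for j in range(i + 1, q):
                dw = rng.gauss(0.0, 1.0) * sqrt_dt
                flow = (k * dt + gamma * dw) * (E[j] - E[i])
                dE[i] += flow
                dE[j] -= flow
        # External supply and dissipation to the environment.
        for i in range(q):
            loss = diss * E[i] * dt
            dE[i] += supply[i] * dt - loss
            supplied += supply[i] * dt
            dissipated += loss
        E = [e + d for e, d in zip(E, dE)]
    return E, supplied, dissipated


E0 = [2.0, 1.0, 0.5]
E_final, supplied, dissipated = simulate_energy_balance(E0, supply=[0.2, 0.0, 0.1])
balance_error = (sum(E_final) - sum(E0)) - (supplied - dissipated)
print(balance_error)
```

In this sketch the exchange terms, including their noise, sum to zero over the subsystems, so the variation in total energy equals the net energy received up to floating-point rounding.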

For our large-scale stochastic dynamical system model , we assume that , , , , and , , whenever . In this case, , is essentially nonnegative. The above constraint implies that if the energy of the j-th subsystem of is zero, then this subsystem cannot supply any energy to its surroundings nor dissipate energy to the environment. Moreover, we assume that whenever , , , which implies that when the energy of the i-th subsystem is zero, then no energy can be extracted from this subsystem.

The following proposition is needed for the main results of this paper.

Proposition 4. Consider the large-scale stochastic dynamical system with differential energy balance equation given by (68). Suppose , , , , and , , whenever , , and whenever , , . Then the solution , , to (68) is nonnegative for all nonnegative initial conditions .

Proof. First note that , is essentially nonnegative, , , and , , whenever , . Next, since whenever , , , it follows that for all and whenever and for all and . This implies that for all nonnegative initial conditions , every sample trajectory of is directed towards the interior of the nonnegative orthant whenever , and hence, remains nonnegative almost surely for all . ☐

Next, premultiplying (

66) by

, using Proposition 4, and using the fact that

and

, it follows that

Now, for the large-scale stochastic dynamical system

, define the input

and the output

. Hence, it follows from (

71) that for any two

-stopping times

and

such that

almost surely,

Thus, the large-scale stochastic dynamical system

is

stochastically lossless [

54] with respect to the

energy supply rate and with the

energy storage function . In other words, the difference between the supplied system energy and the stored system energy is a martingale with respect to the differential energy balance system filtration.

The following lemma is required for our next result.

Lemma 2. Consider the large-scale stochastic dynamical system with differential energy balance Equation (68). Then, for every equilibrium state and every and , there exist , , and such that, for every with , there exists such that , , and , .

Proof. Note that with

, the state

is an equilibrium state of (

68). Let

and

, and define

Note that for every

,

,

, and

, and for every

,

,

, and

. Moreover, it follows from Lévy’s modulus of continuity theorem [

55] that for sufficiently small

,

and

, where

.

Next, let

and

be given and, for sufficiently small

, let

be such that

(The existence of such an

is guaranteed since

,

, and

as

.) Now, let

be such that

. With

and

it follows that

is a solution to (

68).

The result is now immediate by noting that

and

and hence,

which proves the result. ☐

It follows from Lemma 2 that the large-scale stochastic dynamical system

with the differential energy balance Equation (

68) is

stochastically reachable from and

stochastically controllable to the origin in

. Recall from [

54] that the large-scale stochastic dynamical system

with the differential energy balance Equation (

68) is stochastically reachable from the origin in

if, for all

and

, there exist a finite random variable

, called the

first hitting time, defined by

and a

-adapted square integrable input

defined on

such that the state

,

, can be driven from

to

and

, where

and the supremum is taken pointwise. Alternatively,

is stochastically controllable to the origin in

if, for all

,

, there exists a finite random variable

defined by

and a

-adapted square integrable input

defined on

such that the state

, can be driven from

to

and

with a pointwise supremum.

We let denote the set of measurable bounded -valued stochastic processes on the semi-infinite interval consisting of power inputs (heat fluxes) to the large-scale stochastic dynamical system such that for every the system energy state can be driven from to by . Furthermore, we let denote the set of measurable bounded -valued stochastic processes on the semi-infinite interval consisting of power inputs (heat fluxes) to the large-scale stochastic dynamical system such that the system energy state can be driven from , to by . Finally, let be an input space that is a subset of measurable bounded -valued stochastic processes on . The spaces , , and are assumed to be closed under the shift operator, that is, if (respectively, or ), then the function defined by is contained in (respectively, or ) for all .

The next result establishes the uniqueness of the internal energy function

, for our large-scale stochastic dynamical system

. For this result define the

available energy of the large-scale stochastic dynamical system

by

where

,

, is the solution to (

68) with

and admissible inputs

. The infimum in (

79) is taken over all

-measurable inputs

, all finite

-stopping times

, and all system sample paths with initial value

and terminal value left free. Furthermore, define the

required energy supply of the large-scale stochastic dynamical system

by

The infimum in (

80) is taken over all system sample paths starting from

and ending at

at time

, and all times

.

Note that the available energy is the maximum amount of stored energy (net heat) that can be extracted from the large-scale stochastic dynamical system at any finite stopping time , and the required energy supply is the minimum amount of energy (net heat) that can be delivered to the large-scale stochastic dynamical system such that, for all , .

Theorem 6. Consider the large-scale stochastic dynamical system with differential energy balance equation given by (68). Then is stochastically lossless with respect to the energy supply rate , where and , and with the unique energy storage function corresponding to the total energy of the system given by where , , is the solution to (68) with admissible input , , and . Furthermore,

Proof. Note that it follows from (

71) that

is stochastically lossless with respect to the energy supply rate

and with the energy storage function

. Since, by Lemma 2,

is reachable from and controllable to the origin in

, it follows from (

71), with

and

for some

and

, that

Alternatively, it follows from (

71), with

for some

and

, that

Thus, (

83) and (

84) imply that (

81) is satisfied and

Conversely, it follows from (

71) and the fact that

, that, for all

and

,

which implies that

Furthermore, it follows from the definition of

that

, since the infimum in (

79) is taken over the set of values containing the zero value (

).

Next, note that it follows from (

71), with

and

,

, for all

and

, that

Moreover, since the system

is reachable from the origin, it follows that for every

, there exists

and

such that

is finite, and hence,

. Finally, combining (

85), (

87), and (

88), it follows that (

82) holds. ☐

It follows from (

82) and the definitions of available energy

and the required energy supply

,

, that the large-scale stochastic dynamical system

can deliver to its surroundings all of its stored subsystem energies and can store all of the work done to all of its subsystems. This is in essence a statement of the first law of stochastic thermodynamics and places no limitation on the possibility of transforming heat into work or work into heat. In the case where

, it follows from (

71) and the fact that

, that the zero solution

of the large-scale stochastic dynamical system

with the differential energy balance Equation (

68) is Lyapunov stable in probability with respect to

with Lyapunov function

corresponding to the total energy in the system.

6. Entropy and the Second Law of Thermodynamics

As for the deterministic dynamical thermodynamic model presented in [

30], the nonlinear differential energy balance Equation (

68) can exhibit a full range of nonlinear behavior, including bifurcations, limit cycles, and even chaos. However, a thermodynamically consistent energy flow model should ensure that the evolution of the system energy is diffusive in character with convergent subsystem energies. As established in [

30], such a system model would guarantee the absence of the Poincaré recurrence phenomenon [

56]. To ensure a thermodynamically consistent energy flow model, we require the following axioms [

57]. For the statement of these axioms, we first recall the following graph-theoretic notions.

Definition 10 ([

58]).

A directed graph

associated with the connectivity matrix

has vertices

and an arc

from vertex i to vertex j, , if and only if . A graph

associated with the connectivity matrix is a directed graph for which the arc set

is symmetric, that is, . We say that is strongly connected

if for any ordered pair of vertices , , there exists a path

(i.e., a sequence of arcs) leading from i to j. Recall that the connectivity matrix

is irreducible, that is, there does not exist a permutation matrix such that

is cogredient to a lower-block triangular matrix, if and only if

is strongly connected (see Theorem 2.7 of [

58]). Let

, denote the net energy flow from the

j-th subsystem

to the

i-th subsystem

of the large-scale stochastic dynamical system

.
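The strong-connectivity notion recalled in Definition 10 (a path exists between every ordered pair of vertices) can be checked directly on a small graph. The following is a minimal sketch; the adjacency-list representation and the function name `is_strongly_connected` are illustrative assumptions and are not part of the cited reference.

```python
def is_strongly_connected(adjacency):
    """Return True if every vertex reaches every other along directed arcs.

    adjacency[i] is the list of vertices j with an arc from i to j.
    """
    n = len(adjacency)
    if n == 0:
        return True

    def reachable_from(start, graph):
        # Depth-first search collecting all vertices reachable from start.
        seen = {start}
        stack = [start]
        while stack:
            v = stack.pop()
            for w in graph[v]:
                if w not in seen:
                    seen.add(w)
                    stack.append(w)
        return seen

    # Build the reversed graph to test reachability *to* vertex 0.
    reverse = [[] for _ in range(n)]
    for i, neighbors in enumerate(adjacency):
        for j in neighbors:
            reverse[j].append(i)

    # Strongly connected iff vertex 0 reaches all vertices and all reach vertex 0.
    return (len(reachable_from(0, adjacency)) == n
            and len(reachable_from(0, reverse)) == n)


cycle = [[1], [2], [0]]   # 0 -> 1 -> 2 -> 0
chain = [[1], [2], []]    # 0 -> 1 -> 2, no way back
print(is_strongly_connected(cycle), is_strongly_connected(chain))
```

A directed cycle passes the check, whereas a one-way chain fails because no path leads back to its first vertex.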

Axiom (i): For the connectivity matrix

associated with the large-scale stochastic dynamical system

defined by

and

rank

, and for

,

,

if and only if

.

Axiom (ii): For

,

,

, and, for all

,

As discussed in [

30] for the deterministic thermodynamic problem, the fact that

if and only if

, implies that subsystems

and

of

are

connected; alternatively,

implies that

and

are

disconnected. Axiom (i) implies that if the energies in the connected subsystems

and

are equal, then energy exchange between these subsystems is not possible. This statement is consistent with the

zeroth law of thermodynamics, which postulates that temperature equality is a necessary and sufficient condition for thermal equilibrium. Furthermore, it follows from the fact that

and rank

that the connectivity matrix

is irreducible, which implies that for any pair of subsystems

and

,

, of

there exists a sequence of connectors (arcs) of

that connect

and

.

Axiom (ii) implies that energy flows from more energetic subsystems to less energetic subsystems and is consistent with the

second law of thermodynamics, which states that heat (energy) must flow in the direction of lower temperatures [

59]. Furthermore, note that

,

, which implies conservation of energy between lossless subsystems. With

and

, Axioms (i) and (ii) along with the fact that

,

, imply that at a given instant of time, energy can only be transported, stored, or dissipated but not created, and the maximum amount of energy that can be transported and/or dissipated from a subsystem cannot exceed the energy in the subsystem. Finally, it is important to note here that due to the stochastic disturbance term

capturing probabilistic variations in heat transfer between the subsystems, Axiom (ii) requires that the scaled net energy flow between the subsystems is bounded by the negative intensity of the system diffusion.

Next, we show that the classical Clausius equality and inequality for reversible and irreversible thermodynamics over cyclic motions are satisfied for our stochastic thermodynamically consistent energy flow model. For this result ∮ denotes a cyclic integral evaluated along an arbitrary closed path of (

68) in

; that is,

with

and

such that

.

Proposition 5. Consider the large-scale stochastic dynamical system with differential energy balance Equation (68), and assume that Axioms (i) and (ii) hold. Then, for all , , and such that , where , , , is the amount of net energy (heat) received by the i-th subsystem over the infinitesimal time interval , and , , is the solution to (68) with initial condition . Furthermore, if and only if there exists a continuous function such that , .

Proof. Since, by Proposition 4,

, and

,

, it follows from (

68), Itô’s lemma, and Axiom (ii) that, for all

,

which proves (

92).

To show (

93), note that it follows from (

94), Axiom (i), and Axiom (ii) that (

93) holds if and only if

,

, or, equivalently, there exists a continuous function

such that

. ☐

Inequality (

92) is a generalization of Clausius’ inequality for reversible and irreversible thermodynamics as applied to large-scale stochastic dynamical systems and restricts the manner in which the system dissipates (scaled) heat over cyclic motions. Note that the Clausius inequality (

92) for the stochastic thermodynamic model is stronger than the Clausius inequality for the deterministic model presented in [

30].

It follows from Axiom (i) and (

68) that for the

adiabatically isolated large-scale stochastic dynamical system

(that is,

and

), the energy states given by

, correspond to the equilibrium energy states of

. Thus, as in classical thermodynamics, we can define an equilibrium process as a process in which the trajectory of the large-scale stochastic dynamical system

moves along the equilibrium manifold

corresponding to the set of equilibria of the isolated [

60] system

. The power input that can generate such a trajectory can be given by

, where

is such that

. Our definition of an equilibrium transformation involves a continuous succession of intermediate states that differ by infinitesimals from equilibrium system states and thus can only connect initial and final states, which are states of equilibrium. This process need not be slowly varying, and hence, equilibrium and quasistatic processes are not synonymous in this paper. Alternatively, a

nonequilibrium process is a process that does not lie on the equilibrium manifold

. Hence, it follows from Axiom (i) that for an equilibrium process

,

, and thus, by Proposition 5, inequality (

92) is satisfied as an equality. Alternatively, for a nonequilibrium process it follows from Axioms (i) and (ii) that (

92) is satisfied as a strict inequality.

Next, we give a stochastic definition of entropy for the large-scale stochastic dynamical system that is consistent with the classical thermodynamic definition of entropy.

Definition 11. For the large-scale stochastic dynamical system with differential energy balance Equation (68), a function satisfying for all -stopping times and is called the entropy function of .

Note that it follows from Definition 11 that the difference between the system entropy production and the stored system entropy is a submartingale with respect to the differential energy balance filtration.

Next, we show that (

92) guarantees the existence of an entropy function for

. For this result define the

available entropy of the large-scale stochastic dynamical system

by

where

and

, and define the

required entropy supply of the large-scale stochastic dynamical system

by

where

. Note that the available entropy

is the minimum amount of scaled heat (entropy) that can be extracted from the large-scale stochastic dynamical system

in order to transfer it from an initial state

to

. Alternatively, the required entropy supply

is the maximum amount of scaled heat (entropy) that can be delivered to

to transfer it from the origin to a given subset in the state space containing the initial state

over a finite stopping time. For further details, see [

54].

Theorem 7. Consider the large-scale stochastic dynamical system with differential energy balance Equation (68), and assume that Axiom holds. Then there exists an entropy function for . Moreover, , , and , , are possible entropy functions for with . Finally, all entropy functions , , for satisfy

Proof. Since, by Lemma 2,

is stochastically controllable to and stochastically reachable from the origin in

, it follows from (

96) and (

97) that

, and

, respectively. Next, let

, and let

be such that

and

, where

. In this case, it follows from (

92) that, for all

,

Next, using the strong Markov property we have

and hence, (

99) implies

Now, taking the supremum on both sides of (

101) over all

and

yields

Next, taking the infimum on both sides of (

102) over all

and

, we obtain

, which implies that

. Hence, the functions

and

are well defined.

Next, it follows from the definition of

, the law of iterated expectation, and the strong Markov property that for every stopping time

and

such that

and

,

which implies that

, satisfies (

95). Thus,

, is a possible entropy function for

. Note that with

it follows from (

92) that the supremum in (

96) is taken over the set of negative semidefinite values with one of the values being zero for

. Thus,

.

Similarly, it follows from the definition of

that for every stopping time

and

such that

and

,

which implies that

, satisfies (

95). Thus,

, is a possible entropy function for

. Note that with

it follows from (

92) that the supremum in (

97) is taken over the set of negative semidefinite values with one of the values being zero for

. Thus,

.

Next, suppose there exists an entropy function

for

, and let

in (

95). Then it follows from (

95) that

for all

and

, which implies that

Since

is arbitrary, it follows that

.

Alternatively, let

in (

95). Then it follows from (

95) that

for all

and

. Hence,

which, since

is arbitrary, implies that

. Thus, all entropy functions for

satisfy (

98). ☐

It is important to note that inequality (

92) is equivalent to the existence of an entropy function for

. Sufficiency is simply a statement of Theorem 7, while necessity follows from (

95) with

. This definition of entropy leads to the second law of stochastic thermodynamics being viewed as an axiom in the context of stochastic (anti)cyclo-dissipative dynamical systems [

54].

The next result shows that all entropy functions for are continuous on .

Theorem 8. Consider the large-scale stochastic dynamical system with differential energy balance Equation (68), and let be an entropy function of . Then is continuous on .

Proof. Let

and

be such that

. Note that with

,

is an equilibrium point of the differential energy balance Equation (

68). Next, it follows from Lemma 2 that

is locally stochastically controllable, that is, for every

and

, the set of points that can be reached from and to

in time

T using admissible inputs

, satisfying

, contains a neighborhood of

.

Next, let and note that it follows from the continuity of , , , and that there exist and such that for every and , , , where and , denotes the solution to (68) with the initial condition . Furthermore, it follows from the local controllability of that for every , there exists a strictly increasing, continuous function such that , and for every such that , there exists and an input such that , , and . Hence, there exists such that for every such that , there exists and an input such that , and . In addition, it follows from Lemma 2 that is such that .

Next, since

,

, is continuous, it follows that there exists

such that

Hence, it follows that

Now, if

is an entropy function of

, then

or, equivalently,

If

, then combining (

110) and (

112) yields

Alternatively, if

, then (

113) can be derived by reversing the roles of

and

. Specifically, for

and

, (

113) becomes

Hence, since

is continuous and

is arbitrary, it follows that

is continuous on

. ☐

Next, as a direct consequence of Theorem 7, we show that all possible entropy functions of form a convex set, and hence, there exists a continuum of possible entropy functions for ranging from the required entropy supply to the available entropy .

Proposition 6. Consider the large-scale stochastic dynamical system with differential energy balance Equation (68), and assume that Axioms (i) and (ii) hold. Then is an entropy function for .

Proof. The result is a direct consequence of the reachability of

along with inequality (

95) by noting that if

and

satisfy (

95), then

satisfies (

95). ☐
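The convexity step in this proof can be written out explicitly. The following sketch assumes that (95) has the form of a conditional-expectation inequality whose supply term does not depend on the entropy function; the placeholder $\Phi(\tau_1,\tau_2)$ for that term and the weight $\alpha \in [0,1]$ are notational assumptions introduced here. If $\mathcal{S}_1$ and $\mathcal{S}_2$ both satisfy the inequality, then so does $\mathcal{S}_\alpha \triangleq \alpha \mathcal{S}_1 + (1-\alpha)\mathcal{S}_2$, by linearity of conditional expectation:

```latex
\begin{aligned}
\mathbb{E}\!\left[\mathcal{S}_\alpha(E(\tau_2)) \mid \mathcal{F}_{\tau_1}\right]
 &= \alpha\,\mathbb{E}\!\left[\mathcal{S}_1(E(\tau_2)) \mid \mathcal{F}_{\tau_1}\right]
  + (1-\alpha)\,\mathbb{E}\!\left[\mathcal{S}_2(E(\tau_2)) \mid \mathcal{F}_{\tau_1}\right] \\
 &\ge \alpha\,\mathcal{S}_1(E(\tau_1)) + (1-\alpha)\,\mathcal{S}_2(E(\tau_1))
  + \mathbb{E}\!\left[\Phi(\tau_1,\tau_2) \mid \mathcal{F}_{\tau_1}\right] \\
 &= \mathcal{S}_\alpha(E(\tau_1))
  + \mathbb{E}\!\left[\Phi(\tau_1,\tau_2) \mid \mathcal{F}_{\tau_1}\right].
\end{aligned}
```

The weights $\alpha$ and $1-\alpha$ are nonnegative and sum to one, which is what allows the common supply term to be recovered unchanged in the last line.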

It follows from Proposition 6 that Definition 11 does not provide enough information to define the entropy uniquely for nonequilibrium thermodynamic systems with differential energy balance Equation (

68). This difficulty has long been pointed out in [

61]. Two particular entropy functions for

can be computed a priori via the variational problems given by (

96) and (

97). For equilibrium thermodynamics, however, uniqueness is not an issue, as shown in the next proposition.

Proposition 7. Consider the large-scale stochastic dynamical system with differential energy balance Equation (68), and assume that Axioms (i) and (ii) hold. Then at every equilibrium state of the isolated system , the entropy , of is unique (modulo a constant of integration) and is given by where and denotes the vector natural logarithm given by .

Proof. It follows from Axiom (i) and Axiom (ii) that for an equilibrium process

,

, and

. Consider the entropy function

given by (

96), and let

for some equilibrium state

. Then it follows from (

68) that

Since the solution

, to (

68) is nonnegative for all nonnegative initial conditions, it follows from Axiom (ii) that the infimum in (

116) is taken over the set of nonnegative values. However, the zero value of the infimum is achieved on an equilibrium process for which

,

,

. Thus,

Similarly, consider the entropy function

given by (

97). Then, it follows from (

68) that, for

,

Now, it follows from Axioms (i) and (ii) that the zero value of the supremum in (

118) is achieved on an equilibrium process and thus

Finally, it follows from (

98) that (

115) holds. ☐
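To make the role of the vector natural logarithm concrete, the following sketch evaluates an entropy of logarithmic form on a few energy states. It assumes, purely for illustration, the form $\sum_i \log_e(c + E_i) - q \log_e c$ with a positive constant `c`; this specific expression, the constant `c`, and the function name `entropy` are assumptions of the sketch.

```python
import math


def entropy(E, c=1.0):
    """Assumed logarithmic entropy: sum_i log(c + E_i) - q*log(c), with c > 0."""
    return sum(math.log(c + e) for e in E) - len(E) * math.log(c)


equal = [2.0, 2.0, 2.0]      # equipartitioned state, total energy 6
unequal = [4.0, 1.5, 0.5]    # same total energy, unevenly distributed
print(entropy(equal), entropy(unequal))
```

Under this assumed form, concavity of the logarithm implies that, for a fixed total energy, the equipartitioned state yields the larger value, and the zero-energy state has zero entropy.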

The next proposition shows that if (95) holds as an equality for some transformation starting and ending at an equilibrium point of the isolated dynamical system $\mathcal{G}$, then this transformation must lie on the equilibrium manifold.

Proposition 8. Consider the large-scale stochastic dynamical system $\mathcal{G}$ with differential energy balance Equation (68), and assume that Axioms (i) and (ii) hold. Let denote an entropy of $\mathcal{G}$, and let denote the solution to (68) with and , where , . Then

if and only if there exists a continuous function such that , , and , .

Proof. Since

and are equilibrium states of the isolated dynamical system $\mathcal{G}$, it follows from Proposition 7 that

Furthermore, it follows from (68) that

Now, it follows from Axioms (i) and (ii) that (120) holds if and only if , , , or, equivalently, there exists a continuous function such that , , , and . ☐

Even though it follows from Proposition 6 that Definition 11 does not provide a unique continuous entropy function for nonequilibrium systems, the next theorem gives a unique, two-times continuously differentiable entropy function for $\mathcal{G}$ for equilibrium and nonequilibrium processes. This result answers the long-standing question of how the entropy of a nonequilibrium state of a dynamical process should be defined [61,62], and establishes its global existence and uniqueness.

Theorem 9. Consider the large-scale stochastic dynamical system $\mathcal{G}$ with differential energy balance Equation (68), and assume that Axioms (i) and (ii) hold. Then the function $\mathcal{S}$ given by

$$\mathcal{S}(E) = \mathbf{e}^{\mathrm{T}}\log_e(c\mathbf{e} + E) - q\log_e c, \tag{123}$$

where $c > 0$, is a unique (modulo a constant of integration), two-times continuously differentiable entropy function of $\mathcal{G}$. Furthermore, for , , where , , denotes the solution to (68) and , (123) satisfies

for every and .

Proof. Since, by Proposition 1, , and , , it follows that

Furthermore, in the case where , it follows from Axiom (i), Axiom (ii), and (125) that (124) holds.

To show that (123) is a unique, two-times continuously differentiable entropy function of $\mathcal{G}$, let be a two-times continuously differentiable entropy function of $\mathcal{G}$ so that satisfies (95) or, equivalently,

where and , , denotes the solution to the differential energy balance Equation (68), and denotes the infinitesimal generator of along the solution . Hence, it follows from (126) that

which implies that there exist continuous functions and such that

Now, equating coefficients of equal powers (of S and D), it follows that , , , and

Hence, , and

Thus, (123) is a unique, two-times continuously differentiable entropy function for $\mathcal{G}$. ☐

Note that it follows from Axiom (i), Axiom (ii), and the last equality in (125) that the entropy function given by (123) satisfies (95) as an equality for an equilibrium process and as a strict inequality for a nonequilibrium process. For any entropy function of $\mathcal{G}$, it follows from Proposition 8 that if (95) holds as an equality for some transformation starting and ending at equilibrium points of the isolated system $\mathcal{G}$, then this transformation must lie on the equilibrium manifold. However, (95) may hold as an equality for nonequilibrium processes starting and ending at nonequilibrium states.

The entropy expression given by (123) is identical in form to the Boltzmann entropy for statistical thermodynamics. Because the entropy given by (123) is indeterminate to the extent of an additive constant, we can set the constant of integration $q\log_e c$ to zero by taking $c = 1$. Since $\mathcal{S}(E)$ given by (123) achieves a maximum when all the subsystem energies $E_i$, $i = 1, \ldots, q$, are equal, the entropy of $\mathcal{G}$ can be thought of as a measure of the tendency of a system to lose the ability to do useful work, lose order, and settle to a more homogeneous state. For further details see [30].
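The claim that the entropy is largest when the subsystem energies are equal can be checked numerically. The sketch below is illustrative only: it assumes, as a reading of (123) rather than a quotation of it, that the entropy has the Boltzmann-like form $\sum_i \log_e(c + E_i) - q\log_e c$ for $q$ subsystems, and it compares the equipartitioned state with randomly drawn energy distributions that have the same total energy. The function names, the number of subsystems, and the total energy are hypothetical choices made for the example.

```python
import numpy as np


def entropy(E, c=1.0):
    """Assumed form of (123): sum_i log(c + E_i) - q*log(c), with q = len(E)."""
    E = np.asarray(E, dtype=float)
    return float(np.sum(np.log(c + E)) - E.size * np.log(c))


def random_energy_splits(total, q, n, rng):
    """Draw n nonnegative energy vectors of length q, each summing to `total`."""
    # Dirichlet samples lie on the unit simplex; scaling fixes the total energy.
    return total * rng.dirichlet(np.ones(q), size=n)


rng = np.random.default_rng(0)
q, total, c = 4, 10.0, 1.0  # illustrative values, not taken from the paper

# Equipartitioned state: every subsystem holds the same energy.
equipartitioned = np.full(q, total / q)

# Largest entropy found among random states with the same total energy.
samples = random_energy_splits(total, q, 1000, rng)
best_sample = max(entropy(E, c) for E in samples)

print("entropy at equipartition:", entropy(equipartitioned, c))
print("largest sampled entropy: ", best_sample)
print("equipartition is maximal:", entropy(equipartitioned, c) >= best_sample)
```

Because the logarithm is strictly concave, Jensen's inequality guarantees that no energy distribution with the same total energy exceeds the equipartitioned value, so the final comparison holds for any seed. The random search only illustrates that fact and does not prove it.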

Recalling that , is the infinitesimal amount of the net heat received or dissipated by the i-th subsystem of $\mathcal{G}$ over the infinitesimal time interval $\mathrm{d}t$, it follows from (95) that

Inequality (131) is analogous to the classical thermodynamic inequality for the variation of entropy during an infinitesimal irreversible transformation with the shifted subsystem energies playing the role of the i-th subsystem thermodynamic (absolute) temperatures. Specifically, note that since , where denotes the unique continuously differentiable i-th subsystem entropy, it follows that , defines the reciprocal of the subsystem thermodynamic temperatures. That is, and , . Hence, in our formulation, temperature is a function derived from entropy and does not involve the primitive subjective notions of hotness and coldness.
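As a worked illustration of how a temperature can be derived from an entropy, suppose (an assumption suggested by the "shifted subsystem energies" above, not a restatement of the paper's equations) that the i-th subsystem entropy is $\mathcal{S}_i(E_i) = \log_e(c + E_i) - \log_e c$ with $c > 0$. The symbol $T_i$ for the i-th subsystem temperature is introduced here for the example.

```latex
% Sketch under the assumed subsystem entropy S_i(E_i) = log_e(c + E_i) - log_e c
\begin{align*}
\frac{\partial \mathcal{S}_i(E_i)}{\partial E_i} &= \frac{1}{c + E_i},
&
T_i &\triangleq \left(\frac{\partial \mathcal{S}_i(E_i)}{\partial E_i}\right)^{-1}
     = c + E_i > 0, \qquad E_i \ge 0 .
\end{align*}
```

Under this assumption the temperature is the subsystem energy shifted by $c$, it is strictly positive for every nonnegative energy, and equal subsystem energies correspond to equal subsystem temperatures.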

It is important to note that in this paper we view subsystem temperatures as synonymous with subsystem energies. Even though this does not limit the generality of our theory from a mathematical perspective, it can be physically limiting since it does not allow for the consideration of two subsystems of $\mathcal{G}$ having the same stored energy with one of the subsystems being at a higher temperature (i.e., hotter) than the other. This, however, can be easily addressed by assigning different specific heats (i.e., thermal capacities) for each of the compartments of the large-scale system $\mathcal{G}$ as shown in [30].

7. Stochastic Semistability and Energy Equipartition

For the (adiabatically) isolated large-scale stochastic dynamical system $\mathcal{G}$, (95) yields the fundamental inequality

Inequality (133) implies that, for any dynamical change in an adiabatically isolated large-scale stochastic dynamical system $\mathcal{G}$, the entropy of the final state can never be less than the entropy of the initial state. It generalizes Clausius’ version of the entropy principle, which states that for every irreversible (nicht umkehrbar) process in an adiabatically isolated system beginning and ending at an equilibrium state, the entropy of the final state is greater than or equal to the entropy of the initial state. Inequality (133) is often identified with the second law of thermodynamics for stochastic systems and gives a statement about entropy increase. It is important to stress that this result holds for an adiabatically isolated dynamical system. It is, however, possible with power (heat flux) supplied from an external system to reduce the entropy of the dynamical system $\mathcal{G}$. The entropy of both systems taken together, however, cannot decrease.

As for the deterministic thermodynamic problem [30], this observation implies that when the isolated large-scale dynamical system $\mathcal{G}$ with thermodynamically consistent energy flow characteristics (i.e., Axioms (i) and (ii) hold) is at a state of maximum entropy consistent with its energy, it cannot be subject to any further dynamical change since any such change would result in a decrease of entropy. This of course implies that the state of maximum entropy is the stable state of an isolated system, and this equilibrium state has to be stochastically semistable. The following theorem generalizes Theorem 3.9 of [30] to the stochastic setting.

Theorem 10. Consider the large-scale stochastic dynamical system $\mathcal{G}$ with differential energy balance Equation (68) with , , and , and assume that Axioms (i) and (ii) hold. Then, for every , is a stochastically semistable equilibrium state of (68). Furthermore, as and is a semistable equilibrium state. Finally, if for some , , , and if and only if [63], then the zero solution to (68) is a globally asymptotically stable in probability equilibrium state of (68).

Proof. It follows from Axioms (i) and (ii) that , is an equilibrium state of (68). To show Lyapunov stability of the equilibrium state , consider as a Lyapunov function candidate. Note that for , ,

Since Axiom (ii) holds for all , we have

Now, since , , , and , it follows from (135) that

which establishes Lyapunov stability in probability of the equilibrium state .

To show that

is stochastically semistable, let