1. Introduction

In recent decades, with the development of the Internet and mobile computing platforms, huge numbers of digital images and videos are produced every day, leading to many large visual databases. How to search these large databases for results similar to a query image with high search accuracy, high efficiency, and low memory usage is the main challenge in the image retrieval research field.

To search for images in a large database and obtain candidates of the same object or location, most of which include occlusions and clutter, the first issue is how to represent the images, which leads to the image feature problem. Image features depict the information contained in the image; an ideal image feature should be highly robust and distinctive. This means that the feature should be as stable as possible when the visual content is generated from the same object or scene. In contrast, different visual content should produce distinguishable features, regardless of how similar their appearances might be. Under this constraint, local invariant features, such as SIFT (Scale-Invariant Feature Transform) [1], SURF (Speeded Up Robust Features) [2], ORB (Oriented FAST and Rotated BRIEF) [3], and FREAK (Fast Retina Keypoint) [4], which are distinctive and robust under many visual transformations, are widely adopted in applications such as object recognition [5] and location recognition [6].

There are three constraints to be considered in large-scale image retrieval applications: search accuracy, efficiency, and memory usage [7]. If local invariant features are the only image representation, then because a single image can contain a huge number of local features (more than 1000), cross-matching these features between the query and the database can lead to long computation times. As a result, local features alone may not provide an effective representation for large-scale image retrieval. A reasonable solution is to build a global representation based on local features. Bag of Words (BoW) is the most widely adopted global representation for this purpose [8]. BoW builds a high-dimensional sparse histogram as a global feature for an image. There are three reasons for the success of BoW representations [9]: they are based on local invariant features, they can be compared with standard distances, and they can rely on an inverted list to boost retrieval efficiency. Nevertheless, BoW has some drawbacks [10]. One of the most critical is the tradeoff between quantization error and search accuracy. In a quantization-based method, high search accuracy relies on a huge vocabulary to reduce the quantization error and improve the distinctiveness of the global features. However, this might result in high-dimensional sparse vectors. Although the inverted index mechanism [8] and hierarchical clustering methods, such as the vocabulary tree [5], can improve memory usage and indexing efficiency, they do not effectively reduce the encoding cost. More recently, MDPV (Metric Distance Permutation Vocabulary) uses permutations of metric distances to create compact visual words, making vocabulary construction efficient in time and space [11]. Moreover, BoW adopts a simple counting method for each cluster to build the final representation. It fails to capture the finer details of each cluster, and it loses the distinctiveness of the original local features after the clustering step. Therefore, geometric verification is commonly applied as a post-processing step after the BoW search to further improve the search accuracy [6].
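The counting step that BoW relies on can be sketched in a few lines. This is a minimal illustration only, assuming a tiny hand-written codebook and 2-D toy descriptors (real systems use 128-D SIFT descriptors and vocabularies with many thousands of words); the function names `nearest_word` and `bow_histogram` are hypothetical.

```python
def nearest_word(x, codebook):
    """Index of the centroid with the smallest squared Euclidean distance to x."""
    def sq_dist(c):
        return sum((a - b) ** 2 for a, b in zip(x, c))
    return min(range(len(codebook)), key=lambda i: sq_dist(codebook[i]))


def bow_histogram(descriptors, codebook):
    """Count how many descriptors are quantized to each visual word."""
    counts = [0] * len(codebook)
    for x in descriptors:
        counts[nearest_word(x, codebook)] += 1
    return counts


# Hypothetical toy data: two visual words and two different descriptor sets.
codebook = [[0.0, 0.0], [10.0, 10.0]]
image_a = [[1.0, 0.0], [0.0, 2.0], [9.0, 10.0]]
image_b = [[0.5, 0.5], [3.0, 3.0], [10.0, 11.0]]
print(bow_histogram(image_a, codebook), bow_histogram(image_b, codebook))
```

Both toy images produce the same histogram even though their descriptors differ, which is the loss of per-cluster detail described above.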

There are other global vector generation schemes that share a similar workflow with BoW, such as vector quantization [8], sparse coding [12], soft assignment [13], and locality-constrained linear coding [14]. Based on sets of local descriptors, a codebook (or dictionary) is trained from a training set. With this dictionary, the set of local features of each image is encoded into new vectors and finally aggregated into a global vector. Some studies have shown that aggregated vector-based encoding methods provide excellent performance in visual recognition [7,8,12,14,15]. These image representations are also produced from local features, yet by relying on a small codebook, they use an alternative aggregation stage to replace the BoW histogram. Their main merit is that they can be reduced to very compact vectors by dimension reduction while preserving high search accuracy. Moreover, small codebooks greatly reduce encoding time and make it possible to embed codebooks into mobile devices to generate global representations.

Among these aggregated vector encoding methods, VLAD (Vector of Locally Aggregated Descriptors) [7] is an efficient encoding method. First, a training set is employed to generate a codebook by the K-means algorithm. Each local feature is assigned to its closest visual word. Unlike BoW, which simply counts the features assigned to each word, VLAD accumulates the residual vectors of all local features assigned to the same visual word. The final VLAD vector is generated by concatenating the accumulated residual vectors of the whole codebook. VLAD can be efficiently computed, and its effectiveness has been demonstrated in several tasks, such as instance retrieval [16], scene recognition [17], and action recognition [3].
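The encoding steps in the preceding paragraph can be sketched as follows. This is a minimal illustration under stated assumptions: the codebook is given rather than trained with K-means, the descriptors are 2-D toy values instead of SIFT, no normalization is applied, and the function names are hypothetical.

```python
def nearest_word(x, codebook):
    """Index of the centroid with the smallest squared Euclidean distance to x."""
    def sq_dist(c):
        return sum((a - b) ** 2 for a, b in zip(x, c))
    return min(range(len(codebook)), key=lambda i: sq_dist(codebook[i]))


def vlad(descriptors, codebook):
    """Accumulate residuals per visual word, then concatenate (K * D values)."""
    k, d = len(codebook), len(codebook[0])
    acc = [[0.0] * d for _ in range(k)]
    for x in descriptors:
        i = nearest_word(x, codebook)
        for j in range(d):
            # Residual: descriptor minus its assigned centroid.
            acc[i][j] += x[j] - codebook[i][j]
    return [value for word in acc for value in word]


# Hypothetical toy data: K = 2 visual words, D = 2 dimensions.
codebook = [[0.0, 0.0], [10.0, 10.0]]
descriptors = [[1.0, 0.0], [0.0, 2.0], [9.0, 10.0]]
print(vlad(descriptors, codebook))
```

With these values, the first word accumulates (1, 2) and the second (-1, 0), so the output has K × D = 4 entries, compared with the K = 2 counts a BoW histogram would hold.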

However, there are two crucial issues to be tackled:

- (1)

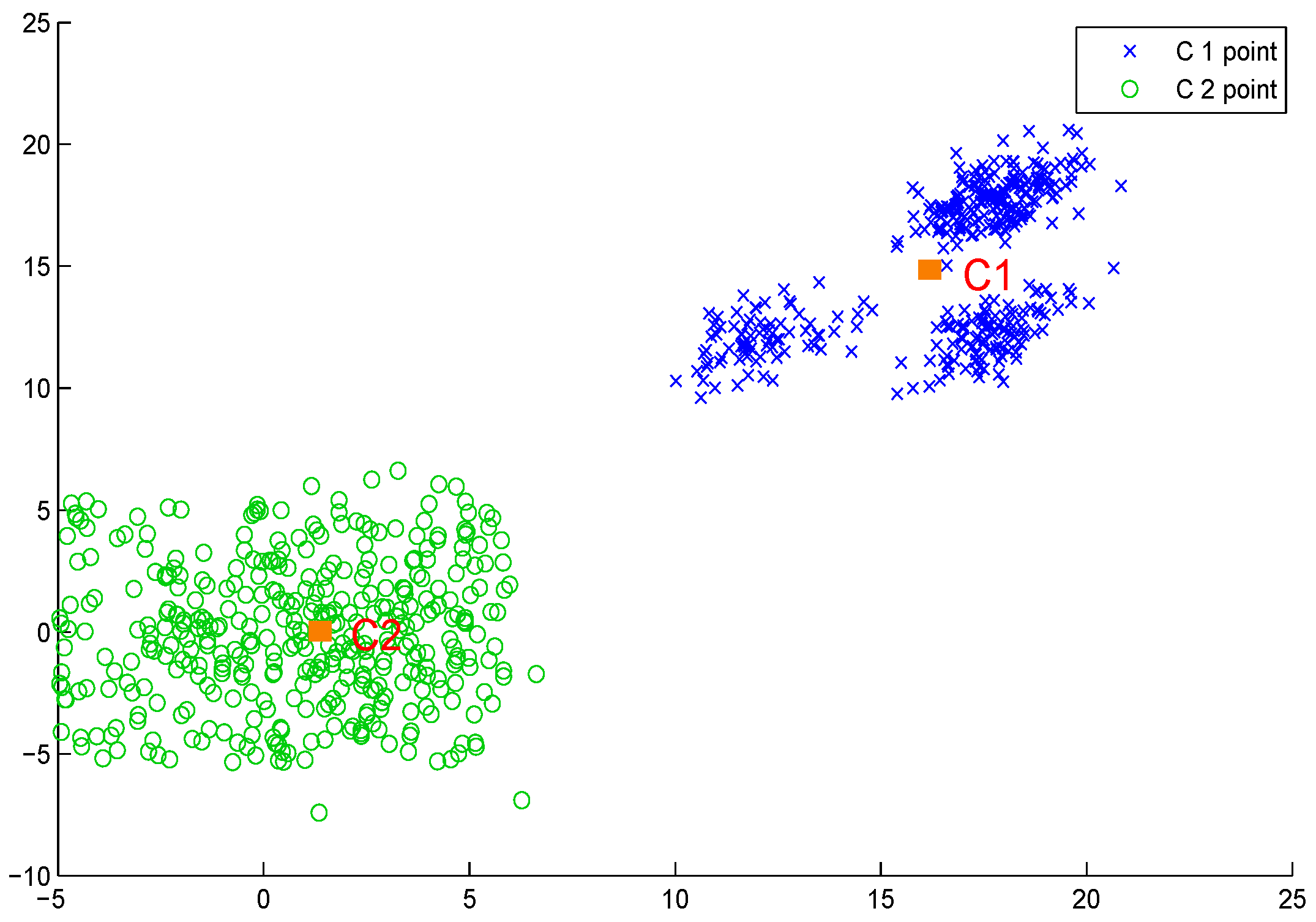

VLAD converts the feature description from a local image patch to a cluster. However, residuals can provide only partial cluster distribution information. As shown in

Figure 1, the two clusters share identical residual vectors, whereas it can be clearly found that clusters’ dispersion degrees differ significantly. More statistical information must be introduced to provide a more discriminatory representation.

- (2) In large-scale image database retrieval, search time and memory consumption must be considered. An obvious advantage of aggregation-based image representations, such as VLAD or the Fisher Vector, is that they use only a small vocabulary yet achieve a large performance improvement. Furthermore, for web-scale search applications, a small vocabulary is advantageous in terms of search time and memory usage. However, a small vocabulary can introduce a large quantization error during the encoding step and reduce the distinctiveness of the final VLAD.

In this paper, we aim to boost the aggregated vector with more detailed distribution information for every cluster. To this end, we use distribution entropy as the main tool. In the information processing field, entropy describes the degree of dispersion of a distribution. As shown in Figure 1, we adopt distribution entropy as a complementary clue to the original residual vector to build a more thorough descriptor of each cluster. In this manner, even for clusters sharing similar residual vectors, such as C1 and C2 in Figure 1, the entropy part can still differentiate them.

We initially focus on employing distribution entropy inside each single cluster to boost the original VLAD. The distribution entropy vector is generated in each cluster. We then investigate effective fusion mechanisms to combine the entropy vector and the residual vector. We propose compact fusion and extended fusion, which fuse these two vectors in each cluster or in the full representation, respectively. We evaluate these two fusion patterns in experiments. The results indicate that extended fusion, which generates a double-length representation, consistently attains a better mAP, whereas compact fusion, which builds a vector of the same size as the original VLAD, provides lower memory cost and a moderate performance improvement.

Subsequently, we aim to further improve the performance of the distribution entropy boosted vector. Although there are many add-ons to improve the original VLAD, to the best of our knowledge, there is no method that adopts distribution entropy as a complementary clue. However, entropy boosted VLAD can be easily used in those improved VLAD algorithms. We apply these improvements to the entropy-boosted VLAD vector and evaluate their performance. Finally, we choose appropriate add-ons to improve the performance of the entropy boosted VLAD.

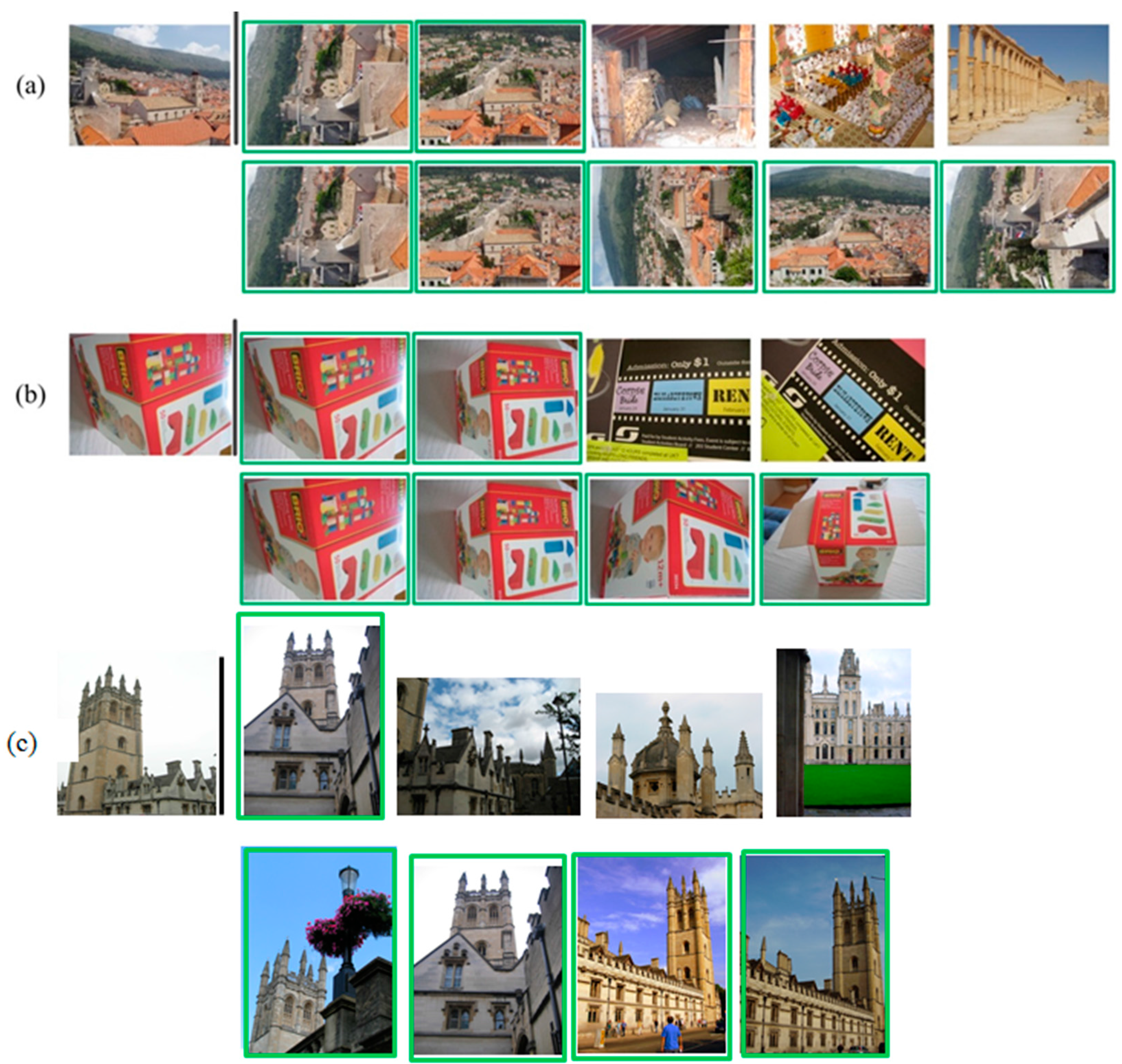

An example of image retrieval using the distribution entropy boosted mechanism is shown in Figure 2.

Our main contributions are as follows:

- (1) We analyze how the descriptor distribution entropy affects the original VLAD descriptor. To further improve the search accuracy, we propose a novel normalization stage, following the power law, that enhances the distinctiveness of the distribution entropy representation; we call it difference normalization.

- (2) To fuse the distribution entropy and the original VLAD vector, we investigate two fusion mechanisms. One is concatenation, which produces a vector twice as long as the original VLAD. The other is a weighted combination, which generates a vector of the same length as the original VLAD.

- (3) We survey the state-of-the-art improved VLAD algorithms and evaluate numerous existing studies and new extensions. We compare our proposed method to these algorithms and conclude that the distribution entropy boosted VLAD obtains performance competitive with the state of the art on several challenging datasets.

The rest of this paper is organized as follows. We first review related work on global representation in large-scale image retrieval in Section 2. We then introduce how to build the distribution entropy boosted VLAD representation and discuss the motivations in Section 3. To validate the effectiveness of our proposed method, detailed experiments and main results are provided in Section 4. Finally, we summarize the paper with concluding remarks in Section 5.

3. Proposed Method

In this section, we will discuss our scheme and our motivations. After the entropy boosted VLAD representation is generated, we further discuss the normalization effect and fusion of the residual vector and entropy vector.

3.1. Distribution Entropy Boosted VLAD

From a conventional point of view, entropy is a basic thermodynamic concept that measures the number of specific realizations. Shannon redefined the entropy concept as a measure of the unpredictability of information content [28], which can be described as:

$$H = -\sum_{i} p_i \log p_i$$

where $p_i$ is the probability of the system being in each possible state $i$. If entropy is used to describe a data distribution, the more dispersed the distribution is, the greater the entropy, and vice versa.
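As a small worked illustration of this relationship, consider Shannon entropy $H = -\sum_i p_i \log p_i$ for two distributions over four states. The four-state system and the base-2 logarithm are assumptions chosen to keep the arithmetic simple, and the usual convention $0 \log 0 = 0$ is applied.

```latex
% Concentrated: all probability mass in a single state
p = (1, 0, 0, 0)
  \;\Rightarrow\; H = -1 \cdot \log_2 1 = 0

% Dispersed: probability mass spread evenly over four states
p = \left(\tfrac{1}{4}, \tfrac{1}{4}, \tfrac{1}{4}, \tfrac{1}{4}\right)
  \;\Rightarrow\; H = -4 \cdot \tfrac{1}{4} \log_2 \tfrac{1}{4} = 2
```

The evenly spread distribution has the larger entropy, and the fully concentrated one has zero entropy.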

VLAD accumulates residuals to describe each cluster’s distribution. Residuals that provide the summarized distance to the cluster center would yield only a one-dimensional distribution description. It is not rare for two clusters with different degrees of dispersion to share the same residual, as shown in Figure 1. The reason might be that VLAD sums all residuals to the cluster center, and if some of the descriptors are distributed symmetrically, their residuals cancel each other, which limits the distinctiveness of the residual vector. Therefore, in this paper, we investigate adding distribution entropy as a complementary clue to residuals to build improved VLAD descriptors.
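The cancellation effect can be reproduced with a toy example. The sketch below is an illustration only: the two point sets are hypothetical 2-D values placed symmetrically around one center, and `mean_distance` is simply a stand-in dispersion measure for this demonstration, not the entropy feature proposed in this section.

```python
import math


def residual_sum(points, center):
    """Sum of (point - center) over all points, one value per dimension."""
    return [sum(p[j] - center[j] for p in points) for j in range(len(center))]


def mean_distance(points, center):
    """Average Euclidean distance of the points to the center."""
    total = 0.0
    for p in points:
        total += math.sqrt(sum((a - b) ** 2 for a, b in zip(p, center)))
    return total / len(points)


center = [0.0, 0.0]
# Hypothetical clusters: same symmetry, very different spread.
tight = [[-1.0, 0.0], [1.0, 0.0], [0.0, -1.0], [0.0, 1.0]]
spread = [[-5.0, 0.0], [5.0, 0.0], [0.0, -5.0], [0.0, 5.0]]

print(residual_sum(tight, center), residual_sum(spread, center))
print(mean_distance(tight, center), mean_distance(spread, center))
```

Both clusters yield the same accumulated residual, a zero vector, while their spreads differ by a factor of five, so the residual alone cannot tell them apart.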

After the quantization stage in the original VLAD algorithm, every SIFT descriptor is assigned to a cluster center (visual word) $c_i$. Here, we introduce the distribution entropy in each cluster as follows.

First, a set of SIFT descriptors $X_i$ is assigned to $c_i$, and the distribution entropy is built on these descriptors.

Then, a distribution histogram is built on the $j$-th dimension of $X_i$ as

$$h_{i,j} = \left(h_{i,j}^{1}, h_{i,j}^{2}, \ldots, h_{i,j}^{B}\right)$$

where $B$ denotes the number of bins, and the histogram uses equal-width intervals. In the experiments, we set $B$ equal to 150.

The probability density can be further computed as:

$$p_{i,j}^{b} = \frac{h_{i,j}^{b}}{\sum_{b'=1}^{B} h_{i,j}^{b'}}$$

where $b = 1, \ldots, B$.

Finally, we obtain the distribution entropy on the $j$-th dimension of cluster $c_i$ as

$$e_{i,j} = -\sum_{b=1}^{B} p_{i,j}^{b} \log p_{i,j}^{b}$$

The above distribution entropy gives the dispersive degree of the SIFT descriptor located inside each cluster. Large entropy means that the distribution of the descriptor is dispersed; otherwise, the distribution is concentrated. Because entropy is a statistical feature of data distribution, there might be some clusters with similar entropy degrees. Therefore, adopting entropy as a separate distribution feature of every cluster might lose some distinctiveness. This is why we leverage distribution entropy as a type of complementary feature to residuals to give a comprehensive distribution description of every cluster.

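By concatenating the distribution entropy of all clusters, the entropy features can be represented as $e = [e_1, e_2, \ldots, e_K]$, where $e_i$ collects the per-dimension entropies of the $i$-th cluster.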

3.2. Normalization

When we extend VLAD with distribution entropy, the first issue to address is how to fuse the two types of vectors. As discussed above, burstiness in the accumulated residual vector should be reduced by an appropriate normalization operation, such as residual normalization [22], intra-normalization [16], or power normalization [7]. For distribution entropy, most values lie in the range [0, 6]. Zero denotes that all SIFT descriptors are located in the same bin within a cluster, so the degree of aggregation is high. It is also necessary to note that, as a statistical feature, many entropies are very close to one another. If we perform L2 normalization [7] directly on the original entropy vector, their differences will be nearly lost after normalization. Therefore, we need an appropriate way to enhance the distinctiveness of entropy. Inspired by the use of power normalization [7] to alleviate burstiness, we use a reverse method, named difference normalization, to improve the discrimination of distribution entropy. Difference normalization first magnifies the differences among entropies with a simple exponential function; then, power normalization [7] and L2 normalization [7] are applied sequentially. In the experiments, we set the normalization parameter equal to 0.1.
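The order of the three stages can be sketched as below. This is a hedged sketch only: the exact exponential function and the role of the 0.1 parameter are not recoverable from the text here, so the plain `math.exp` magnifier and the use of `alpha` as the power-normalization exponent are placeholders, and the function name is hypothetical. The sketch shows the sequence of operations, not the tuned behavior.

```python
import math


def difference_normalize(entropy, alpha=0.1, magnify=math.exp):
    """Magnify, then power-normalize, then L2-normalize an entropy vector.

    `magnify` and `alpha` are placeholders: the exact exponential function
    and the meaning of the 0.1 setting are assumptions in this sketch.
    """
    # Stage 1: exponential magnification (assumed form).
    magnified = [magnify(e) for e in entropy]
    # Stage 2: signed power normalization, sign(x) * |x| ** alpha.
    powered = [math.copysign(abs(m) ** alpha, m) for m in magnified]
    # Stage 3: L2 normalization to unit length.
    norm = math.sqrt(sum(p * p for p in powered))
    return [p / norm for p in powered] if norm > 0 else powered


# Hypothetical entropy values inside the [0, 6] range mentioned above.
example = difference_normalize([0.0, 3.0, 6.0])
print(example)
```

Passing a different callable as `magnify` allows other exponential forms to be tried without changing the remaining stages.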

3.3. Fusion

We use the distribution entropy as a type of complementary clue to the original VLAD.

From the distribution entropy computation, an entropy vector with the same dimensionality as the residual vector is generated alongside it. To fuse these two types of vectors, we investigated two fusion mechanisms: compact fusion and extended fusion.

The simplest way to combine two vectors is to concatenate them. In this paper, we call this extended fusion, in which the residual vector and the entropy vector are combined after both have been fully generated. However, concatenation produces a vector of length 2 × K × D, twice that of the original VLAD vector. We name this vector the extended entropy boosted VLAD (EEVLAD).
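Extended fusion amounts to a concatenation followed by a final normalization. The sketch below is a minimal illustration that assumes both input vectors have already been normalized as described in the previous subsection; the function names and the toy values are hypothetical.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit Euclidean length (zero vectors are unchanged)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)


def extended_fusion(residual, entropy):
    """Concatenate the residual and entropy vectors, then L2-normalize."""
    return l2_normalize(list(residual) + list(entropy))


# Hypothetical K * D = 2 vectors; the fused vector has 2 * K * D = 4 entries.
fused = extended_fusion([3.0, 0.0], [0.0, 4.0])
print(len(fused), fused)
```

The doubled length is the memory cost of this variant, which is what compact fusion avoids.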

We introduce another fusion method, named compact fusion. Specifically, compact fusion occurs during the processing of each visual word. The accumulated residual $v_i$ and the distribution entropy $e_i$ can be computed for each visual word $c_i$. We fuse them with an arithmetic (weighted) combination, which produces one fused vector per visual word. In the experiments, we set the combination weight equal to 0.1.

In compact fusion, the fused vector of each visual word has the same dimensionality as the accumulated residual $v_i$ or the distribution entropy $e_i$. After concatenating the fused vectors of all clusters, a K × D dimensional vector is generated. We name this vector the compact entropy-boosted VLAD (CEVLAD).
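A per-word weighted combination could look like the sketch below. The specific form `v_i + lam * e_i` is an assumption made for illustration, since the exact combination formula is not available in this text; `lam`, `fuse_word`, and `compact_fusion` are hypothetical names, and the final L2 normalization follows the normalization step listed for CEVLAD.

```python
import math


def fuse_word(v_i, e_i, lam=0.1):
    """Fuse one visual word: assumed form v_i + lam * e_i (same length D)."""
    return [v + lam * e for v, e in zip(v_i, e_i)]


def compact_fusion(residuals, entropies, lam=0.1):
    """Fuse every visual word, concatenate (K * D values), then L2-normalize."""
    fused = [x
             for v_i, e_i in zip(residuals, entropies)
             for x in fuse_word(v_i, e_i, lam)]
    norm = math.sqrt(sum(x * x for x in fused))
    return [x / norm for x in fused] if norm > 0 else fused


# Hypothetical data: K = 2 visual words, D = 2 dimensions.
residuals = [[1.0, 2.0], [0.0, -1.0]]
entropies = [[10.0, 20.0], [5.0, 5.0]]
cev = compact_fusion(residuals, entropies)
print(len(cev), cev)
```

The output keeps the K × D length of the original VLAD, in contrast to the doubled length produced by concatenation.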

We provide the whole algorithm below (Algorithm 1):

| Algorithm 1. Computing the distribution entropy boosted VLAD descriptors EEVLAD and CEVLAD from a set of n local descriptors. The set of K centroids is learned on a training set using K-means. |
| --- |
| % Accumulate descriptor residual |
| For t = 1,…,n |
| % Apply power normalization and intra-normalization for $v_i$ |
| For i = 1,…,K |
| % Build the distribution entropy for every cluster |
| For i = 1,…,K |
| % Apply compact fusion to generate CEVLAD |
| For i = 1,…,K |
| % Apply L2 normalization for CEVLAD |
| % Apply extended fusion to generate EEVLAD |
| % Apply L2 normalization for the residual vector |
| % Apply L2 normalization for the entropy vector |
| % Apply L2 normalization for EEV |