2.1. Mathematical Model

Let the probability of the $i$th possible event be $p_i$, and suppose the forecaster gets a payoff $f(p_i)$ if he predicts this event, so that his expected payoff is $\sum_i p_i f(p_i)$. If we want to keep the forecaster honest, we must select a function $f$ such that for any other probability distribution $\{q_i\}$ one has:

$$\sum_i p_i f(p_i) \ge \sum_i p_i f(q_i) \tag{1}$$

that is, the expected payoff is maximal if the forecaster predicts the events according to their correct probability. In a brilliant paper, Pál Fischer [5] proved that the only function satisfying Inequality (1) is

$$f(p) = c \log p$$

that is, apart from a constant factor, the expected payoff is the Shannon entropy $H = -\sum_i p_i \log p_i$. Putting aside the “forecaster” analogy, we can say that the only reasonable and unbiased quantitative "value" that we can associate with the information about a probability distribution is its entropy, $H$.
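Inequality (1) can be checked numerically for a logarithmic payoff. The following is a minimal sketch, assuming $f(p) = c \log p$ with $c = 1$ and natural logarithms; the function names and the example distributions are illustrative choices, not taken from the source.

```python
import math

def expected_payoff(p, q, c=1.0):
    """Expected payoff sum_i p_i * f(q_i) for the payoff f(x) = c * log(x).

    p is the true distribution, q is the distribution the forecaster announces.
    """
    return sum(pi * c * math.log(qi) for pi, qi in zip(p, q) if pi > 0)

def shannon_entropy(p):
    """Shannon entropy -sum_i p_i * log(p_i), natural logarithm."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

p = [0.5, 0.3, 0.2]                 # true probabilities (example values)
honest = expected_payoff(p, p)      # the forecaster announces the truth
dishonest = [
    expected_payoff(p, q)
    for q in ([0.4, 0.4, 0.2], [1 / 3, 1 / 3, 1 / 3], [0.7, 0.2, 0.1])
]

print(honest, -shannon_entropy(p))          # equal for c = 1
print(all(d < honest for d in dishonest))   # honesty gives the largest payoff
```

With $c = 1$ the honest payoff equals minus the entropy, which is the "constant factor" mentioned in the text.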

This consideration was one of the motivations for our group to introduce, some 10 years ago, the TRISA relative-entropy triangle to analyze and conveniently plot the joint development and mutual dependency of three variables measured in incommensurable units [7,8]. In the present paper we use Shannon entropy in a very different context, as a measure of the structural (configurational) disorder of random geometrical patterns [9]. In the statistical physics of point patterns, configurational entropy is defined as

$$S = k \log W$$

where $W$ is the number of different configurations, assuming that all configurations are equally probable (Boltzmann's equation, $k$ is the Boltzmann constant, Figure 1). If the configurations have different probabilities $p_i$, then combinatorial reasoning and application of Stirling’s approximation yield

$$S = -k \sum_i p_i \log p_i$$

([10,11]).
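The step from counting configurations to the entropy formula can be illustrated numerically. The sketch below assumes the usual multinomial count $W = N!/(n_1! \cdots n_m!)$ with occupation numbers $n_i \approx N p_i$ and sets $k = 1$; these modelling choices, the function names and the example probabilities are assumptions made for illustration.

```python
import math

def log_number_of_configurations(counts):
    """log W for W = N! / (n_1! * ... * n_m!), computed with lgamma."""
    n = sum(counts)
    return math.lgamma(n + 1) - sum(math.lgamma(c + 1) for c in counts)

def shannon_entropy(p):
    """Shannon entropy -sum_i p_i * log(p_i), natural logarithm."""
    return -sum(x * math.log(x) for x in p if x > 0)

def entropy_per_item(p, n):
    """(1/N) * log W when N items are split in the proportions p."""
    counts = [round(n * x) for x in p]
    return log_number_of_configurations(counts) / sum(counts)

p = [0.5, 0.3, 0.2]   # example probabilities
for n in (100, 10_000, 1_000_000):
    # The first value approaches the second as N grows.
    print(n, entropy_per_item(p, n), shannon_entropy(p))
```

The gap between the two printed values shrinks roughly like $\log N / N$, which is the correction that Stirling's approximation neglects.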

Figure 1. Ludwig Boltzmann’s grave in the Central Vienna Cemetery, with his famous equation, $S = k \log W$.


In the geometrical probability theory of irregularly placed points, the distances to the nearest neighbor and their probability distribution have become a standard tool to characterize spatial relationships in populations [12]. It was first proved by Hertz ([13], simplified in [12]) that if a large number $N$ of points are Poisson-distributed on the plane with density ρ, and for every point $P_i$ its distance to the nearest neighbor is $r_i$, then the average value of $r_i$, that is $\bar{r} = \frac{1}{N}\sum_{i=1}^{N} r_i$, tends to an expected value $E(r)$ for $N \to \infty$:

$$E(r) = \frac{1}{2\sqrt{\rho}}$$

Thus, the randomness of a point arrangement can be characterized by the ratio [12]:

$$R = \frac{\bar{r}}{E(r)} = 2\sqrt{\rho}\,\bar{r}$$

For a completely random point distribution $R = 1$; if all points are at the same position then $R = 0$; for periodical arrangements one can have $R > 1$ (such as $R = 2$ for a square lattice and $R \approx 2.1491$ for the hexagonal lattice [12]).
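The values of the randomness ratio quoted above can be reproduced with a short computation. This is a sketch under stated assumptions: the ratio is taken as $R = 2\sqrt{\rho}\,\bar{r}$, nearest neighbors are found by brute force, edge effects are ignored, and a fixed number of uniformly placed points stands in for a Poisson pattern. The function names are illustrative.

```python
import math
import random

def nearest_neighbor_distances(points):
    """Brute-force distance from every point to its nearest neighbor."""
    return [
        min(math.dist(p, q) for j, q in enumerate(points) if j != i)
        for i, p in enumerate(points)
    ]

def randomness_ratio(points, area):
    """R = mean nearest-neighbor distance divided by 1 / (2 * sqrt(density))."""
    r = nearest_neighbor_distances(points)
    density = len(points) / area
    return (sum(r) / len(r)) * 2.0 * math.sqrt(density)

n = 20
square = [(float(i), float(j)) for i in range(n) for j in range(n)]
hexagonal = [(i + 0.5 * (j % 2), j * math.sqrt(3) / 2)
             for i in range(n) for j in range(n)]
rng = random.Random(0)
uniform = [(rng.random(), rng.random()) for _ in range(n * n)]

# Each lattice point is assigned the area of one unit cell.
R_square = randomness_ratio(square, area=n * n)
R_hex = randomness_ratio(hexagonal, area=n * n * math.sqrt(3) / 2)
R_random = randomness_ratio(uniform, area=1.0)
print(R_square, R_hex, R_random)
```

For the random pattern the ratio is only close to 1, because points near the border of the unit square have slightly larger nearest-neighbor distances than an unbounded pattern would give.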

Another convenient measure of irregularity is the Shannon entropy of the distribution of nearest neighbor distances $\{r_i\}$. For a regular square lattice, all distances $r_i$ are equal, and the Shannon entropy of the distance-to-nearest-neighbor distribution is 0. The more irregular the lattice, the larger the variation among the values $r_i$ and, consequently, the larger the Shannon entropy of their distribution. If, for a randomly selected point $P_i$ and any $k$ we have, independently of the index $i$, that

$$\Pr(r_i = d_k) = p_k$$

where $r_i = \min_{j \ne i} \mathrm{dist}(P_i, P_j)$, the $d_k$ are the possible distance values, $\Pr$ means probability of a random event, and dist is the Euclidean distance, then:

$$H = -\sum_k p_k \log p_k$$

is a meaningful (and, as we discussed above in connection with the forecaster problem, the only objective) measure of the irregularity of a point distribution.
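The behaviour described above (zero entropy for a regular lattice, growing entropy with irregularity) can be sketched as follows. The rounding of distances to a fixed `precision`, the jitter amplitude and the function names are assumptions made for this illustration; the source does not prescribe a binning rule here.

```python
import math
import random
from collections import Counter

def nearest_neighbor_distances(points):
    """Brute-force distance from every point to its nearest neighbor."""
    return [
        min(math.dist(p, q) for j, q in enumerate(points) if j != i)
        for i, p in enumerate(points)
    ]

def distance_entropy(points, precision):
    """Shannon entropy of nearest-neighbor distances rounded to `precision`."""
    bins = Counter(round(r / precision) for r in nearest_neighbor_distances(points))
    total = sum(bins.values())
    return sum(-c / total * math.log(c / total) for c in bins.values())

n = 15
lattice = [(float(i), float(j)) for i in range(n) for j in range(n)]
rng = random.Random(1)
jittered = [(x + rng.uniform(-0.3, 0.3), y + rng.uniform(-0.3, 0.3))
            for x, y in lattice]

H_lattice = distance_entropy(lattice, precision=0.05)    # all distances equal
H_jittered = distance_entropy(jittered, precision=0.05)  # distances vary
print(H_lattice, H_jittered)
```

The numerical value of the entropy depends on the chosen precision, so only comparisons made at the same precision are meaningful.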

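The intimate connection between distance-to-nearest-neighbors and entropy is expressed by a theorem of Kozachenko and Leonenko ([14,15,16]), which states that, under some mild conditions, for $N$ points distributed in the $d$-dimensional Euclidean space:

$$H \approx \frac{d}{N}\sum_{i=1}^{N} \log r_i + \log\left[\frac{\pi^{d/2}}{\Gamma(d/2+1)}\right] + \gamma + \log(N-1)$$

where $r_i$ is the nearest-neighbor distance of the $i$th point, $H$ is the entropy of the $d$-dimensional point distribution, the factor in square brackets is the volume of the $d$-dimensional unit sphere, $\gamma = 0.5772\ldots$ is Euler's constant, and $\Gamma$ is the gamma function. For the 2-dimensional case:

$$H \approx \frac{2}{N}\sum_{i=1}^{N} \log r_i + \log \pi + \gamma + \log(N-1)$$

By the inequality between the geometric and arithmetic means of positive numbers ([17]), $\frac{1}{N}\sum_{i=1}^{N} \log r_i \le \log \bar{r}$, where $\bar{r}$ is the mean nearest-neighbor distance, which gives an upper bound for the entropy of an arrangement of $N$ points:

$$H \le 2\log \bar{r} + \log \pi + \gamma + \log(N-1)$$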
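The 2-dimensional estimate and its upper bound can be tried on synthetic data. The sketch assumes the commonly quoted form of the Kozachenko-Leonenko nearest-neighbor estimate for $d = 2$, $H \approx \frac{2}{N}\sum_i \log r_i + \log \pi + \gamma + \log(N-1)$, and uses points drawn uniformly in the unit square, whose differential entropy is 0. Function names and sample size are illustrative.

```python
import math
import random

EULER_GAMMA = 0.5772156649015329

def nearest_neighbor_distances(points):
    """Brute-force distance from every point to its nearest neighbor."""
    return [
        min(math.dist(p, q) for j, q in enumerate(points) if j != i)
        for i, p in enumerate(points)
    ]

def kl_entropy_2d(r):
    """Nearest-neighbor entropy estimate for d = 2 (assumed form, see lead-in)."""
    n = len(r)
    mean_log = sum(math.log(x) for x in r) / n
    return 2.0 * mean_log + math.log(math.pi) + EULER_GAMMA + math.log(n - 1)

def kl_upper_bound_2d(r):
    """Bound obtained by replacing the mean of log r_i with log of the mean r."""
    n = len(r)
    return 2.0 * math.log(sum(r) / n) + math.log(math.pi) + EULER_GAMMA + math.log(n - 1)

rng = random.Random(0)
points = [(rng.random(), rng.random()) for _ in range(500)]
r = nearest_neighbor_distances(points)

H_est = kl_entropy_2d(r)
H_bound = kl_upper_bound_2d(r)
print(H_est, H_bound)   # the estimate is near 0 and does not exceed the bound
```

The bound holds for any sample because the logarithm is concave, so it needs only the mean nearest-neighbor distance rather than all individual distances.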

2.2. Entropy of the Shortest Distance

In Economic Geology, Geochemistry, and Mineral Exploration there are legions of empirical rules, which claim cause-effect relations between observable planar objects (such as faults, lineaments on aerial photographs; halos with increased radon activity,

etc.) and the presence of proved mineral occurrences [

18,

19,

20,

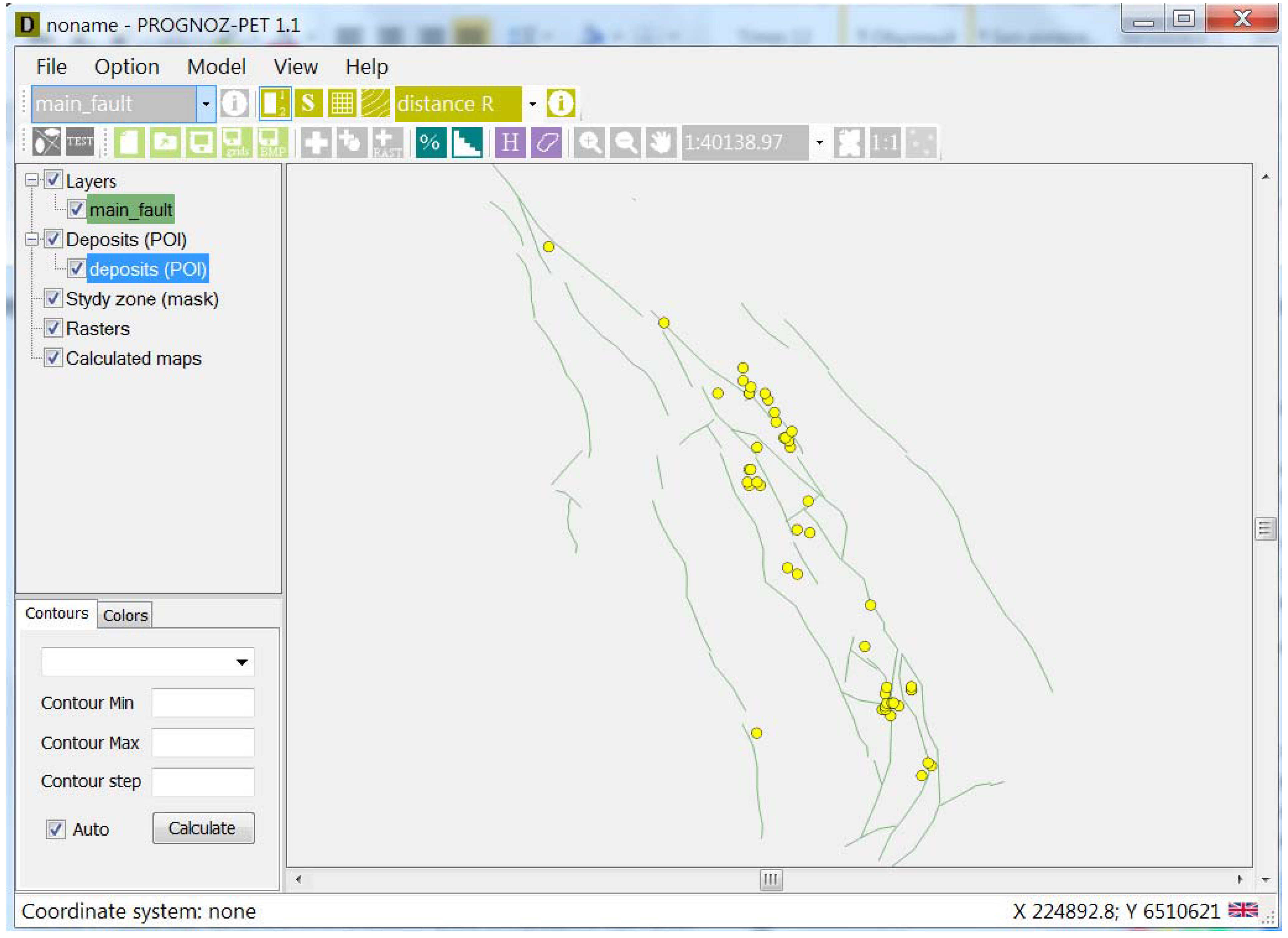

21]. A hypothetical case is shown in

Figure 2 where the lineaments (green lines) are apparently related to mineral occurrences (yellow dots). In the spirit of the "entropy of shortest distance" we expect that if the distances of the dots from the nearest lines are very randomly distributed, with large entropy, then there is no valid relation between the two sets of objects. On the other hand, if all distances are small, within the measurement accuracy only a few different values will be observed, and the distribution will have a small entropy. Thus, a low entropy of shortest dot-to-line distances would prove the causal relation between the two sets. The idea can be easily extended to three kinds of randomly distributed objects (“ellipses”, “lineaments”, “points”), see

Figure 3.
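The dot-to-line reasoning can be illustrated on synthetic data. In this sketch the lineaments are modelled as straight segments, the measurement accuracy is represented by rounding distances to a fixed `precision`, and the two point sets are generated artificially; all of these, together with the function names, are assumptions made for illustration and not the PROGNOZ implementation.

```python
import math
import random
from collections import Counter

def point_segment_distance(p, a, b):
    """Euclidean distance from point p to the segment with endpoints a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    t = 0.0 if length_sq == 0 else ((px - ax) * dx + (py - ay) * dy) / length_sq
    t = max(0.0, min(1.0, t))          # clamp the projection onto the segment
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))

def esd(points, segments, precision):
    """Entropy of shortest point-to-segment distances rounded to `precision`."""
    bins = Counter(
        round(min(point_segment_distance(p, a, b) for a, b in segments) / precision)
        for p in points
    )
    total = sum(bins.values())
    return sum(-c / total * math.log(c / total) for c in bins.values())

rng = random.Random(2)
segments = [((0.0, 2.0), (10.0, 2.0)), ((0.0, 7.0), (10.0, 7.0))]  # two "lineaments"
related = [(rng.uniform(0, 10), y0 + rng.uniform(-0.1, 0.1))
           for y0 in (2.0, 7.0) for _ in range(100)]               # dots hug the lines
unrelated = [(rng.uniform(0, 10), rng.uniform(0, 10)) for _ in range(200)]

H_related = esd(related, segments, precision=0.25)
H_unrelated = esd(unrelated, segments, precision=0.25)
print(H_related, H_unrelated)
```

Dots placed close to the lines fall into a single distance class and give zero entropy, while dots scattered over the whole area give a clearly positive value.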

Figure 2. A model representing the case of strong correlation between the placement of the mineral occurrences (yellow dots) and lineaments.


Of course, other metric approaches are also possible [12,18], based on the actual values of the shortest dot-to-line distances, their distribution, mean, normality, etc. Still, as discussed previously, by Fischer’s [5] theorem only the entropy can be considered an objective measure.

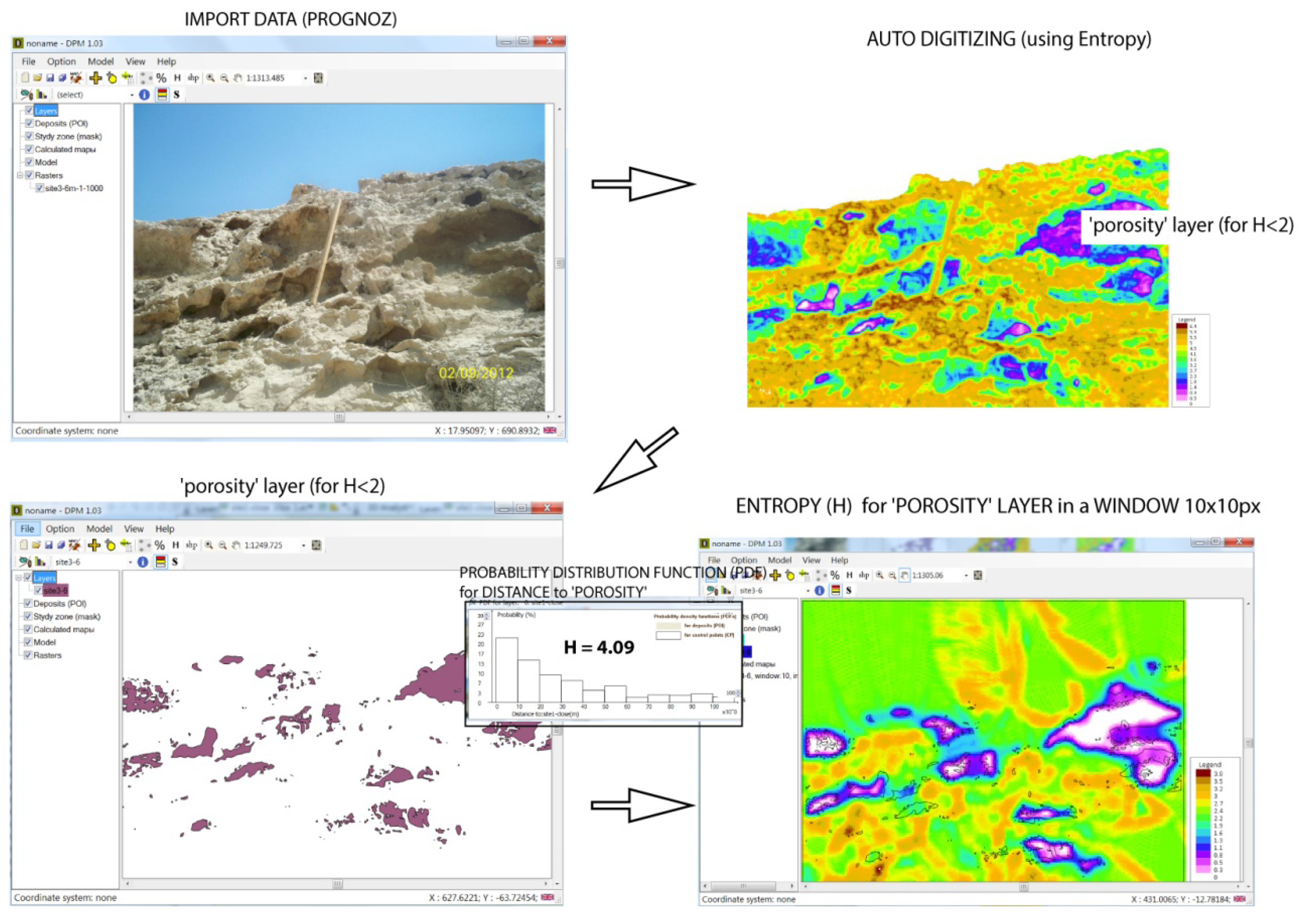

The ESD (entropy of shortest distance to neighboring element) idea was studied in depth in the PhD thesis of B. Sterligov [22]; it was then further developed, in collaboration with Professors S. Cherkasov and K. Oleschko, into the user-friendly PROGNOZ software [23]. Quite recently, we realized that by making an analogy between the three geographic elements “ellipses”, “lineaments”, “points” and the macro- and microscopically observable “vugs”, “fractures” and “pores” of triple-porosity, naturally fractured vuggy carbonates, we get a powerful new tool for the digital processing, analysis, and classification of the void space in carbonates and other reservoir rocks. The procedure is applicable at all scales, from micrographs to outcrop photos, as we shall illustrate by examples.

Out of the many possible ways to apply the ESD concept, we only discuss the sliding window entropy filtering for pore boundary enhancement, in the next Section. A similar technique, based on the ESD of Poisson distributed random points from nearest pores, will be briefly mentioned in the concluding part.

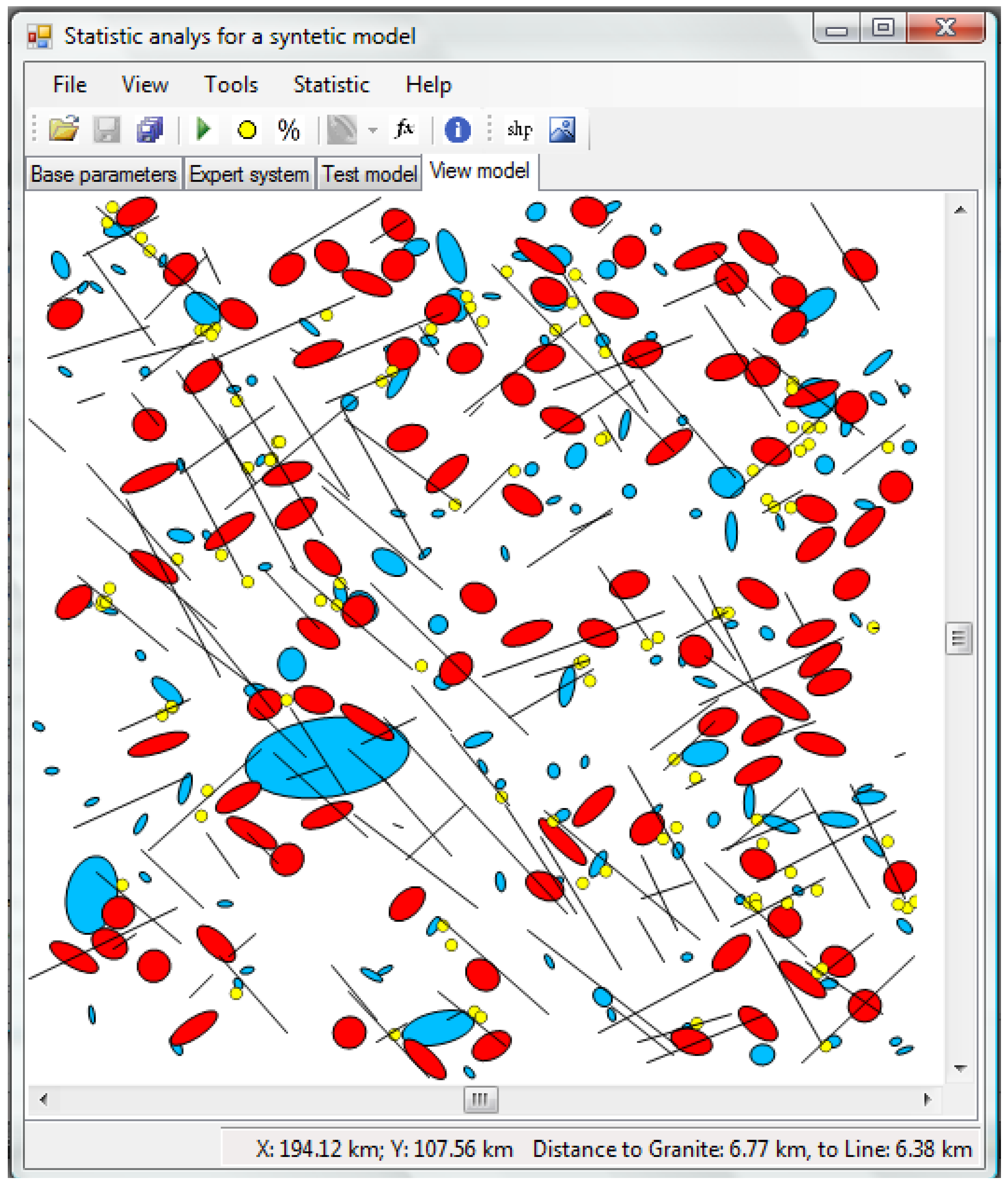

Figure 3. Spatial relation between three shapes ("granite outcrops", blue; "mineral occurrences", red; and "lineaments", black). Scaled down by a factor

, the model might represent an outcrop of a vuggy, fractured limestone (see

Figure 7), reducing it by

it will resemble an optical micrograph of a triple porosity carbonate (

Figure 8,

Figure 9). Our entropy technique remains applicable through this enormous range of scales.


2.3. Sliding Window Entropy Filtering for Pore Boundary Enhancement

Using the standard notation of geometry [24,25,26], if $A$ and $B$ are sets in the $n$-dimensional Euclidean space $\mathbb{R}^n$ of finite measure $\mu(A)$, $\mu(B)$, then the Minkowski sum of $A$ and $B$ is defined as:

$$A \oplus B = \{a + b : a \in A,\ b \in B\}$$

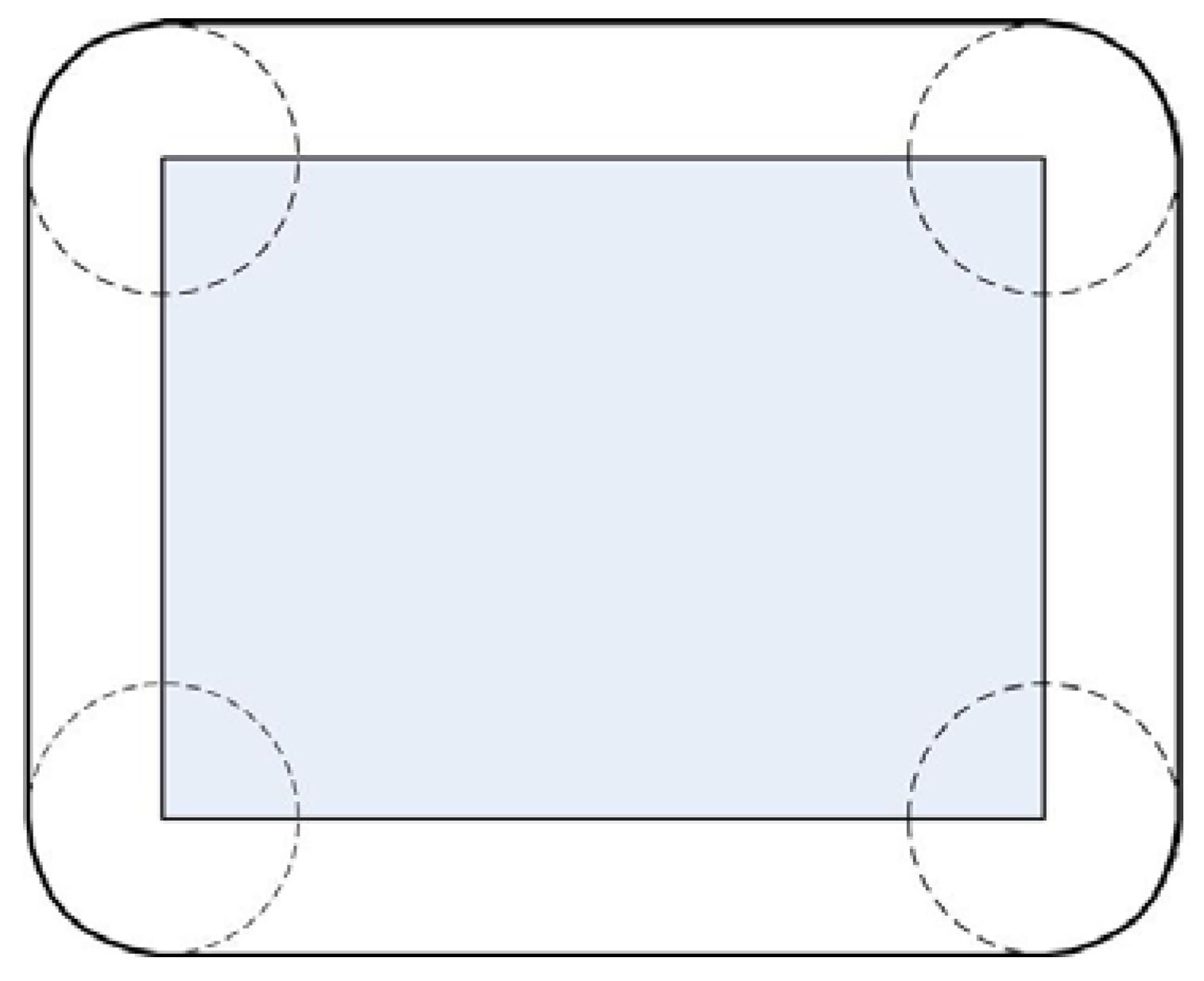

In the special case when $B$ is the $n$-dimensional hypersphere $B_r$ of radius $r$, we call $A \oplus B_r$ the extended hypersphere of radius $r$ around $A$. In the 2-dimensional (planar) case, assuming that the set $A$ is convex, and denoting the length of its circumference by $c(A)$, by a theorem of Tomiczková [26] the area of the extended circle $A \oplus B_r$ is given by:

$$\mu(A \oplus B_r) = \mu(A) + c(A)\,r + \pi r^2 \tag{7}$$

where in the 2-dimensional case $\mu$ is area. An example of an “extended circle” around a rectangle is shown in Figure 4. If the radius of the circle $B$ is $r$ and the sides of the rectangle $A$ are $a$ and $b$, it is easy to check Equation (7) because $\mu(A) = ab$, $c(A) = 2(a+b)$ and, directly from the figure, $\mu(A \oplus B_r) = ab + 2(a+b)r + \pi r^2$.
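The rectangle check of Equation (7) can also be done numerically. The sketch below uses the fact that the Minkowski sum of a set with a disc of radius $r$ is the set of points within distance $r$ of it, and estimates its area by counting grid cells; the grid step, the rectangle dimensions and the function names are illustrative assumptions.

```python
import math

def extended_area_numeric(a, b, r, h=0.01):
    """Area of {x : dist(x, rectangle [0,a] x [0,b]) <= r} on a grid of step h."""
    nx = int(round((a + 2 * r) / h))
    ny = int(round((b + 2 * r) / h))
    count = 0
    for i in range(nx):
        x = -r + (i + 0.5) * h            # cell-center x coordinate
        dx = max(-x, 0.0, x - a)          # horizontal distance to [0, a]
        for j in range(ny):
            y = -r + (j + 0.5) * h        # cell-center y coordinate
            dy = max(-y, 0.0, y - b)      # vertical distance to [0, b]
            if math.hypot(dx, dy) <= r:
                count += 1
    return count * h * h

def extended_area_formula(a, b, r):
    """mu(A) + c(A) * r + pi * r^2 for a rectangle with sides a and b."""
    return a * b + 2 * (a + b) * r + math.pi * r * r

a, b, r = 2.0, 1.0, 0.5                   # example dimensions
numeric = extended_area_numeric(a, b, r)
exact = extended_area_formula(a, b, r)
print(numeric, exact)
```

The three terms correspond to the rectangle itself, the four strips of width $r$ along its sides, and the four quarter discs at its corners.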

Figure 4. Minkowski sum of a rectangle of sides a, b with a circle of radius r.


Consider now a "pore" $A$ in the digital image, and suppose the distance of $A$ from the nearest pore is $D$. Let Δ denote pixel size, and select a reasonably large $w \times w$

-size (say

pixels) window

$W$, where $w\Delta$ is less than half the distance of $A$ from the closest pore, i.e. $w\Delta < D/2$, but at the same time it is much less than the size of the pore $A$. The "pore" in the image is distinguished by a separate color or by a distinct range of gray-scale values. The boundary of the pore is generally diffuse, not clearly defined, because of the non-zero thickness of the thin sections (which commonly measure less than 30 μm). Let us consider the sequence of extended circles with increasing radii around $A$ (see Figure 5):

$$A \oplus B_{\Delta},\ A \oplus B_{2\Delta},\ \ldots,\ A \oplus B_{k\Delta},\ \ldots$$

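The sequence of these sets satisfies (where in the 2-D case the measure μ is area):

$$\mu(A) < \mu(A \oplus B_{\Delta}) < \mu(A \oplus B_{2\Delta}) < \cdots$$

Taking set-theoretical differences between successive extended spheres around $A$ of respective radii $k\Delta$ and $(k-1)\Delta$, we get a sequence of rings $R_k$ around the pore $A$ defined as:

$$R_k = (A \oplus B_{k\Delta}) \setminus (A \oplus B_{(k-1)\Delta}), \quad k = 1, 2, \ldots$$

with $A \oplus B_0 = A$. By construction, each ring is one pixel wide. If the moving window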

W is closer to the pore

A than

D/2 then:

and, consequently, (because the rings are distinct):

Figure 5. Illustration of the sliding window entropy technique for a better definition of the boundary of the pore $A$. The sliding window $W$, which moves out of $A$, has a size less than half the distance to the nearest pore. The sequence of extended circles around $A$ is strictly increasing; the difference sets form one-pixel-wide “rings” or “halos” around $A$.


Suppose the square-shaped window $W$ moves, without rotation, staying parallel to its original position, along a linear path as shown in Figure 5. In the figure, $W$ starts to move from a position where it is fully inside $A$, $W \subset A$, then it passes through intermediate positions when only a part of $W$ is inside the pore: $W \cap A \neq \emptyset$, up to a final position when $W$ is fully outside the pore and it is covered by $M$ successive rings: $W \cap A = \emptyset$ and $W \subset R_{k+1} \cup \cdots \cup R_{k+M}$ for some $k$, where $R_k$ denotes the $k$th one-pixel-wide ring around $A$.

In any position of the moving window, the altogether $w^2$ pixels in $W$ define the set of $w^2$ distances $\{d_{ij}\}$, where $d_{ij}$ is the shortest distance (with the precision of pixel-size Δ) between the pixel $P_{ij}$, $1 \le i, j \le w$, and the pore $A$. Considering these distances as random variables, any $d_{ij}$ can take a value from among the possible distances $0, \Delta, 2\Delta, \ldots$ and we can compute their empirical probability distribution $\{p_k\}$ as:

$$p_k = \frac{\left|\{(i,j) : d_{ij} = k\Delta\}\right|}{w^2}, \quad k = 0, 1, 2, \ldots \tag{12}$$

where $|S|$ denotes the number of elements of the set $S$. The Shannon entropy of this distribution is $H = -\sum_k p_k \log p_k$, with the usual convention that for $p_k = 0$ the product $p_k \log p_k$ is defined as $0$. Consider the three possible positions of the window $W$ relative to the pore $A$.

If $W$ is fully inside $A$ then all distances are 0, so that $p_0 = 1$ and $H = 0$.

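If $W$ is fully outside $A$ but still inside the extended sphere of radius $D/2$ around $A$, then in a typical case it will have non-empty intersections with $w$ consecutive rings: $W \cap R_{k+j} \neq \emptyset$, $j = 1, \ldots, w$, for some value of $k$, in such a way that each intersection with a ring $R_{k+j}$ contains about $w$ pixels, and in the set $W \cap R_{k+j}$ all distances are equal to the same value $(k+j)\Delta$. In this case, the typical probability distribution will be:

$$p_{k+j} = \frac{1}{w}, \quad j = 1, \ldots, w$$

The corresponding Shannon entropy is:

$$H = -\sum_{j=1}^{w} \frac{1}{w} \log \frac{1}{w} = \log w \tag{15}$$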

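Consider now the most interesting case, when part of the window $W$ lies inside the pore $A$ and the rest of it is outside, in such a way that it has non-empty intersections with the first $l$ rings only: $W \cap R_k \neq \emptyset$ for $k = 1, \ldots, l$, and $W \cap R_k = \emptyset$ for $k > l$, where $1 \le l \le w$. In a typical case each intersection with a given ring $R_k$ contains about $w$ pixels, and in the set $W \cap R_k$ all distances are equal to the same value $k\Delta$. In this case the probability distribution is:

$$p_0 = \frac{w - l}{w}, \qquad p_k = \frac{1}{w}, \quad k = 1, \ldots, l$$

which yields the entropy:

$$H = -\frac{w-l}{w} \log \frac{w-l}{w} + \frac{l}{w} \log w \tag{17}$$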

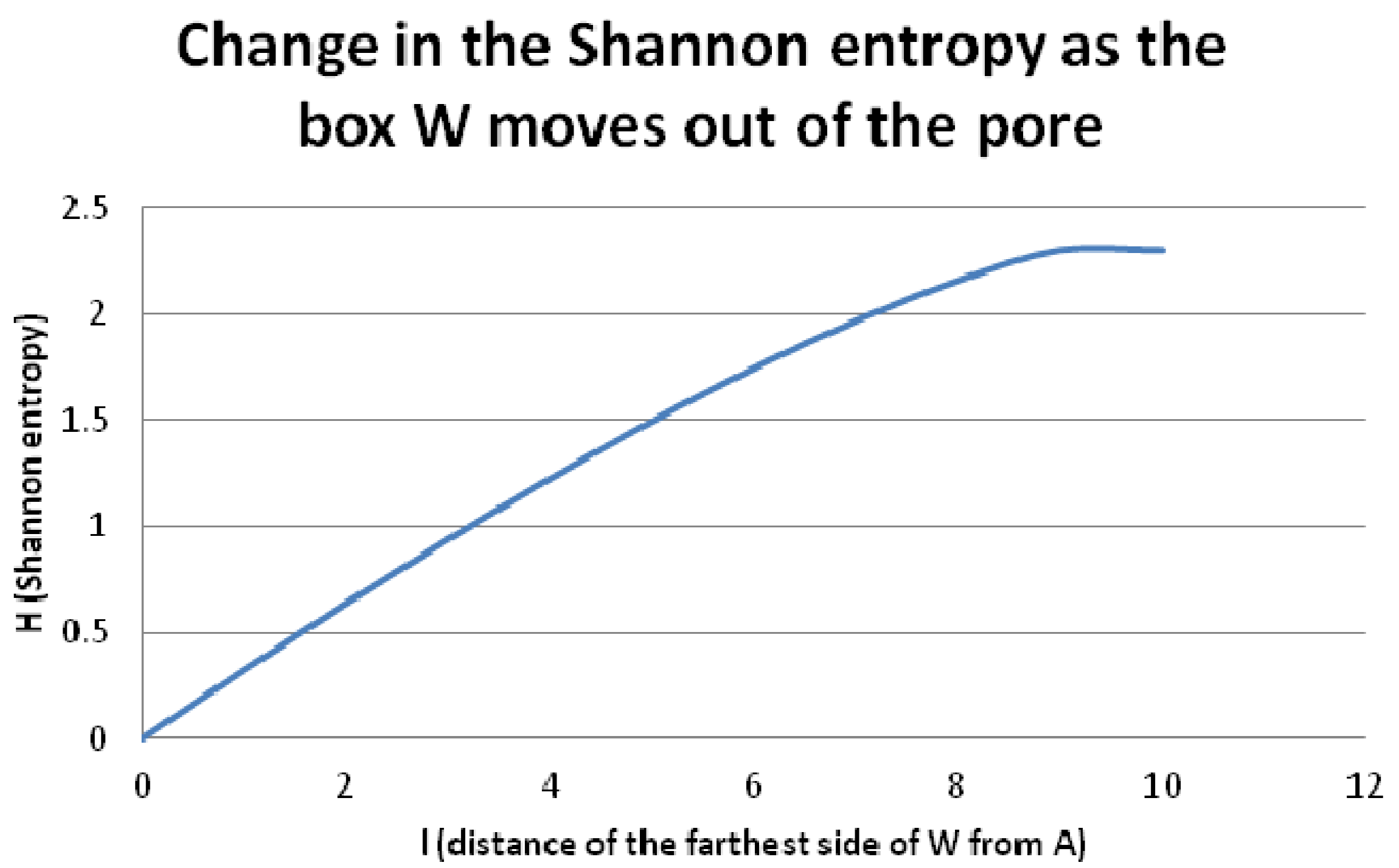

Figure 6 shows how the Shannon entropy (Equation (17)) increases as the box

W gradually moves out from the pore, for the case when

W consists of

pixels. We emphasize that in order to compute the entropy, we do not have to actually construct the rings around the pore, but we do need an algorithm to find the distance of any pixel from the nearest pore.
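The growth of the entropy as the window leaves the pore can be tabulated directly. The sketch assumes the idealized situation described in the text: a $w \times w$ window of which $l$ one-pixel-wide columns lie in the rings $1, \ldots, l$ and the remaining $w - l$ columns lie inside the pore, so that $p_0 = (w-l)/w$ and $p_k = 1/w$. The window size `w = 10` and the function names are example choices, not values taken from the source.

```python
import math

def entropy_from_distribution(probabilities):
    """Shannon entropy with the convention 0 * log(0) = 0."""
    return sum(-p * math.log(p) for p in probabilities if p > 0)

def partial_window_entropy(w, l):
    """Closed form (l/w) * log(w) - ((w-l)/w) * log((w-l)/w)."""
    inside = (w - l) / w
    value = (l / w) * math.log(w)
    if inside > 0:
        value -= inside * math.log(inside)
    return value

w = 10   # window side in pixels (example value)
curve = [partial_window_entropy(w, l) for l in range(w + 1)]
direct = [entropy_from_distribution([(w - l) / w] + [1 / w] * l)
          for l in range(w + 1)]

for l, h in enumerate(curve):
    print(l, round(h, 4))   # rises from 0 towards log(w)
```

In this idealized model the value $\log w$ is already reached at $l = w - 1$, because one remaining column inside the pore contributes the distance 0 with the same probability $1/w$ as every ring.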

As seen from the graph (Figure 6), we can use the following algorithm to define the boundary of the pore $A$. Select the size of $W$ less than half the distance between nearest pores. In any position of the moving window $W$, compute the distances $d_{ij}$ of its $w^2$ pixels from the nearest pore with the precision of pixel-size Δ. Define the probability distribution of the different distances, $\{p_k\}$, where $p_k = |\{(i,j) : d_{ij} = k\Delta\}| / w^2$ (see Eq. 12), and calculate the corresponding Shannon entropy $H = -\sum_k p_k \log p_k$. When $W$ is fully inside a pore, then $H = 0$; when $W$ is moving out of the pore, step by step, the entropy of distances from the pixels of $W$ to the pore will increase to $\log w$ (according to Equation (17)). The maximal possible entropy of the distribution of distances $d_{ij}$ would occur when all $d_{ij}$ are different and equally probable, and this would be twice as large as $H$ in Equation (15):

$$H_{\max} = \log w^2 = 2 \log w$$

If we select W as consisting of pixels, then in Eq. (15) we have , and it is a reasonable criterion to define the interior of the pore with the inequality . More generally, using a - sized window, the boundary of the pore is defined by .
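The algorithm can be tried on a small synthetic image. This is a sketch under stated assumptions: the pore is a rectangle in a binary mask, the distance of a pixel to the pore is found by brute force between pixel centers and rounded to whole pixels, and the window slides along one row. The mask, the window size and the function names are illustrative, and no boundary threshold is applied.

```python
import math
from collections import Counter

def distance_map(mask):
    """Distance (rounded to whole pixels) from each pixel to the nearest pore pixel."""
    pore = [(i, j) for i, row in enumerate(mask) for j, v in enumerate(row) if v]
    return [
        [round(min(math.hypot(i - pi, j - pj) for pi, pj in pore))
         for j in range(len(mask[0]))]
        for i in range(len(mask))
    ]

def window_entropy(dist, top, left, w):
    """Shannon entropy of the pixel-to-pore distances inside a w x w window."""
    values = Counter(dist[i][j]
                     for i in range(top, top + w)
                     for j in range(left, left + w))
    return sum(-c / (w * w) * math.log(c / (w * w)) for c in values.values())

# Synthetic 24 x 40 image with one rectangular "pore" (True marks pore pixels).
rows, cols, w = 24, 40, 5
mask = [[4 <= i < 20 and 5 <= j < 20 for j in range(cols)] for i in range(rows)]
dist = distance_map(mask)

# Slide the window to the right along row 10, from inside the pore to outside.
profile = [window_entropy(dist, 10, left, w) for left in range(10, 26)]
for left, h in zip(range(10, 26), profile):
    print(left, round(h, 4))
```

The rings never have to be built explicitly: the rounded distance of a pixel already tells which ring it belongs to, so a distance map is all the filter needs.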

Figure 6. Change of the Shannon entropy (Equation (17)) as W gradually moves out from the pore.
