Some New Results on the Wiretap Channel with Side Information

Abstract

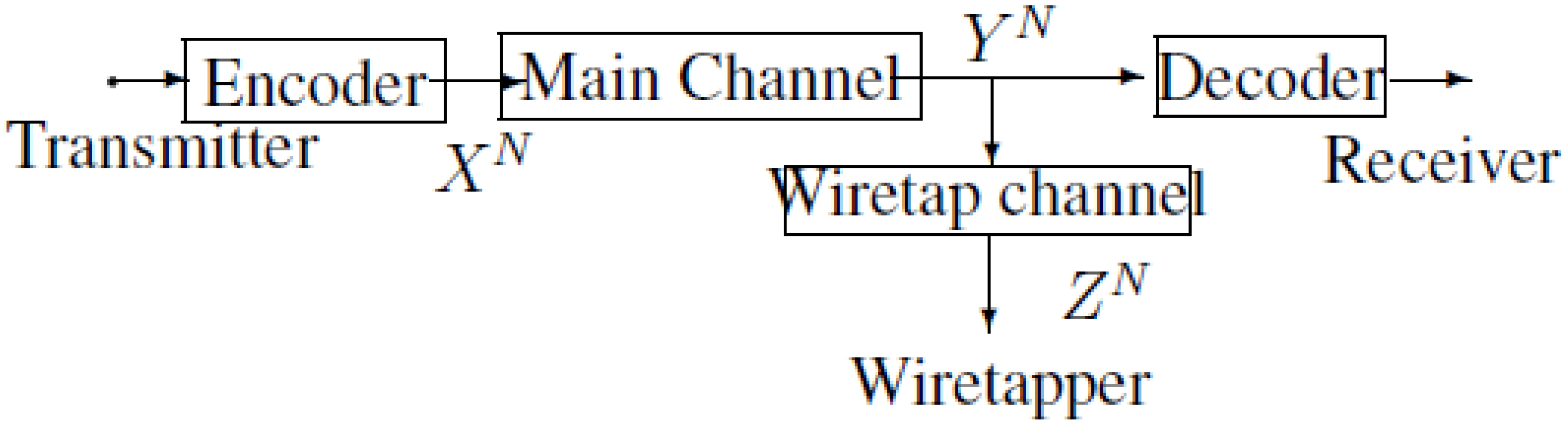

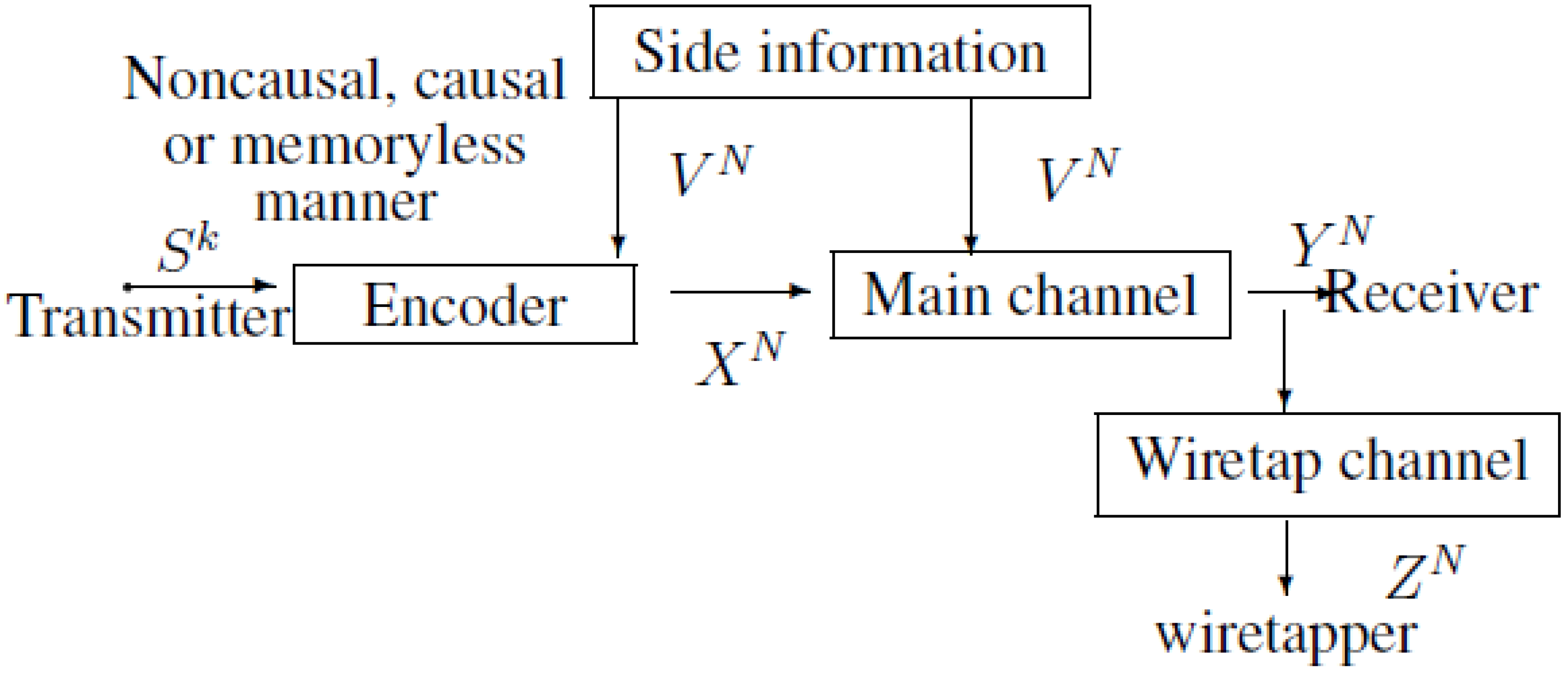

1. Introduction

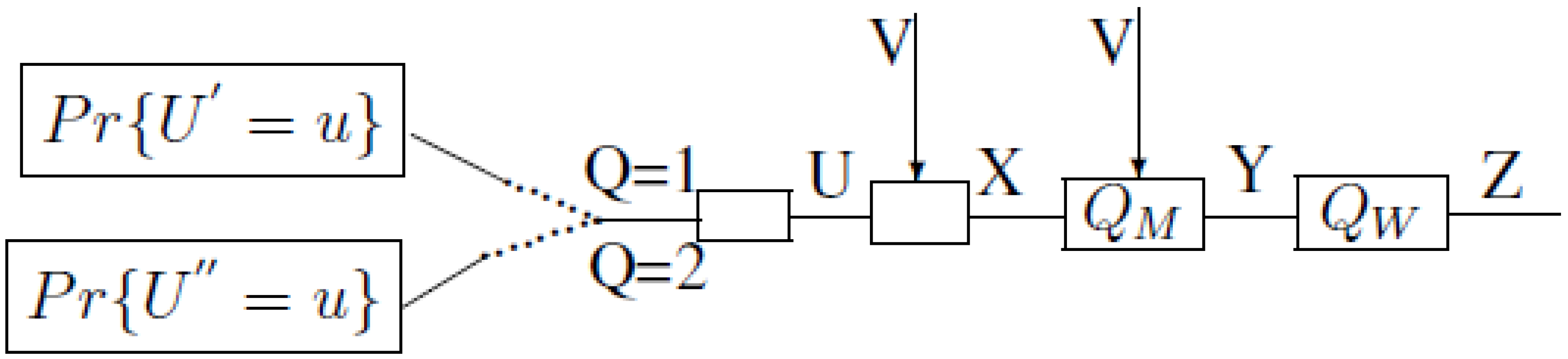

2. Notations, Definitions and the Main Results

2.1. The Model of Figure 2 with Noncausal Side Information

- The range of the random variable U satisfies a cardinality bound. The proof is similar to that of Theorem 2 and is omitted here.

- Secrecy capacity. The points of the region at which the equivocation rate equals the transmission rate are of particular interest, since they correspond to perfect secrecy. Clearly, the secrecy capacity of the model of Figure 2 with noncausal side information can be bounded by

- The ranges of the random variables U, K and A satisfy cardinality bounds. The proof is in Appendix 5.

- Observing the formula in Theorem 2, we have a chain of relations, where (a) follows from the fact that A may be assumed to be a (deterministic) function of K, and (b) follows from the Markov chain . Then it is easy to see that .

2.2. The Model of Figure 2 with Causal Side Information

- The range of the random variable U satisfies a cardinality bound. The proof is similar to that of Theorem 2 and is omitted here.

- Secrecy capacity. The points of the region at which the equivocation rate equals the transmission rate are of particular interest, since they correspond to perfect secrecy. Clearly, the secrecy capacity of the model of Figure 2 with causal side information can be bounded by

- The ranges of the random variables U, K and A satisfy cardinality bounds. The proof is similar to that of Theorem 2 and is omitted here.

- Since causal side information is a special case of noncausal side information, the outer bound can be obtained directly from by using the fact that U is independent of V.

- Note that (the proof is the same as that in Remark 2), and therefore, it is easy to see that

2.3. The Model of Figure 2 with Memoryless Side Information

- (i) The “supremum” in the definition of is, in fact, a maximum, i.e., for each R, there exists a mass function such that .

- (ii) is a concave function of R.

- (iii) is non-increasing in R.

- (iv) is continuous in R.

- Comparison with A. D. Wyner’s wiretap channel [1]. The main channel capacity given by Equation (2.9) in Theorem 1 is different from that of [1]. When the channel state information V is a constant, the model of Figure 2 reduces to Wyner’s wiretap channel [1]. Replacing V by a constant and U by X in Equations (2.9), (2.10) and (2.11), the quantities , , and the region are the same as those of [1].

- Secrecy capacity. A transmission rate denoted by is called the secrecy capacity in the model of Figure 2 with memoryless side information. Furthermore, is the unique solution of the equation and satisfies

  Proof 1 (Proof of Equations (2.13) and (2.14)). Firstly, since , and is a non-increasing function of R, there exists a unique such that and . Secondly, if , then , so that . Since is a non-increasing function of R, we conclude that . Thus is the maximum of those in which , i.e., is the secrecy capacity in the model of Figure 2 with memoryless side information. By Equation (2.13) and the non-increasing property of (see Lemma 1 (iii)), we get Equation (2.14). The proof is completed.

- Note that in Equation (2.14), we have , which implies that . Also note that for the causal model, the secrecy capacity satisfies (see Equation (2.7)). It follows that memoryless use of the side information at the encoder cannot achieve the same secrecy capacity as the wiretap channel with causal side information.
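The secrecy capacity remark describes a rate defined as the unique solution of an equation involving a non-increasing, continuous function of R. The following is a minimal numerical sketch of that idea, assuming the equation has the fixed-point form R = Γ(R) used in Wyner's treatment [1]. The names `solve_fixed_point` and `toy_gamma` are hypothetical, and the toy function is an illustrative stand-in, not the function defined in Equation (2.11).

```python
import math
from typing import Callable


def solve_fixed_point(gamma: Callable[[float], float],
                      r_max: float,
                      tol: float = 1e-9) -> float:
    """Find R in [0, r_max] with gamma(R) = R by bisection.

    Assumes gamma is continuous and non-increasing, so that
    g(R) = gamma(R) - R is strictly decreasing and has at most one root.
    """
    lo, hi = 0.0, r_max
    if gamma(lo) <= lo:
        # The curve starts at or below the diagonal: the root is at 0.
        return lo
    if gamma(hi) >= hi:
        # The curve never crosses the diagonal before r_max.
        return hi
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if gamma(mid) > mid:
            lo = mid   # still above the diagonal, root lies to the right
        else:
            hi = mid   # at or below the diagonal, root lies to the left
    return 0.5 * (lo + hi)


def toy_gamma(r: float) -> float:
    """Hypothetical stand-in: concave, non-increasing, continuous on [0, 1]."""
    return 0.8 - 0.5 * r * r


c_s = solve_fixed_point(toy_gamma, r_max=1.0)
# Closed form for this toy function: R^2 + 2R - 1.6 = 0, so R = sqrt(2.6) - 1.
print(c_s, math.sqrt(2.6) - 1.0)
```

Bisection is used here because monotonicity alone guarantees a single crossing with the diagonal; no derivative of the function is needed.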

3. Proof of Theorem 2, Theorem 4 and Converse Half of Theorem 5

3.1. Proof of Theorem 2

3.1.1. Proof of Equation (3.1)

3.1.2. Proof of Equations (3.2) and (3.3)

3.2. Proof of Theorem 4

3.3. Converse Half of Theorem 5

3.3.1. Proof of Step (i)

3.3.2. Proof of Step (ii)

3.3.3. Proof of Step (iii)

- For , and any , let Denote It follows from the definition of in Equation (2.10) that the distribution , defined by belongs to . Similarly, for , let where . Then it is easy to see that . Thus, from the definition of in Equation (2.11), and for , ,

- By Formulas (3.35) and (3.40), the proof of step (iii) is as follows, where (a) follows from the inequality in Equation (3.40), (b) follows from the concavity of [Lemma 1 (ii)], and (c) follows from the definition in Equation (3.35).
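Step (b) is an application of Jensen's inequality for a concave function. As a sketch only, writing $\Gamma$ for the concave function of Lemma 1 (ii) and $R_1,\dots,R_N$ for per-letter rates (both symbols are assumed here for illustration and may not match the notation of Equations (3.35) and (3.40)), a concavity step of this kind reads:

```latex
\[
\frac{1}{N}\sum_{i=1}^{N}\Gamma(R_i)
\;\le\;
\Gamma\!\left(\frac{1}{N}\sum_{i=1}^{N}R_i\right)
\]
```

The inequality says that averaging the rates first and then applying the concave function can only increase the value, which is what allows a sum of per-letter terms to be bounded by a single-letter expression.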

3.3.4. Proof of Step (iv)

3.3.5. Proof of Step (v)

4. Proof of Theorem 3 and Direct Half of Theorem 5

4.1. Proof of Theorem 3

4.1.1. Coding Construction

4.1.2. Proof of , , and

4.1.3. Proof of

4.2. Proof of the Direct Half of Theorem 5

4.2.1. Code Construction

4.2.2. Proofs of and

- where , and is the resulting error probability of a code used on the channel .

- Since is composed of N i.i.d. random variables with probability mass function , and is available at the encoder in a memoryless case, we have

4.2.3. Proof of

5. Conclusions

Acknowledgment

Appendix

Size Constraints of the Auxiliary Random Variables in Theorem 2

- (Proof of ) Define the following continuous scalar functions of : Since there are functions of , the total number of continuous scalar functions of is . Let . With these distributions , we have According to the support lemma ([11], p. 310), the random variable A can be replaced by a new one that takes at most different values while the expressions in Equations (1) and (2) are preserved.

- (Proof of ) Once the alphabet of A is fixed, we apply similar arguments to bound the alphabet of K, as follows. Let , and define the following continuous scalar functions of : Since there are functions of , the total number of continuous scalar functions of is . Let . With these distributions , we have According to the support lemma ([11], p. 310), for every fixed a, the random variable K can be replaced by a new one that takes at most different values while the expressions in Equations (3) and (4) are preserved. Therefore, is proved.

- (Proof of ) Once the alphabet of K is fixed, we apply similar arguments to bound the alphabet of U, as follows. Define the following continuous scalar functions of : Since there are functions of , the total number of continuous scalar functions of is . Let . With these distributions , we have According to the support lemma ([11], p. 310), for every fixed k, the random variable U can be replaced by a new one that takes at most different values while the expressions in Equations (5–7) are preserved. Therefore, is proved.
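For reference, the support lemma invoked in each of the three steps can be stated as follows. This is a paraphrase in notation chosen here (the symbols $\mathcal{P}(\mathcal{X})$, $f_j$, $\mu$, $\alpha_i$ and $P_i$ are assumptions for illustration), not a quotation of [11].

```latex
\textbf{Support lemma.} Let $\mathcal{P}(\mathcal{X})$ be the set of all
probability distributions on a finite set $\mathcal{X}$, and let
$f_1,\dots,f_k$ be real-valued continuous functions on
$\mathcal{P}(\mathcal{X})$. For any probability measure $\mu$ on the Borel
subsets of $\mathcal{P}(\mathcal{X})$ there exist
$P_1,\dots,P_k \in \mathcal{P}(\mathcal{X})$ and weights
$\alpha_1,\dots,\alpha_k \ge 0$ with $\sum_{i=1}^{k}\alpha_i = 1$ such that
\[
\int f_j(P)\,\mu(dP) \;=\; \sum_{i=1}^{k}\alpha_i\, f_j(P_i),
\qquad j = 1,\dots,k.
\]
```

Each application therefore replaces an auxiliary random variable by one that takes at most k values, where k is the number of continuous functions whose averages must be preserved. This is why each step above begins by counting those functions.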

Proof of Lemma 1

Proof of (i)

Proof of (ii)

Proof of (iii)

Proof of (iv)

Proof of Lemma 3

Proof of Lemma 3

References

- [1] Wyner, A.D. The wire-tap channel. Bell Syst. Tech. J. 1975, 54, 1355–1387.

- [2] Csiszár, I.; Körner, J. Broadcast channels with confidential messages. IEEE Trans. Inf. Theory 1978, 24, 339–348.

- [3] Leung-Yan-Cheong, S.K.; Hellman, M.E. The Gaussian wire-tap channel. IEEE Trans. Inf. Theory 1978, 24, 451–456.

- [4] Shannon, C.E. Channels with side information at the transmitter. IBM J. Res. Dev. 1958, 2, 289–293.

- [5] Kuznetsov, N.V.; Tsybakov, B.S. Coding in memories with defective cells. Probl. Peredachi Informatsii 1974, 10, 52–60.

- [6] Gel’fand, S.I.; Pinsker, M.S. Coding for channel with random parameters. Probl. Control Inf. Theory 1980, 9, 19–31.

- [7] Costa, M.H.M. Writing on dirty paper. IEEE Trans. Inf. Theory 1983, 29, 439–441.

- [8] Mitrpant, C.; Han Vinck, A.J.; Luo, Y. An achievable region for the Gaussian wiretap channel with side information. IEEE Trans. Inf. Theory 2006, 52, 2181–2190.

- [9] Chen, Y.; Han Vinck, A.J. Wiretap channel with side information. IEEE Trans. Inf. Theory 2008, 54, 395–402.

- [10] Merhav, N. Shannon’s secrecy system with informed receivers and its application to systematic coding for wiretapped channels. IEEE Trans. Inf. Theory, special issue on Inf.-Secur. 2008, 54, 2723–2734.

- [11] Csiszár, I.; Körner, J. Information Theory: Coding Theorems for Discrete Memoryless Systems; Academic: London, UK, 1981; pp. 123–124.

- [12] Yeung, R.W. Information Theory and Network Coding; Springer: New York, NY, USA, 2008; pp. 325–326.

© 2012 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Dai, B.; Luo, Y. Some New Results on the Wiretap Channel with Side Information. Entropy 2012, 14, 1671-1702. https://doi.org/10.3390/e14091671
