Algorithmic Relative Complexity

Abstract

:1. Introduction

2. Preliminaries

2.1. Shannon Entropy and Kolmogorov Complexity

2.2. Mutual Information and Other Correspondences

2.3. Compression-Based Approximations

3. Cross-Complexity and Relative Complexity

3.1. Cross-Entropy and Cross-Complexity

- The cross-entropy is lower bounded by the entropy , i.e., , as the cross-complexity in (11).

- The identity also holds up to an additive term (12). Note that the strongest , does not hold in the algorithmic framework. Consider the case of x being a substring of y, with y* containing the shortest code x* to output x, then = + O(1).

- The cross-entropy of X given Y and the entropy H(X) of X share the same upper bound log(N), where N is the number of possible outcomes of X, as algorithmic complexity and algorithmic cross-complexity. This property follows from the definition of algorithmic complexity and (10).

3.2. Relative Entropy and Relative Complexity

4. Computable Approximations

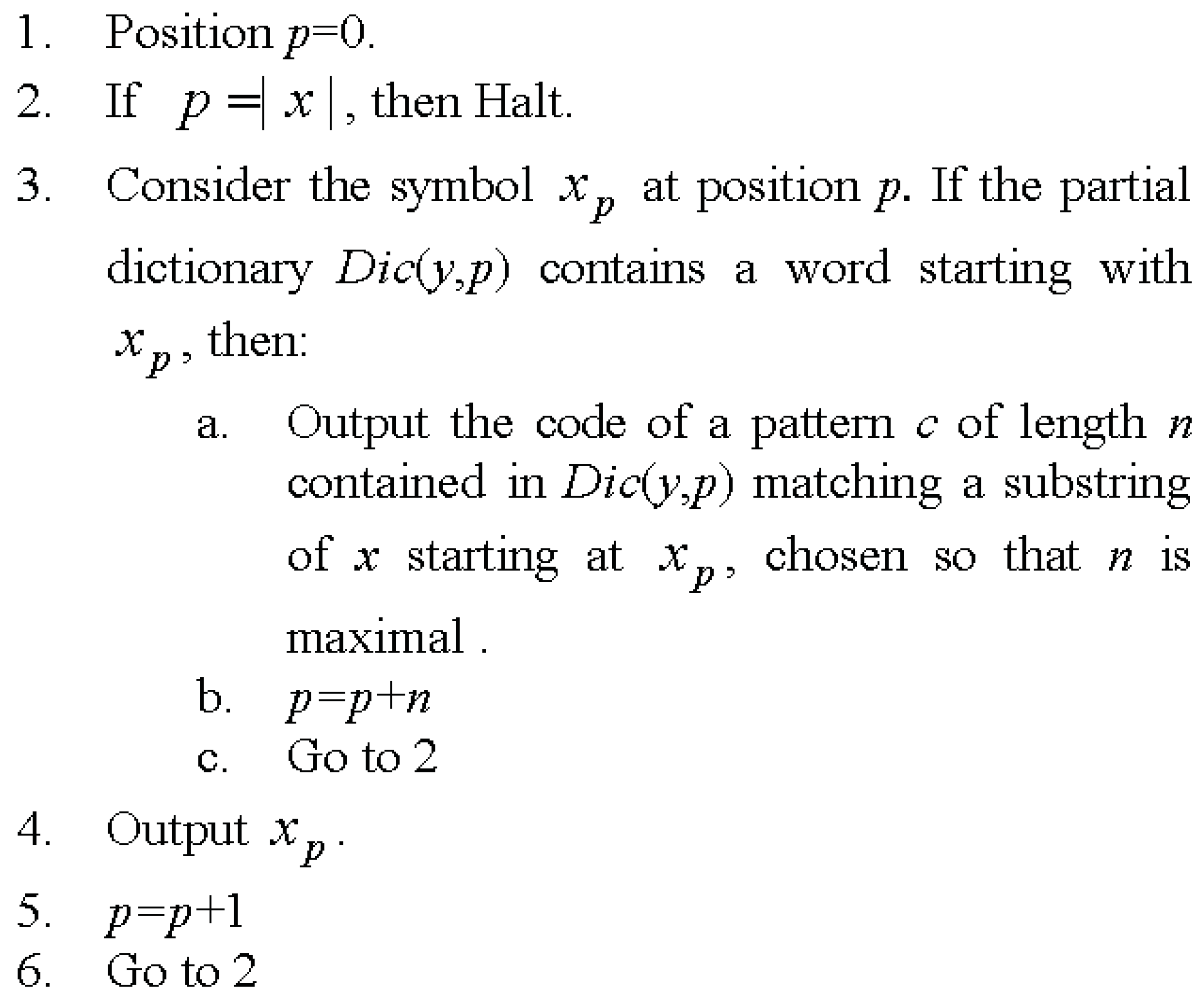

4.1. Computable Algorithmic Cross-Complexity

| A | B | ||||||

|---|---|---|---|---|---|---|---|

| a | a | ||||||

| b | ab = <256> | a | a | b | ab = <256> | A | a |

| c | bc = <257> | b | b | a | ba = <257> | B | b |

| a | ca = <258> | c | c | b | |||

| b | a | aba = <258> | <256> | <256> | |||

| c | abc = <259> | <256> | <256> | b | |||

| a | c | a | <256> | ||||

| b | cab = <260> | <258> | b | abab = <259> | <258> | ||

| c | <256> | a | <256> | ||||

| a | bca = <261> | <257> | c | b | bab = <260> | <257> | |

| b | a | <256> | |||||

| c | <256> | b | |||||

| <259> | c | <260> | <256> |

| Symbols | Bits per Symbol | Size in Bits | |

|---|---|---|---|

| A | 12 | 8 | 96 |

| B | 12 | 8 | 96 |

| 7 | 9 | 63 | |

| 6 | 9 | 54 | |

| 9 | 9 | 81 | |

| 7 | 9 | 63 |

4.2. Computable Algorithmic Relative Complexity

4.3. Symmetric Relative Complexity

5. Applications

5.1. Application to Authorship Attribution

| Author | Texts | Successes |

|---|---|---|

| Dante Alighieri | 8 | 8 |

| D’Annunzio | 4 | 4 |

| Deledda | 15 | 15 |

| Fogazzaro | 5 | 3 |

| Guicciardini | 6 | 6 |

| Machiavelli | 12 | 12 |

| Manzoni | 4 | 4 |

| Pirandello | 11 | 11 |

| Salgari | 11 | 11 |

| Svevo | 5 | 5 |

| Verga | 9 | 9 |

| TOTAL | 90 | 88 |

| Method | Accuracy (%) |

|---|---|

| 97.8 | |

| Relative Entropy | 93.3 |

| NCD (zlib) | 94.4 |

| NCD (bzip2) | 93.3 |

| NCD (blocksort) | 96.7 |

| Ziv-Merhav | 95.4 |

5.2. Satellite Images Classification

| Class | NCD(zlib) | NCD(Jpg2000) | |

|---|---|---|---|

| Clouds | 97 | 95 | 90.9 |

| Sea | 89.5 | 90 | 92.6 |

| Desert | 85 | 87 | 88 |

| City | 97 | 98.5 | 100 |

| Forest | 100 | 100 | 97 |

| Fields | 44.5 | 71.3 | 91 |

| Average | 85.5 | 90.3 | 93.3 |

6. Conclusions

Acknowledgements

References

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423. [Google Scholar] [CrossRef]

- Li, M.; Vitányi, P.M.B. An Introduction to Kolmogorov Complexity and Its Applications, 3rd edition; Springer: New York, NY, USA, 2008; Chapters 2, 8. [Google Scholar]

- Li, M.; Chen, X.; Li, X.; Ma, B.; Vitányi, P.M.B. The similarity metric. IEEE Trans. Inform. Theor. 2004, 50, 3250–3264. [Google Scholar] [CrossRef]

- Cilibrasi, R. Statistical Inference through Data Compression; Lulu.com Press: Raleigh, NC, USA, 2006. [Google Scholar]

- Ziv, J.; Merhav, N. A Measure of relative entropy between individual sequences with application to universal classification. IEEE Trans. Inform. Theor. 1993, 39, 1270–1279. [Google Scholar] [CrossRef]

- Benedetto, D.; Caglioti, E.; Loreto, V. Language trees and zipping. Phys. Rev. Lett. 2002, 88, 048702. [Google Scholar] [CrossRef] [PubMed]

- Solomonoff, R. A formal theory of inductive inference. Inform. Contr. 1964, 7, 1–22. [Google Scholar] [CrossRef]

- Kolmogorov, A.N. Three approaches to the quantitative definition of information. Probl. Inform. Transm. 1965, 1, 1–7. [Google Scholar] [CrossRef]

- Chaitin, G.J. On the length of programs for computing finite binary sequences: Statistical considerations. J. Assoc. Comput. Mach. 1969, 16, 145–159. [Google Scholar] [CrossRef]

- Vereshchagin, N.K.; Vitányi, P.M.B. Rate distortion and denoising of individual data using Kolmogorov complexity. IEEE Trans. Inform. Theor. 2010, 56, 3438–3454. [Google Scholar] [CrossRef]

- Chen, X.; Francia, B.; Li, M.; McKinnon, B.; Seker, A. Shared Information and Program Plagiarism detection. IEEE Trans. Inform. Theor. 2004, 50, 1545–1551. [Google Scholar] [CrossRef]

- Cilibrasi, R.; Vitányi, P.M.B. Clustering by Compression. IEEE Trans. Inform. Theor. 2005, 51, 1523–1545. [Google Scholar] [CrossRef]

- Keogh, E.J.; Lonardi, S.; Ratanamahatana, C. Towards parameter-free data mining. Proc. SIGKDD 2004, 10, 206–215. [Google Scholar]

- Hutter, M. Algorithmic complexity. Scholarpedia 2008, 3, 2573. [Google Scholar] [CrossRef]

- Kullback, S.; Leibler, R.A. On information and sufficiency. Ann. Math. Stat. 1951, 22, 79–86. [Google Scholar] [CrossRef]

- MacKay, D.J.C. Information Theory, Inference, and Learning Algorithms; Cambridge University Press: Cambridge, UK, 2003; Chapter 2. [Google Scholar]

- Welch, T.A. A technique for high-performance data compression. Computer 1984, 17, 8–19. [Google Scholar] [CrossRef]

- Watanabe, T.; Sugawara, K.; Sugihara, H. A new pattern representation scheme using data compression. IEEE Trans. Patt. Anal. Machine Intell. 2002, 24, 579–590. [Google Scholar] [CrossRef]

- The Liber Liber dataset. Available online: http://www.liberliber.it (accessed on 31 March 2011).

- Coutinho, D.P.; Figueiredo, M. Information theoretic text classification using the Ziv-Merhav method. Proc. IbPRIA 2005, 2, 355–362. [Google Scholar]

- Parodos.it. Grazia Deledda’s biography. Available online: http://www.parodos.it/books/grazia_deledda.htm (accessed on 18 April 2011). (In Italian)

- Cerra, D.; Mallet, A.; Gueguen, L.; Datcu, M. Algorithmic information theory based analysis of earth observation images: An assessment. IEEE Geosci. Remote Sens. Lett. 2010, 7, 9–12. [Google Scholar] [CrossRef]

- Cerra, D.; Datcu, M. Algorithmic cross-complexity and conditional complexity. Proc. DCC 2009, 19, 342–351. [Google Scholar]

© 2011 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Share and Cite

Cerra, D.; Datcu, M. Algorithmic Relative Complexity. Entropy 2011, 13, 902-914. https://doi.org/10.3390/e13040902

Cerra D, Datcu M. Algorithmic Relative Complexity. Entropy. 2011; 13(4):902-914. https://doi.org/10.3390/e13040902

Chicago/Turabian StyleCerra, Daniele, and Mihai Datcu. 2011. "Algorithmic Relative Complexity" Entropy 13, no. 4: 902-914. https://doi.org/10.3390/e13040902

APA StyleCerra, D., & Datcu, M. (2011). Algorithmic Relative Complexity. Entropy, 13(4), 902-914. https://doi.org/10.3390/e13040902