Abstract

Deep learning has emerged as a powerful tool for medical image analysis and diagnosis, demonstrating high performance on tasks such as cancer detection. This literature review synthesizes current research on deep learning techniques applied to lung cancer screening and diagnosis, highlighting key advances, limitations, and future directions. We prioritized studies utilizing major public datasets, such as LIDC, LUNA16, and JSRT, to provide a comprehensive overview of the field. We focus on deep learning architectures, including 2D and 3D convolutional neural networks (CNNs), dual-path networks, and vision transformers (ViT), as well as Natural Language Processing (NLP) methods. Across the reviewed studies, deep learning models consistently outperformed traditional machine learning techniques in terms of accuracy, sensitivity, and specificity for lung cancer detection in CT scans. This is attributed to the ability of deep learning models to automatically learn discriminative features from medical images and model complex spatial relationships. However, several challenges remain to be addressed before deep learning models can be widely deployed in clinical practice. These include model dependence on training data, generalization across datasets, integration of clinical metadata, and model interpretability. Overall, deep learning demonstrates great potential for lung cancer detection and precision medicine, but more research is required to rigorously validate models and address risks. This review provides key insights for both computer scientists and clinicians working on deep learning in medical image analysis.

1. Introduction

Cancer is a leading cause of mortality globally [1], and early detection is pivotal for improving patient outcomes. Conventional techniques for cancer diagnosis, including visual examination and biopsy [2], can be time-consuming, subjective, and prone to error. Deep machine learning (deep ML) is a powerful recent tool with the potential to transform cancer diagnosis.

Deep ML algorithms are trained on large datasets of medical images, text, and other data. This allows them to learn to identify patterns that are invisible to the human eye. In recent years, deep ML algorithms have been shown to achieve state-of-the-art performance (SOAP) in cancer detection, often outperforming human experts [3]. This has led to a number of benefits, including:

- Increased accuracy of cancer detection: Deep ML algorithms can identify cancer with greater accuracy than traditional methods. This can lead to earlier detection and treatment, which can improve patient outcomes.

- Reduced cost of cancer diagnosis: Deep ML algorithms can analyze large datasets of medical images and data, reducing the cost of cancer diagnosis.

- Improved patient experience: Deep ML algorithms can automate cancer diagnosis, reducing the time and stress that patients experience.

Despite the numerous advantages of deep machine learning, certain challenges remain to be tackled. These challenges include:

- Data availability: Deep ML algorithms require substantial datasets of medical images and related information for training. This can pose a difficulty, as not every medical institution has access to such datasets.

- Bias: Deep ML algorithms may exhibit bias if trained on datasets that are not representative of the population. This can result in inaccurate cancer detection.

- Explainability: Explaining the decision-making of deep ML algorithms can be difficult. This can lead to distrust in their outputs.

However, the field of deep learning has witnessed remarkable advancements, driven by the availability of vast amounts of data, significant computational power, and breakthroughs in neural network architectures. This progress has paved the way for the application of deep learning techniques in various domains, including medical diagnosis [4]. Within the realm of medical imaging, deep learning algorithms have demonstrated exceptional capabilities in detecting and classifying diseases, particularly in cancer diagnosis. The unique ability of deep learning algorithms to automatically learn intricate patterns and features from complex datasets has enabled the development of robust and accurate models for cancer detection. By training on large-scale datasets of medical images, these algorithms can discern subtle nuances indicative of cancerous growth, facilitating early detection and intervention.

Moreover, deep learning techniques have transcended traditional image-based approaches by incorporating multi-modal data sources. By integrating medical images, clinical reports, genomics, and other patient-related information, deep learning algorithms can extract comprehensive features and provide a holistic view of cancer diagnosis. This integration of diverse data modalities not only enhances the accuracy of detection but also contributes to a more personalized and precise approach to cancer management.

Despite the rapid progress in this field, several challenges need to be addressed to ensure the widespread adoption and reliability of deep learning in cancer diagnosis. These challenges include the need for standardized protocols, validation on diverse populations, interpretation of deep learning model outputs, and integration into clinical workflows.

Several recent reviews have explored the application of AI in lung cancer detection. A comprehensive study [5] underscored the use of AI in lung cancer screening via chest X-ray (CXR) and chest CT, highlighting the FDA-approved AI programs that are changing detection methods. Dodia et al. [6] provide an overview of lung cancer, along with publicly available benchmark datasets for research purposes. They also compare recent research in medical image analysis of lung cancer using deep learning algorithms, considering technical aspects such as efficiency, advantages, and limitations. Ref. [7] provides an overview of recent state-of-the-art deep learning algorithms and architectures proposed as computer-aided diagnosis (CAD) systems for lung cancer detection in CT scans. The authors divide the CAD systems into two categories, nodule detection systems and false positive reduction systems, discuss the main characteristics of the different techniques, and analyze their performance. Another review [8] provided an in-depth examination of AI for improving nodule detection and classification, emphasizing the significant role of neural networks in early-stage detection. A further review [9] summarized applications of various AI algorithms, including Natural Language Processing (NLP), Machine Learning, and Deep Learning, elucidating their value in early diagnosis and prognosis. Qureshi et al. [10] highlighted specific advances in deep learning, such as AlphaFold2 and deep generative models, in understanding drug resistance mechanisms in the treatment of non-small cell lung cancer (NSCLC). Al-Tashi et al. [11] reviewed works that highlight the contribution of deep learning, owing to its ability to process large quantities of data and identify complex patterns, to distinguishing between prognostic and predictive biomarkers in personalized medicine.

The main contributions of this paper diverge from existing literature in several key aspects:

- A Unique Grouping of Articles: Unlike traditional reviews that often categorize research based on methods or results, this review groups articles by the types of databases utilized, offering a fresh perspective on the research landscape. Examining the types of databases that researchers use gives insight into the availability, quality, and representativeness of data, as well as the limitations of current research. This information can be used to identify gaps in the literature, suggest new avenues for research, and develop more robust and reliable research methods.

- Detailed Presentation of Widely-Used Databases: This review provides an extensive examination of the most commonly used databases in lung cancer detection, shedding light on their features, applications, and significance.

- Integration of Recent Research: In a rapidly evolving field, this review incorporates recent articles on the subject, ensuring a contemporary understanding of the latest developments and trends.

This paper is organized into distinct sections to provide a comprehensive review of AI in lung cancer diagnosis. Section 2 presents the methodology used for the selection and collection of articles, including the steps taken, the search strategies used, and the criteria for inclusion and exclusion. Section 3 describes the metrics used to evaluate the models in the selected articles. Section 4 focuses on the public databases used in these selected works. Section 5 is divided into two sub-sections. Section 5.1 presents articles that have employed public databases in their research. Each of these studies is described in detail, highlighting their methods, findings, and implications for the field. Section 5.2 focuses on articles that have utilized private databases, with emphasis on those that have incorporated segmentation techniques, classification methods, and Natural Language Processing (NLP). Finally, Section 6 critically analyses how these different databases and techniques have influenced the results and how they can potentially contribute to advancements in the field of AI for lung cancer diagnosis.

2. Methodology

The main objective was to gather relevant articles encompassing the keywords “lung cancer”, “deep learning”, “transformer”, “NLP”, “diagnosis”, “machine learning”, “chest” and “computed tomography”.

To perform this review of the literature on deep machine learning for medical diagnosis, with a specific focus on its application to lung cancer detection, a systematic methodology was followed. The search process began with several academic databases, including PubMed, IEEE Xplore, and Google Scholar. Combinations of the above keywords were used to retrieve a wide range of articles. In particular, these combinations were: “Lung cancer + deep learning + transformer + diagnosis”, “lung cancer + Machine learning + chest + Computed Tomography + diagnosis”, “Lung cancer + deep learning + diagnosis + chestray”, “Lung cancer + deep learning + diagnosis + NLP”, and “Deep Learning lung cancer”. The search was not constrained by date range, encompassing both recent and older publications to provide a comprehensive overview of the topic.

The initial search produced a large number of articles, which were then refined by scrutinizing their titles, abstracts and keywords for relevance. The selection process involved eliminating articles that were not directly related to deep machine learning for lung cancer diagnosis or that did not use relevant techniques, such as transformers and machine learning algorithms.

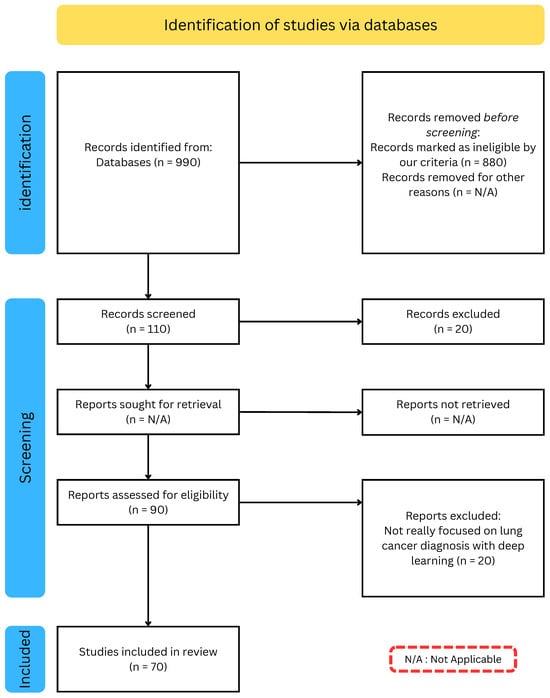

Following this selection process, a final set of articles was obtained for the literature review, ensuring a diverse representation of studies addressing the intersection of deep learning, lung cancer and medical diagnosis. The PRISMA diagram [12] in Figure 1 summarizes the successive stages of our literature search, from the initial identification of articles to the final selection of studies included in this review.

Figure 1.

PRISMA diagram: Systematic Selection Process for our Literature Review.

3. Performance Metrics

Evaluation metrics play an important role in measuring the performance of deep learning algorithms and determining their clinical utility [13]. Commonly used evaluation metrics include Accuracy, Sensitivity [14], Specificity [14], Receiver Operating Characteristic (ROC) curves [15], Precision and Recall [16], F1-score [17], Area Under the ROC Curve (AUC) [18], Dice Similarity Coefficient (DSC) [19], and Intersection over Union (IoU). Table A1 in Appendix A presents metrics commonly used in lung cancer diagnosis for different tasks.

The choice of metrics plays a crucial role in the development and evaluation of deep learning models, particularly in the medical domain. Selecting appropriate metrics helps ensure that models are accurate, reliable, and clinically relevant, whereas poorly chosen metrics can lead to flawed models that are unsuitable for clinical use. In the context of lung cancer diagnosis, segmentation metrics such as the Dice Similarity Coefficient and Intersection over Union assess the accuracy of segmentation masks and thus the localization of lung tumors. Similarly, classification metrics such as Classification Accuracy and F1-score evaluate the overall performance of models in detecting lung tumors. Different metrics have different strengths and weaknesses and may be better suited to specific applications or datasets, so their selection should be considered carefully when developing and evaluating deep learning models for lung cancer diagnosis and other medical applications.
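To make the definitions of these metrics concrete, the following minimal sketch computes the classification metrics from binary labels and the two segmentation metrics from flattened binary masks. It is an illustration only: the function names are hypothetical, labels are assumed to be encoded as 1 (positive) and 0 (negative), and a ratio with an empty denominator is reported as 0.0 by convention.

```python
def _ratio(numerator, denominator):
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def confusion_counts(y_true, y_pred):
    """Count TP, FP, TN, FN for binary labels (1 = positive, 0 = negative)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, tn, fn


def classification_metrics(y_true, y_pred):
    """Accuracy, sensitivity (recall), specificity, precision and F1-score."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    precision = _ratio(tp, tp + fp)
    sensitivity = _ratio(tp, tp + fn)
    return {
        "accuracy": _ratio(tp + tn, tp + fp + tn + fn),
        "sensitivity": sensitivity,
        "specificity": _ratio(tn, tn + fp),
        "precision": precision,
        "f1": _ratio(2 * precision * sensitivity, precision + sensitivity),
    }


def dice_and_iou(mask_true, mask_pred):
    """Dice Similarity Coefficient and IoU for two flattened binary masks."""
    intersection = sum(1 for t, p in zip(mask_true, mask_pred) if t and p)
    size_true = sum(1 for t in mask_true if t)
    size_pred = sum(1 for p in mask_pred if p)
    union = size_true + size_pred - intersection
    dice = _ratio(2 * intersection, size_true + size_pred)
    iou = _ratio(intersection, union)
    return dice, iou
```

For the same pair of masks, the Dice coefficient is never smaller than the IoU, which is one reason why segmentation scores reported with different metrics are not directly comparable across studies.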

4. Datasets

In the field of deep learning for lung cancer diagnosis, having access to high-quality datasets is crucial for developing accurate and reliable models [20]. These datasets typically consist of medical images, as well as associated clinical data, such as patient demographics and medical history. By training deep learning models on these datasets, researchers and clinicians can improve their ability to accurately detect and diagnose lung cancer, ultimately improving patient outcomes. By understanding the strengths and limitations of each dataset, researchers and clinicians can make informed decisions about which dataset is most appropriate for their specific research or clinical needs. Table 1 summarizes the publicly available databases used for lung cancer diagnosis in the reviewed articles.

Table 1.

Databases used for lung cancer diagnosis.

5. Deep Learning Approach for Lung Cancer Diagnosis

As deep learning techniques continue to revolutionize the field of medical imaging, researchers have increasingly turned to large-scale databases to train and validate their algorithms. Many studies have been done to diagnose lung cancer using different datasets, both public and private. Each dataset has its own unique characteristics and challenges. To provide a comprehensive overview of the state-of-the-art in this field, this section presents a review of the relevant literature organized by the databases used in each study. By examining the approaches and results of each study in turn, we aim to identify common trends, best practices, and areas for future research in the use of deep learning for lung cancer diagnosis.

5.1. Deep Learning Techniques Using Public Databases

This section of the review aims to provide a comprehensive overview of research studies focused on the segmentation, classification, and detection of Regions of Interest (ROIs) in lung cancer using data from public databases. Appendix A Table A2 summarizes the reviewed methods for lung cancer diagnosis using Public Datasets.

5.1.1. Deep Learning Techniques for Lung Cancer Using LIDC Dataset

One of the most widely used databases for evaluating deep learning algorithms in the context of lung cancer diagnosis is the LIDC dataset. Since its release in 2011, the LIDC dataset has been used in numerous studies to develop and test deep learning algorithms for automated nodule detection, classification, and segmentation. In this section, we review a selection of studies that have utilized the LIDC database to train and validate deep learning models for lung cancer diagnosis. By examining the methods and results of these studies, we aim to identify key insights and challenges in using the LIDC dataset.

Da et al. [33] explored the performance of deep transfer learning for the classification of lung nodule malignancy. The study used CNNs, including VGG16, VGG19, MobileNet, Xception, InceptionV3, ResNet50, Inception-ResNet-V2, DenseNet169, DenseNet201, NASNetMobile, and NASNetLarge, as feature extractors, and then classified the resulting deep features using various classifiers, including Naive Bayes, MultiLayer Perceptron (MLP), Support Vector Machine (SVM), K-Nearest Neighbors (KNN), and Random Forest (RF). The best combination of deep extractor and classifier was found to be CNN-ResNet50 with SVM-RBF, achieving an accuracy of 88.41% and an AUC of 93.19%. These results are comparable to related works even though the CNN was pre-trained only on non-medical images. The study therefore indicates that deep transfer learning is a relevant strategy for extracting representative imaging biomarkers for the classification of lung nodule malignancy in thoracic CT images.

Song et al. [34] addressed the problem of inaccurate lung cancer diagnosis caused by its dependence on the experience of physicians. To improve diagnostic accuracy, the study applied deep learning techniques to medical imaging. Specifically, it compared the prediction performance of three deep neural networks (CNN, DNN, and SAE) for classifying benign and malignant pulmonary nodules on CT images. The results showed that the CNN outperformed the other two networks with an accuracy of 84.15%, sensitivity of 83.96%, and specificity of 84.32%. The study indicated that the proposed method can be generalized to other medical imaging tasks to design high-performance CAD systems in the future.

Zhang et al. [35] aimed to tackle the problem of classifying lung nodules in 3D CT images for computer-aided diagnosis (CAD) systems. The early detection of lung nodules is critical for improving the survival rate of lung cancer patients. The proposed approach applied deep CNNs (DCNNs) to perform an end-to-end classification of raw 3D nodule CT patches, eliminating the need for nodule segmentation and feature extraction in the CAD system. State-of-the-art CNN models such as VGG16, VGG19, ResNet50, DenseNet121, MobileNet, Xception, NASNetMobile, and NASNetLarge were modified into 3D-CNN models for this study. Experimental results showed that DenseNet121 and Xception achieved the best results for lung nodule diagnosis in terms of accuracy (87.77%), specificity (92.38%), precision (87.88%), and AUC (93.79%).

Shetty et al. [36] presented a new technique for accurate segmentation and classification of lung cancer using CT images by applying optimized deformable models and deep learning techniques. The proposed method involved pre-processing, lung lobe segmentation, lung cancer segmentation, data augmentation, and lung cancer classification. In the pre-processing step, median filtering was used, while Bayesian fuzzy clustering was applied for segmenting the lung lobes. The lung cancer segmentation was carried out using Water Cycle Sea Lion Optimization (WSLnO) based deformable model. To improve the classification accuracy, the data augmentation process was used, which involved augmenting the size of the segmented region. The lung cancer classification was done effectively using Shepard Convolutional Neural Network (ShCNN), which was trained by WSLnO algorithm. The proposed WSLnO algorithm was designed by incorporating Water cycle algorithm (WCA) and Sea Lion Optimization (SLnO) algorithm. The proposed technique showed improved performance in terms of accuracy, sensitivity, specificity, and average segmentation accuracy. The average segmentation accuracy achieved was 0.9091, while the accuracy, sensitivity, and specificity values were 0.9303, 0.9123, and 0.9133, respectively. The combination of optimized deformable model-based segmentation and deep learning techniques proved to be effective in accurately detecting and classifying lung cancer using CT images.
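As an illustration of the median filtering step used for pre-processing, the sketch below applies a square median filter to a 2D image stored as a list of rows. It is not the implementation of [36]: the 3 × 3 window size and the border handling (using only the neighbours that fall inside the image) are assumptions, and the function name is hypothetical.

```python
from statistics import median


def median_filter_2d(image, size=3):
    """Replace each pixel by the median of its size x size neighbourhood.

    `image` is a list of equally long rows of intensities. At the borders,
    only the neighbours that lie inside the image are used (an assumption).
    """
    half = size // 2
    rows, cols = len(image), len(image[0])
    filtered = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [
                image[rr][cc]
                for rr in range(max(0, r - half), min(rows, r + half + 1))
                for cc in range(max(0, c - half), min(cols, c + half + 1))
            ]
            filtered[r][c] = median(window)
    return filtered
```

Because the median ignores isolated extreme values, a single bright noise pixel surrounded by uniform tissue is removed, while step edges are preserved better than with a mean filter.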

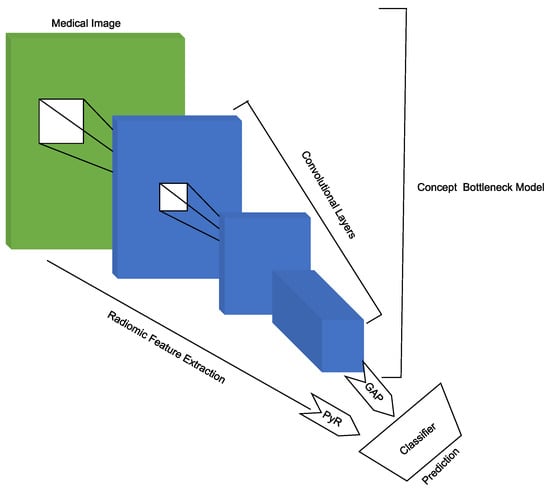

Brocki et al. [37] highlight the limitations of deep neural networks (DNNs) in clinical applications for cancer diagnosis and prognosis due to their lack of interpretability. To address this issue, the authors propose ConRad, an interpretable classifier that combines expert-derived radiomics and DNN-predicted biomarkers for CT scans of lung cancer. The proposed model is evaluated using CT images of lung tumors and compared to CNNs acting as black box classifiers. The ConRad models using nonlinear SVM and logistic regression with Lasso outperform the others in five-fold cross-validation, with interpretability being the primary advantage of ConRad. The increased transparency of the ConRad model allows for better-informed diagnoses by radiologists and oncologists and can potentially help in discovering critical failure modes of black box classifiers. However, the study's limitations include its focus on a single dataset and the lack of external validation, which warrant further investigation. Nonetheless, the proposed model demonstrates the potential for the broader incorporation of explainable AI into radiology and oncology, with the code available at [38] for reproducibility. Figure 2 presents the different steps used to obtain these results.

Figure 2.

Scheme of image processing, feature extraction, and t-distributed stochastic neighbor embedding (t-SNE) visualization in [37].

Hua et al. [39] aim to simplify the image analysis pipeline of conventional CAD with deep learning techniques for differentiating pulmonary nodules on CT images. The authors introduce two deep learning models, namely a deep belief network (DBN) and a CNN, in the context of nodule classification, and compare them with two baseline methods that involve feature computing steps. The LIDC dataset is used to classify the malignancy of lung nodules without computing morphology and texture features. The experimental results indicate that the proposed deep learning framework outperforms conventional CAD frameworks based on hand-crafted features.

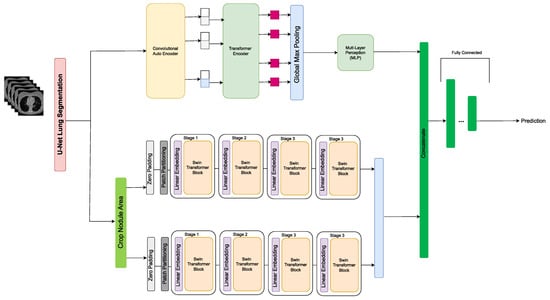

Khademi et al. [40] proposed a novel hybrid discovery Radiomics framework that integrates temporal and spatial features extracted from non-thin chest CT slices to predict Lung Adenocarcinoma (LUAC) malignancy with minimum expert involvement. The proposed hybrid transformer-based framework, CAET-SWin, consists of two parallel paths designed around the self-attention mechanism: the first is the Convolutional Auto-Encoder (CAE) Transformer path, which captures informative features related to inter-slice relations via a modified Transformer architecture, and the second is the Shifted Window (SWin) Transformer path, which extracts nodule-related spatial features from a volumetric CT scan. The extracted temporal and spatial features are then fused through a fusion path to classify LUACs. Experimental results on a dataset of 114 pathologically proven Sub-Solid Nodules (SSNs) showed that CAET-SWin achieved an accuracy of 82.65%, sensitivity of 83.66%, and specificity of 81.66% using 10-fold cross-validation. Compared to its radiomics-based counterpart, CAET-SWin significantly improved the reliability of the invasiveness prediction task while increasing the accuracy by 1.65% and the sensitivity by 3.66%. Figure 3 presents the pipeline proposed by Khademi et al.

Figure 3.

Pipeline of the CAET-SWin Transformer in [40].
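Several of the studies above report results under k-fold cross-validation (five-fold in [37], 10-fold in [40]). The sketch below shows one way the sample indices can be partitioned for such an evaluation. It is a generic illustration rather than the protocol of any reviewed study: the shuffling seed, the absence of class stratification, and the function name are all assumptions.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Split range(n_samples) into k (train, test) index pairs.

    Indices are shuffled once with a fixed seed, then dealt into k folds whose
    sizes differ by at most one. Each fold serves exactly once as the test set.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    splits = []
    for i, test_idx in enumerate(folds):
        train_idx = [
            idx
            for j, fold in enumerate(folds)
            if j != i
            for idx in fold
        ]
        splits.append((train_idx, test_idx))
    return splits
```

With 114 samples and k = 10, as in the SSN dataset of [40], this yields four test folds of 12 samples and six of 11. The reported metric is then typically the average over the k test folds.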

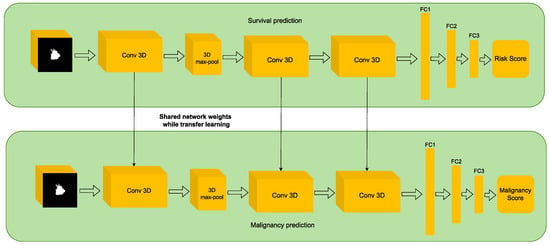

Mukherjee et al. [41] developed LungNet, a shallow CNN to predict the outcomes of patients with NSCLC. LungNet was trained and evaluated on four independent cohorts of patients with NSCLC from different medical centers. The results showed that the outcomes predicted by LungNet were significantly associated with overall survival in all four independent cohorts, with concordance indices of 62%, 62%, 62%, and 58% on cohorts 1, 2, 3, and 4, respectively. Additionally, via transfer learning, LungNet classified benign versus malignant nodules on the LIDC dataset with improved performance (AUC = 85%) compared to training from scratch (AUC = 82%). Overall, the results suggest that LungNet can be used as a non-invasive predictor for prognosis in patients with NSCLC, facilitating the interpretation of CT images for lung cancer stratification and prognostication. Figure 4 shows the process of making predictions using LungNet. The input to LungNet is a CT image of a lung tumor. The network extracts features from the image using a series of convolutional layers. These features are then passed through a fully connected layer to make a prediction about the patient's prognosis. The code for LungNet is available at [42].

Figure 4.

The proposed LungNet architecture in [41].
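The concordance index reported for LungNet measures how well predicted risk scores rank patients by survival time. The following minimal sketch computes Harrell's concordance index for right-censored data. It illustrates the metric only, not the evaluation code of [41]: the function name, the convention that a higher risk score means shorter expected survival, and the half credit given to tied risk scores are assumptions.

```python
def concordance_index(times, events, risks):
    """Harrell's concordance index for right-censored survival data.

    times  : observed follow-up time of each patient
    events : 1 if the event (death) was observed, 0 if the patient was censored
    risks  : predicted risk score (higher = shorter expected survival)

    A pair (i, j) is comparable when patient i had an observed event strictly
    before the follow-up time of patient j. The pair is concordant when the
    model assigned the higher risk to patient i; ties in risk count as 0.5.
    """
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        if not events[i]:
            continue  # a censored patient cannot be the earlier member of a pair
        for j in range(n):
            if times[i] < times[j]:
                comparable += 1
                if risks[i] > risks[j]:
                    concordant += 1.0
                elif risks[i] == risks[j]:
                    concordant += 0.5
    if comparable == 0:
        raise ValueError("no comparable pairs: the index is undefined")
    return concordant / comparable
```

A value of 0.5 corresponds to random ranking and 1.0 to perfect ranking, which gives context for the indices reported on the four cohorts.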

Da et al. [43] addressed the problem of early detection in lung cancer. To improve early detection and increase survival rates, the study explored the performance of deep transfer learning from non-medical images on lung nodule malignancy classification tasks. The authors preprocessed the data by resizing and normalizing the images and extracting patches around the nodules. Using various convolutional neural networks trained on the ImageNet dataset, the study achieved the highest AUC value of 93.10% using the ResNet50 deep feature extractor and the SVM RBF classifier. In addition to comparing different convolutional neural network architectures and classifiers, the authors also performed ablation experiments to investigate the contribution of different components in their method such as the use of data augmentation and different types of pretraining. They found that data augmentation and using a more complex pretraining dataset ImageNet-21k [44] improved the results.

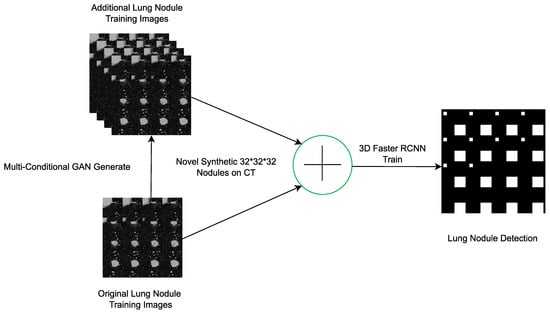

Han et al. [45] proposed a data augmentation method to boost sensitivity in 3D object detection. The authors suggested using 3D conditional GANs to synthesize realistic and diverse 3D images as additional training data. Specifically, they proposed a 3D Multi-Conditional GAN (MCGAN) to generate 32 × 32 × 32 nodules placed naturally on lung CT images. The MCGAN model employed two discriminators for conditioning: the context discriminator learned to classify real versus synthetic nodule/surrounding pairs with noise box-centered surroundings, while the nodule discriminator attempted to classify real versus synthetic nodules with size/attenuation conditions. The results indicated that 3D CNN-based detection could achieve higher sensitivity under any nodule size/attenuation at fixed false positive (FP) rates when trained with the synthetic data. The MCGAN-generated nodules helped overcome the paucity of medical data, and even expert physicians failed to distinguish them from real ones in a Visual Turing Test. The bounding box-based 3D MCGAN model could generate diverse CT-realistic nodules at a desired position/size/attenuation, blending naturally with surrounding tissues. This was attributed to the MCGAN's good generalization ability, which came from multiple discriminators with mutually complementary loss functions along with informative size/attenuation conditioning. Figure 5 illustrates how MCGAN generates realistic and diverse nodules on lung CT scans at a desired position/size/attenuation based on bounding boxes, and how the CNN-based object detector uses them as additional training data.

Figure 5.

3D MCGAN-based DA for better object detection in [45].

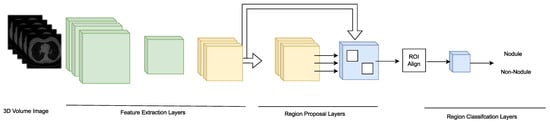

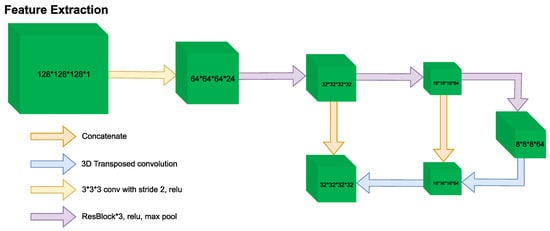

Katase et al. [46] worked on the development of a CAD system that automatically detects lung nodules in CT images. The main challenge faced by radiologists is identifying small nodule shadows in 3D volume images, which can often result in missed nodules. To address this issue, the researchers used deep learning technology to design an automated lung nodule detection system that is robust to imaging conditions. To evaluate the detection performance of the system, the researchers used several public datasets, including LIDC-IDRI and SPIE-AAPM, as well as a private database of 953 scans and 1177 chest CT scans from Kyorin University Hospital. The system achieved a sensitivity of 98.00/96.00% at 3.1/7.25 false positives per case on the public datasets, and sensitivity did not change within the range of practical doses in a phantom study. To investigate the clinical usefulness of the CAD system, a reader study was conducted with 10 doctors, including inexperienced and expert readers. The study showed that using the CAD system as a second reader significantly improved the detection of nodules that could be picked up clinically (p = 0.026). The analysis was performed using the Jackknife Free-Response Receiver Operating Characteristic (JAFROC). As shown in Figure 6, feature extraction layers extract characteristics from 3D image data by 3D convolution, region proposal layers output multiple candidate regions, and region classification layers determine whether each candidate region is a nodule, which constitutes the final output.

Figure 6.

Overview of the Feature extraction layers network in [46].

Tan et al. [47] proposed a new approach to automated detection of juxta-pleural pulmonary nodules in chest CT scans. The article highlighted the challenge of using CNN with limited datasets which can lead to overfitting and presented a novel knowledge-infused deep learning-based system for automated detection of nodules. The proposed CAD methodology infused engineered features, specifically texture features into the deep learning process to overcome the dataset limitation challenge. The system significantly reduced the complications of traditional procedures for pulmonary nodules detection while retaining and even outperforming the state-of-the-art accuracy. The methodology utilized a two-stage fusion method (early fusion and late fusion) which enhanced scalability and adaptation capability by allowing for the easy integration of more useful expert knowledge in the CNN-based model for other medical imaging problems. The results demonstrated that the proposed methodology achieved a sensitivity of 88.00% with 1.9 false positives per scan and a sensitivity of 94.01% with 4.01 false positives per scan. The methodology showed high performance compared to both existing CNN-based approaches and engineered feature-based classifications achieving an AUC of 0.82 with an end-to-end voting-based CNN method for lung nodule detection.
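Detection results such as "a sensitivity of 88.00% with 1.9 false positives per scan" are operating points on a free-response ROC (FROC) curve. The sketch below computes one such point for a given confidence threshold. It is a simplified illustration, not the evaluation protocol of [46] or [47]: the data layout (each candidate is a score paired with the identifier of the ground-truth nodule it matches, or `None` for a false positive) and the function name are assumptions, and the matching of candidates to nodules is taken as already done.

```python
def froc_point(scans, threshold):
    """Sensitivity and false positives per scan at one confidence threshold.

    `scans` is a list of dicts with two keys:
      "n_nodules"  : number of ground-truth nodules in the scan
      "candidates" : list of (score, nodule_id) pairs, where nodule_id is the
                     matched ground-truth nodule or None for a false positive
    Several candidates hitting the same nodule count as a single detection.
    """
    total_nodules = 0
    detected = 0
    false_positives = 0
    for scan in scans:
        total_nodules += scan["n_nodules"]
        hit_nodules = set()
        for score, nodule_id in scan["candidates"]:
            if score < threshold:
                continue  # candidate rejected at this operating point
            if nodule_id is None:
                false_positives += 1
            else:
                hit_nodules.add(nodule_id)
        detected += len(hit_nodules)
    sensitivity = detected / total_nodules if total_nodules else 0.0
    fps_per_scan = false_positives / len(scans) if scans else 0.0
    return sensitivity, fps_per_scan
```

Sweeping the threshold from high to low traces the full curve: sensitivity rises, but so does the number of false positives per scan, which is why the two values must always be reported together.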

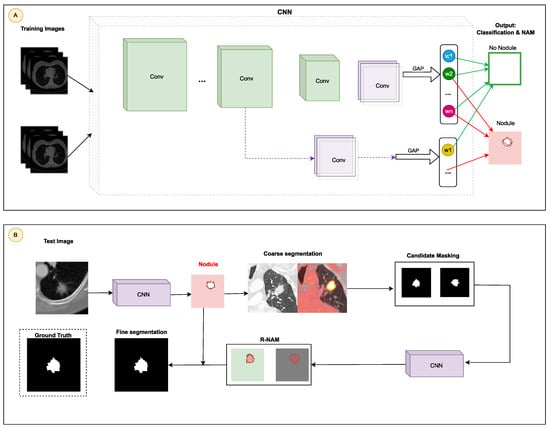

Feng et al. [48] presented a novel weakly-supervised method for accurately segmenting pulmonary nodules at the voxel level using only image-level labels. The objective was to extend a CNN model originally trained for image classification to learn discriminative regions at different resolution scales and identify the true nodule location using a candidate-screening framework. The proposed method employed transfer learning from a CNN trained on natural images and adapted the VGG16Net architecture to incorporate global average pooling (GAP) operations. The authors demonstrated that their weakly-supervised nodule segmentation framework achieved competitive performance compared to a fully-supervised CNN-based segmentation method, with accuracy values of 88.40% for the 1-GAP CNN, 86.60% for the 2-GAP model, and 84.40% for the 3-GAP model on the test set. Furthermore, the proposed method exhibited smaller standard deviations, indicating fewer large mistakes. The method is based on the Nodule Activation Map (NAM) framework. Figure 7 illustrates the process: in the training part (A), a CNN model is trained to classify CT slices and generate NAMs; in the segmentation part (B), for test slices classified as “nodule slice”, nodule candidates are screened using a spatial scope defined by the NAM for coarse segmentation. Residual NAMs (R-NAMs) are then generated from images with masked nodule candidates for fine segmentation.

Figure 7.

Architecture of the automated detection of juxta-pleural pulmonary nodules in [48]: (A) Training: a CNN is trained to identify CT images and create nodule activation mappings (NAMs); (B) Segmentation: test images identified as containing nodules are subjected to potential nodule filtering, based on a spatial delineation established by the NAM for initial segmentation. Residual NAMs (R-NAMs) are then generated using images in which potential nodules are masked, enabling more precise segmentation.

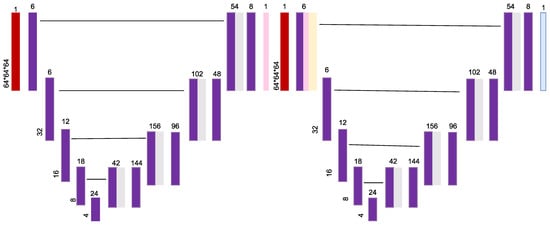

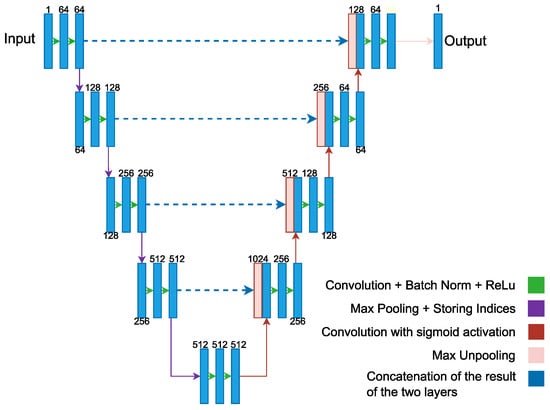

Aresta et al. [49] introduced iW-Net, a deep learning model that allowed for automatic and interactive segmentation of lung nodules in computed tomography images. iW-Net was composed of two blocks: the first provided automatic segmentation, and the second allowed the user to correct it by analyzing two points placed on the nodule’s boundary. The results of the study showed that iW-Net achieved state-of-the-art performance with an intersection over union score of 55.00%, compared to the inter-observer agreement of 59.00%. The model also allowed for the correction of small nodules, which is essential for proper patient referral decisions, and improved the segmentation of challenging non-solid nodules, thus supporting the early diagnosis of lung cancer. iW-Net improved the segmentation of more than 75.00% of the studied nodules, especially those with radii of 1–4 mm, which are crucial for referral. As shown in Figure 8, the network used a 3 × 3 × 3 × N convolution followed by batch normalization and rectified linear unit activation, where N is the number of feature maps indicated on top of each layer. It also used a 3 × 3 × 3 × N convolution with a 2 × 2 × 2 stride followed by batch normalization and rectified linear unit activation. The network then employed a 2 × 2 × 2 nearest neighbor up-sample and a 3 × 3 × 3 × N convolution with sigmoid activation. The source code for iW-Net is available at [50].

Figure 8.

iW-Net: a network for guided segmentation of lung nodules as proposed in [49].
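The intersection over union score used to evaluate iW-Net compares a predicted mask with a reference mask. A minimal sketch follows, assuming both masks are flattened to equally sized sequences of 0/1 values; the example masks are hypothetical.

```python
def intersection_over_union(mask_a, mask_b):
    """IoU of two binary masks given as equally sized flat sequences of 0/1."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    union = sum(1 for a, b in zip(mask_a, mask_b) if a or b)
    # Two empty masks agree perfectly by convention.
    return intersection / union if union else 1.0


# Hypothetical six-voxel masks.
predicted = [1, 1, 1, 0, 0, 0]
reference = [0, 1, 1, 1, 0, 0]
print(intersection_over_union(predicted, reference))
```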

Rocha et al. [51] propose three distinct methodologies for pulmonary nodule segmentation in CT scans. The first is a conventional approach that implements the Sliding Band Filter (SBF) to estimate the filter’s support points and match the border coordinates. The other two approaches are deep learning based and use the U-Net and a novel network called SegU-Net to achieve the same goal. The study aims to identify the most promising tool to improve nodule characterization. The authors used a database of 2653 nodules from the LIDC database and compared the performance of the three approaches. The results showed Dice scores of 66.30%, 83.00%, and 82.30% for the SBF, U-Net, and SegU-Net, respectively. The U-Net based models yielded results closer to the ground truth reference annotated by specialists, making them a more reliable approach for this task. The novel SegU-Net network achieved scores similar to the U-Net while reducing computational cost and improving memory efficiency. Figure 9 illustrates SegU-Net, which adds a few modifications to the U-Net architecture. Firstly, it uses reversible convolutions in the ascending part, which enables SegU-Net to preserve image detail during segmentation. Secondly, it uses a fusion layer to combine information from both parts of the network, which enables SegU-Net to achieve more accurate segmentations.

Figure 9.

SegU-Net’s model in [51].
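The Dice score reported for the three approaches measures the overlap between a predicted and a reference segmentation. A minimal sketch follows, assuming flattened binary masks; the example masks are hypothetical.

```python
def dice_score(mask_a, mask_b):
    """Dice coefficient of two binary masks given as flat sequences of 0/1."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    size_a = sum(1 for a in mask_a if a)
    size_b = sum(1 for b in mask_b if b)
    # Two empty masks agree perfectly by convention.
    return 2 * intersection / (size_a + size_b) if (size_a + size_b) else 1.0


# Hypothetical six-voxel masks.
predicted = [1, 1, 1, 0, 0, 0]
reference = [0, 1, 1, 1, 0, 0]
print(round(dice_score(predicted, reference), 4))
```

For the same pair of masks, Dice is never lower than intersection over union, since Dice = 2·IoU / (1 + IoU); scores reported with the two metrics are therefore not directly comparable.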

Wang et al. [52] present an approach called Multi-view Convolutional Neural Networks (MVCNN) that captures a diverse set of nodule-sensitive features from axial, coronal and sagittal views in CT images simultaneously. The objective is to segment various types of nodules, including juxta-pleural, cavitary, and nonsolid nodules. The methodology consists of three CNN branches each with seven stacked layers that take multi-scale nodule patches as input. These branches extract features from three orthogonal image views in CT which are then integrated with a fully connected layer to predict whether the patch center voxel belongs to the nodule. The approach does not involve any nodule shape hypothesis or user-interactive parameter settings. The study uses 893 nodules from the public LIDC-IDRI dataset where ground-truth annotations and CT imaging data were provided. The results show that MVCNN achieved an average dice similarity coefficient (DSC) of 77.67% and an average surface distance (ASD) of 24.00%, outperforming conventional image segmentation approaches.

Tang et al. [53] proposed a novel approach to solve nodule detection, false positive reduction, and nodule segmentation jointly in a multi-task fashion. The authors presented a new end-to-end 3D deep convolutional neural net (DCNN) called NoduleNet. The goal was to improve the accuracy of nodule detection and segmentation on the LIDC dataset. To avoid friction between different tasks and encourage feature diversification, the authors incorporated two major design tricks in their methodology. Firstly, decoupled feature maps were used for nodule detection and false positive reduction. Secondly, a segmentation refinement subnet was used to increase the precision of nodule segmentation. The authors used the LIDC dataset as their base dataset for training and testing their model. They showed that their model improves the nodule detection accuracy by 10.27%, compared to the baseline model trained only for nodule detection. The cross-validation results on the LIDC dataset demonstrate that the NoduleNet achieves a final CPM score of 87.27% on nodule detection and a DSC score of 83.10% on nodule segmentation, which represents the current state-of-the-art performance on this dataset. The code of NoduleNet is available on [54].
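The CPM score quoted for NoduleNet summarizes detection sensitivity over several false-positive budgets. The sketch below assumes the LUNA16-style definition, namely the mean sensitivity at 1/8, 1/4, 1/2, 1, 2, 4 and 8 false positives per scan; whether [53] applies exactly this definition is an assumption, and the sensitivities are hypothetical.

```python
# False-positive rates per scan at which sensitivity is sampled.
CPM_FP_RATES = (0.125, 0.25, 0.5, 1, 2, 4, 8)


def competition_performance_metric(sensitivity_at_fp_rate):
    """Average the sensitivities sampled at the seven predefined FP rates."""
    missing = [rate for rate in CPM_FP_RATES if rate not in sensitivity_at_fp_rate]
    if missing:
        raise ValueError(f"missing sensitivities for FP rates: {missing}")
    total = sum(sensitivity_at_fp_rate[rate] for rate in CPM_FP_RATES)
    return total / len(CPM_FP_RATES)


# Hypothetical FROC samples, not results from any cited study.
froc = {0.125: 0.70, 0.25: 0.78, 0.5: 0.84, 1: 0.88, 2: 0.91, 4: 0.93, 8: 0.95}
print(f"CPM = {competition_performance_metric(froc):.4f}")
```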

The early identification and classification of pulmonary nodules are crucial for improving lung cancer survival rates. This is considered a key requirement in computer-assisted diagnosis. To address this challenge, ref. [55] proposed a method for predicting the malignant phenotype of pulmonary nodules. The method is based on weighted voting rules. Features of the pulmonary nodules were extracted using Denoising Auto Encoder, ResNet-18, and modified texture and shape features. These features assess the malignant phenotype of the nodules. The results showed a final classification accuracy of 93.10 ± 2.4%, highlighting the method’s feasibility and effectiveness. This method combines the robust feature extraction capabilities of deep learning with the use of traditional features in image representation. The study successfully identified multi-class nodules which is the first step in lung cancer diagnosis. The study also explored the importance of various features in classifying the malignant phenotype of pulmonary nodules. It found that shape features were most crucial followed by texture features and deep learning features.
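A weighted voting rule of the kind described for [55] can be sketched as follows. The three classifiers and their weights are hypothetical; the exact rule and weights used in [55] are not reproduced here.

```python
def weighted_vote(predictions, weights):
    """Return the label with the largest total weight across classifiers."""
    if len(predictions) != len(weights):
        raise ValueError("need one weight per prediction")
    totals = {}
    for label, weight in zip(predictions, weights):
        totals[label] = totals.get(label, 0.0) + weight
    return max(totals, key=totals.get)


# Hypothetical outputs of three feature-specific classifiers
# (for example deep, texture and shape features) and their weights.
labels = ["malignant", "benign", "benign"]
weights = [0.5, 0.2, 0.2]
print(weighted_vote(labels, weights))
```

With these weights the single high-weight classifier overrides the two-vote majority, which is the behaviour that distinguishes weighted voting from simple majority voting.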

5.1.2. Deep Learning Techniques for Lung Cancer Using LUNA16 Dataset

Xie et al. [56] introduce a new approach to the complex task of automated pulmonary nodule detection in CT images. The aim of this work is to assist in the CT reading process by quickly locating lung nodules. A two-stage methodology is used, consisting of nodule candidate detection and false positive reduction. To accomplish this, the authors present a detection framework based on Faster Region-based CNN (Faster R-CNN). The Faster R-CNN structure is modified with two region proposal networks and a deconvolutional layer for nodule candidate detection. Three models are trained for different kinds of slices to integrate 3D lung information, and then the results are fused. For false positive reduction, a boosting 2D CNN architecture is designed. Three models are sequentially trained to handle increasingly difficult mimics. Misclassified samples are retrained to improve sensitivity in nodule detection. The outcomes of these networks are fused to determine the final classification. The LUNA16 database serves as the evaluation benchmark, on which the method achieves a sensitivity of 86.42% for nodule candidate detection. For false positive reduction, sensitivities of 73.40% and 74.40% are reached at 1/8 and 1/4 FPs/scan, respectively.

Sun et al. [57] address the problem of low accuracy in traditional lung cancer detection methods, particularly in realistic diagnostic settings. To improve accuracy, the authors propose the use of the Swin Transformer model for lung cancer classification and segmentation. They introduce a novel vision transformer that produces hierarchical feature representations with computational complexity that is linear in the input image size. The LUNA16 dataset and the MSD dataset are used for segmentation to compare the performance of the Swin Transformer with other models. These include Vision Transformer (ViT), ResNet-101 and data-efficient image transformers (DeiT)-S [58]. The findings reveal that the pre-trained Swin-B model achieves a top-1 accuracy of 82.26% in classification tasks, outperforming ViT by 2.529%. For segmentation tasks, the Swin-S model shows an improvement over other methods with a mean Intersection over Union (mIoU) of 47.93%. These results indicate that pre-training enhances the Swin Transformer model’s accuracy.
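The linear-complexity property can be illustrated with the cost estimates given in the original Swin Transformer work for global and window-based multi-head self-attention on an h × w feature map with C channels and window size M. The sketch below is illustrative only; the feature-map sizes are hypothetical and are not taken from [57].

```python
def global_msa_cost(h, w, c):
    """Cost of global self-attention: quadratic in the number of tokens h*w."""
    return 4 * h * w * c ** 2 + 2 * (h * w) ** 2 * c


def window_msa_cost(h, w, c, m):
    """Cost of window self-attention with M x M windows: linear in h*w."""
    return 4 * h * w * c ** 2 + 2 * m ** 2 * h * w * c


# Hypothetical feature-map sizes; doubling the height doubles the token count.
small = (56, 56, 96)
large = (112, 56, 96)
print("window ratio:", window_msa_cost(*large, 7) / window_msa_cost(*small, 7))
print("global ratio:", global_msa_cost(*large) / global_msa_cost(*small))
```

Doubling the number of tokens exactly doubles the window-attention cost, whereas the global-attention cost grows by more than a factor of two because of its quadratic term.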

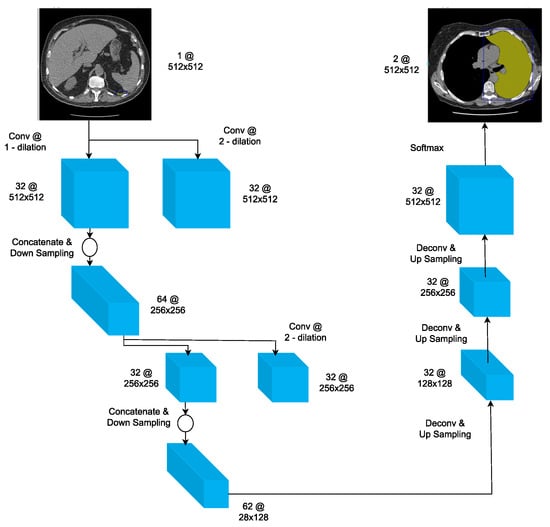

Agnes et al. [59] address the challenge of manually examining small nodules in computed tomography scans, a process that becomes time-consuming due to human vision limitations. To this end, they introduce a deep-learning-based CAD framework for quick and accurate lung cancer diagnosis. The study employs a dilated SegNet model to segment the lung from chest CT images and develops a CNN model with batch normalization to identify true nodules. The segmentation model’s performance is evaluated using the Dice coefficient, while sensitivity is used for the nodule classifier. The discriminative power of features learned by the CNN classifier is further validated through principal component analysis. Experimental outcomes show that the dilated SegNet model achieves an average Dice coefficient of 89.00 ± 23.00% and the custom CNN model attains a sensitivity of 94.80% for classifying nodules. These models excel in lung segmentation and 2D nodule patch classification within the CAD system for CT-based lung cancer diagnosis. Visual results substantiate that the CNN model effectively classifies small and complex nodules with high probability values. Figure 10 highlights the dilated SegNet for lung segmentation.

Figure 10.

The dilated SegNet for lung segmentation proposed in [59].

Yuan et al. [60] introduce a novel method for the early diagnosis and timely treatment of lung cancer through the detection and identification of malignant nodules in chest CT scans. The proposed multi-modal fusion multi-branch classification network merges structured radiological data with unstructured CT patch data to differentiate benign from malignant nodules. This network features a multi-branch fusion-based effective attention mechanism for 3D CT patch unstructured data. It also employs a 3D ECA-ResNet, inspired by ECA-Net [61], to dynamically adjust the features. When tested on the LUNA16 and LIDC-IDRI databases, the network achieves the highest accuracy of 94.89%, sensitivity of 94.91% and F1-score of 94.65%, along with the lowest false positive rate of 5.55%.
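In ECA-Net [61], channel attention is computed with a 1D convolution whose kernel size is chosen adaptively from the number of channels. The sketch below follows that adaptive rule with the defaults γ = 2 and b = 1; whether the 3D ECA-ResNet in [60] uses the same defaults is an assumption.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive 1D kernel size: nearest odd value of (log2(C) + b) / gamma."""
    t = int(abs((math.log2(channels) + b) / gamma))
    # Force an odd kernel so the convolution is centred on each channel.
    return t if t % 2 else t + 1


for channels in (64, 256, 512):
    print(channels, eca_kernel_size(channels))
```

Wider layers receive a larger kernel, so each channel's attention weight depends on more neighbouring channels while the number of added parameters stays very small.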

Mkindu et al. [62] propose a computer-aided diagnosis (CAD) scheme for lung nodule prediction based on a 3D multi-scale vision transformer (3D-MSViT). The goal of this scheme is to improve the efficiency of lung nodule prediction from 3D CT images by enhancing multi-scale feature extraction. The 3D-MSViT architecture uses a local-global transformer block structure in which the local transformer stage processes each scale patch separately and then merges multi-scale features at the global transformer level. Unlike traditional methods, the transformer blocks rely solely on the attention mechanism without including CNNs, which reduces network parameters. The study uses the LUNA16 database to evaluate the proposed scheme. The results demonstrate that the 3D-MSViT algorithm achieved the highest sensitivity of 97.81% and a competition performance metric of 91.10%. However, the proposed scheme is limited to a single image modality (CT images) and does not include a stage for false-positive reduction. For comparison, 3D-ViTNet is an architecture that relies on a single-scale vision transformer encoder without using CNNs. Experimental results show that the integration of 3D ResNet with the attention module improves the detection sensitivity in all tenfold cross-validations compared to plain 3D ResNet. The introduction of 3D-ViTNet slightly reduces the sensitivities in each experiment fold. The optimal sensitivity is achieved using the multi-scale architecture 3D-MSViT.

5.1.3. Deep Learning Techniques for Lung Cancer Using NLST Dataset

Ardila et al. [63] develop an end-to-end deep learning algorithm for lung cancer screening using low-dose chest CT scans. The goal is to predict a patient’s risk of developing lung cancer using their current and prior CT volumes, while demonstrating the potential for deep learning models to increase the accuracy, consistency and adoption of lung cancer screening worldwide. The methodology consists of four components, all trained using the TensorFlow platform: (1) Lung segmentation, which uses Mask-RCNN, a 2D object detection algorithm, to segment the lungs in the CT images and compute the center of the bounding box for further processing. (2) Cancer ROI detection, which uses RetinaNet, a 3D object detection algorithm, to detect cancer ROIs in the CT images. (3) A full-volume model, which uses 3D inflated Inception V1 to predict whether the patient has cancer within 1 year. (4) A cancer risk prediction model, which uses 3D Inception to extract features from the output of the previous two models and predict the patient’s individual malignancy score. The algorithm achieves an area under the curve of 94.40% on 6716 scans. The model outperforms all six radiologists with absolute reductions of 11% in false positives and 5% in false negatives when prior CT imaging was not available.

Than et al. [64] address the challenge of distinguishing between lung cancer and lung tuberculosis (LTB) without invasive procedures, which can carry significant risks. The study proposes using transfer learning on early convolutional layers to mitigate the challenges posed by limited training datasets. The methodology used a customized 15-layer VGG16-based 2D DNN architecture trained and tested on sets of CT images extracted from the NLST and the NIAID TB Portals [65]. The performance of the DNN was evaluated under locked and step-wise unlocked pretrained weight conditions. The results indicate that the DNN achieved an accuracy of 90.40% with an F score of 90.10%, supporting its potential as a noninvasive screening tool capable of reliably detecting and distinguishing between lung cancer and LTB.

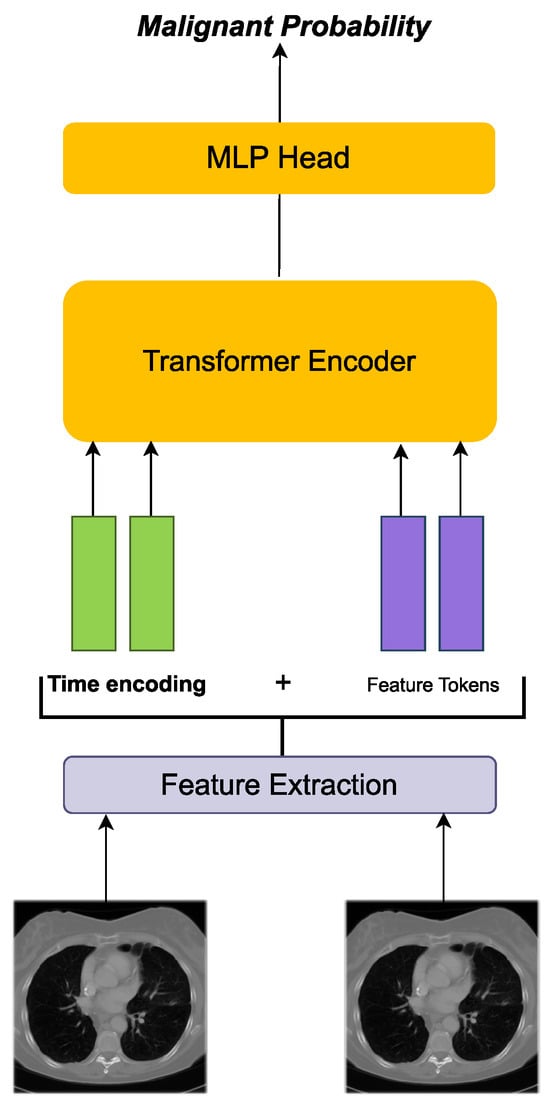

Li et al. [66] address the problem of interpreting temporal distance between sparse, irregularly sampled spatial features in longitudinal medical images. They propose two interpretations of a time-distance ViT: vector embeddings of continuous time, and a temporal emphasis model that scales self-attention weights. The two interpretations are as follows: (1) Time embedding strategy: a vector embedding of continuous time, learned during training, represents the temporal distance between two spatial features. (2) Temporal emphasis model: a separate temporal emphasis model is learned in each attention head and assigns a weight to each spatial feature depending on its temporal distance to the other spatial features. The proposed methods were evaluated on the NLST dataset, where they showed a fundamental improvement in classifying irregularly sampled longitudinal images compared to standard ViTs. The time-distance ViTs with the time embedding strategy and the temporal emphasis model achieved AUC scores of 78.50% and 78.60%, respectively, which significantly outperformed the standard ViTs (AUC score of 73.40%) and the cross-sectional approach (AUC score of 77.90%). These results suggest that time-distance ViTs have the potential to improve the classification of longitudinal medical images, and the study provides a new way to interpret temporal distance in such images. Figure 11 explains the process of the proposed method. A region proposal network (RPN) is used to propose five local regions in each image volume. The features of these regions are then extracted and embedded as five feature tokens. The time distance between the repeated images is integrated into the model using either the time embedding strategy or the temporal emphasis model. The code for the proposed method is available at [67].
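The idea of scaling self-attention weights by temporal distance can be sketched as below. This is a minimal illustration under stated assumptions, not the implementation of [66]: the decreasing sigmoid, its fixed `slope` and `offset` (which would be learned per attention head), and the renormalization step are all hypothetical choices.

```python
import math


def temporal_emphasis(time_distance, slope=1.0, offset=0.0):
    """Hypothetical decreasing sigmoid: larger time distances get less weight."""
    return 1.0 / (1.0 + math.exp(slope * time_distance - offset))


def time_scaled_attention(scores, time_distances, slope=1.0, offset=0.0):
    """Softmax over raw scores, scaled by temporal emphasis, then renormalized."""
    if len(scores) != len(time_distances):
        raise ValueError("need one time distance per attention score")
    peak = max(scores)  # subtract the maximum for numerical stability
    exp_scores = [math.exp(s - peak) for s in scores]
    total = sum(exp_scores)
    scaled = [(e / total) * temporal_emphasis(d, slope, offset)
              for e, d in zip(exp_scores, time_distances)]
    norm = sum(scaled)
    return [s / norm for s in scaled]


# Three tokens with equal content scores, acquired 0, 1 and 2 years apart.
print(time_scaled_attention([0.0, 0.0, 0.0], [0.0, 1.0, 2.0]))
```

With equal content scores, the token closest in time receives the largest weight, which is the qualitative behaviour the temporal emphasis model is meant to provide.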

Figure 11.

Feature extraction of 2D data was done by taking uniform patches and linearly projecting them into a common embedding space, as proposed in [66].

Lu et al. [68] describe the development and validation of a CNN called CXR-LC that predicts incident lung cancer up to 12 years in advance using data commonly available in the electronic medical record (EMR). The CXR-LC model was developed in the Prostate, Lung, Colorectal, and Ovarian (PLCO) Cancer Screening Trial and validated in additional PLCO and NLST smokers. The results showed that the CXR-LC model had better discrimination for incident lung cancer than CMS eligibility, with an AUC of 75.50% vs. 63.40%, respectively (p < 0.001). The CXR-LC model’s performance was similar to that of PLCOM2012, a state-of-the-art risk score with 11 inputs, in both the PLCO dataset (CXR-LC AUC of 75.50% vs. PLCOM2012 AUC of 75.10%) and the NLST dataset (65.90% vs. 65.00%). When compared in equal-sized screening populations, CXR-LC was more sensitive than CMS eligibility in the PLCO dataset (74.90% vs. 63.80%; p = 0.012) and missed 30.70% fewer incident lung cancers. On decision curve analysis, CXR-LC had higher net benefit than CMS eligibility and similar benefit to PLCOM2012. The CXR-LC model identified smokers at high risk for incident lung cancer, beyond CMS eligibility and using information commonly available in the EMR.

5.1.4. Deep Learning Techniques for Lung Cancer Using TCGA Dataset

Khan et al. [69] propose an end-to-end deep learning approach called Gene Transformer to address the complexity of high-dimensional gene expression data in the classification of lung cancer subtypes. The study investigates the use of transformer-based architectures which leverage the self-attention mechanism to encode gene expressions and learn representations that are computationally complex and parametrically expensive. The Gene Transformer architecture is inspired by the Transformer encoder architecture and uses a multi-head self-attention mechanism with 1D convolution layers as a hybrid architecture to assess high-dimensional gene expression datasets. The framework prioritizes features during testing and outperforms existing SOTA methods in both binary and multiclass problems. The authors demonstrate the potential of the multi-head self-attention layer to perform 1D convolutions and suggest that it is less expensive than ordinary 2D convolutional layers.

The accurate identification of lung cancer subtypes in medical images is crucial for their proper diagnosis and treatment. Despite the progress made by existing methods, challenges remain due to limited annotated datasets, large intra-class differences, and high inter-class similarities. To address these challenges, Cai et al. [70] proposed a dual-branch deep learning model called the Frequency Domain Transformer Model (FDTrans). FDTrans combines image domain and genetic information to determine lung cancer subtypes in patients. To capture critical detail information, a pre-processing step was added to transfer histopathological images to the frequency domain using a block-based discrete cosine transform. The Coordinate-Spatial Attention Module (CSAM) was designed to reassign weights to the location information and channel information of different frequency vectors. A Cross-Domain Transformer Block (CDTB) was then designed to capture long-term dependencies and global contextual connections between different component features. Feature extraction was performed on genomic data to obtain specific features, and the image and gene branches were fused. Classification results were output through a fully connected layer. In 10-fold cross-validation, the method achieved an AUC of 93.16% and an overall accuracy of 92.33%, which exceeds current lung cancer subtype classification methods.
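A block-based discrete cosine transform of the kind used in this pre-processing step can be sketched in plain Python. The 8 × 8 block size and the orthonormal DCT-II are assumptions borrowed from common image-coding practice; the block size and normalization used in [70] are not stated here.

```python
import math


def dct_matrix(n):
    """Orthonormal DCT-II basis: row k holds the k-th cosine basis vector."""
    rows = []
    for k in range(n):
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        rows.append([scale * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                     for i in range(n)])
    return rows


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def block_dct(image, block=8):
    """Apply a 2D DCT-II independently to each non-overlapping tile."""
    height, width = len(image), len(image[0])
    if height % block or width % block:
        raise ValueError("image sides must be multiples of the block size")
    basis = dct_matrix(block)
    basis_t = [list(col) for col in zip(*basis)]
    out = [[0.0] * width for _ in range(height)]
    for top in range(0, height, block):
        for left in range(0, width, block):
            tile = [row[left:left + block] for row in image[top:top + block]]
            coeffs = matmul(matmul(basis, tile), basis_t)
            for dy in range(block):
                out[top + dy][left:left + block] = coeffs[dy]
    return out


# A flat 8 x 8 tile: all energy should land in the top-left (DC) coefficient.
flat = [[1.0] * 8 for _ in range(8)]
coeffs = block_dct(flat)
print(round(coeffs[0][0], 6))
```

A flat tile concentrates its energy in the single DC coefficient, while fine texture spreads energy into higher-frequency coefficients, which is what makes the frequency representation useful for capturing detail.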

In [71], Primakov et al. address the importance of detecting and segmenting abnormalities on medical images for patient management and quantitative image research. The authors present a fully automated pipeline for the detection and volumetric segmentation of NSCLC using 1328 thoracic CT scans. They report that their proposed method is faster and more reproducible compared to expert radiologists and radiation oncologists. The authors also evaluate the prognostic power of the automatic contours by applying RECIST criteria [72] and measuring tumor volumes. The results show that segmentations by their method stratify patients into low and high survival groups with higher significance compared to those methods based on manual contours. Additionally, the authors demonstrate that on average, radiologists and radiation oncologists preferred automatic segmentations in 56% of cases. The code is available at [73].

5.1.5. Deep Learning Techniques for Lung Cancer Using JSRT Dataset

Ausawalaithong et al. [74] aim to develop an automated system for predicting lung cancer from chest X-ray images using deep learning. They explore the use of a 121-layer convolutional neural network, DenseNet121, and a transfer learning scheme to classify lung cancer. To address the issue of a small dataset, the model is trained on a lung nodule dataset before being fine-tuned on the lung cancer dataset. The JSRT and ChestX-ray8 datasets are used to evaluate the proposed model. The results indicate a mean accuracy of 74.43 ± 6.01%, mean specificity of 74.96 ± 9.85% and mean sensitivity of 74.68 ± 15.33%. Additionally, the model provides a heatmap for identifying the location of the lung nodule. These findings are promising for further development of chest X-ray-based lung cancer diagnosis using the deep learning approach.

Gordienko et al. [75] aim to leverage advancements in deep learning and image recognition to automatically detect suspicious lesions and nodules in chest X-rays (CXRs) of lung cancer patients. The study preprocesses the CXR images using lung segmentation and bone shadow exclusion techniques before applying a deep learning approach. The original JSRT dataset and the BSE-JSRT dataset, which is the same as the JSRT dataset but without clavicle and rib shadows, were used for analysis. Both datasets were also used after segmentation, resulting in four datasets in total. The results of the study demonstrate the effectiveness of the preprocessing techniques, particularly bone shadow exclusion, in improving accuracy and reducing loss. The dataset without bones had significantly better results than the other preprocessed datasets. However, the study notes that pre-processing for label noise during the training stage is crucial because the training data was not accurately labeled for the test set. The study also identifies potential areas for improvement, such as increasing the size and number of images investigated and using data augmentation techniques for lossy and lossless transformations. These improvements would enable a wider range of CXRs to be analyzed and increase the accuracy and efficiency of the deep learning approach.

5.1.6. Deep Learning Techniques for Lung Cancer Using Kaggle DSB Dataset

Yu et al. [76] address the problem of divergent software dependencies in automated chest CT evaluation methods for lung cancer detection, which makes it difficult to compare and reproduce these methods. The study aims to develop reproducible machine learning modules for lung cancer detection and compare the approaches and performances of the award-winning algorithms developed in the Kaggle Data Science Bowl. The authors obtained the source codes of all award-winning solutions and evaluated the performance of the algorithms using the log-loss function and the Spearman correlation coefficient of the performance in the public and final test sets. The low-dose chest CT datasets in DICOM format from the Kaggle Data Science Bowl website were used. The datasets consisted of a training set with ground truth labels and a public test set without labels. Most solutions implemented distinct image preprocessing, segmentation and classification modules. Variants of U-Net, VGGNet and residual net were commonly used in nodule segmentation and transfer learning was used in most of the classification algorithms.
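The log-loss function used to rank these solutions penalizes confident wrong predictions heavily. A minimal sketch of binary log loss follows; the clipping constant `eps` is an assumption added for numerical safety, and the example labels and probabilities are hypothetical.

```python
import math


def binary_log_loss(labels, probabilities, eps=1e-15):
    """Mean negative log-likelihood of binary labels under predicted probabilities."""
    if len(labels) != len(probabilities) or not labels:
        raise ValueError("need one probability per label")
    total = 0.0
    for y, p in zip(labels, probabilities):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(labels)


# Hypothetical cancer labels and predicted probabilities.
uninformative = binary_log_loss([1, 0], [0.5, 0.5])
confident_right = binary_log_loss([1, 0], [0.9, 0.1])
confident_wrong = binary_log_loss([1, 0], [0.1, 0.9])
print(uninformative, confident_right, confident_wrong)
```

A model that always predicts 0.5 scores ln 2, so lower values indicate predictions that carry real information, while confident errors are penalized far more than hedged ones.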

Tekade et al. [77] propose a solution to the problem of detecting and classifying lung nodules, as well as predicting the malignancy level of these nodules, using CT scan images. The authors introduce a 3D multipath VGG-like network, which is evaluated on 3D cubes and combined with U-Net for final predictions. The study uses the LIDC-IDRI, LUNA16, and Kaggle DSB 2017 datasets. The proposed approach achieves an accuracy of 95.60% and a log loss of 38.77%, with a Dice coefficient of 90%. The results are useful for predicting whether a patient will develop cancer in the next two years. The study concludes that artificial neural networks play an important role in better analyzing the dataset, extracting features and performing classification. The proposed approach is effective for lung nodule detection and malignancy level prediction using lung CT scan images.

5.1.7. Deep Learning Techniques for Lung Cancer Using Decathlon Dataset

Said et al. [78] proposed a system using deep learning architectures for the early diagnosis of lung cancer in CT scan imaging. The proposed system consists of two parts: segmentation and classification. Segmentation is performed using the UNETR network [79], while classification is performed using a self-supervised network. The segmentation part aims to identify the ROI in the CT scan images. This is done by first projecting the 3D patches from the volumetric image into an embedding space. A positional embedding is then added to these patches. The Transformer encoder captures global and long-range dependencies in the image through attention mechanisms and extracts high-level representations of the ROI. The classification part, built on top of the self-supervised network, then classifies the regions identified by the segmentation part as either benign or malignant. The system operates on 3D-input CT scan data and shows promising results for the early diagnosis of lung cancer, achieving a segmentation accuracy of 97.83% and a classification accuracy of 98.77%. The Decathlon dataset was used for training and testing experiments.

Guo et al. [80] propose a solution to the anisotropy problem in 3D medical image analysis. This problem occurs when the slice spacing varies significantly between training and clinical datasets, which can degrade the performance of machine learning models. The authors propose a transformer-based model called TSFMUNet, which is adaptable to different levels of anisotropy and is computationally efficient. TSFMUNet is based on a 2D U-Net backbone consisting of a downsampling stream, upsampling stream and a transformer block (Figure 12). The downsampling stream takes 3D CT scans as input and extracts features at multiple resolutions. The upsampling stream then reconstructs the image from the extracted features. The transformer block is used to encode inter-slice information using a self-attention mechanism. This allows the model to adapt to variable slice spacing and to capture long-range dependencies in the image. The authors evaluated TSFMUNet on the MSD database, which includes 3D lung cancer segmentation data. The results showed that TSFMUNet outperforms baseline models such as the 3D U-Net and LSTMUNet. TSFMUNet achieved a segmentation accuracy of 87.17% which is significantly higher than the 77.44% achieved by the 3D U-Net and the 85.73% achieved by the LSTMUNet.

Figure 12.

The proposed TSFMUNet in [80].

5.1.8. Deep Learning Techniques for Lung Cancer Using Tianchi Dataset

Tang et al. [81] propose a novel end-to-end framework for pulmonary nodule detection that integrates nodule candidate screening and false positive reduction into one model. The objective is to improve nodule detection by jointly training the two stages of nodule candidate generation and false positive reduction. The proposed framework follows a two-stage strategy: (1) Generating nodule candidates using a 3D Nodule Proposal Network. (2) Classifying the nodule candidates to reduce false positives. The nodule candidate screening branch uses a 3D Region Proposal Network (RPN) adapted from Faster R-CNN. The RPN predicts a set of bounding boxes around potential nodule candidates. The predicted bounding boxes are then used to crop features of the nodule candidates using a 3D ROI Pool layer, as shown in Figure 13. These features are then fed as input to the nodule false positive reduction branch, which uses a CNN to classify the nodule candidates as either true positives or false positives. The CNN is trained to minimize the loss between the predicted labels and the ground truth labels. Convolution blocks are built using residual blocks and max pooling to further reduce the spatial resolution. This allows the model to learn more abstract features from the image while reducing the amount of memory required. The authors evaluated the proposed framework on the Tianchi competition dataset. The results showed that the end-to-end system outperforms the two-step approach by 3.88%. The end-to-end system also reduces model complexity by one third and cuts inference time by 3.6-fold.

Figure 13.

End-to-end pulmonary nodule detection framework in [81]. (*) is equivalent to the times (×) sign.

Huang et al. [82] propose an improved CNN framework for the more effective detection of pulmonary nodules. The framework consists of three 3D CNNs, namely CNN-1, CNN-2 and CNN-3, which are fused into a new Amalgamated-CNN model to detect pulmonary nodules. To detect nodules, the authors first use an unsharp mask to enhance the nodules in CT images. Then, CT images of 512 × 512 pixels are segmented into smaller images of 96 × 96 pixels and the plaques corresponding to positive and negative samples are segmented. The 96 × 96 pixel images are then downsampled to 64 × 64 and 32 × 32 pixels, respectively. The authors discard nodules smaller than 5 mm in diameter and use the AdaBoost classifier to fuse the results of CNN-1, CNN-2, and CNN-3. They call this new neural network framework the Amalgamated-Convolutional Neural Network (A-CNN). The authors evaluated the proposed A-CNN model on the LUNA16 dataset, where it achieved sensitivity scores of 81.70% and 85.10% at an average of 0.125 FPs/scan and 0.25 FPs/scan, respectively. These scores were 5.40% and 0.50% higher than those of the current optimal algorithm.

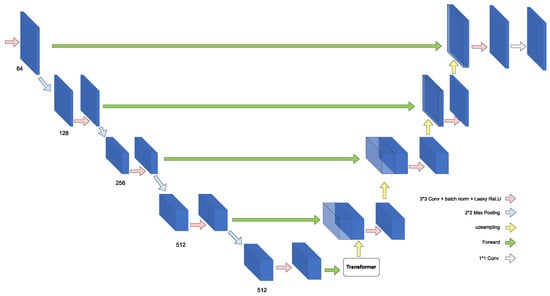

Tang et al. [83] address the challenge of detecting nodules in three-dimensional medical imaging data using DCNN. While previous approaches have used 2D or 2.5D components for analyzing 3D data, the proposed DCNN approach is fully 3D end-to-end and utilizes SOTA object detection techniques. The proposed method consists of two stages: candidate screening and false positive reduction. In the first stage, a U-Net-inspired 3D Faster R-CNN is used to identify nodule candidates while preserving high sensitivity. In the second stage, 3D DCNN classifiers are trained on difficult examples produced during candidate screening to finely discriminate between true nodules and false positives. Models from both stages are then ensembled for final predictions allowing for flexibility in adjusting the trade-off between sensitivity and specificity. The proposed approach was evaluated using data from Alibaba’s 2017 TianChi AI Competition for Healthcare. The classifier was trained for 300 epochs using positive examples from the Faster R-CNN detector balanced with hard negative samples. The input candidates for test set predictions were provided by the detector and the checkpoint with the highest CPM on the validation set was used for prediction on the test set. The proposed approach achieved superior performance with a CPM of 81.50%.

5.1.9. Deep Learning Techniques for Lung Cancer Using Peking University Cancer Hospital Dataset

Clinical staging is crucial for treatment decisions and prognosis evaluation for lung cancer. However, inconsistencies between clinical and pathological stages are common due to the free-text nature of CT reports. In [84], Fischer et al. developed an information extraction (IE) system to automatically extract staging-related information from CT reports using three components: NER [85], relation classification (RC) [86] and postprocessing (PP). The NER component identifies entities of interest such as tumor size, lymph node status and metastasis. The RC component classifies the relationships between these entities. The PP component corrects errors and inconsistencies in the extracted information. The IE system was evaluated on a clinical dataset of 392 CT reports. For NER, the BERT model outperformed the ID-CNN-CRF and Bi-LSTM-CRF models, reaching the highest macro-F1 score of 90.22%, compared with 80.97% and 90.06% for the two baselines. The BERT-RSC model outperformed the baseline methods for RC, with macro-F1 and micro-F1 scores of 97.13% and 98.37% respectively. The PP component achieved macro-F1 and micro-F1 scores of 94.57% and 96.74% respectively across all 22 questions related to lung cancer staging. The experimental results demonstrated the system’s potential for use in stage verification and prediction to facilitate accurate clinical staging.
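The macro-F1 and micro-F1 scores quoted above weight classes differently: macro-F1 averages the per-class F1 scores, whereas micro-F1 pools the counts over all classes first. The sketch below illustrates the distinction for a simplified single-label setting; the label names are hypothetical, and the evaluation in [84] (for example, span-level matching for NER) may differ in detail.

```python
from collections import Counter


def f1(tp, fp, fn):
    """F1 from raw counts; defined as 0.0 when there are no true positives."""
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def macro_micro_f1(gold, pred):
    """Return (macro_f1, micro_f1) for two aligned lists of class labels."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, pred):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1  # predicted class gains a false positive
            fn[g] += 1  # true class gains a false negative
    labels = sorted(set(gold) | set(pred))
    # Macro: unweighted mean of per-class F1, so rare classes count equally.
    macro = sum(f1(tp[c], fp[c], fn[c]) for c in labels) / len(labels)
    # Micro: F1 of the pooled counts, dominated by frequent classes.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    return macro, micro


# Hypothetical entity types, for illustration only.
gold = ["size", "size", "size", "node", "meta"]
pred = ["size", "size", "node", "node", "meta"]
macro, micro = macro_micro_f1(gold, pred)
```

A macro-F1 that is lower than the micro-F1, as in the RC and PP results above, typically indicates that some less frequent classes are recognized less reliably than the frequent ones.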

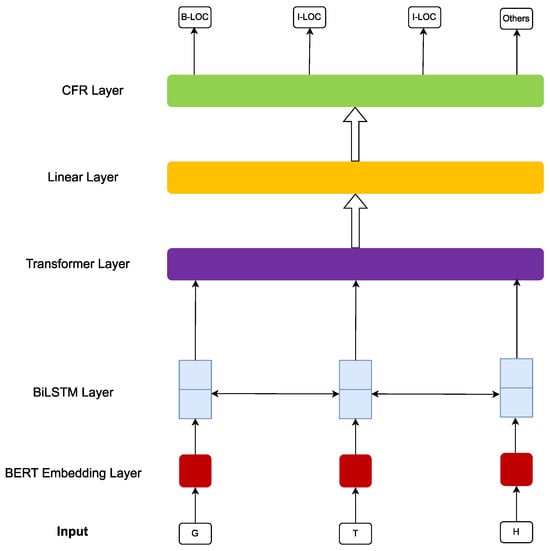

Zhang et al. [87] proposed a novel deep learning approach for extracting clinical entities from Chinese CT reports for lung cancer screening and staging. The free-text nature of CT reports poses a significant challenge to effectively using this valuable information for clinical decision-making and academic research. The proposed approach utilizes a BERT-based BiLSTM-Transformer network (BERT-BTN) with pre-training to extract 14 types of clinical entities. The BERT-BTN model first uses BERT [88] to generate contextualized word embeddings. A BiLSTM layer then captures local sequential information, and a Transformer layer models global dependencies between words regardless of distance. The BiLSTM provides positional information while the Transformer captures long-range dependencies. After the BERT-BiLSTM-Transformer encoder, a CRF layer is added to further incorporate constraints from the labels. The proposed approach was evaluated on a clinical dataset consisting of 359 CT reports collected from the Department of Thoracic Surgery II of Peking University Cancer Hospital. The BERT-BTN model achieved an 85.96% macro-F1 score under the exact match scheme, which outperforms the benchmark BERT-BTN, BERT-LSTM, BERT-fine-tune, BERT-Transformer, FastText-BTN, FastText-BiLSTM, and FastText-Transformer models. The results indicate that the proposed approach efficiently recognizes various clinical entities for lung cancer screening and staging and holds great potential for further utilization in clinical decision-making and academic research. The proposed BERT-BTN is shown in Figure 14.

Figure 14.

The proposed BERT-BTN in [87].

5.1.10. Deep Learning Techniques for Lung Cancer Using MSKCC Dataset

Kipkogei et al. [89] introduced the Clinical Transformer, a variation of the transformer architecture used in precision medicine to model the relationship between molecular and clinical measurements and the survival of cancer patients. The Clinical Transformer first uses an embedding strategy to convert the molecular and clinical data into vectors. These vectors are then fed into the transformer, which learns long-range dependencies between the features. The transformer also uses an attention mechanism to focus on the most important features for predicting survival. The authors proposed a customized objective function to evaluate the performance of the Clinical Transformer. This objective function takes into account both the accuracy of the predictions and the interpretability of the model. The authors evaluated the Clinical Transformer on a dataset of 1661 patients from the MSKCC. The results showed that the Clinical Transformer outperformed other linear and non-linear methods currently used in practice for survival prediction. The authors also showed that initializing the weights of a domain-specific transformer with the weights of a cross-domain transformer further improved the predictions. The attention mechanism used in the Clinical Transformer successfully captures known biology behind these therapies.

5.1.11. Deep Learning Techniques for Lung Cancer Using SEER Dataset

Doppalapudi et al. [90] aimed to develop deep learning models for lung cancer survival prediction, framed as both a classification and a regression problem. The study compared the performance of ANN, CNN and RNN models with traditional machine learning models using data from the SEER. The deep learning models outperformed the traditional machine learning models, achieving a best classification accuracy of 71.18% when patients’ survival periods were segmented into classes of “≤6 months”, “0.5–2 years” and “>2 years”. The RMSE of the regression approach was 13.5% and the R² value was 0.5. In contrast, the traditional machine learning models saturated at 61.12% classification accuracy and 14.87% RMSE in regression. The deep learning models provide a baseline for early prediction and could be improved with more temporal treatment information collected from treated patients. Additionally, feature importance was evaluated to investigate model interpretability and to gain further insight into the survival analysis models and the factors that matter for predicting the cancer survival period.
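To make the two problem framings concrete, the sketch below bins a survival time into the three classes named above and computes the two regression metrics. It is only an illustration: the function names are hypothetical, the assignment of exactly 24 months to the middle class is an assumption, and the source does not state how the percentage RMSE was normalized, so the sketch returns plain RMSE in the units of the target.

```python
import math


def survival_class(months):
    """Map a survival time in months to one of the three classes.

    Assumption: boundaries are inclusive on the lower class, so exactly
    6 months is "<=6 months" and exactly 24 months is "0.5-2 years".
    """
    if months <= 6:
        return "<=6 months"
    if months <= 24:
        return "0.5-2 years"
    return ">2 years"


def rmse(y_true, y_pred):
    """Root mean squared error between two equal-length sequences."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot
```

Under this definition, an R² of 0.5 means the regression model explains about half of the variance in survival time that a constant mean prediction would leave unexplained.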

5.1.12. Deep Learning Techniques for Lung Cancer Using TCIA Dataset

Barbouchi et al. [91] present a new approach for the classification and detection of lung cancer using deep learning techniques applied to positron emission tomography/computed tomography (PET/CT) images. Early detection is crucial for increasing the cure rate, and this approach aims to fully automate the anatomical localization of lung cancer from PET/CT images and to classify the tumor in order to determine the speed of progression and the best treatments to adopt. The authors used DETR, a transformer-based detection model, to detect the tumor and assist physicians in staging patients with lung cancer. The TNM staging system and histologic subtype classification were used as the standard for classification. The proposed approach achieved an IoU of 0.80 (80%) when tested on the Lung-PET-CT-Dx dataset, meaning that the intersection of the predicted and ground-truth regions covers 80% of their union, which indicates accurate tumor localization. It also outperformed SOTA T-staging and histologic classification methods, achieving classification accuracies of 97% and 94% for T-stage and histologic subtypes respectively.
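For reference, the intersection over union (IoU) of two axis-aligned bounding boxes can be computed as in the minimal sketch below. The box format `(x_min, y_min, x_max, y_max)` and the example coordinates are assumptions made for illustration and are not taken from [91].

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    # Width and height of the overlap rectangle (zero if the boxes are disjoint).
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Hypothetical ground-truth and predicted boxes.
truth = (0, 0, 10, 10)
contained = (0, 0, 10, 8)   # lies entirely inside the ground truth
shifted = (1, 0, 11, 10)    # same size, shifted by one unit
```

Here `box_iou(truth, contained)` is 80 / 100 = 0.8, while `box_iou(truth, shifted)` is 90 / 110, about 0.82, even though 90% of each box overlaps the other. IoU penalizes both missed and excess area, so it is stricter than a simple overlap fraction.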

5.2. Deep Learning Techniques Using Proprietary Datasets

In addition to publicly available datasets, many studies on DL-based lung cancer diagnosis have utilized private databases, which may be proprietary or collected specifically for research purposes. While private datasets may offer advantages such as greater size or more detailed annotations, they are often not publicly accessible and may be subject to confidentiality agreements. In this section, we review a selection of studies that have utilized private databases for the development and validation of deep learning algorithms for lung cancer diagnosis. These studies demonstrate the potential benefits of working with large and detailed datasets but also raise questions about the reproducibility and generalizability of results. By examining the methods and results of these studies, we aim to identify key insights and challenges in using private datasets for deep learning-based lung cancer diagnosis and to consider the implications of this approach for the wider research community. Appendix A Table A3 summarizes the reviewed methods for lung cancer diagnosis using proprietary datasets.

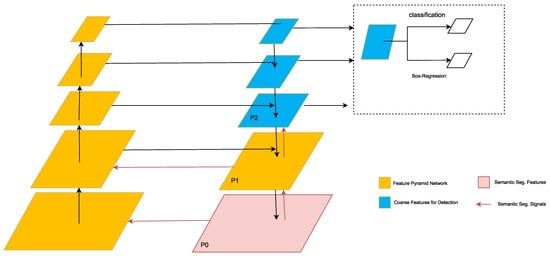

Weikert et al. [92] aimed to develop and test a Retina U-Net algorithm for detecting primary lung tumors and associated metastases of all stages on FDG-PET/CT. The methodology involved evaluating detection performance for all lesion types, assigning detected lesions to categories T, N, or M using automated anatomical region segmentation, and visually analyzing the reasons for false positives. The study used a dataset of 364 FDG-PET/CTs of patients with histologically confirmed lung cancer, which was split into training, validation and internal test sets. The results showed that the Retina U-Net algorithm had a sensitivity of 86.2% for T lesions and 94.3% accuracy in TNM categorization based on the anatomical region approach. Figure 15 shows that the Retina U-Net architecture resembles a standard U-Net with an encoder-decoder structure. It is a segmentation model complemented by additional detection network branches in the lower (coarser) decoder levels for end-to-end object classification and bounding box regression. This allows the detection network to exploit higher-level object features from the segmentation model. The segmentation model provides high-quality pixel-level training signals that are back-propagated to the detection network, which enables Retina U-Net to leverage segmentation labels for object detection in an end-to-end fashion. The study’s performance metrics had wide 95% confidence intervals because the internal test set was only a small portion of the whole internal dataset.

Figure 15.

Retina U-Net architecture presented in [92]. The encoder-decoder structure resembles a U-Net.

Nishio et al. [93] presented a CADx method for the classification of lung nodules into benign nodule, primary lung cancer and metastatic lung cancer. The study evaluated the usefulness of DCNN for CADx in comparison to a conventional method (hand-crafted imaging features plus machine learning), the effectiveness of transfer learning, and the effect of image size as the DCNN input. To perform the CADx, the authors used a previously built database of CT images and clinical information, from which 1236 of 1240 patients were included. The CADx was evaluated using the VGG-16 convolutional neural network with and without transfer learning. The hyperparameters of the DCNN method were optimized by random search. For the conventional method, CADx was performed using rotation-invariant uniform-pattern local binary patterns on three orthogonal planes with a support vector machine. The study found that DCNN was better than the conventional method for CADx and that the accuracy of DCNN improved when using transfer learning. Additionally, larger input image sizes improved the accuracy of lung nodule classification. The best averaged validation accuracies of CADx were 55.90%, 68.00% and 62.40% for the conventional method, the DCNN method with transfer learning and the DCNN method without transfer learning respectively. For image sizes of 56, 112, and 224, the best averaged validation accuracies for the DCNN with transfer learning were 60.70%, 64.70%, and 68.00%, respectively. The study demonstrates that the 2D-DCNN method is more useful than the conventional method for ternary classification of lung nodules and that transfer learning enhances image recognition for CADx by DCNN when using medium-scale training data.

Lakshmanaprabu et al. [94] presented a novel automated diagnostic classification method for CT images of lungs with the aim of enhancing lung cancer classification accuracy. The methodology comprises two phases: in the first phase, selected features are extracted and reduced using Linear Discriminant Analysis (LDA); in the second phase, an Optimal Deep Neural Network (ODNN), optimized with the Modified Gravitational Search Algorithm (MGSA), is employed to classify CT lung cancer images. The study was conducted on a dataset of 50 recorded low-dose lung cancer CT images. The results demonstrate that the proposed classifier achieves a sensitivity of 96.20%, specificity of 94.20% and accuracy of 94.56%.